Install PySpark in Google Colab with GitHub Integration

Arun R Nair


Prerequisites

A Google account to sign in to Colab (https://colab.research.google.com/)

A GitHub account (https://github.com/)

Introduction:

Google Colab is an excellent environment for learning and practicing data processing and big data tools like Apache Spark. For beginners, PySpark (the Python API for Spark) is an ideal way to get started with Spark, allowing you to run Spark applications using Python. Moreover, integrating your Colab notebook with a GitHub repository provides a convenient way to save, share, and version your work, making collaboration and future reference easy.

This guide will walk you through the steps to set up PySpark in Google Colab, run a basic Spark application, and save your work in a GitHub repository.

Step 1:

Setting Up PySpark in Google Colab

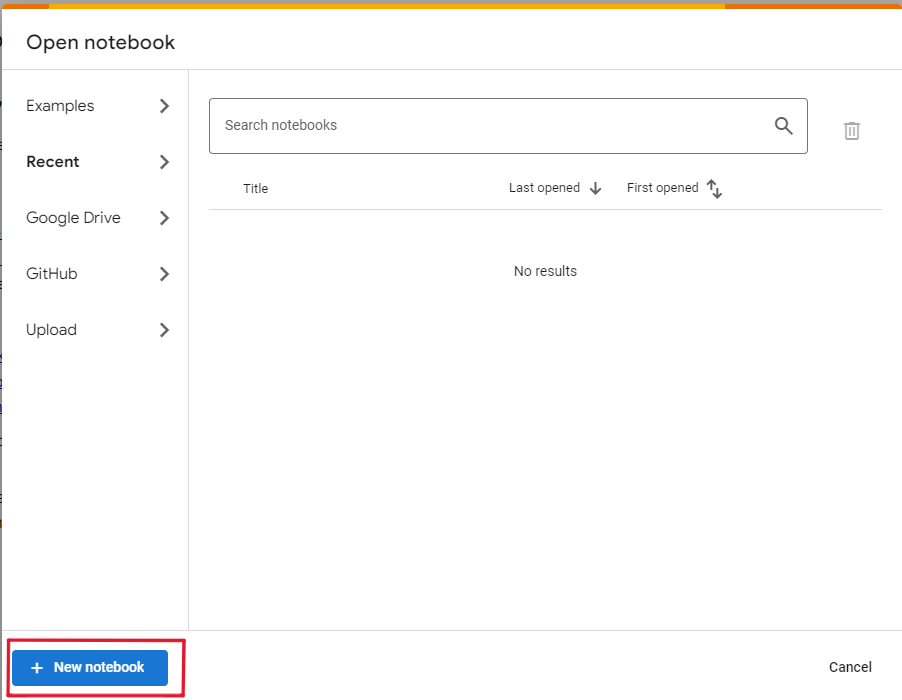

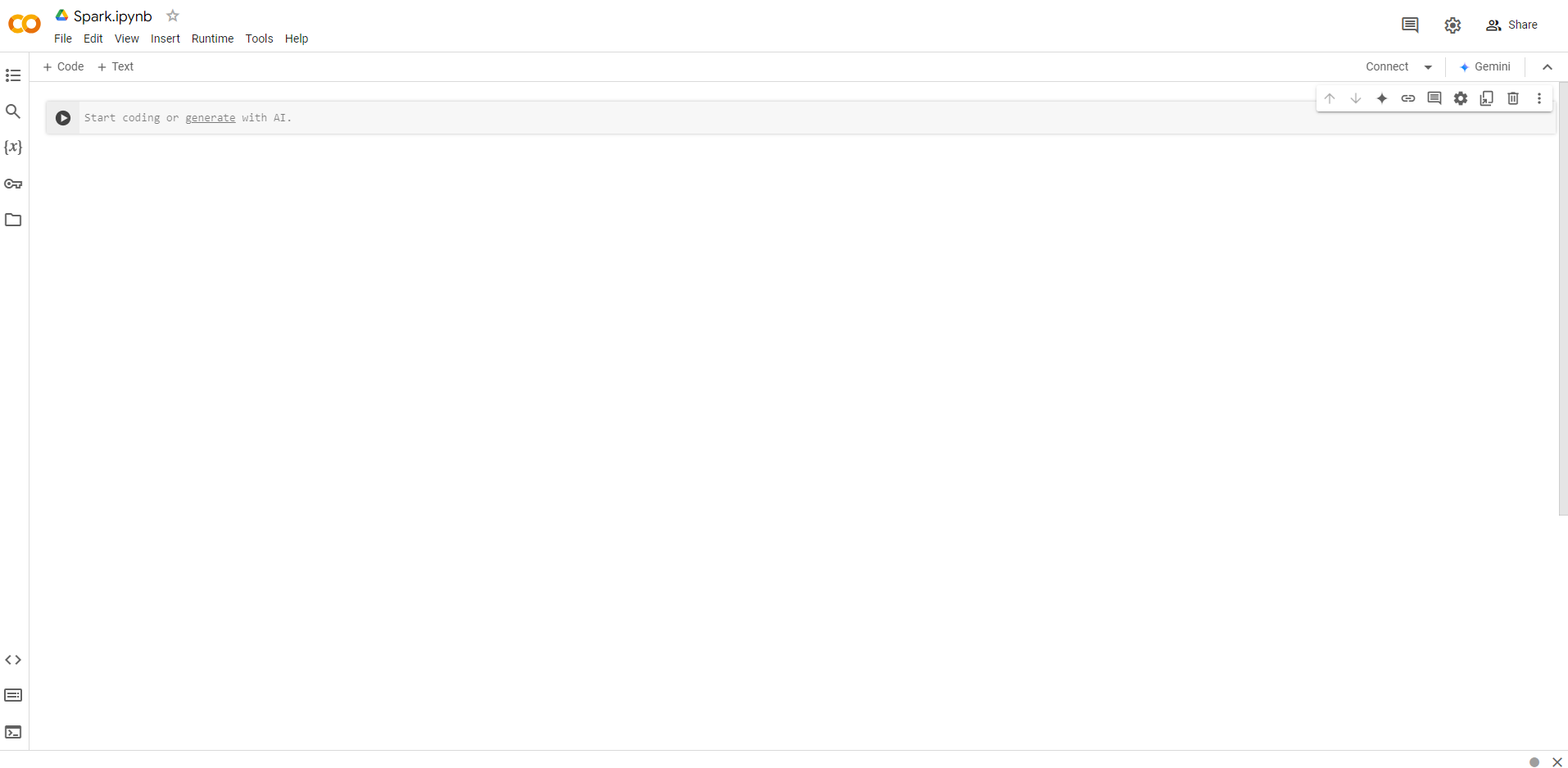

Open Colab and create a new notebook.

Step 2:

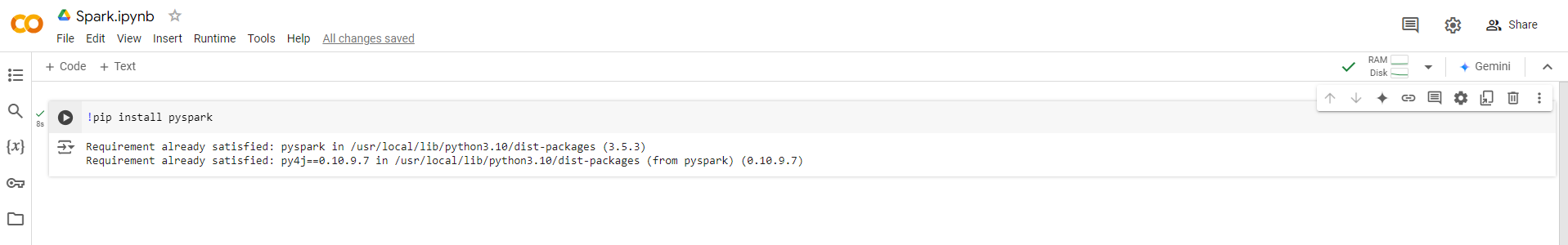

Install PySpark by running the following command in a code cell:

```
!pip install pyspark
```

When the installation finishes, PySpark (which bundles Spark itself) is available in the notebook. Next, create a Spark application.
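Before creating a session, it can help to confirm the environment is ready. PySpark talks to a Java Virtual Machine under the hood, so it needs both the `pyspark` package and a Java runtime. A minimal sketch using only the standard library; `spark_env_status` is a hypothetical helper name, and it only checks for `java` on PATH (a `JAVA_HOME` setting is not inspected):

```python
import shutil
from importlib.metadata import PackageNotFoundError, version


def spark_env_status():
    """Report the installed PySpark version and whether `java` is on PATH."""
    try:
        pyspark_version = version("pyspark")
    except PackageNotFoundError:
        # `pip install pyspark` has not run in this runtime yet.
        pyspark_version = None
    return {
        "pyspark": pyspark_version,
        # PySpark launches a JVM; this returns the java path or None.
        "java": shutil.which("java"),
    }


print(spark_env_status())
```

If either value is `None`, rerun the install cell or check the runtime's Java setup before moving on.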

Step 3:

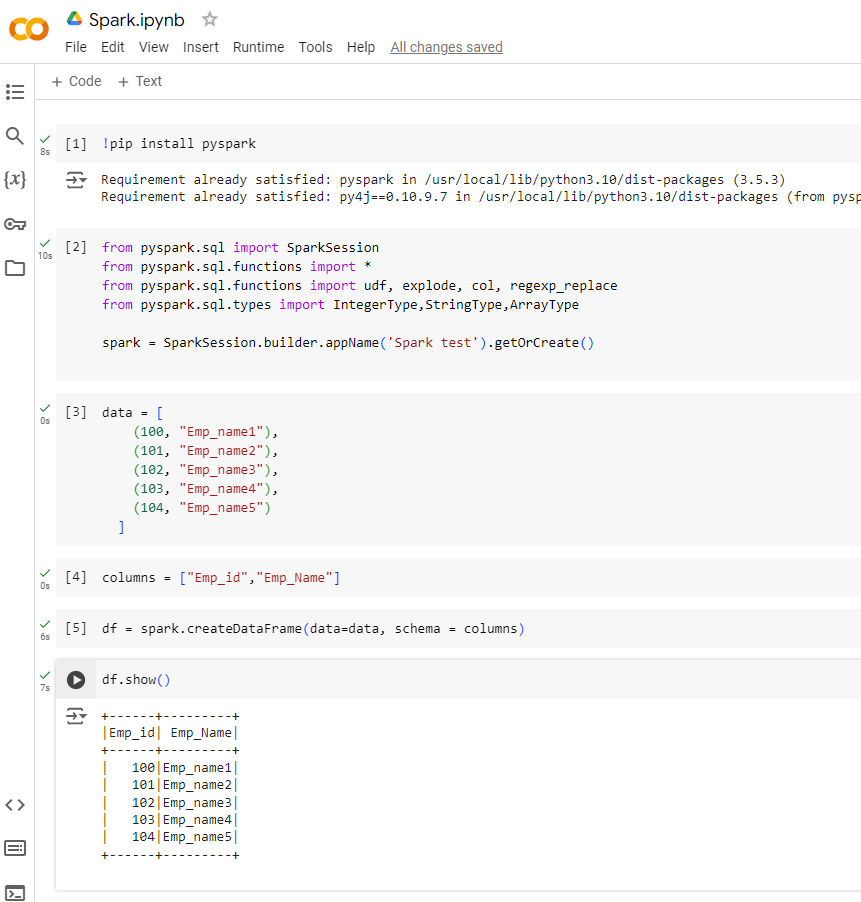

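Import the required libraries and create a SparkSession:

```python
from pyspark.sql import SparkSession
# Import the functions module under an alias instead of `import *`,
# which would shadow Python built-ins such as sum(), max() and round().
from pyspark.sql import functions as F
from pyspark.sql.functions import udf, explode, col, regexp_replace
from pyspark.sql.types import IntegerType, StringType, ArrayType

# Create a SparkSession, or reuse the one already running in this notebook.
spark = SparkSession.builder.appName("Spark_test_App").getOrCreate()
```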

The Spark session is ready. Now you can start running Spark code.

Step 4:

Connect Colab to GitHub:

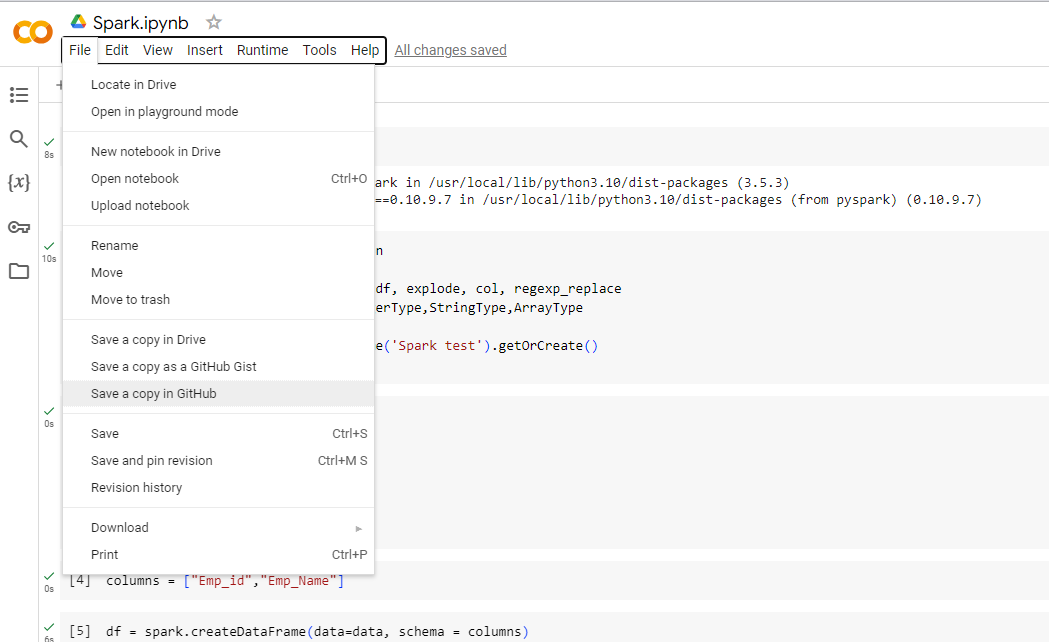

Go to File > Save a Copy in GitHub.

Sign in with your GitHub account if prompted.

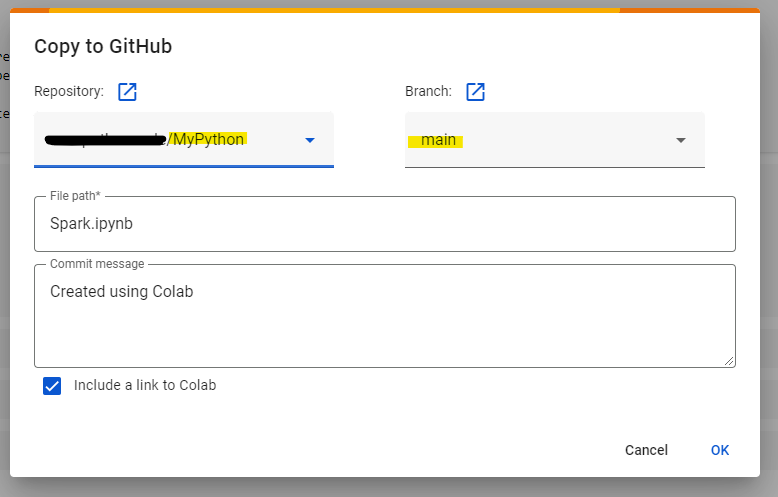

Choose the desired repository and directory where you’d like to save the notebook.

Provide a commit message and save.

Saving Updates: Whenever you update the notebook, repeat the same steps to save the new version to your GitHub repository. Each save is recorded as a commit with your message, so you can track changes over time.

Accessing from GitHub: Your notebook is stored as an .ipynb file in your repository, which you can share or revisit later. This makes it easy for others to view your work or collaborate with you on learning Spark.
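An .ipynb file is plain JSON in the Jupyter notebook format, which is why GitHub can store, diff and render it. A minimal standard-library sketch that pulls the code cells out of a notebook; the helper name `code_cell_sources` and the two-cell demo notebook are hypothetical:

```python
import json
import tempfile
from pathlib import Path


def code_cell_sources(notebook_path):
    """Return the source text of every code cell in an .ipynb file."""
    nb = json.loads(Path(notebook_path).read_text(encoding="utf-8"))
    sources = []
    for cell in nb.get("cells", []):
        if cell.get("cell_type") == "code":
            src = cell.get("source", "")
            # The format allows source as one string or as a list of lines.
            sources.append("".join(src) if isinstance(src, list) else src)
    return sources


# A hypothetical two-cell notebook, written to a temporary file for the demo.
demo_nb = {
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Spark notes"]},
        {"cell_type": "code", "metadata": {}, "execution_count": None,
         "outputs": [], "source": ["!pip install pyspark"]},
    ],
}

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "Spark_test.ipynb"
    path.write_text(json.dumps(demo_nb), encoding="utf-8")
    print(code_cell_sources(path))
```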

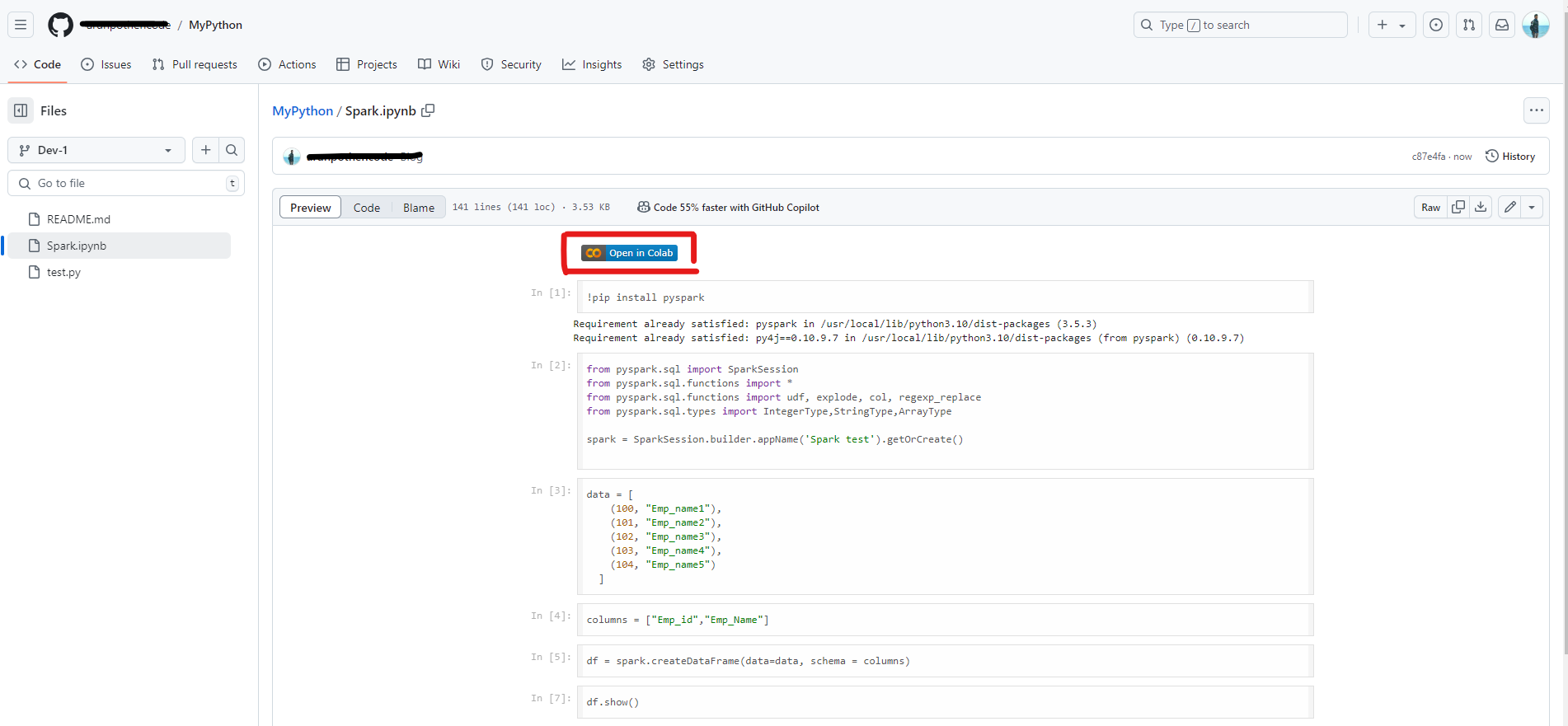

When you open the repository on GitHub, the notebook appears as shown in the screenshot above.

Click the Open in Colab button to open the notebook in Colab.
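The Open in Colab button is a link to Colab's GitHub viewer, which serves a notebook at `https://colab.research.google.com/github/<owner>/<repo>/blob/<branch>/<path>`. If you want the same button in a README, a minimal sketch that builds the badge Markdown follows; the owner, repository and path are placeholders, and the default branch `main` is an assumption about your repository:

```python
from urllib.parse import quote

COLAB_BADGE = "https://colab.research.google.com/assets/colab-badge.svg"


def colab_url(owner: str, repo: str, path: str, branch: str = "main") -> str:
    """Build the Colab URL that opens a notebook stored on GitHub."""
    # quote() escapes spaces and similar characters but keeps "/" separators.
    return (
        "https://colab.research.google.com/github/"
        f"{owner}/{repo}/blob/{branch}/{quote(path)}"
    )


def colab_badge_markdown(owner: str, repo: str, path: str, branch: str = "main") -> str:
    """Markdown for an 'Open In Colab' badge linking to the notebook."""
    url = colab_url(owner, repo, path, branch)
    return f"[![Open In Colab]({COLAB_BADGE})]({url})"


# Placeholder names; replace them with your own account, repository and file.
print(colab_badge_markdown("your-username", "spark-learning", "notebooks/Spark_test.ipynb"))
```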

Conclusion

This approach is particularly beneficial for beginners in Spark because it allows you to leverage the free resources of Google Colab without needing a local Spark installation. You can easily run Spark applications, test different transformations, and develop a deeper understanding of Spark's capabilities. Plus, integrating your work with GitHub adds an extra layer of organization, allowing you to track your progress, collaborate with others, and revisit past projects as needed.

By following these steps, you’re set up to dive into Spark learning, explore different features, and continuously save and improve your work in a professional, shareable format.
