Automating MLOps with AWS: A Complete Guide to CI/CD with CodePipeline

Pranjal Chaubey

Welcome to the fourth chapter of our CI/CD for MLOps Using AWS series! In our previous installments, we delved into GitHub, AWS CodeBuild, and AWS CodeDeploy. Today, we're excited to explore AWS CodePipeline and how it seamlessly integrates build and deploy processes to streamline your MLOps and CI/CD workflows.

What is AWS CodePipeline?

AWS CodePipeline is a fully managed continuous delivery service that helps you automate your release pipelines for fast and reliable application and infrastructure updates. It automates the build, test, and deploy phases of your release process every time there is a code change, based on the release model you define.

Key Features of AWS CodePipeline:

Continuous Integration and Continuous Delivery (CI/CD): Automate your entire release process.

Integration with AWS Services: Works with AWS services such as CodeBuild and CodeDeploy, as well as with third-party tools such as GitHub.

Customizable Pipelines: Define multiple stages and actions tailored to your workflow.

Visual Interface: Easily visualize your pipeline and track progress.
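Stages and actions are the two levels you customize. Within a stage, each action carries a `runOrder`: actions with the same value run in parallel, and an action with a higher value waits until the lower ones finish. The sketch below only models that scheduling rule locally and does not call AWS. The action names are hypothetical, and treating a missing `runOrder` as 1 is an assumption of the sketch.

```python
from collections import defaultdict


def execution_waves(actions: list[dict]) -> list[list[str]]:
    """Group a stage's actions into waves by runOrder.

    Actions in the same wave run in parallel; each wave starts only after
    the previous (lower runOrder) wave has finished. This sketch treats a
    missing runOrder as 1.
    """
    by_order: dict[int, list[str]] = defaultdict(list)
    for action in actions:
        by_order[action.get("runOrder", 1)].append(action["name"])
    # Waves execute in ascending runOrder.
    return [by_order[order] for order in sorted(by_order)]


# Hypothetical "Validate" stage for an ML project.
validate_stage_actions = [
    {"name": "UnitTests", "runOrder": 1},
    {"name": "DataSchemaCheck", "runOrder": 1},
    {"name": "EvaluateModel", "runOrder": 2},
]

print(execution_waves(validate_stage_actions))
# → [['UnitTests', 'DataSchemaCheck'], ['EvaluateModel']]
```

Here the two cheap checks run side by side, and the slower model evaluation starts only if both succeed.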

Why Use CodePipeline for MLOps?

In MLOps, managing the lifecycle of machine learning models, from data ingestion to model deployment, requires robust automation. CodePipeline lets you express that lifecycle as a sequence of stages that runs on every change, so each commit is built, tested, and deployed the same way.

Benefits for MLOps:

Automated Model Training and Testing: Integrate automated training and validation steps.

Consistent Deployments: Ensure models are deployed reliably across different environments.

Scalability: Handle increasing complexity as your ML projects grow.

Collaboration: Facilitate teamwork by integrating with version control systems like GitHub.

Understanding the Workflow

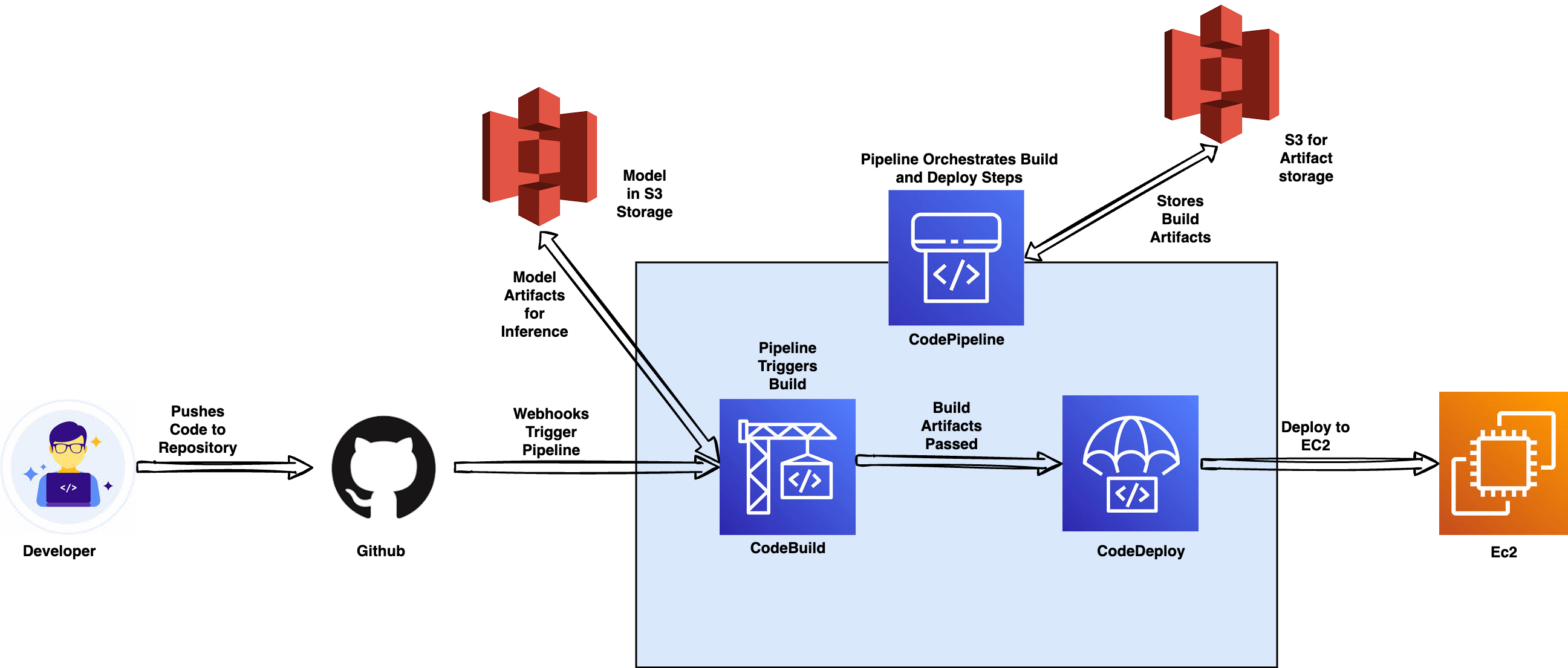

This diagram illustrates the CI/CD pipeline workflow in an MLOps context, using AWS services to automate code integration, building, and deployment. Here's a step-by-step breakdown of each stage and the actions taking place:

Developer to GitHub: A developer pushes code changes to the GitHub repository, which serves as the source control. This repository holds both the machine learning code and configuration files.

Webhooks Trigger Pipeline: When code is pushed to GitHub, a webhook is triggered, notifying AWS CodePipeline of the new changes. This event-driven mechanism allows CodePipeline to begin its automated process whenever code updates occur.

CodePipeline Orchestrates Build and Deploy Steps: AWS CodePipeline is the core orchestrator in this workflow. It runs the stages in order, hands each stage's output artifacts to the next one, and stops the execution if a stage fails, so code that fails to build or pass its tests never reaches deployment.

Pipeline Triggers Build in CodeBuild: CodePipeline triggers AWS CodeBuild to compile the code, run tests, and generate any necessary artifacts. This build step ensures that the code is validated and packaged correctly before deployment.

Build Artifacts Passed to CodeDeploy: The output from CodeBuild, known as build artifacts, is then passed to AWS CodeDeploy. These artifacts can include packaged application code, model files, or any dependencies needed for deployment.

Deploy to EC2 via CodeDeploy: CodeDeploy takes the build artifacts and deploys them to the specified target, such as an EC2 instance. This stage makes the application or machine learning model available in the desired environment, ready for use.

S3 for Artifact Storage: AWS CodePipeline uses an S3 bucket, its artifact store, to hold the artifacts passed between stages, such as the zipped source code and the build output. Each stage reads its inputs from this bucket, and the stored artifacts remain available for review if needed.

Model Artifacts in S3 for Inference: In this pipeline, an additional S3 bucket is used to store machine learning model artifacts for inference or training. This storage serves as a repository for models that can be accessed by other services or applications for real-time predictions or further training.
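One way to organize the model-artifact bucket described above is to key each model by the commit that produced it, so every deployed model traces back to a specific version of the code. The `models/<model>/<commit>/<file>` layout and all names below are hypothetical. The function accepts any client with an `upload_file(Filename, Bucket, Key)` method, which boto3's S3 client provides, so the logic can be tested without AWS credentials.

```python
from pathlib import Path
from typing import Protocol


class Uploader(Protocol):
    """The only S3 client method this sketch needs (boto3's client has it)."""

    def upload_file(self, Filename: str, Bucket: str, Key: str) -> None: ...


def model_key(model_name: str, commit_sha: str, filename: str) -> str:
    """Hypothetical layout: one prefix per model and per commit."""
    return f"models/{model_name}/{commit_sha}/{filename}"


def publish_model(
    s3: Uploader, bucket: str, model_name: str, commit_sha: str, local_path: Path
) -> str:
    """Upload a trained model file and return its S3 URI."""
    key = model_key(model_name, commit_sha, local_path.name)
    s3.upload_file(str(local_path), bucket, key)
    return f"s3://{bucket}/{key}"
```

Inside a CodeBuild job you might call `publish_model(boto3.client("s3"), "my-model-bucket", "churn-model", os.environ["CODEBUILD_RESOLVED_SOURCE_VERSION"], Path("model.tar.gz"))`, where the environment variable typically holds the commit ID of the source revision. Because each commit writes to its own prefix, older models are never overwritten, and rolling back means pointing inference at an earlier key.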

Each component in the pipeline works together to create a continuous and automated workflow from code changes to production deployment, making it easier to manage and deploy machine learning models in a structured, repeatable manner. This setup enhances collaboration, improves deployment reliability, and simplifies model management, which are essential for successful MLOps.
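The hand-off described in this workflow can be modeled in a few lines. This is a local simulation, not CodePipeline code: each stage is a plain function that receives the artifacts produced so far and returns new ones, and raising an exception stands in for a failed action. As in CodePipeline, a failure stops the run, so later stages (here, deployment) never start. The stage functions and artifact values are hypothetical.

```python
from typing import Callable

Stage = tuple[str, Callable[[dict], dict]]


def run_pipeline(stages: list[Stage]) -> tuple[str, list[str]]:
    """Run stages in order, handing each one the artifacts produced so far.

    Returns the execution status and the names of the stages that completed.
    """
    artifacts: dict = {}
    completed: list[str] = []
    for name, stage in stages:
        try:
            artifacts.update(stage(artifacts))
        except Exception:
            # A failed stage ends the execution; later stages are skipped.
            return "Failed", completed
        completed.append(name)
    return "Succeeded", completed


def source(_: dict) -> dict:
    return {"SourceOutput": "commit-abc123"}


def build(artifacts: dict) -> dict:
    return {"BuildOutput": f"bundle built from {artifacts['SourceOutput']}"}


def failing_deploy(_: dict) -> dict:
    raise RuntimeError("deployment health check failed")


print(run_pipeline([("Source", source), ("Build", build), ("Deploy", failing_deploy)]))
# → ('Failed', ['Source', 'Build'])
```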

Setting Up AWS CodePipeline for MLOps

Let's walk through the steps to set up a CodePipeline that integrates with GitHub, CodeBuild, and CodeDeploy for a comprehensive MLOps CI/CD pipeline.

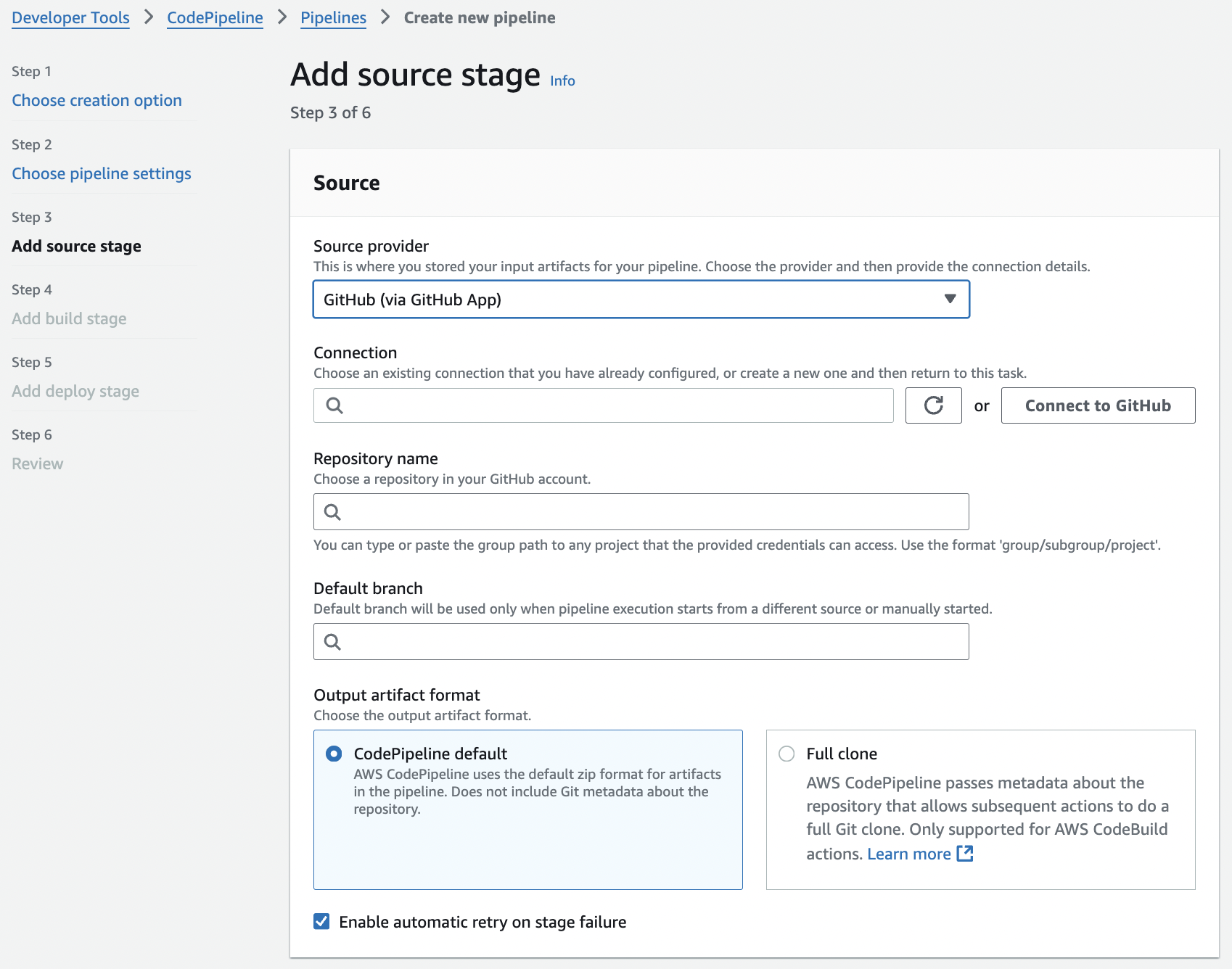

1. Connect CodePipeline to Your Source Repository

Start by connecting CodePipeline to your source code repository, such as GitHub.

Figure 1: Connecting CodePipeline to GitHub

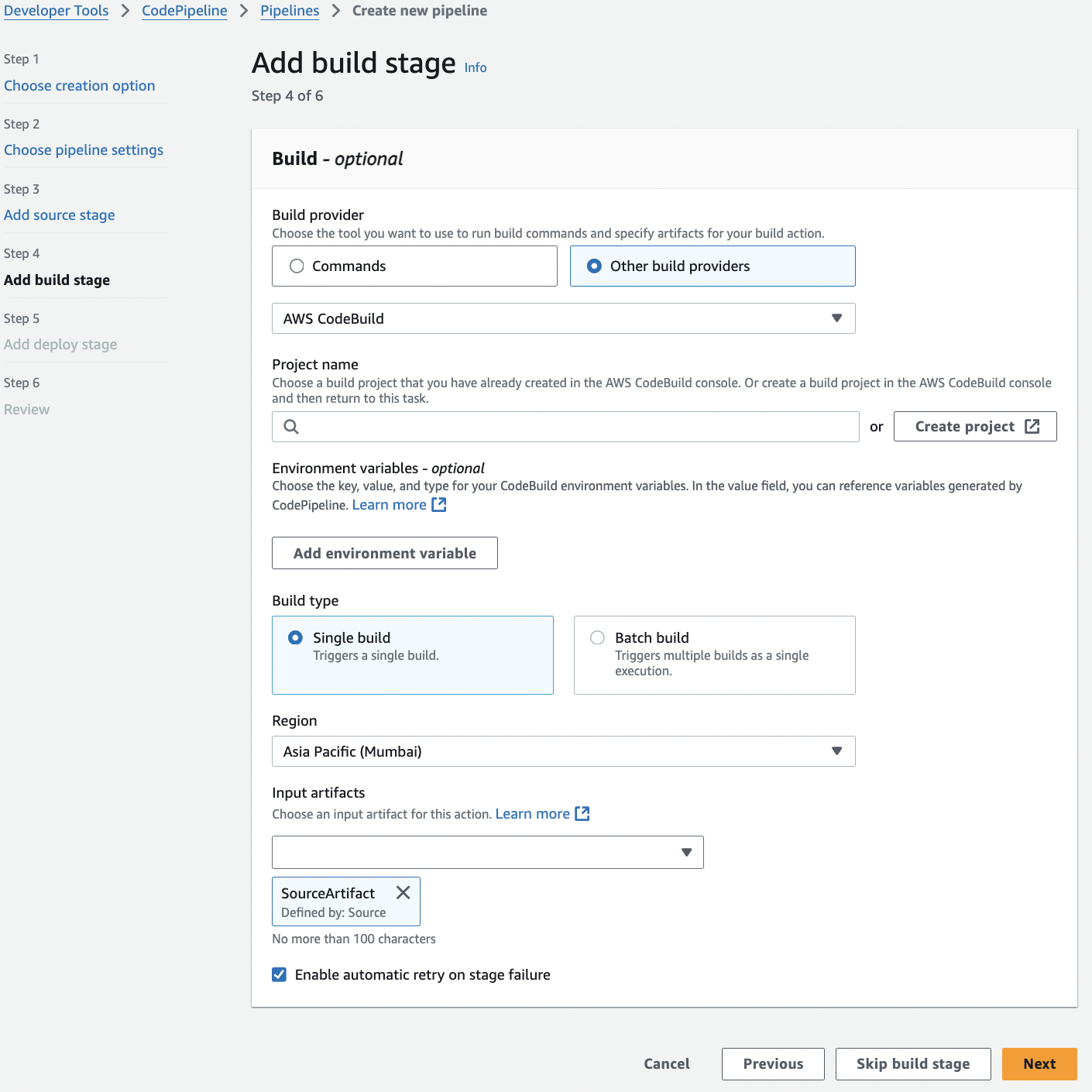
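If you prefer to define the pipeline as code rather than through the console, the source stage is described by an action declaration like the one below. It assumes GitHub is connected through an AWS CodeStar connection (the `CodeStarSourceConnection` provider). The connection ARN, repository, and branch are placeholders. A connection created from the CLI or API starts in the `PENDING` state and has to be completed once in the console before the pipeline can use it.

```python
def github_source_action(connection_arn: str, repo: str, branch: str = "main") -> dict:
    """Action declaration for a GitHub source via an AWS CodeStar connection."""
    owner, _, name = repo.partition("/")
    if not owner or not name or "/" in name:
        raise ValueError(f"expected 'owner/repo', got {repo!r}")
    return {
        "name": "Source",
        "actionTypeId": {
            "category": "Source",
            "owner": "AWS",
            "provider": "CodeStarSourceConnection",
            "version": "1",
        },
        "configuration": {
            "ConnectionArn": connection_arn,
            "FullRepositoryId": repo,  # "owner/repo"
            "BranchName": branch,
            # Start the pipeline automatically on pushes to BranchName.
            "DetectChanges": "true",
            "OutputArtifactFormat": "CODE_ZIP",
        },
        "outputArtifacts": [{"name": "SourceOutput"}],
        "runOrder": 1,
    }


action = github_source_action(
    "arn:aws:codestar-connections:us-east-1:111122223333:connection/EXAMPLE",  # placeholder
    "my-org/ml-project",  # placeholder repository
)
print(action["configuration"]["FullRepositoryId"])  # → my-org/ml-project
```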

2. Configure the Build Stage with CodeBuild

Next, set up the build stage using AWS CodeBuild to compile your code, run tests, and build Docker images if necessary.
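What "run tests" means for an ML project is up to you. One common pattern is a quality gate: a script that CodeBuild runs after training and that exits with a non-zero status when the model misses a threshold, which fails the build and stops the pipeline before anything is deployed. This sketch assumes the training step writes a hypothetical `metrics.json` containing an `accuracy` value; the file name, metric, and threshold are all assumptions.

```python
import json
import sys
from pathlib import Path


def check_metrics(metrics_path: Path, min_accuracy: float) -> list[str]:
    """Return the reasons the model fails the gate (empty list means it passes)."""
    metrics = json.loads(metrics_path.read_text())
    accuracy = metrics.get("accuracy")
    if accuracy is None:
        return ["metrics file has no 'accuracy' entry"]
    if accuracy < min_accuracy:
        return [f"accuracy {accuracy:.3f} is below the {min_accuracy:.3f} threshold"]
    return []


def main(argv: list[str]) -> int:
    """CLI entry point: check_model.py METRICS_JSON MIN_ACCURACY."""
    problems = check_metrics(Path(argv[1]), float(argv[2]))
    for problem in problems:
        print(f"Quality gate failed: {problem}", file=sys.stderr)
    # A non-zero exit status fails the CodeBuild command, and with it the stage.
    return 1 if problems else 0


# Act as a CLI only when both arguments are given, e.g. from a buildspec
# command such as: python check_model.py metrics.json 0.85
if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv))
```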

Figure 2: Configuring CodeBuild in CodePipeline

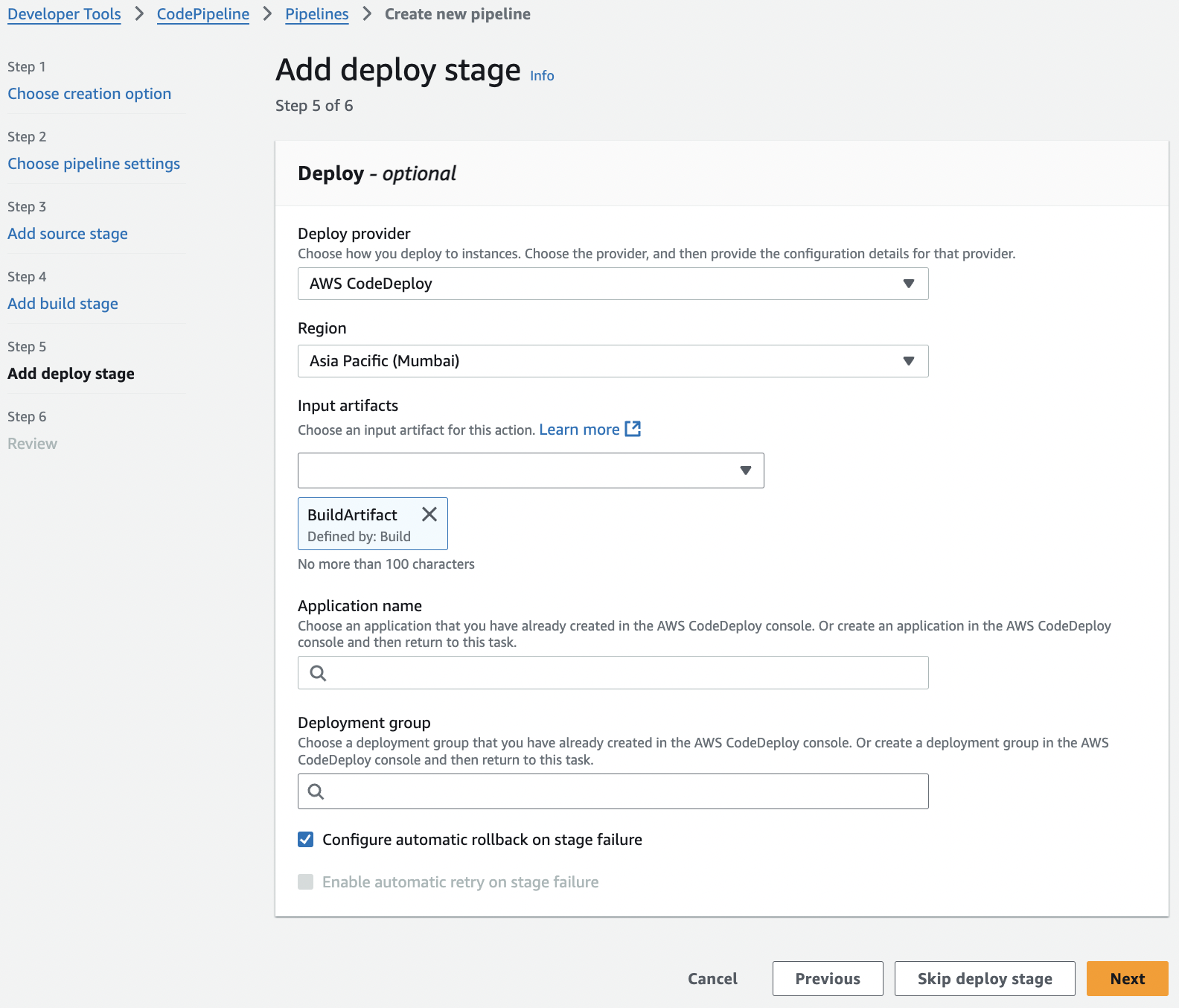
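In a pipeline definition, the build stage is a CodeBuild action that points at an existing CodeBuild project, consumes the source artifact, and produces the build artifact. The sketch below builds that declaration; the project name and the `MIN_ACCURACY` variable are hypothetical. The action's `EnvironmentVariables` setting is passed as a JSON-encoded string, which is why the list is serialized with `json.dumps`.

```python
import json
from typing import Optional


def codebuild_action(project_name: str, env: Optional[dict[str, str]] = None) -> dict:
    """Action declaration for a CodeBuild build inside CodePipeline."""
    configuration = {"ProjectName": project_name}
    if env:
        # Plain-text variables; use PARAMETER_STORE or SECRETS_MANAGER types for secrets.
        configuration["EnvironmentVariables"] = json.dumps(
            [{"name": k, "value": v, "type": "PLAINTEXT"} for k, v in env.items()]
        )
    return {
        "name": "Build",
        "actionTypeId": {
            "category": "Build",
            "owner": "AWS",
            "provider": "CodeBuild",
            "version": "1",
        },
        "configuration": configuration,
        "inputArtifacts": [{"name": "SourceOutput"}],
        "outputArtifacts": [{"name": "BuildOutput"}],
        "runOrder": 1,
    }


build = codebuild_action("ml-build", {"MIN_ACCURACY": "0.85"})
print(build["configuration"]["EnvironmentVariables"])
# → [{"name": "MIN_ACCURACY", "value": "0.85", "type": "PLAINTEXT"}]
```

Keeping thresholds like this in the pipeline definition rather than in the build project lets you reuse one CodeBuild project across pipelines with different quality bars.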

3. Add the Deploy Stage with CodeDeploy

Finally, add a deploy stage using AWS CodeDeploy to deploy your ML models or applications to your chosen environment, such as Amazon EC2, AWS Lambda, or Amazon ECS.
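For an EC2 target, what CodeDeploy receives is a revision: an archive with an `appspec.yml` file at its root that tells the CodeDeploy agent where to copy files and which lifecycle scripts to run. In a real pipeline the `artifacts` section of your buildspec decides what goes into the build output, and the pipeline stores it as a zip. This sketch reproduces the expected layout locally so you can check it; the destination path, hook script, and model file are hypothetical.

```python
import io
import zipfile

# Minimal AppSpec for an EC2/on-premises deployment. The destination
# directory and the hook script are hypothetical placeholders.
APPSPEC_YML = """\
version: 0.0
os: linux
files:
  - source: /
    destination: /opt/ml-app
hooks:
  ApplicationStart:
    - location: scripts/start_server.sh
      timeout: 300
"""


def build_bundle(files: dict[str, bytes]) -> bytes:
    """Zip `files` (archive path -> content) with appspec.yml at the root."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # CodeDeploy expects appspec.yml at the root of the revision.
        zf.writestr("appspec.yml", APPSPEC_YML)
        for arcname, content in files.items():
            zf.writestr(arcname, content)
    return buffer.getvalue()


bundle = build_bundle({
    "model/model.pkl": b"placeholder model bytes",
    "scripts/start_server.sh": b"#!/bin/bash\n# start the inference server here\n",
})
with zipfile.ZipFile(io.BytesIO(bundle)) as archive:
    print(archive.namelist())
# → ['appspec.yml', 'model/model.pkl', 'scripts/start_server.sh']
```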

Figure 3: Configuring CodeDeploy in CodePipeline

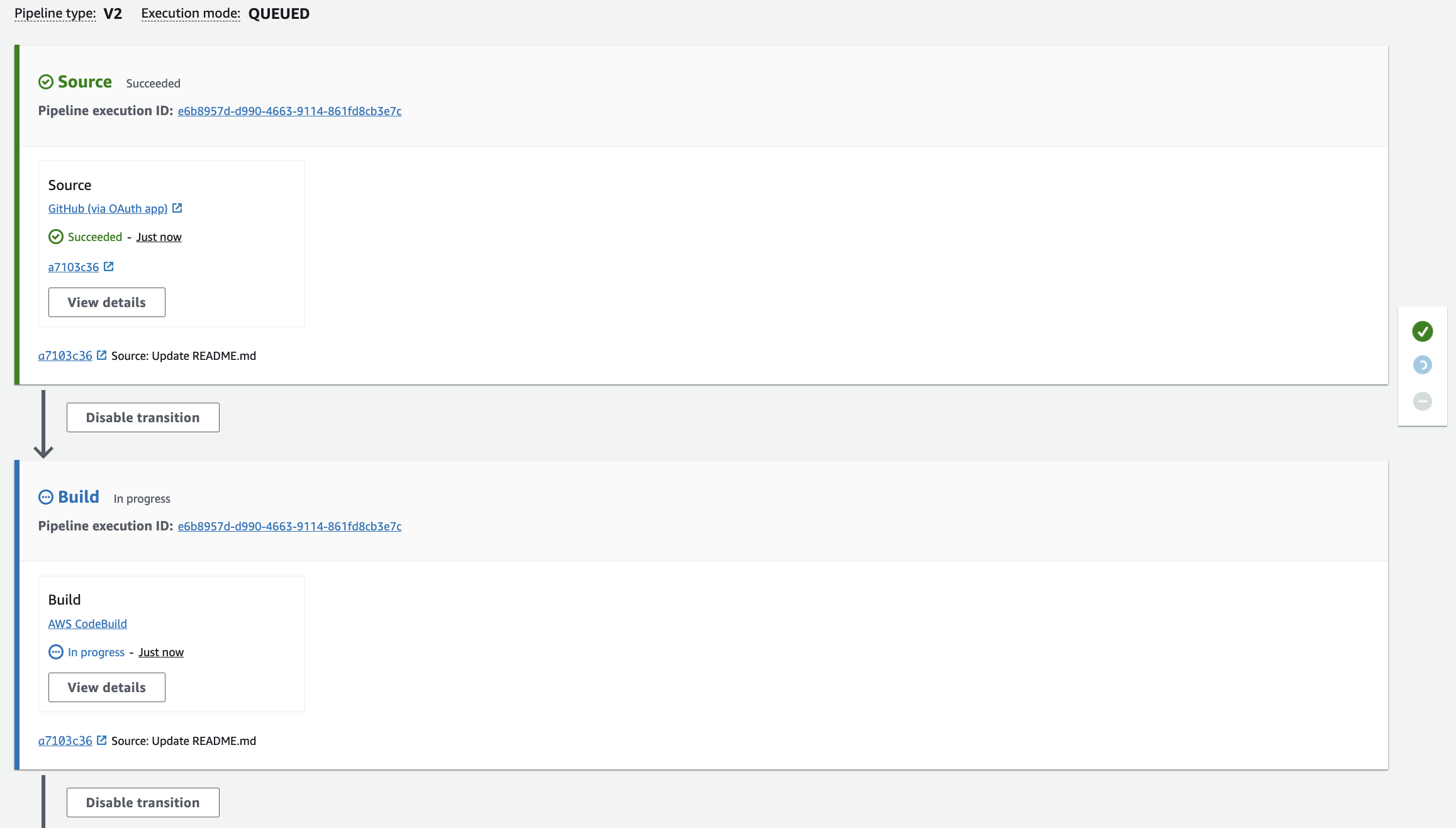
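In a pipeline definition, the deploy stage is a CodeDeploy action that names a CodeDeploy application and deployment group and consumes the build artifact. The deployment group, which decides which EC2 instances receive the revision, is created separately; the second function returns keyword arguments for the `create_deployment_group` call of boto3's `codedeploy` client, targeting instances by tag. All names, the role ARN, and the tag are placeholders, and the target instances must be running the CodeDeploy agent.

```python
def codedeploy_action(application: str, deployment_group: str) -> dict:
    """Action declaration for a CodeDeploy (EC2/on-premises) deployment."""
    return {
        "name": "Deploy",
        "actionTypeId": {
            "category": "Deploy",
            "owner": "AWS",
            "provider": "CodeDeploy",
            "version": "1",
        },
        "configuration": {
            "ApplicationName": application,
            "DeploymentGroupName": deployment_group,
        },
        "inputArtifacts": [{"name": "BuildOutput"}],
        "runOrder": 1,
    }


def ec2_deployment_group_request(
    application: str, deployment_group: str, service_role_arn: str, tag_key: str, tag_value: str
) -> dict:
    """Keyword arguments for codedeploy.create_deployment_group (boto3)."""
    return {
        "applicationName": application,
        "deploymentGroupName": deployment_group,
        "serviceRoleArn": service_role_arn,
        # Every EC2 instance carrying this tag belongs to the group.
        "ec2TagFilters": [{"Key": tag_key, "Value": tag_value, "Type": "KEY_AND_VALUE"}],
        # Built-in deployment configuration: update one instance at a time.
        "deploymentConfigName": "CodeDeployDefault.OneAtATime",
    }
```

With credentials configured, `boto3.client("codedeploy").create_deployment_group(**ec2_deployment_group_request("ml-app", "ml-ec2", role_arn, "Environment", "ml-staging"))` would create the group that `codedeploy_action("ml-app", "ml-ec2")` deploys to.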

4. Visualizing the Complete Pipeline

Once all stages are configured, your pipeline should look something like this:

Figure 4: Complete CodePipeline Workflow
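Put together, the three stages form a single pipeline declaration that can be passed to the CodePipeline `CreatePipeline` API, for example `boto3.client("codepipeline").create_pipeline(pipeline=PIPELINE)`. Because each stage finds its inputs by artifact name, a mistyped name is an easy error, so the sketch also includes a small local check that every input artifact is produced by an earlier stage. Every name and ARN is a placeholder, and the check deliberately ignores one valid case: an action consuming the output of a lower-`runOrder` action in the same stage.

```python
def action(name, category, provider, configuration, inputs=(), outputs=()):
    """Compact helper for an AWS-owned action declaration."""
    return {
        "name": name,
        "actionTypeId": {"category": category, "owner": "AWS", "provider": provider, "version": "1"},
        "configuration": configuration,
        "inputArtifacts": [{"name": n} for n in inputs],
        "outputArtifacts": [{"name": n} for n in outputs],
        "runOrder": 1,
    }


# Placeholder account ID, names, and ARNs: replace them with your own resources.
PIPELINE = {
    "name": "ml-pipeline",
    "roleArn": "arn:aws:iam::111122223333:role/ml-pipeline-service-role",
    "artifactStore": {"type": "S3", "location": "ml-pipeline-artifacts"},
    "stages": [
        {"name": "Source", "actions": [action(
            "Source", "Source", "CodeStarSourceConnection",
            {"ConnectionArn": "arn:aws:codestar-connections:us-east-1:111122223333:connection/EXAMPLE",
             "FullRepositoryId": "my-org/ml-project", "BranchName": "main"},
            outputs=["SourceOutput"])]},
        {"name": "Build", "actions": [action(
            "Build", "Build", "CodeBuild", {"ProjectName": "ml-build"},
            inputs=["SourceOutput"], outputs=["BuildOutput"])]},
        {"name": "Deploy", "actions": [action(
            "Deploy", "Deploy", "CodeDeploy",
            {"ApplicationName": "ml-app", "DeploymentGroupName": "ml-ec2"},
            inputs=["BuildOutput"])]},
    ],
}


def artifact_errors(pipeline: dict) -> list[str]:
    """List input artifacts that no earlier stage produces."""
    produced: set[str] = set()
    errors: list[str] = []
    for stage in pipeline["stages"]:
        for act in stage["actions"]:
            for artifact in act.get("inputArtifacts", []):
                if artifact["name"] not in produced:
                    errors.append(
                        f"{stage['name']}/{act['name']} reads '{artifact['name']}', "
                        "which no earlier stage produces"
                    )
        # In this simplified check, outputs become visible only to later stages.
        for act in stage["actions"]:
            produced.update(a["name"] for a in act.get("outputArtifacts", []))
    return errors


print(artifact_errors(PIPELINE))  # → []
```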

Best Practices for Using CodePipeline in MLOps

Version Control Everything: Ensure that your code, configuration files, and even data schemas are version-controlled.

Automate Testing: Incorporate automated tests to validate your models and code before deployment.

Monitor and Log: Use Amazon CloudWatch and other monitoring tools to track pipeline performance and detect issues early.

Secure Your Pipeline: Implement proper IAM roles and policies to secure access to your pipeline resources.
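For monitoring, CodePipeline emits events to Amazon EventBridge (formerly CloudWatch Events) whenever an execution changes state, so failures can be routed to an alert such as an SNS topic. The pattern below matches failed executions of one pipeline. The `matches` function implements only a tiny subset of EventBridge's matching rules (nested keys and lists of allowed values) so the pattern can be tested locally; the sample event is abridged and the pipeline name is a placeholder.

```python
def failure_alert_pattern(pipeline_name: str) -> dict:
    """EventBridge event pattern: failed executions of one pipeline."""
    return {
        "source": ["aws.codepipeline"],
        "detail-type": ["CodePipeline Pipeline Execution State Change"],
        "detail": {"pipeline": [pipeline_name], "state": ["FAILED"]},
    }


def matches(pattern: dict, event: dict) -> bool:
    """Tiny subset of EventBridge matching: nested keys, lists of allowed values."""
    for key, allowed in pattern.items():
        if key not in event:
            return False
        if isinstance(allowed, dict):
            if not isinstance(event[key], dict) or not matches(allowed, event[key]):
                return False
        elif event[key] not in allowed:
            return False
    return True


# Abridged example of the event CodePipeline emits (IDs are placeholders).
sample_event = {
    "source": "aws.codepipeline",
    "detail-type": "CodePipeline Pipeline Execution State Change",
    "detail": {"pipeline": "ml-pipeline", "execution-id": "example-id", "state": "FAILED"},
}
print(matches(failure_alert_pattern("ml-pipeline"), sample_event))  # → True
```

To create the rule, pass `json.dumps(failure_alert_pattern("ml-pipeline"))` as the `EventPattern` of an EventBridge `PutRule` call (`put_rule` on boto3's `events` client), then attach a target such as an SNS topic with `put_targets`.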

Conclusion

AWS CodePipeline is a powerful tool that can significantly enhance your MLOps and CI/CD workflows by automating the integration and deployment processes. By integrating CodePipeline with GitHub, CodeBuild, and CodeDeploy, you can create a seamless and efficient pipeline that ensures your machine learning models are consistently built, tested, and deployed with minimal manual intervention.

Stay tuned for our next chapter, where we will explore advanced pipeline configurations and how to optimize your CI/CD processes further.

Written by

Pranjal Chaubey

Hey! I'm Pranjal, and I'm currently pursuing a bachelor's degree in CSE with Artificial Intelligence and Machine Learning. I use these blogs to share my machine learning journey: I write articles about new things I learn and the projects I work on.