Implementing Blue-Green Deployment in Kubernetes with TLS Encryption Using Cert-Manager and Nginx Ingress

George Ezejiofor


Introduction

🌟 In modern cloud-native environments, ensuring zero-downtime deployments while maintaining robust security is critical. Blue-Green Deployment is a proven strategy that allows teams to switch traffic between different versions of an application seamlessly. Combined with TLS encryption for secure communication, this approach ensures a smooth and secure user experience.

🚀 In this guide, we’ll implement a Blue-Green Deployment in Kubernetes, utilizing Cert-Manager for automated TLS certificate management and Nginx Ingress for traffic routing. By the end of this project, you’ll have a production-ready setup that you can replicate in your own environments.

Tech Stack

🔧 Kubernetes: Cluster orchestration and management.

🔒 Cert-Manager: Automated TLS certificate management.

🌐 Nginx Ingress Controller: Routing HTTP(S) traffic to your services.

📦 Helm (optional): Simplifying deployments.

🛡️ Let's Encrypt: Free TLS certificates for HTTPS.

📡 MetalLB: LoadBalancer for bare-metal Kubernetes clusters.

💻 kubectl: Command-line tool for interacting with Kubernetes.

Prerequisites

✅ Kubernetes Cluster: I'm using MicroK8s on a bare-metal setup, with MetalLB providing LoadBalancer services.

✅ kubectl: Install and configure kubectl to interact with your cluster.

✅ Helm: Install Helm, the Kubernetes package manager, for simplified application deployment and configuration.

✅ Cert-Manager: Ensure Cert-Manager is installed in the cluster for TLS certificate management.

✅ Nginx Ingress Controller: Deploy the Nginx Ingress Controller to handle HTTP(S) traffic routing.

✅ Namespace Configuration: Create separate namespaces or labels for blue and green deployments.

✅ Domain Name: Set up a domain (here terranetes.com) whose blue and green subdomains point to your Ingress controller's LoadBalancer IP address. I used Cloudflare to manage my DNS.

✅ Let's Encrypt Account: Prepare for certificate issuance by having a valid email for Let's Encrypt configuration.

✅ Basic Networking Knowledge: Familiarity with Kubernetes networking concepts, including Ingress and Services.

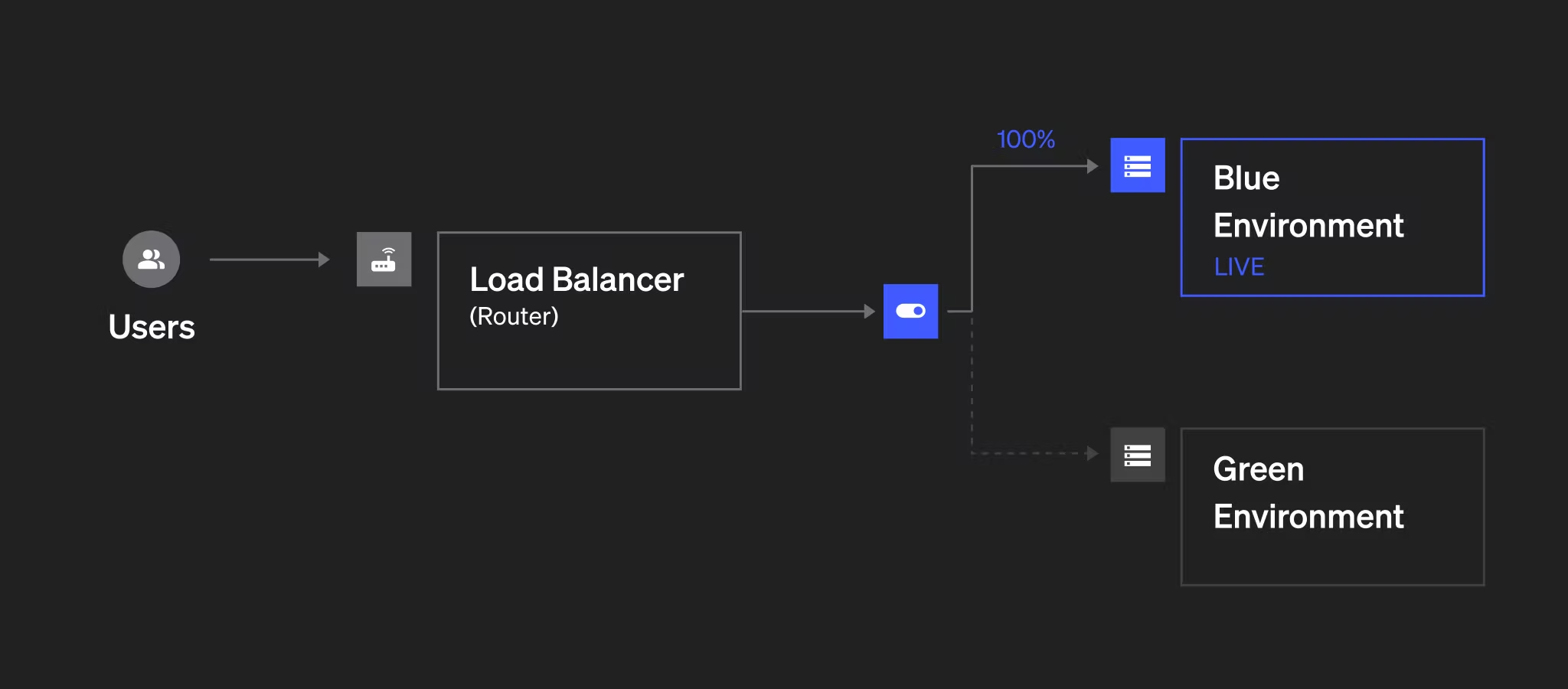

Architecture

DEPLOYMENTS

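Let's create the namespaces for the project and set a couple of environment variables:

```bash
# Cloudflare API token that cert-manager uses for DNS-01 validation
export CLOUDFLARE_API_KEY="xxxx change your token xxxxxxx"
# Email address used to register the Let's Encrypt account
export EMAIL="changeYOURemail@gmail.com"

echo $CLOUDFLARE_API_KEY
echo $EMAIL

kubectl create namespace blue-green
# Skip this if cert-manager is already installed in the cert-manager namespace
kubectl create namespace cert-manager
```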

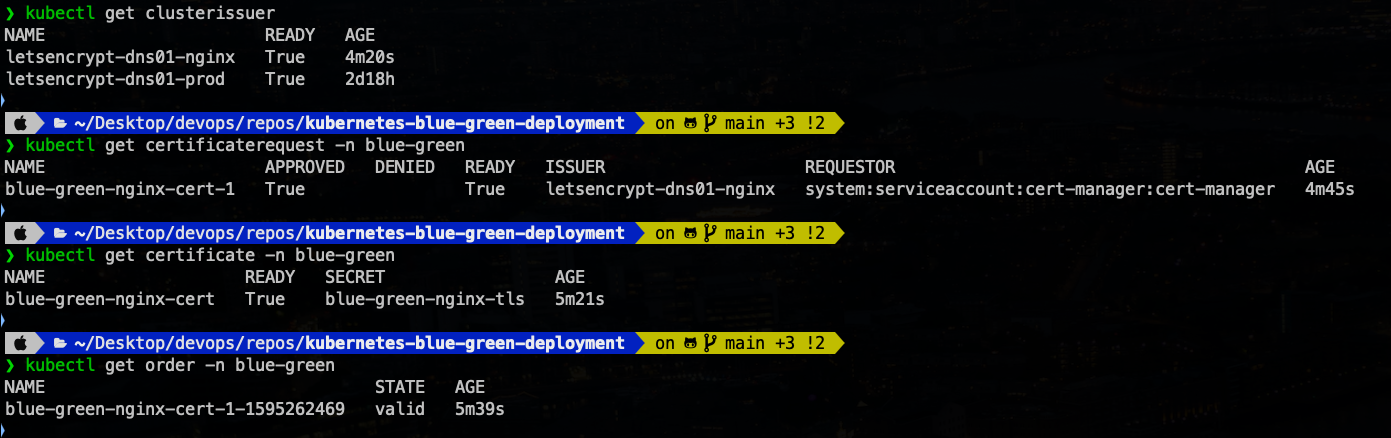
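You may prefer to keep everything in version-controlled manifests. In that case, the two namespaces can also be declared in YAML and applied with `kubectl apply -f`. This is a minimal sketch equivalent to the `kubectl create namespace` commands above. If cert-manager was installed beforehand, the `cert-manager` namespace already exists, and applying it again does no harm.

```yaml
# Declarative equivalent of the two `kubectl create namespace` commands
apiVersion: v1
kind: Namespace
metadata:
  name: blue-green
---
apiVersion: v1
kind: Namespace
metadata:
  name: cert-manager
```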

Deploy Certificate components

Create Cloudflare API Token Secret for cert-manager.

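```bash
# Create the Cloudflare API token secret that cert-manager will read
kubectl create secret generic cloudflare-api-token-cert-manager \
  --namespace cert-manager \
  --from-literal=api-token="$CLOUDFLARE_API_KEY"

# Verify that the secret was created
kubectl get secret cloudflare-api-token-cert-manager -n cert-manager -o yaml
```

A ClusterIssuer looks up the secrets it references in cert-manager's cluster resource namespace, which is `cert-manager` by default. That is why the secret lives there.

Create a ClusterIssuer with Cloudflare DNS-01 validation.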

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dns01-nginx
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: $EMAIL
    privateKeySecretRef:
      name: letsencrypt-dns01-private-nginx-key
    solvers:
      - dns01:
          cloudflare:
            email: $EMAIL
            apiTokenSecretRef:
              name: cloudflare-api-token-cert-manager
              key: api-token
```

kubectl does not expand `$EMAIL` inside a manifest. Either replace it with your address, or render the file with `envsubst` before applying it. For example, if you saved the manifest as clusterissuer.yaml: `envsubst < clusterissuer.yaml | kubectl apply -f -`.

Create a Certificate that references the ClusterIssuer `letsencrypt-dns01-nginx`. I want to create the certificate in the `blue-green` namespace.

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: blue-green-nginx-cert
  namespace: blue-green
spec:
  secretName: blue-green-nginx-tls # Secret that will hold the issued certificate; the Ingress references it
  duration: 2160h # 90 days
  renewBefore: 360h # 15 days
  isCA: false
  privateKey:
    algorithm: RSA
    encoding: PKCS1
    size: 4096
  issuerRef:
    name: letsencrypt-dns01-nginx
    kind: ClusterIssuer
    group: cert-manager.io
  dnsNames:
    - "blue.terranetes.com"
    - "green.terranetes.com"
```
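While you are still testing, it can help to validate the DNS-01 flow against Let's Encrypt's staging endpoint. Staging has higher rate limits but issues certificates that browsers do not trust. The sketch below is a second ClusterIssuer with the hypothetical name `letsencrypt-dns01-staging` that reuses the same Cloudflare secret. During testing, point the Certificate's `issuerRef.name` at it, then switch back to `letsencrypt-dns01-nginx` once issuance works.

```yaml
# Hypothetical staging issuer for testing; certificates it issues are NOT browser-trusted
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dns01-staging   # hypothetical name
spec:
  acme:
    # Let's Encrypt staging directory (higher rate limits, untrusted root)
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    email: $EMAIL   # replace with your address; kubectl does not expand $EMAIL
    privateKeySecretRef:
      name: letsencrypt-dns01-staging-key   # hypothetical secret for the staging ACME account key
    solvers:
      - dns01:
          cloudflare:
            apiTokenSecretRef:
              name: cloudflare-api-token-cert-manager
              key: api-token
```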

The green environment is my current live (default) environment.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: terranetes-nodegreen
  namespace: blue-green
  labels:
    app: terranetes-nodegreen
    version: green
spec:
  replicas: 3
  selector:
    matchLabels:
      app: terranetes-nodegreen
      version: green
  template:
    metadata:
      labels:
        app: terranetes-nodegreen
        version: green
    spec:
      containers:
        - name: terranetes-nodegreen
          image: georgeezejiofor/terranetes-nodegreen:green-v1
          imagePullPolicy: Always
          ports:
            - containerPort: 3000
          env:
            - name: VERSION
              value: "green"
            - name: HOSTNAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
---
apiVersion: v1
kind: Service
metadata:
  name: terranetes-nodegreen-svc
  namespace: blue-green
spec:
  selector:
    app: terranetes-nodegreen
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
```
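Zero downtime depends on Kubernetes sending traffic only to pods that are actually ready. The manifest above has no readiness probe, so a pod counts as ready as soon as its container starts. Below is a minimal sketch of a probe you could add to the container spec. It assumes the app answers HTTP GET `/` on port 3000 with a success status (200 to 399); that is an assumption, not something the images are confirmed to do. The same block would also apply to the blue Deployment.

```yaml
# Fragment: add under spec.template.spec.containers[0] of terranetes-nodegreen
# ASSUMPTION: the app returns a 2xx/3xx response for GET / on port 3000
readinessProbe:
  httpGet:
    path: /
    port: 3000
  initialDelaySeconds: 5   # wait before the first check
  periodSeconds: 10        # check every 10 seconds
  failureThreshold: 3      # mark the pod unready after 3 consecutive failures
```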

The blue environment is the new version that will take over live traffic.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: terranetes-nodeblue
  namespace: blue-green
  labels:
    app: terranetes-nodeblue
    version: blue
spec:
  replicas: 3
  selector:
    matchLabels:
      app: terranetes-nodeblue
      version: blue
  template:
    metadata:
      labels:
        app: terranetes-nodeblue
        version: blue
    spec:
      containers:
        - name: terranetes-nodeblue
          image: georgeezejiofor/terranetes-nodeblue:blue-v1
          imagePullPolicy: Always
          ports:
            - containerPort: 3000
          env:
            - name: VERSION
              value: "blue"
            - name: HOSTNAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
---
apiVersion: v1
kind: Service
metadata:
  name: terranetes-nodeblue-svc
  namespace: blue-green
spec:
  selector:
    app: terranetes-nodeblue
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
```

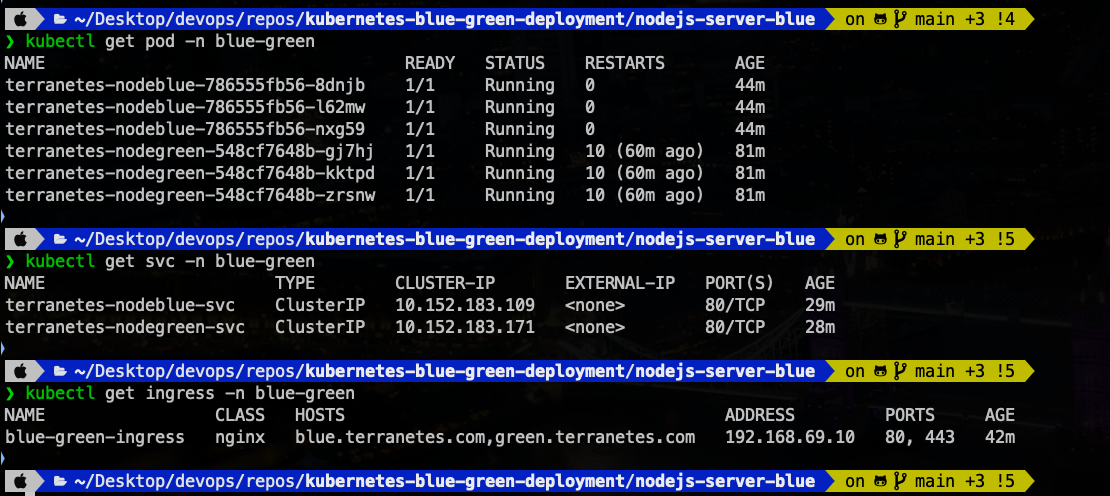

The services terranetes-nodeblue-svc and terranetes-nodegreen-svc give each environment a stable endpoint. Switching environments therefore comes down to choosing which service receives a hostname's traffic.

Both services use the default ClusterIP type, so they are reachable only from inside the cluster. I expose them through the LoadBalancer of my Nginx Ingress Controller and add the TLS configuration to the Ingress resource.
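A common variation of blue-green keeps a single Service in front of both environments and flips its label selector instead of editing the Ingress. The Deployments above already carry a `version` label, but their `app` labels differ. A sketch like the following therefore selects on `version` alone, using the hypothetical Service name `terranetes-live-svc`. It assumes no other pods in the `blue-green` namespace carry a `version: blue` or `version: green` label.

```yaml
# Hypothetical "live" Service: changing the selector switches all traffic behind it
apiVersion: v1
kind: Service
metadata:
  name: terranetes-live-svc   # hypothetical name, not part of the original setup
  namespace: blue-green
spec:
  selector:
    version: green   # change to "blue" to cut over; change back to roll back
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
```

With this design, an Ingress rule would point at `terranetes-live-svc`. The cut-over then becomes a one-line change to the Service rather than to the Ingress.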

Deploy Ingress resource to expose both services

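```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: blue-green-ingress
  namespace: blue-green
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-dns01-nginx
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - blue.terranetes.com
        - green.terranetes.com
      secretName: blue-green-nginx-tls
  rules:
    - host: blue.terranetes.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: terranetes-nodeblue-svc
                port:
                  number: 80
    - host: green.terranetes.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: terranetes-nodegreen-svc
                port:
                  number: 80
```

The Certificate created earlier already manages the `blue-green-nginx-tls` secret, so the `cert-manager.io/cluster-issuer` annotation is not strictly required here. With the annotation present, cert-manager's ingress-shim may also create its own Certificate for the same secret. You may therefore prefer to keep only one of the two mechanisms.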

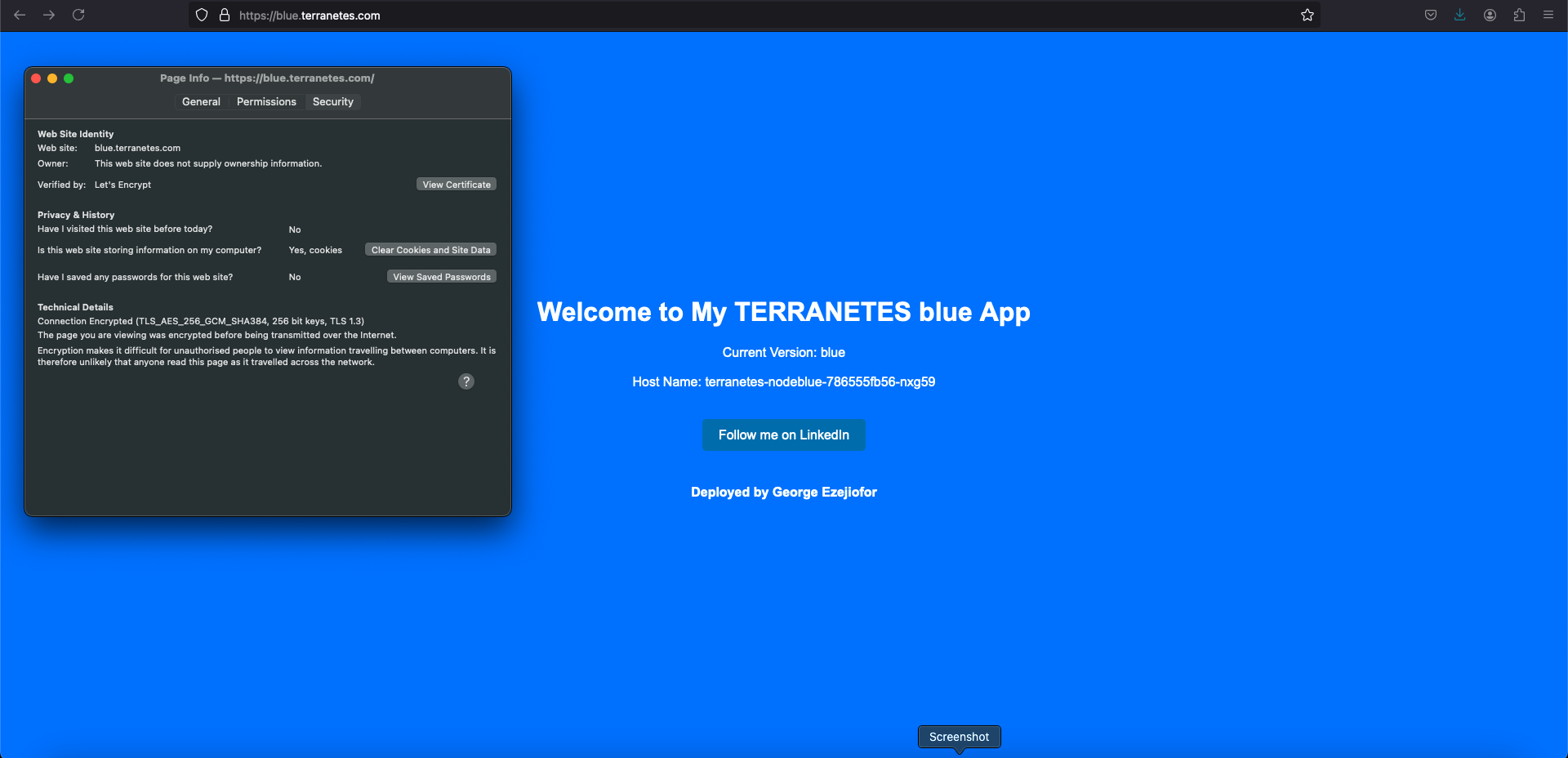

Initial Traffic Routing

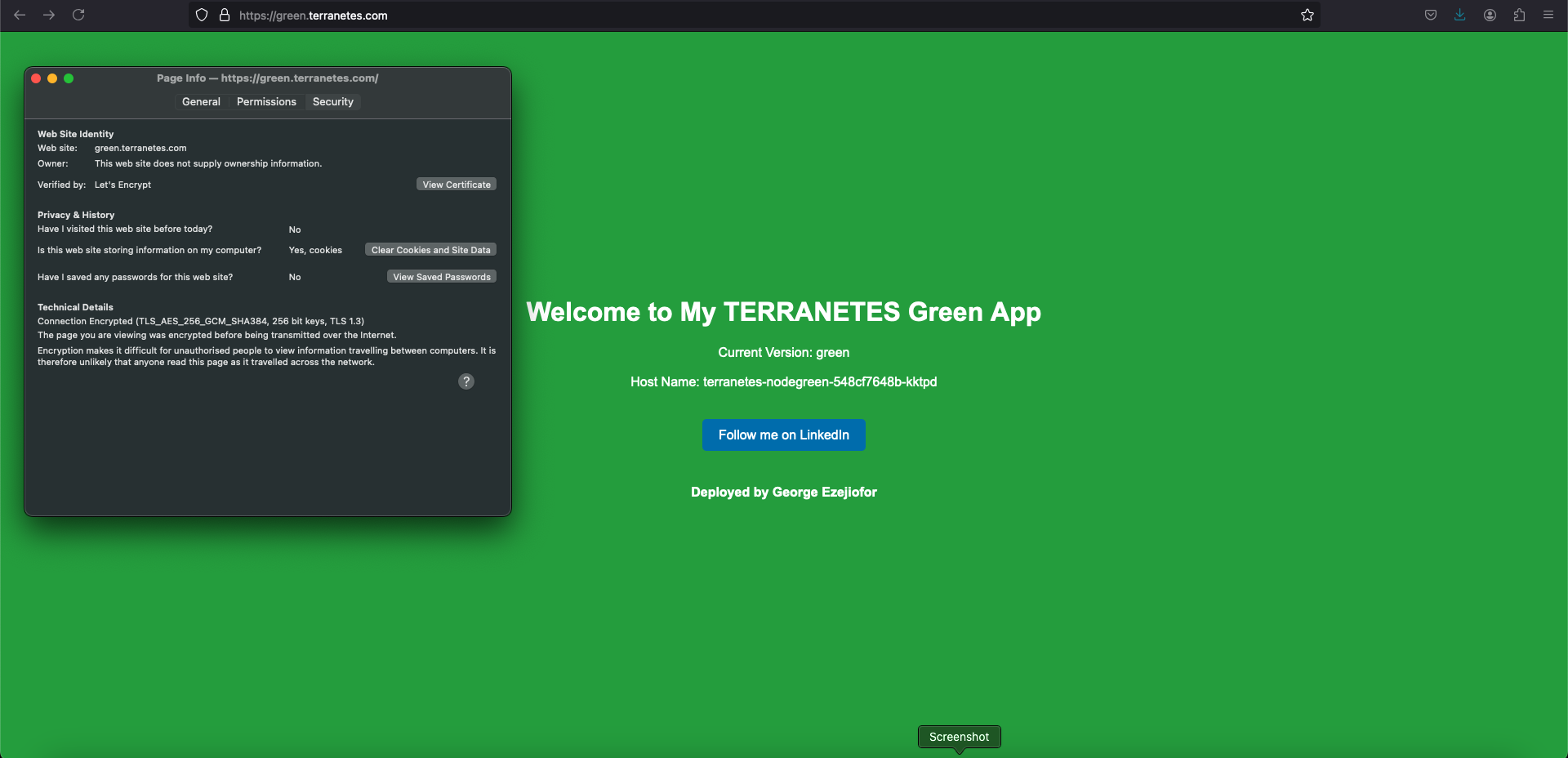

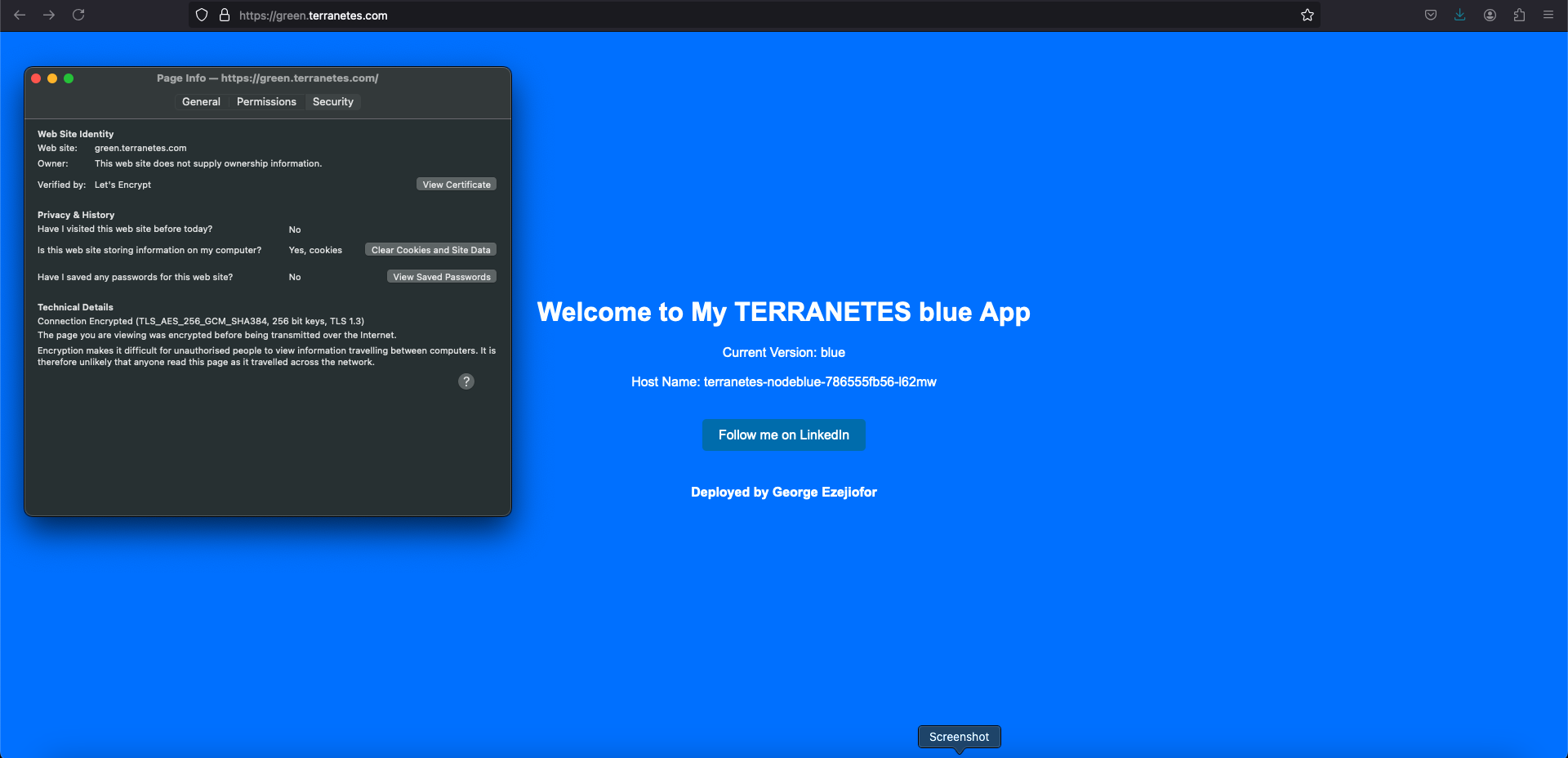

Currently, blue.terranetes.com serves the blue app and green.terranetes.com serves the green app, with the Ingress routing users by hostname. Now let's switch traffic from the green environment (live) to the blue environment (new). The switch happens in the Ingress resource, which acts as our router: we point the green.terranetes.com rule at the blue service.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: blue-green-ingress
  namespace: blue-green
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-dns01-nginx
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - blue.terranetes.com
        - green.terranetes.com
      secretName: blue-green-nginx-tls
  rules:
    - host: blue.terranetes.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: terranetes-nodeblue-svc
                port:
                  number: 80
    - host: green.terranetes.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: terranetes-nodeblue-svc # switched from terranetes-nodegreen-svc to the blue service
                port:
                  number: 80
```

After this change, green.terranetes.com also serves the blue app.

We have just moved the green.terranetes.com users from the green environment to the blue environment. The green Deployment keeps running untouched, so rolling back is as simple as pointing the green.terranetes.com rule back at terranetes-nodegreen-svc.

Rolling Back the Switch

You can roll back by modifying the Ingress again to restore the original routing:

blue.terranetes.com -> blue app

green.terranetes.com -> green app
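Concretely, rolling back means restoring the `green.terranetes.com` rule in `blue-green-ingress` to its original backend. Only this rule changes; the rest of the manifest stays as it is. Re-applying the original Ingress manifest from the "Deploy Ingress resource" step has the same effect.

```yaml
# Fragment of blue-green-ingress: the green host rule restored to the green service
spec:
  rules:
    # ... blue.terranetes.com rule unchanged ...
    - host: green.terranetes.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: terranetes-nodegreen-svc   # back to the green service
                port:
                  number: 80
```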

Optional: Weighted Traffic Splitting

If you want to gradually switch users from green to blue (or vice versa), consider implementing canary deployment or weighted routing using tools like:

NGINX Ingress canary annotations (ingress-nginx supports weight-based canary routing).

Istio or Traefik for advanced traffic management.

This allows for:

Gradual routing (e.g., 80% green, 20% blue).

Monitoring the behavior of users before full migration.
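With ingress-nginx, weighted routing can be sketched with its canary annotations. You create a second Ingress for the same host and mark it as a canary; it receives the given percentage of requests, and the main Ingress keeps the rest. This minimal sketch assumes the original `blue-green-ingress` is in place, with green.terranetes.com pointing at the green service. The Ingress name `green-to-blue-canary` is hypothetical. The sketch sends roughly 20% of green.terranetes.com traffic to the blue app.

```yaml
# Hypothetical canary Ingress: ~20% of green.terranetes.com requests go to the blue service
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: green-to-blue-canary   # hypothetical name
  namespace: blue-green
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"        # mark this Ingress as a canary of the main one
    nginx.ingress.kubernetes.io/canary-weight: "20"   # percentage of requests routed here
spec:
  ingressClassName: nginx
  rules:
    - host: green.terranetes.com   # must match the host in the main Ingress
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: terranetes-nodeblue-svc
                port:
                  number: 80
```

Raising `canary-weight` step by step (for example to 50, then 100) moves more users to blue. Deleting the canary Ingress returns all traffic to green.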

Conclusion 🎉

Through this guide, we’ve successfully implemented a Blue-Green Deployment strategy on Kubernetes with robust TLS encryption, utilizing Cert-Manager and Nginx Ingress. This architecture ensures zero-downtime deployments, seamless traffic switching, and enhanced security, making it a reliable choice for production environments.

By switching users between green and blue environments effortlessly, we’ve demonstrated the power of dynamic traffic management. Whether it’s for releasing new features or mitigating issues with instant rollbacks, this approach minimizes risk and enhances user experience.

Additionally, the option to incorporate weighted traffic splitting or canary deployments provides further flexibility for gradual rollouts, enabling better control and monitoring during transitions.

Key Takeaways 🗝️

Ease of Switching: ✨ Modify the Ingress resource to direct traffic instantly.

Enhanced Security: 🔒 Automated TLS certificates ensure secure communication.

Rollback Ready: 🔄 Revert traffic with minimal effort.

Scalability: 📈 Extend the setup to support more complex routing patterns like weighted traffic.

With this setup, you’re equipped to deploy applications confidently, ensuring both reliability and user satisfaction. Ready to try this out in your environment? 🚀

Happy Deploying! 🌟


Written by

George Ezejiofor


As a Senior DevSecOps Engineer, I’m dedicated to building secure, resilient, and scalable cloud-native infrastructures tailored for modern applications. With a strong focus on microservices architecture, I design solutions that empower development teams to deliver and scale applications swiftly and securely. I’m skilled in breaking down monolithic systems into agile, containerised microservices that are easy to deploy, manage, and monitor. Leveraging a suite of DevOps and DevSecOps tools—including Kubernetes, Docker, Helm, Terraform, and Jenkins—I implement CI/CD pipelines that support seamless deployments and automated testing. My expertise extends to security tools and practices that integrate vulnerability scanning, automated policy enforcement, and compliance checks directly into the SDLC, ensuring that security is built into every stage of the development process. Proficient in multi-cloud environments like AWS, Azure, and GCP, I work with tools such as Prometheus, Grafana, and ELK Stack to provide robust monitoring and logging for observability. I prioritise automation, using Ansible, GitOps workflows with ArgoCD, and IaC to streamline operations, enhance collaboration, and reduce human error. Beyond my technical work, I’m passionate about sharing knowledge through blogging, community engagement, and mentoring. I aim to help organisations realize the full potential of DevSecOps—delivering faster, more secure applications while cultivating a culture of continuous improvement and security awareness.