Boost Java Service Performance Using Async Non-Blocking IO

Rishav Paul

Table of contents

- Identifying the Performance Bottleneck

- Solution: Migrating to Asynchronous, Non-Blocking Patterns

- Introducing Type Safety with a Custom SafeAsyncResponse Class

- Testing and Performance Gains

- Discovering a Hidden Bug in the HTTP Client

- The Choice Between Virtual Threads and Traditional Async Code

- Conclusion

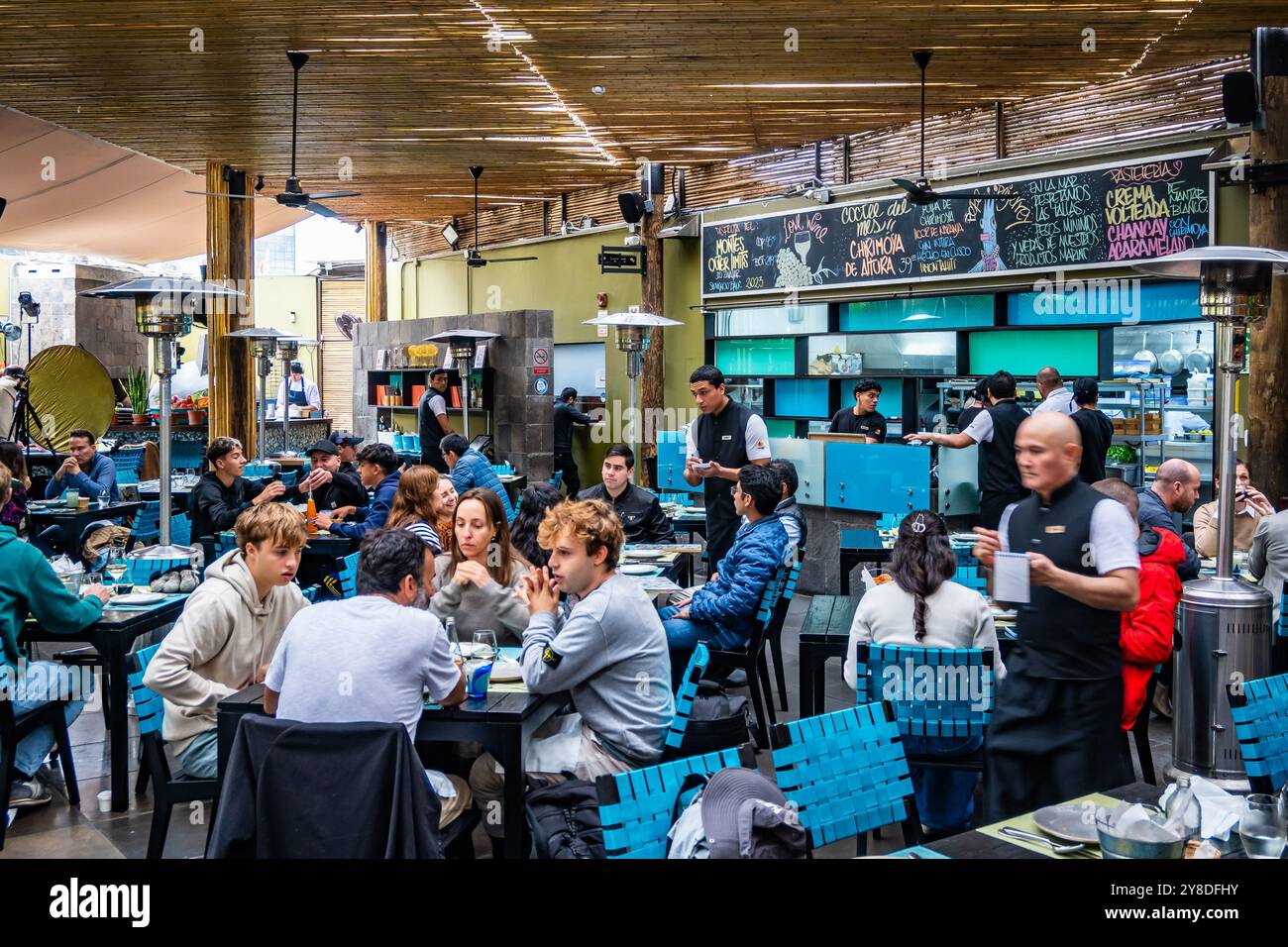

Imagine you're at a busy restaurant. You place your order at the counter and receive a pager that buzzes when your food is ready. While you wait, the staff serves other customers, and the kitchen works on multiple orders simultaneously. When your pager buzzes, you collect your food—this is non-blocking behavior, where no one is left idle waiting for one task to finish.

Now, contrast this with a scenario where you place your order and stand at the counter, waiting until the kitchen finishes before moving on. Meanwhile, the line behind you grows. This is blocking behavior, where progress halts until one task is fully complete.

In a recent project, I modernized an aging monolithic Java web service built on Dropwizard. The service runs code on behalf of a number of third-party (3P) clients through AWS Lambda functions, but its blocking architecture made it slow. It had a P99 latency of 20 seconds, and request threads stayed blocked while waiting for the serverless functions to complete. This blocking saturated the thread pool, leading to frequent request failures during peak traffic.

Identifying the Performance Bottleneck

The crux of the issue was that each request to a Lambda function occupied a request thread in the Java service. Since these 3P functions often took considerable time to complete, the threads handling them would remain blocked, consuming resources and limiting scalability. Here’s an example of what this blocking behavior looks like in code:

    // Blocking code example
    public String callLambdaService(String payload) {
        String response = externalLambdaService.invoke(payload);
        return response;
    }

In this example, the callLambdaService method waits until externalLambdaService.invoke() returns a response. Meanwhile, no other tasks can utilize the thread.
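To make the cost concrete, here is a minimal, self-contained sketch; it is not the service's actual code. A fixed pool of two threads stands in for the request thread pool, and `slowCall` is a hypothetical stand-in for the Lambda invocation. Because each call holds a thread for its full duration, four 100 ms calls on two threads need at least two rounds.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class BlockingSaturationDemo {

    // Hypothetical stand-in for a slow, blocking Lambda invocation.
    static String slowCall(String payload, long millis) {
        try {
            Thread.sleep(millis); // the calling thread is held for the whole wait
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return "done:" + payload;
    }

    // Runs `requests` blocking calls on `poolSize` threads; returns elapsed milliseconds.
    static long runBlocking(int poolSize, int requests, long callMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            long start = System.nanoTime();
            List<Future<String>> futures = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                String payload = "req-" + i;
                Callable<String> task = () -> slowCall(payload, callMillis);
                futures.add(pool.submit(task));
            }
            for (Future<String> future : futures) {
                future.get(); // wait for every request to finish
            }
            return (System.nanoTime() - start) / 1_000_000;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }
}
```

Calling `BlockingSaturationDemo.runBlocking(2, 4, 100)` should take roughly 200 ms or more, since only two calls can be in flight at once.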

Solution: Migrating to Asynchronous, Non-Blocking Patterns

To address these bottlenecks, I re-architected the service around asynchronous, non-blocking calls. The HTTP client that invokes the Lambda functions was switched to AsyncHttpClient from the org.asynchttpclient library, which is built on Netty and uses an EventLoopGroup internally to handle requests asynchronously.

Using AsyncHttpClient helped to offload blocking operations without consuming threads from the pool. Here’s an example of what the updated non-blocking call looks like:

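    // Non-blocking code example
    // Assumes the function is reached with an HTTP POST; lambdaUrl is a placeholder.
    public CompletableFuture<String> callLambdaServiceAsync(String payload) {
        return asyncHttpClient.preparePost(lambdaUrl)
                .setBody(payload)
                .execute()               // returns immediately; I/O runs on the event loop
                .toCompletableFuture()
                .thenApply(Response::getResponseBody);
    }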
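The principle behind an event-loop client can be illustrated without any HTTP library. In the sketch below, which is an illustration and not AsyncHttpClient's implementation, a single scheduler thread plays the role of the event loop, and the hypothetical `slowCallAsync` returns a future that is completed by a callback when the simulated response arrives. No thread is parked per in-flight call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

final class EventLoopSketch {

    // Hypothetical async call: the "response" arrives after `millis`, and the
    // timer thread completes the future instead of a caller thread waiting.
    static CompletableFuture<String> slowCallAsync(
            ScheduledExecutorService timer, String payload, long millis) {
        CompletableFuture<String> future = new CompletableFuture<>();
        timer.schedule(() -> { future.complete("done:" + payload); }, millis, TimeUnit.MILLISECONDS);
        return future;
    }

    // Starts `requests` calls at once on one timer thread; returns elapsed milliseconds.
    static long runNonBlocking(int requests, long callMillis) {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        try {
            long start = System.nanoTime();
            List<CompletableFuture<String>> futures = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                futures.add(slowCallAsync(timer, "req-" + i, callMillis));
            }
            // Wait for every simulated response before stopping the clock.
            CompletableFuture.allOf(futures.toArray(new CompletableFuture<?>[0])).join();
            return (System.nanoTime() - start) / 1_000_000;
        } finally {
            timer.shutdown();
        }
    }
}
```

On an idle machine, `EventLoopSketch.runNonBlocking(50, 100)` should finish in little more than 100 ms, because all 50 waits overlap even though only one thread is involved.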

Leveraging Java's CompletableFuture for Chaining Async Calls

In addition to making individual calls non-blocking, I chained multiple dependency calls using CompletableFuture. With methods like thenCombine and thenApply, I could asynchronously fetch and combine data from multiple sources, significantly boosting throughput.

    CompletableFuture<String> future1 = callLambdaServiceAsync(payload1);
    CompletableFuture<String> future2 = callLambdaServiceAsync(payload2);

    CompletableFuture<String> combinedResult = future1.thenCombine(future2, (result1, result2) -> {
        return processResults(result1, result2);
    });
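A self-contained sketch of the same pattern follows. The `combine` helper and its string formatting are hypothetical, and the `exceptionally` fallback is an addition for illustration: the article does not say how failures were handled.

```java
import java.util.concurrent.CompletableFuture;

final class ChainingSketch {

    // Merges two async results, post-processes the merged value, and falls back
    // to a default if either input (or a later stage) fails.
    static CompletableFuture<String> combine(
            CompletableFuture<String> first, CompletableFuture<String> second) {
        return first
                .thenCombine(second, (r1, r2) -> r1 + "+" + r2) // runs once both complete
                .thenApply(merged -> "[" + merged + "]")        // transforms the merged value
                .exceptionally(error -> "fallback");            // recovers from any failure above
    }
}
```

None of these stages blocks the calling thread; the caller gets a future back immediately and the lambdas run when their inputs are ready.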

Introducing Type Safety with a Custom SafeAsyncResponse Class

During implementation, I observed that the JAX-RS AsyncResponse object lacks type safety: its resume method accepts any Object, so arbitrary Java objects can be passed back. To address this, I created a generic SafeAsyncResponse class, which ensures that only the specified response type can be returned, improving maintainability and reducing the risk of runtime errors. The class also logs an error if a response is written more than once.

    import java.util.concurrent.atomic.AtomicInteger;
    import java.util.logging.Logger;
    // Use jakarta.ws.rs.container.AsyncResponse instead on Jakarta-based stacks.
    import javax.ws.rs.container.AsyncResponse;

    public class SafeAsyncResponse<T> {

        private static final Logger LOGGER = Logger.getLogger(SafeAsyncResponse.class.getName());

        private final AsyncResponse asyncResponse;
        private final AtomicInteger invocationCount = new AtomicInteger(0);

        private SafeAsyncResponse(AsyncResponse asyncResponse) {
            this.asyncResponse = asyncResponse;
        }

        /**
         * Factory method to create a SafeAsyncResponse from an AsyncResponse.
         *
         * @param asyncResponse the AsyncResponse to wrap
         * @param <T> the type of the response
         * @return a new instance of SafeAsyncResponse
         */
        public static <T> SafeAsyncResponse<T> from(AsyncResponse asyncResponse) {
            return new SafeAsyncResponse<>(asyncResponse);
        }

        /**
         * Resume the async response with a successful result.
         *
         * @param response the successful response of type T
         */
        public void withSuccess(T response) {
            if (invocationCount.incrementAndGet() > 1) {
                logError("withSuccess");
                return;
            }
            asyncResponse.resume(response);
        }

        /**
         * Resume the async response with an error.
         *
         * @param error the throwable representing the error
         */
        public void withError(Throwable error) {
            if (invocationCount.incrementAndGet() > 1) {
                logError("withError");
                return;
            }
            asyncResponse.resume(error);
        }

        /**
         * Logs an error message indicating multiple invocations.
         *
         * @param methodName the name of the method that was invoked multiple times
         */
        private void logError(String methodName) {
            LOGGER.severe(() -> String.format(
                "SafeAsyncResponse method '%s' invoked more than once. Ignoring subsequent invocations.", methodName
            ));
        }
    }

Sample Usage of SafeAsyncResponse

    @GET
    @Path("/example")
    public void exampleEndpoint(@Suspended AsyncResponse asyncResponse) {
        SafeAsyncResponse<String> safeResponse = SafeAsyncResponse.from(asyncResponse);

        // Simulate success
        safeResponse.withSuccess("Operation successful!");

        // Simulate repeated invocations: both calls below are logged and ignored,
        // because the response has already been resumed.
        safeResponse.withError(new RuntimeException("This should not be processed"));
        safeResponse.withSuccess("This will be ignored");
    }
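In a real endpoint the response is usually completed from a future's callback, not inline. The sketch below shows one way to wire that up. To stay self-contained it uses a hypothetical `ResponseSink<T>` interface that mirrors the two methods of `SafeAsyncResponse<T>`; the `AsyncBridge` helper is likewise an assumption, not part of the original service.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;

// Hypothetical stand-in exposing the same two methods as SafeAsyncResponse<T>.
interface ResponseSink<T> {
    void withSuccess(T response);
    void withError(Throwable error);
}

final class AsyncBridge {

    // Forwards the future's outcome to the sink from whichever thread completes it.
    static <T> void complete(CompletableFuture<T> future, ResponseSink<T> sink) {
        future.whenComplete((result, error) -> {
            if (error == null) {
                sink.withSuccess(result);
                return;
            }
            // Dependent stages wrap failures in CompletionException; unwrap for the caller.
            Throwable cause = (error instanceof CompletionException && error.getCause() != null)
                    ? error.getCause()
                    : error;
            sink.withError(cause);
        });
    }
}
```

With the real class, the two branches would call `safeResponse.withSuccess(...)` and `safeResponse.withError(...)`, so the request thread returns right after registering the callback.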

Testing and Performance Gains

To verify the effectiveness of these changes, I wrote load tests that use virtual threads to drive the maximum possible throughput from a single machine. I simulated serverless function execution times ranging from 1 to 20 seconds and found that the new async non-blocking implementation increased throughput by 8x for shorter execution times and by about 4x for longer ones.

In setting up these load tests, I made sure to adjust client-level connection limits to maximize throughput, which is essential to avoid bottlenecks in asynchronous systems.
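A throughput measurement of this kind can be sketched in a few lines. The helper below is hypothetical and not the author's harness: it submits a batch of calls to whatever `ExecutorService` it is given and reports requests per second. On Java 21 or later, passing `Executors.newVirtualThreadPerTaskExecutor()` gives one virtual thread per simulated client.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;

final class LoadTestSketch {

    // Submits `requests` copies of `call`, waits for all of them to finish,
    // and returns the observed throughput in requests per second.
    static <T> double measureThroughput(ExecutorService executor, int requests, Callable<T> call) {
        long start = System.nanoTime();
        List<Future<T>> inFlight = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            inFlight.add(executor.submit(call));
        }
        try {
            for (Future<T> future : inFlight) {
                future.get();
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("load test call failed", e);
        }
        double elapsedSeconds = (System.nanoTime() - start) / 1_000_000_000.0;
        return requests / elapsedSeconds;
    }
}
```

The `call` argument would be a request against the service under test; varying how long the simulated function takes then shows how throughput changes with execution time.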

Discovering a Hidden Bug in the HTTP Client

While running these stress tests, I discovered a hidden bug in our custom HTTP client. The client used a semaphore with a connection timeout set to Integer.MAX_VALUE, meaning if the client ran out of available connections, it would block the thread indefinitely. Resolving this bug was crucial to prevent potential deadlocks in high-load scenarios.
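The client's code is not shown here, so the following is only a sketch of the general fix: acquire the permit with a bounded timeout and fail fast instead of parking the thread. The class name, the `tryEnter` and `leave` methods, and the timeout value are all hypothetical.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

final class BoundedConnectionGate {
    private final Semaphore permits;
    private final long acquireTimeoutMillis;

    BoundedConnectionGate(int maxConnections, long acquireTimeoutMillis) {
        this.permits = new Semaphore(maxConnections);
        this.acquireTimeoutMillis = acquireTimeoutMillis;
    }

    // Returns true if a connection slot was obtained within the timeout;
    // returns false instead of waiting indefinitely when the pool is exhausted.
    boolean tryEnter() {
        try {
            return permits.tryAcquire(acquireTimeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    // Hands the slot back once the request has finished.
    void leave() {
        permits.release();
    }
}
```

A caller that gets `false` from `tryEnter()` can reject the request or surface a timeout error, which keeps the thread available for other work.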

The Choice Between Virtual Threads and Traditional Async Code

One might wonder why we didn’t simply switch to virtual threads, which reduce the need for asynchronous code by letting threads block without a significant resource cost. However, virtual threads currently have a limitation: they are pinned while inside synchronized code. When a virtual thread blocks inside a synchronized block or method, it cannot unmount from its carrier thread, so the underlying OS thread stays occupied until the operation completes.

For example:

    synchronized byte[] getData() {
        byte[] buf = ...;
        int nread = socket.getInputStream().read(buf); // Can block here
        ...
    }

In this code, if read blocks because there’s no data available, the virtual thread is pinned to an OS thread, preventing it from unmounting and blocking the OS thread as well.
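A commonly recommended workaround on JDK versions with this limitation is to guard the blocking call with a `java.util.concurrent.locks.ReentrantLock` instead of `synchronized`, since a virtual thread that blocks while holding such a lock can still unmount. A minimal sketch, where `LockedReader` and the `maxBytes` parameter are hypothetical:

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.concurrent.locks.ReentrantLock;

final class LockedReader {
    private final ReentrantLock lock = new ReentrantLock();
    private final InputStream in;

    LockedReader(InputStream in) {
        this.in = in;
    }

    // Provides the same mutual exclusion as a synchronized method.
    byte[] getData(int maxBytes) {
        lock.lock();
        try {
            byte[] buf = new byte[maxBytes];
            int nread = in.read(buf); // can block here, as in the synchronized version
            return nread <= 0 ? new byte[0] : Arrays.copyOf(buf, nread);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            lock.unlock(); // always release, even if read() throws
        }
    }
}
```

This only helps for code you control; synchronized blocks inside third-party libraries still pin until the library or the JDK changes.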

Fortunately, with JEP 491 (Synchronize Virtual Threads without Pinning) on the horizon, Java developers can look forward to improved behavior for virtual threads, where blocking operations in synchronized code no longer hold on to a platform thread.

Conclusion

By refactoring our service to an async non-blocking architecture, we achieved significant performance improvements. Adopting AsyncHttpClient, introducing SafeAsyncResponse for type safety, and load testing the result let us raise throughput by roughly 4x to 8x, depending on function execution time. This project was a valuable exercise in modernizing monolithic applications and showed how much proper async practices matter for scalability.

As Java evolves, we may be able to leverage virtual threads more effectively in the future, but for now, async and non-blocking architecture remains an essential approach for performance optimization in high-latency, third-party-dependent services.
