End-to-End DevOps for a Golang Web App: Docker, EKS, AWS CI/CD

Amit Maurya

In this article, we will deploy a Golang application end to end on AWS EKS (Elastic Kubernetes Service) using AWS CI/CD services. Working through it will sharpen your DevOps skills, and you can add the project to your resume.

Before heading to the Introduction, do read my latest blogs:

Step-by-Step Guide: How to Create and Mount AWS EFS (Elastic File System)

Deploying Your Website on AWS S3 with Terraform

Learn How to Deploy Scalable 3-Tier Applications with AWS ECS

Introduction

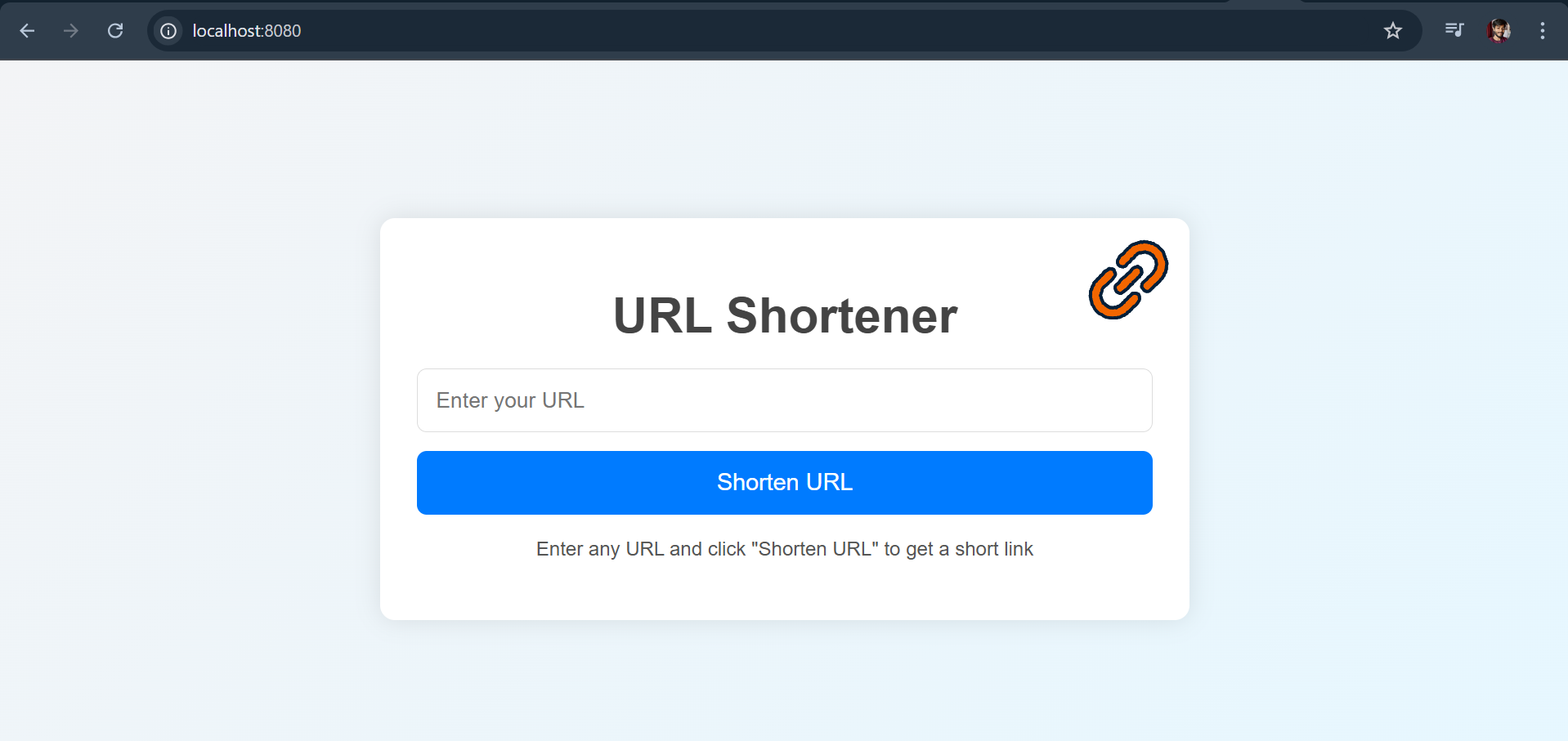

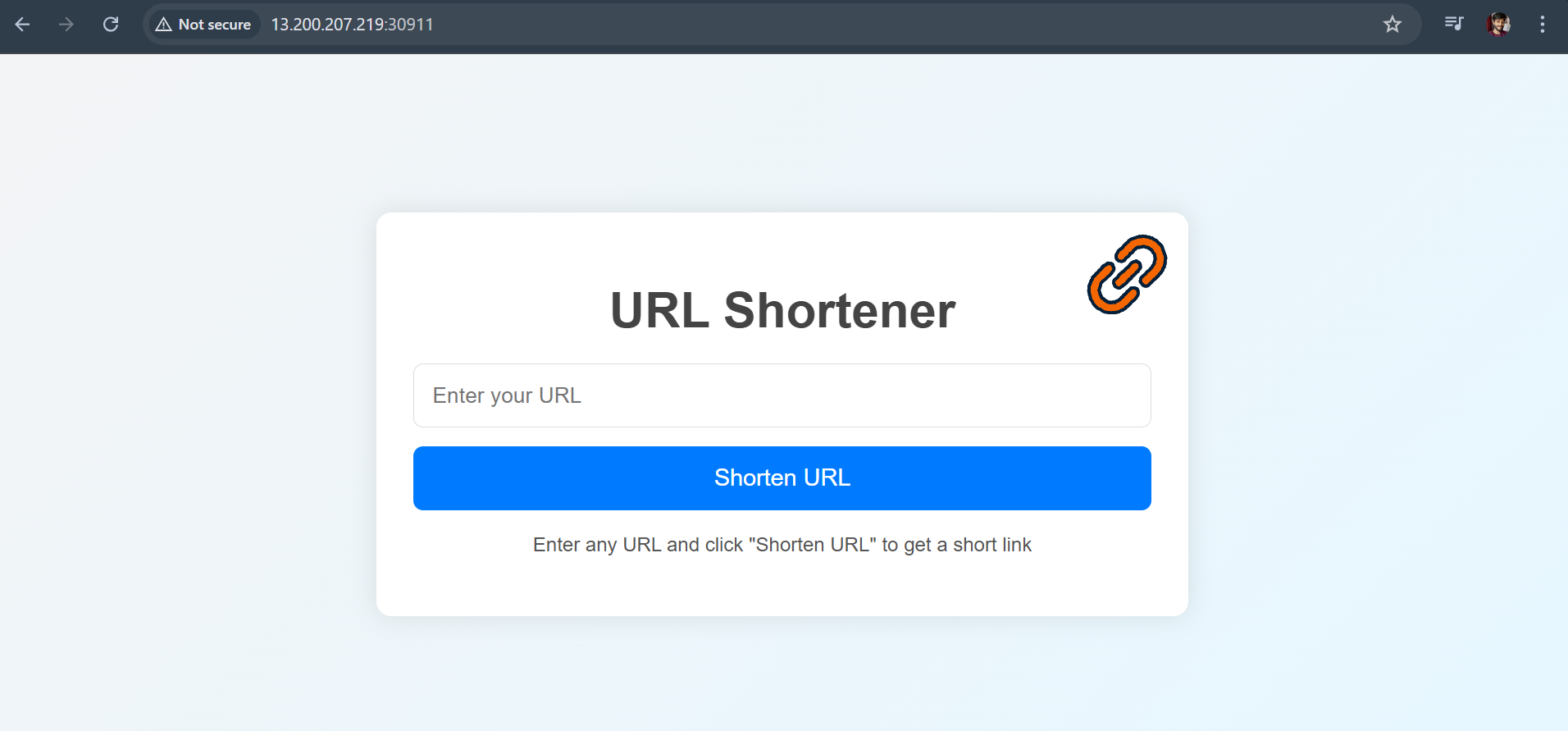

Let's start by understanding the project. We have a simple Golang application that shortens URLs. It consists of a main.go file and a single index.html that serves as the frontend.

Running locally, it is available at http://localhost:8080 and looks like this. To run it locally, we first need to create the go.mod file, which is used for dependency management.

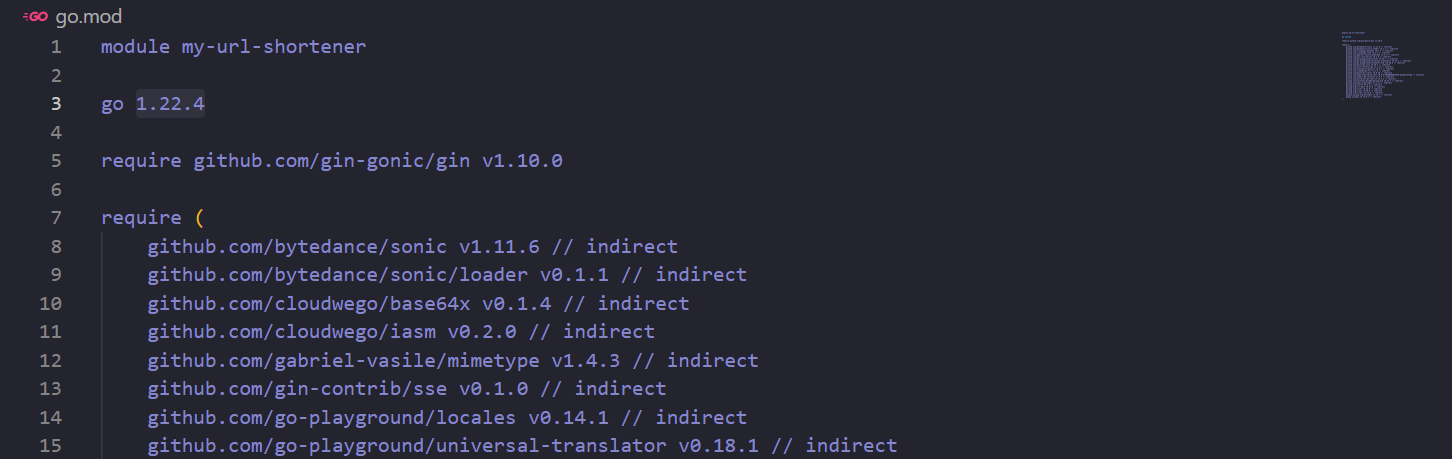

go mod init my-url-shortener

go mod tidy

go get github.com/gin-gonic/gin

These commands generate the go.mod and go.sum files. The go.mod file lists the dependencies used in the code along with the Golang version. The go get command installs gin, an HTTP web framework.

After this, run main.go:

go run main.go
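The article does not list main.go, so here is a minimal sketch of what a URL shortener of this kind might look like. It is an illustration under stated assumptions, not the project's code: it uses only the standard library net/http instead of gin, and the store type, the shortCode function and the /shorten and /r/ routes are hypothetical names. To stay runnable without binding a port, main exercises the handlers in-process with httptest.

```go
package main

import (
	"crypto/sha256"
	"encoding/base64"
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/url"
	"strings"
	"sync"
)

// store is a hypothetical in-memory map from short code to original URL.
type store struct {
	mu   sync.RWMutex
	urls map[string]string
}

func newStore() *store { return &store{urls: make(map[string]string)} }

// shortCode derives a deterministic 7-character code from the long URL.
func shortCode(long string) string {
	sum := sha256.Sum256([]byte(long))
	return base64.RawURLEncoding.EncodeToString(sum[:])[:7]
}

// put saves the URL and returns its short code.
func (s *store) put(long string) string {
	code := shortCode(long)
	s.mu.Lock()
	defer s.mu.Unlock()
	s.urls[code] = long
	return code
}

// get looks up the original URL for a short code.
func (s *store) get(code string) (string, bool) {
	s.mu.RLock()
	defer s.mu.RUnlock()
	long, ok := s.urls[code]
	return long, ok
}

// newMux wires two assumed routes: POST /shorten and GET /r/<code>.
func newMux(s *store) *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/shorten", func(w http.ResponseWriter, r *http.Request) {
		long := r.FormValue("url")
		if r.Method != http.MethodPost || long == "" {
			http.Error(w, "POST a non-empty url field", http.StatusBadRequest)
			return
		}
		fmt.Fprintf(w, "/r/%s", s.put(long))
	})
	mux.HandleFunc("/r/", func(w http.ResponseWriter, r *http.Request) {
		long, ok := s.get(strings.TrimPrefix(r.URL.Path, "/r/"))
		if !ok {
			http.NotFound(w, r)
			return
		}
		http.Redirect(w, r, long, http.StatusFound)
	})
	return mux
}

func main() {
	mux := newMux(newStore())

	// Exercise the handlers in-process. A real server would instead call
	// http.ListenAndServe(":8080", mux).
	form := url.Values{"url": {"https://example.com/a/very/long/path"}}
	req := httptest.NewRequest(http.MethodPost, "/shorten", strings.NewReader(form.Encode()))
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	rec := httptest.NewRecorder()
	mux.ServeHTTP(rec, req)
	fmt.Println("short path:", rec.Body.String())

	// Follow the short path and show where it redirects.
	rec2 := httptest.NewRecorder()
	mux.ServeHTTP(rec2, httptest.NewRequest(http.MethodGet, rec.Body.String(), nil))
	fmt.Println("redirect:", rec2.Code, rec2.Header().Get("Location"))
}
```

Hashing the URL keeps the code deterministic, so shortening the same URL twice yields the same link. A production service would also need collision handling and persistent storage.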

Tools and Technologies Used

Golang Programming Language

Docker for Containerization

Kubernetes for Orchestration

CodePipeline and CodeBuild

Argo CD for Continuous Deployments

Multi-Stage Docker Build

As DevOps engineers, our responsibility is to automate everything and aim for zero downtime. It all starts with the Dockerfile, where we compile the code and its dependencies into a single binary and run it. When writing a Dockerfile, keep the image small and follow best practices, such as using slim or distroless base images.

For our Golang code, we are building a multi-stage Dockerfile that reduces the image size from about 1 GB to 33 MB! To learn about best practices for creating a Dockerfile, follow this article:

Dockerfile Best Practices: How to Optimize Your Docker Images

Creating Dockerfile

FROM golang:1.23 AS base

WORKDIR /app

COPY go.mod ./

RUN go mod download

COPY . .

RUN go build -o main .

FROM gcr.io/distroless/base

COPY --from=base /app/main .

COPY --from=base /app/static ./static

EXPOSE 8080

CMD ["./main"]

This Dockerfile has two stages. The first stage, named base, builds the binary by running go build -o main . in the /app working directory. The second stage uses the distroless image gcr.io/distroless/base and copies only the main binary from the first stage with COPY --from=base. A second COPY --from=base brings over the static folder, which contains index.html. Finally, the CMD instruction runs the main binary.

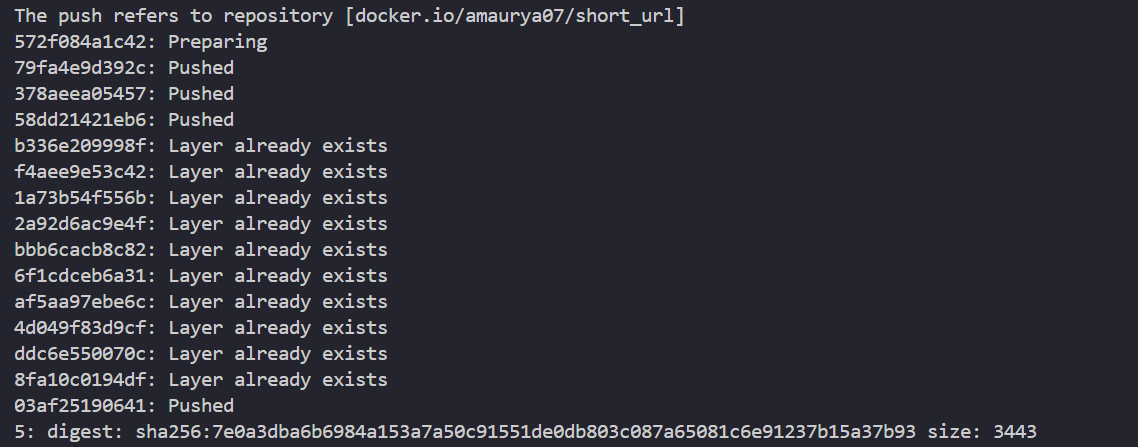

Now, let's build the image from the Dockerfile and push it to DockerHub.

docker build -t amaurya07/short_url:1 .

docker push amaurya07/short_url:1

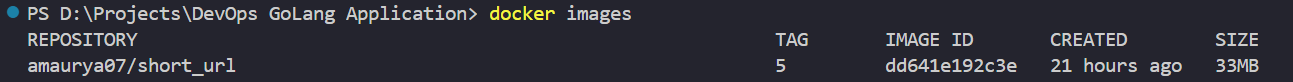

Remember to replace the tag with your own Docker Hub username/short_url followed by a version number. After building the Docker image, we can confirm that its size is down to 33 MB.

docker images

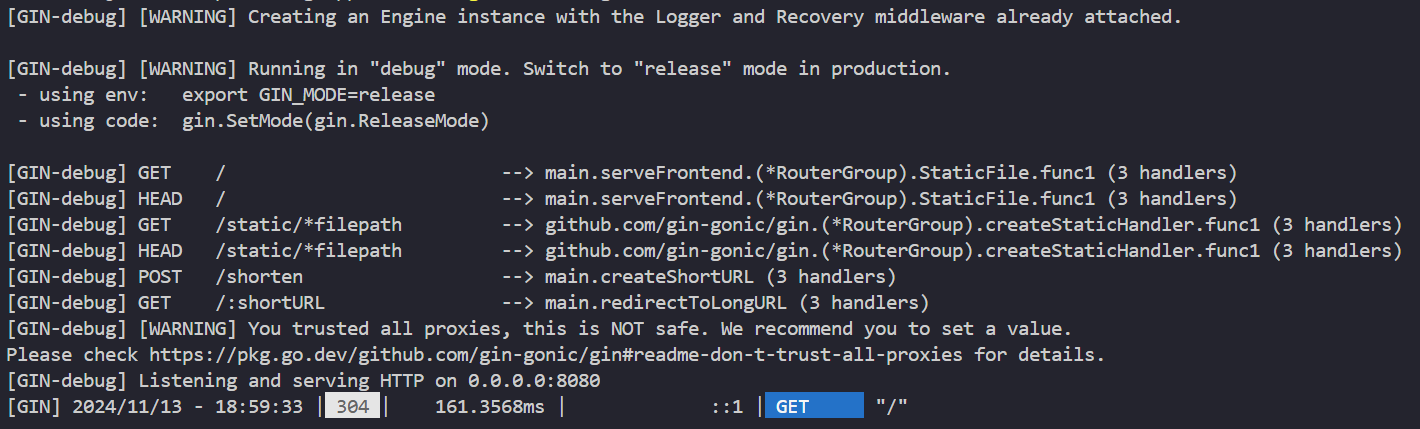

Now check that the Docker image runs successfully: create a container and bind port 8080.

docker run -p 8080:8080 amaurya07/short_url:1

Open http://localhost:8080 in the browser and the Shorten URL page will load.

Creating Kubernetes Manifests

We have created the Dockerfile, tested the image by running a container, and pushed it to DockerHub, which completes the first stage. Next, we deploy this image on an EKS (Elastic Kubernetes Service) cluster. For this we need Kubernetes manifest files: deployment.yaml, service.yaml and ingress.yaml.

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: shorturl
spec:
  selector:
    matchLabels:
      app: shorturl
  template:
    metadata:
      labels:
        app: shorturl
    spec:
      containers:
      - name: shortenurlapp
        image: amaurya07/short_url:1
        resources:
          limits:
            memory: "128Mi"
            cpu: "500m"
        ports:
        - containerPort: 8080

This deployment.yaml creates one pod (the default replica count) from the image amaurya07/short_url:1, listening on container port 8080.

service.yaml

apiVersion: v1
kind: Service
metadata:
  name: shorturl
spec:
  selector:
    app: shorturl
  ports:
  - port: 8080
    targetPort: 8080
  type: NodePort

To access the application from outside the cluster, we create a Service of type NodePort, which assigns a port from the default range 30000 to 32767.

ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shorturl
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: shorturl
            port:
              number: 8080

In ingress.yaml we define the ALB (Application Load Balancer) annotations, which request an internet-facing Application Load Balancer with target type ip, and set ingressClassName to alb. Before this can work, we need to install an Ingress controller, which watches Ingress resources and provisions the load balancer.

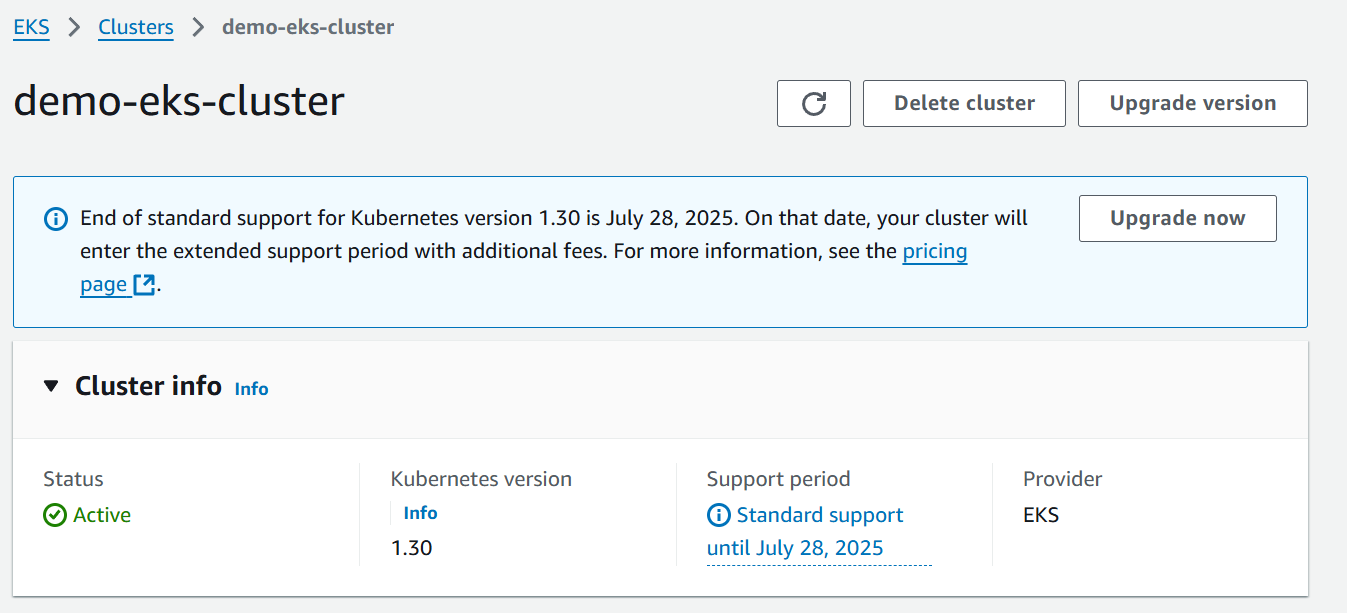

Steps to create EKS Cluster

AWS provides a managed Kubernetes cluster, i.e., EKS. With this, we can configure a Kubernetes managed cluster in minutes on AWS. In AWS, we don't need to manage the Control Plane nodes like provisioning, scaling, and management of the Kubernetes control plane node as they are managed by AWS. We only need to manage the worker nodes if we create self-managed node groups, i.e., EC2; otherwise, in Fargate, it becomes serverless.

We need to install kubectl, eksctl and the AWS CLI to create a Kubernetes cluster on EKS.

I am using Windows so these installations are for Windows.

kubectl Installation: https://kubernetes.io/docs/tasks/tools/install-kubectl-windows/

aws CLI: https://docs.aws.amazon.com/cli/latest/userguide/getting-started-install.html

eksctl Install: https://eksctl.io/installation/

After installing these prerequisites, configure the AWS CLI with the access key, secret key and region where we want to deploy the cluster.

I created the AWS access key and secret key in this earlier article, assigning Administrator access permissions for now.

https://amitmaurya.hashnode.dev/learn-how-to-deploy-scalable-3-tier-applications-with-aws-ecs

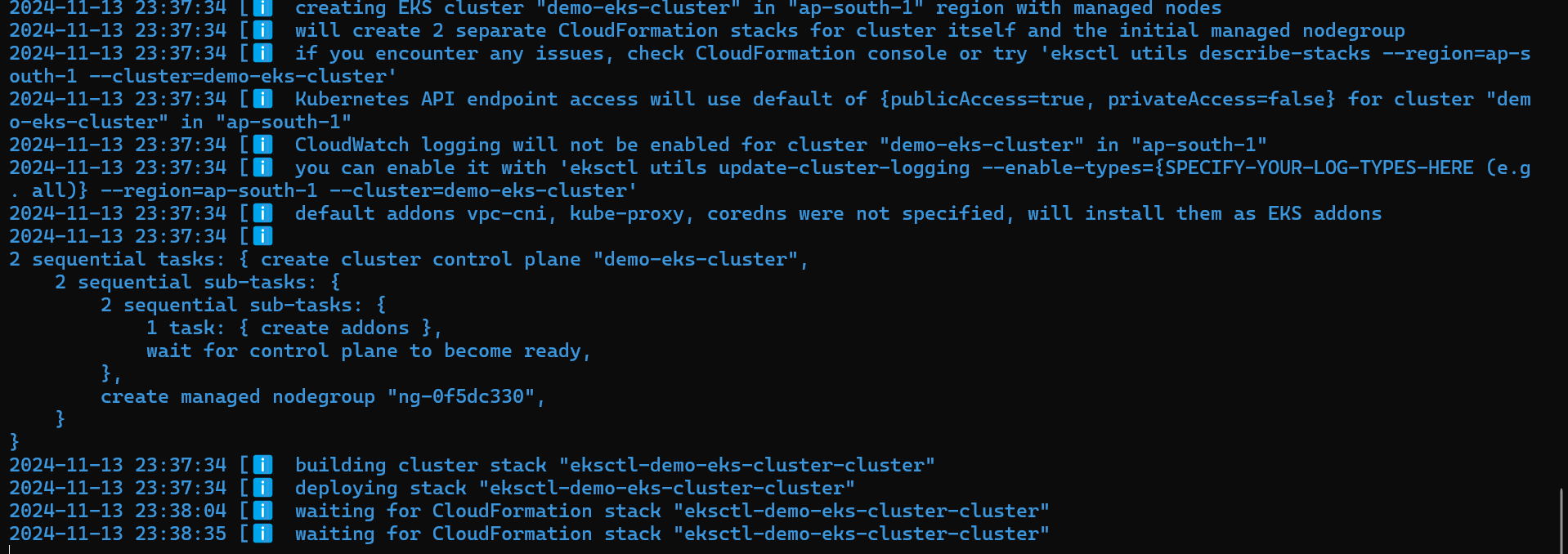

Now create the EKS cluster with a node group of EC2 worker nodes using the eksctl CLI.

eksctl create cluster --name demo-eks-cluster --region ap-south-1 --nodes 3 --node-type t3.medium

This command creates 3 worker nodes in the Mumbai region (ap-south-1). Everything is provisioned through CloudFormation stacks.

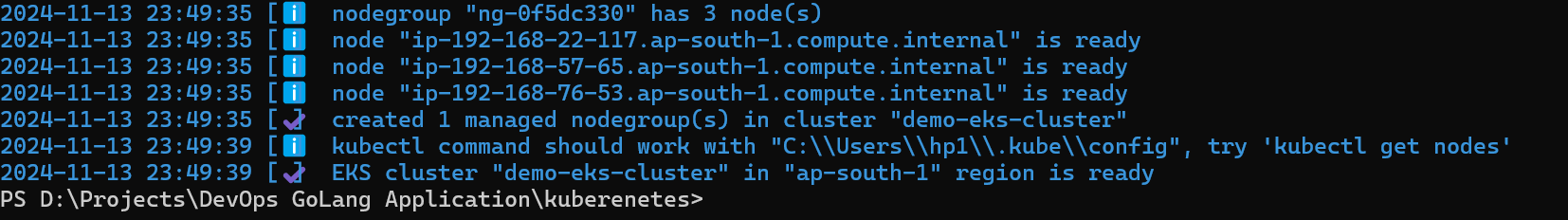

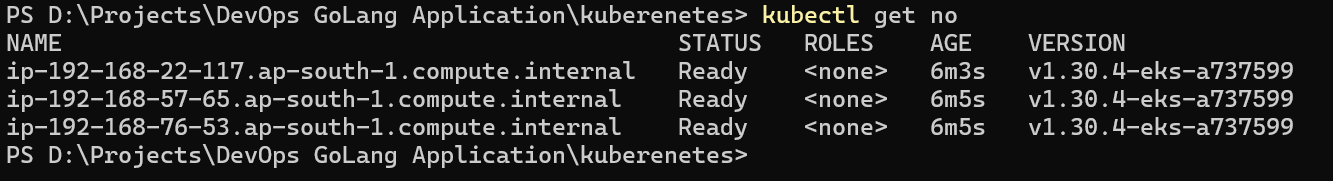

Now that the EKS cluster is ready, run this command to list the nodes:

kubectl get nodes

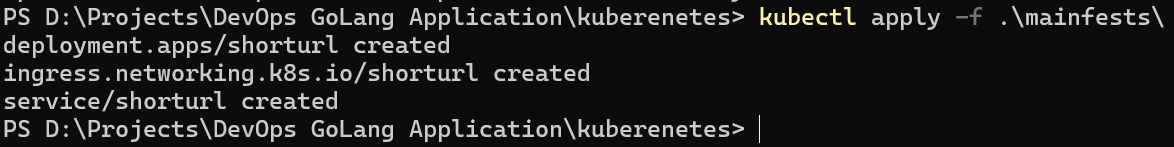

Let's deploy the Kubernetes manifest YAML files on the EKS cluster.

kubectl apply -f ./manifests

Our Deployment, Service and Ingress are now created in the default namespace.

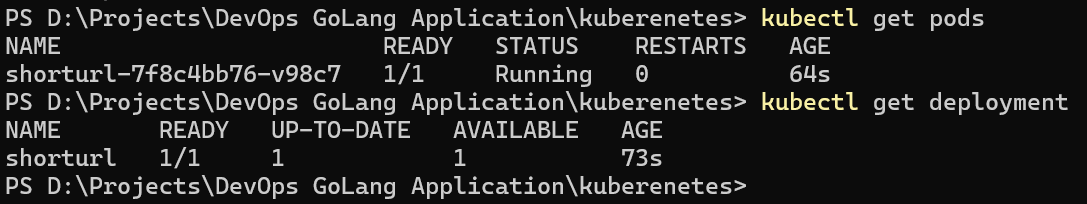

kubectl get pods

kubectl get deployment

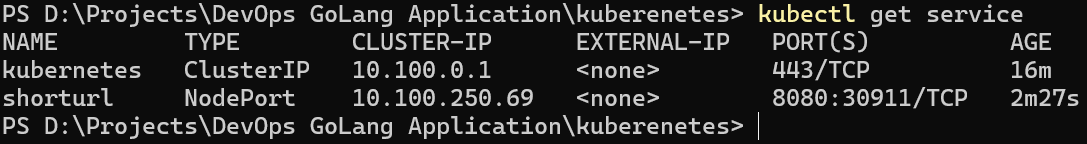

Now check the Service to see which NodePort it was assigned, and then verify that the application is working.

kubectl get service

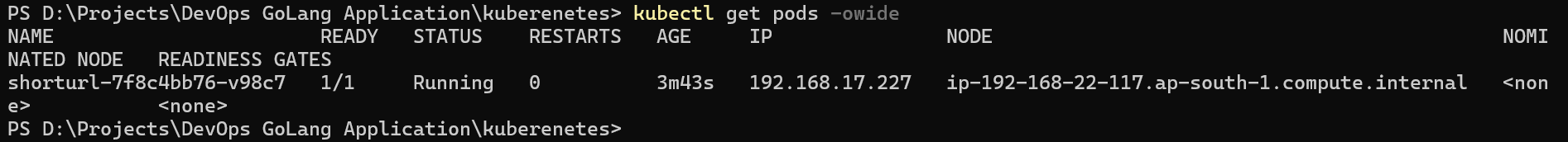

To access the application, find out which of the 3 nodes the pod is scheduled on, then get that node's External IP.

kubectl get pods -owide

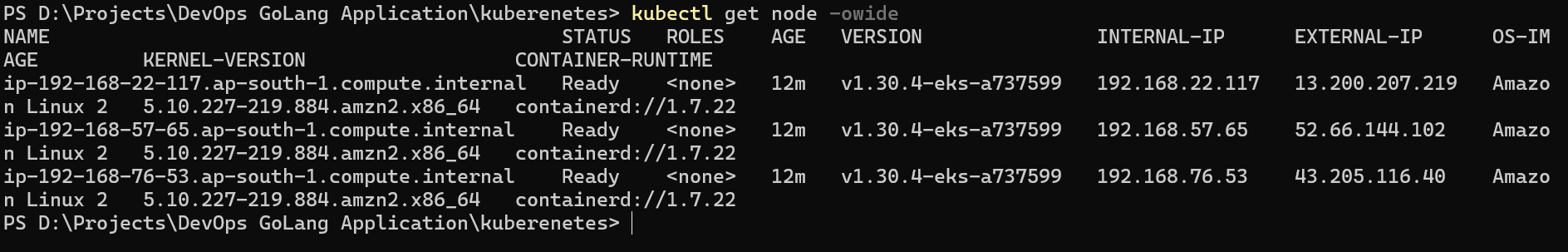

Now, check the External IP of this node by executing

kubectl get node -owide

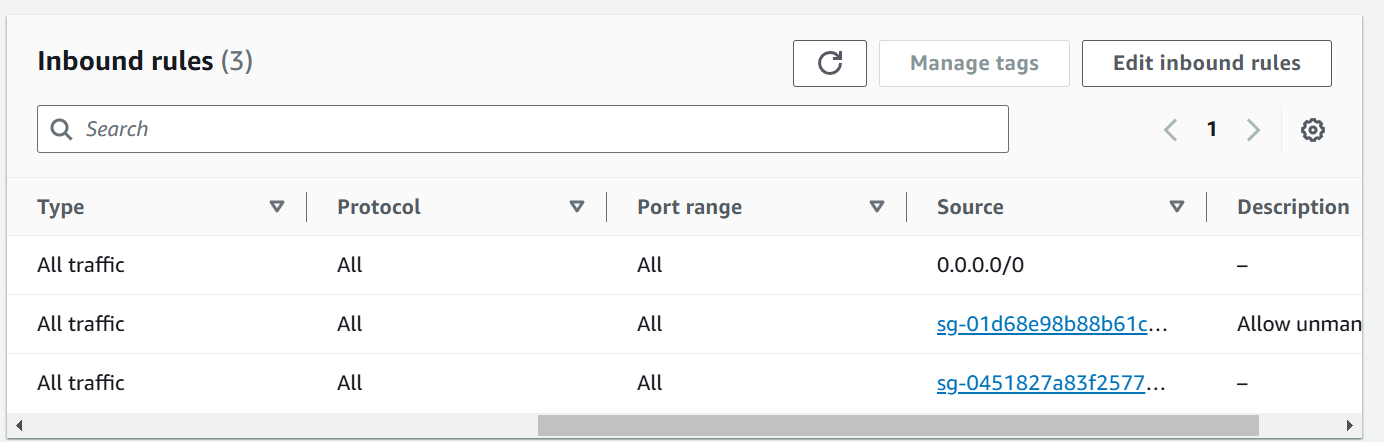

The pod is scheduled on the first node, whose External IP is 13.200.207.219. Before opening it, allow inbound traffic to the instance where the pod is scheduled: navigate to the EKS cluster, go to the Compute section, open the instance's security group, and add an inbound rule (All traffic, as used here, is the quickest option for a demo).

Now open the browser and go to the node's External IP with the NodePort:

http://13.200.207.219:30911
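The manual browser check can also be scripted. The following is a small sketch, assuming the default NodePort range of 30000 to 32767. The helper names nodePortURL and probe are hypothetical, and main probes a local stand-in server so that it runs without a cluster.

```go
package main

import (
	"fmt"
	"net"
	"net/http"
	"net/http/httptest"
	"strconv"
	"time"
)

// nodePortURL builds http://<node-ip>:<port> and rejects ports outside the
// default Kubernetes NodePort range (30000-32767).
func nodePortURL(nodeIP string, port int) (string, error) {
	if nodeIP == "" {
		return "", fmt.Errorf("node IP is empty")
	}
	if port < 30000 || port > 32767 {
		return "", fmt.Errorf("port %d is outside the default NodePort range 30000-32767", port)
	}
	return "http://" + net.JoinHostPort(nodeIP, strconv.Itoa(port)), nil
}

// probe sends one GET with a timeout and reports whether the app answered 200.
func probe(target string, timeout time.Duration) (bool, error) {
	client := &http.Client{Timeout: timeout}
	resp, err := client.Get(target)
	if err != nil {
		return false, err
	}
	defer resp.Body.Close()
	return resp.StatusCode == http.StatusOK, nil
}

func main() {
	// IP and port are the values shown in the article.
	target, err := nodePortURL("13.200.207.219", 30911)
	if err != nil {
		fmt.Println("invalid target:", err)
		return
	}
	fmt.Println("would probe:", target)

	// Stand-in server so the sketch runs without a real cluster.
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "ok")
	}))
	defer srv.Close()

	healthy, err := probe(srv.URL, 2*time.Second)
	fmt.Println("stand-in healthy:", healthy, "error:", err)
}
```

Against the real cluster you would pass the value returned by nodePortURL to probe. A timeout error usually points at the security group rule rather than the pod.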

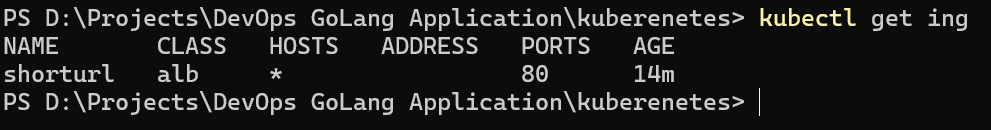

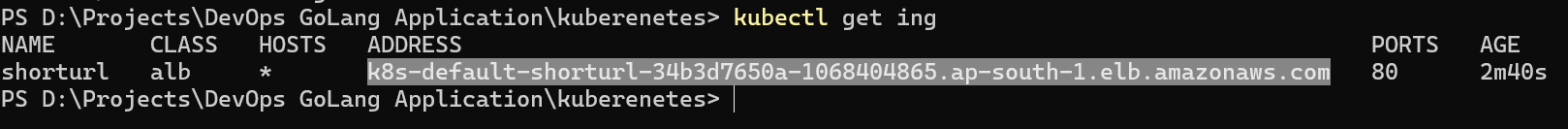

Now, check whether the Ingress resource was created.

As we can see, the Ingress has no Address because we have not deployed the ALB Ingress Controller yet. Let's deploy it, following the instructions in the Readme.md:

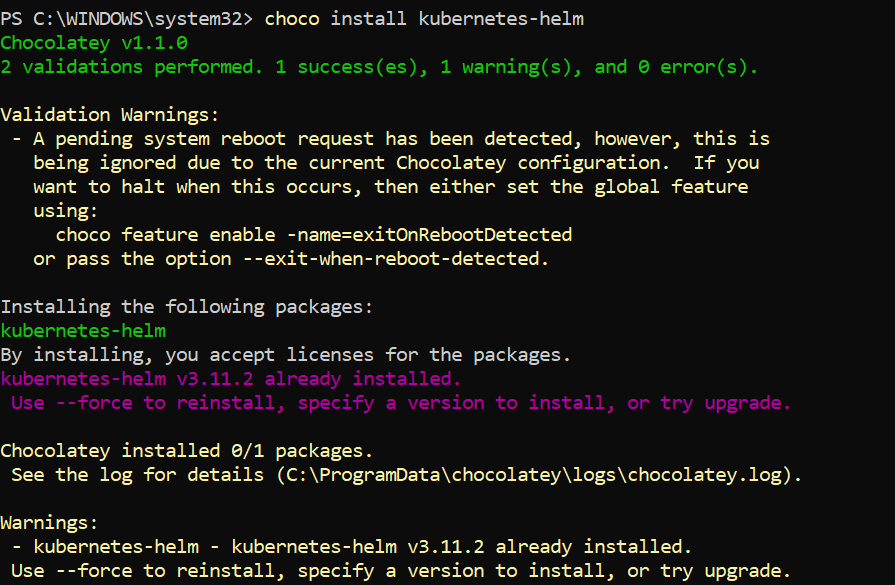

Install Helm so that we can deploy the ALB Ingress Controller on the EKS cluster. As I am using Windows, I am installing it with Chocolatey.

https://helm.sh/docs/intro/install/

choco install kubernetes-helm

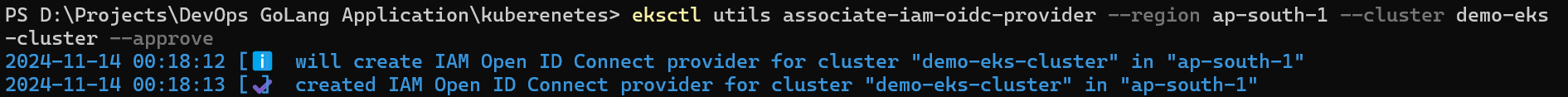

Now, we need to create the OIDC (OpenID Connect) IAM provider.

eksctl utils associate-iam-oidc-provider \
  --region ap-south-1 \
  --cluster demo-eks-cluster \
  --approve

Then we need to download the IAM policy for the ALB Ingress Controller.

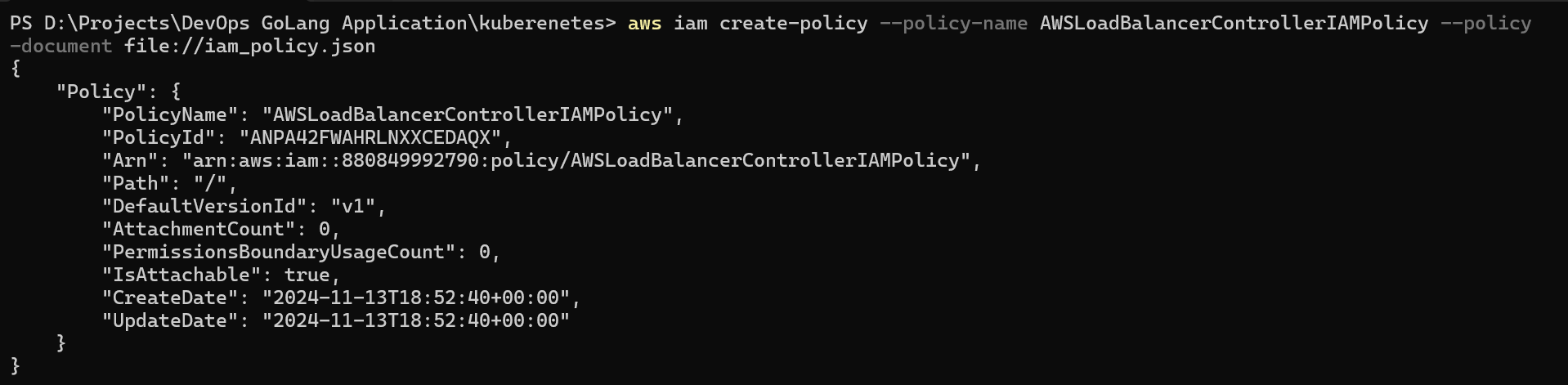

curl -o iam_policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/main/docs/install/iam_policy.json

After the policy is downloaded, we need to create the IAM policy.

aws iam create-policy \
  --policy-name AWSLoadBalancerControllerIAMPolicy \
  --policy-document file://iam_policy.json

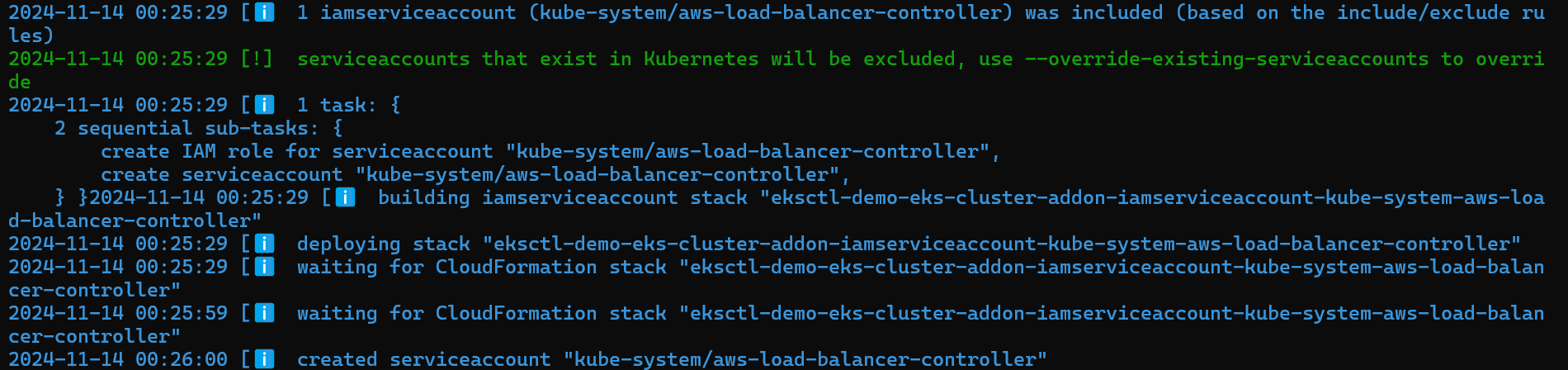

Now, we need to create an IAM role and a service account so that the controller can interact with other AWS services from EKS.

eksctl create iamserviceaccount \
  --cluster=<cluster-name> \
  --namespace=kube-system \
  --name=aws-load-balancer-controller \
  --attach-policy-arn=arn:aws:iam::<AWS_ACCOUNT_ID>:policy/AWSLoadBalancerControllerIAMPolicy \
  --approve

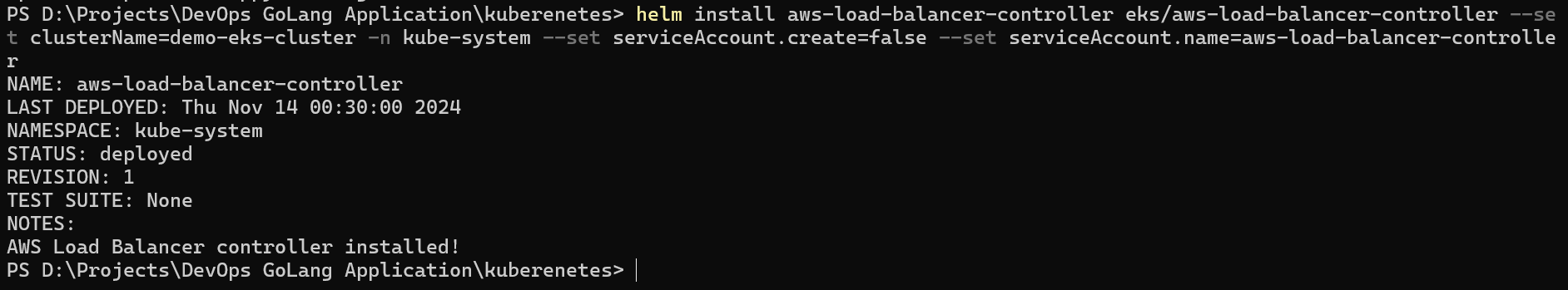

After all this, we install the Helm chart.

helm repo add eks https://aws.github.io/eks-charts

helm repo update eks

helm install aws-load-balancer-controller eks/aws-load-balancer-controller --set clusterName=demo-eks-cluster -n kube-system --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller --set region=ap-south-1 --set vpcId=<vpc-id>

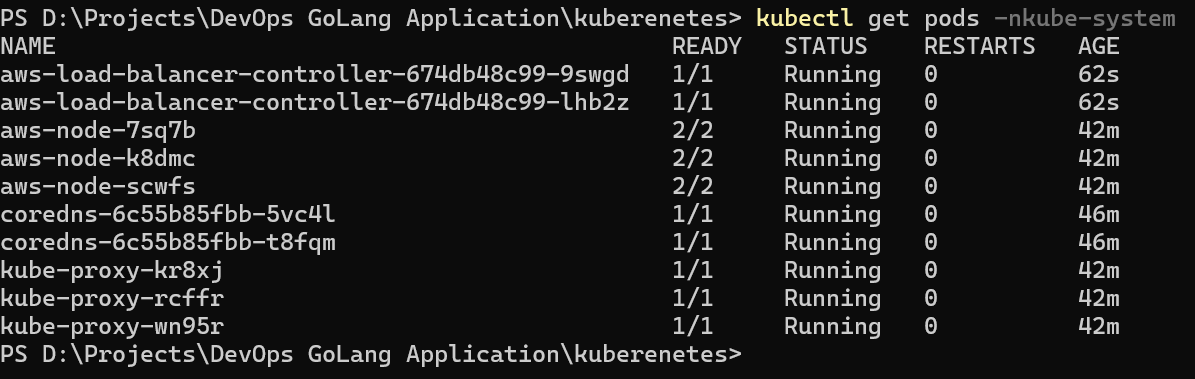

kubectl get pods -nkube-system

As we can see, the ALB Ingress Controller pods are deployed in the kube-system namespace. Now, let's check whether the Ingress got an address, i.e. the load balancer DNS name.

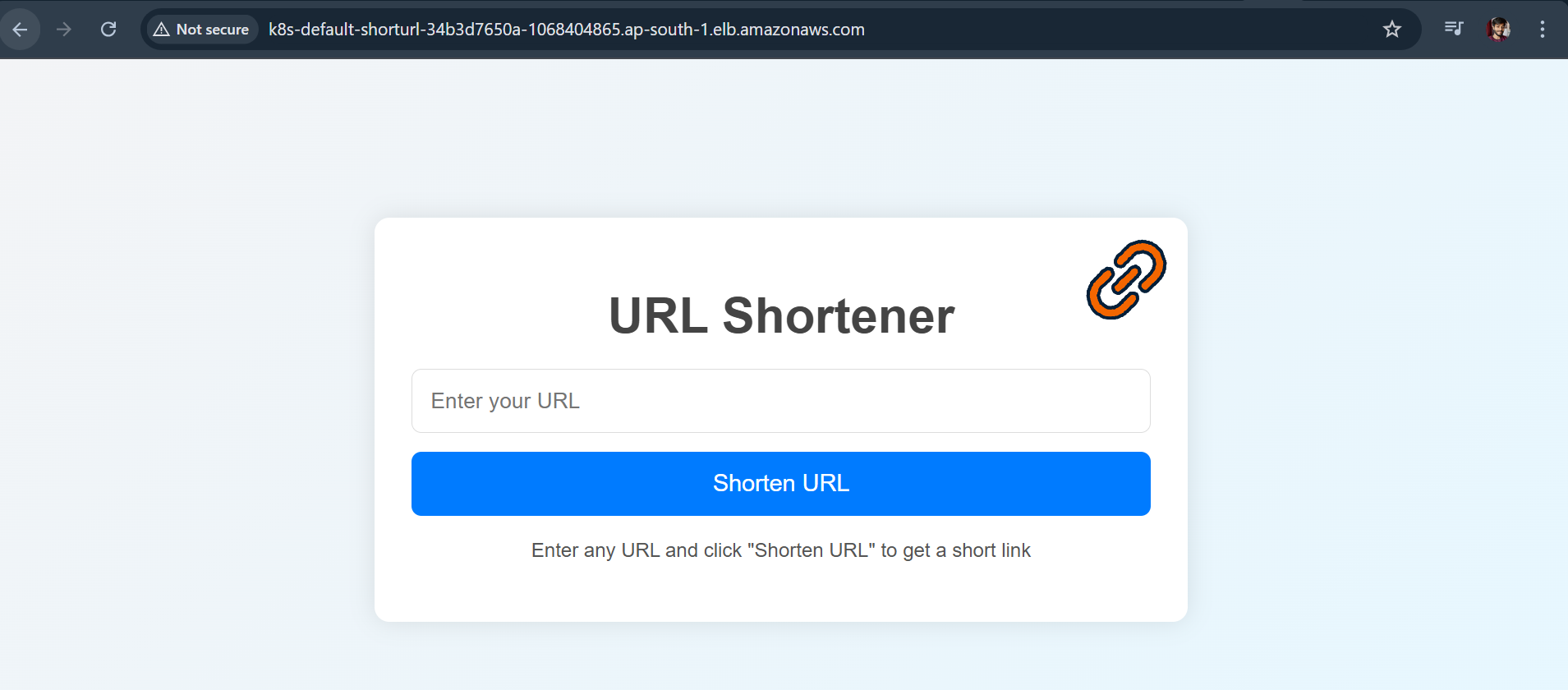

The Ingress now shows the DNS name of the load balancer as its address. Let's copy it and paste it into the browser.

http://k8s-default-shorturl-34b3d7650a-1068404865.ap-south-1.elb.amazonaws.com

Hurray! We have completed the third phase: the application is deployed on EKS and reachable through the load balancer DNS name. To reach it through my own hostname instead, we modify ingress.yaml and add a host entry.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shorturl
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
  - host: shortenurl.local
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: shorturl
            port:
              number: 8080

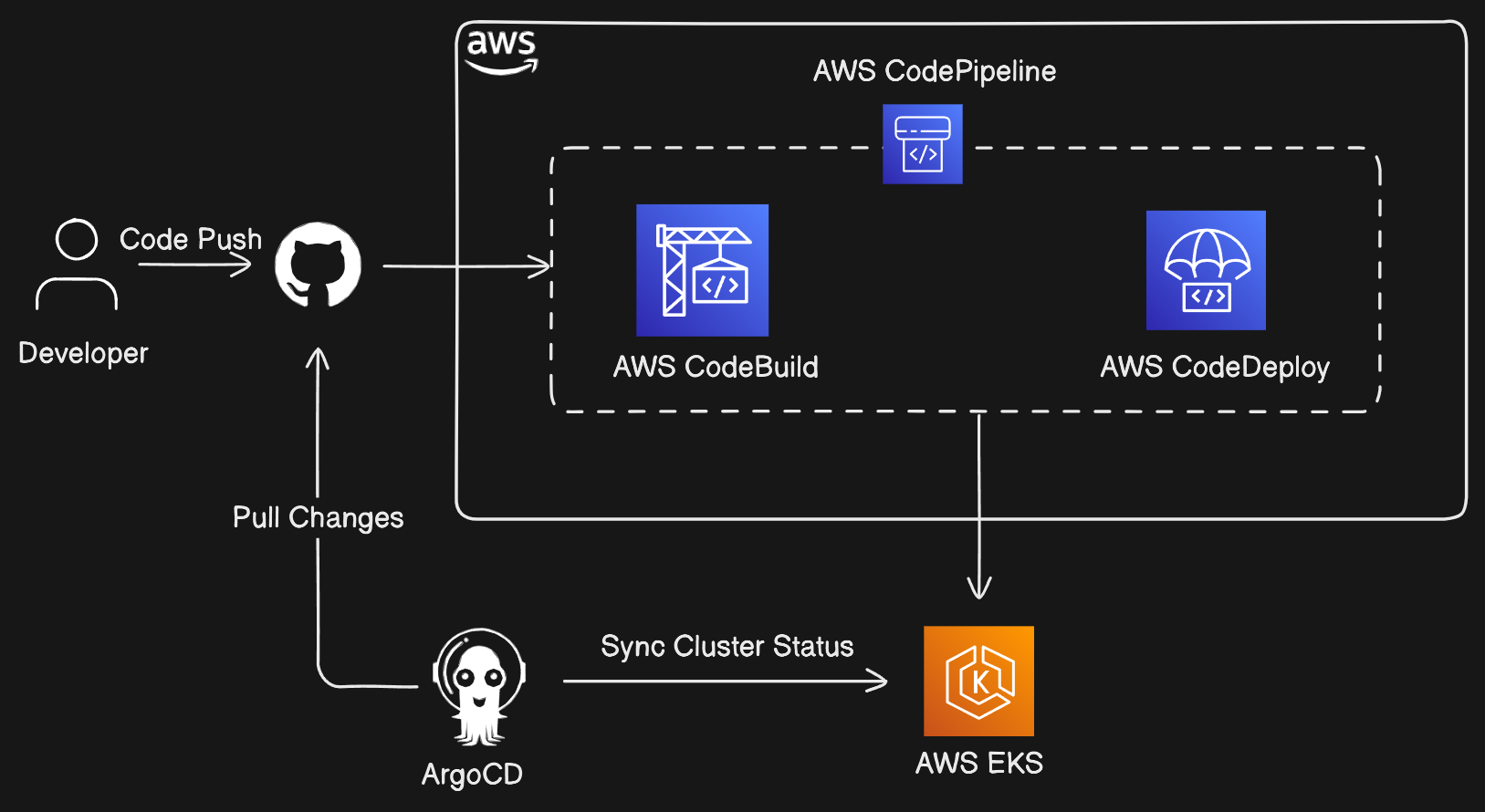

AWS CI/CD Pipeline

So far we have dockerized the application, deployed it on the EKS cluster, and exposed it through an Ingress by deploying the ALB Ingress Controller. As DevOps engineers, we also have to implement a CI/CD pipeline, so that whenever the code changes, the pipeline automatically builds a new image version and deploys it to the EKS cluster.

For CI/CD, AWS offers 4 services with which we can automate the whole process.

CodeCommit - version control system

CodePipeline - orchestrates the pipeline stages (source, build, deploy)

CodeBuild - runs the build; its phases are written in a buildspec.yml file

CodeDeploy - automates deployments (the CD process)

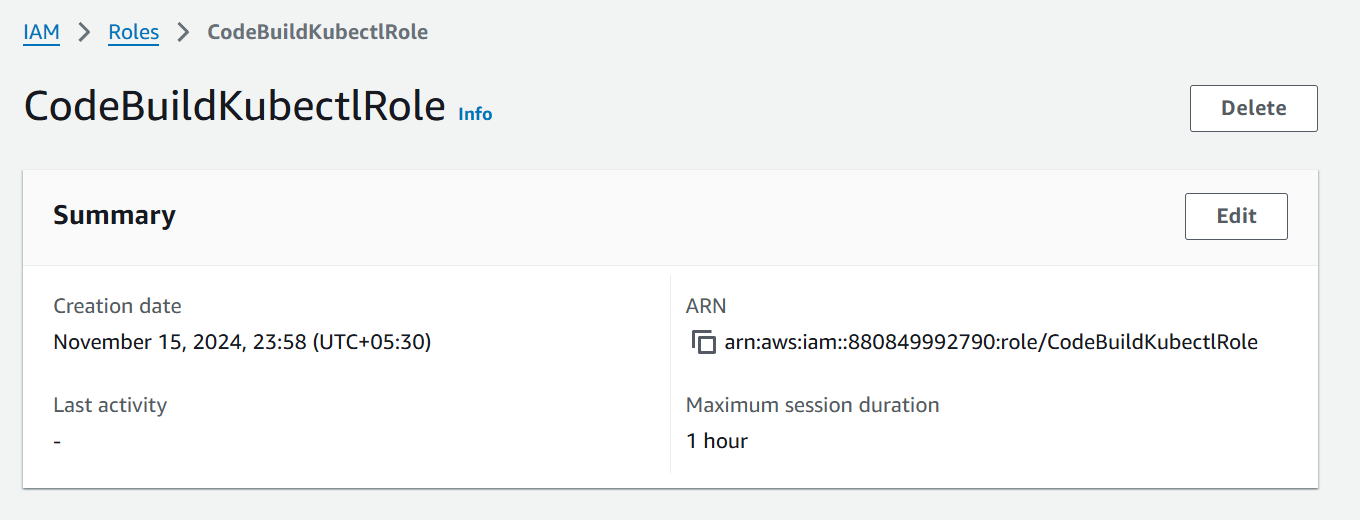

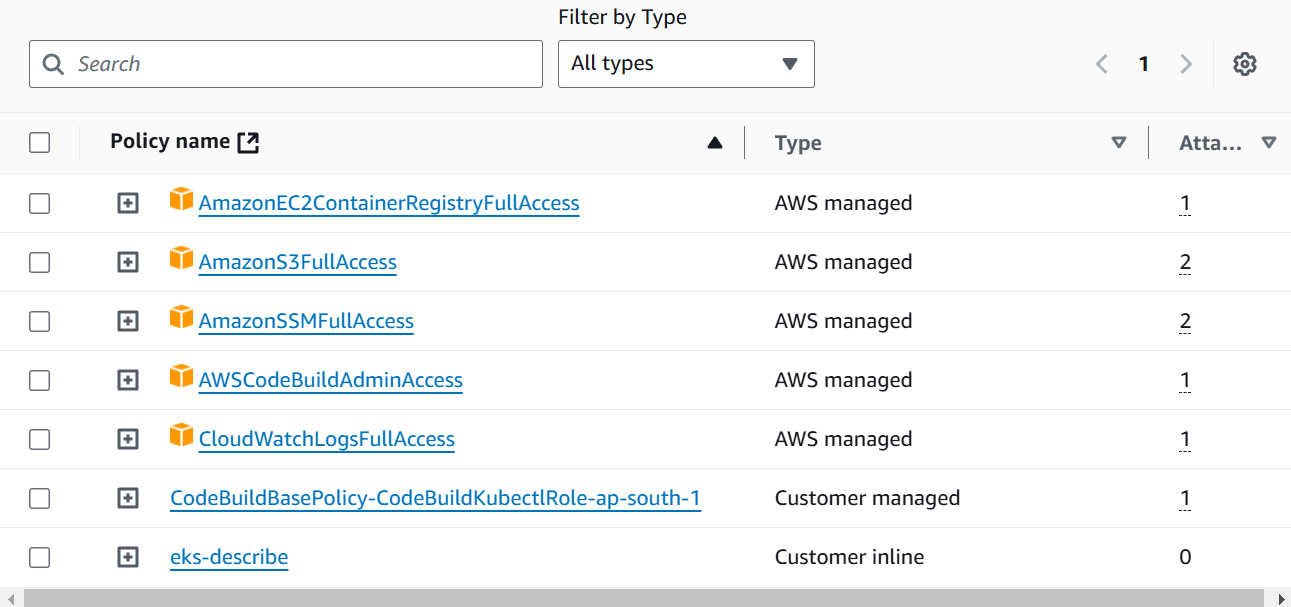

Before heading to CodePipeline, we first create the IAM role that CodeBuild will use, so that the build phases run with the necessary permissions.

For this, copy the script below and run it.

iamrole.sh:

#!/usr/bin/env bash

TRUST="{ \"Version\": \"2012-10-17\", \"Statement\": [ { \"Effect\": \"Allow\", \"Principal\": { \"Service\": \"codebuild.amazonaws.com\" }, \"Action\": \"sts:AssumeRole\" } ] }"

echo '{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": "eks:Describe*", "Resource": "*" } ] }' > /tmp/iam-role-policy

aws iam create-role --role-name CodeBuildKubectlRole --assume-role-policy-document "$TRUST" --output text --query 'Role.Arn'

aws iam put-role-policy --role-name CodeBuildKubectlRole --policy-name eks-describe --policy-document file:///tmp/iam-role-policy

aws iam attach-role-policy --role-name CodeBuildKubectlRole --policy-arn arn:aws:iam::aws:policy/CloudWatchLogsFullAccess

aws iam attach-role-policy --role-name CodeBuildKubectlRole --policy-arn arn:aws:iam::aws:policy/AWSCodeBuildAdminAccess

aws iam attach-role-policy --role-name CodeBuildKubectlRole --policy-arn arn:aws:iam::aws:policy/AmazonS3FullAccess

aws iam attach-role-policy --role-name CodeBuildKubectlRole --policy-arn arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryFullAccess

This script attaches permissions for CloudWatch Logs, CodeBuild admin access and S3, plus AmazonEC2ContainerRegistryFullAccess in case you are using ECR. It also adds an inline policy that allows eks:Describe* actions.

After running the shell script, go to IAM > Roles > CodeBuildKubectlRole to see the attached permissions.

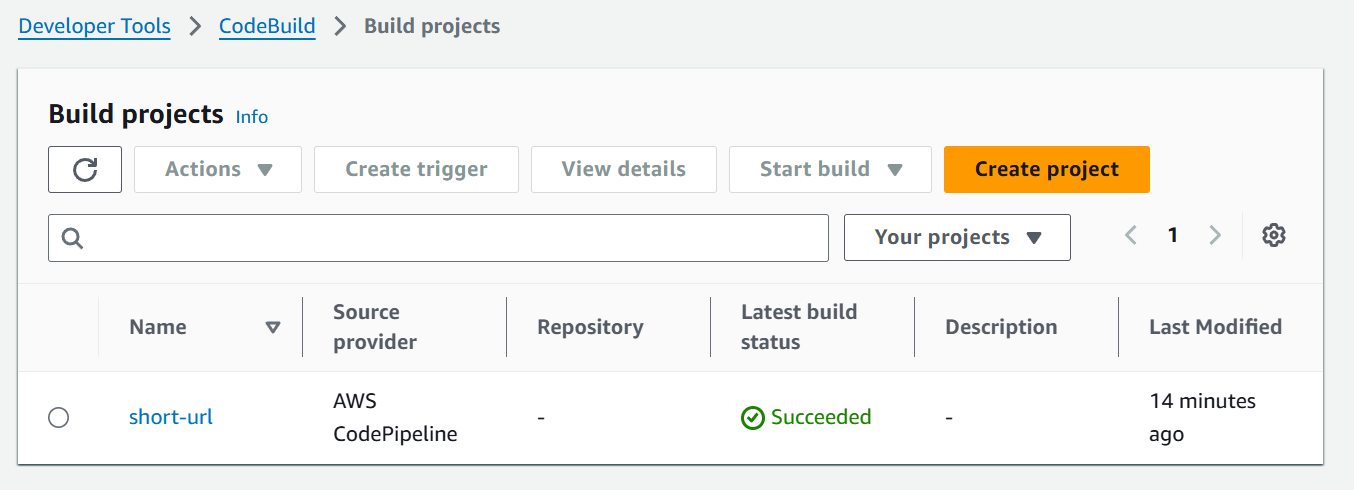

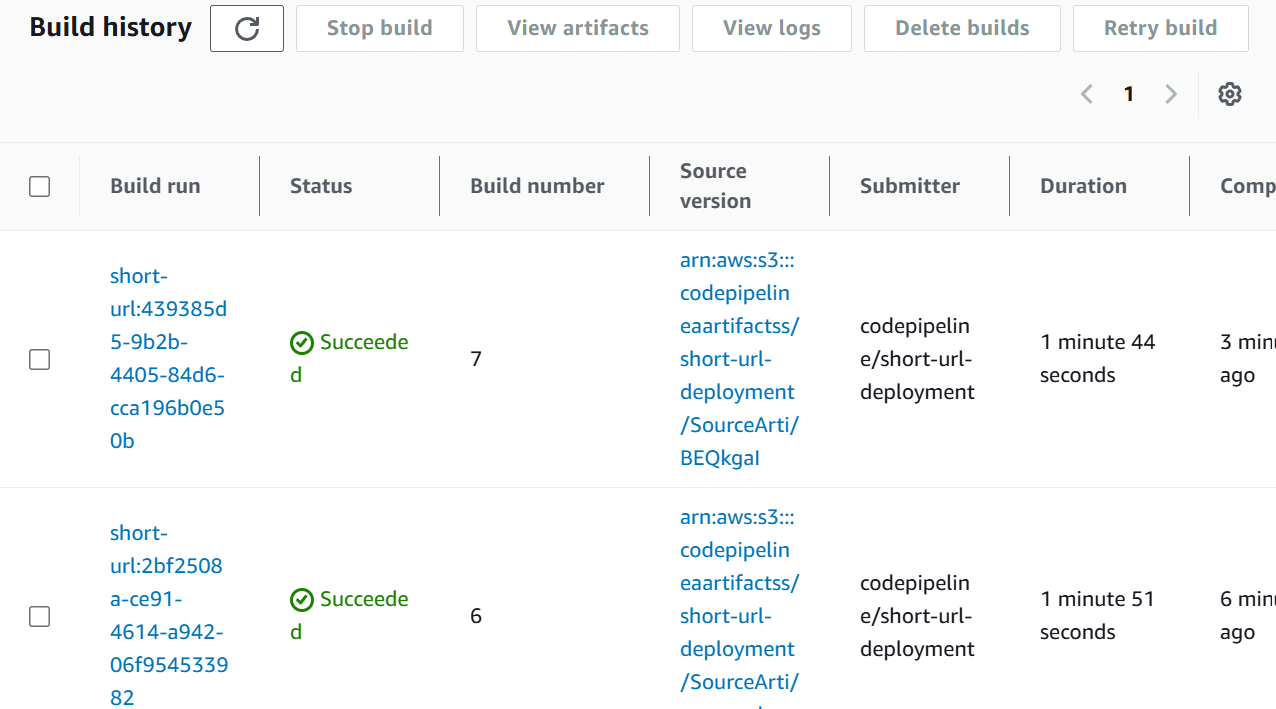

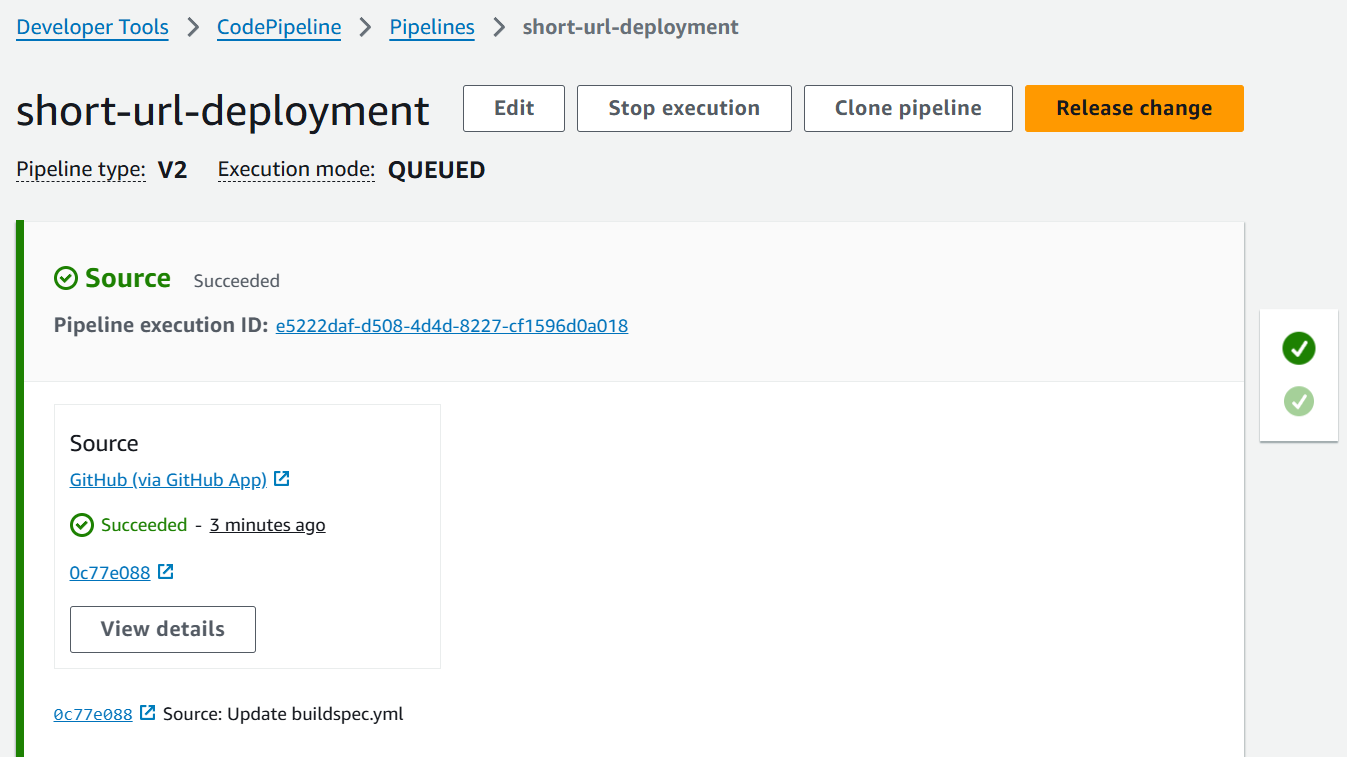

Now, let's navigate to AWS CodePipeline and create a pipeline with a build stage. As you can see, I have created a pipeline named short-url-deployment.

Click on Create Pipeline.

Select Build Custom Pipeline and click on Next.

Name the pipeline short-url-deployment and choose the execution mode Queued.

In Service Role, choose New Service Role and leave the generated name as it is.

Scroll down, click on Advanced Settings, and choose Default Location for the S3 bucket and Default AWS Managed Key.

Now, choose the source provider where your code is hosted and connect to it. In my case the code is hosted on GitHub, so I chose GitHub (via GitHub App). After connecting to GitHub, scroll down to the trigger section and select No filter, which runs the pipeline on every code push.

Next comes the Build stage, where you add the build provider: click on Other Build Providers and choose AWS CodeBuild.

Click on Project; it opens a new CodeBuild window.

Name the CodeBuild project short-url.

Scroll down and leave the defaults: Provisioning Model On-demand, Environment Image Managed Image, Compute EC2.

Choose the operating system Amazon Linux, the runtime Standard, and select the latest image.

In Service Role, choose Existing Role and select CodeBuildKubectlRole, which we created earlier.

Open Additional Configuration and select Privileged, which is required to build Docker images inside CodeBuild.

Scroll down; under Buildspec choose Use a buildspec file. If your buildspec has a custom name you can specify it; otherwise CodeBuild automatically looks for buildspec.yml in the GitHub repository.

Select CloudWatch Logs to capture the logs of the phases defined in buildspec.yml when it runs.

Then click Continue to CodePipeline, which takes you back to the CodePipeline section.

Click on Next and skip the Continuous Delivery stage for now.

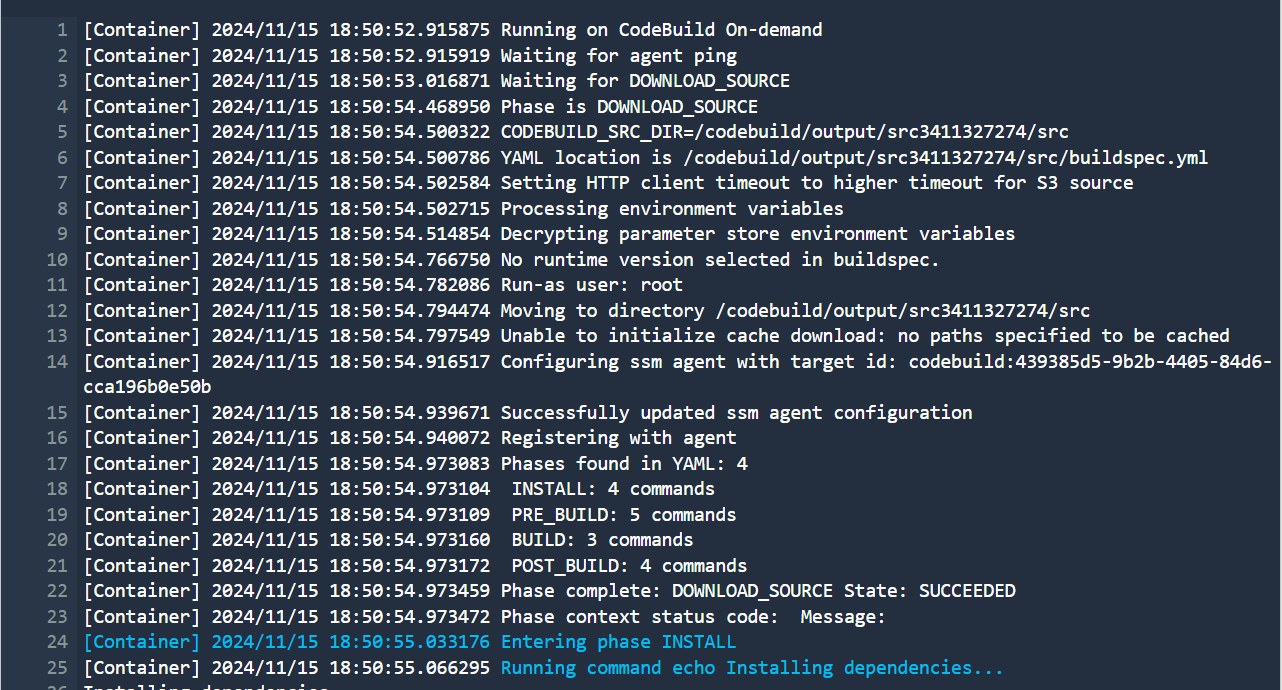

We mentioned the buildspec.yml file while creating the CodeBuild project. What is it?

Other CI tools such as Jenkins and GitLab CI use a Jenkinsfile or a .gitlab-ci.yml file, in which we write stages such as Docker build and push, followed by a deploy to the Kubernetes cluster.

In the same way, AWS CodeBuild uses a buildspec.yml file. Let's walk through it.

version: 0.2
run-as: root

env:
  parameter-store:
    DOCKER_REGISTRY_USERNAME: /golang-app/docker-credentials/username
    DOCKER_REGISTRY_PASSWORD: /golang-app/docker-credentials/password
    DOCKER_REGISTRY_URL: /golang-app/docker-credentials/url

phases:
  install:
    commands:
      - echo Installing dependencies...
      - curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
      - chmod +x kubectl
      - mv kubectl /usr/local/bin/
  pre_build:
    commands:
      - echo "Logging to DockerHub"
      - echo "$DOCKER_REGISTRY_PASSWORD" | docker login -u "$DOCKER_REGISTRY_USERNAME" --password-stdin $DOCKER_REGISTRY_URL
      - aws sts get-caller-identity
      - aws eks update-kubeconfig --region ap-south-1 --name demo-eks-cluster
      - kubectl config current-context
  build:
    commands:
      - echo "Building Docker Image"
      - docker build -t "$DOCKER_REGISTRY_USERNAME/short_url:$CODEBUILD_RESOLVED_SOURCE_VERSION" .
      - docker push "$DOCKER_REGISTRY_USERNAME/short_url:$CODEBUILD_RESOLVED_SOURCE_VERSION"
  post_build:
    commands:
      - echo "Docker Image is Pushed"
      - echo "Deploying the application to EKS"
      - kubectl set image deployment/shorturl shortenurlapp=$DOCKER_REGISTRY_USERNAME/short_url:$CODEBUILD_RESOLVED_SOURCE_VERSION
      - kubectl get svc

The file starts with the buildspec version, much like the version field at the top of a docker-compose.yml file.

run-as: root means the build commands run as the root user.

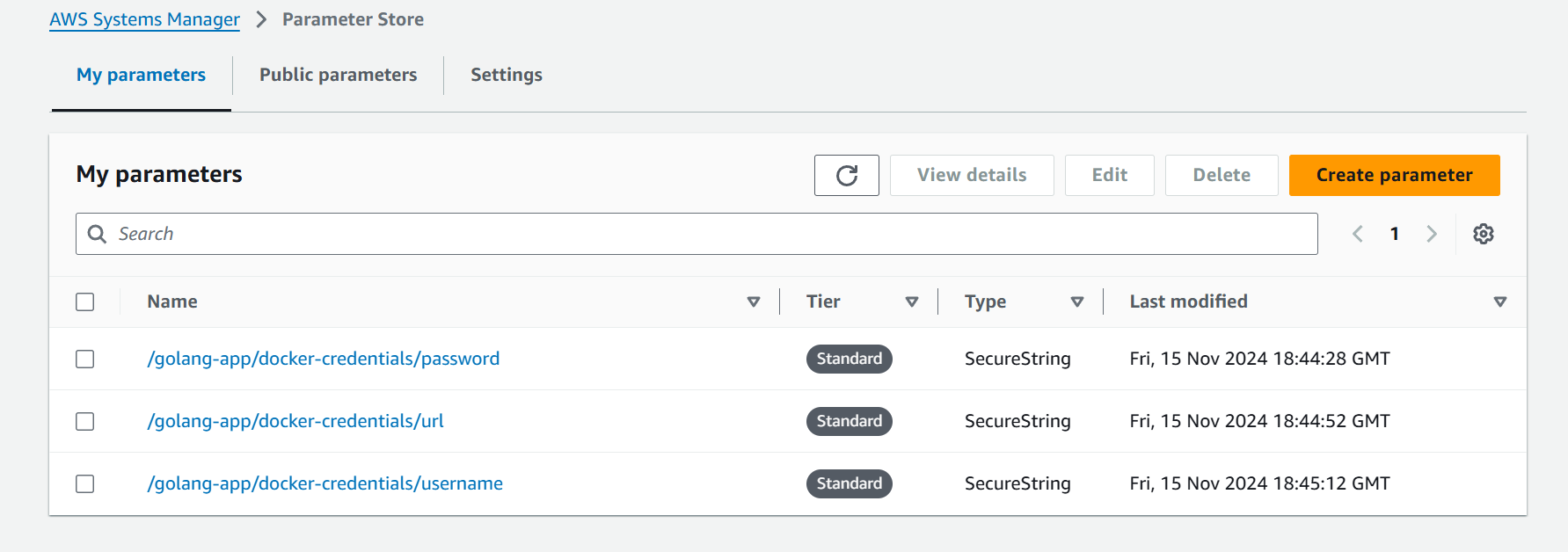

In the env section, we define 3 parameters used by the Docker login, build and push commands.

Navigate to Parameter Store, which is part of AWS Systems Manager (SSM).

Click on Create Parameter and use a path-style name so that it is easy to understand, for example /golang-app/docker-credentials/url: the application name, then the kind of credentials, then the value being stored.

Scroll down, select the type SecureString and fill in the value for the URL (docker.io).

Now, create 2 more parameters for the Docker username and Docker password. Remember that for Docker Hub the password should be a personal access token (PAT).

Add these parameters to the env section of the buildspec file.

env:
  parameter-store:
    DOCKER_REGISTRY_USERNAME: /golang-app/docker-credentials/username
    DOCKER_REGISTRY_PASSWORD: /golang-app/docker-credentials/password
    DOCKER_REGISTRY_URL: /golang-app/docker-credentials/url

Next is the phases section, which has 4 phases: install, pre_build, build and post_build.

In the install phase, we install the required CLIs, here kubectl.

install:
  commands:
    - echo Installing dependencies...
    - curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
    - chmod +x kubectl
    - mv kubectl /usr/local/bin/

In the pre_build phase, we first log in to DockerHub so that we can push the image, then update the kubeconfig for our EKS cluster, and finally check the current context of the kubeconfig.

pre_build:
  commands:
    - echo "Logging to DockerHub"
    - echo "$DOCKER_REGISTRY_PASSWORD" | docker login -u "$DOCKER_REGISTRY_USERNAME" --password-stdin $DOCKER_REGISTRY_URL
    - aws sts get-caller-identity
    - aws eks update-kubeconfig --region ap-south-1 --name demo-eks-cluster
    - kubectl config current-context

Then comes the build phase, in which we build the Docker image and push it to DockerHub.

build:
  commands:
    - echo "Building Docker Image"
    - docker build -t "$DOCKER_REGISTRY_USERNAME/short_url:$CODEBUILD_RESOLVED_SOURCE_VERSION" .
    - docker push "$DOCKER_REGISTRY_USERNAME/short_url:$CODEBUILD_RESOLVED_SOURCE_VERSION"

In post_build, we point the Deployment on our EKS cluster at the new image with the kubectl set image command.

post_build:
  commands:
    - echo "Docker Image is Pushed"
    - echo "Deploying the application to EKS"
    - kubectl set image deployment/shorturl shortenurlapp=$DOCKER_REGISTRY_USERNAME/short_url:$CODEBUILD_RESOLVED_SOURCE_VERSION
    - kubectl get svc
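The image reference passed to kubectl set image is assembled from environment variables, and an unset variable silently expands to an empty string, producing an invalid reference such as /short_url:<sha>. As a hedged illustration of guarding against that, the sketch below builds the reference in Go and refuses empty parts. The imageRef helper is a hypothetical name, and reading the two variables with os.Getenv simply mirrors the names used in the buildspec.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// imageRef joins user, repo and tag into "user/repo:tag" and fails when any
// part is empty, which is what an unset shell variable would silently produce.
func imageRef(user, repo, tag string) (string, error) {
	if user == "" || repo == "" || tag == "" {
		return "", errors.New("user, repo and tag must all be non-empty")
	}
	return fmt.Sprintf("%s/%s:%s", user, repo, tag), nil
}

func main() {
	// Both variables exist inside the CodeBuild environment described in the
	// article; outside CodeBuild they are unset and the guard reports it.
	ref, err := imageRef(
		os.Getenv("DOCKER_REGISTRY_USERNAME"),
		"short_url",
		os.Getenv("CODEBUILD_RESOLVED_SOURCE_VERSION"),
	)
	if err != nil {
		fmt.Println("refusing to deploy:", err)
		return
	}
	fmt.Println("deploying image:", ref)
}
```

Tagging with the resolved commit SHA, as the buildspec does, makes every build traceable to one commit and forces the Deployment to roll out, since the image string changes each time.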

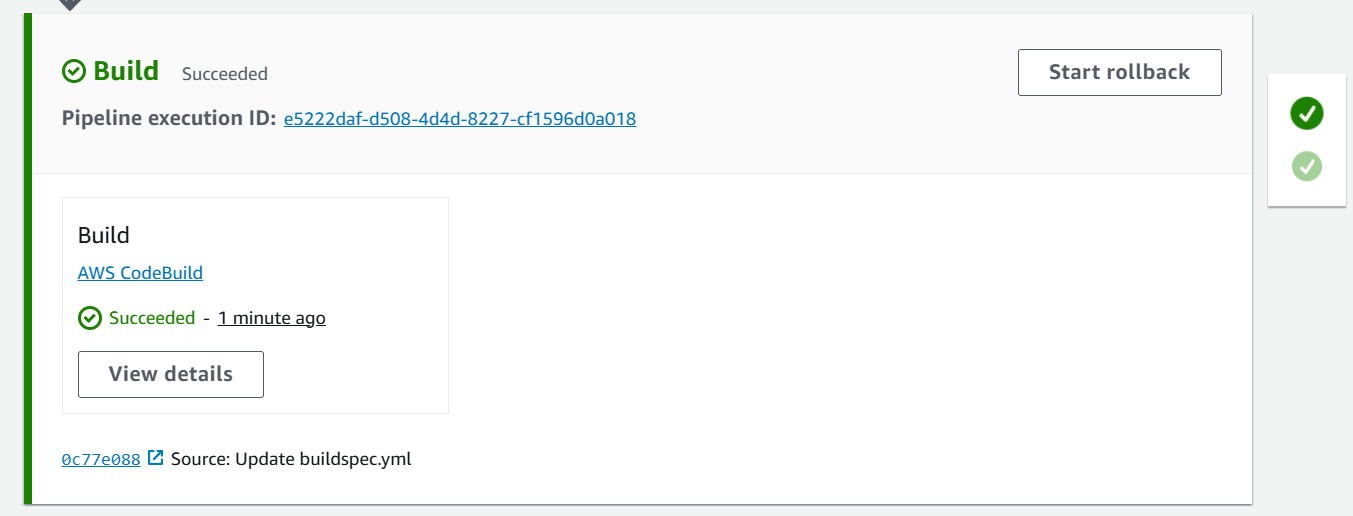

As we can see, both stages, Source and Build, succeeded and show their output in CodePipeline.

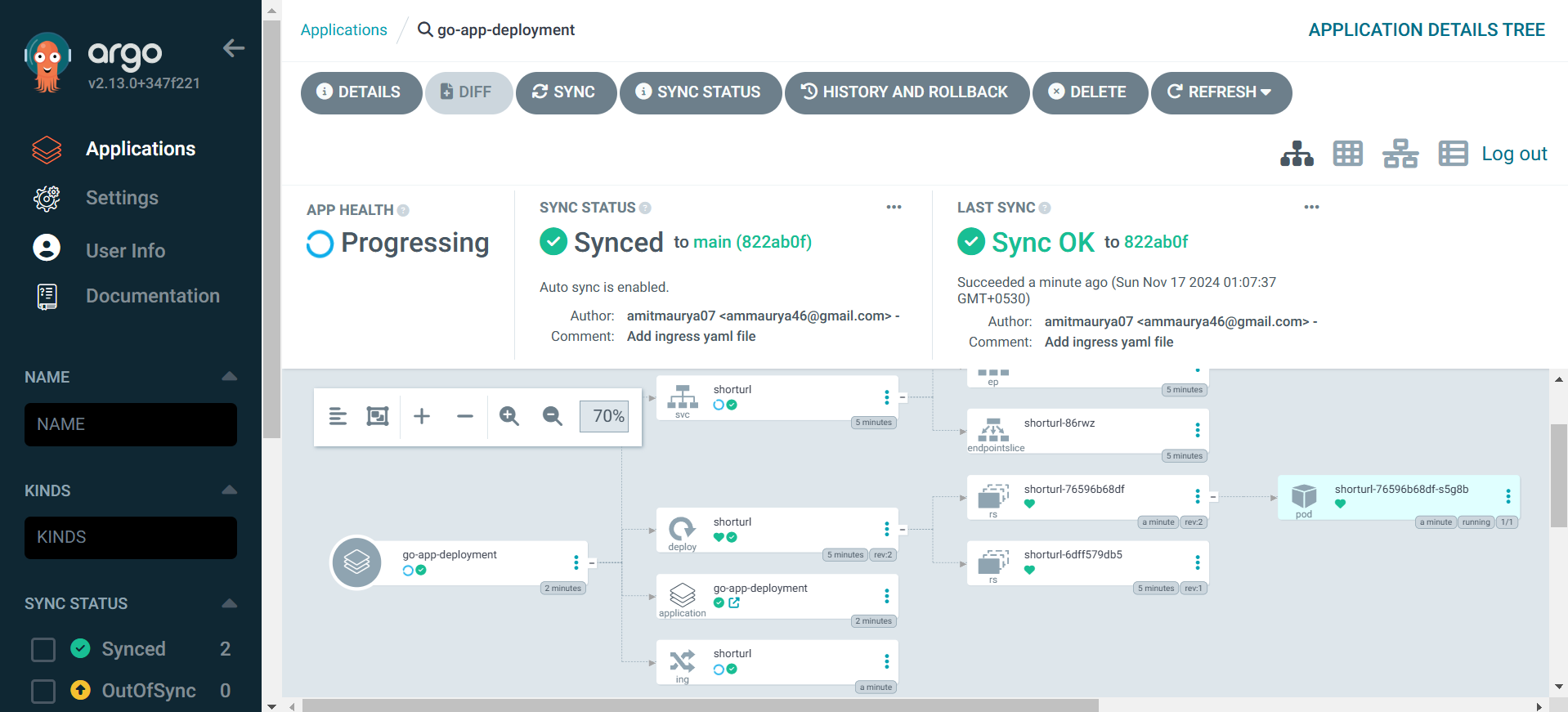

Deploy ArgoCD on EKS Cluster

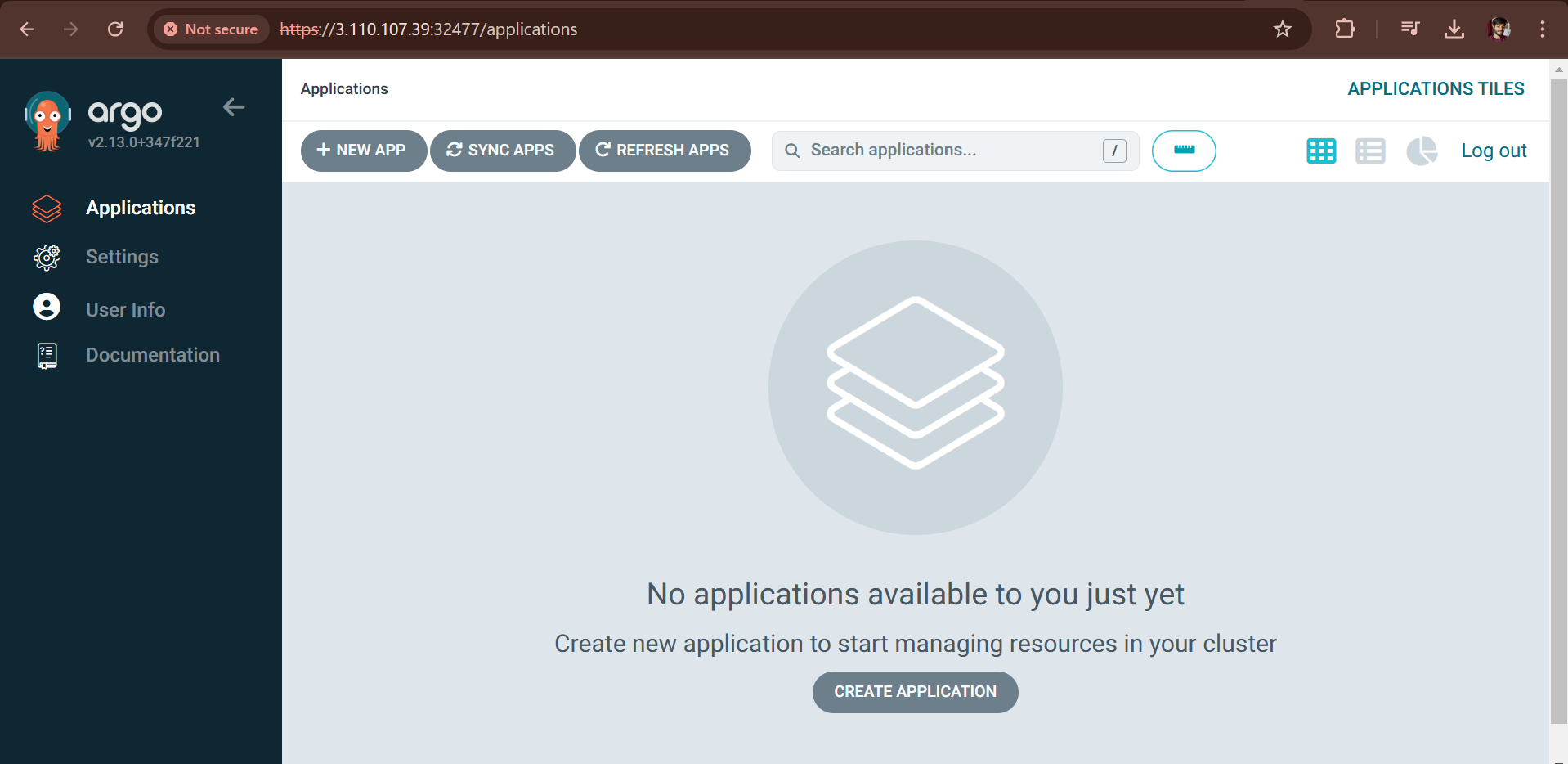

Now, we will set up the CD part (Continuous Deployment) on the EKS cluster so that any change to the Kubernetes manifests is automatically deployed to the cluster.

ArgoCD is a GitOps tool that manages application deployments in Kubernetes clusters. It pulls the desired state from Git, compares it with the current state of the cluster, and if they do not match, applies the changes to the cluster.

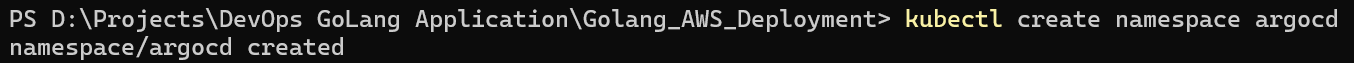

Create the namespace for ArgoCD:

kubectl create namespace argocd

Now deploy the CRDs (Custom Resource Definitions), deployments and services into the argocd namespace.

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

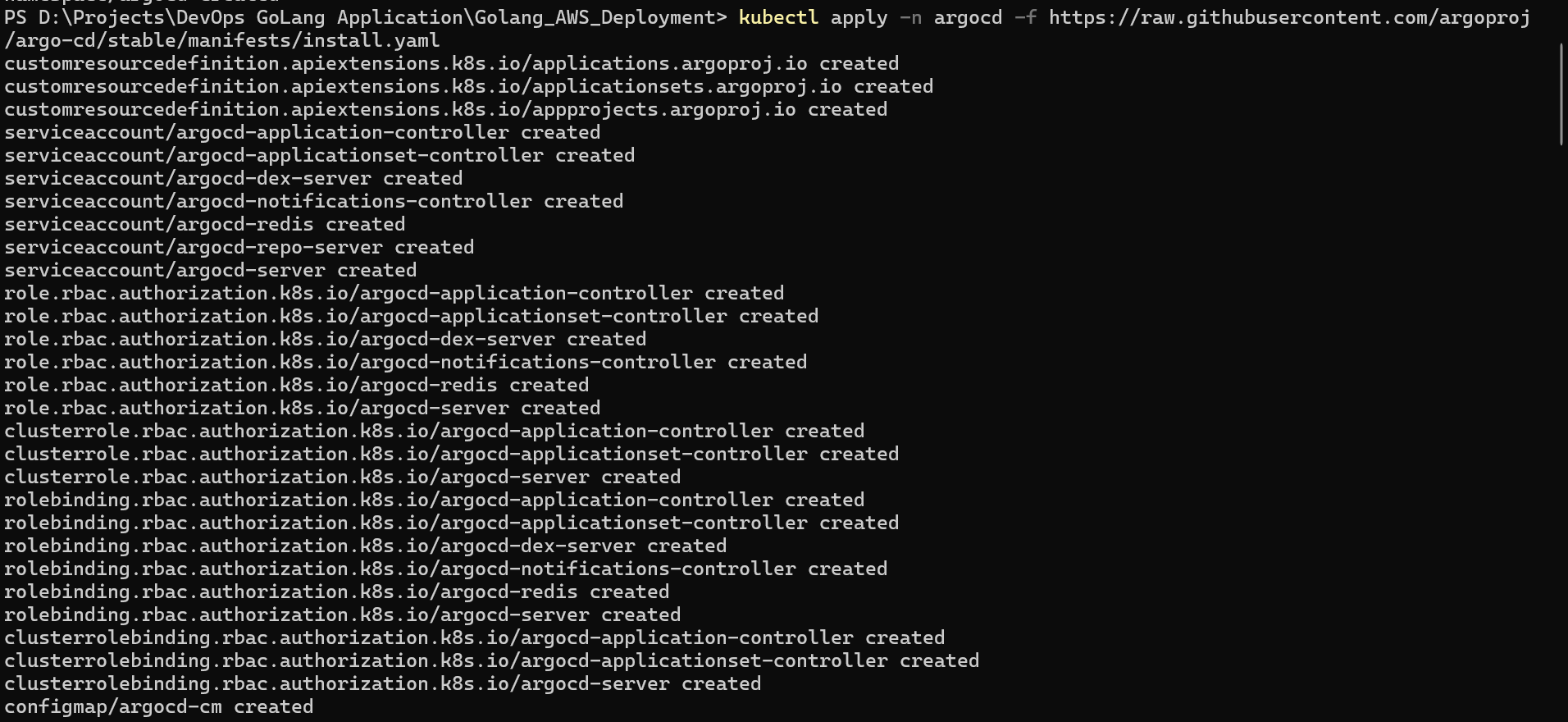

kubectl get po -n argocd

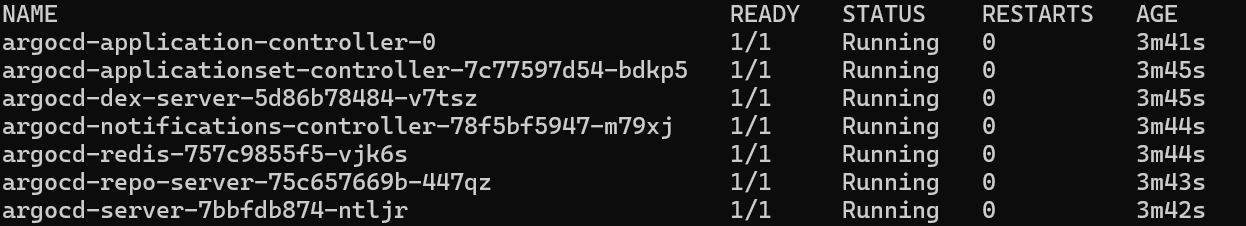

Change the argocd-server Service to type NodePort so that ArgoCD can be accessed from outside the cluster.

kubectl patch svc argocd-server -n argocd -p '{"spec": {"type": "NodePort"}}'

kubectl get svc -n argocd

Now, we can access the ArgoCD UI.

Username: admin

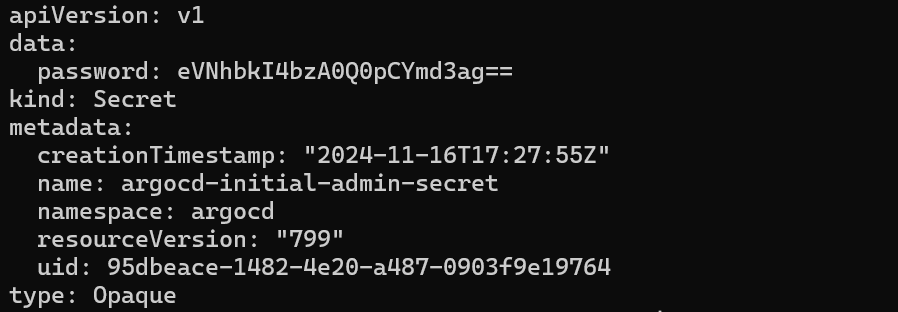

Password: it is stored in the argocd-initial-admin-secret Secret in the argocd namespace.

kubectl get secrets argocd-initial-admin-secret -n argocd -oyaml

echo "Your password" | base64 -d

Secret values are stored base64-encoded (encoded, not encrypted), so we decode the value to get the password.
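Because base64 is an encoding rather than encryption, anyone who can read the Secret can recover the value without a key. The sketch below shows the same decoding step as base64 -d using Go's standard library. The decodeSecret helper is a hypothetical name and the encoded string is a made-up value, not a real ArgoCD password.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// decodeSecret reverses the base64 encoding Kubernetes applies to Secret data.
func decodeSecret(encoded string) (string, error) {
	raw, err := base64.StdEncoding.DecodeString(encoded)
	if err != nil {
		return "", fmt.Errorf("not valid base64: %w", err)
	}
	return string(raw), nil
}

func main() {
	// Made-up sample value standing in for the "password" field of the Secret.
	plain, err := decodeSecret("ZGVtby1wYXNzd29yZA==")
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Println("decoded value:", plain)
}
```

Since no key is involved, access to Secrets should be restricted with RBAC, and the initial admin password is best changed after the first login.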

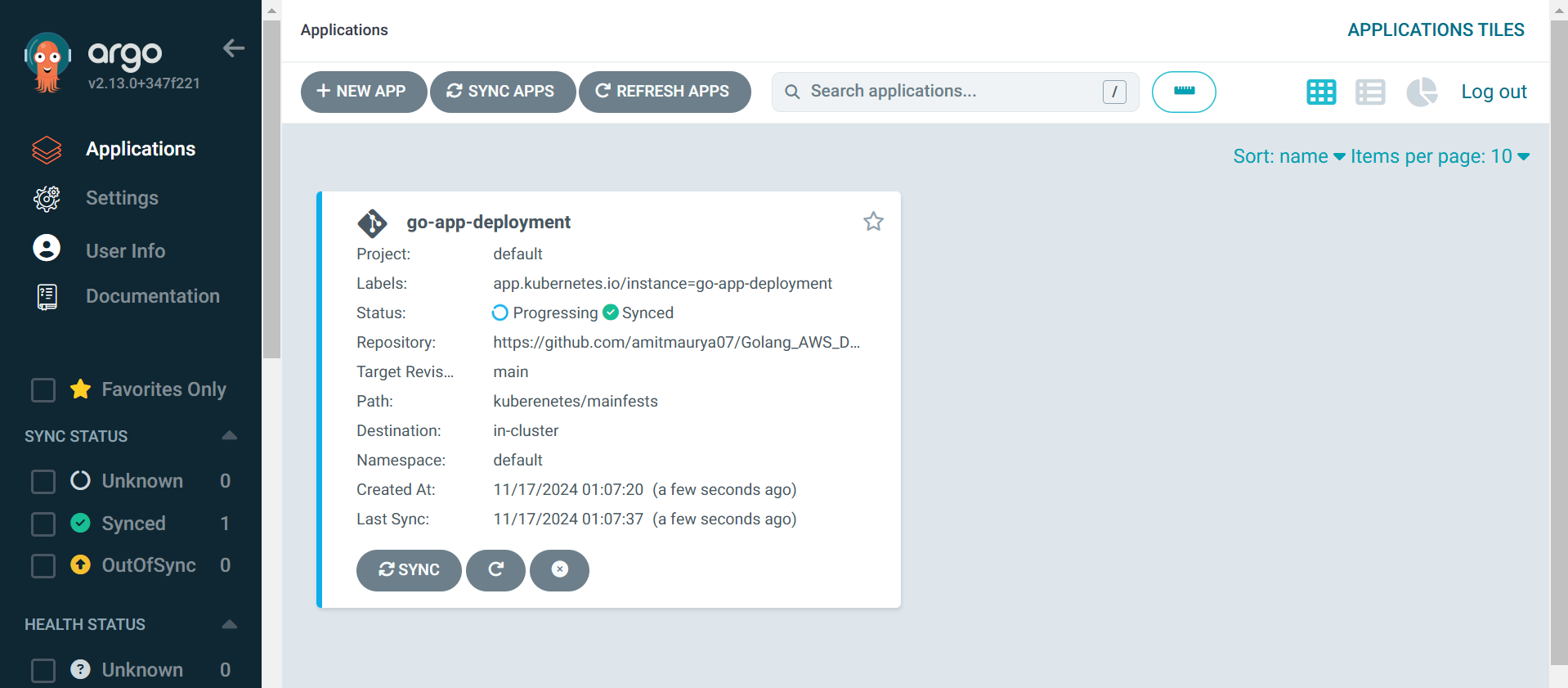

We need to create an application in ArgoCD, so I created a file named go-app-deployment.yaml.

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: go-app-deployment
  namespace: default
spec:
  project: default
  source:
    repoURL: 'https://github.com/amitmaurya07/Golang_AWS_Deployment.git'
    targetRevision: main
    path: kuberenetes/manifests
  destination:
    server: 'https://kubernetes.default.svc'
    namespace: default
  syncPolicy:
    automated:
      prune: true
      selfHeal: true

In this YAML file, the apiVersion and kind refer to ArgoCD's Application CRD. The repoURL is the GitHub repository that ArgoCD watches for changes, and path is the directory in it where our manifest files are stored. Under syncPolicy, automated sync is enabled: prune: true deletes resources that were removed from Git, and selfHeal: true means ArgoCD monitors the Kubernetes resources and automatically reverts any drift so that the current state matches the desired state.
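To make the desired-state versus current-state idea concrete, here is a toy reconciliation sketch. It is not how ArgoCD is implemented. The action type and the reconcile function are hypothetical, and each resource is reduced to a name plus a version string so that the comparison stays visible.

```go
package main

import (
	"fmt"
	"sort"
)

// action is one change needed to move the cluster toward the Git state.
type action struct {
	Op   string // "create", "update" or "delete"
	Name string
}

// reconcile compares desired (from Git) and live (from the cluster) resources,
// keyed by name with a version string as the value. prune controls whether
// live resources that are missing from Git get deleted.
func reconcile(desired, live map[string]string, prune bool) []action {
	var plan []action
	for name, want := range desired {
		have, ok := live[name]
		switch {
		case !ok:
			plan = append(plan, action{"create", name})
		case have != want:
			// Drift or a new version: bring the live object back in line.
			plan = append(plan, action{"update", name})
		}
	}
	if prune {
		for name := range live {
			if _, ok := desired[name]; !ok {
				plan = append(plan, action{"delete", name})
			}
		}
	}
	// Sort by name for stable output, since map iteration order is random.
	sort.Slice(plan, func(i, j int) bool { return plan[i].Name < plan[j].Name })
	return plan
}

func main() {
	desired := map[string]string{
		"deployment/shorturl": "image:2",
		"service/shorturl":    "v1",
		"ingress/shorturl":    "v1",
	}
	live := map[string]string{
		"deployment/shorturl": "image:1", // older image still running
		"service/shorturl":    "v1",
		"configmap/old":       "v1", // no longer present in Git
	}
	for _, a := range reconcile(desired, live, true) {
		fmt.Println(a.Op, a.Name)
	}
}
```

With prune set to false the stale configmap would be left alone, which mirrors what happens when prune is not enabled in the syncPolicy.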

The application now appears in the ArgoCD UI, along with our pods, Service and Ingress.

Conclusion

In this article, we learnt how to deploy a Golang application on an EKS cluster through CodePipeline and CodeBuild, with ArgoCD for continuous deployment. In upcoming blogs you will see many more AWS services being created and automated with Terraform. Stay tuned for the next blog!

GitHub Code : Golang AWS Deployment

Twitter : x.com/amitmau07

LinkedIn : linkedin.com/in/amit-maurya07

If you have any queries, you can drop a message on LinkedIn or Twitter.
