Accelerating Software Delivery with AWS.

Lajah Shrestha

Fast deployment is essential in today's competitive landscape, where user expectations for seamless and responsive applications are higher than ever. Rapid deployment allows organizations to quickly deliver new features, fix bugs, and respond to market changes, which improves user satisfaction and engagement. This is where DevOps engineers play a crucial role in the software development lifecycle.

In this blog, I narrate the story of Shuri and her journey of application development, transitioning from a local setup to a cloud-based solution at an industry scale, all while incorporating characters from the Marvel Cinematic Universe to add an engaging twist.

The Story:

Our story begins with our first character, Shuri. You probably recognize her from the Marvel Cinematic Universe. But since we're in the multiverse now, this is not the MCU. This is the ACU, and you know what ACU means: the AWS Cinematic Universe. Shuri is a college student and a part-time developer at a startup in her nation, and she happens to be a very creative problem solver.

As a full-time student, she needs to take notes for multiple subjects and keep track of assignments. Shuri struggles to manage and track the enormous number of documents she has to submit while also handling her professional job. So she comes up with an idea: Anote, a digital notebook application for taking notes and managing documents.

Phase 1: Local Development

Shuri develops the application locally on her own system. She uses Python with Flask for the backend, React for the frontend, and SQLite for the database.

Initial Features:

Create, edit, and delete notes

Share notes via unique links

User authentication with basic username and password
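The first two features can be sketched with nothing more than Python's built-in `sqlite3` module. This is a minimal illustration, not Shuri's actual code: the table layout, the function names, and the use of a random token for share links are all assumptions, and the Flask routes that would call these functions are left out.

```python
import secrets
import sqlite3


def open_store(path=":memory:"):
    """Open a SQLite database and create the notes table if it is missing."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS notes ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "title TEXT NOT NULL, "
        "body TEXT NOT NULL, "
        "share_token TEXT UNIQUE)"
    )
    return conn


def create_note(conn, title, body):
    """Insert a note and return its id."""
    cur = conn.execute(
        "INSERT INTO notes (title, body) VALUES (?, ?)", (title, body)
    )
    conn.commit()
    return cur.lastrowid


def edit_note(conn, note_id, title, body):
    """Overwrite the title and body of an existing note."""
    conn.execute(
        "UPDATE notes SET title = ?, body = ? WHERE id = ?",
        (title, body, note_id),
    )
    conn.commit()


def delete_note(conn, note_id):
    """Remove a note by id."""
    conn.execute("DELETE FROM notes WHERE id = ?", (note_id,))
    conn.commit()


def share_note(conn, note_id):
    """Attach a random, hard-to-guess token that a share link could carry."""
    token = secrets.token_urlsafe(16)
    conn.execute(
        "UPDATE notes SET share_token = ? WHERE id = ?", (token, note_id)
    )
    conn.commit()
    return token


def get_shared_note(conn, token):
    """Return (title, body) for a share token, or None if nothing matches."""
    return conn.execute(
        "SELECT title, body FROM notes WHERE share_token = ?", (token,)
    ).fetchone()
```

Passing a file path such as `open_store("anote.db")` instead of the in-memory default would keep the notes between runs, which is roughly what a local SQLite setup gives you.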

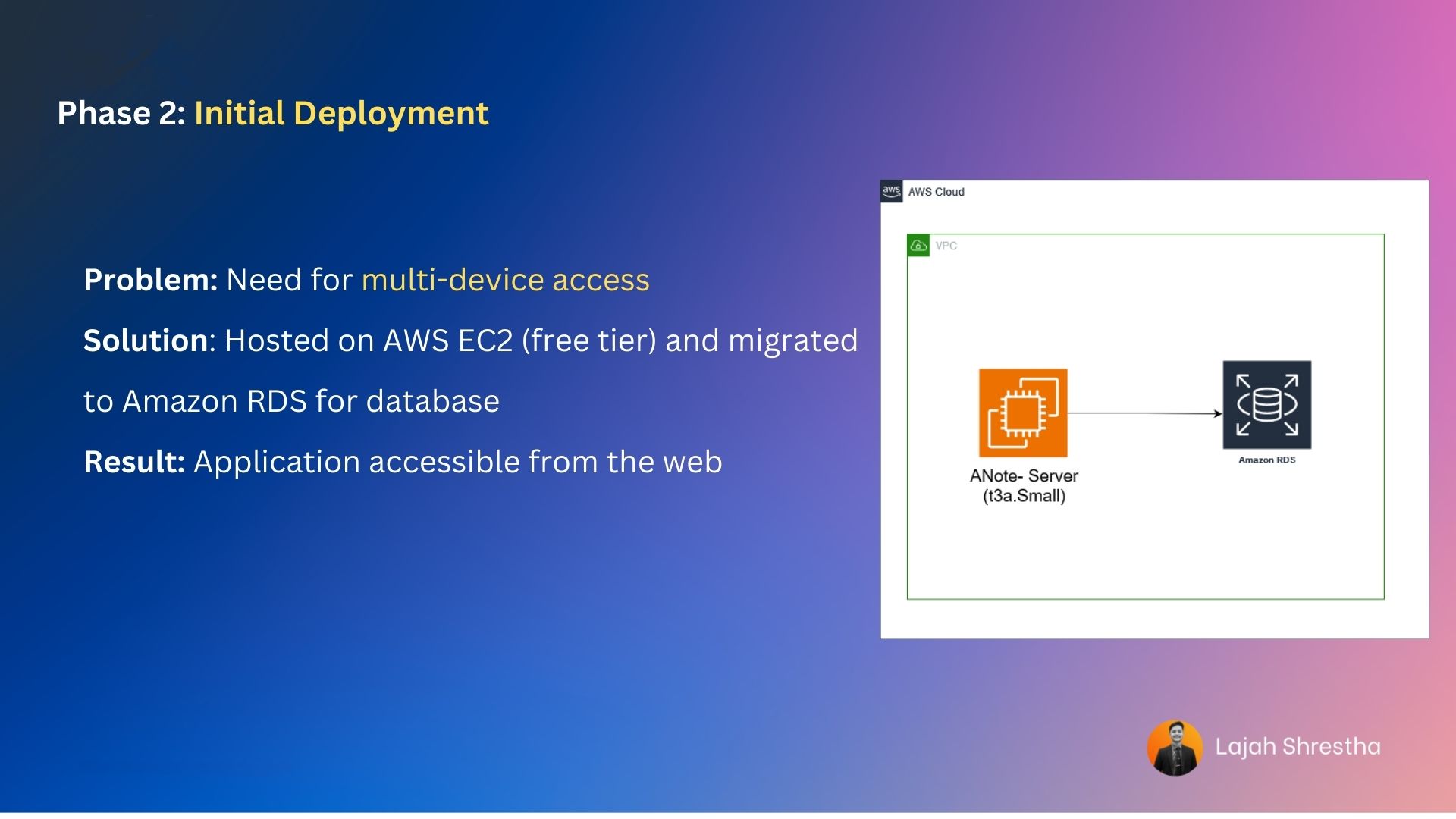

Phase 2: Initial Deployment

Shuri realizes she can't use the same device every time and needs to access her notes from multiple devices.

To make the contents accessible from the web, she decides to host the application.

She deploys it on her own using an EC2 instance with AWS's free tier service.

She replaces SQLite with Amazon RDS for improved database management.

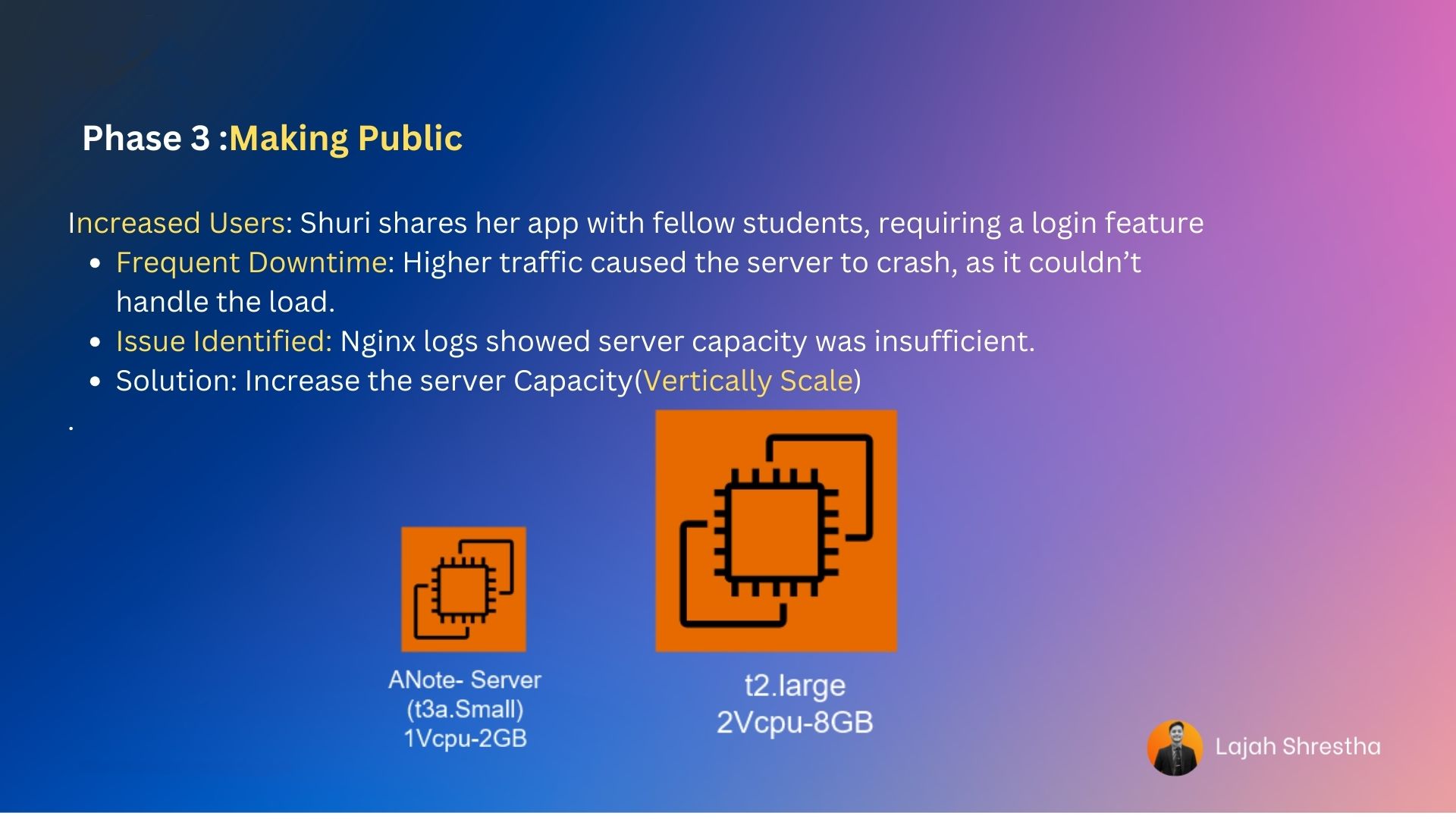

Phase 3: Growing User Base

Shuri shares her application with other college students and realizes she needs to build login functionality.

The application proves helpful, but it frequently goes down. Upon examining the nginx logs, Shuri notices that higher request volumes cause the server to crash, indicating insufficient server capacity.

Given the small but growing user base and the server capacity issues, Shuri scales the application vertically. She upgrades the server from 2 GB to 8 GB of RAM to accommodate the fourfold increase in users.

Phase 4: CI/CD

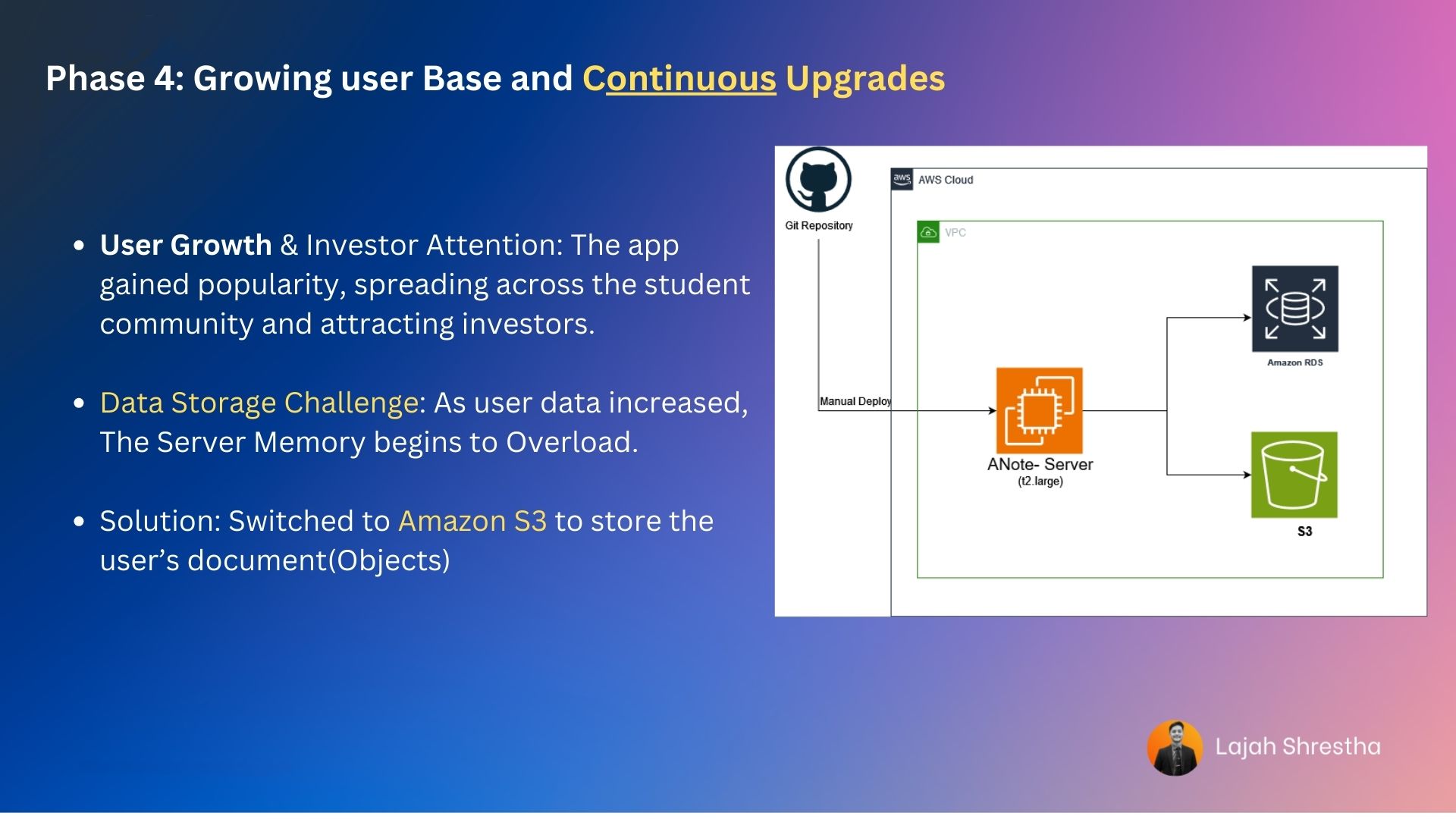

As the application gained popularity among students and attracted investors' attention, the user base expanded rapidly.

With this growth, data storage became a significant challenge. Shuri switched from EBS volumes to Amazon S3, a serverless object-level storage service. As demand increased, the need for continuous improvement in reliability and user-friendliness became apparent. Shuri would make changes locally, test them, and push the code to the repository.

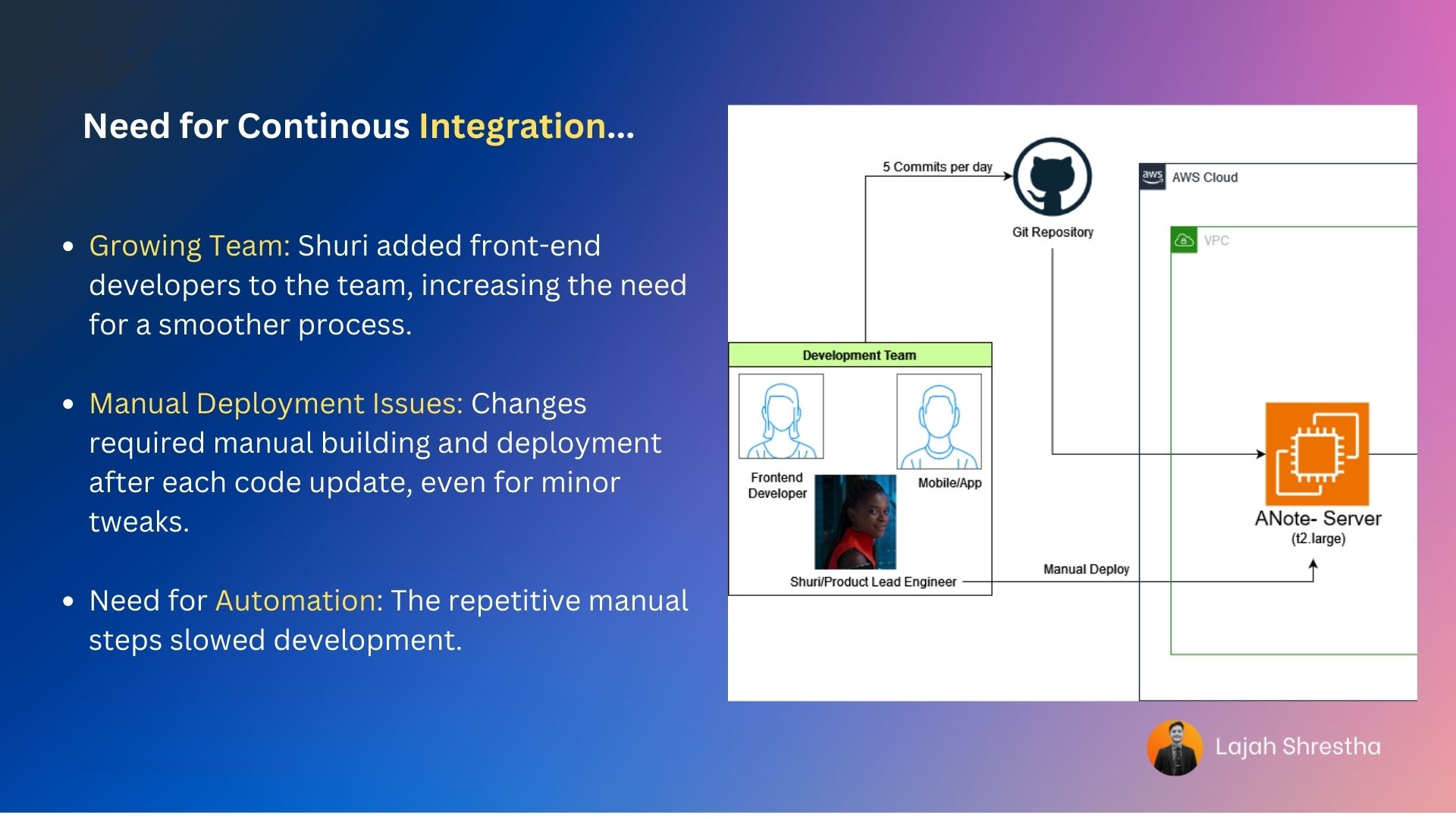

New team members, including front-end developers, were brought on board as the application needed a full upgrade.

The development process involved pulling the latest code from the repository and running build and run commands to reflect changes—even for minor updates like adjusting font sizes. This manual and repetitive process proved time-consuming and inefficient.

This called for automation to eliminate the time-consuming, repetitive manual work. This is where our next character, Peter, comes into the story:

Automation: Peter addressed this challenge by implementing a CI/CD pipeline with AWS CodePipeline and AWS CodeBuild. The pipeline automates the repetitive build and deploy process and triggers automatically when code is pushed to the repository.

With this automation in place, Shuri can now focus on adding new functionality without worrying about server deployment.
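To make the idea concrete, here is a small conceptual sketch of what any such pipeline does: run a fixed list of steps in order and stop at the first failure so that broken code never reaches the deploy step. It is not a CodePipeline or CodeBuild configuration; the function name and the step names are made up for illustration.

```python
import subprocess
import sys


def run_pipeline(steps):
    """Run (name, command) steps in order and stop at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, command in steps:
        result = subprocess.run(command)
        if result.returncode != 0:
            # A failing step aborts the pipeline; later steps never run.
            print(f"step '{name}' failed with exit code {result.returncode}")
            break
        completed.append(name)
    return completed
```

A call such as `run_pipeline([("test", [sys.executable, "-m", "unittest"]), ("build", build_cmd), ("deploy", deploy_cmd)])` would mirror the test, build, deploy order, where `build_cmd` and `deploy_cmd` stand in for whatever commands the project really uses.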

Phase 5: Need for a Standardized Deployment Process

Shuri's passion for her product and the positive user feedback drove her to continuously add features and address reported issues. However, a problem emerged: while the changes worked perfectly on her local machine, deploying them to the live server often caused the application to crash, resulting in downtime. This highlighted the need for a more robust deployment strategy.

Two key pre-deployment practices were missing from the process, which surfaced as two issues:

Issue 1:

Inconsistency between development and live environments.

Solution by Peter: Containerization

To keep the environments consistent, the application was containerized using Docker.

Docker is a platform for developing, shipping, and running applications in containers. Containers are lightweight, portable, and self-sufficient units that can run consistently across different environments. Docker solves several key problems:

Environment consistency: It ensures that the application runs the same way in development, testing, and production environments.

Isolation: Containers isolate applications from each other and from the underlying system, reducing conflicts and improving security.

Efficiency: Docker containers are more lightweight and use fewer resources compared to traditional virtual machines.

Portability: Containerized applications can easily be moved between different systems and cloud platforms.

By using Docker, Shuri and her team can package the application and its dependencies into a container, ensuring that it behaves consistently across all environments and simplifying the deployment process.

Issue 2:

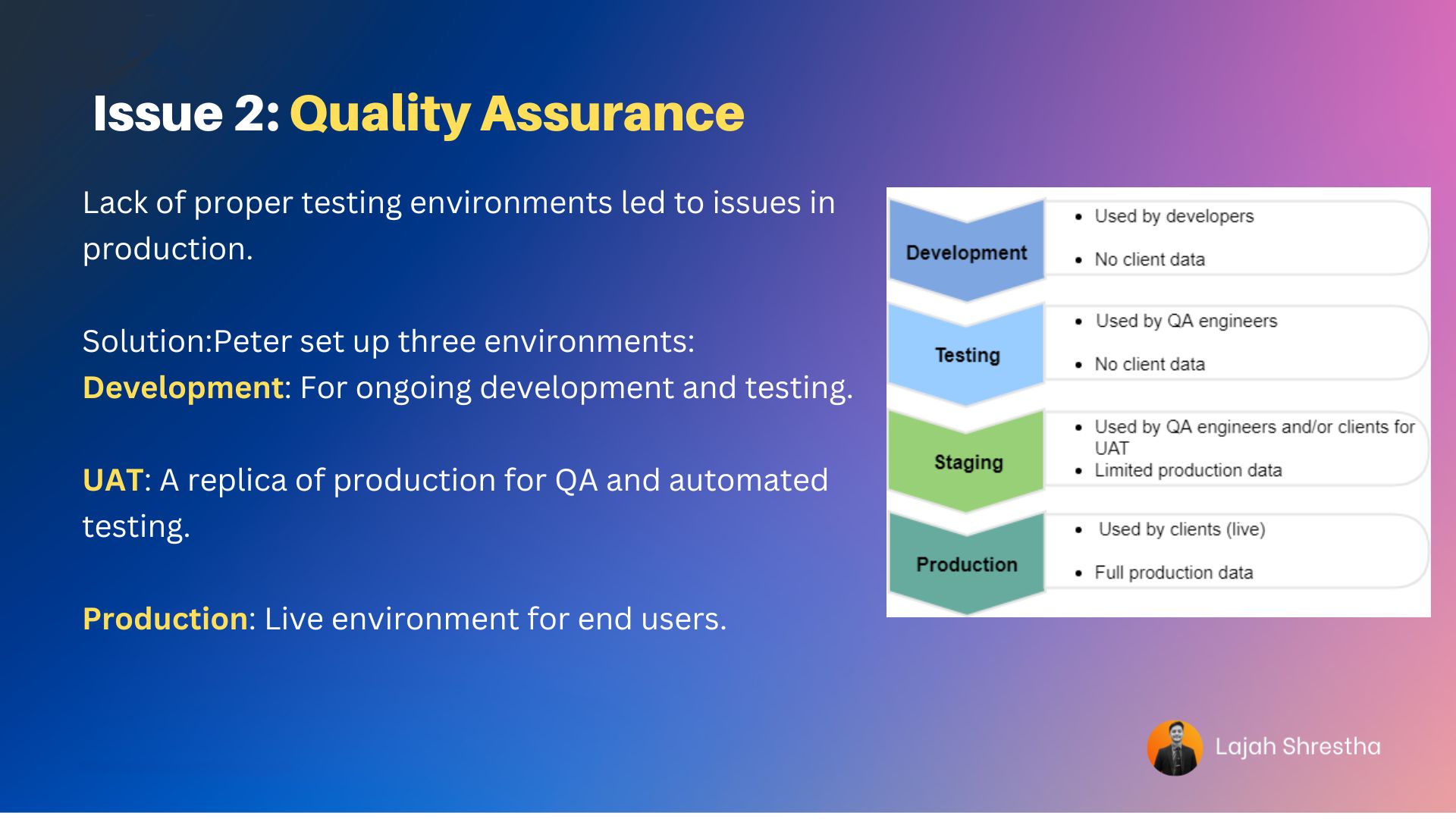

Lack of thorough testing and validation.

Solution: Set up development, UAT, and production environments.

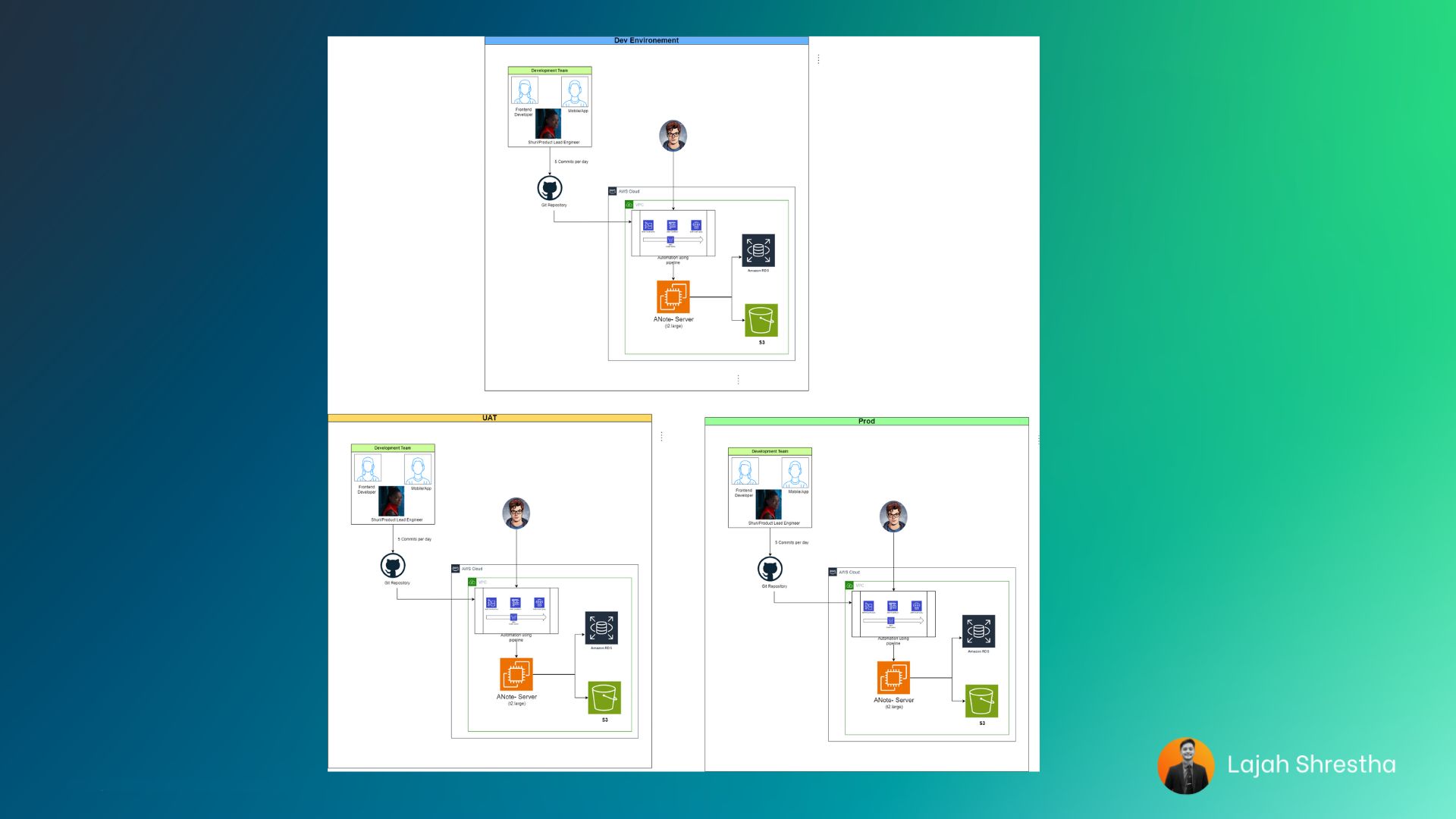

Peter, our DevOps hero, steps in. He establishes a three-stage deployment process, Development → Staging → Production:

Development: Where new features are built and initially tested

Staging (UAT): A mirror of production for final testing and validation

Production: The live environment accessed by users

This approach ensures thorough testing and reduces the risk of issues in the live environment, improving overall application stability and user experience.
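One common way for the same code to run in all three stages is to pick its settings from an environment variable. The sketch below assumes a hypothetical `APP_ENV` variable and placeholder database hosts; none of these names come from the actual project.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    name: str
    debug: bool
    database_host: str


# Hypothetical values, for illustration only.
ENVIRONMENTS = {
    "development": Settings("development", True, "localhost"),
    "staging": Settings("staging", False, "staging-db.example.internal"),
    "production": Settings("production", False, "prod-db.example.internal"),
}


def load_settings(environ=os.environ):
    """Select settings by APP_ENV, defaulting to development."""
    env_name = environ.get("APP_ENV", "development")
    try:
        return ENVIRONMENTS[env_name]
    except KeyError:
        # Fail loudly rather than silently falling back to another stage.
        raise ValueError(f"unknown environment: {env_name!r}") from None
```

Rejecting unknown names outright is a deliberate choice here: a typo in the variable should stop the application from starting instead of quietly pointing it at the wrong database.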

To avoid manually replicating the environment twice, Peter wrote an AWS CloudFormation template and used it as an infrastructure as code tool.

Infrastructure as Code (IaC) is the practice of managing and provisioning computing infrastructure through machine-readable definition files, rather than physical hardware configuration or interactive configuration tools. Here's how it helps Peter:

Consistency: IaC ensures that the same environment is reproduced every time, eliminating discrepancies between development, staging, and production.

Automation: Peter can automatically create and manage multiple environments without manual intervention, saving time and reducing human errors.

Version Control: Infrastructure configurations can be versioned, allowing Peter to track changes and roll back if needed.

Scalability: As Shuri's application grows, Peter can easily scale the infrastructure by modifying the CloudFormation template.

Documentation: The CloudFormation template serves as living documentation of the infrastructure, making it easier for team members to understand the setup.

By using CloudFormation as an IaC tool, Peter significantly streamlines the process of creating and managing multiple environments, ensuring consistency and reducing the time and effort required for infrastructure management.
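As a rough illustration, the snippet below builds a very small CloudFormation template as a Python dictionary and prints it as JSON. It is a sketch under assumptions, not Peter's template: the single `AppServer` resource, the parameter names, and the `t3.micro` default are invented for the example, and a real stack would also define networking, the database, and more.

```python
import json


def build_template():
    """Return a minimal CloudFormation template as a Python dict."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Sketch: one EC2 instance per environment",
        "Parameters": {
            # The same template is reused; only the parameter values change.
            "EnvironmentName": {
                "Type": "String",
                "AllowedValues": ["development", "staging", "production"],
            },
            "InstanceType": {"Type": "String", "Default": "t3.micro"},
            "ImageId": {"Type": "AWS::EC2::Image::Id"},
        },
        "Resources": {
            "AppServer": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "InstanceType": {"Ref": "InstanceType"},
                    "ImageId": {"Ref": "ImageId"},
                    "Tags": [
                        {"Key": "Environment", "Value": {"Ref": "EnvironmentName"}}
                    ],
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```

Creating one stack per environment from this single template, each with a different `EnvironmentName` value, is what keeps development, staging, and production structurally identical.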

Billing Hazard: With the environments replicated, more resources were running, and being billed, around the clock.

Peter's solution: Turn off EC2 instances and RDS databases during off-hours and at night using EventBridge Scheduler and auto-shutdown features. This cut costs by nearly half.
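One way such a shutdown could look is a small function that a scheduled job invokes every evening. The sketch below uses the boto3 calls `stop_instances` and `stop_db_instance`; the function names, the event shape, and the idea of running it as a Lambda handler are assumptions for illustration, not details from Peter's setup.

```python
def stop_resources(ec2_client, rds_client, instance_ids, db_identifiers):
    """Stop the given EC2 instances and RDS database instances.

    The clients are passed in so the logic can be tried without AWS access.
    """
    instance_ids = list(instance_ids)
    db_identifiers = list(db_identifiers)
    if instance_ids:
        ec2_client.stop_instances(InstanceIds=instance_ids)
    for db_id in db_identifiers:
        rds_client.stop_db_instance(DBInstanceIdentifier=db_id)
    return {
        "stopped_instances": instance_ids,
        "stopped_databases": db_identifiers,
    }


def lambda_handler(event, context):
    """Hypothetical entry point for a schedule to invoke.

    Assumed event shape: {"instance_ids": [...], "db_identifiers": [...]}
    """
    import boto3  # imported here so stop_resources has no AWS dependency

    return stop_resources(
        boto3.client("ec2"),
        boto3.client("rds"),
        event.get("instance_ids", []),
        event.get("db_identifiers", []),
    )
```

A matching morning job would call `start_instances` and `start_db_instance` in the same way to bring the environments back before the team starts work.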

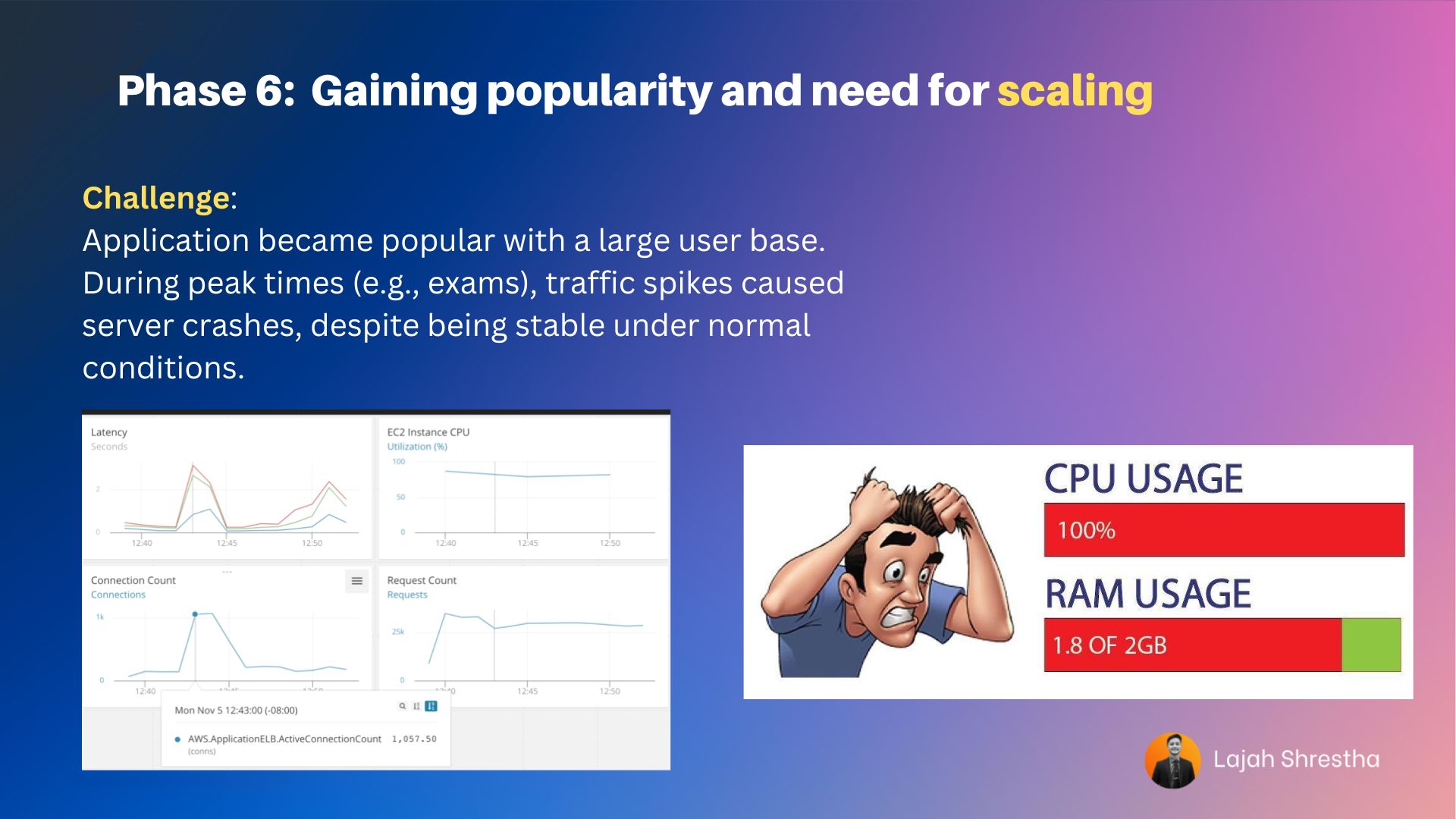

Phase 6: Gaining Popularity and the Need for Auto Scaling

As the application gained popularity, it attracted a large audience. During exam periods, there were sudden, unpredictable spikes in traffic. These huge surges caused the server to crash, even though it operated within normal limits on typical days.

To address this challenge, Peter implements an auto-scaling architecture that dynamically adjusts server capacity based on real-time metrics. He utilizes two key AWS services: Auto Scaling Groups (ASG) and Application Load Balancers (ALB). The ASG automatically increases or decreases the number of EC2 instances in response to fluctuating demand, while the ALB efficiently distributes incoming traffic across these instances. This setup ensures optimal performance during traffic spikes, such as exam periods, while maintaining cost-effectiveness during periods of lower usage.
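The core scaling decision can be illustrated with a simple proportional rule: if each instance is busier than the target, add instances in proportion, and never go below or above the configured bounds. This is a simplified sketch of the idea, not the exact algorithm AWS Auto Scaling uses, and the function and parameter names are made up.

```python
import math


def desired_capacity(current_instances, metric_value, target_value,
                     min_size, max_size):
    """Return how many instances keep the average metric near the target.

    metric_value is the current average per instance (for example CPU %),
    and target_value is the level the group should hover around.
    """
    if current_instances < 1 or target_value <= 0:
        raise ValueError("need at least one instance and a positive target")
    # Scale the fleet in proportion to how far the metric is from the target.
    raw = math.ceil(current_instances * metric_value / target_value)
    # Clamp to the group's configured minimum and maximum size.
    return max(min_size, min(max_size, raw))
```

For example, two instances averaging 90% CPU against a 50% target give `desired_capacity(2, 90.0, 50.0, 1, 10) == 4`, while a quiet day with four instances at 10% CPU and a minimum size of two scales back in to two.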

Chain of Solutions: As we solve one problem, a new challenge often emerges, requiring another solution. This cycle of problem-solving and adaptation has been a key feature of Peter and Shuri's journey so far.

Even after implementing autoscaling, the application was slowing down despite CPU and memory usage being within normal limits.

Peter examined the statistics provided by Amazon CloudWatch logs and alarms. He noticed that the database response time was increasing, indicating that the function responsible for fetching data from RDS was becoming a bottleneck due to numerous simultaneous requests.

To address this, Shuri restructured the application into a microservice architecture. This allowed the module responsible for fetching data to be scaled and updated independently without causing system-wide downtime.

The team deployed the new architecture to Amazon ECS using Fargate for automated, managed scaling.

Summary:

This story illustrates the overall DevOps process, demonstrating how DevOps culture accelerates software delivery to users. It specifically showcases the journey from basic to advanced implementations using AWS services.
