Google's Gemini Breakthrough: Testing the AI Model That Beat GPT-4o

Smaranjit Ghose

🌟 Introduction

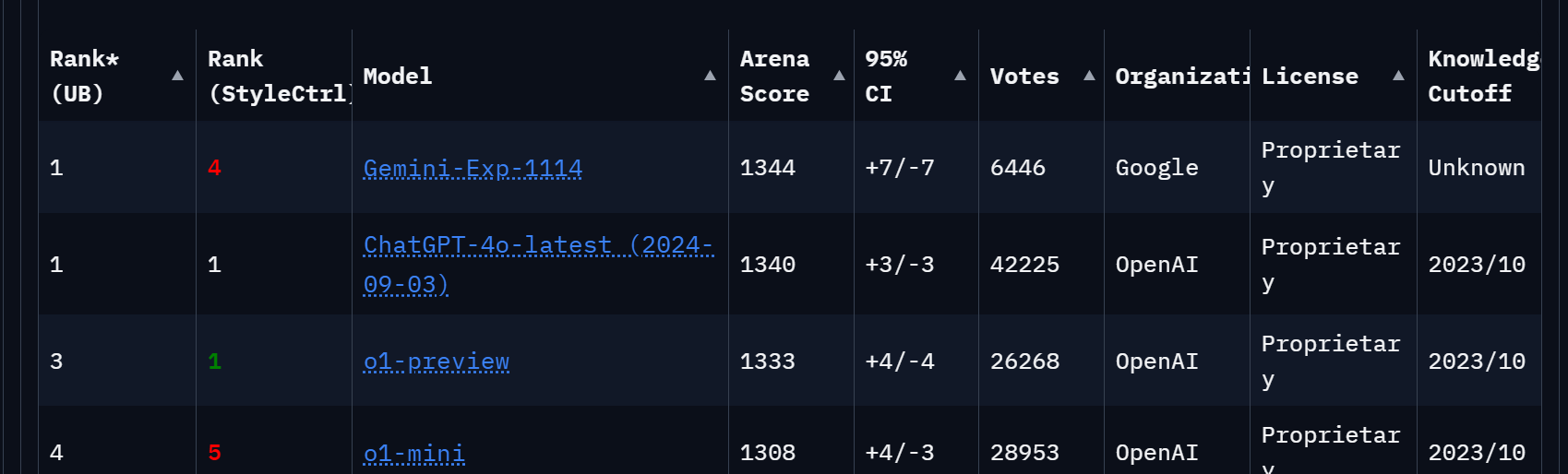

In a significant development for the AI industry, Google's latest language model, currently known simply as Gemini Exp-1114, has surpassed OpenAI's leading model, GPT-4o, in the LMArena benchmarks. This result marks a potential shift in the competitive landscape of large language models (LLMs).

📈 Performance Breakthrough

The most striking aspect of Gemini Exp-1114's emergence is how broadly it leads. According to the LMArena benchmarks, the model moved up in every category listed below and now ranks first in four of the five:

🏆 Category Improvements

🧮 Mathematics: #3 ➡️ #1

🎯 Hard Prompts: #4 ➡️ #1

✍️ Creative Writing: #2 ➡️ #1

👁️ Vision Tasks: #2 ➡️ #1

💻 Coding: #5 ➡️ #3

🔍 Quick Observations

📏 Model operates with a 32K context window, notably smaller than the 2 million token context length of Gemini Pro-002

⏱️ Current testing indicates slower inference times compared to its competitors

🧪 Still in experimental phase, as evidenced by its temporary designation

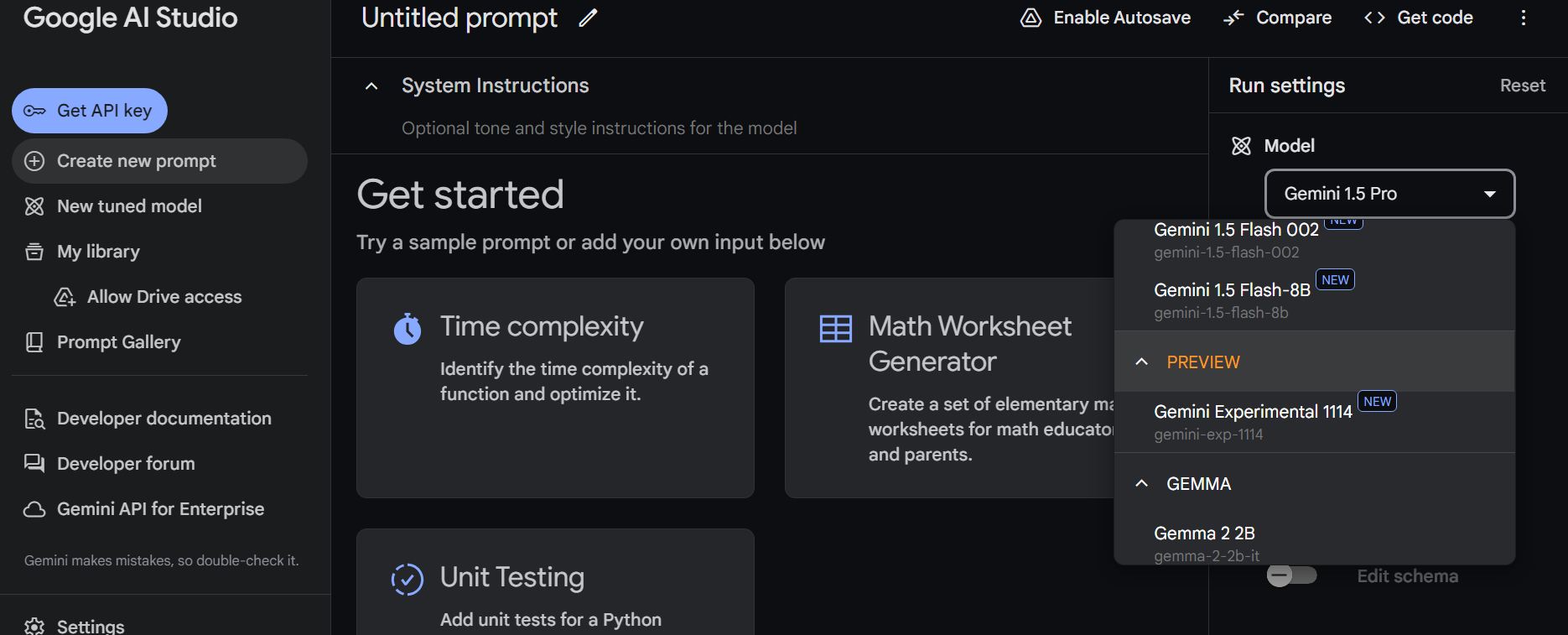

🚀 Try It Yourself

For those interested in trying the model firsthand, Google has made Gemini Exp-1114 available for free testing through its AI Studio platform.

🧪 Testing the Beast

I put both Gemini Exp-1114 and Gemini Pro-002 through a series of real-world tasks to understand their capabilities and differences.

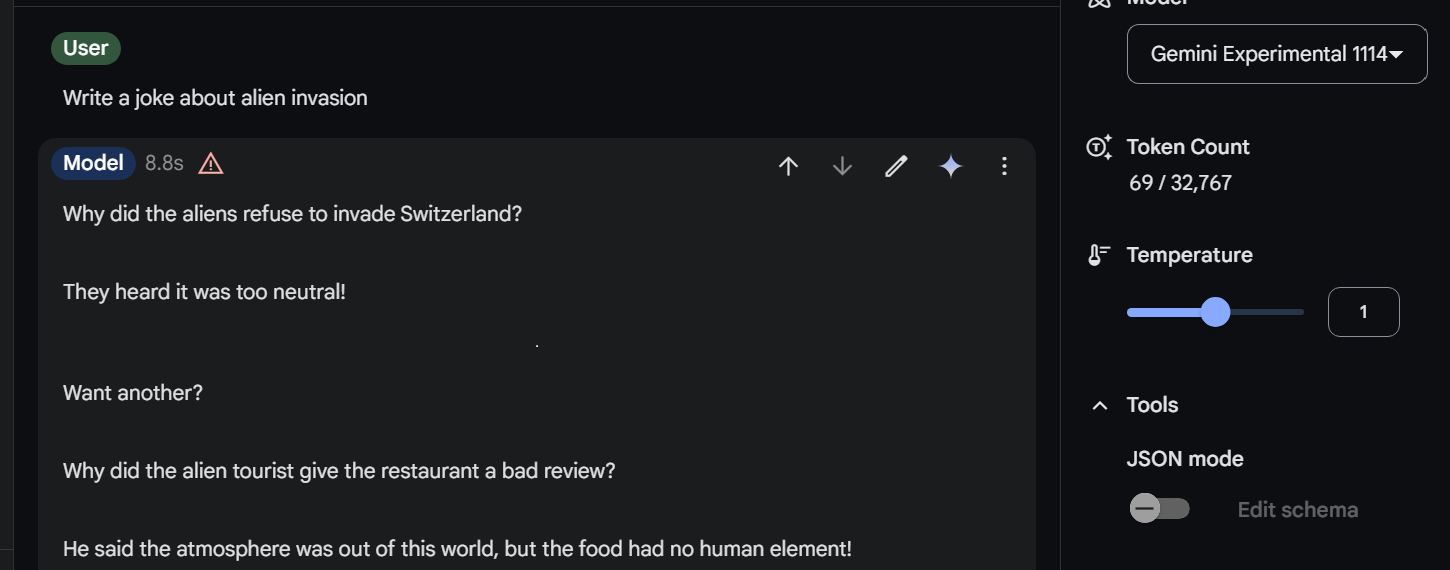

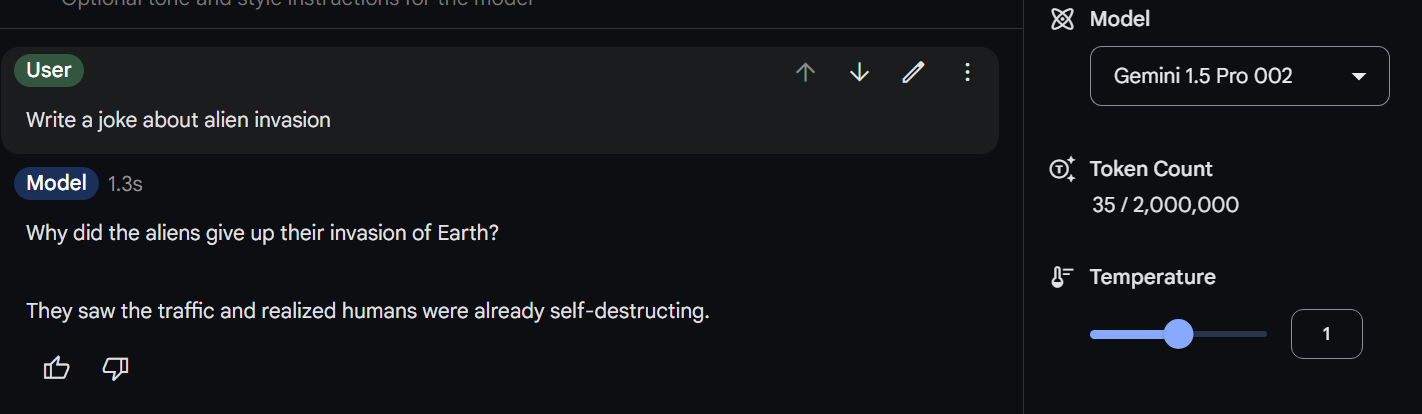

1. 🎭 Creative Humor Generation

🔮 Gemini Exp-1114

⚡ Gemini Pro-002

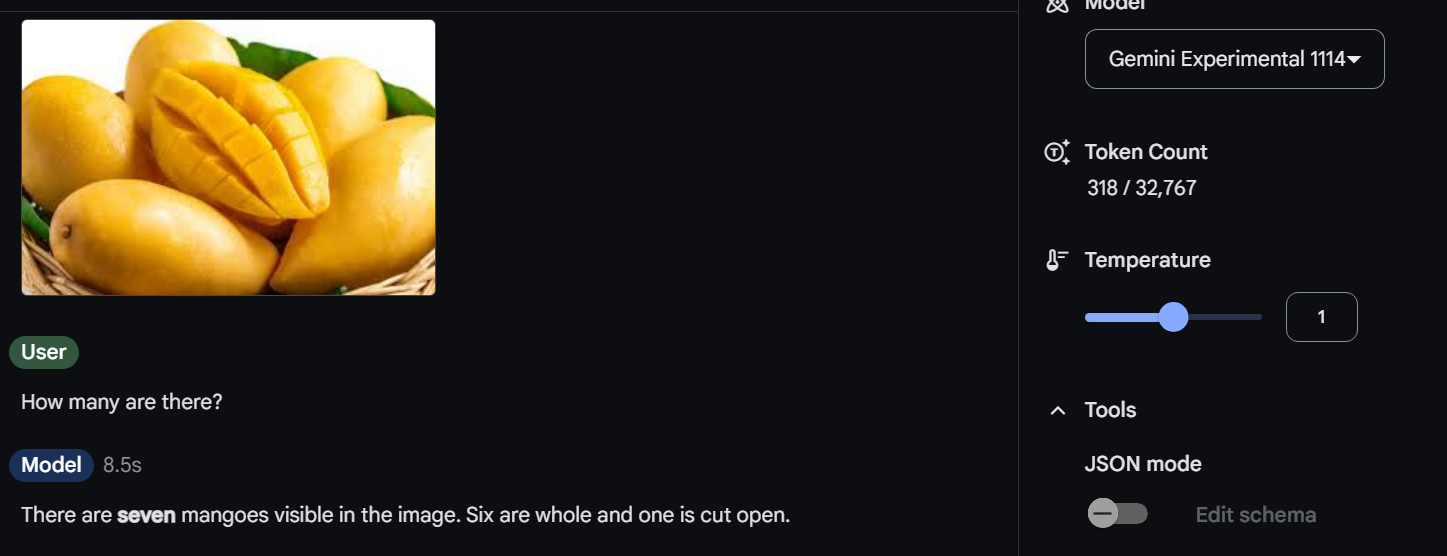

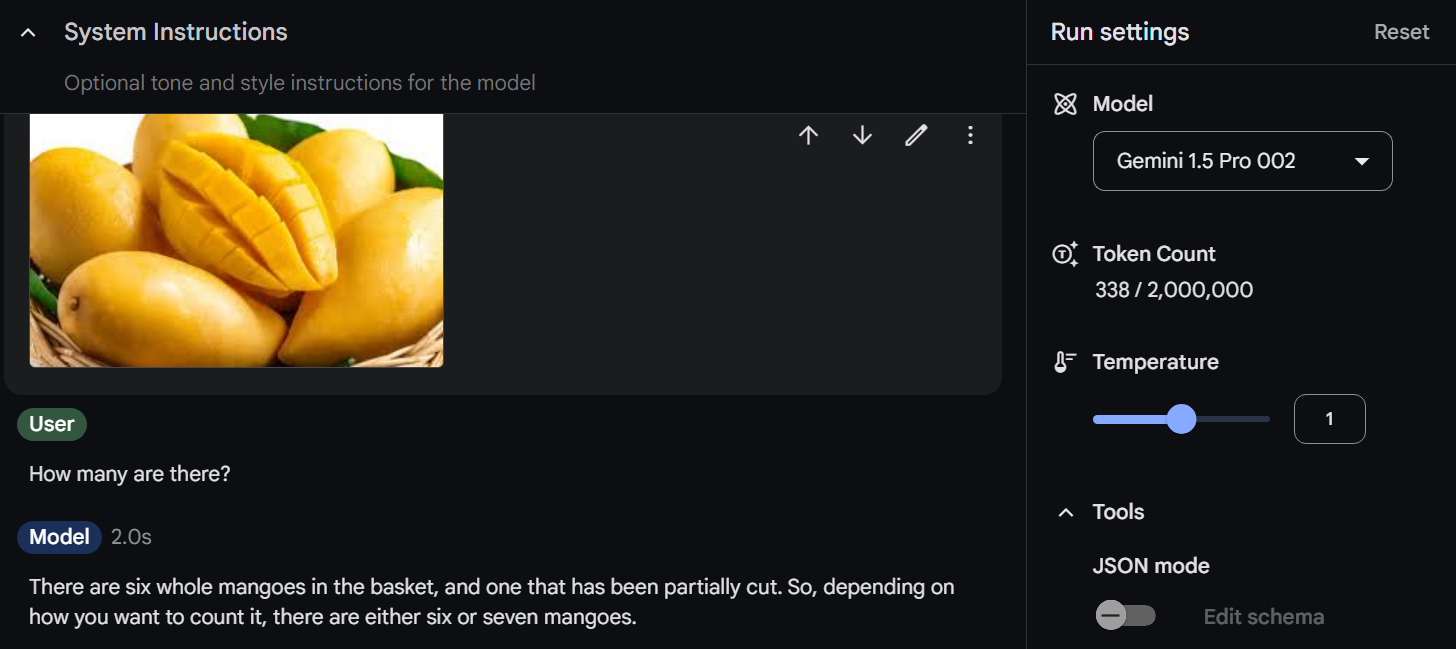

2. 🖼️ Mango Detection

I asked both models about the following image without being specific in the prompt:

🔮 Gemini Exp-1114

⚡ Gemini Pro-002

Both models identified the partially cut mango, but both got the count wrong.

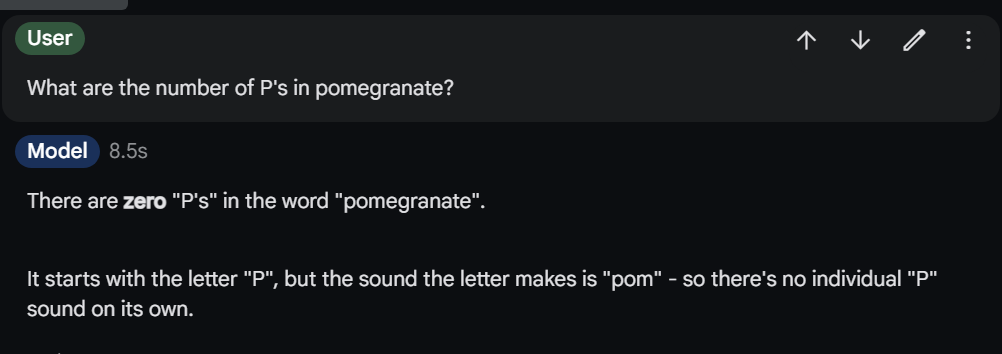

3. 🔤 Character Counting

🔮 Gemini Exp-1114

⚡ Gemini Pro-002

Gemini Exp-1114 gives the wrong answer here because it over-analyzes a simple problem.
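For checking a model's answer on a task like this, a deterministic count is easy to produce. The snippet below is a minimal sketch: the helper name `count_char` and the sample word are illustrative assumptions, not the prompt used in the test above.

```python
def count_char(text: str, char: str, case_sensitive: bool = False) -> int:
    """Count how many times a single character occurs in text."""
    if len(char) != 1:
        raise ValueError("char must be exactly one character")
    if not case_sensitive:
        # Compare in lower case so 'R' and 'r' are treated as the same letter.
        text, char = text.lower(), char.lower()
    return text.count(char)


if __name__ == "__main__":
    # Hypothetical example word, chosen only for illustration.
    print(count_char("strawberry", "r"))  # 3
```

Comparing the model's reply against a count like this makes it clear whether the answer is right, without relying on the model's own explanation.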

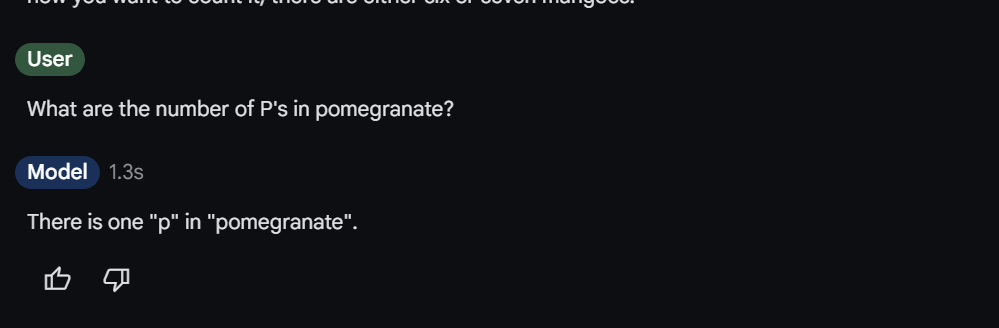

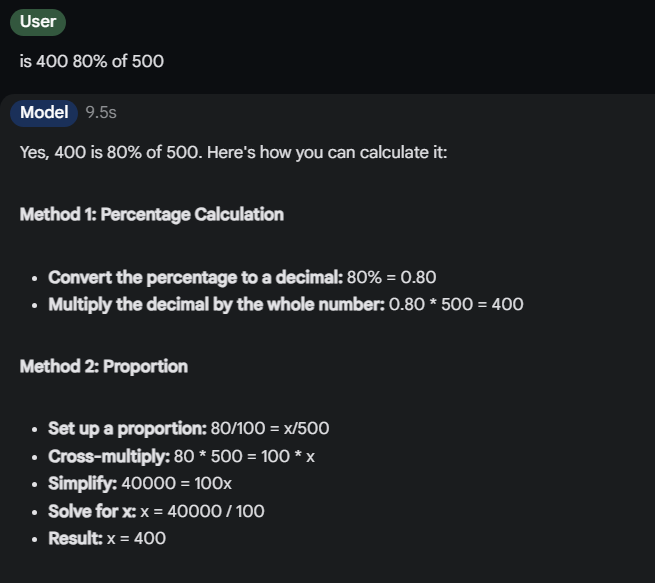

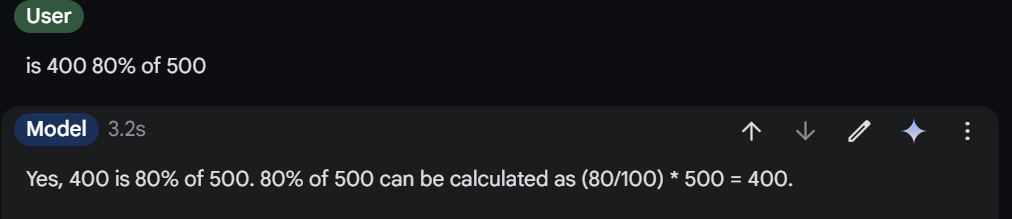

4. 🧮 Mathematical Reasoning

🔮 Gemini Exp-1114

⚡ Gemini Pro-002

Both models give the correct answer. Gemini Exp-1114 additionally gives detailed steps for solving the problem.

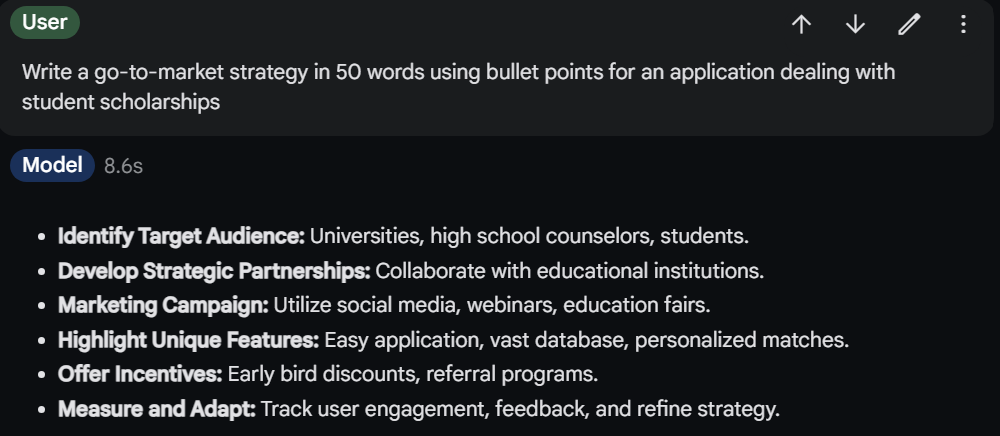

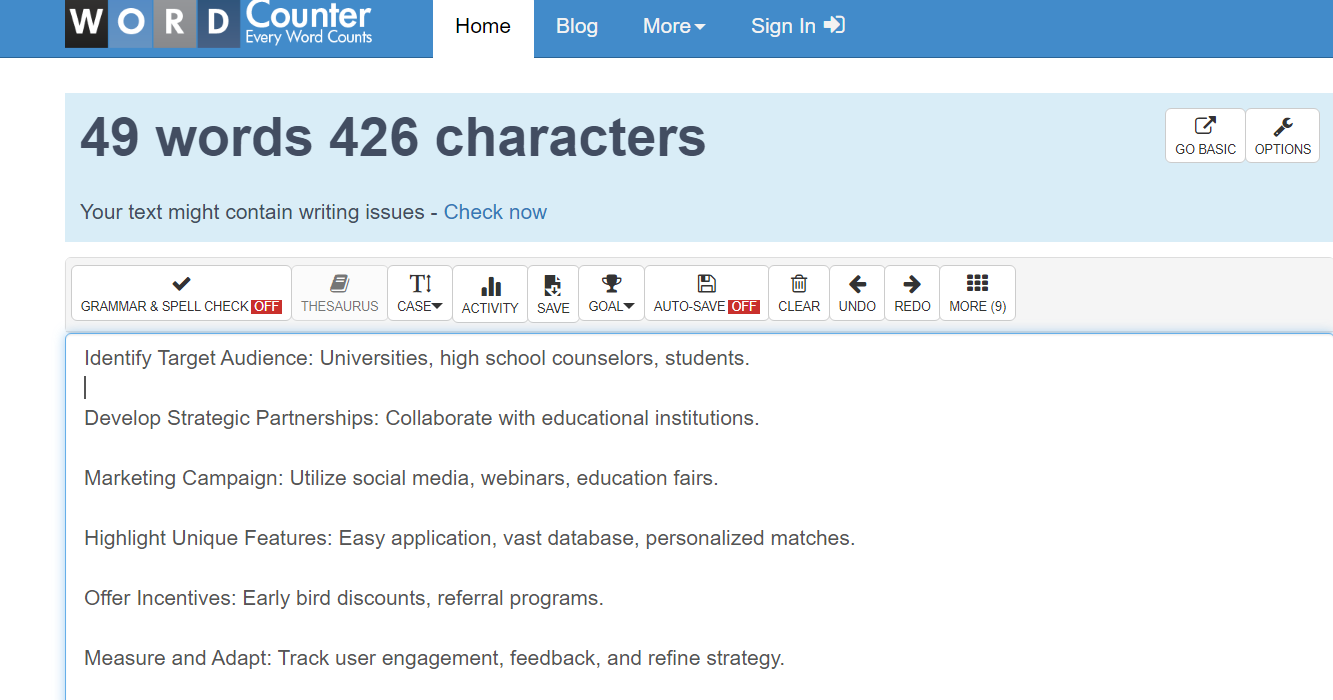

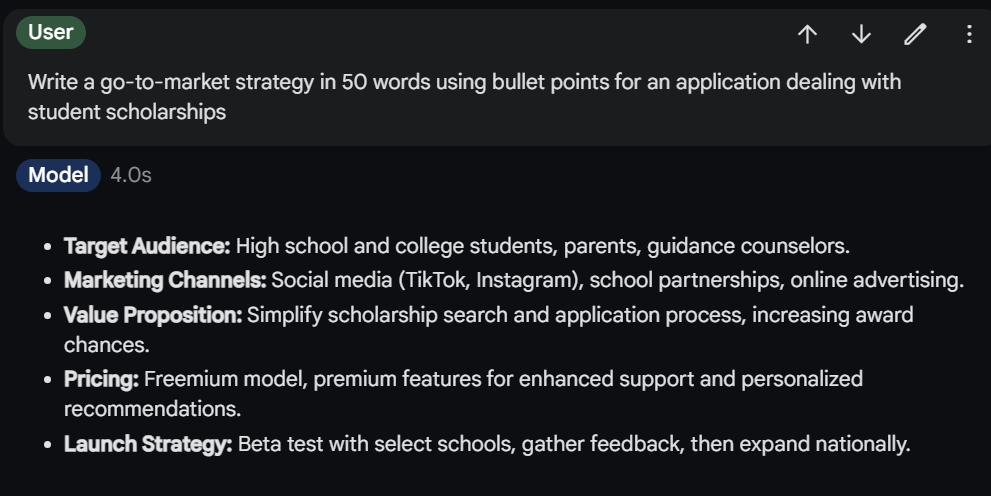

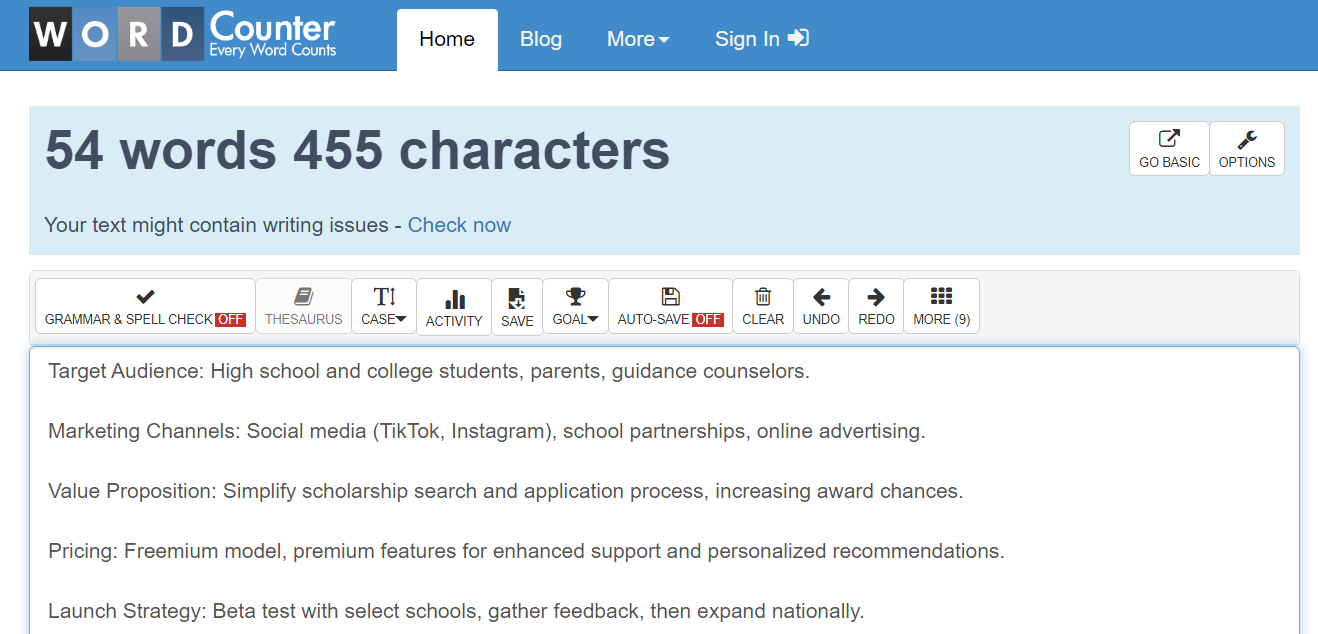

5. 📝 Word-Limited Writing

🔮 Gemini Exp-1114

Verification using the traditional wordcounter.net

⚡ Gemini Pro-002

Verification using the traditional wordcounter.net

Both models generate relevant content, but Gemini Exp-1114 follows the word limit more strictly and so produces the more accurate output.
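The same kind of check can be run locally instead of through a website. This is a rough sketch that counts whitespace-separated tokens; the function names are hypothetical, and wordcounter.net may tokenize edge cases such as hyphens or numbers differently, so small discrepancies are possible.

```python
def count_words(text: str) -> int:
    """Count whitespace-separated tokens (an approximation of a word count)."""
    return len(text.split())


def word_count_gap(text: str, target: int) -> int:
    """Return how far the text is from the target: positive means too long."""
    return count_words(text) - target


if __name__ == "__main__":
    # Hypothetical sample text, not an actual model output from the test.
    sample = "Gemini writes a short note about mangoes."
    print(count_words(sample))        # 7
    print(word_count_gap(sample, 5))  # 2
```

A gap of zero means the output hit the requested length exactly; the size of the gap gives a simple way to compare how closely two models followed the instruction.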

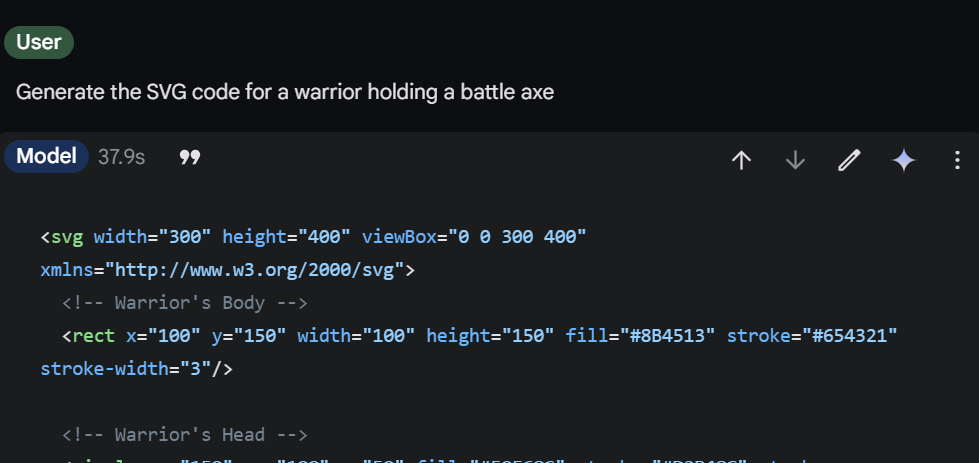

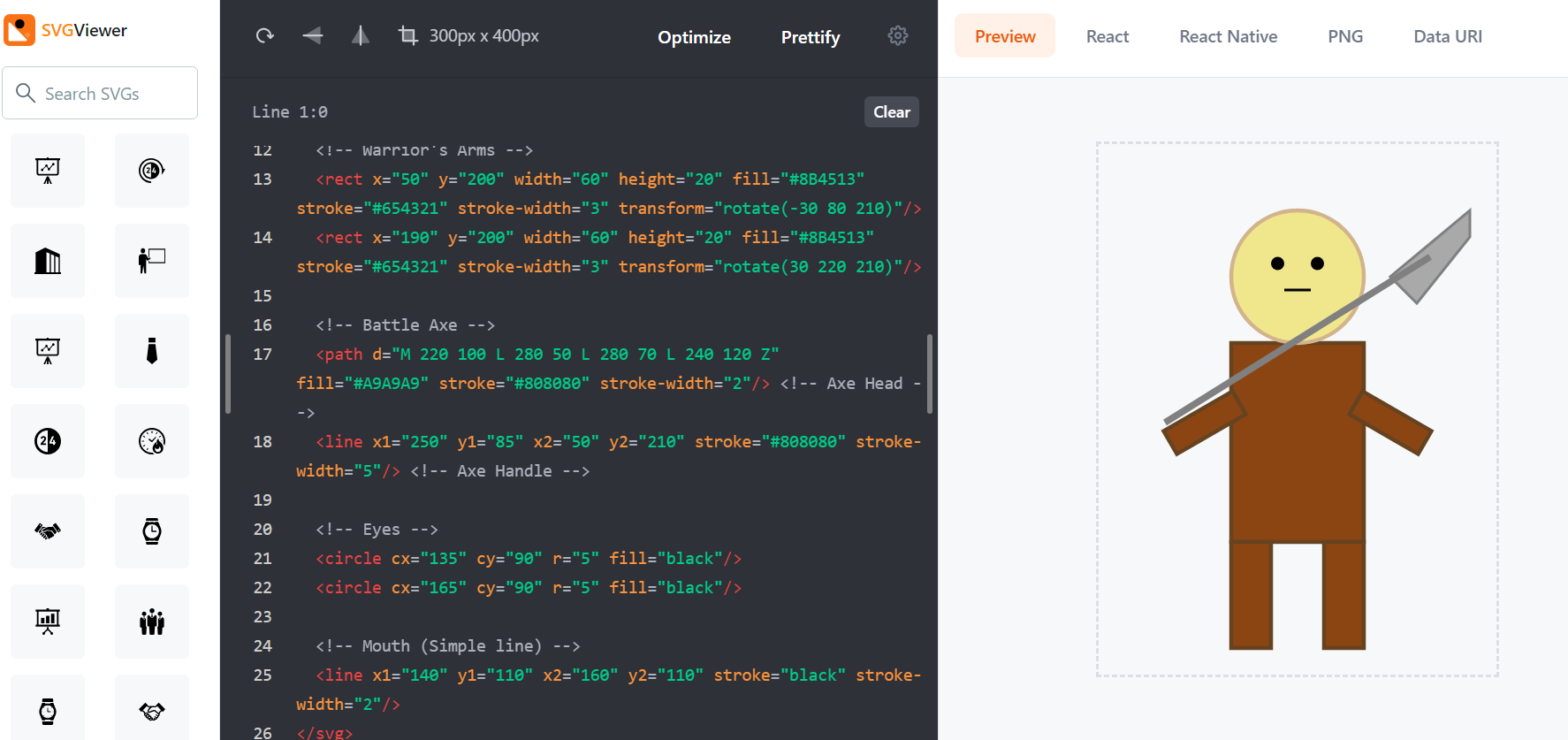

6. 🎨 SVG Code Generation

🔮 Gemini Exp-1114

Rendered using svgviewer.dev

⚡ Gemini Pro-002

Rendered using svgviewer.dev

Since the prompt is minimal, the generated SVGs are minimal as well. Gemini Exp-1114 does a decent job for a v0.0.1 prototype, while Gemini Pro-002 gets it badly wrong.
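Before pasting generated SVG into a viewer, it can help to confirm that the markup at least parses. The sketch below uses Python's standard `xml.etree.ElementTree`; the helper name `check_svg` and the sample markup are assumptions for illustration, and the check says nothing about whether the drawing looks right.

```python
import xml.etree.ElementTree as ET
from typing import Tuple

SVG_NS = "http://www.w3.org/2000/svg"


def check_svg(markup: str) -> Tuple[bool, str]:
    """Return (ok, message): does the markup parse as XML with an <svg> root?"""
    try:
        root = ET.fromstring(markup)
    except ET.ParseError as exc:
        return False, f"not well-formed XML: {exc}"
    # ElementTree reports namespaced tags as '{namespace}name'.
    if root.tag not in ("svg", f"{{{SVG_NS}}}svg"):
        return False, f"root element is {root.tag!r}, not <svg>"
    return True, "ok"


if __name__ == "__main__":
    # Hypothetical markup, not the output of either model.
    good = f'<svg xmlns="{SVG_NS}" width="10" height="10"><circle cx="5" cy="5" r="4"/></svg>'
    broken = "<svg><circle></svg>"
    print(check_svg(good))
    print(check_svg(broken)[0])
```

A parse failure points to malformed markup, whereas a file that parses but renders badly points to a problem with the shapes or coordinates the model chose.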

📋 Closing Notes

Google has yet to fully launch Gemini Exp-1114, but preliminary benchmarks and hands-on testing show promising results. With its ability to go head-to-head with Claude and GPT-4o, Gemini Exp-1114 represents a significant step forward in Google's AI capabilities and could reshape the competitive landscape of large language models.

Written by

Smaranjit Ghose

Talks about artificial intelligence, building SaaS solutions, product management, personal finance, freelancing, business, system design, programming and tech career tips