Simplifying Reinforcement Learning Terminologies - AWS DeepRacer Edition

Sreekesh Iyer


If you’re new to machine learning, and to reinforcement learning in particular, it’s completely okay to have a hard time understanding all the fancy jargon in front of you. Here’s my attempt to simplify it while we work on a little robo-car :)

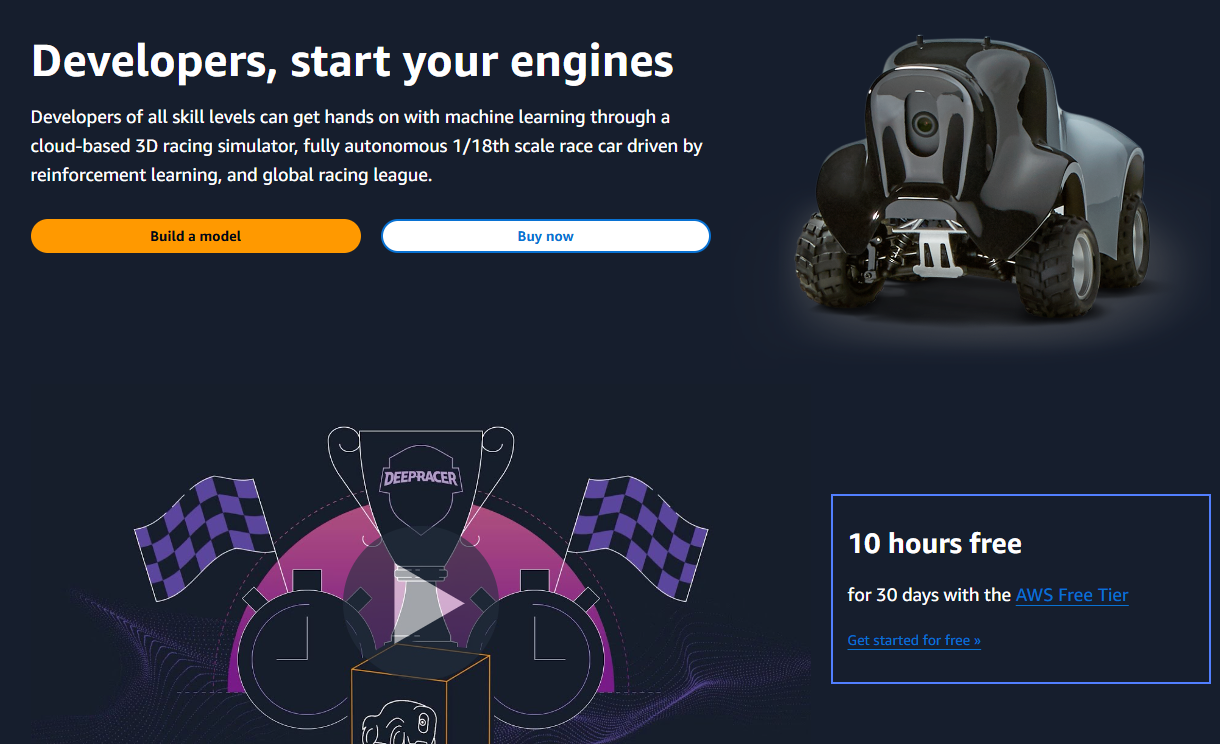

About AWS DeepRacer

Although the contest is, unfortunately, being discontinued after this year, DeepRacer is arguably the most entertaining service available on the AWS Console today. It’s the arena where you can train a virtual car to run around a racetrack, and the challenge is to complete 3 laps as quickly as possible without going off the track.

—

Before I get to the jargon, let’s first try to understand

What is Reinforcement Learning?

Reinforcement learning (RL) is an interdisciplinary area of machine learning and optimal control concerned with how an intelligent agent should take actions in a dynamic environment in order to maximize a reward signal ~ Wikipedia

While I barely made it through that sentence, let’s imagine reinforcement learning as training a dog to fetch a ball for you. Every time the doggo fetches the ball, you give the little guy some treats. You’re giving him positive reinforcement, or, as we call it, an incentive, to perform that action. This is basically what we do in RL as well: we give our intelligent agent a reward based on its actions to steer it toward a desired result.

In the case of AWS DeepRacer, the agent is a car, and the desired result is for it to complete the track as quickly as possible without going off it. This means our rewards need to account for the car’s speed, the shape of the track, the turns and more.
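In DeepRacer, the reward is written as a Python function that receives a `params` dictionary describing the car’s current state. Below is a minimal sketch, assuming the documented input keys `all_wheels_on_track`, `distance_from_center`, `track_width` and `speed`. The band widths, the weights and the 4 m/s speed cap are illustrative assumptions, not tuned values.

```python
def reward_function(params):
    """Return a reward for one step of the car, given DeepRacer's params dict."""
    # Leaving the track gets an almost-zero reward (illustrative value)
    if not params["all_wheels_on_track"]:
        return 1e-3

    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]
    speed = params["speed"]

    # Reward staying near the centre line; band widths are assumptions
    if distance_from_center <= 0.1 * track_width:
        reward = 1.0
    elif distance_from_center <= 0.25 * track_width:
        reward = 0.5
    else:
        reward = 0.1

    # Encourage speed, assuming ~4 m/s is the top of the action space
    reward *= 1.0 + min(speed, 4.0) / 4.0

    return float(reward)
```

Staying centred at full speed earns the most here, which is one simple way to encode "stay on track, but go fast".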

Now that we have an understanding of what RL is, let’s look at the hyperparameters that can have an impact on our model’s end results.

Batch Size

Neural-network-based models train in iterations, meaning they update their weights after every round of learning. The batch size controls how many experiences the model learns from in each of those updates. Keep in mind that every hyperparameter has its benefits and trade-offs. Here, a larger batch size (say 128 rather than 64) gives the model a wider set of experiences to learn from in each update and produces steadier, smoother updates; however, each update takes more memory and time.

In the DeepRacer context, the batch size is the number of recent experiences sampled at random from the experience buffer for each update of the neural network’s weights. Larger batch sizes can make training slower to converge, but each update is more stable, so the model’s progress toward the optimal path tends to be more consistent.
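To see why larger batches give steadier updates, here is a small simulation rather than DeepRacer code: it fills a hypothetical experience buffer with noisy rewards, samples random mini-batches of different sizes, and measures how much the batch average jumps around from one sample to the next. The buffer contents and sizes are assumptions made purely for illustration.

```python
import random
import statistics


def batch_mean_spread(buffer, batch_size, trials=500, seed=0):
    """Std. dev. of the mean of randomly sampled mini-batches (lower = steadier)."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.sample(buffer, batch_size))  # sample without replacement
        for _ in range(trials)
    ]
    return statistics.stdev(means)


# Hypothetical experience buffer: 10,000 noisy per-step rewards
rng = random.Random(42)
buffer = [rng.gauss(1.0, 0.5) for _ in range(10_000)]

for size in (32, 64, 128, 256):
    print(size, round(batch_mean_spread(buffer, size), 4))
```

Each doubling of the batch size shrinks the spread by roughly a factor of √2, which is the "steadier updates" benefit; the cost is more computation per update.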

Entropy

I first heard this term in Chemistry, and very simply, it’s a measure of randomness. Think of it as how much you want your model to explore during training, that is, how many possibilities it tries before it settles on an optimal result. In the case of your robo-car, it can try different ways to cover a track, for example taking fast, shorter turns or slow, longer turns, and it eventually settles on the best option based on the incentive it gets.
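One common way to put a number on this randomness is the Shannon entropy of the policy’s action probabilities. A minimal sketch, assuming a hypothetical discrete action space of four actions: a policy that spreads its probability evenly (still exploring) has high entropy, while one that has settled on a single action has entropy close to zero.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


exploring = [0.25, 0.25, 0.25, 0.25]  # equally likely to try any action
settled = [0.97, 0.01, 0.01, 0.01]    # almost always picks the same action

print(round(entropy(exploring), 3))  # log(4) ≈ 1.386, the maximum for 4 actions
print(round(entropy(settled), 3))
```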

You might want to reduce the entropy over time as the model matures: once it’s close to the optimal path, you wouldn’t want it to wander away from it.
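One way to lower entropy as the model matures is to pick a smaller value each time you clone and retrain the model. The linear schedule below is a hypothetical helper, not a DeepRacer feature, and the start and end values are assumptions for illustration only.

```python
def entropy_for_round(round_idx, total_rounds, start=0.01, end=0.001):
    """Hypothetical helper: linearly lower the entropy value across training rounds."""
    if total_rounds <= 1:
        return start
    # Clamp so rounds past the planned total keep the final value
    fraction = min(round_idx, total_rounds - 1) / (total_rounds - 1)
    return start + (end - start) * fraction


for r in range(4):
    print(r, round(entropy_for_round(r, 4), 4))
```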

Discount factor

A value between 0 and 1, the discount factor controls how much weight your model gives to future rewards relative to immediate ones. A discount factor closer to 1 means the model values long-term success over a high reward for the action it just took, whereas a factor closer to 0 means it prioritises the incentive it just received. In DeepRacer, it’s critical that the car has long-term foresight so that it doesn’t land itself in bad positions around curves or turns that can lead to crashes.
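Formally, the discount factor γ weights a reward received k steps in the future by γ^k, so the return from a sequence of rewards is G = r₀ + γ·r₁ + γ²·r₂ + …. A small sketch with a made-up reward sequence in which a big reward only arrives a few steps later (think of slowing down before a curve and being rewarded for getting through it cleanly):

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, each weighted by gamma**k for a reward k steps in the future."""
    total = 0.0
    for k, r in enumerate(rewards):
        total += (gamma ** k) * r
    return total


# Hypothetical rewards: small now, large three steps later
rewards = [1.0, 0.0, 0.0, 10.0]

print(round(discounted_return(rewards, 0.9), 2))  # 1 + 0.9**3 * 10 = 8.29
print(round(discounted_return(rewards, 0.1), 2))  # 1 + 0.1**3 * 10 = 1.01
```

With γ = 0.9 the future reward dominates the total; with γ = 0.1 the car effectively only sees the reward right in front of it. A common rule of thumb is that γ looks roughly 1/(1 − γ) steps ahead, e.g. about 10 steps for 0.9 and 100 for 0.99.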

Learning rate

This parameter controls how big a step the model takes each time it updates its weights, and therefore how quickly it can reach the point of convergence. However, with higher learning rates you run the risk of overshooting, where the model reaches an optimal path quickly and then jumps away from it in the next iteration. The key, as with most other parameters, is balance: you can adjust the learning rate based on the patterns you observe while your model trains. In DeepRacer, it can affect how quickly your model adapts to changes, e.g., a sharp turn after a straight stretch, where your car initially intends to run at full speed but then has to slow down and turn 140 degrees.
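Overshooting is easiest to see on a toy problem rather than a real car. The sketch below runs plain gradient descent on f(w) = w², whose optimum is w = 0; the function and the learning rates are illustrative assumptions, not DeepRacer’s actual optimizer.

```python
def gradient_descent(lr, w=1.0, steps=20):
    """Minimise f(w) = w**2 with fixed-step gradient descent and return the path of w."""
    path = [w]
    for _ in range(steps):
        grad = 2 * w       # derivative of w**2
        w = w - lr * grad  # each step multiplies w by (1 - 2 * lr)
        path.append(w)
    return path


for lr in (0.1, 0.9, 1.1):
    path = gradient_descent(lr)
    print(lr, [round(x, 3) for x in path[:4]], "final:", round(path[-1], 4))
```

At 0.1 the weight creeps steadily toward 0; at 0.9 it jumps past the optimum on every step but still settles; at 1.1 each jump is bigger than the last and training diverges.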

Number of epochs

Epoch: one pass over a collected batch of experiences, gathered from the various episodes in a training window. Over the full training process, the model collects many such sets of experiences and moves on from one to the next. The number of epochs is how many times the model goes over each set before moving on, which refines how your model learns from that particular set of experiences. More epochs naturally mean longer training times and more compute usage.
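The relationship between epochs, batch size and the amount of work done can be sketched as a simple count. A minimal sketch, assuming a hypothetical set of 100 collected experiences and a batch size of 32: each epoch shuffles the same experiences and splits them into mini-batches, and every mini-batch is one weight update.

```python
import random


def plan_updates(num_experiences, batch_size, epochs, seed=0):
    """Return the mini-batches (lists of experience indices) used in each epoch."""
    rng = random.Random(seed)
    indices = list(range(num_experiences))
    schedule = []
    for _ in range(epochs):
        rng.shuffle(indices)  # a fresh random order on every pass
        batches = [indices[i:i + batch_size] for i in range(0, num_experiences, batch_size)]
        schedule.append(batches)
    return schedule


for epochs in (3, 10):
    schedule = plan_updates(100, 32, epochs)
    updates = sum(len(batches) for batches in schedule)
    print(f"{epochs} epochs -> {updates} weight updates on the same 100 experiences")
```

Going from 3 to 10 epochs more than triples the number of updates on the same data, which is where the longer training times and extra compute come from.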

I hope this was a good read that demystified some Deep Learning jargon and simplifies your experience working with neural networks going forward. Thank you for reading :)


Written by

Sreekesh Iyer


A few interesting things about me: Software Engineer @ JP Morgan Chase & Co.; AWS Community Builder 2023; I love working with technology; I enjoy playing video games 😄