Understanding Kubernetes Volumes: Using EmptyDir, HostPath, and NFS for Persistent Storage

Kandlagunta Venkata Siva Niranjan Reddy


In Kubernetes, volumes let data outlive an individual container and, depending on the volume type, the pod itself. A container's own filesystem is lost when the container restarts; a volume keeps that data available to the restarted container, and volume types backed by node or network storage can keep it after the pod is deleted or replaced. This matters most for stateful applications, where the data must survive beyond a container’s lifecycle. In this blog, we'll explore three types of Kubernetes volumes (EmptyDir, HostPath, and NFS) and their use cases.

Common Types of Kubernetes Volumes

EmptyDir in Kubernetes

An EmptyDir is a temporary storage volume that Kubernetes creates when a pod is assigned to a node. The data survives container restarts inside the pod, but it is deleted permanently when the pod is removed from the node.

When to Use EmptyDir:

- It’s perfect for temporary data, like cache files or logs, that don’t need to stick around after the pod is gone.

Key Features:

Temporary Storage: Data is stored on the same node where the pod runs.

Pod Lifecycle: Data survives container crashes and restarts, but it is deleted when the pod is removed from the node.

Easy to Use: No complicated setup; it works automatically with the pod.

Example:

Imagine your pod needs a space to temporarily save some files while it’s processing data. You can use an EmptyDir for this. When the pod finishes and stops, the temporary files are automatically cleaned up. It’s also useful for sharing data between containers in the same pod.
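The scenario above can be sketched as a single pod with two containers sharing one EmptyDir. This is a minimal illustration, not a production manifest: the pod name `emptydir-demo`, the container names, the `busybox:1.36` image, and the `/scratch` mount path are all assumptions chosen for the example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-demo            # hypothetical name
spec:
  containers:
    - name: writer               # appends a timestamp to a shared file every 5 seconds
      image: busybox:1.36
      command: ["sh", "-c", "while true; do date >> /scratch/out.log; sleep 5; done"]
      volumeMounts:
        - name: scratch
          mountPath: /scratch
    - name: reader               # follows the same file through its own mount
      image: busybox:1.36
      command: ["sh", "-c", "touch /scratch/out.log; tail -f /scratch/out.log"]
      volumeMounts:
        - name: scratch
          mountPath: /scratch
  volumes:
    - name: scratch
      emptyDir: {}               # created with the pod, removed with the pod
```

Both containers see the same files because they mount the same volume name. Deleting the pod deletes `/scratch` and everything in it.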

HostPath in Kubernetes

A HostPath is used to mount a file or folder from the host machine (the node where the pod is running) into the container.

Key Points:

Mounts from Host: It connects directly to the host's file system, allowing the container to access host files or folders.

Data Remains on Node: If the pod is deleted or rescheduled to the same node, the data will still be there. However, if the pod moves to a different node, the data won’t be available because it’s tied to the original node.

No PV or PVC Needed: Unlike persistent volumes (PVs) and claims (PVCs), HostPath doesn’t require any extra setup for storage. It uses the node's local file system.

Example:

A MongoDB pod stores its database files using HostPath on Node1.

If the pod is deleted and rescheduled to Node2, the database files won’t be available because they are still on Node1.

But if the pod is deleted again and rescheduled back to Node1, MongoDB regains access to the original data because it was saved on Node1's filesystem.

MongoDB Deployment with HostPath Volume

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mongodb-deployment
      labels:
        app: mongodb
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mongodb
      template:
        metadata:
          labels:
            app: mongodb
        spec:
          containers:
            - name: mongodb
              image: mongo:latest
              ports:
                - containerPort: 27017
              volumeMounts:
                - name: mongo-data
                  mountPath: /data/db
          volumes:
            - name: mongo-data
              hostPath:
                path: /data/mongo

MongoDB Container:

- Uses the official MongoDB image.

- Stores MongoDB data at /data/db inside the container.

HostPath Volume:

- Maps the host directory /data/mongo to the container’s /data/db path.

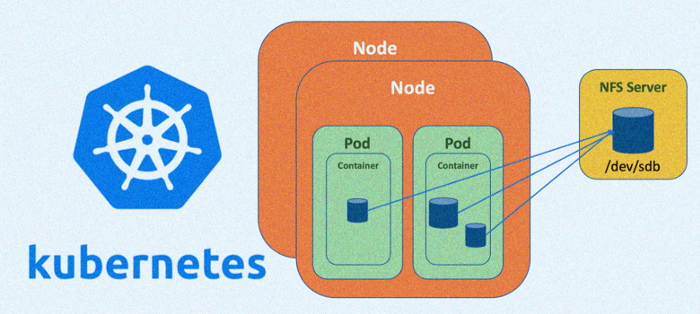
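Because HostPath data is tied to one node, a common way to avoid the Node1/Node2 problem described above is to pin the pod to the node that holds the data. The following is a sketch under assumptions: the pod name is hypothetical, and `node1` stands in for whatever value your node carries in its `kubernetes.io/hostname` label.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mongodb-hostpath-pinned        # hypothetical name
spec:
  nodeSelector:
    kubernetes.io/hostname: node1      # assumed node name; check your own node labels
  containers:
    - name: mongodb
      image: mongo:latest
      ports:
        - containerPort: 27017
      volumeMounts:
        - name: mongo-data
          mountPath: /data/db
  volumes:
    - name: mongo-data
      hostPath:
        path: /data/mongo
        type: DirectoryOrCreate        # create the directory on the node if it is missing
```

The trade-off is that the pod can no longer run anywhere else: if that node goes down, the pod stays unscheduled until the node returns.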

NFS in Kubernetes

NFS (Network File System) is a way for multiple pods in Kubernetes to access the same folder or directory stored on an external server. This is helpful when you need several pods to read from or write to the same set of files.

Shared Storage: With NFS, all the pods can access the same data, even if they are running on different nodes in the Kubernetes cluster.

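Persistent Data: The data stored on the NFS server doesn’t disappear when a pod restarts or gets moved to another node. This makes NFS a good fit for shared files and logs. It can also back a database, though network filesystems are usually slower than local disks, so check the database's own guidance before using NFS in production.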

So, in short, NFS helps you keep your data available and shared across different pods, even if they are running on different nodes.
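For simple cases, a pod can mount an NFS export directly through the `nfs` volume type, without a PV or PVC. The sketch below assumes a reachable NFS server and an existing export; the hostname `nfs.example.internal`, the export path `/exports/shared`, and the pod name are placeholders, not values from this setup.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-reader                     # hypothetical name
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sh", "-c", "ls /shared; sleep 3600"]
      volumeMounts:
        - name: shared
          mountPath: /shared
  volumes:
    - name: shared
      nfs:
        server: nfs.example.internal   # placeholder NFS server address
        path: /exports/shared          # placeholder export path on that server
        readOnly: true                 # mount the export read-only in this pod
```

Any number of pods on any node can use the same `nfs` stanza to see the same files. Hard-coding the server address in every pod spec is what the PV and PVC objects help you avoid.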

Why We Need PV and PVC for External Storage in Kubernetes

When you want to store data outside a pod in Kubernetes, you use Persistent Volumes (PV) and Persistent Volume Claims (PVC).

PV is the actual storage resource (like a disk or NFS) available in the cluster.

PVC is a request for storage that a pod then references by name. It asks for a specific amount of storage with a given access mode and storage class.

Why Access Modes and Storage Class Matter:

Access Modes: These define how the storage can be used:

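ReadWriteOnce (RWO): The volume can be mounted read-write by a single node. Several pods on that node can still share it.

ReadWriteMany (RWX): The volume can be mounted read-write by many nodes at the same time.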

Storage Class: This specifies the type of storage, like cloud storage or NFS.
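A storage class is itself a Kubernetes object. For manually created PVs, matching the `storageClassName` string on the PV and the PVC is generally what drives binding, but you can also declare the class explicitly. A minimal sketch, assuming no dynamic provisioner is installed for NFS; the name `nfs-storage` matches the manifests used in this post:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
provisioner: kubernetes.io/no-provisioner   # no dynamic provisioning; PVs are created by hand
volumeBindingMode: Immediate                # bind a PVC as soon as a matching PV exists
```

With a dynamic NFS provisioner installed, the `provisioner` field would name that provisioner instead, and PVs would be created on demand.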

How PV and PVC Work:

You create the PVC with specific requests (size, access mode, storage class), and the pod refers to it by name.

Kubernetes automatically finds a matching PV and binds the PVC to it.

The data stays persistent, even if the pod is deleted or moved to another node, because the storage is tied to the PV.

Example:

1. Create the Persistent Volume (PV) for NFS:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: mongodb-nfs-pv
    spec:
      capacity:
        storage: 10Gi
      volumeMode: Filesystem
      accessModes:
        - ReadWriteMany # Allows multiple pods to access the same data
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nfs-storage
      nfs:
        path: /mnt/data/mongodb # Path on the NFS server where MongoDB data will be stored
        server: <nfs-server-ip> # IP address or hostname of the NFS server

2. Create the Persistent Volume Claim (PVC):

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mongodb-pvc
    spec:
      accessModes:
        - ReadWriteMany # Allow multiple pods to access the data
      resources:
        requests:
          storage: 10Gi
      storageClassName: nfs-storage

3. Create the MongoDB Deployment with the PVC:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mongodb-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mongodb
      template:
        metadata:
          labels:
            app: mongodb
        spec:
          containers:
            - name: mongodb
              image: mongo:latest
              ports:
                - containerPort: 27017
              volumeMounts:
                - name: mongodb-storage
                  mountPath: /data/db # MongoDB default data directory
          volumes:
            - name: mongodb-storage
              persistentVolumeClaim:
                claimName: mongodb-pvc

Key Points:

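NFS makes the MongoDB data directory reachable from any node in the Kubernetes cluster, so the pod can be rescheduled without losing its data.

PVC and PV ensure that MongoDB's data remains persistent even if the pod is deleted or moved to another node.

The ReadWriteMany (RWX) access mode allows multiple pods to mount the same NFS volume for reading and writing. It does not coordinate their writes, which is why this example keeps replicas: 1; multiple MongoDB instances should not share one data directory.

This setup provides data persistence for MongoDB using NFS in Kubernetes. Availability still depends on the NFS server, which is a single point of failure unless it is made redundant.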

Thank you
