How to Keep Oracle Database Schema(s) Under Version Control (git, mercurial) Using DDLFS

Urh Srecnik

Urh SrecnikI've just released new (beta) version of ddlfs, which now also supports running natively on Windows (thanks to Dokan). Originally it written for Linux/libfuse only. I made it so that I wouldn't need to use graphical interfaces when interacting with Oracle databases, but since it was written, there came other interesting uses for it. Let's discuss one of them:

How can we keep DDL changes of production database in git/mercurial repositoy? Application schemas of production database can be migrated using tools such as FlyWay or Liquibase which generally keep their history under version control.

But what about ad-hoc changes that happen on production? Wouldn't it be nice to have those, too, automatically under version control?

This blog post will provide a recipe to achive that using ddlfs. I decided to write examples in this blog post in Windows syntax, just because I'm still considering Windows support experimental (until I get more feedback), and I think writing this kind of blog post is a nice way to maybe catch some bugs before I mark the release as production-ready.

What is DDLFS?

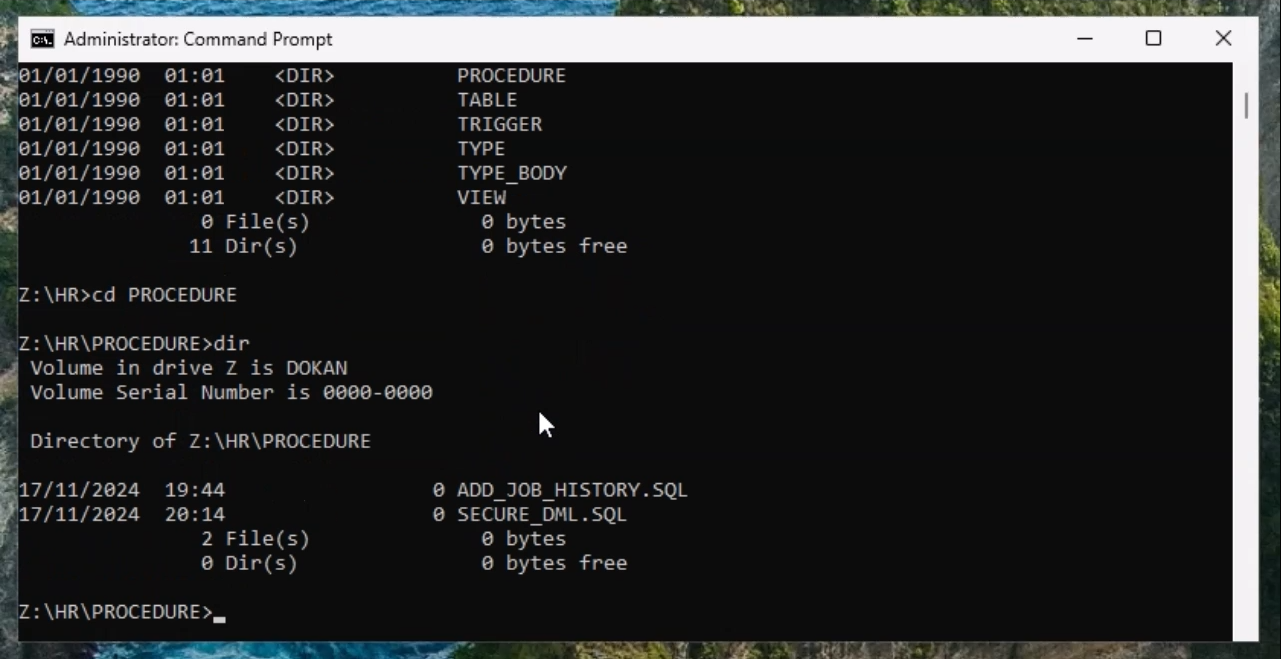

It's an open-source filesystem which exposes every database object (such as packages, views, procedures) as an .sql file. Here is a screenshot on Windows of how it looks like in practice. Please visit ddlfs GitHub page for more detailed description.

Also, here is a video from which the screenshot was taken.

What about git/mercurial

They're both distributed version control systems, which, you, if you're reading blogs such as this one, should definitively be already familiar with. If not, here are the links to their manuals:

We do need to address one important detail regarding those two; They keep all their data under .git and .hg folder. So, if you have a project in, say, C:\Users\John\repos\my-project, there will be a .git or .hg folder in there.

Creating a project

Let's start by creating a project folder, say, F:\database\prod which contains .\ddlfs\ folder. Like this:

F:\>mkdir F:\database\prod\ddlfs

F:\>mkdir F:\temp

The F:\database\prod will contain .hg or .git folder and .\ddlfs\ folder will reflect the current state of database objects in the database.

The F:\temp is optional and can be deleted at any time when ddlfs is not mounted. We'll use it as temppath= (see details in the following section).

Mounting Database as a Filesystem

ddlfs -o ro,dbro,keepcache,temppathusername=SCOTT,password=tiger,database=dbhost:1521/service.name D:\database\prod\ddlfs

Regarding the folder D:\database\prod\ddlfs - this is mountpoint, it does not need to be a letter, e.g. X:\. Well, to be exact with terminology, on Windows, this "folder" becomes a junction after we successfully execute this ddlfs command:

F:\database\prod>dir

Volume in drive F is Data

Volume Serial Number is E277-8A48

Directory of F:\database\prod

17/11/2024 14:02 <DIR> .

15/11/2024 17:00 <DIR> ..

17/11/2024 14:02 <JUNCTION> ddlfs [\??\Volume{d6cc17c5-176c-4085-bce7-964f1e9f5de9}\]

0 File(s) 0 bytes

3 Dir(s) 21.358.292.992 bytes free

The only mandatory options are username, password and database. And everything described in this blog post should work if you'd only use those three. All the other options are added merely for performance optimization. On Linux, all mount options are described if you say man ddlfs, and all the mount options are also documented on the ddlfs GitHub page.

Let's discuss them in the context of using ddlfs for hg/git:

ro: Do not allow changes to packages. Without this option, it is possible to open the .SQL files in.\ddlfsfolder and save them. We intend to only read the current state of the database and, with this option, prohibit incidental changes.dbro: Let ddlfs assume your database is opened asREAD ONLY. We need this assumption for better caching - once we read the contents of a package, we can trust that the state of this package didn't change even if we read it again after a while (untilumount).keepcache: ddlfs keeps temporary files in which cached contents of database objects are kept. Those files can be reused between mounts, because cache validation is faster than reading all the objects again.temppath: Simply a path where previously mentioned cached contents are stored. Note that if you point it to a filesystem which relies on inodes - this folder can potentially have a lot of files, thus, very smallext4may not cut it. Default isC:\Users\%USER%\AppData\Local\Temp\on Windows and/tmpon Linux.filesize=-1: Probably the most important one; Using this option, ddlfs will always provide correct, actual, size of each file. Default is0- if this would be used, then files would have reported size=0 bytes until they're read. Once read, they report the correct size. Problem with correct size is that we need to export every database object in order to obtain every size. Which takes time. But we need to do that anyway in case of git/hg. It's also why wekeepcachebetween the mounts.

You can optionally also specify schemas=MY_APP% to only include database objects belonging to schemas with name starting with MY_APP. You can also include list of schemas, like schemas=SCHEMA_1:SCHEMA_2:SCHEMA_3.

If you're scripting this, note that on Windows, ddlfs will go to background before everything is mounted, so before proceeding, make sure file D:\database\prod\ddlfs\ddlfs.log exists.

Git

git init is needed only the first time to create .git repository.

F:\database\prod>git init

Initialized empty Git repository in F:/database/prod/.git/

F:\database\prod>git config core.autocrlf false

F:\database\prod>git add ddlfs

core.autocrlf=false is here because we don't want git to care about line endings - we want it to add files exactly as they are, without line feed conversions (note that we've mounted .\ddlfs as read only).

The add part will take quite some time if your schemas are huge (it needs to export every object). Especially if you chose to log in using SYS user, which has access to all the objects in the database.

It will take the first time the longest (to build up a cache in temppath). Once this is built, we'll only deal with changes, which is way faster. This is also one of the differences between using custom export scripts (such as datapump metadata export, dbms_metadata.get_ddl or any other similar method - those methods will read everything they're exporting each time they're exporting it. Using ddlfs keepcache we're reusing everything that hasn't changed yet (according to all_objects.last_ddl_time).

Waiting on command to complete without knowing what it is doing and how much progress it's already made usually leads to lacking trust in any given software. So here's how you can monitor what is going on if you'd like:

You can run ddlfs in foreground by specifying -f flag to ddlfs command on Linux or use ddlfs-fg command (instead of ddlfs) on Windows. Furthermore, you can add loglevel=DEBUG to mount options. This way you'll see each object that is accessed by git printed to the console.

Once this is complete, simply commit (and maybe also push) and umount. On Windows, you need to be Administrator to do umount:

F:\database\prod>git commit -m "daily commit"

F:\database\prod>ddlfs-umount

Mercurial

Now, let's do the same with Mercurial, this time as a Linux example:

$ ddlfs -o... ./ddlfs

$ hg init

$ hg add ddlfs

$ hg commit -m "daily commit"

$ fusermount -u ./ddlfs

Replace three dots in the first command with mount options as described for git.

Also note, on Linux, you can mount/umount as any user, whereas on Windows, you need to be Administrator to umount.

Final Thoughts

Everything described so far is free and open-source. It is, however, also optionally integrated in my company's commercial offering Abakus Backup Server which uses physical standby databases to maintain physical backup copies of Oracle Databases using deduplication to save space.

Also keep in mind that ddlfs 3.x is not yet considered production ready because there has been many changes in order to accommodate both, Linux and Windows with the same source. So, feel free to report any issues on ddlfs GitHub page.

And if anyone happens to "convert" this article into an open-sourced generic script (e.g. bash or powershell), let me know, I'd be happy to publish a link to it.

Subscribe to my newsletter

Read articles from Urh Srecnik directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Urh Srecnik

Urh Srecnik

I'm an Oracle DBA and a developer at Abakus Plus d.o.o.. My team is responsible for pro-active maintenance of many Oracle Databases and their infrastructure. I am co-author of Abakus's solutions for monitoring Oracle Database performance, APPM, also available as a Free Edition. for backup and recovery: Backup Server for quick provisioning of test & development databases: Deja Vu Also author of open-source DDLFS filesystem which is available on GitHub. I am: OCP Database Administrator OCP Java SE Programmer OCIS Exadata Database Machine and a few more, check my LinkedIn profile for the complete list.