ODC with AWS S3 - Browser to CloudFront

Stefan Weber


This article explains how to securely upload files from a client's browser to a private Amazon Simple Storage Service (S3) bucket, and download them again, via a CloudFront distribution.

This article is part of a series that explores ways to interact with Amazon S3 buckets. Be sure to read the introductory article to understand why S3 can be a valuable addition to your ODC applications and the challenges you might encounter.

AWS CloudFront

CloudFront is a content delivery network (CDN) service. A CDN is a geographically distributed group of servers that delivers Internet content efficiently and with low latency. It does so by serving content from the group of servers (edge servers) closest to the client, known as a point of presence.

Content in a CDN can be videos, audio files, images and documents, but also HTML, JavaScript and CSS. Entire websites can be stored and distributed on a CDN, which is particularly attractive for static websites or for sites generated with a static site generator framework.

Content is not automatically distributed to all CloudFront edge server groups. Instead, it is initially retrieved from a defined source (the origin), for example an S3 bucket, and cached for a defined period. Subsequent requests are then served directly from the cache. As a result, the first request for an object takes a little longer, because the content must first be retrieved from the origin.

CloudFront can be used not only to retrieve objects but also to modify or create objects at the configured origin.

CDN content is usually associated with public availability. CloudFront, however, can also make private content securely available, so that it is accessible only after authorization. One way to achieve this is with signed URLs or signed cookies. The signature is generated from within your OutSystems application using a private key.

Differences between S3 pre-signed URLs and CloudFront Signed URLs

If you've read about the Browser to S3 pattern, you might wonder about the differences between S3 pre-signed URLs and CloudFront Signed URLs.

Both S3 pre-signed URLs and CloudFront Signed URLs provide access to private content. In both cases, a URL is signed, and the signature is added to the URL. When accessed, the signature is checked, and if it's valid, access to the resource is granted.

However, there are some key differences:

S3 pre-signed URLs are signed using IAM credentials. Access to the resource is governed by the policies attached to the IAM identity whose credentials signed the URL, or by a bucket policy.

CloudFront Signed URLs are signed using a self-generated private key. The corresponding public key is uploaded to CloudFront and used to verify signatures. Access to a resource is determined by the URL and the validity of the signature. If the signature is valid for a URL, access is granted regardless of other factors.

S3 pre-signed URLs are specific to one object in storage and to a single request type (for example GET or PUT). CloudFront signed URLs created with a custom policy can use wildcards to grant access to multiple objects (e.g., all files under a path). In addition, a CloudFront signed URL is valid for every request type allowed in the CloudFront distribution. This is important to note, because you cannot restrict an individual signed URL to a particular request type.

In this pattern we are exploring CloudFront signed URLs for authorizing access to private S3 bucket objects.

Prerequisites

There are several steps required to set up the environment before you can try out the reference application.

S3 Bucket and AWS Credentials

Create an S3 bucket in a region of your choice. Unlike the other patterns in this series, you do not need AWS credentials for this one.

In the Permissions tab of your bucket, add a cross-origin resource sharing (CORS) configuration that allows HEAD, GET and PUT requests (you might want to restrict it further):

```json
[
  {
    "AllowedHeaders": ["*"],
    "AllowedMethods": ["HEAD", "GET", "PUT"],
    "AllowedOrigins": ["*"],
    "ExposeHeaders": []
  }
]
```
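The wildcard rule above accepts requests from any site. As a minimal sketch, assuming you want to limit requests to your ODC application's origin, the following JavaScript builds a tighter rule and prints the JSON to paste into the bucket's CORS editor. `https://myapp.outsystems.app` is a hypothetical placeholder, and listing `content-type` is an assumption based on the browser adding a Content-Type header when it sends a File whose MIME type is not one of the CORS-safelisted types.

```javascript
// Sketch: a stricter S3 CORS configuration than the wildcard example.
// The origin in the usage line is a hypothetical placeholder for your ODC app's URL.
function buildCorsRules(allowedOrigins) {
  return [
    {
      // The browser adds Content-Type for File bodies; the upload widget adds Content-Disposition
      AllowedHeaders: ['content-type', 'content-disposition'],
      AllowedMethods: ['HEAD', 'GET', 'PUT'],
      AllowedOrigins: allowedOrigins,
      ExposeHeaders: [],
    },
  ];
}

const rules = buildCorsRules(['https://myapp.outsystems.app']);
console.log(JSON.stringify(rules, null, 2));
```

If your player or viewer needs to read additional response headers from JavaScript, they would have to be added to ExposeHeaders as well.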

CloudFront Signing Key Pair

As stated above, our OutSystems application needs a private key to sign URLs for accessing our private S3 files via CloudFront, and CloudFront needs the matching public key to validate the signatures.

We are using OpenSSL here to create a key pair. You can download a Microsoft Windows version from Shining Light Productions or use the OpenSSL command from a WSL installation of a Linux distribution.

Create private key

```shell
openssl genrsa -out aws_cloudfront_privatekey.pem 2048
```

Create public key

```shell
openssl rsa -pubout -in aws_cloudfront_privatekey.pem -out aws_cloudfront_publickey.pem
```
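If OpenSSL is not at hand, Node.js can generate an equivalent 2048-bit RSA key pair, as in this sketch. Note that OpenSSL 3 writes the private key as PKCS#8 (`BEGIN PRIVATE KEY`), while older versions write PKCS#1 (`BEGIN RSA PRIVATE KEY`). Which formats the AWSCloudFrontSigner component accepts is not stated here, so treat the PKCS#8 choice below as an assumption. The public key uses the same SPKI format that `openssl rsa -pubout` produces.

```javascript
// Sketch: generate a CloudFront signing key pair with Node.js instead of OpenSSL.
const crypto = require('node:crypto');

function createCloudFrontKeyPair() {
  return crypto.generateKeyPairSync('rsa', {
    modulusLength: 2048, // same size as the openssl genrsa command
    // SPKI PEM ("BEGIN PUBLIC KEY"), the format openssl rsa -pubout writes
    publicKeyEncoding: { type: 'spki', format: 'pem' },
    // PKCS#8 PEM ("BEGIN PRIVATE KEY"); an assumption, check what your signer expects
    privateKeyEncoding: { type: 'pkcs8', format: 'pem' },
  });
}

const { publicKey, privateKey } = createCloudFrontKeyPair();
// To persist them, for example:
// require('node:fs').writeFileSync('aws_cloudfront_privatekey.pem', privateKey);
// require('node:fs').writeFileSync('aws_cloudfront_publickey.pem', publicKey);
```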

Add Public Key to CloudFront

Open the public key PEM file with a text editor and copy the content to your clipboard.

In the AWS console, switch to the CloudFront service.

In the left menu under Key Management, select Public Keys and click on Create Public Key.

Name the public key, paste the contents of the PEM file into the Key field, and then click Create Public Key.

Make a note of the ID of your created public key, as we will need it later.

Create a Key Group

Keys are organized into key groups, which are later referenced by CloudFront distributions. A signed URL names the public key that verifies it (through its Key-Pair-Id parameter), and CloudFront accepts the signature if that key belongs to a key group trusted by the distribution.
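The check can be pictured with the simplified Node.js model below. It assumes that CloudFront looks up the public key identified by the URL's Key-Pair-Id value, rejects it if that key is not in a trusted key group, and otherwise verifies the RSA-SHA1 signature described in CloudFront's signed-URL documentation. It is an illustration, not CloudFront's code, and the function name is hypothetical.

```javascript
// Simplified model of the key group check; not CloudFront's implementation.
// keyGroup maps public key IDs (the values sent as Key-Pair-Id) to PEM public keys.
const crypto = require('node:crypto');

function isTrustedSignature(data, signature, keyPairId, keyGroup) {
  const publicKeyPem = keyGroup.get(keyPairId);
  if (!publicKeyPem) {
    return false; // the named key is not part of the trusted key group
  }
  // CloudFront signed URLs use RSA signatures over a SHA-1 digest
  return crypto.verify('sha1', Buffer.from(data), publicKeyPem, signature);
}
```

Because any key in the group is accepted, you can add a new public key to the group, switch your application to the new private key, and remove the old key later without breaking URLs that are still in use.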

Under Key Management, select Key Groups and click Create Key Group.

Name the key group and select your added public key from the list of available public keys. Then click on Create Key Group.

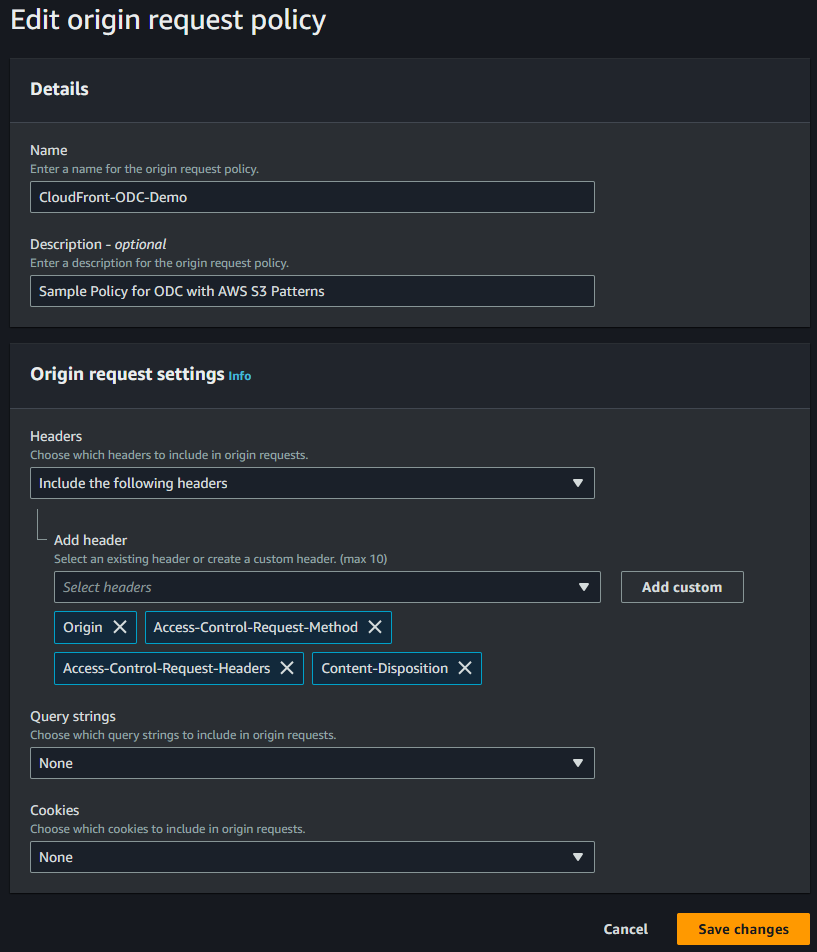

Custom Origin Request Policy

Origin request policies control which values (e.g., query string parameters and headers) CloudFront forwards to the origin, which in our case is an S3 bucket.

When uploading files, the reference application sets an additional Content-Disposition header, which is not part of the default policies.

In the CloudFront console, select the Policies menu and then the Origin request tab.

In the Custom policies section, click Create origin request policy.

Under Headers, select Include the following headers and select Origin, Access-Control-Request-Method and Access-Control-Request-Headers from the list. Add another custom header, Content-Disposition.

Leave Query strings and Cookies set to None. Then save the policy.
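For reference, or if you later want to script this step, the console settings above roughly correspond to the object below. It assumes the shape of the `OriginRequestPolicyConfig` structure used by the CloudFront API; the policy name is a hypothetical placeholder, and the sketch only builds the object without calling AWS.

```javascript
// Sketch: the origin request policy from the console steps as a plain object,
// assuming the CloudFront API's OriginRequestPolicyConfig shape.
function buildUploadOriginRequestPolicy(name) {
  const headers = [
    'Origin',
    'Access-Control-Request-Method',
    'Access-Control-Request-Headers',
    'Content-Disposition', // custom header set by the upload widget
  ];
  return {
    Name: name,
    HeadersConfig: {
      HeaderBehavior: 'whitelist', // "Include the following headers"
      Headers: { Quantity: headers.length, Items: headers },
    },
    CookiesConfig: { CookieBehavior: 'none' },
    QueryStringsConfig: { QueryStringBehavior: 'none' },
  };
}

const orp = buildUploadOriginRequestPolicy('S3-Upload-With-ContentDisposition');
console.log(JSON.stringify(orp, null, 2));
```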

CloudFront Distribution

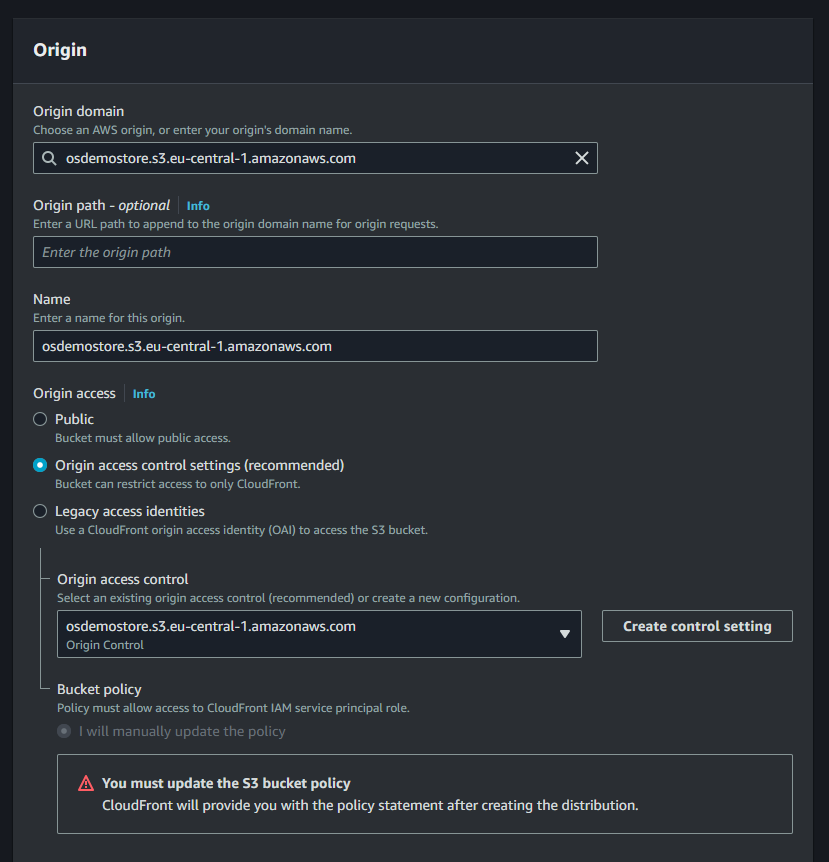

Now it's time to create the CloudFront distribution using our S3 bucket as the origin.

In the CloudFront menu, select Distributions and click Create distribution.

Choose your S3 bucket from the Origin domain list and provide a name for the new distribution.

Under Origin access, select Origin access control settings (recommended).

Click the Create control setting button and create a new setting with the default options

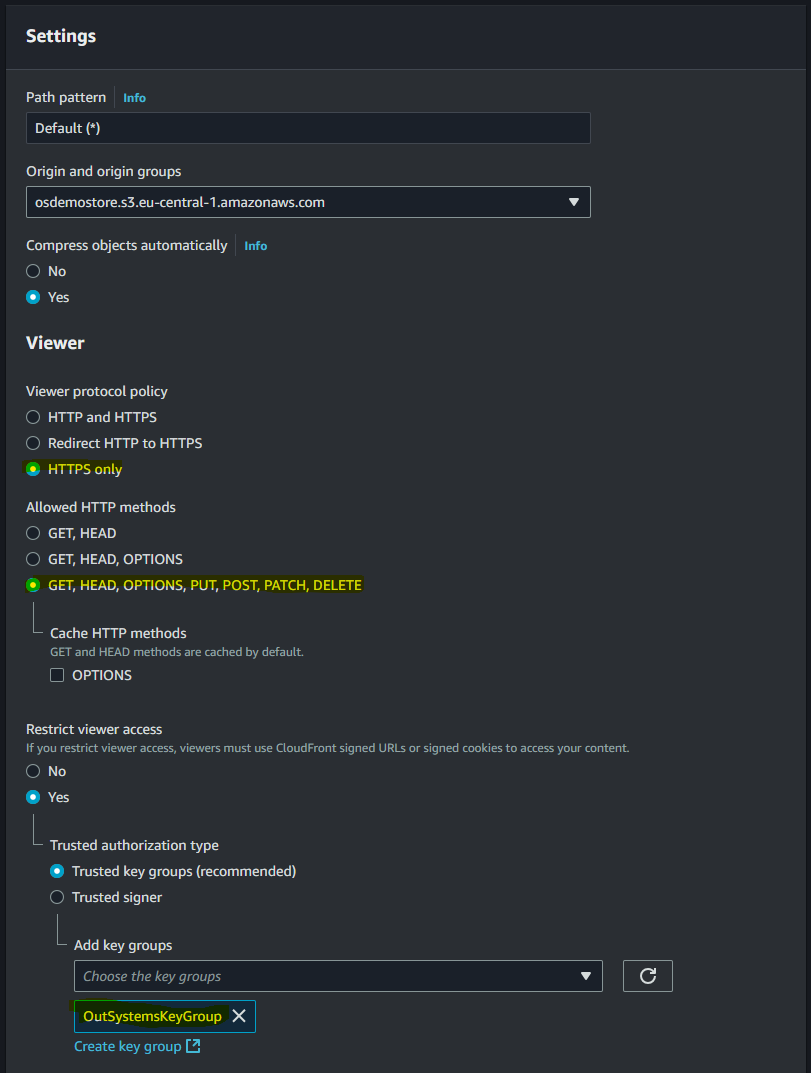

Make sure to click Yes on the Restrict viewer access setting under Default cache behavior.

Then select your created Key group from the list of available Key groups.

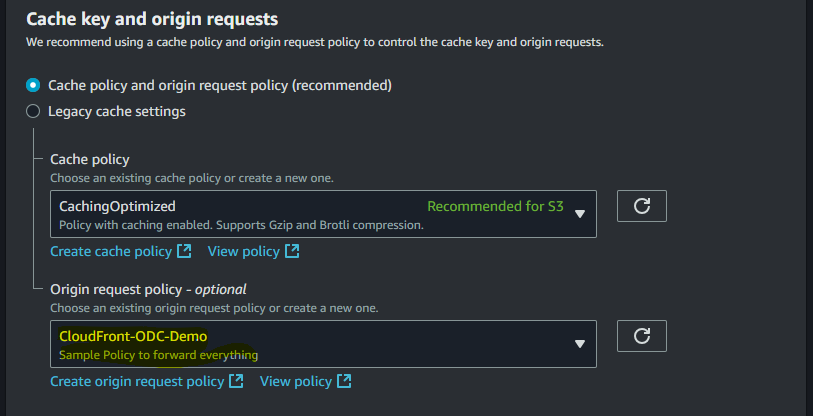

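- Under Allowed HTTP methods, select GET, HEAD, OPTIONS, PUT, POST, PATCH, DELETE. The default (GET, HEAD) would reject the PUT requests used for uploads.

- Set the Origin request policy to the custom origin request policy you created. This policy ensures that the CORS request headers and the Content-Disposition header are forwarded to the S3 origin, allowing browser-based requests.

- Leave the rest of the settings as they are and click Create distribution.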

After creating your CloudFront distribution, note your Distribution domain name (e.g., gfds76768das.cloudfront.net) from the General tab. We will need this later to access files.

Try accessing the root of your CloudFront Distribution domain using a browser. You should see an error message like this:

```xml
<Error>
  <Code>MissingKey</Code>
  <Message>Missing Key-Pair-Id query parameter or cookie value</Message>
</Error>
```

CloudFront S3 Bucket Permissions

We need to grant our CloudFront distribution access to the S3 bucket. Like any other resource or user, CloudFront needs permission to retrieve objects from the bucket before it can place them in its cache, and in this pattern it also writes uploaded objects to the bucket. We grant these permissions with a bucket policy. Luckily, CloudFront provides a template bucket policy.

In your CloudFront distribution, select the Origins tab. Then select your S3 origin from the list and click Edit.

Click the Copy Policy button to copy the pre-created bucket policy to the clipboard.

Switch to the S3 service and click your S3 bucket in the list of buckets.

Select the Permissions tab.

Under Bucket policy, click Edit, then paste the copied policy. Then add the s3:PutObject action manually.

Your bucket policy should look like this:

```json
{
  "Version": "2008-10-17",
  "Id": "PolicyForCloudFrontPrivateContent",
  "Statement": [
    {
      "Sid": "AllowCloudFrontServicePrincipal",
      "Effect": "Allow",
      "Principal": {
        "Service": "cloudfront.amazonaws.com"
      },
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::<your S3 Bucket name>/*",
      "Condition": {
        "StringEquals": {
          "AWS:SourceArn": "<arn of your CloudFront distribution>"
        }
      }
    }
  ]
}
```
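If you manage several environments, a small helper can produce the same policy for any bucket and distribution. This is a sketch: the bucket name, account ID and distribution ID in the usage line are placeholders, and it assumes the usual distribution ARN format `arn:aws:cloudfront::<account-id>:distribution/<distribution-id>`, which has no region component.

```javascript
// Sketch: build the bucket policy above for a given bucket and distribution.
function buildCloudFrontBucketPolicy(bucketName, accountId, distributionId) {
  return {
    Version: '2008-10-17',
    Id: 'PolicyForCloudFrontPrivateContent',
    Statement: [
      {
        Sid: 'AllowCloudFrontServicePrincipal',
        Effect: 'Allow',
        Principal: { Service: 'cloudfront.amazonaws.com' },
        Action: ['s3:GetObject', 's3:PutObject'], // read for downloads, write for uploads
        Resource: `arn:aws:s3:::${bucketName}/*`,
        Condition: {
          StringEquals: {
            // Only requests made on behalf of this one distribution are allowed
            'AWS:SourceArn': `arn:aws:cloudfront::${accountId}:distribution/${distributionId}`,
          },
        },
      },
    ],
  };
}

// Placeholder values
const policy = buildCloudFrontBucketPolicy('my-odc-files', '111122223333', 'E1ABCDEF234567');
console.log(JSON.stringify(policy, null, 2));
```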

The final step is to apply some settings in the reference application.

Configure the Reference Application

To try out the reference application, you will need the following information from the configuration steps you did above.

- Distribution domain name: found in the AWS CloudFront console on the distribution detail screen.
- CloudFront Key ID: the ID of the public key (not the key group) you uploaded, found in the CloudFront console under the Key Management menu in Public Keys.
- Private Key: the PEM file of your private key that matches the public key you uploaded to CloudFront.

Configure the app settings of the reference application in the ODC portal.

Enter the values for CloudFrontDomainName (without https://), CloudFrontKeyId, and CloudFrontPrivateKey. For the last one, use the text content of the private key file. Ensure no extra spaces or lines are included.
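Stray spaces, blank lines or Windows line endings are easy to pick up when copying the key. As a local sanity check, and not part of the reference application, this Node.js sketch normalizes the PEM text and confirms that it still parses as an RSA private key before you paste it into CloudFrontPrivateKey. The function names are hypothetical.

```javascript
// Sketch: clean up PEM text copied from a file and make sure it still parses.
const crypto = require('node:crypto');

function normalizePem(pemText) {
  return pemText
    .replace(/\r\n?/g, '\n') // Windows/old Mac line endings -> \n
    .split('\n')
    .map((line) => line.trim()) // strip leading/trailing spaces on every line
    .filter((line) => line.length > 0) // drop empty lines, including trailing ones
    .join('\n');
}

function checkPrivateKey(pemText) {
  const key = crypto.createPrivateKey(pemText); // throws if the PEM is malformed
  if (key.asymmetricKeyType !== 'rsa') {
    throw new Error(`Expected an RSA key, got ${key.asymmetricKeyType}`);
  }
  return key;
}
```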

Before we begin exploring the reference application, I want to briefly explain the two different types of CloudFront Signed URLs.

CloudFront Signed URL Types

CloudFront allows the use of two types of signed URLs to access a private distribution:

Canned Policy Signed URLs - These signed URLs are used for a single resource, whether it already exists or is new.

Custom Policy Signed URLs - These provide more flexibility and can target a group of resources or an entire bucket, using wildcards in their resource description.

As mentioned above, both types of signed URLs grant access with every request type allowed in the CloudFront distribution; a signed URL cannot be restricted to individual request types.
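Both URL types rest on the same JSON policy statement: a Resource and an expiry time. The sketch below builds that statement and adds a simplified model of how a custom policy's Resource pattern is matched, where `*` stands for zero or more characters and `?` for exactly one, as CloudFront's documentation describes. A canned policy must name the exact resource URL, so wildcards only make sense in custom policies. The helper names are hypothetical, and the matcher illustrates the rule rather than reproducing CloudFront's implementation.

```javascript
// Sketch: the policy statement behind both signed URL types.
function buildPolicy(resource, expiresEpochSeconds) {
  return JSON.stringify({
    Statement: [
      {
        Resource: resource,
        Condition: { DateLessThan: { 'AWS:EpochTime': expiresEpochSeconds } },
      },
    ],
  });
}

// Simplified model of custom-policy Resource matching: "*" = zero or more chars, "?" = one char.
function resourceMatches(pattern, url) {
  const regexSource = pattern
    .split('')
    .map((ch) => {
      if (ch === '*') return '.*';
      if (ch === '?') return '.';
      return ch.replace(/[.+^${}()|[\]\\]/g, '\\$&'); // escape regex metacharacters
    })
    .join('');
  return new RegExp(`^${regexSource}$`).test(url);
}
```

A custom policy with the Resource `https://<distribution domain>/*` therefore covers every object behind the distribution, which is what makes a single signed query string reusable for many files.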

The reference application uses both types of signed URLs. The Canned Policy Signed URL is used for uploading an object, and the Custom Policy Signed URL is used for listing objects.

Upload

Let's start with uploading files through our CloudFront distribution to the S3 bucket origin.

Uploading a file from the frontend application requires some JavaScript coding. The reference application includes a complete example that we will use to walk through the process, which consists of two steps:

Generate a canned policy signed URL for the new object in the backend using the AWSCloudFrontSigner component.

Use the signed URL in the frontend to perform the upload using JavaScript's XMLHttpRequest class.

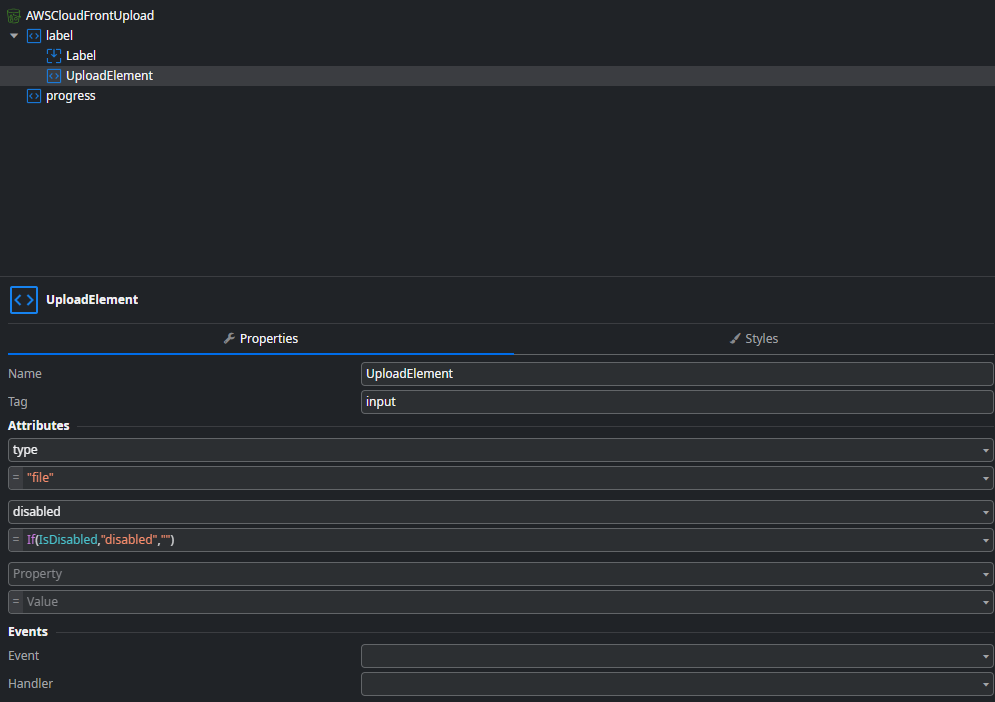

Open the reference application in ODC Studio. On the Interface tab select the AWSCloudFrontUpload block.

Open the AWSCloudFrontUpload widget tree. Inside, you will find an HTML input element of type file named UploadElement.

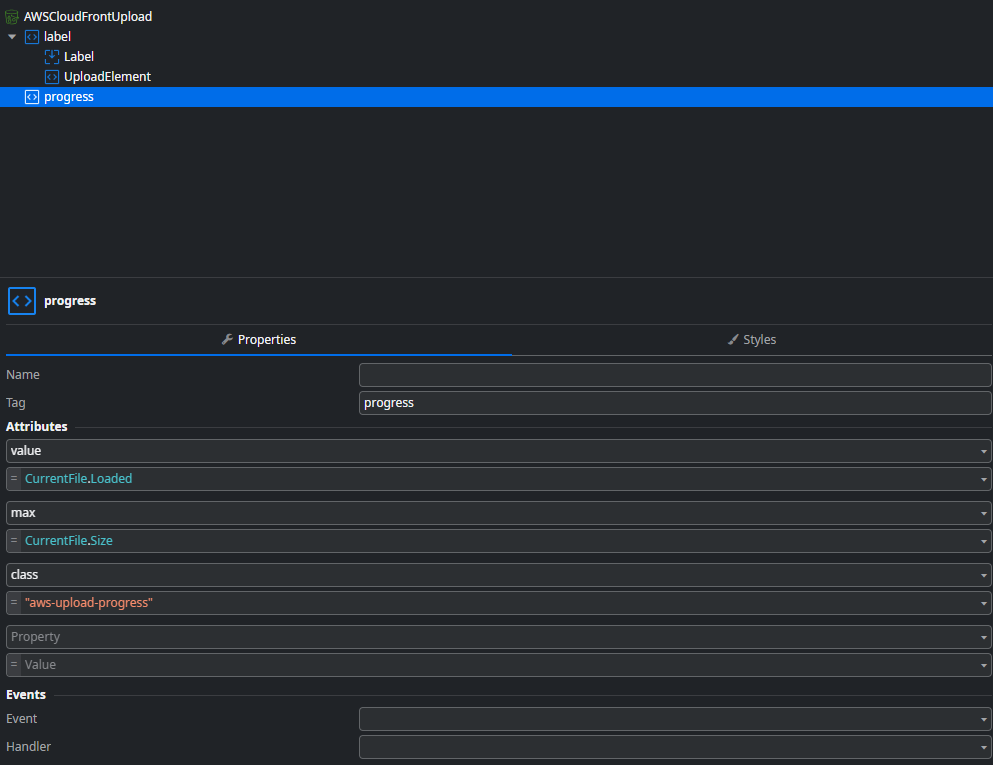

Alongside it, you will find a progress element that displays the upload progress.

The widget's CSS uses some design tokens (CSS variables), which can be overridden on the screen or in your application theme. Note that the HTML input element itself is hidden and that all styles are applied to the wrapping label element.

OnReady and OnDestroy Event Handlers

The OnReady event handler contains a single JavaScript element that registers the OnFileUploadChange client action as a listener for the input element's change event.

```javascript
const uploadElement = document.getElementById($parameters.UploadFileWidgetId);
uploadElement.addEventListener('change', $actions.OnFileUploadChange);
console.debug('Change listener added');
```

Likewise, in the OnDestroy event handler the change listener is removed.

```javascript
const fileElement = document.getElementById($parameters.UploadFileWidgetId);
fileElement.removeEventListener('change', $actions.OnFileUploadChange);
console.debug('Change listener removed');
```
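`removeEventListener` only detaches a listener when it receives the same function object that was passed to `addEventListener`. Whether `$actions.OnFileUploadChange` yields the same reference on every access in ODC is not stated here, so the plain-JavaScript sketch below, which uses a standalone `EventTarget` in place of the file input, shows why it matters. Keeping your own reference to the handler avoids the question entirely.

```javascript
// Sketch: listener removal depends on function identity.
const input = new EventTarget(); // stands in for the hidden file input
let calls = 0;
const onChange = () => {
  calls += 1;
};

input.addEventListener('change', onChange);
input.dispatchEvent(new Event('change')); // calls === 1

// A different function object, even with identical code, removes nothing
input.removeEventListener('change', () => {
  calls += 1;
});
input.dispatchEvent(new Event('change')); // calls === 2

// The same reference removes the listener
input.removeEventListener('change', onChange);
input.dispatchEvent(new Event('change')); // calls stays 2
```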

OnFileUploadChange Event Handler

This event handler is executed when the user selects a file to upload. The Event object contains information about the file selected.

The JavaScript inside the event handler first checks whether a file was selected. If not, the script returns.

It then calls another client action, GetCloudFrontPutUrl (located outside the widget in the Logic tab), to get a signed URL for a given prefix (a folder) and the file name of the selected file. With the signed URL, it then runs the widget's UploadFile client action.

```javascript
// Nothing selected (e.g. the file dialog was cancelled): nothing to upload
if ($parameters.Event.target.files.length === 0) {
    return;
}

const file = $parameters.Event.target.files[0];

// Ask the backend for a signed URL for <Prefix>/<file name>, then start the upload
$actions.GetCloudFrontPutUrl($parameters.Prefix, file.name)
    .then((result) => {
        $actions.UploadFile(
            result.PreSignedUrl,
            file.name,
            file.type,
            file.size,
            file
        );
    });
```

GetCloudFrontPutUrl Client Action

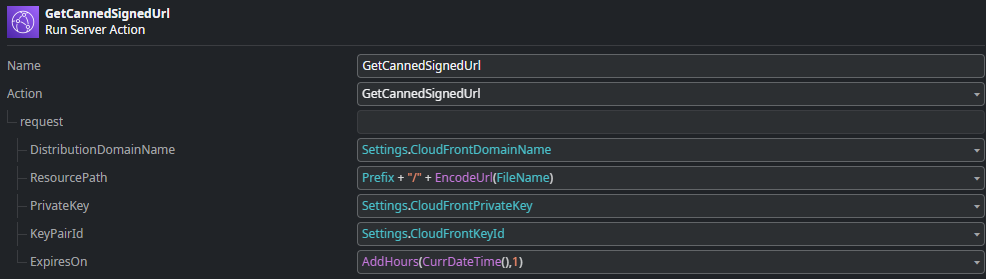

In the Logic tab you will find the GetCloudFrontPutUrl client action. It executes the server action CloudFront_GetUploadUrl located directly under Server Actions and returns a generated canned policy signed URL.

CloudFront_GetUploadUrl uses the GetCannedSignedUrl action from the AWSCloudFrontSigner Forge component, together with the private key and key pair ID you configured above.

This creates a canned policy signed URL that is valid for one hour.
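To illustrate what such a signer does, here is a minimal Node.js sketch of a canned policy signed URL that follows the format in CloudFront's documentation: an exact-match policy, an RSA-SHA1 signature, CloudFront's URL-safe base64 variant, and the Expires, Signature and Key-Pair-Id query parameters. It is not the AWSCloudFrontSigner implementation; the function names are hypothetical, and `resourceUrl` is assumed to be percent-encoded exactly as the browser will request it.

```javascript
// Sketch of a canned policy signed URL; not the AWSCloudFrontSigner component's code.
const crypto = require('node:crypto');

// CloudFront's URL-safe base64: "+" -> "-", "=" -> "_", "/" -> "~"
function toCloudFrontBase64(buffer) {
  return buffer.toString('base64').replace(/\+/g, '-').replace(/=/g, '_').replace(/\//g, '~');
}

function getCannedSignedUrl(resourceUrl, keyPairId, privateKeyPem, validForSeconds = 3600) {
  const expires = Math.floor(Date.now() / 1000) + validForSeconds; // one hour by default
  // CloudFront rebuilds this exact JSON (no whitespace) from the URL and Expires value,
  // so the policy itself is not sent with a canned URL
  const policy = JSON.stringify({
    Statement: [{ Resource: resourceUrl, Condition: { DateLessThan: { 'AWS:EpochTime': expires } } }],
  });
  const signature = crypto.sign('sha1', Buffer.from(policy), privateKeyPem); // RSA-SHA1
  const separator = resourceUrl.includes('?') ? '&' : '?';
  return (
    `${resourceUrl}${separator}Expires=${expires}` +
    `&Signature=${toCloudFrontBase64(signature)}&Key-Pair-Id=${keyPairId}`
  );
}
```

For an upload, `resourceUrl` would be the distribution domain plus the new object's key, for example `https://<distribution domain>/<prefix>/<file name>`.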

UploadFile Client Action

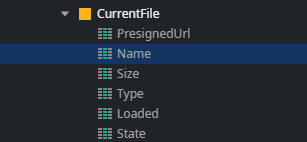

The UploadFile client action first sets values for the block’s CurrentFile local variable. This structure contains information about the file and is used later to track the upload progress.

The JavaScript element creates a new XMLHttpRequest instance for a PUT request to the given signed URL.

```javascript
const xhr = new XMLHttpRequest();
xhr.open('PUT', $parameters.Url, true);
xhr.setRequestHeader('content-disposition', `attachment; filename="${$parameters.FileName}"`);

/*
 * Register Event Handlers
 */
xhr.upload.onprogress = (evt) => $actions.OnTransferProgress(evt.loaded);
xhr.upload.onloadstart = (evt) => $actions.OnTransferState('start');
xhr.upload.onload = (evt) => $actions.OnTransferState('success');
xhr.upload.onloadend = (evt) => $actions.OnTransferEnd();
xhr.upload.onerror = (evt) => $actions.OnTransferState('error');
xhr.upload.ontimeout = (evt) => $actions.OnTransferState('timeout');
xhr.upload.onabort = (evt) => $actions.OnTransferState('abort');

/*
 * Send Binary Data
 */
xhr.send($parameters.File);
```
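One caveat: `xhr.upload.onload` fires once the request body has been sent, before the response arrives, so an upload that CloudFront or S3 rejects (for example with 403 Forbidden) can still be reported as success. The sketch below is a hypothetical variant, not the reference application's code, that decides the outcome in the request-level `onload` handler after checking `xhr.status`. The callback names and the `XhrImpl` parameter (which exists only so the function can be exercised with a stub) are assumptions.

```javascript
// Hypothetical variant of the UploadFile script that decides success from the HTTP status.
// callbacks stand in for OnTransferProgress / OnTransferState / OnTransferEnd.
function uploadFile(url, file, fileName, callbacks, XhrImpl = globalThis.XMLHttpRequest) {
  const xhr = new XhrImpl();
  xhr.open('PUT', url, true);
  xhr.setRequestHeader('content-disposition', `attachment; filename="${fileName}"`);

  // Upload (request body) events: start and progress
  xhr.upload.onloadstart = () => callbacks.onState('start');
  xhr.upload.onprogress = (evt) => callbacks.onProgress(evt.loaded);

  // Request-level events fire after the response, so the status is known here
  xhr.onload = () => callbacks.onState(xhr.status >= 200 && xhr.status < 300 ? 'success' : 'error');
  xhr.onerror = () => callbacks.onState('error');
  xhr.ontimeout = () => callbacks.onState('timeout');
  xhr.onabort = () => callbacks.onState('abort');
  xhr.onloadend = () => callbacks.onEnd(); // once per request, after success or failure

  xhr.send(file);
  return xhr;
}
```

Moving the end handler to the request level also means the end callback runs only after the response has arrived, so a follow-up step such as saving a File record can check the final state first.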

OnTransferState Event Handler

This client action runs whenever an upload has:

encountered an error

been aborted

timed out

succeeded

It then updates the State variable in the CurrentFile structure accordingly.

OnTransferEnd Event Handler

After the upload finishes, whether successfully or not, this client action is executed. It triggers the OnFileUploaded event with the uploaded file details as the payload and resets the CurrentFile local variable.

Saving a File Record on Upload Completed

The ClientCloudFront screen handles the OnFileUploaded event from the widget and runs the CloudFront_AddItem server action, which creates a new record in the File entity for the uploaded file.

Summary

Uploading an object from the browser via CloudFront to an S3 bucket involves two steps. First, create a canned policy signed URL for the new object. Then, use JavaScript to execute the PUT request with the object's binary data.

The reference implementation is a simple example using XMLHttpRequest. There are many more advanced JavaScript file upload libraries available that you might want to consider, especially for uploading very large files.

Download

When downloading an object or using it as a resource, we follow a two-step process. First, we generate a custom policy signed URL with a wildcard resource. Then, we append the generated signature query string to the URL of the requested resource.

When uploading a file using the method described above, we include an extra header called content-disposition with the value attachment; filename="<filename>". This header and its value are stored as metadata attached to our S3 object.

When we request that object with a GET request, the header is sent from S3 to our browser, instructing the browser to treat the content as a downloadable file rather than displaying it inline.
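The simple `filename="<filename>"` form has limits. Header values must be byte strings, so XMLHttpRequest's `setRequestHeader` throws for file names containing characters outside Latin-1 (for example `日本.pdf`), and a double quote in the name would end the quoted string early. The hypothetical helper sketched below, which is not part of the reference application, follows RFC 6266 and RFC 5987: it sends an ASCII fallback in `filename` and the UTF-8, percent-encoded name in `filename*`, which modern browsers prefer when both are present.

```javascript
// Hypothetical helper: a Content-Disposition value that is safe for any file name.
function buildContentDisposition(fileName) {
  // ASCII fallback: replace non-printable or non-ASCII characters, quotes and backslashes
  const asciiFallback = fileName.replace(/[^\x20-\x7e]|["\\]/g, '_');
  // UTF-8 percent-encoding; also encode ' ( ) *, which encodeURIComponent leaves as-is
  // but RFC 5987 does not allow unencoded
  const encoded = encodeURIComponent(fileName).replace(
    /['()*]/g,
    (ch) => '%' + ch.charCodeAt(0).toString(16).toUpperCase()
  );
  return `attachment; filename="${asciiFallback}"; filename*=UTF-8''${encoded}`;
}

// In the UploadFile script, this could replace the template literal, e.g.:
// xhr.setRequestHeader('content-disposition', buildContentDisposition($parameters.FileName));
```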

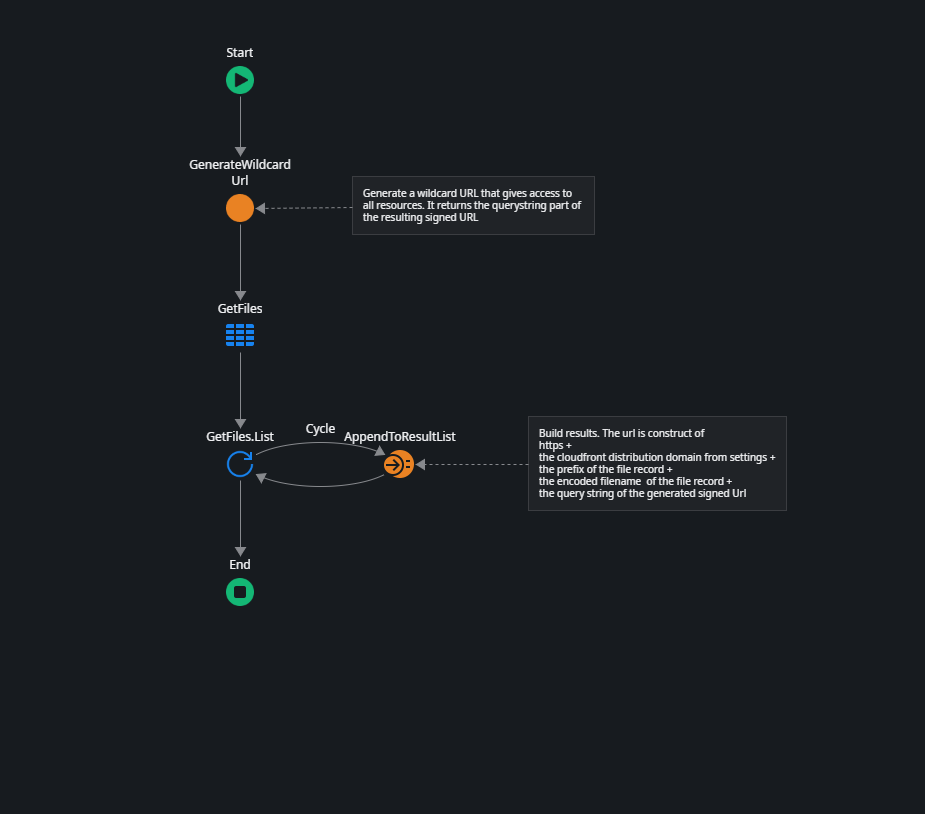

On the ClientCloudFront screen in the Interface tab double-click the GetCloudFrontFiles data action.

GetCloudFrontFiles Data Action

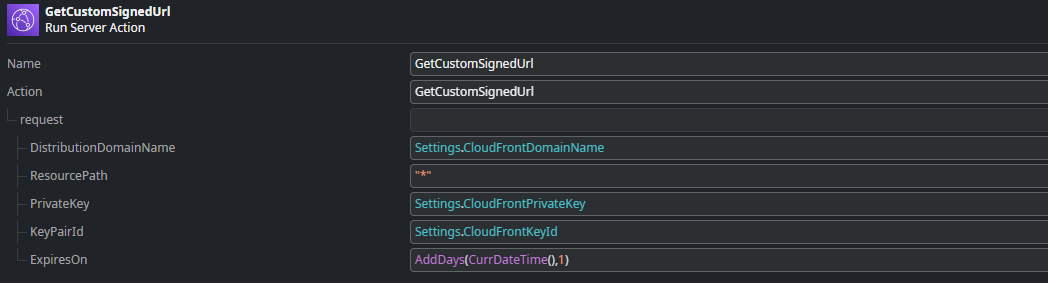

- First, we generate a wildcard custom policy signed URL using the server action GenerateWildcardUrl.

This action uses the GetCustomSignedUrl action from the AWSCloudFrontSigner connector library to create a signed URL that allows access to all objects in the bucket (note the “*” in ResourcePath). It returns the query string of the generated URL, which we can add to our request URL.

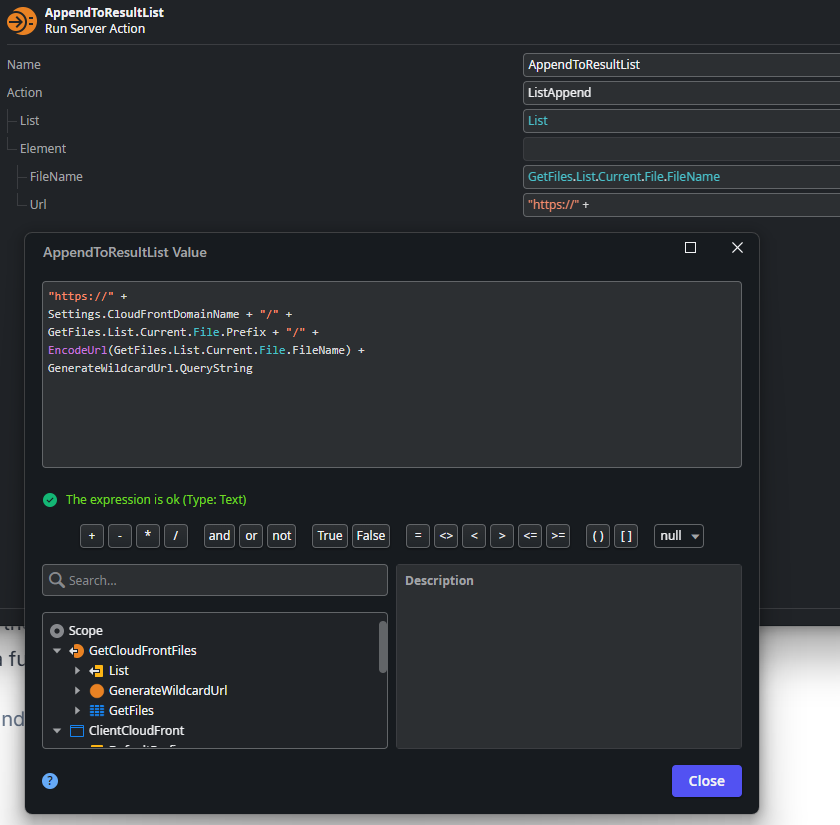

- Then we query the File entity for available File records, iterate over the results, and construct a full URL for each file by appending the signed query string to the file's CloudFront URL.
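Both steps can be sketched in Node.js as follows, in the spirit of GenerateWildcardUrl and the URL construction loop. This is illustrative only: it is not the AWSCloudFrontSigner code, all function names are hypothetical, and the one-hour validity is an assumption. It follows CloudFront's documented custom policy format, where the base64-encoded policy travels in the URL as the Policy parameter, unlike a canned policy.

```javascript
// Sketch: wildcard custom policy query string plus per-file download URLs.
const crypto = require('node:crypto');

// CloudFront's URL-safe base64 variant: "+" -> "-", "=" -> "_", "/" -> "~"
function toCloudFrontBase64(buffer) {
  return buffer.toString('base64').replace(/\+/g, '-').replace(/=/g, '_').replace(/\//g, '~');
}

function getWildcardQueryString(domainName, keyPairId, privateKeyPem, validForSeconds = 3600) {
  const expires = Math.floor(Date.now() / 1000) + validForSeconds;
  const policy = JSON.stringify({
    Statement: [
      {
        Resource: `https://${domainName}/*`, // "*" covers every object behind the distribution
        Condition: { DateLessThan: { 'AWS:EpochTime': expires } },
      },
    ],
  });
  const signature = crypto.sign('sha1', Buffer.from(policy), privateKeyPem); // RSA-SHA1
  return [
    `Policy=${toCloudFrontBase64(Buffer.from(policy))}`,
    `Signature=${toCloudFrontBase64(signature)}`,
    `Key-Pair-Id=${keyPairId}`,
  ].join('&');
}

function buildDownloadUrl(domainName, objectKey, signedQueryString) {
  // Encode each path segment so names with spaces or "#" still resolve
  const encodedKey = objectKey.split('/').map((segment) => encodeURIComponent(segment)).join('/');
  return `https://${domainName}/${encodedKey}?${signedQueryString}`;
}
```

Because the policy uses a wildcard, the query string is generated once and reused for every file in the list.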

Streaming

The download URL can be used not only for downloading objects but also as a source for media streaming, such as in a video or audio player component. Unlike pre-signed S3 URLs, CloudFront Signed URLs provide full streaming support. With a single signed URL, multiple request types are possible, whereas S3 URLs allow only one request type. Media players and document viewers typically first send a HEAD request to check if the resource supports byte-range requests, then stream the resource using a GET request.
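The HEAD-then-range pattern can be sketched as follows. The function is hypothetical and takes the fetch implementation as a parameter so it can be exercised with a stub. In a browser, reading Accept-Ranges from a cross-origin response with fetch would additionally require listing that header in the bucket's CORS ExposeHeaders, since it is not a CORS-safelisted response header; media elements do not need this.

```javascript
// Hypothetical sketch: probe a signed URL with HEAD, then fetch the first bytes with a
// Range request, as media players typically do. fetchImpl defaults to the global fetch.
async function readFirstBytes(signedUrl, byteCount, fetchImpl = fetch) {
  // 1) HEAD: does the resource exist, and does it support byte ranges?
  const head = await fetchImpl(signedUrl, { method: 'HEAD' });
  if (!head.ok) {
    throw new Error(`HEAD failed with status ${head.status}`);
  }
  if (head.headers.get('accept-ranges') !== 'bytes') {
    throw new Error('Resource does not advertise byte-range support');
  }

  // 2) GET with a Range header, using the very same signed URL
  const response = await fetchImpl(signedUrl, {
    method: 'GET',
    headers: { Range: `bytes=0-${byteCount - 1}` }, // byte ranges are inclusive
  });
  if (response.status !== 206) {
    throw new Error(`Expected 206 Partial Content, got ${response.status}`);
  }
  return new Uint8Array(await response.arrayBuffer());
}
```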

Summary

Uploading and downloading files to and from an S3 bucket via CloudFront requires a detailed setup of AWS services, but once completed, the process is straightforward. This method avoids some challenges mentioned in the introductory article and offers additional benefits:

We don't use external logic connector actions for S3 GetObject and PutObject, which have a request or response payload limit of 5.5MB.

We bypass the 28MB request limit from the browser to an ODC application by uploading directly to S3, allowing us to upload even larger files.

The maximum application request timeout is not an issue because we aren't using a server or server action to perform uploads or downloads.

Objects we want to store or retrieve are not passed through our application container, so the container's memory isn't filled with binary data.

Provides full streaming support for all types of media.

Offers regional distribution of content, making large objects quickly available in multiple regions.

However, the described approach has some downsides as well:

This client-side method doesn't work when we need an object stored in S3 for an asynchronous process, like an in-app event handler or a workflow.

The reference application relies on saving an entry to the database after the OnFileUploaded client event is triggered, which can fail. In a production environment, additional steps are necessary to ensure that File entity records in the database match all objects stored in the bucket. S3 Object events, combined with EventBridge and HttpEndpoint targets (webhooks), are a good way to achieve this, but they are beyond the scope of this article and reference application.

I hope you enjoyed reading this article and that I explained the important parts clearly. If not, please let me know by leaving a comment. I invite you to read the other articles in the series about different patterns for storing and retrieving S3 objects.


Written by

Stefan Weber


Throughout my diverse career, I've accumulated a wealth of experience in various capacities, both technically and personally. The constant desire to create innovative software solutions led me to the world of Low-Code and the OutSystems platform. I remain captivated by how closely OutSystems aligns with traditional software development, offering a seamless experience devoid of limitations. While my managerial responsibilities primarily revolve around leading and inspiring my teams, my passion for solution development with OutSystems remains unwavering. My personal focus extends to integrating our solutions with leading technologies such as Amazon Web Services, Microsoft 365, Azure, and more. In 2023, I earned recognition as an OutSystems Most Valuable Professional, one of only 80 worldwide, and concurrently became an AWS Community Builder.