Building a Reverse Proxy with Pingora | Rust

Carlos Armando Marcano Vargas


This tutorial shows how to build a load balancer, a kind of reverse proxy, with the Pingora library. If you have already read the Pingora documentation, there won't be much new here for you, except that we also add a rate limiter based on the example posted in the docs.

I want to make clear that this article is for learning purposes, and I am still learning about the subject myself. Do not expect a production-ready reverse proxy from this article.

Requirements

Rust installed

WSL installed for Windows users

What is a Reverse Proxy?

According to the article “How To Set Up a Reverse Proxy (Step-By-Steps for Nginx and Apache)” written by Salman Ravoof, a reverse proxy is a server that sits in front of a web server and receives all the requests before they reach the origin server. It works similarly to a forward proxy, except in this case it’s the web server using the proxy rather than the user or client. Reverse proxies are typically used to enhance the performance, security, and reliability of the web server.

Pingora

Pingora is a Rust framework to build fast, reliable and programmable networked systems.

Pingora is battle tested as it has been serving more than 40 million Internet requests per second for more than a few years.

Create the Project

We start by creating a new Rust project with Cargo, as usual:

cargo new loadbalancer

Then, in the Cargo.toml file, we add the following dependencies under the [dependencies] section:

async-trait = "0.1"
pingora = { version = "0.3", features = [ "lb" ] }
pingora-core = "0.3"
pingora-load-balancing = "0.3"
pingora-proxy = "0.3"

Now, let’s create a Pingora server by adding the following code to the main.rs file:

main.rs

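use async_trait::async_trait;
use pingora::prelude::*;
use std::sync::Arc;

fn main() {
    // Create a Server with default options (no command-line Opt).
    let mut my_server = Server::new(None).unwrap();
    // Prepare the server to start (e.g. take over sockets during a graceful upgrade).
    my_server.bootstrap();
    // Spawn the runtime threads and block until the server exits.
    my_server.run_forever();
}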

According to Pingora's documentation, a Pingora Server is a process that can host one or many services. The Server takes care of configuration and CLI argument parsing, daemonization, signal handling, and graceful restart or shutdown.

In the code snippet above, we create the Server in main(), call bootstrap() on it, and then call run_forever(), which spawns all the runtime threads and blocks the main thread until the server needs to quit. According to the documentation, run_forever() may fork the process for daemonization, so any additional threads created before it is called will be lost to the service logic.

Creating the load balancer proxy

use async_trait::async_trait;
use pingora::prelude::*;
use std::sync::Arc;
use pingora_load_balancing::{selection::RoundRobin, LoadBalancer};

// Our proxy: a shared, thread-safe handle to a round-robin load balancer.
pub struct LB(Arc<LoadBalancer<RoundRobin>>);

fn main() {
    let mut my_server = Server::new(None).unwrap();
    my_server.bootstrap();
    my_server.run_forever();
}

In the code above, we import the pingora_load_balancing crate which provides the LoadBalancer struct and the selection algorithms. We use the round-robin algorithm for our load balancer.

Then, we define the LB struct, which wraps a LoadBalancer that uses the round-robin strategy in an Arc, so that a single load balancer can be shared across the server's threads.
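To make the round-robin strategy and the Arc wrapper more concrete, here is a minimal std-only sketch. RoundRobinSketch is a hypothetical type, not part of Pingora, and Pingora's real selector is more involved; the sketch only assumes the behavior described above: backends are handed out in turn, and one selector is shared between threads through an Arc.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

/// Hypothetical, simplified round-robin selector. It only illustrates the
/// idea behind `LoadBalancer<RoundRobin>`; it is not Pingora's implementation.
struct RoundRobinSketch {
    backends: Vec<String>,
    // Shared cursor; an atomic lets many threads advance it without a lock.
    next: AtomicUsize,
}

impl RoundRobinSketch {
    fn new(backends: &[&str]) -> Self {
        Self {
            backends: backends.iter().map(|b| b.to_string()).collect(),
            next: AtomicUsize::new(0),
        }
    }

    /// Hands out backends in turn: first, second, first, second, ...
    fn select(&self) -> Option<&str> {
        if self.backends.is_empty() {
            return None;
        }
        let i = self.next.fetch_add(1, Ordering::Relaxed) % self.backends.len();
        Some(self.backends[i].as_str())
    }
}

fn main() {
    let lb = Arc::new(RoundRobinSketch::new(&["1.1.1.1:443", "1.0.0.1:443"]));

    // Sequential calls alternate between the two backends.
    for _ in 0..3 {
        println!("upstream peer is: {:?}", lb.select());
    }

    // Cloning the Arc gives each worker thread a handle to the same selector,
    // which is why the LoadBalancer is wrapped in an Arc.
    let handles: Vec<_> = (0..4)
        .map(|_| {
            let lb = Arc::clone(&lb);
            thread::spawn(move || lb.select().map(str::to_string))
        })
        .collect();
    for handle in handles {
        println!("worker picked: {:?}", handle.join().unwrap());
    }
}
```

Using an atomic counter rather than a Mutex keeps selection cheap when many requests arrive at once.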

To turn our server into a proxy, we have to implement the ProxyHttp trait for LB.

...

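use pingora_core::upstreams::peer::HttpPeer;
use pingora_core::Result;
use pingora_proxy::{ProxyHttp, Session};

pub struct LB(Arc<LoadBalancer<RoundRobin>>);

#[async_trait]
impl ProxyHttp for LB {
    // This proxy keeps no per-request state.
    type CTX = ();
    fn new_ctx(&self) -> () {
        ()
    }

    async fn upstream_peer(&self, _session: &mut Session, _ctx: &mut ()) -> Result<Box<HttpPeer>> {
        // Pick the next healthy backend. RoundRobin ignores the key (b""),
        // and 256 bounds how many backends are tried.
        let upstream = self.0
            .select(b"", 256)
            .unwrap();
        println!("upstream peer is: {upstream:?}");
        // Connect with TLS (true) and send "one.one.one.one" as the SNI.
        let peer = Box::new(HttpPeer::new(upstream, true, "one.one.one.one".to_string()));
        Ok(peer)
    }

    // upstream_request_filter(), shown below, also goes inside this impl block.
}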

Any object that implements the ProxyHttp trait defines how a request is handled in the proxy. Apart from the CTX type and new_ctx(), the only required method in the ProxyHttp trait is upstream_peer(), which returns the peer (the address, plus connection options) to which the request should be proxied.

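In the body of upstream_peer(), we call the LoadBalancer's select() method to round-robin across the upstream IPs. Its first argument is a key that only hash-based selection algorithms use, so round-robin ignores it; the second, max_iterations, bounds how many backends are examined while looking for a healthy one. In this example we use HTTPS to connect to the backends, so we also pass true for use_tls and set the SNI when constructing our HttpPeer object.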

async fn upstream_request_filter(
    &self,
    _session: &mut Session,
    upstream_request: &mut RequestHeader,
    _ctx: &mut Self::CTX,
) -> Result<()> {
    // Replace the client's Host header with the one the 1.1.1.1 backends expect.
    upstream_request.insert_header("Host", "one.one.one.one").unwrap();
    Ok(())
}

For the 1.1.1.1 backends to accept our requests, a Host header must be present. We add it in the upstream_request_filter() callback, which modifies the request header after the connection to the backend is established and before the request header is sent.
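Pingora's RequestHeader distinguishes insert_header(), which replaces any existing headers with the same name, from append_header(), which adds another one. The std-only sketch below mimics that distinction with a hypothetical HeaderListSketch type to show why the filter ends up sending exactly one Host header to the backend. It is an illustration, not Pingora's implementation.

```rust
/// Hypothetical stand-in for a request header block: an ordered list of
/// (name, value) pairs as received from the client.
#[derive(Debug, Default)]
struct HeaderListSketch {
    headers: Vec<(String, String)>,
}

impl HeaderListSketch {
    /// Replace every header with this name by a single new one. Names are
    /// compared case-insensitively, because HTTP header names are.
    fn insert_header(&mut self, name: &str, value: &str) {
        self.headers.retain(|(n, _)| !n.eq_ignore_ascii_case(name));
        self.headers.push((name.to_string(), value.to_string()));
    }

    /// Add a header, keeping any existing ones with the same name.
    fn append_header(&mut self, name: &str, value: &str) {
        self.headers.push((name.to_string(), value.to_string()));
    }

    /// All values stored under `name`, in order.
    fn get_all(&self, name: &str) -> Vec<&str> {
        self.headers
            .iter()
            .filter(|(n, _)| n.eq_ignore_ascii_case(name))
            .map(|(_, v)| v.as_str())
            .collect()
    }
}

fn main() {
    // curl sends "Host: 127.0.0.1:6188" when it talks to the proxy.
    let mut req = HeaderListSketch::default();
    req.append_header("host", "127.0.0.1:6188");
    req.append_header("Accept", "*/*");

    // The rewrite upstream_request_filter() performs before forwarding.
    req.insert_header("Host", "one.one.one.one");
    println!("{:?}", req.get_all("host")); // ["one.one.one.one"]
}
```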

Now, following the Pingora guide, we create a proxy service that routes requests through our load balancer.

fn main() {
    let mut my_server = Server::new(None).unwrap();
    my_server.bootstrap();

    // Two HTTPS backends on port 443.
    let upstream =
        LoadBalancer::try_from_iter(["1.1.1.1:443", "1.0.0.1:443"]).unwrap();

    // Wrap the load balancer in a proxy service that listens on port 6188.
    let mut lb = http_proxy_service(&my_server.configuration, LB(Arc::new(upstream)));
    lb.add_tcp("0.0.0.0:6188");

    my_server.add_service(lb);
    my_server.run_forever();
}

A Pingora Service listens to one or multiple (TCP or Unix domain socket) endpoints. When a new connection is established, the Service hands the connection over to its "application." pingora-proxy is such an application: it proxies the HTTP request to the given backend as configured above.

In the example above, we create an LB instance with two backends, 1.1.1.1:443 and 1.0.0.1:443. We put that LB instance into a proxy Service via the http_proxy_service() call and then tell our Server to host that proxy Service.

Now, we run the command cargo run to start our proxy. Then, we make requests to the proxy to see if the load balancer works properly.

To test the proxy we run the command:

curl 127.0.0.1:6188 -svo /dev/null

I will run the command above three times.

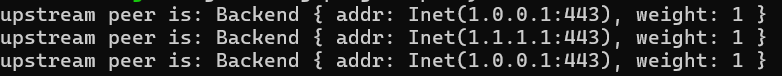

We should see the following messages in the command line of our proxy:

Adding a Health Check

First, we import background_service and std::time::Duration in the main.rs file.

use pingora_core::services::background::background_service;
use std::{sync::Arc, time::Duration};

Now, let’s develop the health check functionality.

fn main() {
    let mut my_server = Server::new(None).unwrap();
    my_server.bootstrap();

    // 127.0.0.1:343 is a deliberately unreachable backend, so the
    // health check should mark it as unhealthy.
    let mut upstreams =
        LoadBalancer::try_from_iter(["1.1.1.1:443", "1.0.0.1:443", "127.0.0.1:343"]).unwrap();

    // Probe every backend with a TCP connect once per second.
    let hc = TcpHealthCheck::new();
    upstreams.set_health_check(hc);
    upstreams.health_check_frequency = Some(Duration::from_secs(1));

    // Run the health checks as a background service; task() returns an
    // Arc to the same load balancer for the proxy to use.
    let background = background_service("health check", upstreams);
    let upstreams = background.task();

    let mut lb = http_proxy_service(&my_server.configuration, LB(upstreams));
    lb.add_tcp("0.0.0.0:6188");

    my_server.add_service(background);
    my_server.add_service(lb);
    my_server.run_forever();
}

With the health check, we ensure the load balancer only proxies requests to backends that passed their most recent check. Without it, we would receive a 502 Bad Gateway status code whenever the service tries to proxy a request to the unreachable "127.0.0.1:343" backend.
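Here is a rough idea of how the health-check results are used. The sketch below is a hypothetical, std-only round-robin selector that skips backends marked unhealthy and gives up after max_iterations candidates, which is the role we assume the 256 argument to select() plays. BackendSketch and HealthAwareRoundRobin are made-up names, and the health flags are hard-coded instead of coming from a real TCP check.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Hypothetical backend record: an address plus the latest health-check result.
struct BackendSketch {
    addr: &'static str,
    healthy: bool,
}

/// Simplified round-robin selector that skips unhealthy backends.
/// `max_iterations` caps how many candidates are examined per call.
/// Not Pingora's implementation.
struct HealthAwareRoundRobin {
    backends: Vec<BackendSketch>,
    next: AtomicUsize,
}

impl HealthAwareRoundRobin {
    fn new(backends: Vec<BackendSketch>) -> Self {
        Self { backends, next: AtomicUsize::new(0) }
    }

    fn select(&self, max_iterations: usize) -> Option<&'static str> {
        if self.backends.is_empty() {
            return None;
        }
        for _ in 0..max_iterations {
            let i = self.next.fetch_add(1, Ordering::Relaxed) % self.backends.len();
            let candidate = &self.backends[i];
            if candidate.healthy {
                return Some(candidate.addr);
            }
            // Unhealthy: move on instead of handing out a dead peer.
        }
        None // no healthy backend found within the bound
    }
}

fn main() {
    let lb = HealthAwareRoundRobin::new(vec![
        BackendSketch { addr: "1.1.1.1:443", healthy: true },
        BackendSketch { addr: "1.0.0.1:443", healthy: true },
        // Assumed to have failed its TCP health check.
        BackendSketch { addr: "127.0.0.1:343", healthy: false },
    ]);

    // 127.0.0.1:343 is never returned, so no request is sent to it.
    for _ in 0..4 {
        println!("upstream peer is: {:?}", lb.select(256));
    }
}
```

Bounding the search means a selection call always finishes quickly, even if every backend is down.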

Adding a Rate Limiter

According to the article “What is rate limiting? | Rate limiting and bots” posted on Cloudflare’s website, rate limiting is a strategy for limiting network traffic. It limits how often someone can repeat an action within a certain timeframe, for instance, trying to log in to an account. Rate limiting can help stop certain kinds of malicious bot activity. It can also reduce strain on web servers.

Pingora provides the pingora-limits crate, which allows us to create a rate limiter for our reverse proxy.

We need to add the following dependencies to our project:

...

pingora-limits = "0.3.0"
once_cell = "1.19.0"

Now, let's create our rate limiter. First, we import Rate and Lazy. Then we implement a get_request_appid() method on the LB struct, which reads the appid header from the incoming request.

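use once_cell::sync::Lazy;
use pingora::http::ResponseHeader; // used by request_filter() below
use pingora_limits::rate::Rate;

// The same LB struct defined earlier; only the new impl block is added.
pub struct LB(Arc<LoadBalancer<RoundRobin>>);

impl LB {
    // Return the value of the "appid" request header, or None when the
    // header is missing or is not valid visible ASCII.
    pub fn get_request_appid(&self, session: &mut Session) -> Option<String> {
        match session
            .req_header()
            .headers
            .get("appid")
            .map(|v| v.to_str())
        {
            None => None,
            Some(v) => match v {
                Ok(v) => Some(v.to_string()),
                Err(_) => None,
            },
        }
    }
}

// Global rate estimator with a 1-second interval, created on first use.
static RATE_LIMITER: Lazy<Rate> = Lazy::new(|| Rate::new(Duration::from_secs(1)));

// Maximum number of requests per second allowed for each appid.
static MAX_REQ_PER_SEC: isize = 1;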

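The Rate struct from the pingora-limits crate is a stable rate estimator that reports the rate of events in the past interval. According to the documentation, it returns the average rate between interval * 2 ago and interval ago, while collecting the events that happen between interval ago and now. Its observe() method records new events for a key and returns the number of events seen so far in the current interval; that is the value we compare with MAX_REQ_PER_SEC.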

Also, the documentation says that the estimator ignores events that happen less than once per interval time.
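The two-window behavior described above can be sketched in a few lines. RateSketch is a hypothetical, simplified estimator: it takes the current time as a parameter so the example is deterministic, and it uses &mut self and an exact HashMap. This is not how pingora-limits implements Rate, which is shared through &self. observe() returns the count for the current window, while rate() only looks at the last completed window.

```rust
use std::collections::HashMap;

/// Hypothetical two-window rate estimator keyed by a string such as an appid.
/// Time is passed in explicitly (milliseconds) to keep the example
/// deterministic; this is a simplification, not pingora-limits' `Rate`.
struct RateSketch {
    interval_ms: u64,
    window_start_ms: u64,
    current: HashMap<String, isize>,  // events in [window_start, now)
    previous: HashMap<String, isize>, // events in the interval before that
}

impl RateSketch {
    fn new(interval_ms: u64, now_ms: u64) -> Self {
        Self {
            interval_ms,
            window_start_ms: now_ms,
            current: HashMap::new(),
            previous: HashMap::new(),
        }
    }

    /// Advance the windows so that `now_ms` falls inside the current one.
    fn rotate(&mut self, now_ms: u64) {
        let elapsed = now_ms.saturating_sub(self.window_start_ms);
        if elapsed >= 2 * self.interval_ms {
            // The last observation is over an interval old: both windows are stale.
            self.previous.clear();
            self.current.clear();
            self.window_start_ms = now_ms - elapsed % self.interval_ms;
        } else if elapsed >= self.interval_ms {
            self.previous = std::mem::take(&mut self.current);
            self.window_start_ms += self.interval_ms;
        }
    }

    /// Record `events` for `key` and return the count in the current window.
    fn observe(&mut self, key: &str, events: isize, now_ms: u64) -> isize {
        self.rotate(now_ms);
        let count = self.current.entry(key.to_string()).or_insert(0);
        *count += events;
        *count
    }

    /// Events per second, computed from the last completed window only.
    fn rate(&mut self, key: &str, now_ms: u64) -> f64 {
        self.rotate(now_ms);
        let prev = self.previous.get(key).copied().unwrap_or(0) as f64;
        prev * 1000.0 / self.interval_ms as f64
    }
}

fn main() {
    // 1-second windows, like Rate::new(Duration::from_secs(1)) in the article.
    let mut limiter = RateSketch::new(1000, 0);
    println!("{}", limiter.observe("1", 1, 100)); // 1: within a limit of 1
    println!("{}", limiter.observe("1", 1, 200)); // 2: exceeds a limit of 1
    println!("{}", limiter.observe("1", 1, 1100)); // 1: a new window started
    println!("{}", limiter.rate("1", 1200)); // 2: the finished window held 2 events
}
```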

Lazy, from the once_cell crate, initializes a value on first access. It is thread-safe, so it can be used in statics such as RATE_LIMITER.
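For comparison, the standard library offers the same lazy-initialization pattern. The sketch below uses std::sync::OnceLock (stable since Rust 1.70; Rust 1.80 also added std::sync::LazyLock, the closest std counterpart to once_cell's Lazy). The LIMITS static and its contents are made up for illustration; the point is that the initializer runs on first access and only once, even when several threads race for it.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::OnceLock;
use std::thread;

// Counts how many times the initializer runs, to show it happens once.
static INIT_CALLS: AtomicUsize = AtomicUsize::new(0);

// Hypothetical lazily built static, in the spirit of
// `static X: Lazy<T> = Lazy::new(...)`.
static LIMITS: OnceLock<Vec<u32>> = OnceLock::new();

fn limits() -> &'static Vec<u32> {
    LIMITS.get_or_init(|| {
        INIT_CALLS.fetch_add(1, Ordering::SeqCst);
        vec![1, 10, 100] // stand-in for an expensive constructor
    })
}

fn main() {
    // Eight threads race to read the static; only one runs the initializer.
    let handles: Vec<_> = (0..8).map(|_| thread::spawn(|| limits().len())).collect();
    for handle in handles {
        assert_eq!(handle.join().unwrap(), 3);
    }
    println!("initializer ran {} time(s)", INIT_CALLS.load(Ordering::SeqCst)); // 1
}
```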

Next, we override the request_filter() method of the ProxyHttp trait to implement the rate limiter.

According to the documentation, the request_filter method is responsible for handling incoming requests. It also says the following:

In this phase, users can parse, validate, rate limit, perform access control, and/or return a response for this request.

If the user already sent a response to this request, an Ok(true) should be returned so that the proxy would exit. The proxy continues to the next phases when Ok(false) is returned.

By default this filter does nothing and returns Ok(false).

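// Add this method inside the `impl ProxyHttp for LB` block.
async fn request_filter(&self, session: &mut Session, _ctx: &mut Self::CTX) -> Result<bool>
where
    Self::CTX: Send + Sync,
{
    // Requests without an appid header are not rate limited.
    let appid = match self.get_request_appid(session) {
        None => return Ok(false),
        Some(appid) => appid,
    };

    // Count this request and get the total for this appid in the current window.
    let curr_window_requests = RATE_LIMITER.observe(&appid, 1);
    if curr_window_requests > MAX_REQ_PER_SEC {
        // Over the limit: answer 429 Too Many Requests from the proxy itself.
        let mut header = ResponseHeader::build(429, None).unwrap();
        header
            .insert_header("X-Rate-Limit-Limit", MAX_REQ_PER_SEC.to_string())
            .unwrap();
        header.insert_header("X-Rate-Limit-Remaining", "0").unwrap();
        header.insert_header("X-Rate-Limit-Reset", "1").unwrap();
        // Disable keep-alive for this connection.
        session.set_keepalive(None);
        session
            .write_response_header(Box::new(header), true)
            .await?;
        // A response was already sent, so tell the proxy to stop here.
        return Ok(true);
    }

    // Under the limit: continue to upstream_peer() as usual.
    Ok(false)
}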
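The Ok(true)/Ok(false) contract quoted earlier can be isolated from the networking code. The hypothetical sketch below keeps only the decision: FilterOutcome, rate_limit_decision(), and proxy_should_stop() are illustrative names, not Pingora APIs, and the headers mirror the ones set in request_filter().

```rust
/// Hypothetical result of the rate-limit check for one request.
#[derive(Debug, PartialEq)]
enum FilterOutcome {
    /// Corresponds to returning Ok(false): continue to upstream_peer().
    Continue,
    /// Corresponds to writing this response and returning Ok(true).
    Respond { status: u16, headers: Vec<(&'static str, String)> },
}

/// The decision made in request_filter(), without any networking:
/// reject with 429 once the current-window count exceeds the limit.
fn rate_limit_decision(curr_window_requests: isize, max_per_sec: isize) -> FilterOutcome {
    if curr_window_requests <= max_per_sec {
        return FilterOutcome::Continue;
    }
    FilterOutcome::Respond {
        status: 429,
        headers: vec![
            ("X-Rate-Limit-Limit", max_per_sec.to_string()),
            ("X-Rate-Limit-Remaining", "0".to_string()),
            ("X-Rate-Limit-Reset", "1".to_string()),
        ],
    }
}

/// The bool request_filter() returns: true means "response already sent".
fn proxy_should_stop(outcome: &FilterOutcome) -> bool {
    matches!(outcome, FilterOutcome::Respond { .. })
}

fn main() {
    // With a limit of 1, the first request in a window passes
    // and the second one is answered by the proxy itself.
    for count in [1, 2] {
        let outcome = rate_limit_decision(count, 1);
        println!("count {count}: stop = {}, {:?}", proxy_should_stop(&outcome), outcome);
    }
}
```

Keeping the decision pure like this makes it easy to unit-test without starting a server.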

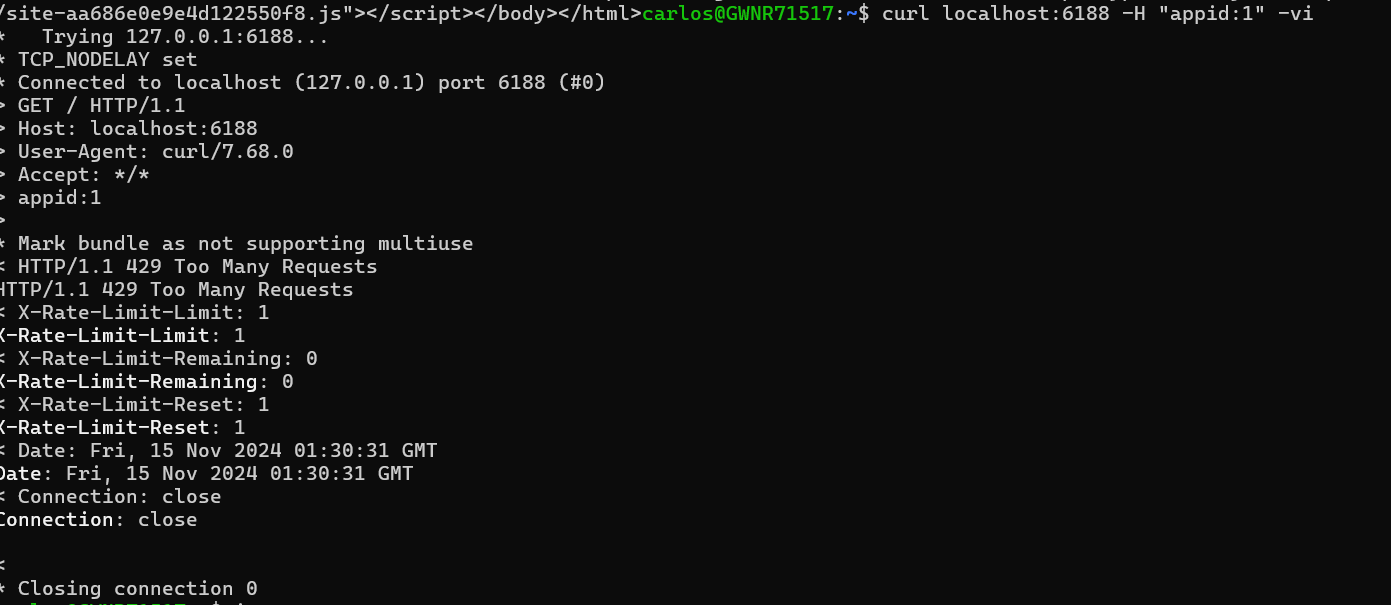

Let’s test the rate limiter by running the following command twice in quick succession (the limit is one request per second per appid):

curl localhost:6188 -H "appid:1" -vi

We should see the following message on our command line:

Conclusion

This is the first time I have built a reverse proxy, and I know there is a lot more to learn about this subject. I have to say that Pingora has good documentation. What I liked most is that its examples are easy to follow for someone with no previous experience building reverse proxies.

The source code is here.

Thank you for taking the time to read this article.

If you have any recommendations about other packages, architectures, how to improve my code or my English, or anything else, please leave a comment or contact me through Twitter or LinkedIn.

Resources

How To Set Up a Reverse Proxy (Step-By-Steps for Nginx and Apache)


Written by

Carlos Armando Marcano Vargas


I am a backend developer from Venezuela. I enjoy writing tutorials for open source projects that I use and find interesting. Mostly I write tutorials about Python, Go, and Rust.