Kubernetes 101: Part 11

Md Shahriyar Al Mustakim Mitul

Monitoring a Kubernetes cluster

We may want to check resource usage for pods or for the whole cluster, but Kubernetes does not ship with a full-featured built-in monitoring solution.

Several open-source projects fill this gap. They are essentially advanced metrics servers.

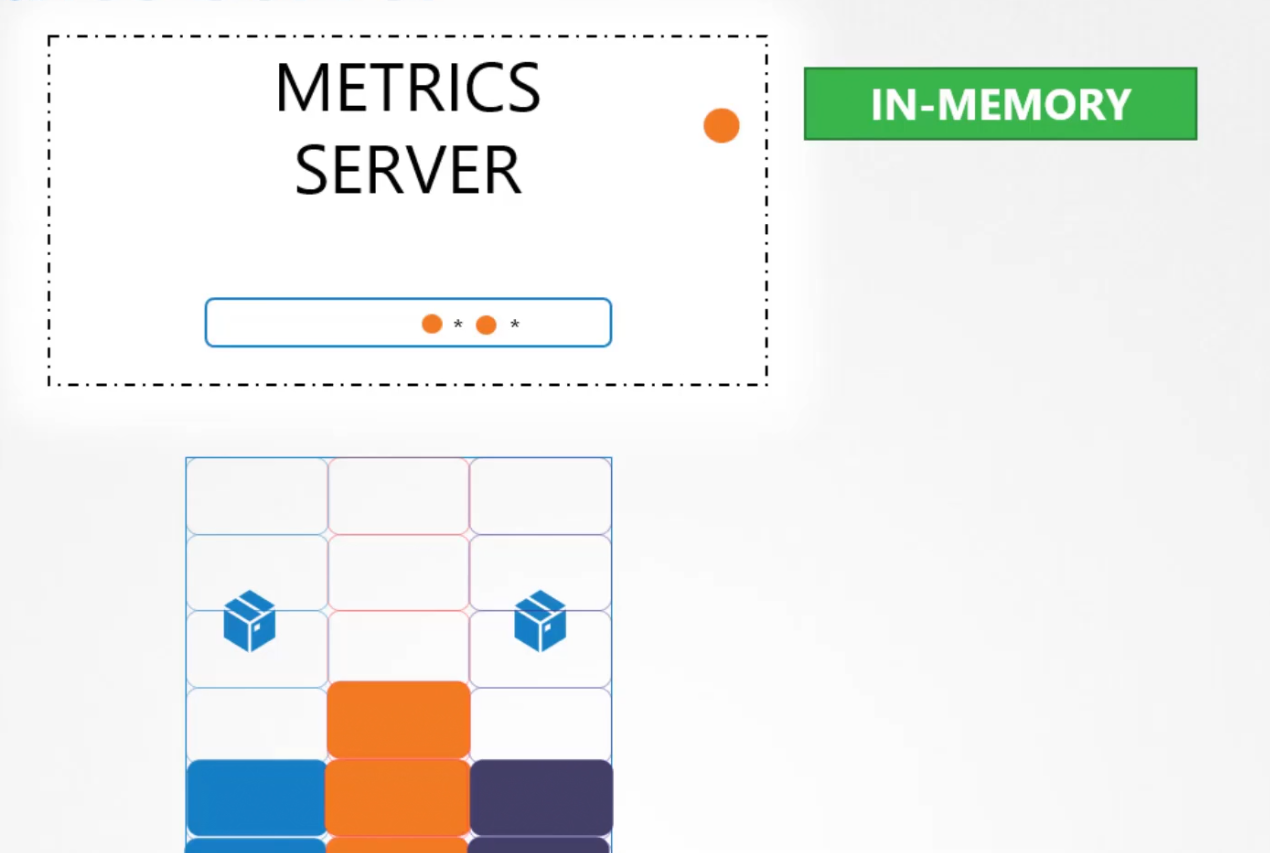

Kubernetes' own lightweight option is the Metrics Server, and we run one Metrics Server per Kubernetes cluster.

It retrieves metrics from the nodes and pods, aggregates them and keeps them in memory only. It does not store the data on disk, so you cannot look at historical performance data with it.

If you need historical data or more advanced features, use one of the advanced solutions mentioned earlier.

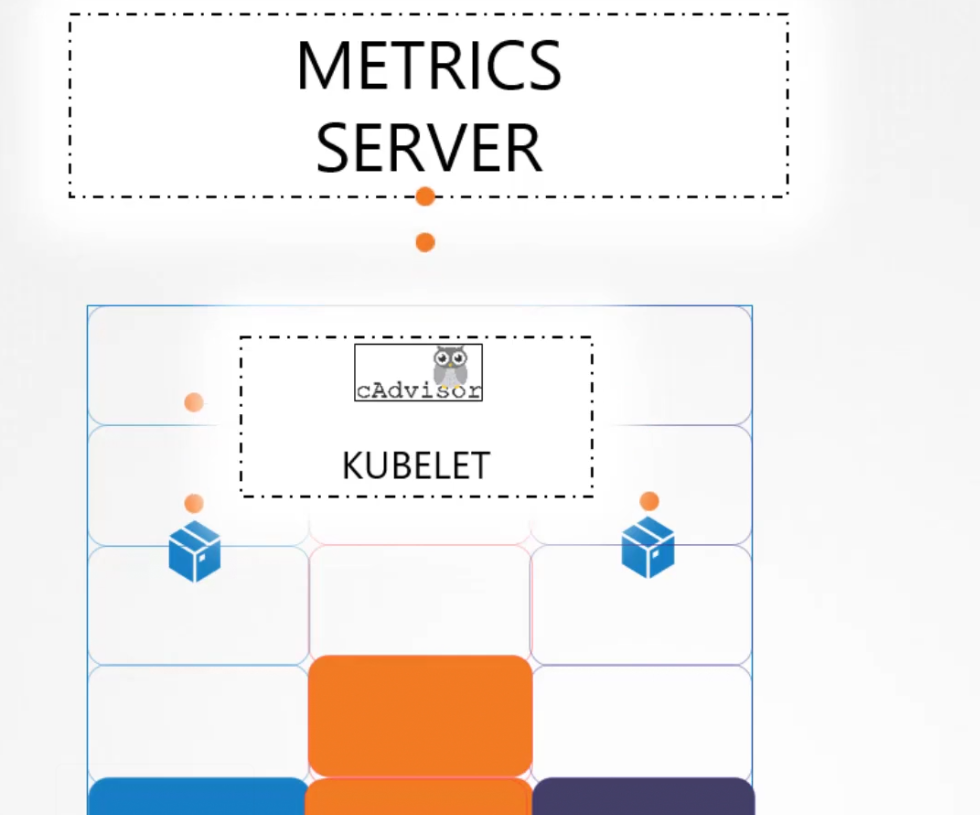

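But how does it collect these metrics?

The kubelet on each node includes a component called cAdvisor (Container Advisor). cAdvisor collects performance metrics from the pods and exposes them through the kubelet API, and the Metrics Server gathers them from there.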

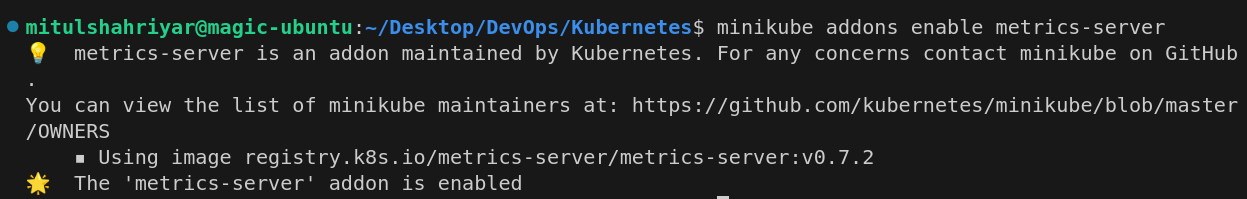

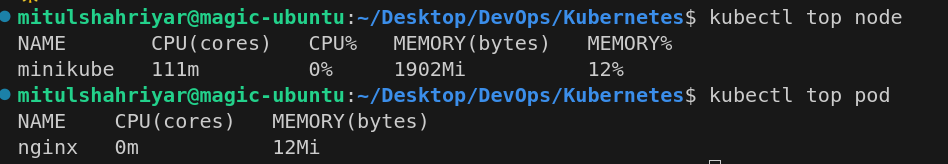

How do we enable the Metrics Server?

If you use minikube, enable the add-on: minikube addons enable metrics-server

Then run kubectl top node to see the CPU and memory consumption of each node (kubectl top pod shows the same for pods).

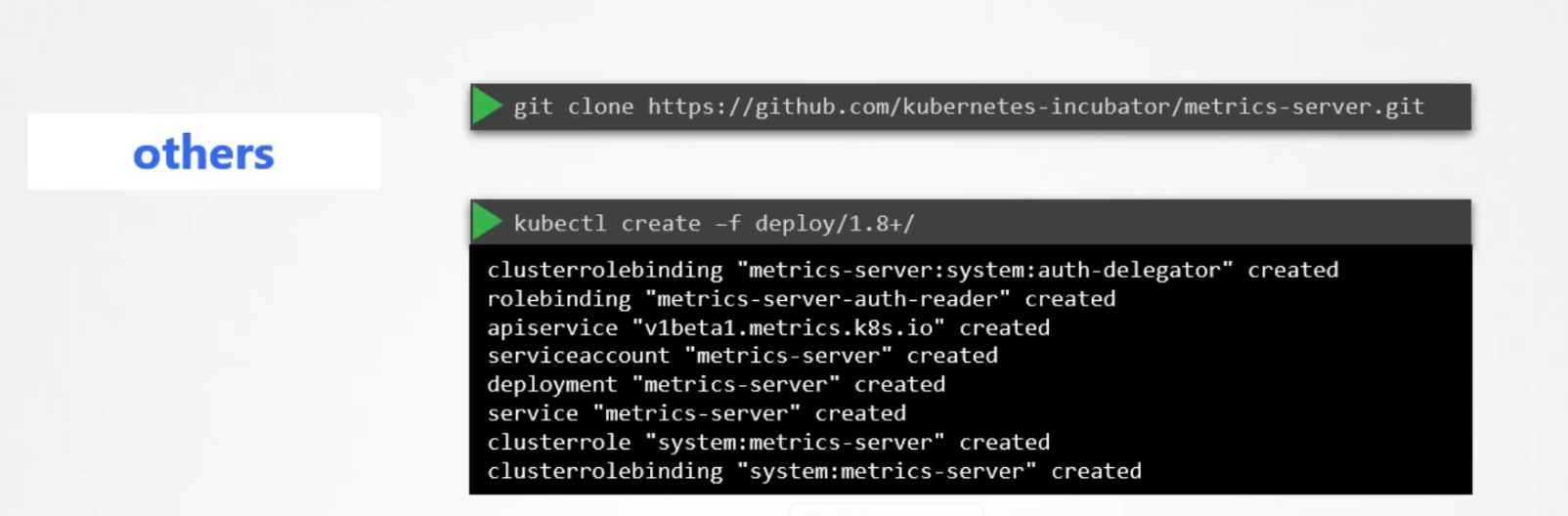

Otherwise, clone the metrics-server repository and deploy it:

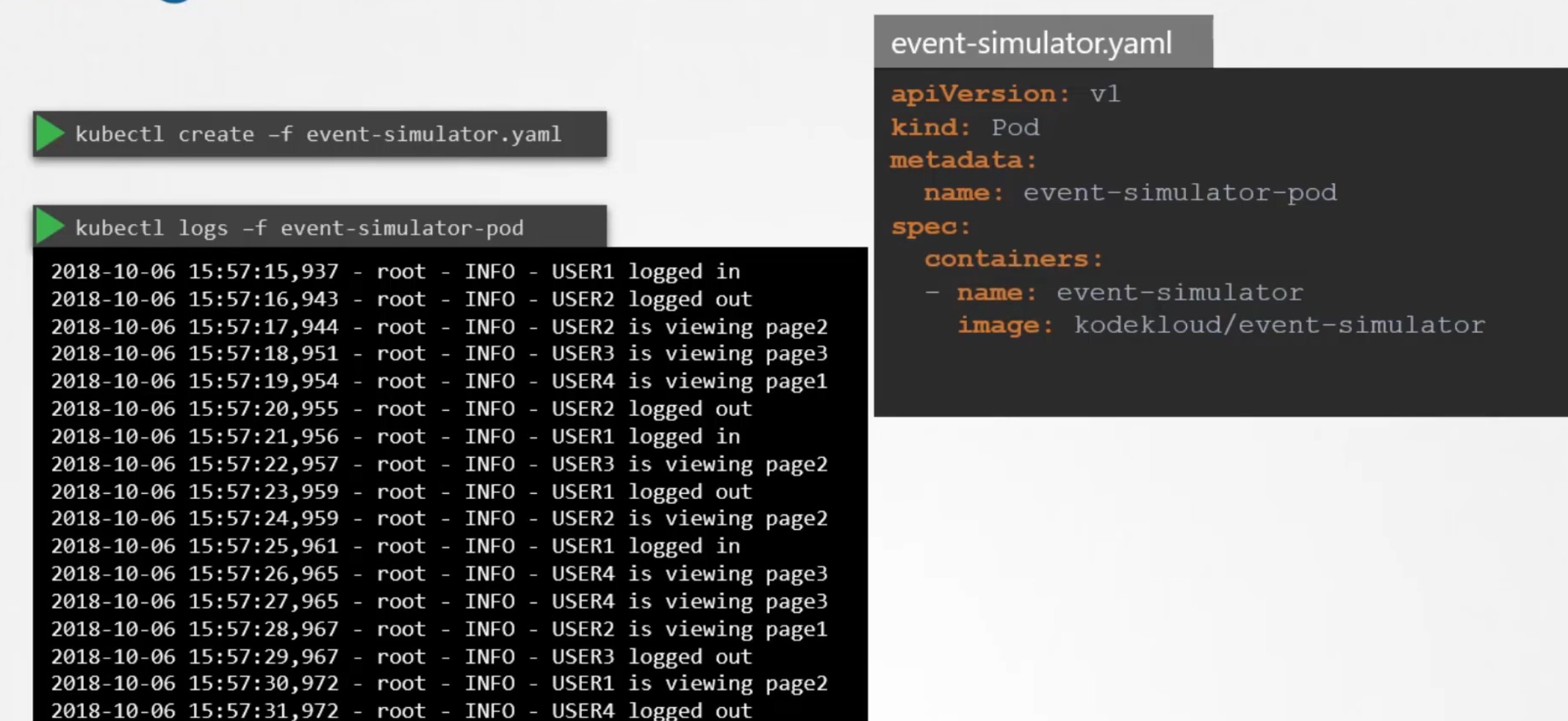

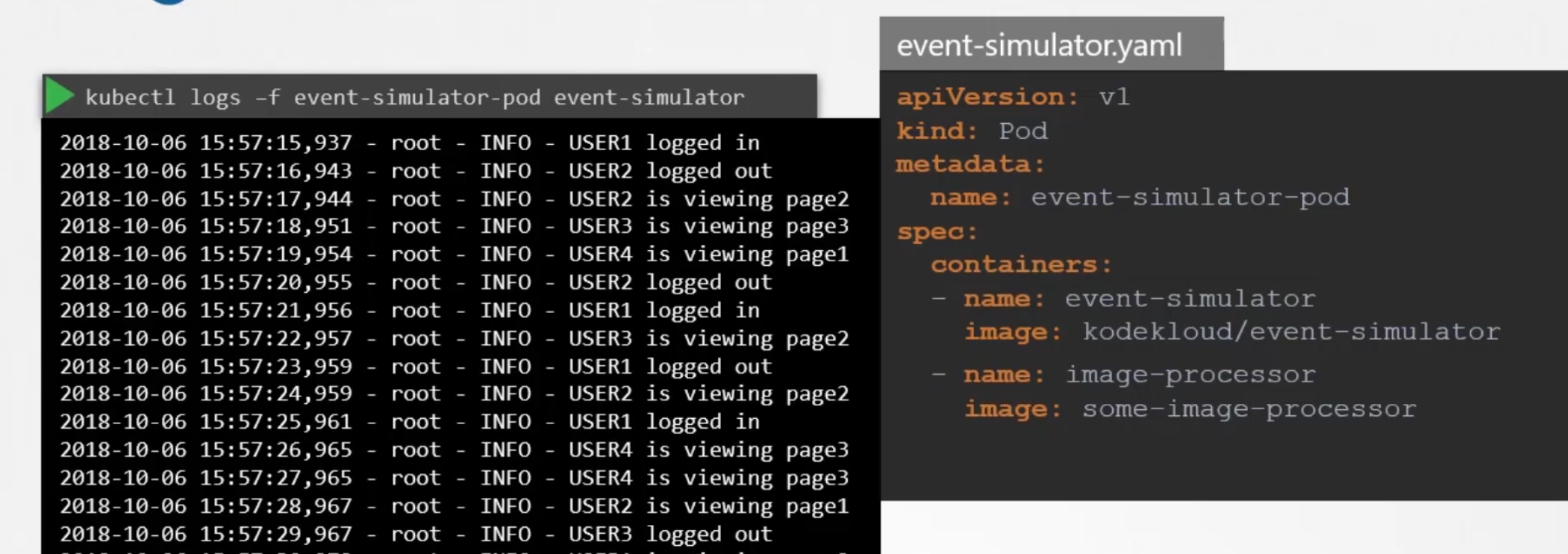

Managing application logs

In Kubernetes, we can stream the logs of a pod with kubectl logs -f <pod-name>

But if a pod runs multiple containers, you have to specify which container's logs you want to see:

kubectl logs -f <pod-name> <container-name>

The -c flag does the same: kubectl logs -f <pod-name> -c <container-name>

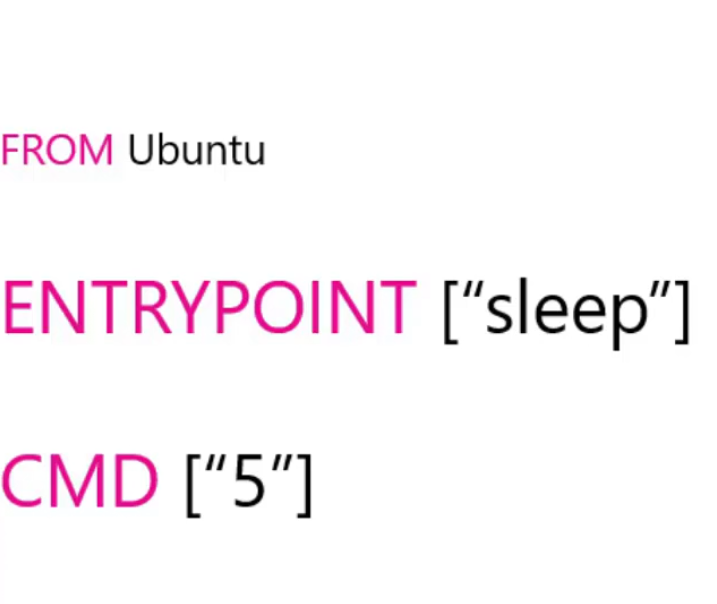

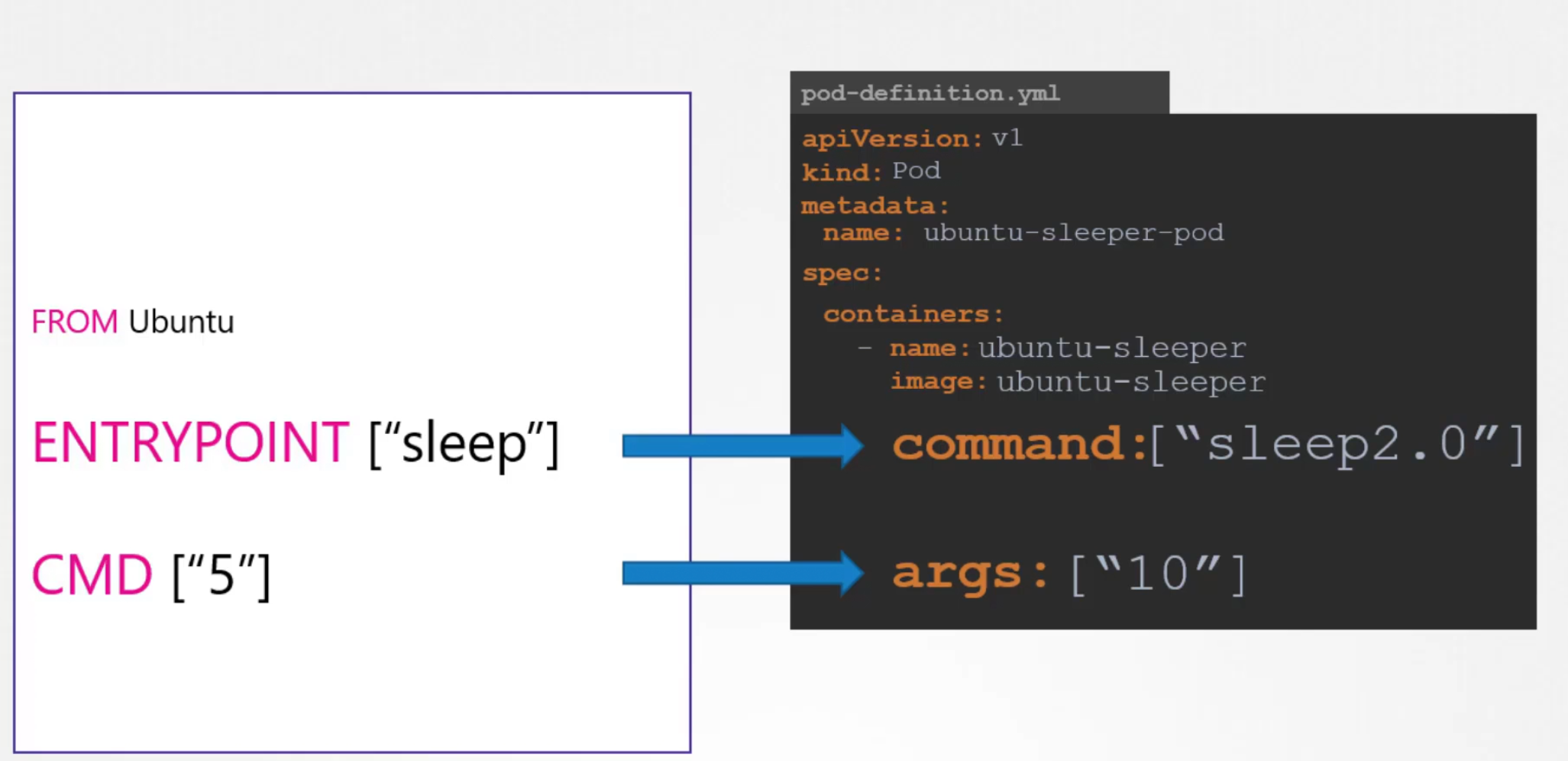

Commands and arguments in Docker and Kubernetes

Assume our Docker image has both an ENTRYPOINT and a CMD set.

To override them from a pod definition, set the command field to override the ENTRYPOINT, and the args field to override the CMD from the Dockerfile.

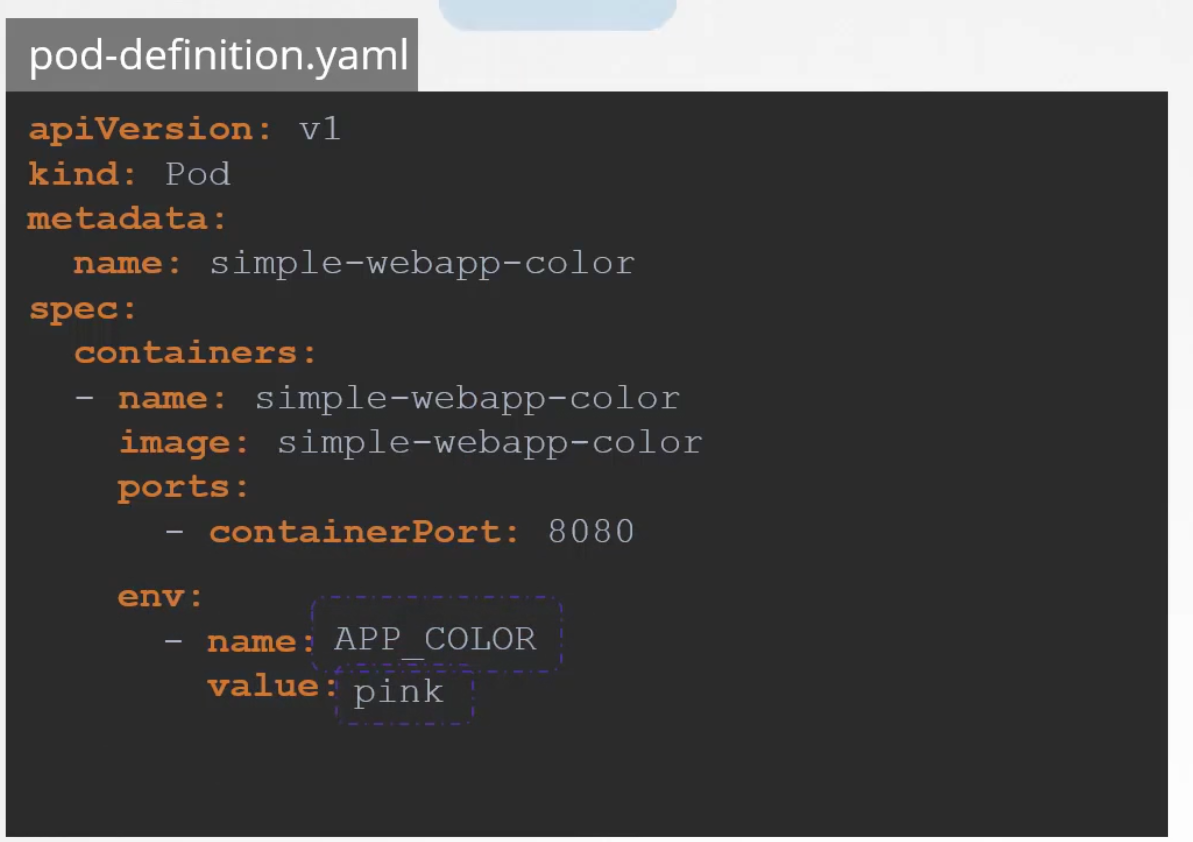
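A minimal sketch of the override, assuming a busybox image and a hypothetical pod name sleeper-pod: command replaces the image's ENTRYPOINT and args replaces its CMD, so the container runs sleep 10.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: sleeper-pod            # hypothetical name
spec:
  containers:
    - name: sleeper
      image: busybox:1.28
      command: ["sleep"]       # overrides ENTRYPOINT in the image
      args: ["10"]             # overrides CMD in the image
```

If you set only args, the image's ENTRYPOINT still runs, now with your arguments; if you set only command, the image's CMD is ignored.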

ENV variables in kubernetes

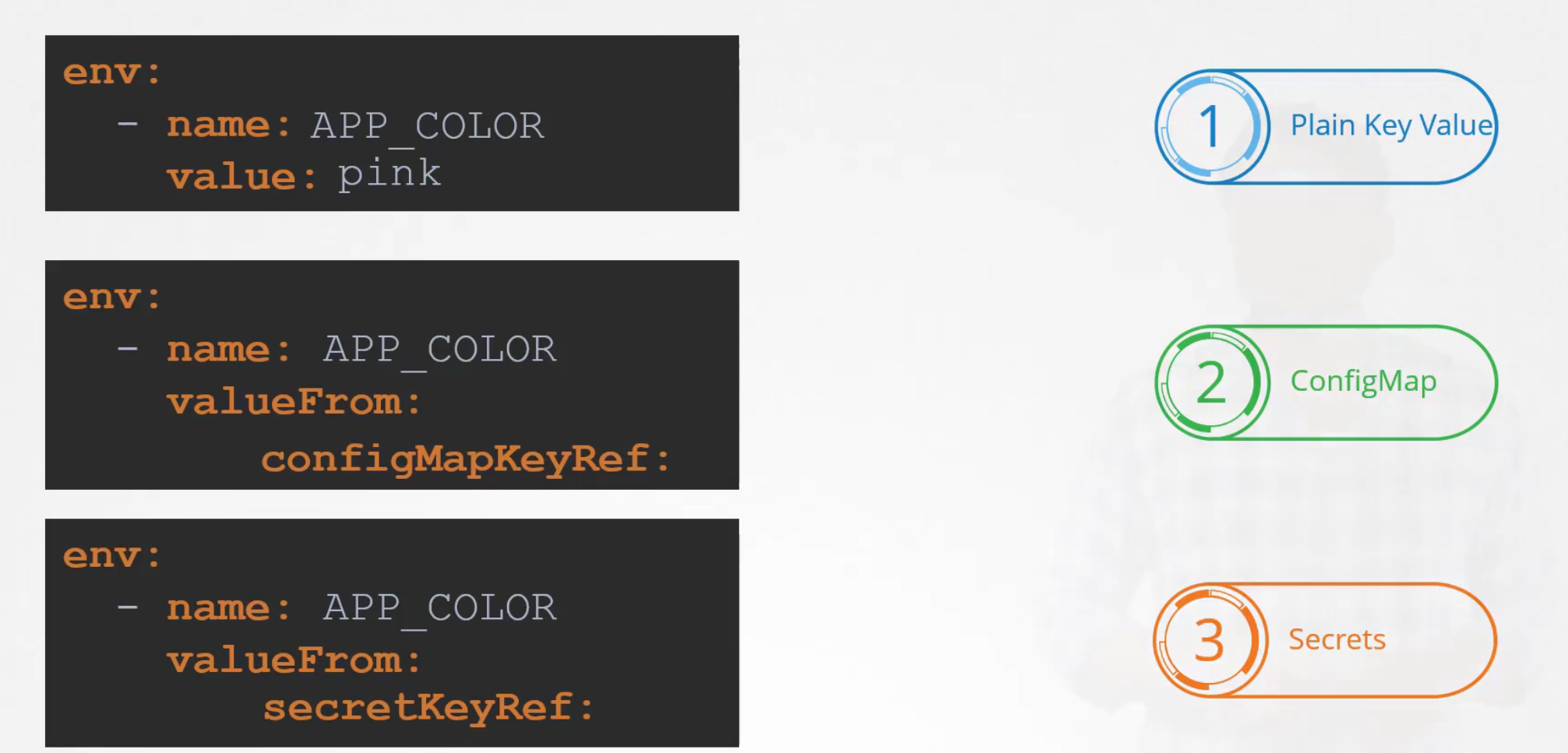

We can specify the environment variable name and values in the yaml file

That’s a direct way but we can also share values from configMap or SecretKey. The difference is, instead of value, we can write valueFrom and then refer to the configMap or SecretKey.

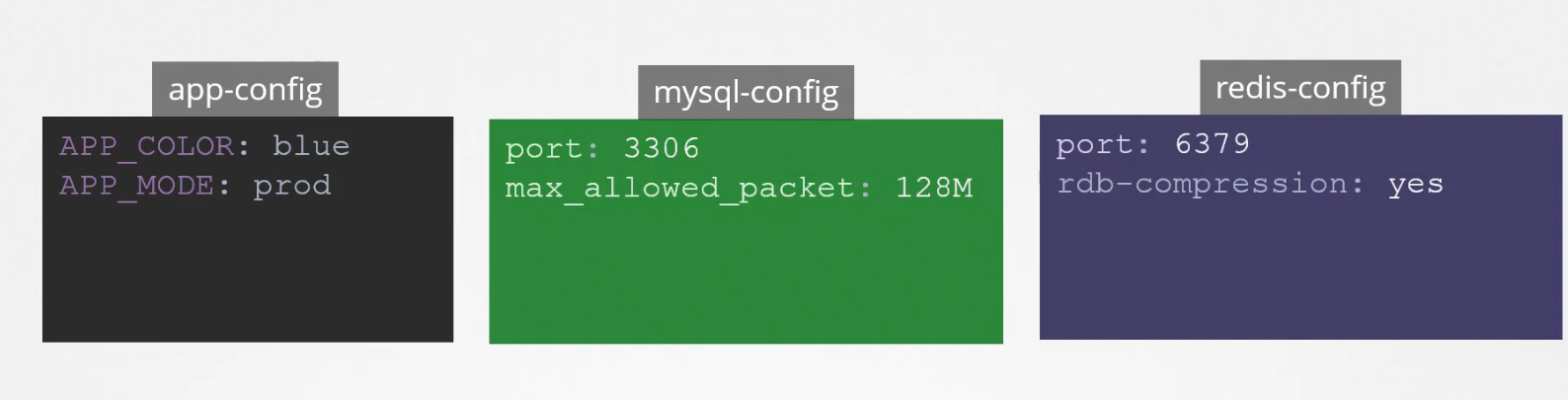
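As an illustration (the pod name and image are assumptions, and db-secret is assumed to already exist with a DB_Password key), one variable below is set directly and one is read from a Secret via valueFrom:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: simple-webapp-color    # hypothetical name
spec:
  containers:
    - name: webapp
      image: nginx             # any image works for this illustration
      env:
        # Direct value written inline
        - name: APP_COLOR
          value: blue
        # Value read from the DB_Password key of the db-secret Secret
        - name: DB_Password
          valueFrom:
            secretKeyRef:
              name: db-secret
              key: DB_Password
```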

ConfigMaps

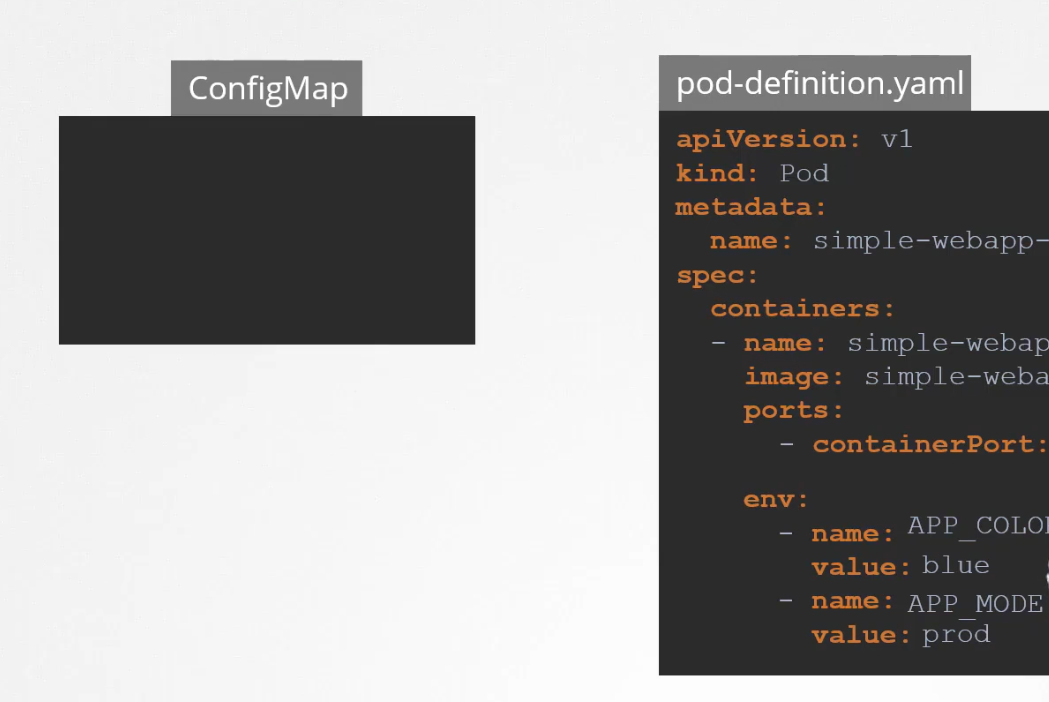

When we have lots of pod definition files, managing the environment variables inside each of them becomes difficult.

To solve this, rather than keeping environment variables in the pod definition file, we can move them into ConfigMaps and reference them from the pods.

A ConfigMap stores data as key-value pairs.

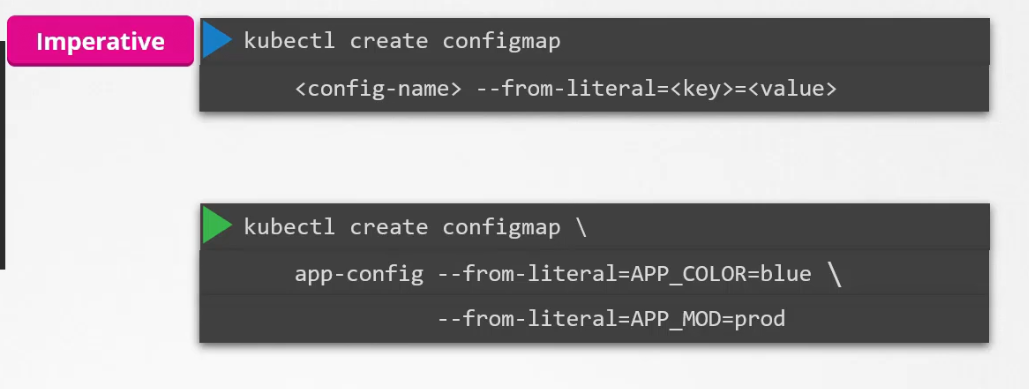

But how do we create a ConfigMap?

The imperative way is kubectl create configmap, followed by the ConfigMap name and the key-value pairs, for example kubectl create configmap <config-name> --from-literal=<key>=<value>

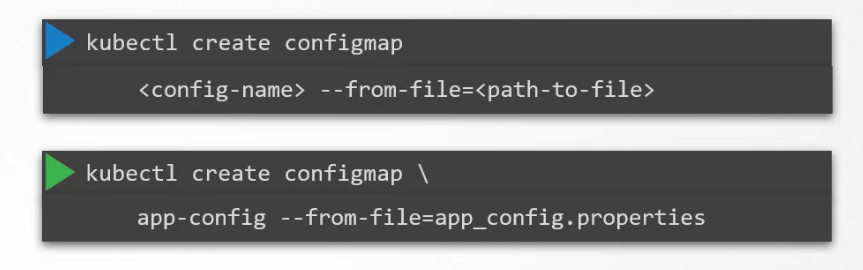

Another way is to create the ConfigMap from a file with --from-file, pointing to the file that contains the key-value pairs. (Note: you may have hundreds of environment variables, so this way makes things easier.)

Here, app_config.properties is the file that holds the environment variable key-value pairs.

Finally, we can write a config-map.yaml file and create the ConfigMap declaratively. Naming the ConfigMap matters, since we can have several of them for different purposes.

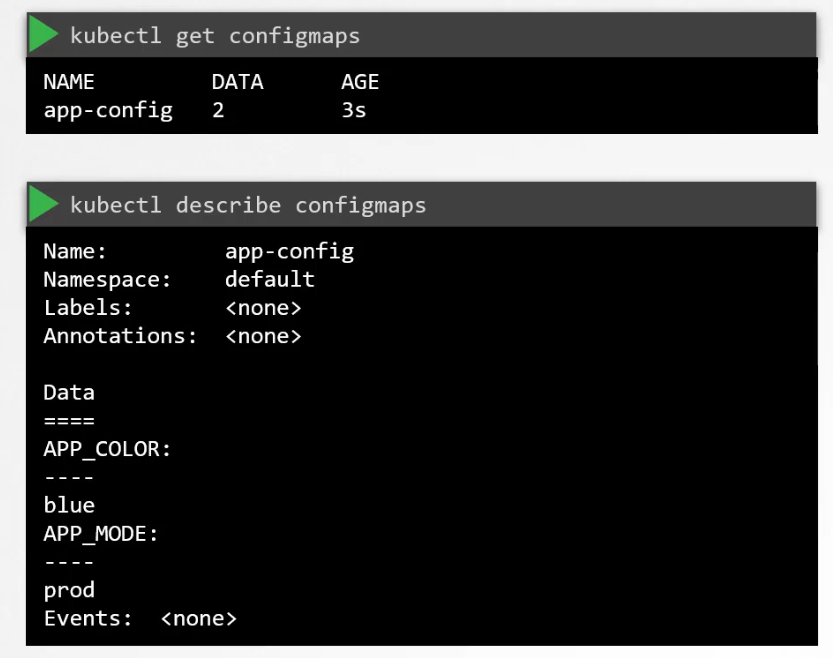
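A sketch of the declarative config-map.yaml, assuming the app-config name and APP_COLOR key used later in this section (the APP_MODE key is hypothetical). Create it with kubectl create -f config-map.yaml:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  # Each entry under data is one key-value pair
  APP_COLOR: blue
  APP_MODE: prod               # hypothetical extra key
```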

How do we check the details of a ConfigMap?

Use kubectl get configmaps to list them and kubectl describe configmaps to see their data:

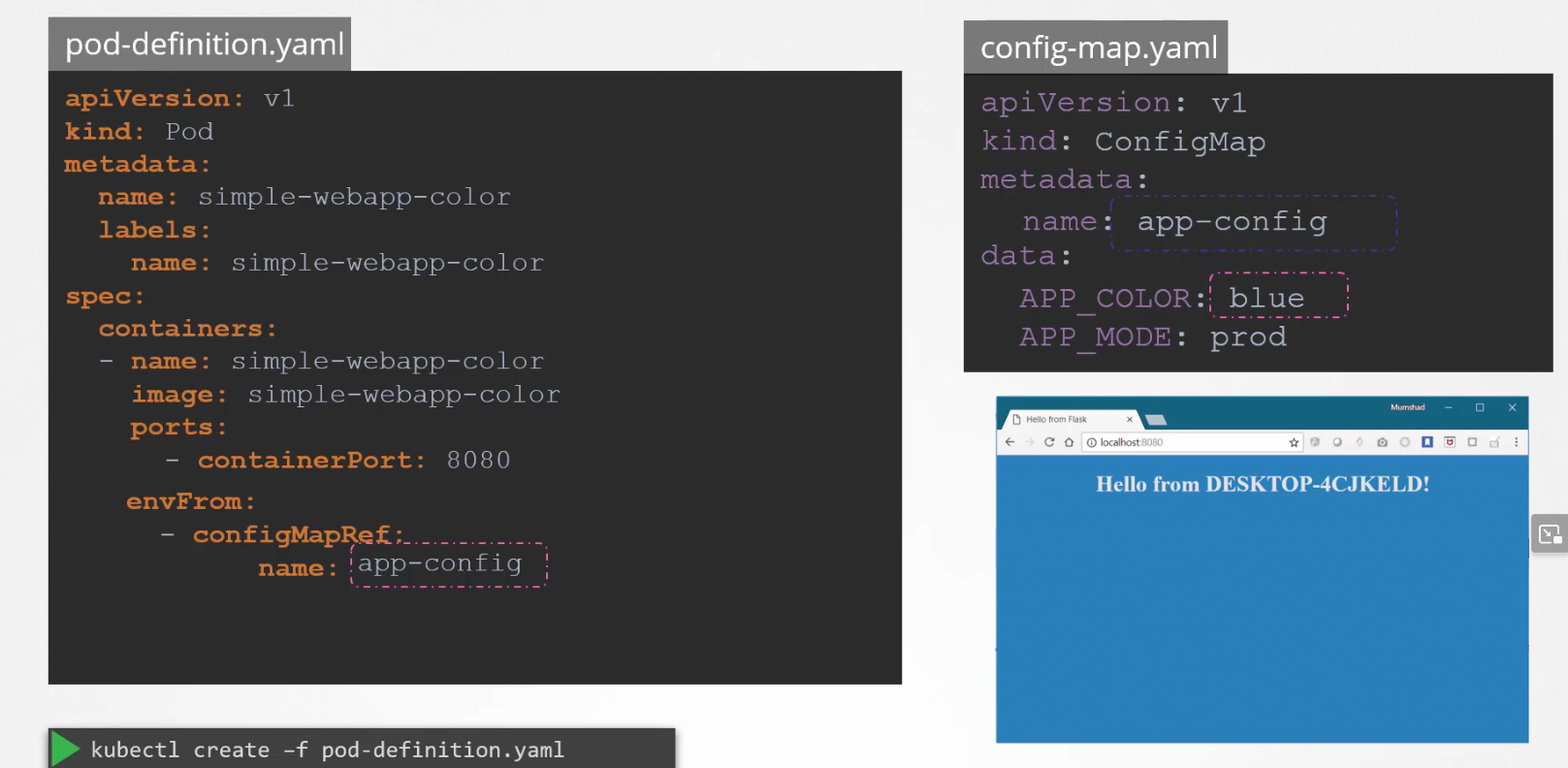

But how do we connect the ConfigMap to the pod definition file?

In the container spec of the pod definition, use envFrom with a configMapRef that names the ConfigMap.

Once the pod is launched, the app color will be blue, because the pod refers to the app-config ConfigMap, which sets the environment variable APP_COLOR to blue.

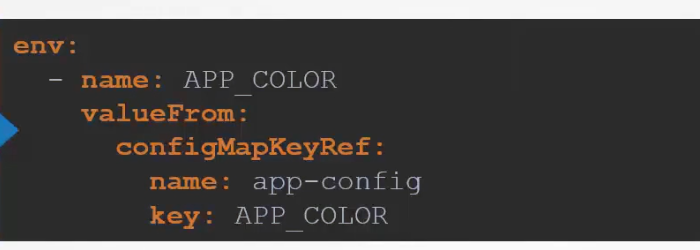
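A minimal pod sketch for this, assuming the app-config ConfigMap exists (the pod name and image are illustrative):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: simple-webapp-color    # hypothetical name
spec:
  containers:
    - name: webapp
      image: nginx             # illustrative image
      envFrom:
        # Every key in app-config becomes an environment variable
        - configMapRef:
            name: app-config
```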

Or, we could declare a single environment variable and take its value from a ConfigMap using valueFrom and configMapKeyRef:

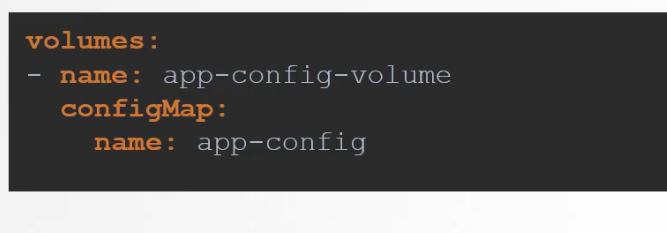
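The relevant part of the container spec might look like this (a fragment, assuming the same app-config ConfigMap); only the APP_COLOR key is injected:

```yaml
env:
  - name: APP_COLOR
    valueFrom:
      configMapKeyRef:
        name: app-config       # ConfigMap to read from
        key: APP_COLOR         # key inside that ConfigMap
```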

Or, we could inject that as a volume.
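A sketch of the volume approach, assuming the app-config ConfigMap and a hypothetical mount path /etc/app-config. Each key in the ConfigMap shows up as a file named after the key:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: simple-webapp-color    # hypothetical name
spec:
  containers:
    - name: webapp
      image: nginx             # illustrative image
      volumeMounts:
        - name: app-config-volume
          mountPath: /etc/app-config   # hypothetical path
  volumes:
    - name: app-config-volume
      configMap:
        name: app-config
```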

Secrets

An app may need confidential data such as the database host, user and password, and we can't simply keep them in plain text in the YAML file.

We could keep them in a ConfigMap, but a ConfigMap stores data in plain text, so it's not a safe place for this kind of variable.

For this kind of data, Kubernetes provides a dedicated object: the Secret.

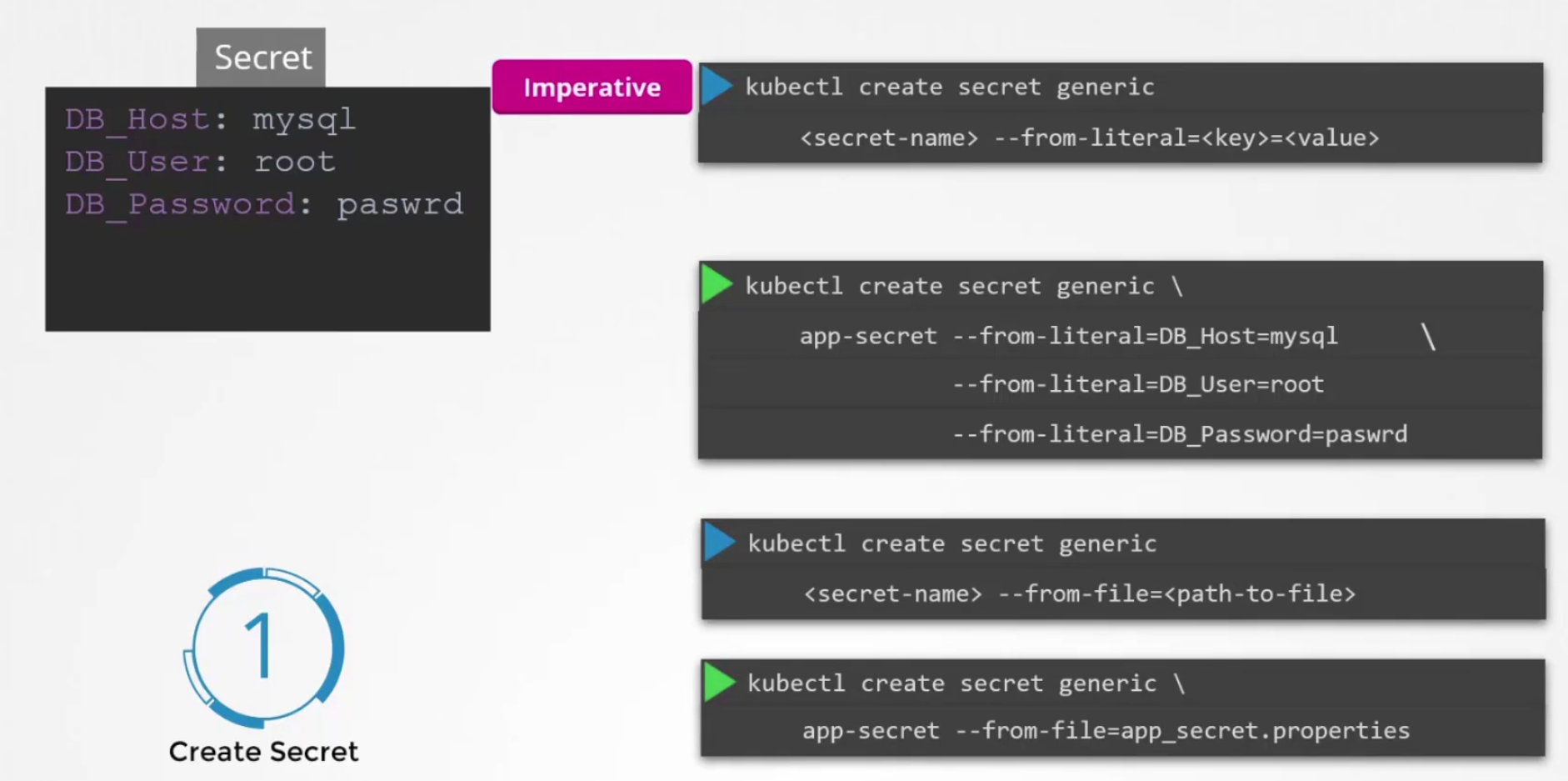

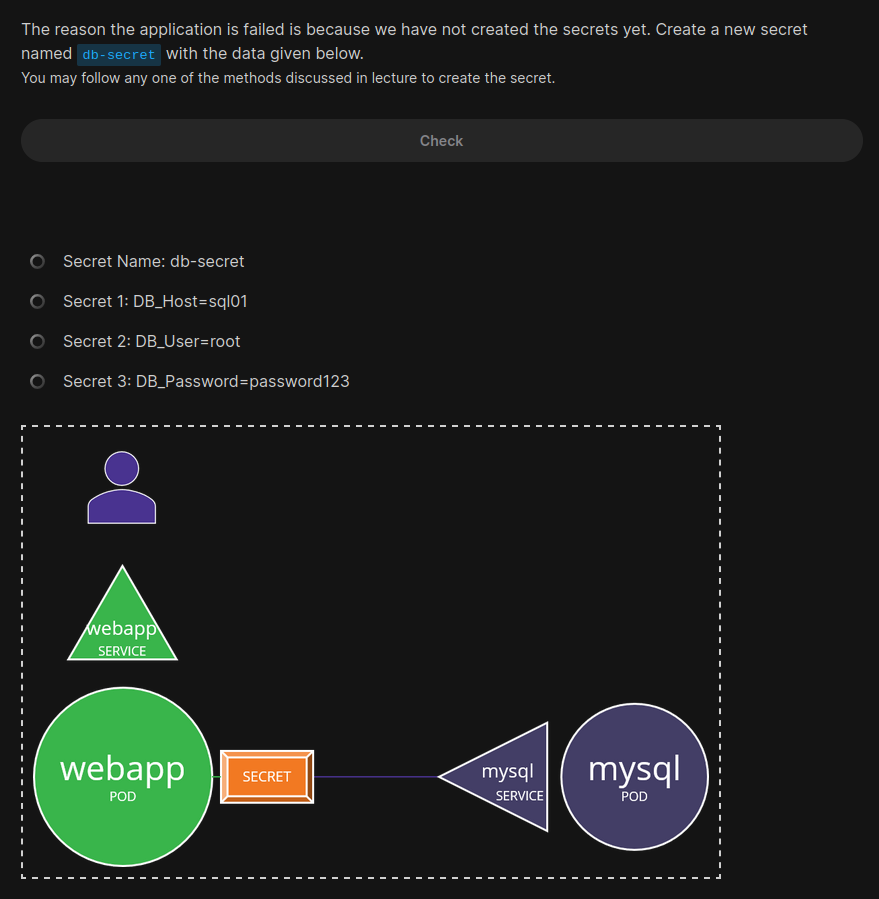

Now, how do we create a Secret?

With kubectl create secret generic <secret-name>, we can either set the values directly with --from-literal, or use --from-file and point to a file that holds the variables.

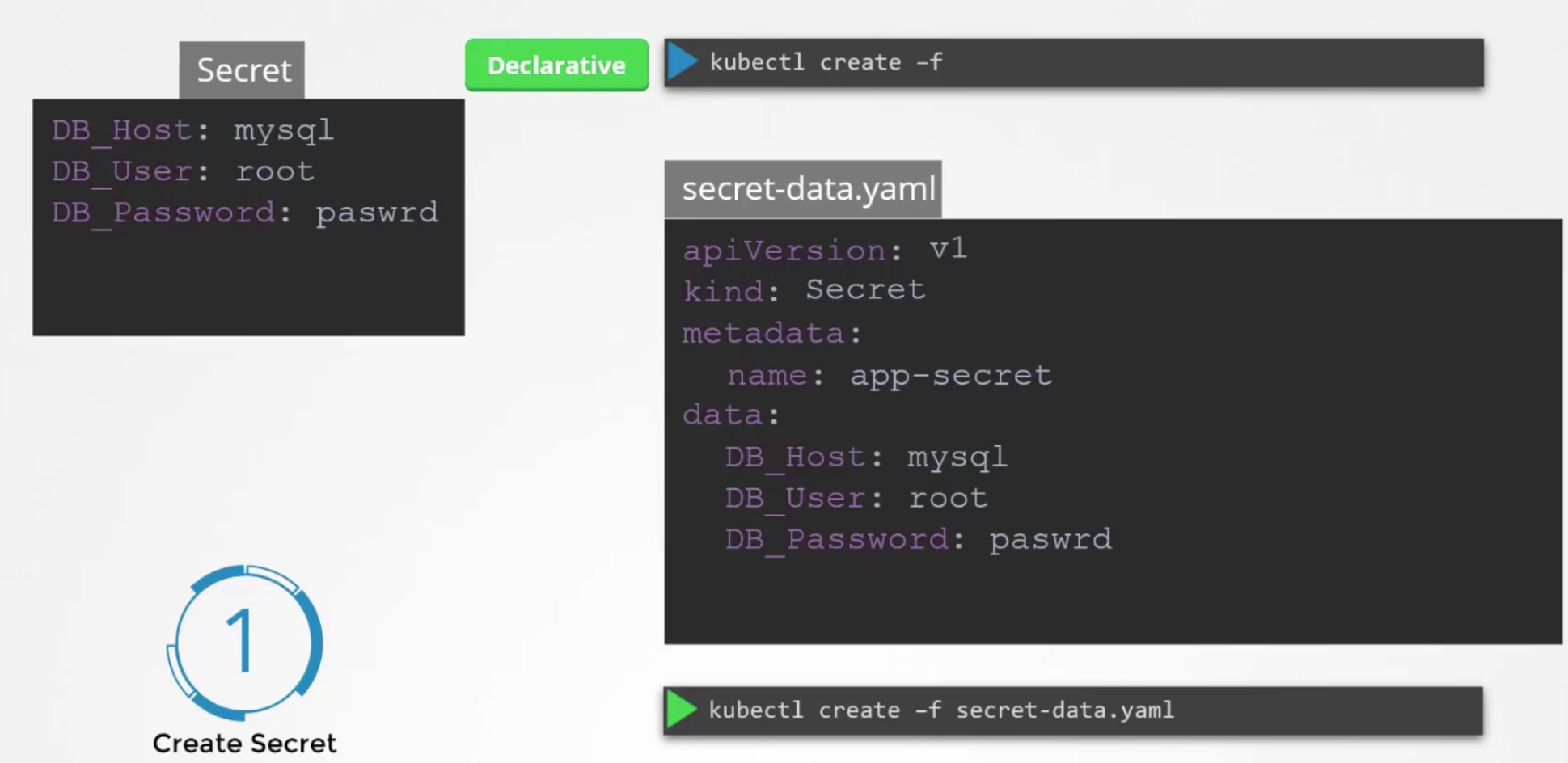

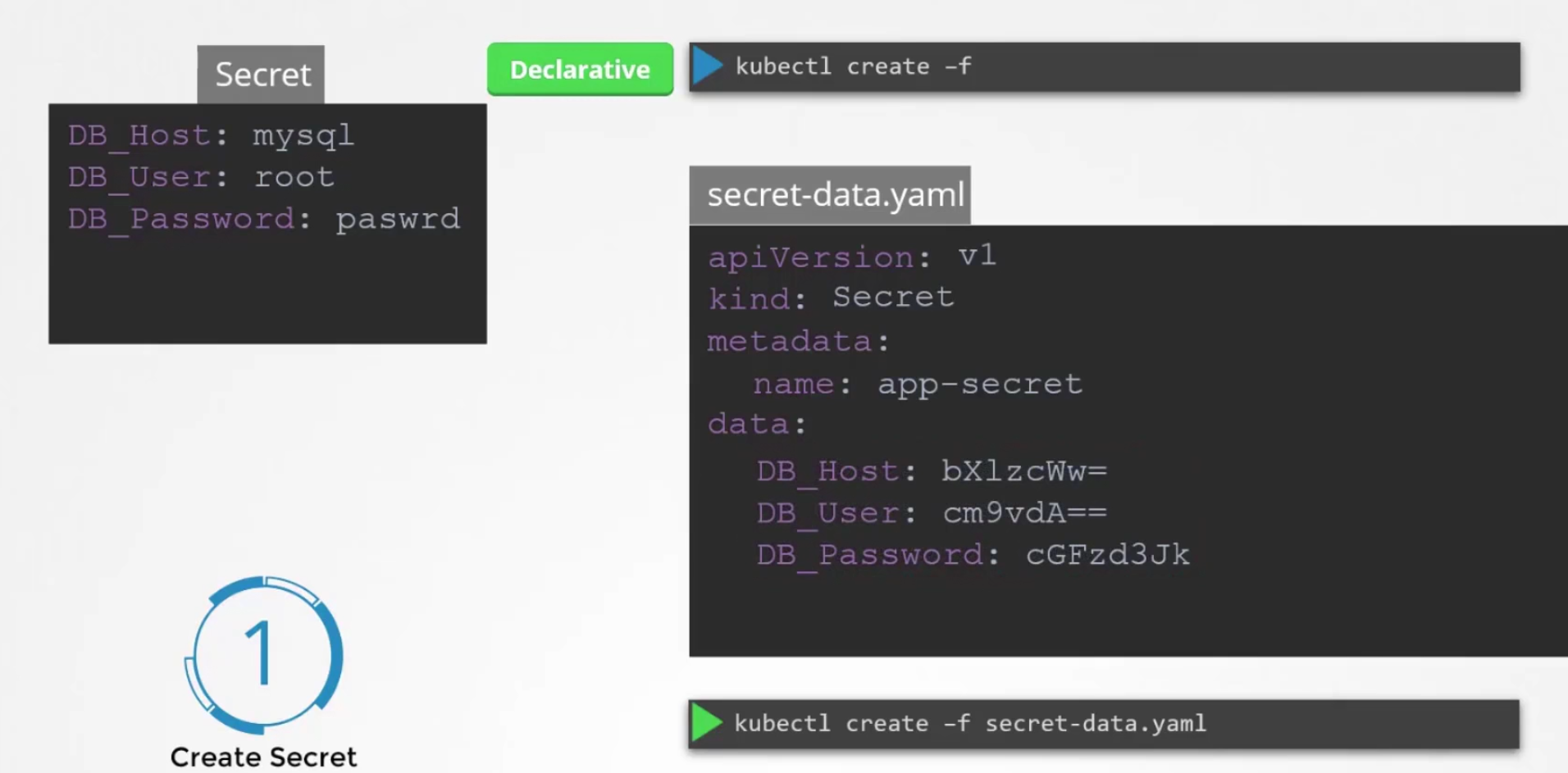

Or, we can use a YAML file.

Here, you can see we keep the keys and values under data:

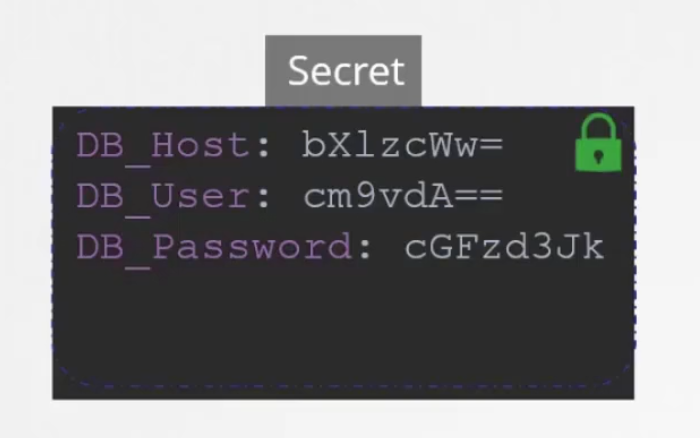

But a Secret's data field expects base64-encoded values, not plain text (plain text belongs under stringData instead). So, instead of plaintext, we write the encoded text here.

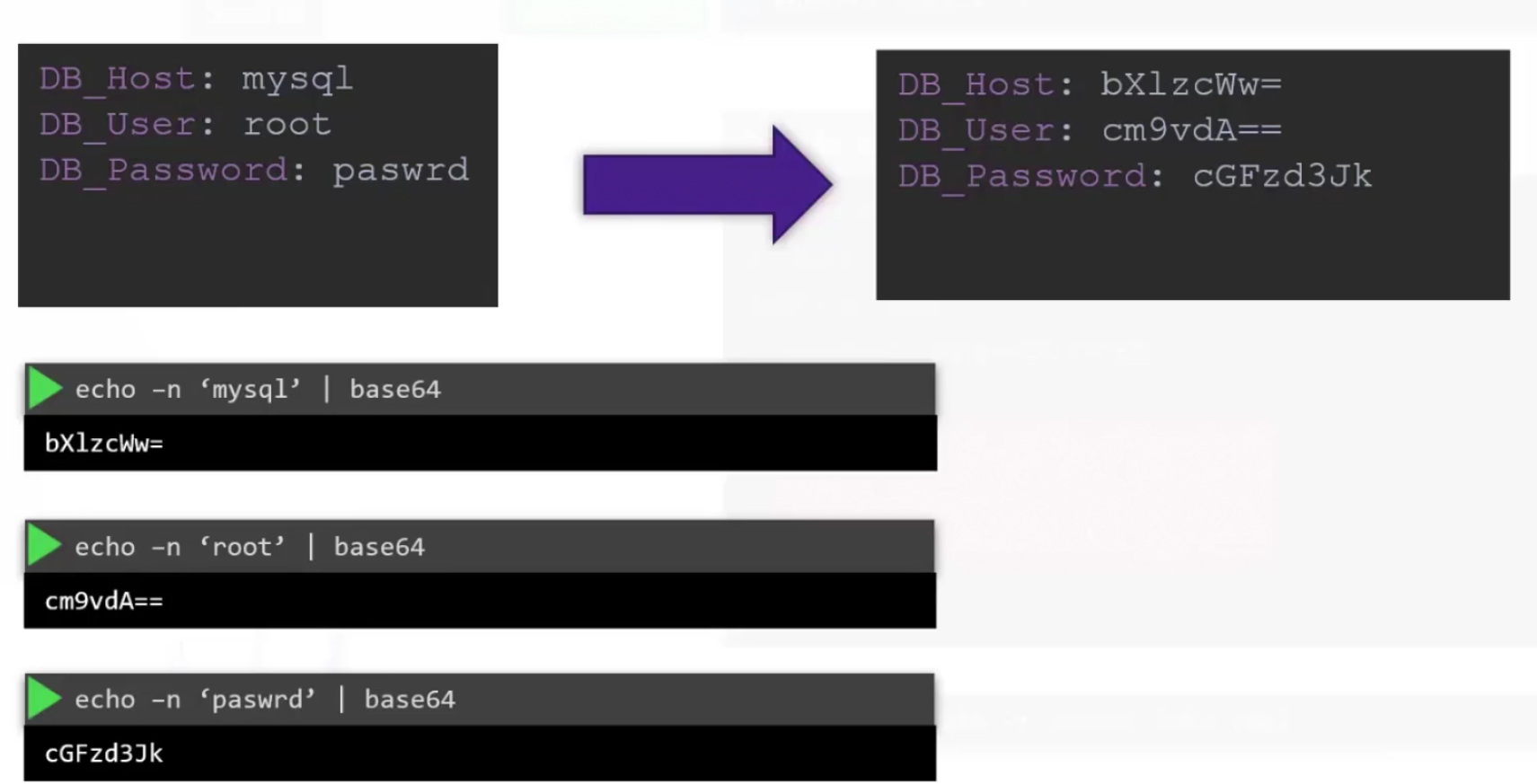

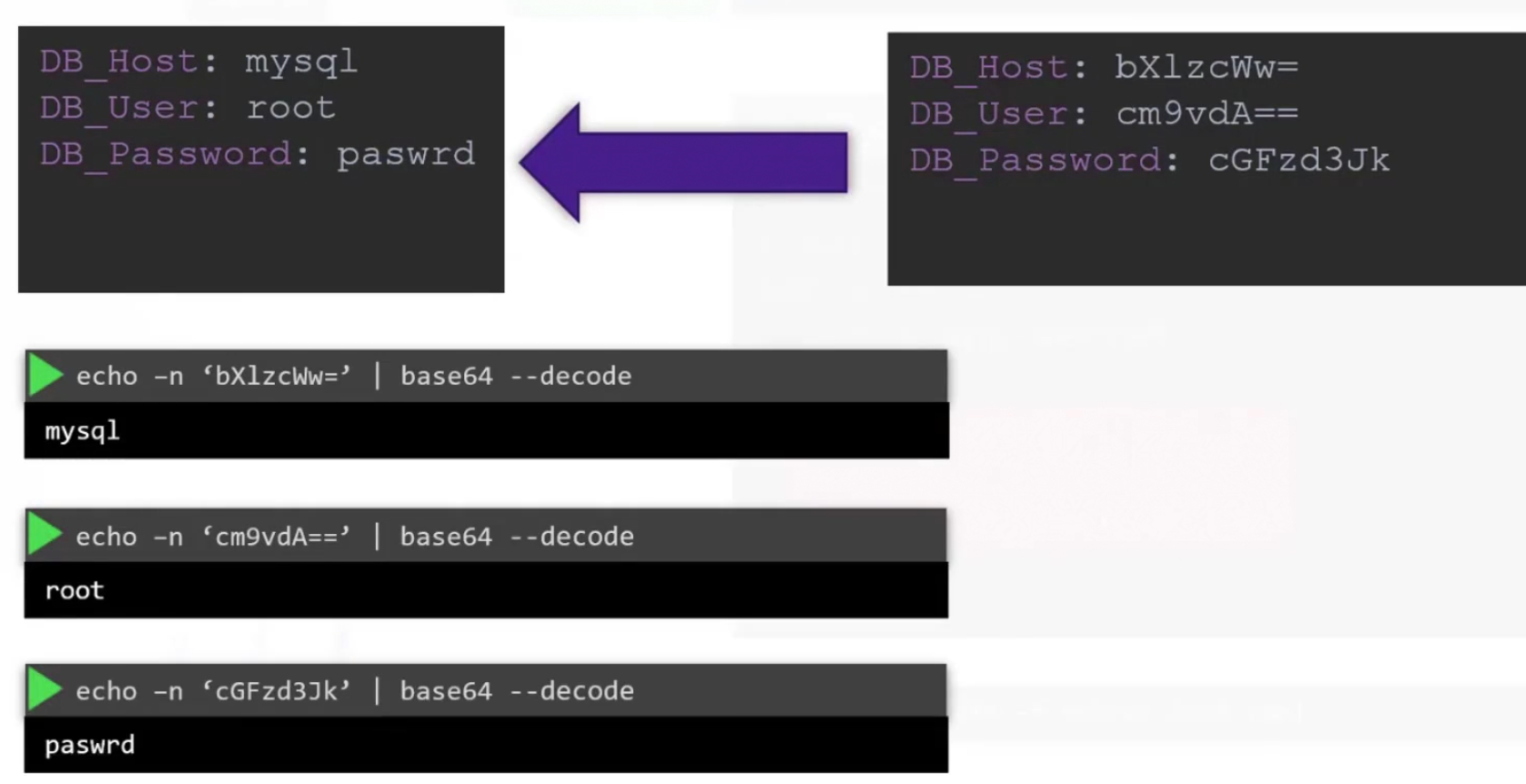

But how do we encode the text?

We can encode any text with echo -n '<text_to_encode>' | base64 (the -n stops echo from adding a trailing newline, which would otherwise be encoded too).

Then we paste the encoded values into the YAML file.

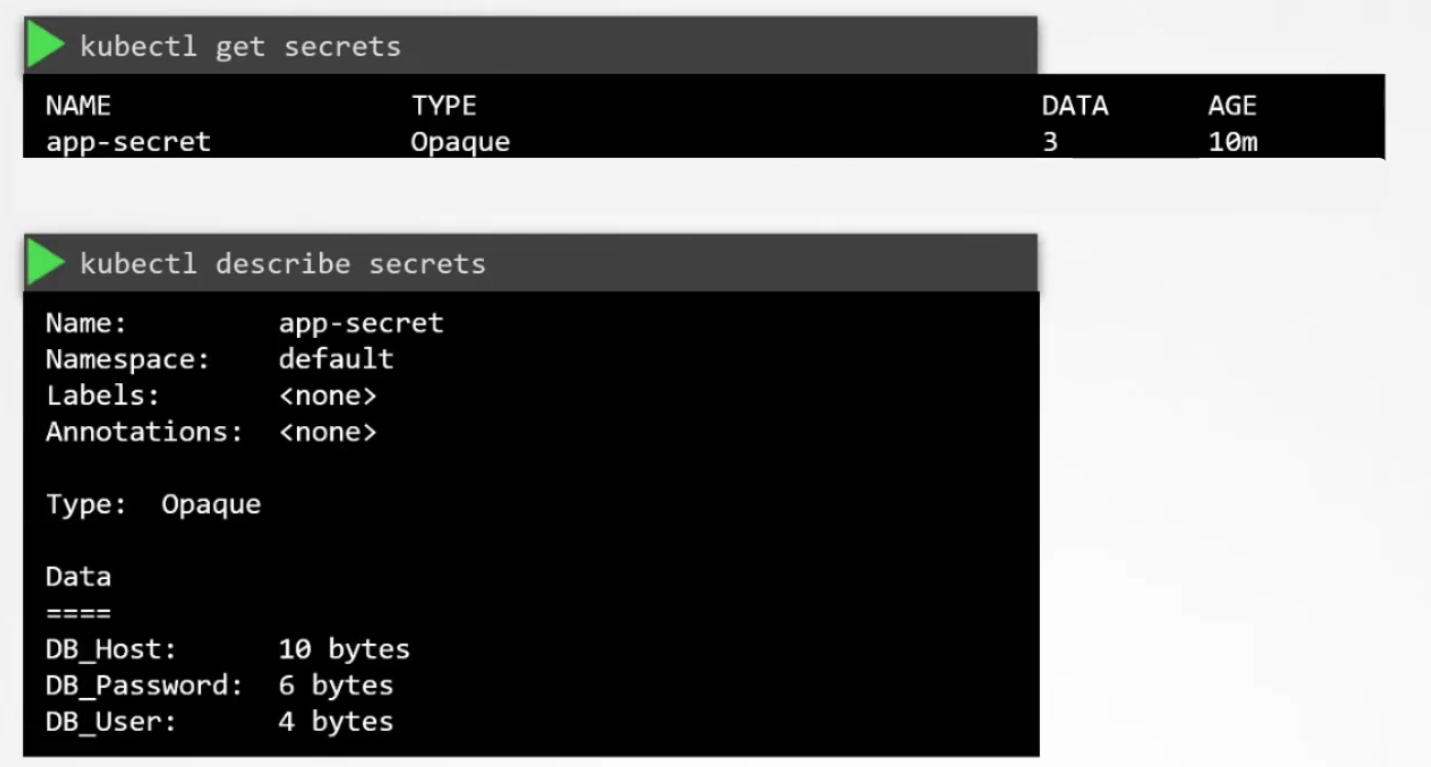
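For example, the db-secret used in the hands-on below could be written declaratively like this, with each value produced by echo -n '<value>' | base64 (a sketch; type: Opaque is the default type for generic Secrets):

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: db-secret
type: Opaque
data:
  DB_Host: c3FsMDE=                # echo -n 'sql01' | base64
  DB_User: cm9vdA==                # echo -n 'root' | base64
  DB_Password: cGFzc3dvcmQxMjM=    # echo -n 'password123' | base64
```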

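Once that's done, the Secret is created. We can list Secrets with kubectl get secrets and inspect one with kubectl describe secrets.

But describe doesn't show the values of the variables. How do we see them? One way is to print the Secret as YAML with kubectl get secret <secret-name> -o yaml: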

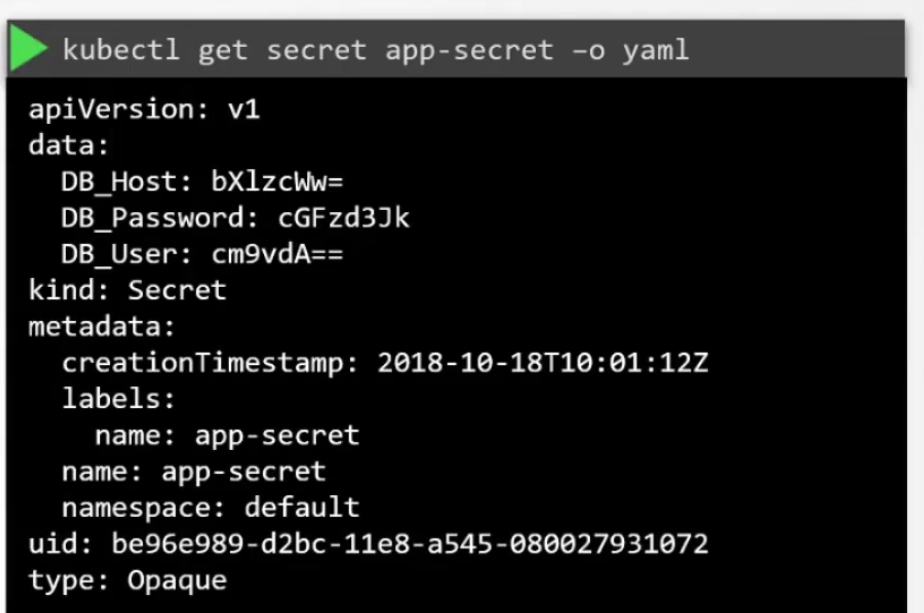

The values shown are still encoded. How do we decode them?

Use echo -n '<encoded-text>' | base64 --decode

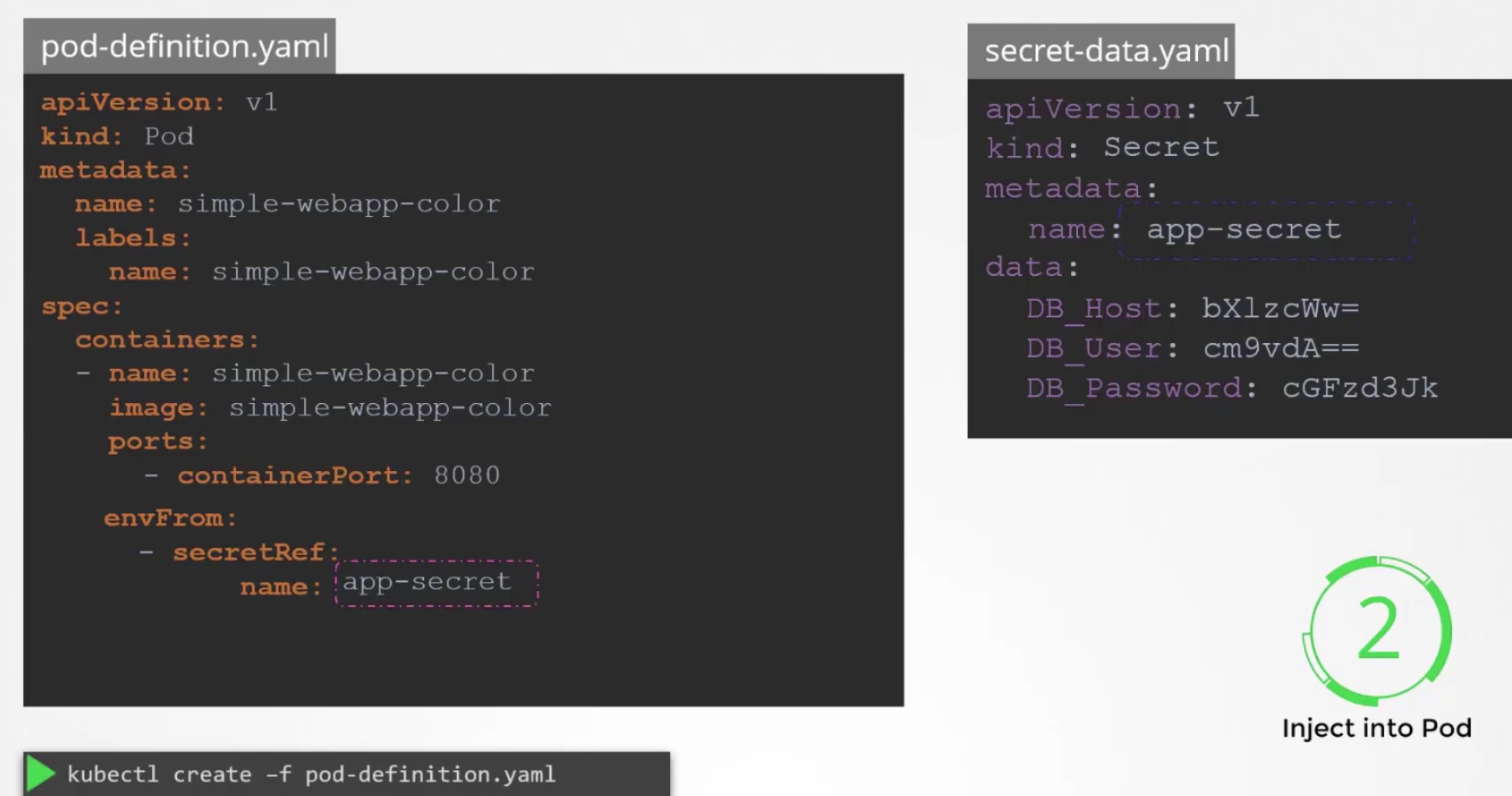

Now we have a Secret and need to connect it to the pod.

We can reference it with a secretRef under envFrom: in the container spec and then create the pod.

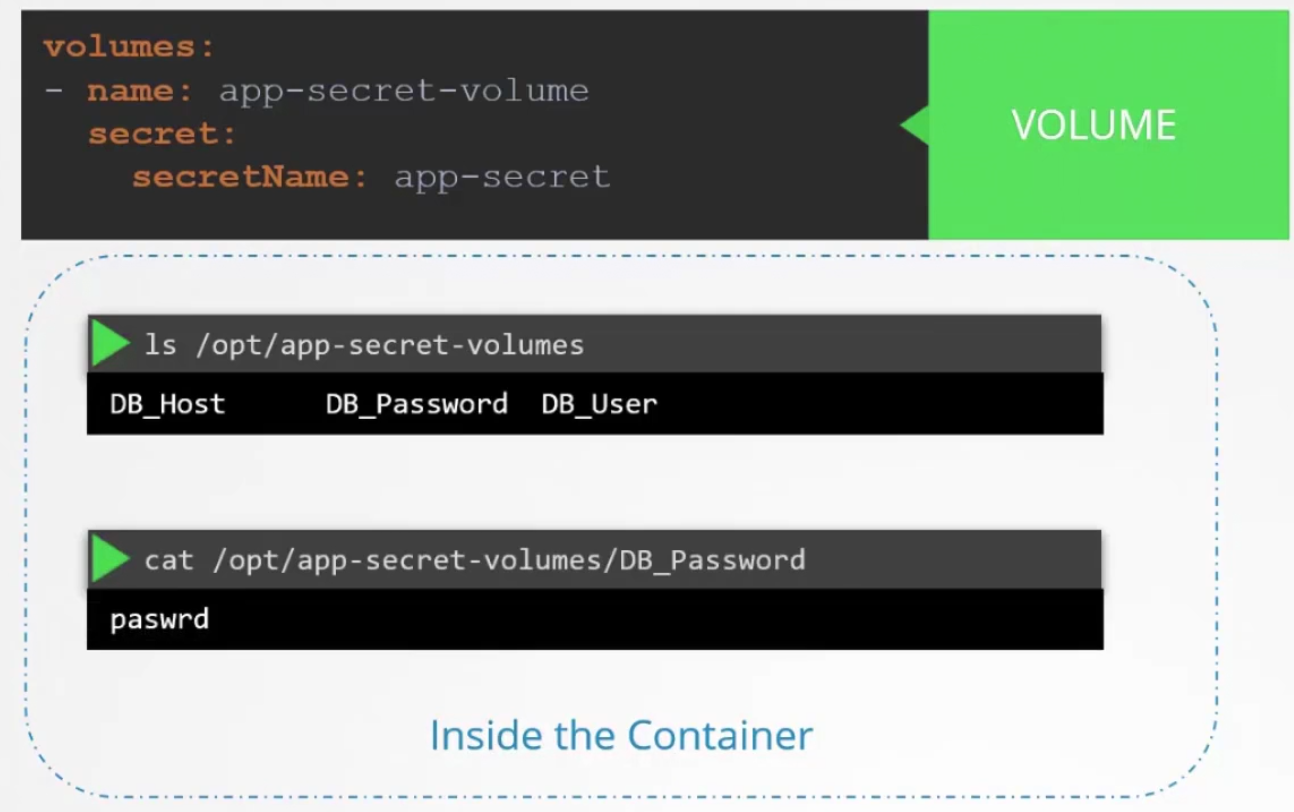

There are other ways to inject Secrets too, such as pulling a single key into one environment variable (secretKeyRef) or mounting the whole Secret as a volume.
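A sketch of the volume approach, assuming the db-secret Secret and a hypothetical mount path under /opt. Each key becomes a file whose content is the decoded value:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp-pod
spec:
  containers:
    - name: webapp
      image: nginx             # illustrative image
      volumeMounts:
        - name: app-secret-volumes
          mountPath: /opt/app-secret-volumes   # hypothetical path
          readOnly: true
  volumes:
    - name: app-secret-volumes
      secret:
        secretName: db-secret  # note: secretName, not name
```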

If we mount it as a volume, each key appears as a file inside the mount directory, so we can check them with:

ls /opt/<volume_file_name>

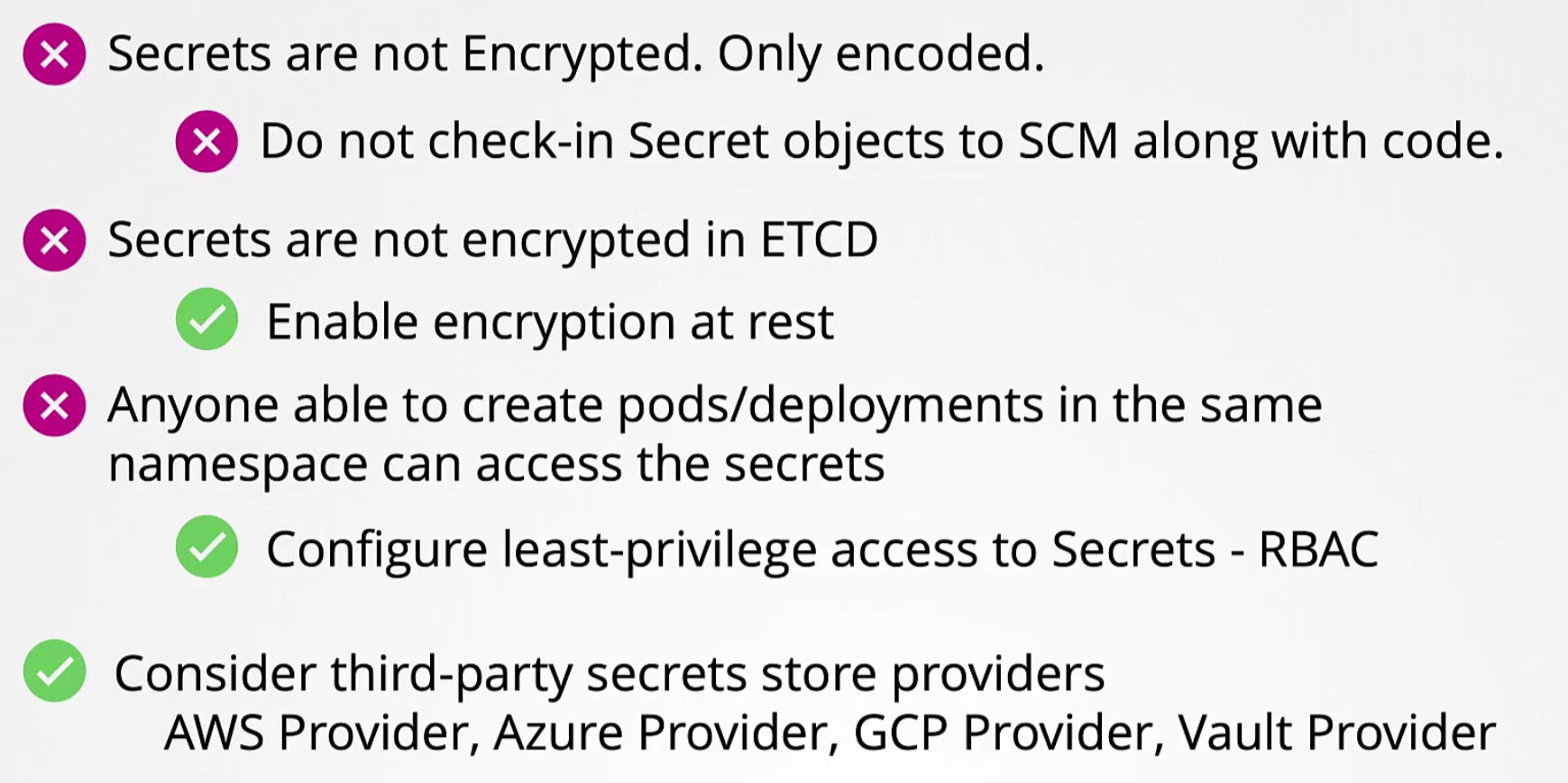

Each file contains the decoded value of one key. Also keep in mind that Secrets should not be committed to a GitHub repo, and that they are only encoded (not encrypted), so anyone who can read them can decode and use them.

So, consider external secret-store solutions such as the AWS Provider, Azure Provider, etc.

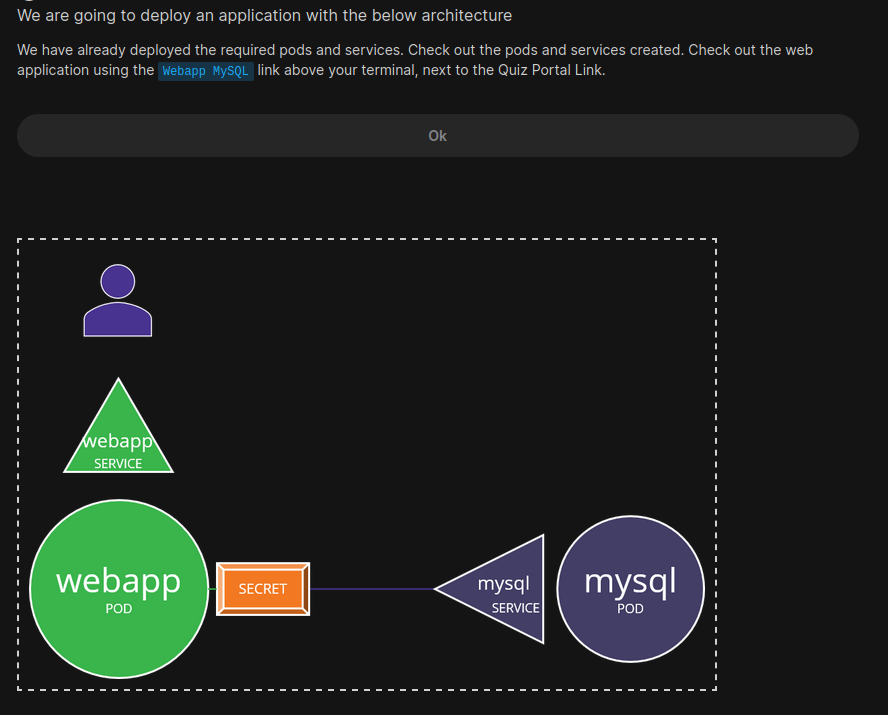

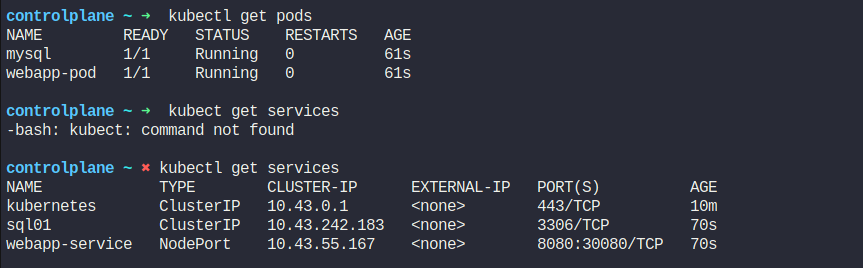

Hands-On:

Let's check the MySQL page:

Let’s do it in the terminal

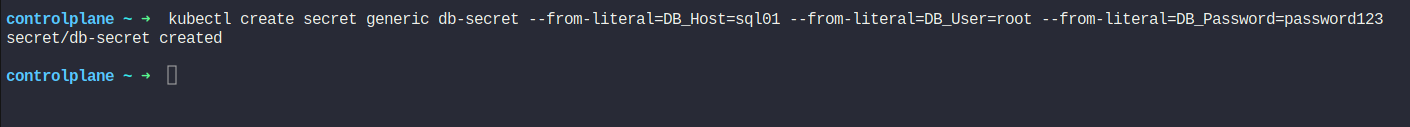

kubectl create secret generic db-secret --from-literal=DB_Host=sql01 --from-literal=DB_User=root --from-literal=DB_Password=password123

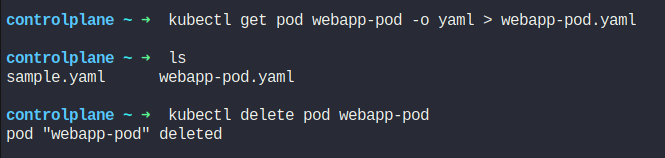

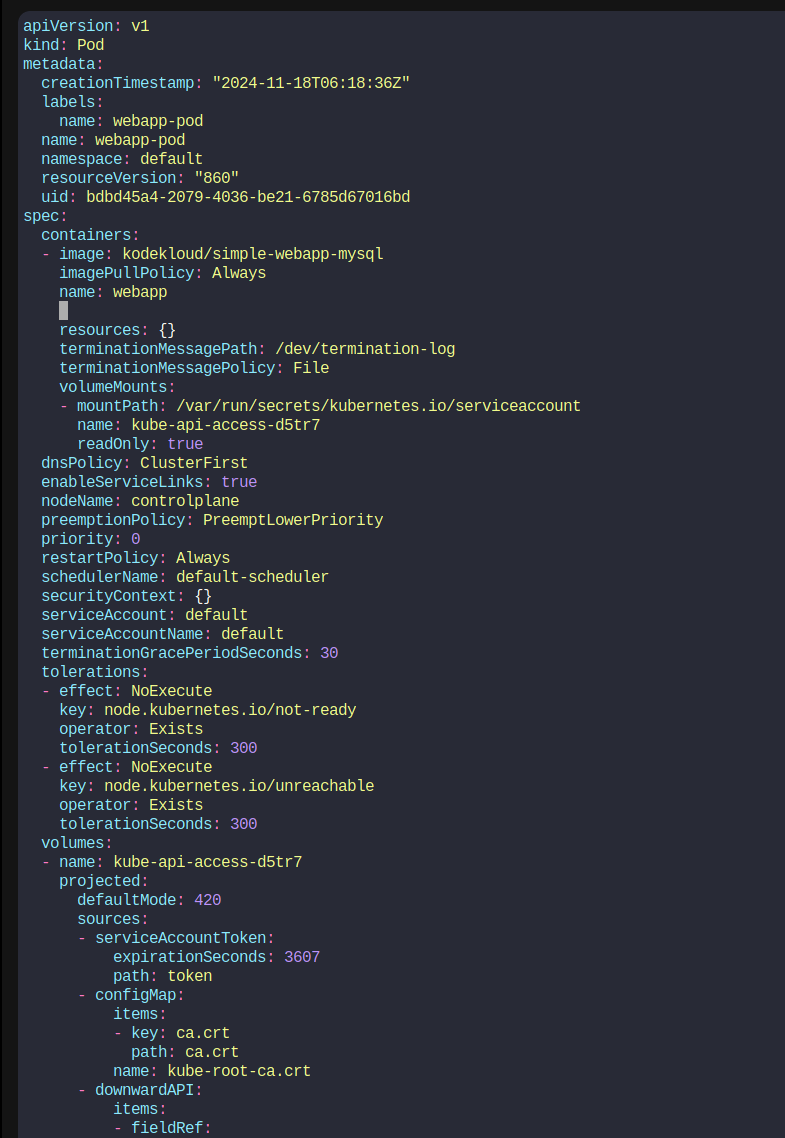

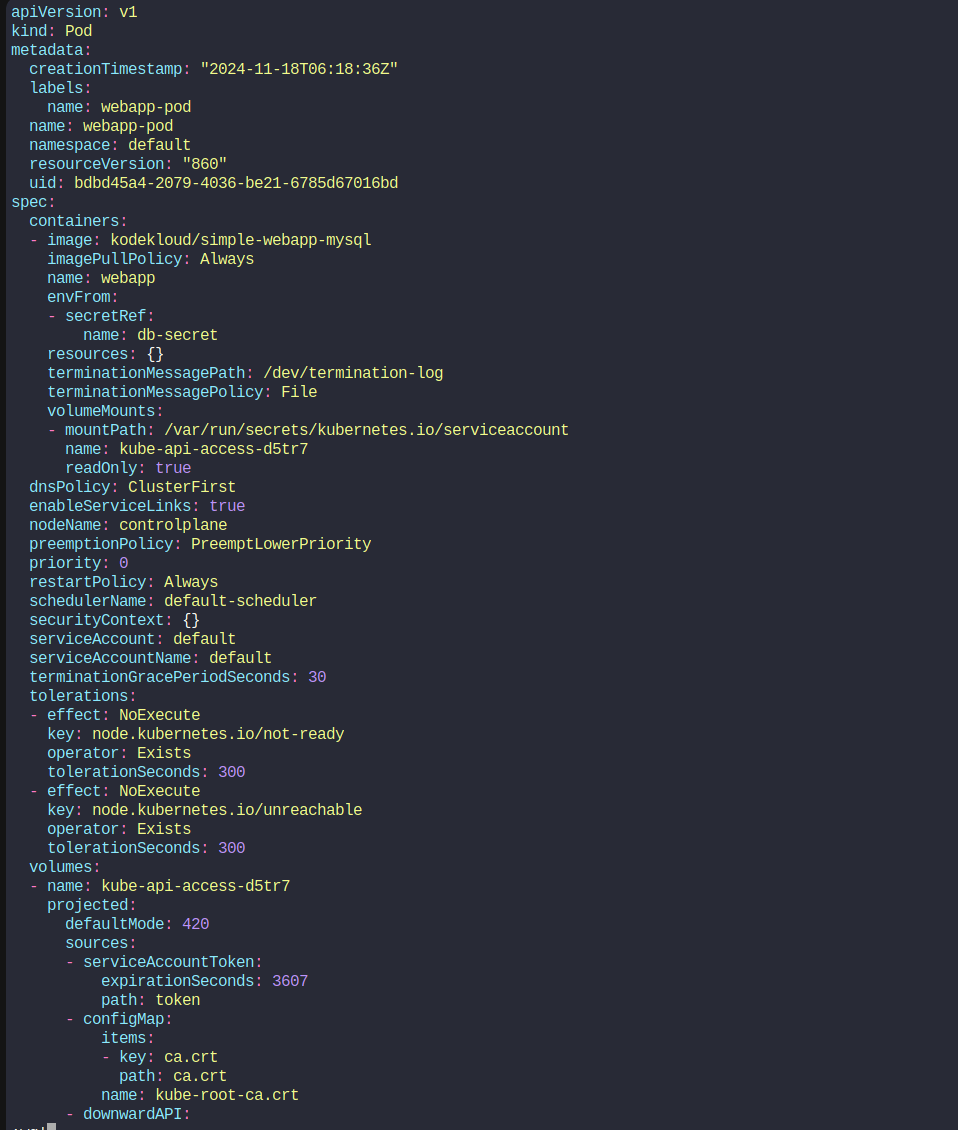

Now that we have created the Secret, we save the existing webapp-pod's configuration to a YAML file (for example with kubectl get pod webapp-pod -o yaml > webapp-pod.yaml) and then delete the pod.

vi webapp-pod.yaml

We modified the file to include the following under the container:

```yaml
envFrom:
  - secretRef:
      name: db-secret
```

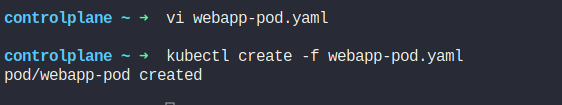

Then we can create the pod

It worked!

Multi-container Pods

So far, we have been dealing with a microservices architecture.

Now, assume that a service needs two components at all times: a web server and a logging agent.

We don't want to merge them into one, but we do want to manage them so that they scale up and down together.

So how do we design a pod so that our containers are created together, scale together and share resources?

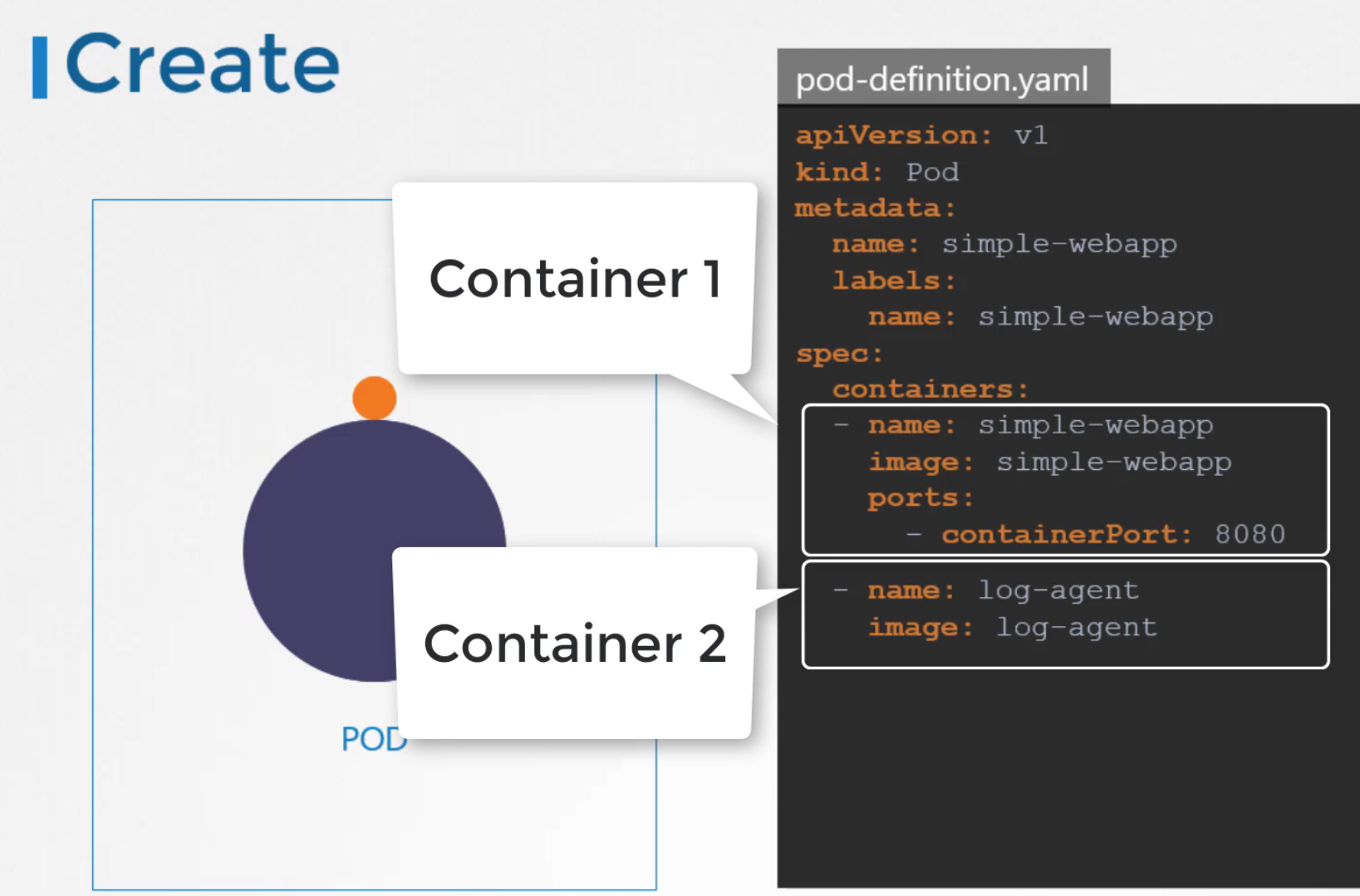

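It's simple! Just add them to the containers list within the pod. Containers in the same pod share the same network namespace (they can reach each other on localhost) and can share storage volumes.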
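A minimal sketch of such a pod; the names are hypothetical and the log-agent container is only a placeholder that sleeps instead of shipping logs:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: simple-webapp          # hypothetical name
spec:
  containers:
    # Main web server
    - name: web-app
      image: nginx
      ports:
        - containerPort: 80
    # Placeholder for a logging agent running alongside it
    - name: log-agent
      image: busybox:1.28
      command: ["sh", "-c", "while true; do sleep 3600; done"]
```

With two containers, kubectl logs needs the container name, for example kubectl logs -f simple-webapp log-agent.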

Hands-on:

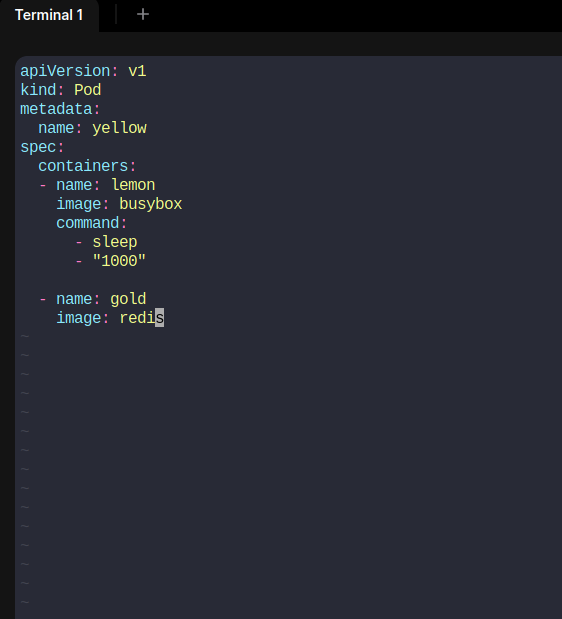

Let's create a yellow.yaml file and paste in the pod definition.

Done!

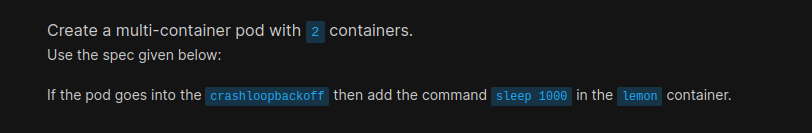

Let’s do another task:

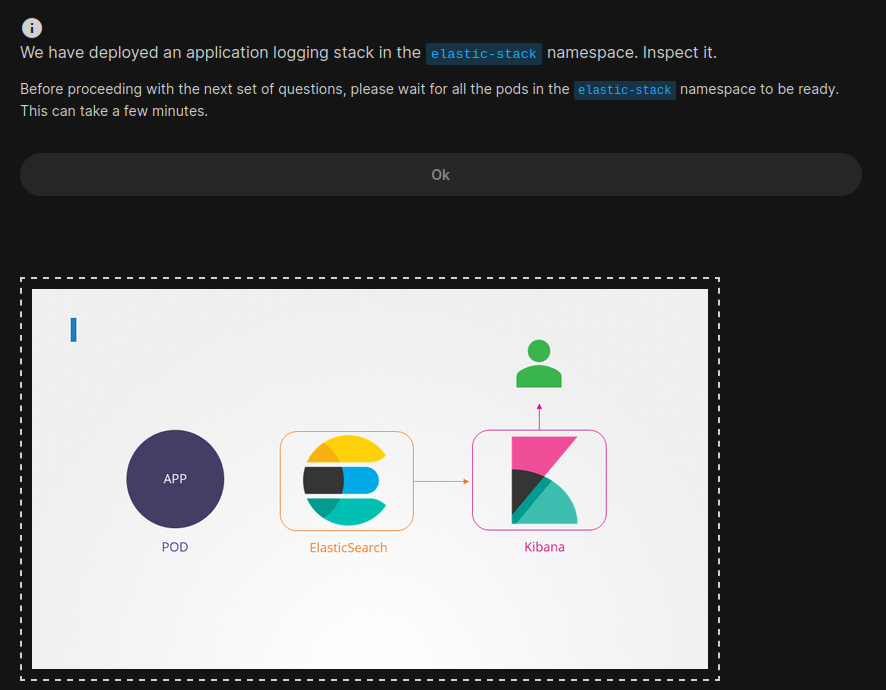

This is how Kibana looks:

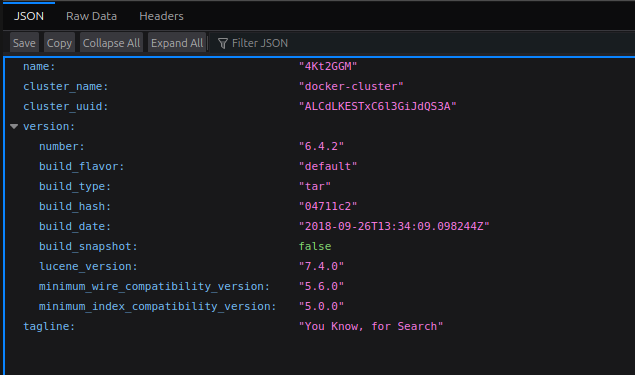

This is Elasticsearch:

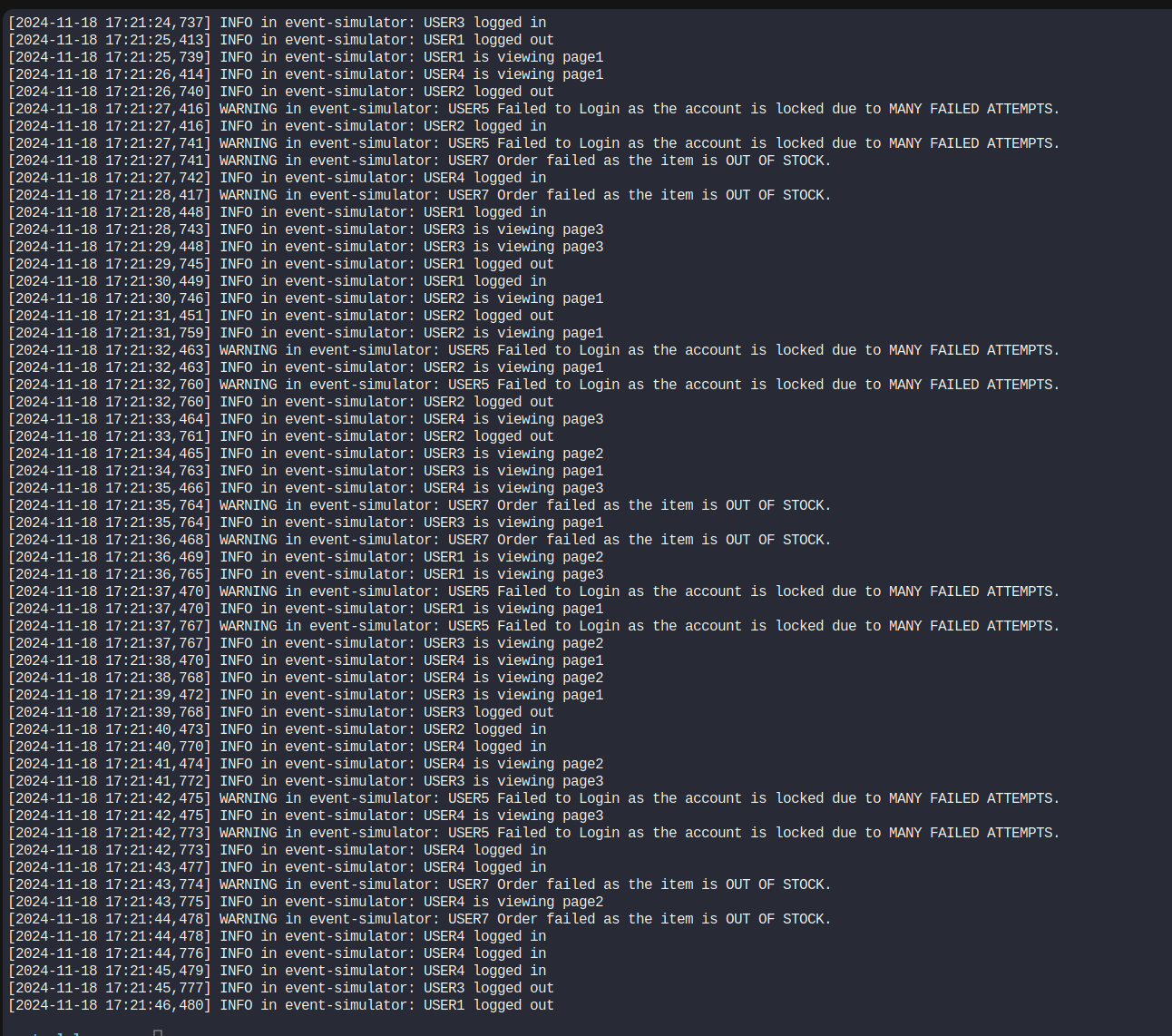

Then, using this command, we checked the log output kept in /log/app.log:

kubectl -n elastic-stack exec -it app -- cat /log/app.log

User5 is having issues here.

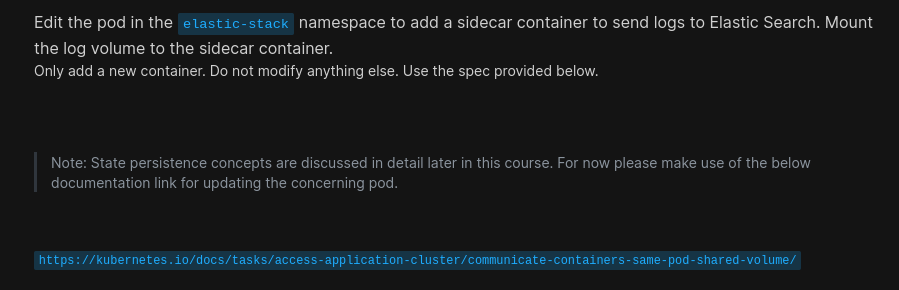

Our task now is:

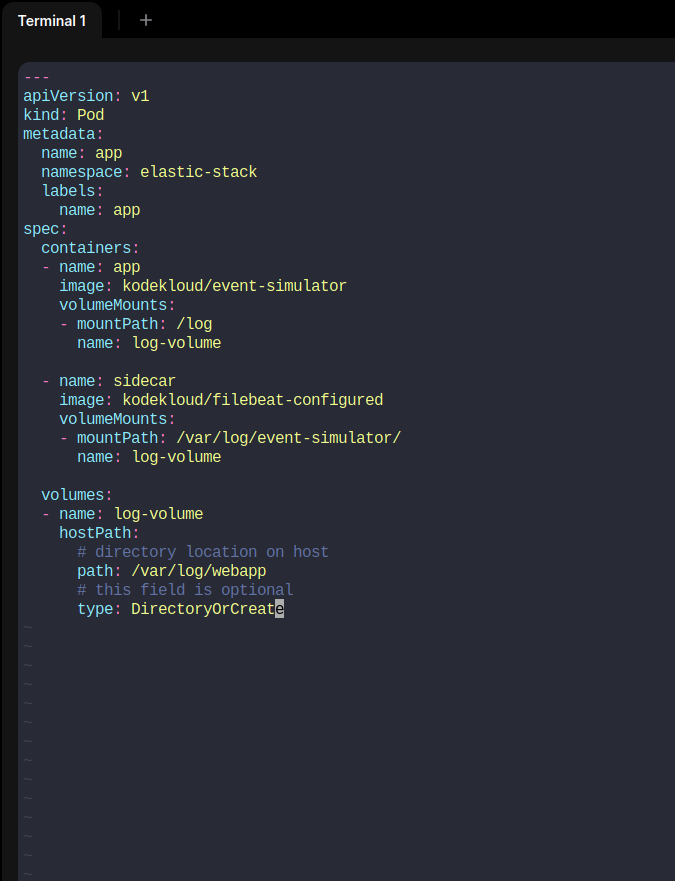

We can now create a YAML file with vi <yaml_file_name>

Then we deleted the existing app pod and created the new pod from this file.

There are 3 common patterns when it comes to designing multi-container PODs. The first, and what we just saw with the logging service example, is known as a sidecar pattern. The others are the adapter and the ambassador pattern.

Init Containers

In a multi-container pod, each container is expected to run a process that stays alive for the whole lifecycle of the POD.

For example, in the multi-container pod we talked about earlier, with a web application and a logging agent, both containers are expected to stay alive at all times.

The process in the log agent container is expected to stay alive as long as the web application is running. If either of them fails, Kubernetes restarts the failed container (according to the pod's restartPolicy, which defaults to Always).

But at times you may want to run a process that runs to completion in a container. For example, a process that pulls code or a binary from a repository that will be used by the main web application.

That is a task that runs only once, when the pod is first created. Another example is a process that waits for an external service or database to be up before the actual application starts.

That's where initContainers come in. An initContainer is configured in a pod like all other containers, except that it is specified inside an initContainers section, like this:

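```yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox:1.28
      command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
    - name: init-myservice
      # Placeholder: the repository URL was not preserved; the image must
      # also provide git (plain busybox does not ship it)
      image: busybox
      command: ['sh', '-c', 'git clone <repository-url> ;']
```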

When a POD is first created, the initContainer runs, and its process must run to completion before the real container hosting the application starts.

You can configure multiple initContainers as well, like we did for multi-container pods. In that case, each init container runs one at a time, in sequential order.

If any of the initContainers fails to complete, the kubelet restarts it repeatedly until it succeeds (if the pod's restartPolicy is Never, the whole pod is marked as failed instead). For example, this pod waits for two services before starting its app container:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox:1.28
      command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
    # Runs first: waits until the myservice DNS name resolves
    - name: init-myservice
      image: busybox:1.28
      command: ['sh', '-c', 'until nslookup myservice; do echo waiting for myservice; sleep 2; done;']
    # Runs second: waits until the mydb DNS name resolves
    - name: init-mydb
      image: busybox:1.28
      command: ['sh', '-c', 'until nslookup mydb; do echo waiting for mydb; sleep 2; done;']
```
