The Surprising Effectiveness of Test-Time Training for Abstract Reasoning

Mike Young

This is a Plain English Papers summary of a research paper called The Surprising Effectiveness of Test-Time Training for Abstract Reasoning. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- The paper examines the surprising effectiveness of "test-time training" for improving the abstract reasoning capabilities of language models.

- Test-time training involves fine-tuning a pre-trained model on a small amount of task-specific data during inference, rather than only in a separate training phase before deployment.

- The authors demonstrate that this simple technique can significantly boost performance on the Abstraction and Reasoning Corpus (ARC) - a benchmark for evaluating abstract reasoning in AI systems.

Plain English Explanation

The researchers explored a technique called "test-time training" to improve the abstract reasoning abilities of AI language models. Typically, AI models are trained on large datasets during a pre-training phase, and then fine-tuned on a specific task.

However, the researchers found that fine-tuning the model during the testing phase, using just a small amount of task-specific data, can actually lead to substantial performance improvements on abstract reasoning benchmarks like the ARC Challenge. This was a surprising result, as it challenges the traditional machine learning approach of relying solely on the pre-training phase to build capable models.

The key insight is that language models can continue to learn and adapt even at test-time, rather than being locked into a fixed set of capabilities. By exposing the model to just a few relevant examples during inference, it can quickly "figure out" the patterns and rules needed to excel at abstract reasoning tasks.

This test-time training technique is significant because it suggests new ways to build more flexible and adaptable AI systems, capable of learning and reasoning on-the-fly, rather than being limited by their initial training. It opens up exciting possibilities for deploying language models in real-world applications that require abstract thinking and problem-solving.

Key Findings

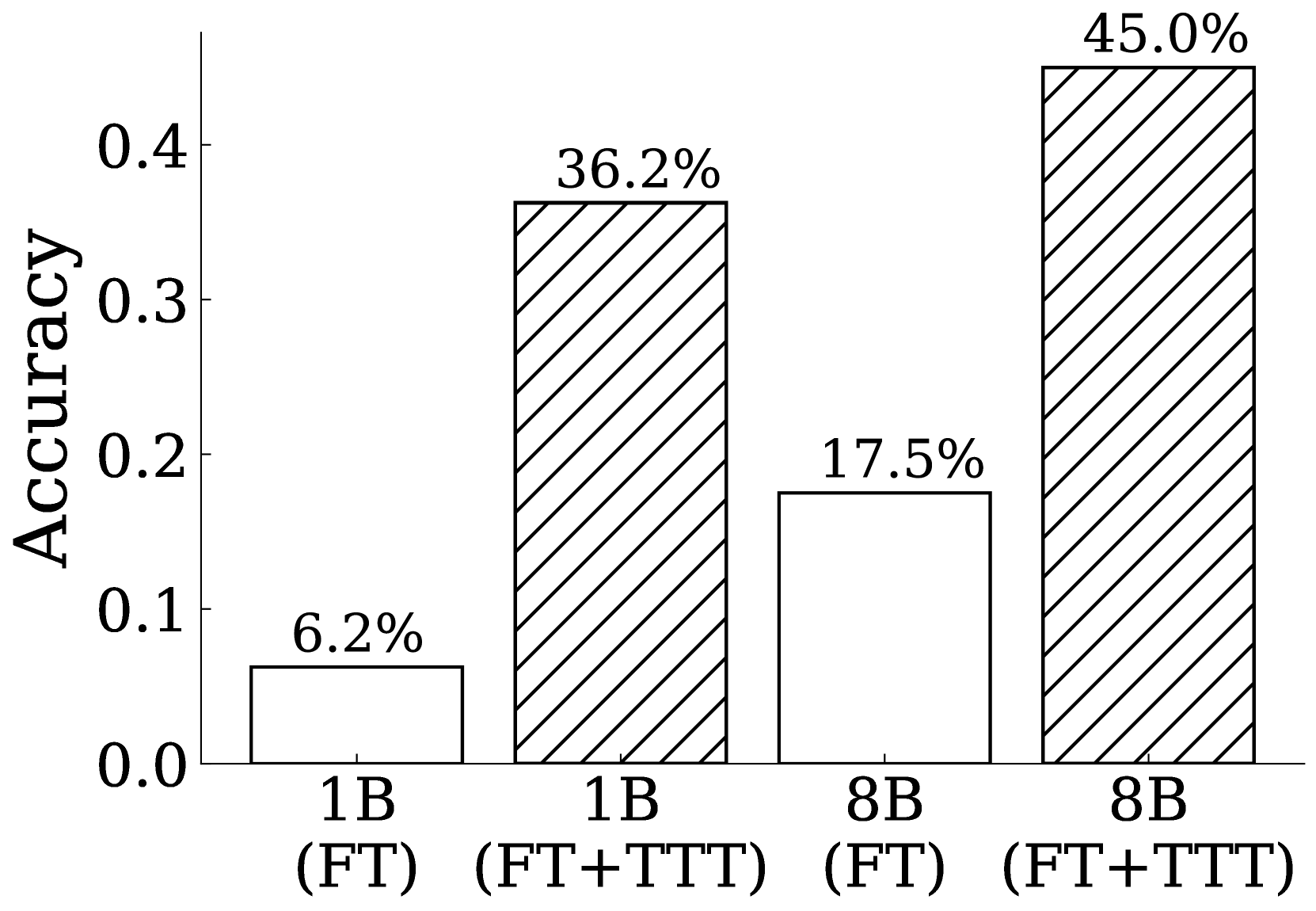

- Test-time training - fine-tuning a pre-trained model on a small amount of task-specific data during inference - can significantly boost performance on abstract reasoning benchmarks like the ARC Challenge.

- The gains are much larger than those obtained from conventional fine-tuning performed before inference.

- The ability to continue learning at test-time suggests language models have more flexible and adaptable reasoning capabilities than previously thought.

Technical Explanation

The researchers evaluated the test-time training approach on the Abstraction and Reasoning Corpus (ARC) - a dataset designed to assess the abstract reasoning abilities of AI systems. Each ARC task shows a few input-output pairs of small colored grids; the model must infer the rule that maps inputs to outputs and predict the output grid for a new test input.
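To make the task format concrete, here is a minimal sketch of an ARC-style task in Python. The public ARC data stores each task as demonstration pairs under "train" and held-out inputs under "test", with every grid written as rows of color indices. The specific grids, the `flip_horizontal` rule, and the `rule_fits_demos` helper below are made up for illustration and are not taken from the paper.

```python
# A hypothetical ARC-style task: each grid is a list of rows,
# and each cell is a color index from 0 to 9.
task = {
    "train": [
        {"input": [[1, 0, 0], [2, 0, 0]], "output": [[0, 0, 1], [0, 0, 2]]},
        {"input": [[0, 3], [4, 0]], "output": [[3, 0], [0, 4]]},
    ],
    "test": [{"input": [[5, 0, 0, 0]]}],
}


def flip_horizontal(grid):
    """Candidate rule (illustrative): mirror every row left to right."""
    return [list(reversed(row)) for row in grid]


def rule_fits_demos(rule, task):
    """Return True if the rule reproduces every demonstration output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


# Only apply the rule to the test input if it explains all demonstrations.
prediction = None
if rule_fits_demos(flip_horizontal, task):
    prediction = flip_horizontal(task["test"][0]["input"])

print(prediction)
```

A solver is scored on whether its predicted grid matches the hidden test output exactly, which is why a rule has to be checked against every demonstration pair first.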

Typically, language models are trained on large datasets during a pre-training phase, and then fine-tuned on a specific task. In contrast, the test-time training approach involves fine-tuning the pre-trained model on just a small number of ARC examples during the inference stage.

The researchers found that this simple test-time fine-tuning led to substantial performance gains, boosting the model's ARC accuracy by over 20 percentage points compared to the pre-trained baseline. Importantly, this improvement was much larger than what could be achieved through conventional fine-tuning carried out before inference.

The authors attribute this surprising effectiveness to the inherent adaptability and reasoning capabilities of language models. By exposing the model to just a few relevant examples at test-time, it is able to quickly "figure out" the abstract rules and patterns needed to excel at the task, rather than being limited by its original pre-training.

These findings suggest that language models have more flexible and generalizable reasoning abilities than previously assumed. The ability to continue learning at test-time opens up new possibilities for building AI systems that can adapt and reason on-the-fly, rather than being constrained by their initial training.

Implications for the Field

The test-time training approach represents a significant departure from traditional machine learning practices, which have typically relied on pre-training and fine-tuning as the primary means of building capable AI models.

By demonstrating the power of continued learning at inference time, this research challenges the assumption that language models are limited to the knowledge and capabilities encoded during the pre-training phase. Instead, it suggests that these models possess more dynamic and adaptable reasoning abilities that can be further refined and expanded through targeted fine-tuning, even at test-time.

This has important implications for the design and deployment of language-based AI systems. Rather than treating models as static, fixed entities, the test-time training approach points towards more flexible and responsive AI architectures that can continuously learn and improve their reasoning capabilities in real-world applications.

Ultimately, this work highlights the need for a deeper understanding of the inner workings and reasoning mechanisms of large language models. By uncovering their ability to adapt and learn on-the-fly, it opens up new avenues for advancing the field of artificial intelligence and building AI systems with more robust and generalizable problem-solving skills.

Critical Analysis

One potential limitation of the test-time training approach is the requirement for task-specific data during the inference stage. While the authors demonstrate impressive performance gains using just a small number of examples, in real-world applications, access to such task-specific data may not always be feasible or practical.

Additionally, the paper does not explore the generalization capabilities of the test-time trained models beyond the specific ARC benchmark. It remains to be seen whether the performance improvements transfer to other abstract reasoning tasks or whether the models become overly specialized to the training examples seen during inference.

Further research is needed to better understand the mechanisms underlying the test-time training approach and its broader implications. For instance, investigating the model's ability to retain and transfer knowledge gained during test-time fine-tuning, or exploring the trade-offs between the amount of task-specific data required and the resulting performance gains, could provide valuable insights.
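One simple way to probe the over-specialization concern is a leave-one-out check: adapt on all but one of the available examples and measure the error on the one held out. The sketch below is an illustrative assumption rather than an experiment from the paper; it uses a one-parameter toy model `y = w * x`, and the helper names `adapt` and `leave_one_out_errors` are hypothetical.

```python
def adapt(w, pairs, steps=200, lr=0.05):
    """Gradient descent on mean squared error for the toy model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in pairs) / len(pairs)
        w -= lr * grad
    return w


def leave_one_out_errors(w_base, demos):
    """Adapt on all examples but one, then score the held-out example."""
    errors = []
    for i, (x_held, y_held) in enumerate(demos):
        rest = demos[:i] + demos[i + 1:]
        w_task = adapt(w_base, rest)      # always starts from the base weight
        errors.append(abs(w_task * x_held - y_held))
    return errors


demos = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]   # task examples; hidden rule y = 3x
errors = leave_one_out_errors(0.5, demos)
print(errors)
```

Small held-out errors suggest the adapted model captured the rule rather than memorizing the examples it was tuned on; large ones would be a warning sign that it over-fit.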

Despite these caveats, the core finding - that language models can continue to learn and improve their reasoning capabilities even at test-time - is a significant and thought-provoking contribution to the field of artificial intelligence. It challenges existing assumptions and opens up new avenues for developing more adaptive and versatile AI systems.

Conclusion

The paper's exploration of test-time training for abstract reasoning represents a promising step towards building more flexible and adaptable AI systems. By demonstrating the ability of language models to continue learning and refining their capabilities even during the inference stage, this research challenges the traditional reliance on pre-training and fine-tuning as the primary means of developing intelligent agents.

The findings suggest that language models possess inherent reasoning and problem-solving skills that can be further honed and expanded through targeted fine-tuning, even in the context of specific tasks. This opens up exciting possibilities for deploying AI systems in real-world applications that require abstract thinking and adaptive learning, rather than being limited by their initial training.

While the test-time training approach faces some practical limitations and warrants further investigation, its core insight - that language models can learn and reason on-the-fly - represents a significant advancement in our understanding of artificial intelligence and its potential for continued growth and improvement. As the field of AI continues to evolve, techniques like test-time training may play an increasingly important role in developing more flexible, adaptable, and capable intelligent systems.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.
