Modeling AdaGrad, RMSProp, and Adam with Integro-Differential Equations

Mike Young

Mike Young

This is a Plain English Papers summary of a research paper called Modeling AdaGrad, RMSProp, and Adam with Integro-Differential Equations. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- Research examines continuous-time models of adaptive optimization algorithms

- Focuses on AdaGrad, RMSProp, and Adam optimizers

- Develops integro-differential equations to model optimizer behavior

- Proves convergence properties for various optimization scenarios

- Demonstrates connections between discrete and continuous optimization methods

Plain English Explanation

Optimization algorithms help machine learning models learn efficiently. This research explores how three popular optimization methods - AdaGrad, RMSProp, and Adam - work at their core. Think of these optimizers like different strategies for climbing a mountain - each has its own way of deciding which path to take.

The researchers created mathematical models that show how these algorithms behave over time, similar to how physicists model the motion of objects. By converting the step-by-step computer algorithms into smooth, continuous mathematical equations, they gained new insights into why these methods work well.

This approach reveals how the optimizers adjust their learning process, much like how a hiker might change their pace based on the terrain. The continuous models help explain why certain optimizers perform better in different situations.

Key Findings

The research established that:

- Adaptive optimization methods can be accurately modeled using continuous mathematics

- The continuous models predict optimizer behavior in both simple and complex scenarios

- Each optimizer has distinct convergence properties that match practical observations

- The mathematical framework provides new tools for analyzing optimization algorithms

Technical Explanation

The study develops integro-differential equations that capture the behavior of adaptive optimization methods. These equations model how the optimizers accumulate and use gradient information over time.

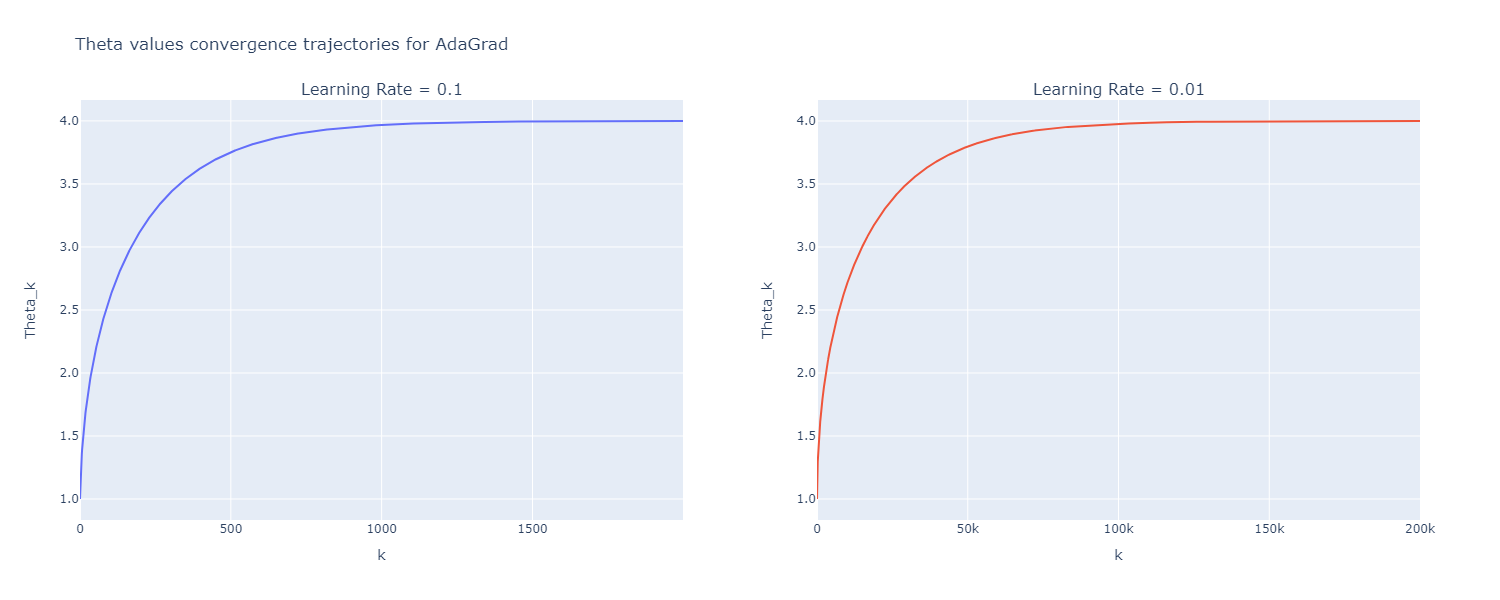

The continuous-time analysis reveals that AdaGrad's adaptation mechanism leads to naturally decreasing step sizes, while RMSProp and Adam maintain more consistent step sizes through exponential averaging.

The mathematical framework provides rigorous proofs for convergence rates and stability properties, matching empirical observations from practical applications.

Critical Analysis

Limitations of the research include:

- Models assume idealized conditions that may not match real-world scenarios

- Analysis focuses on theoretical aspects rather than practical implementations

- Some mathematical assumptions may not hold in deep learning applications

Further research could explore:

- Extensions to more complex optimization landscapes

- Practical implications for optimizer design

- Connections to other optimization methods

Conclusion

This research bridges the gap between discrete optimization algorithms and continuous mathematics. The insights gained could lead to better optimization methods and deeper understanding of existing algorithms. The work opens new paths for analyzing and improving machine learning optimization techniques.

The mathematical framework provides a foundation for future research in optimization theory and practical algorithm design. These advances could help develop more efficient training methods for machine learning models.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.

Subscribe to my newsletter

Read articles from Mike Young directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by