Implementing Self-Hosted GitHub Action Runners using AWS CodeBuild

Kieran Lowe

I saw an interesting announcement on AWS What’s New some time back regarding native integration between GitHub Actions and AWS CodeBuild. This allows you to use AWS CodeBuild as the compute for your self-hosted GitHub Actions runners. Let’s dig in!

What is GitHub Actions?

GitHub Actions is GitHub’s own CI/CD (Continuous Integration and Continuous Deployment) system, in which you can run many things alongside your code: linters, unit tests, builds and so much more. It’s super powerful and super flexible. It brings CI/CD, which is normally run in separate pipeline services, closer to the code:

GitHub Actions makes it easy to automate all your software workflows, now with world-class CI/CD. Build, test, and deploy your code right from GitHub. Make code reviews, branch management, and issue triaging work the way you want.

- https://github.com/features/actions

What is AWS CodeBuild?

AWS CodeBuild is a fully managed CI service that can build code, run tests, create artifacts and more. It can be very powerful! You don’t need to manage servers, worry about patching or scaling them, or concern yourself with the operational overhead of running them. A build is orchestrated via what AWS calls a “buildspec”. This is defined in YAML and essentially tells CodeBuild what it needs to do. AWS defines CodeBuild as:

AWS CodeBuild is a fully managed continuous integration service in the cloud. CodeBuild compiles source code, runs tests, and produces packages that are ready to deploy. CodeBuild eliminates the need to provision, manage, and scale your own build servers. CodeBuild automatically scales up and down and processes multiple builds concurrently, so your builds don’t have to wait in a queue. You can get started quickly by using CodeBuild prepackaged build environments, or you can use custom build environments to use your own build tools. With CodeBuild, you only pay by the minute.

- https://aws.amazon.com/codebuild/faqs/?nc=sn&loc=5

Why use AWS CodeBuild for GitHub Actions?

You might also be thinking: why use AWS CodeBuild for self-hosted runners? Why not just use GitHub-hosted runners? Well, it’s completely up to you, but consider the following use cases:

Enterprise Enforcement: You might be part of an organisation or enterprise that mandates the use of self-hosted runners.

Security: You are mandated to use self-hosted runners, and require control over all ingress/egress to/from allowed sources/destinations. You can put AWS CodeBuild inside a VPC for extra control.

Speed: If any dependencies are already installed on the CodeBuild image, such as Python, you don’t need to set them up in your workflow with e.g. actions/setup-python. You can go straight into the build!

Cost: If you are part of the AWS Free Tier, you get 100 free build minutes a month with the general1.small or arm1.small compute types on on-demand EC2. Additionally, you get 6,000 total build seconds per month with the lambda.arm.1GB or lambda.x86-64.1GB compute types on on-demand Lambda. Another consideration is that this implements “just-in-time” runners, so the compute is only provisioned when there’s an actual workflow to run. There are no long-standing EC2 instances or EKS clusters generating cost while waiting for a workflow to run.

Easier onboarding: It might be easier to onboard as you don’t have to set up another finance/billing process. This could be lengthy, especially in a company. The CodeBuild compute you use will just show on your AWS bill.

Compute Power: Need access to a GPU to run some AI/Machine Learning models? You can leverage the GPU compute that AWS CodeBuild offers.

Integrating AWS CodeBuild with GitHub

Now that we’ve briefly introduced GitHub Actions and AWS CodeBuild, let’s look at integrating CodeBuild with GitHub. When this first came out you had two options:

Use a PAT (Personal Access Token)

OAuth

However, quite recently AWS added integration support using a GitHub App. This works better because:

We can better control the scope of access the app has to our GitHub environment.

It can act on its own behalf, e.g. we don’t need a functional user or for it to “assume” our personal GitHub user.

We don’t need to worry about rotating PATs.

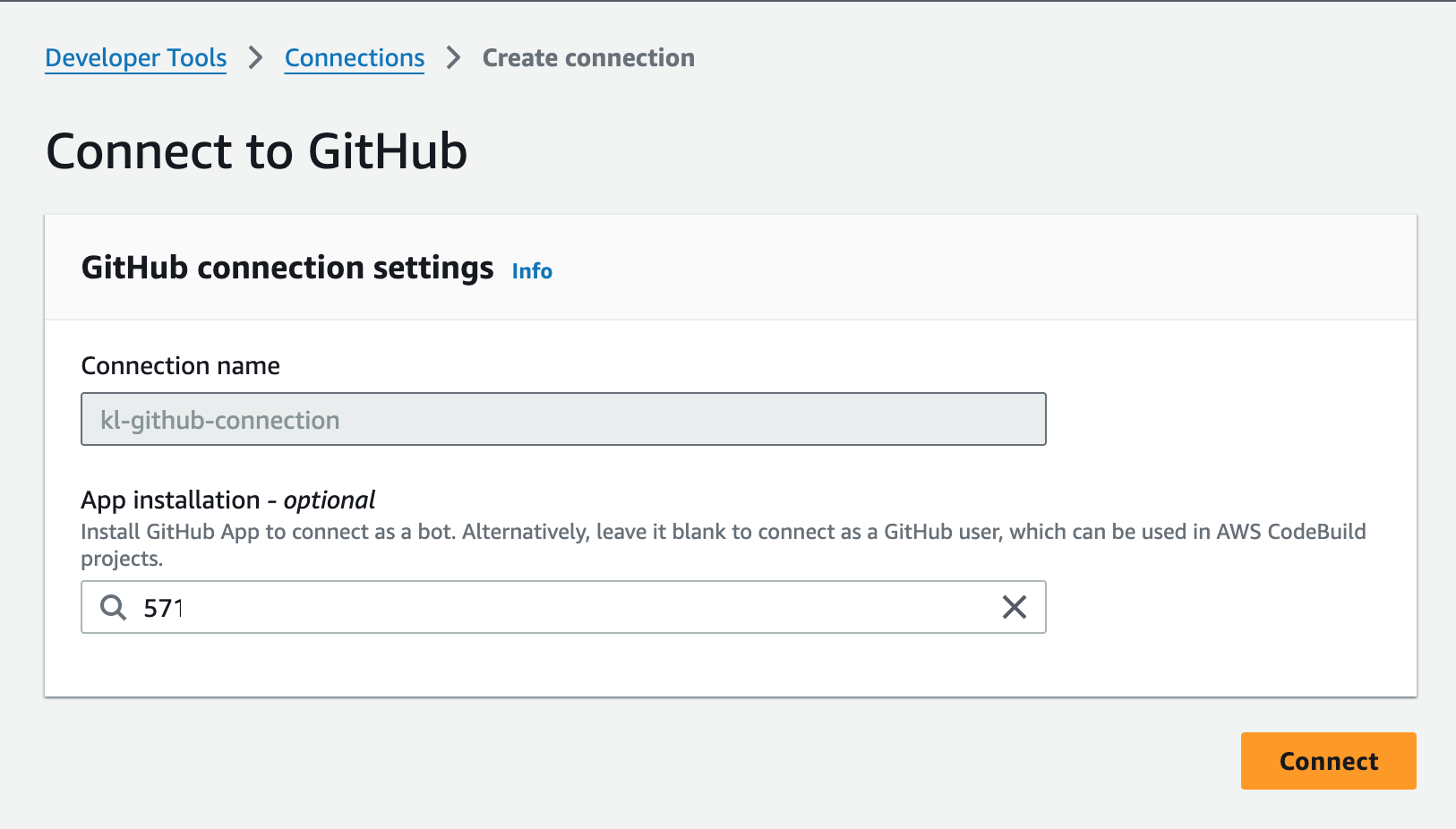

Now, I advocate the use of Infrastructure-as-Code, and using OpenTofu we can provision the aws_codestarconnections_connection resource. This resource has one drawback: all connections created through it are left in the “Pending” state. This isn’t an OpenTofu or AWS Provider limitation; it’s just how it works when connections are created via the API instead of the AWS Console. This makes sense, because someone has to actually authenticate the application on GitHub; this cannot be automatic. Anyway, let’s write the code:

resource "aws_codestarconnections_connection" "github" {
  name          = "kl-github-connection"
  provider_type = "GitHub"
}

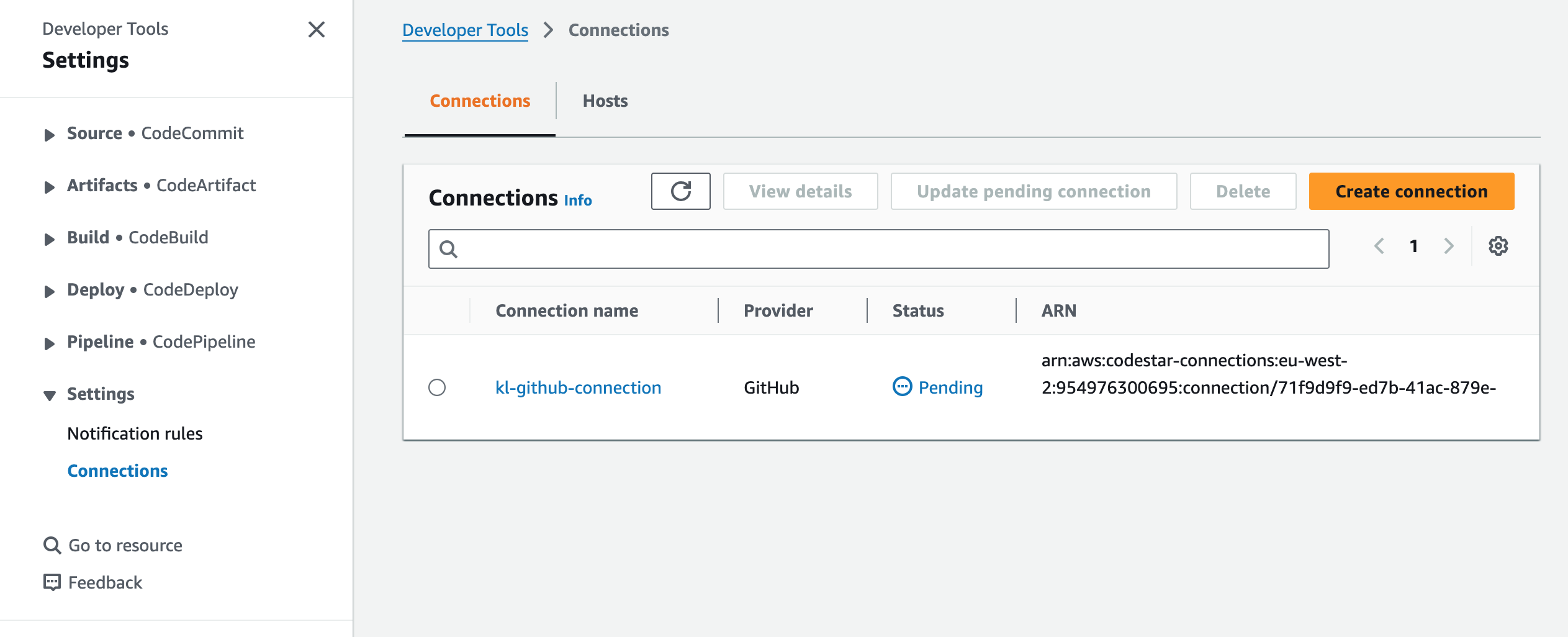

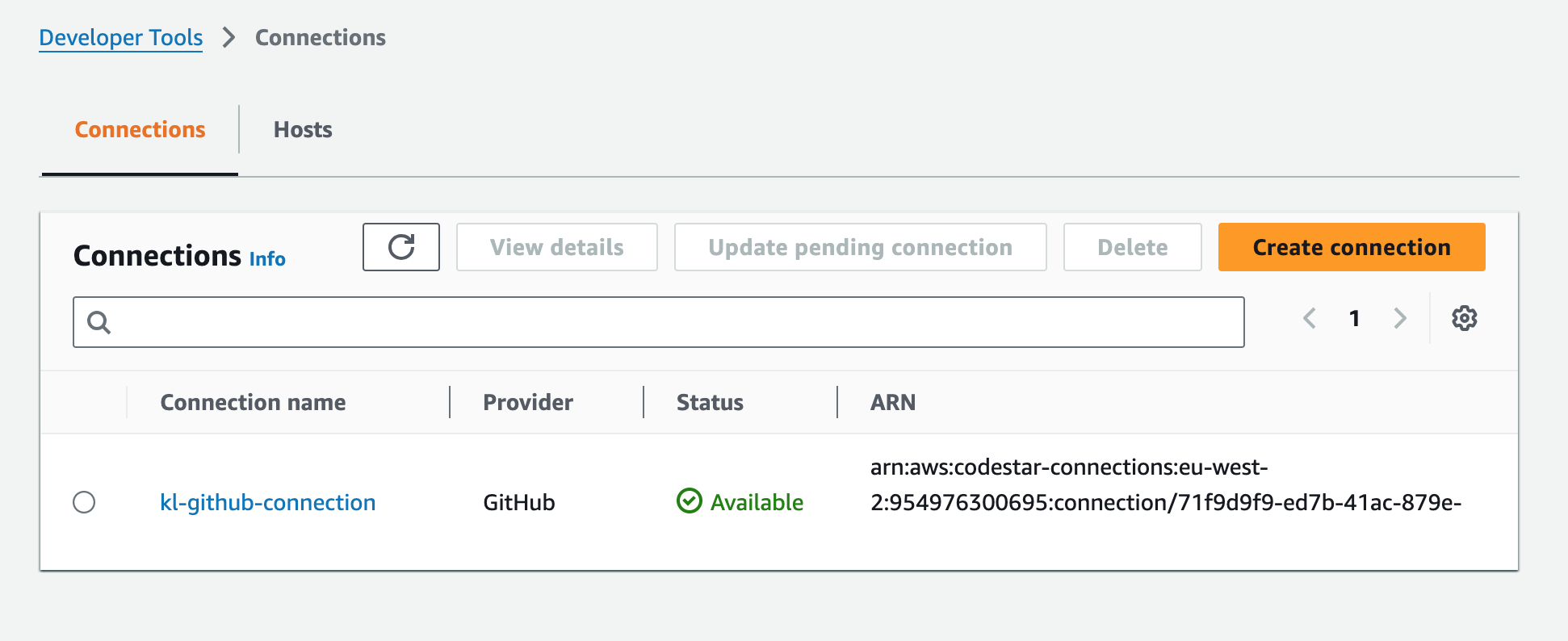

Once we plan and apply this, we can see our connection in the AWS Console:

As mentioned before, using the API means it’s marked in “Pending” so now we have to authenticate and authorise it on our GitHub environment. Click on the connection and then “Update pending connection” and you’ll see something like this:

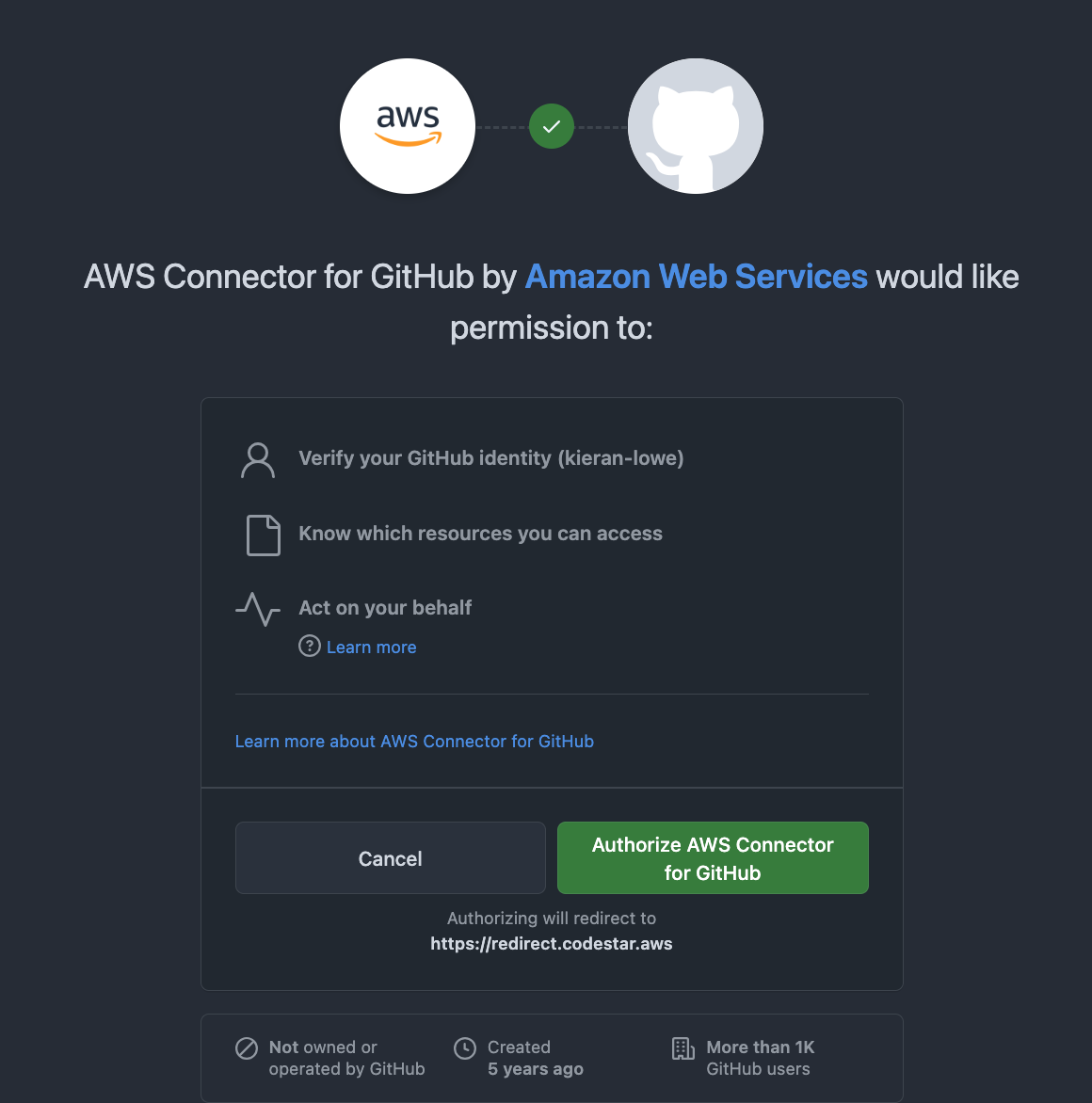

Now I did say one of the pros is that it can act on behalf of itself and not my own user, so why do we see “Act on your behalf” when authorising the AWS Connector for GitHub? Click on the “Authorise AWS Connector for GitHub” button and you’ll be taken to the next screen:

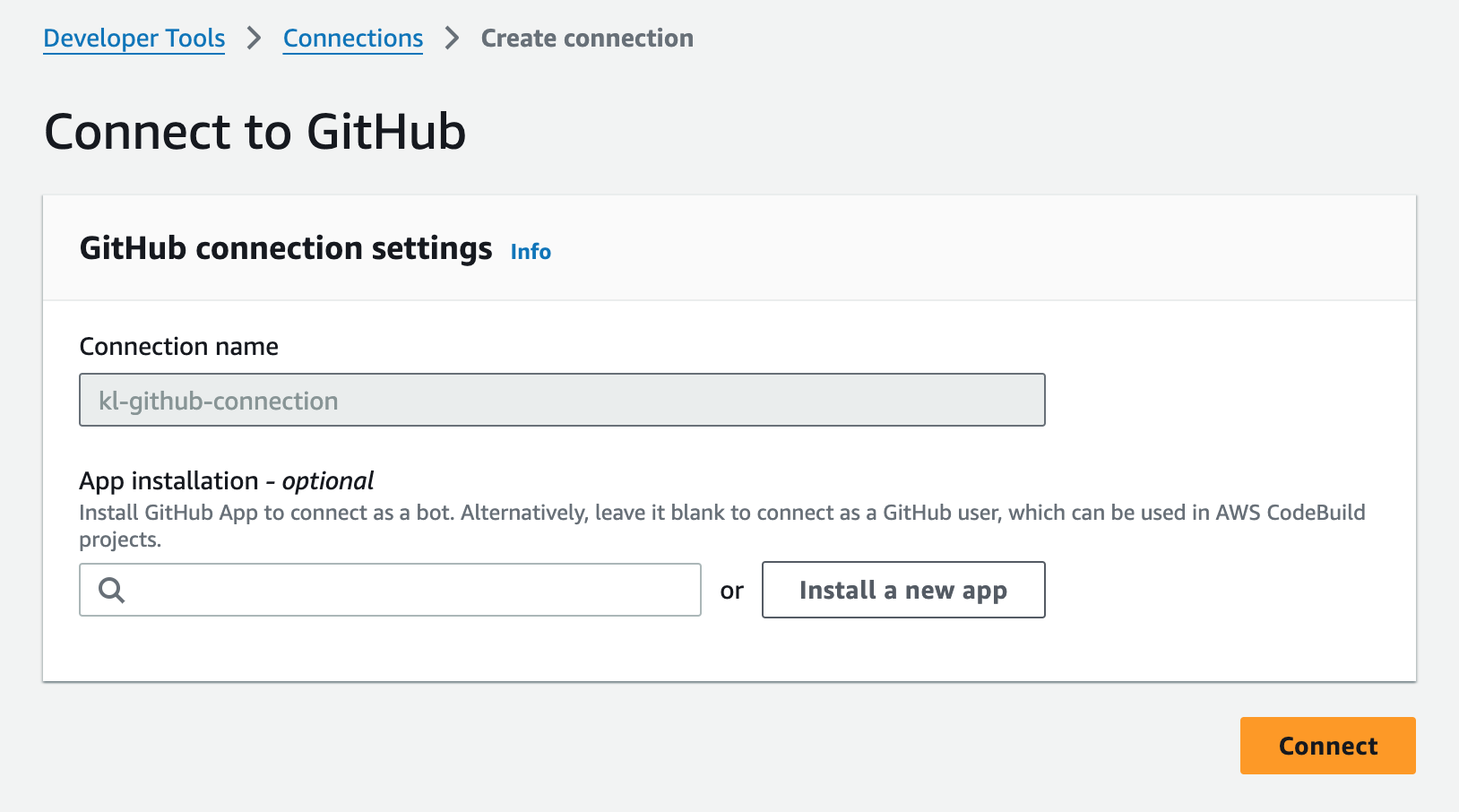

Now we can configure the integration to use a GitHub App, which would connect and operate as a “bot”. If we didn’t do this, it would just use our GitHub user that authorised the application, which would be me. To continue, click on the “Install a new app” button and you’ll be taken to a page similar to the below:

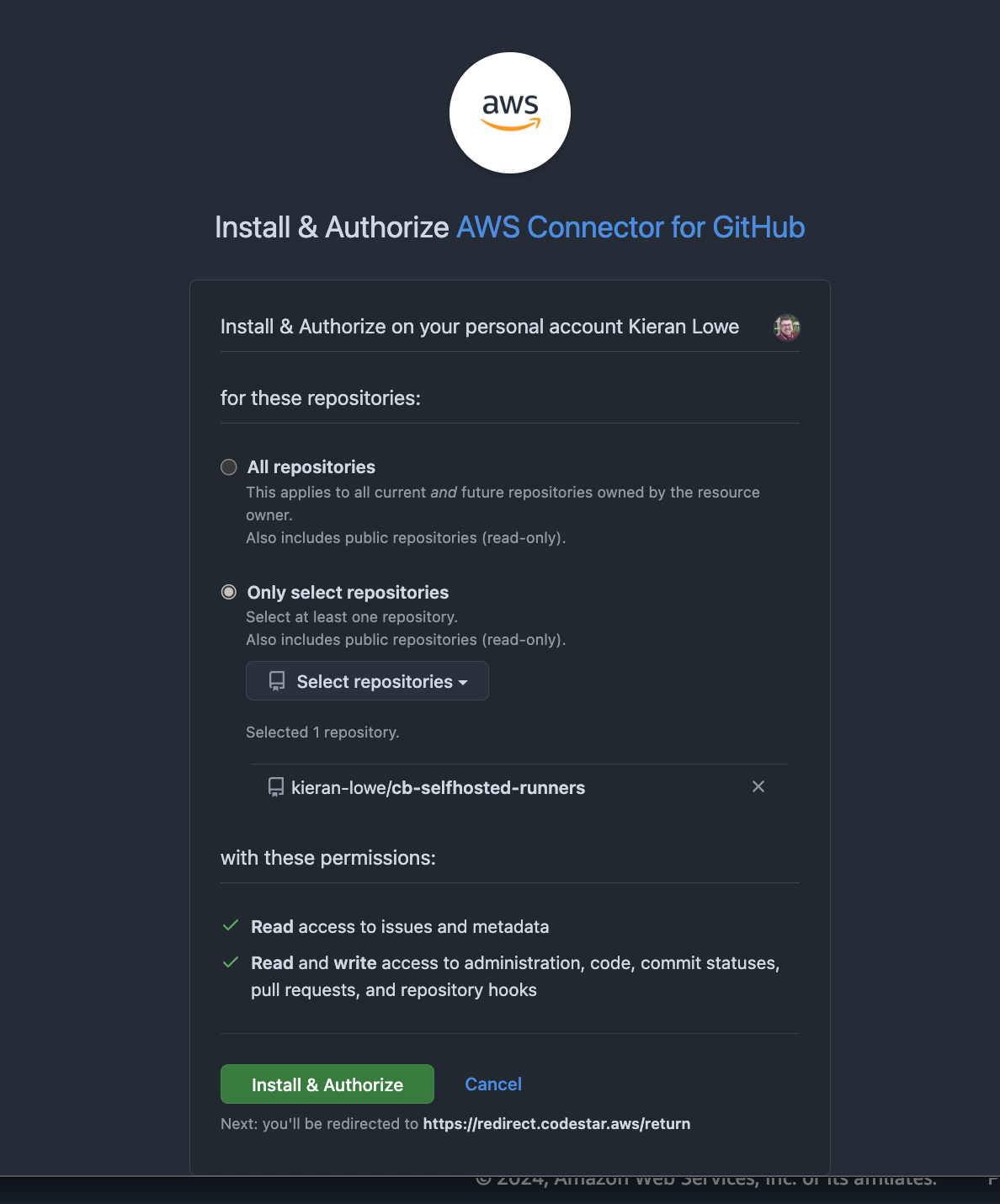

Now you can give it access to all repositories by default, but for this example we’re going to limit it to one repo. This means two things:

We can only create self-hosted runners for the repository kieran-lowe/cb-selfhosted-runners.

When selecting the “Source” in the CodeBuild project, we will only see one repo, kieran-lowe/cb-selfhosted-runners, regardless of how many other repos we have.

I like to adhere to the principle of least privilege, so I believe the app should only have access to what it needs and nothing more. This could be seen as a tradeoff, however: if you want to enable another repository, you need to update the list of repositories the GitHub App can access.

Let’s “Install & Authorise” and continue. You’ll be taken back to the screen you were on inside the AWS Console, but will now see an “Installation ID” in the box.

You can click on “Connect” and you’ll be taken back to the main screen in which you should see the “Available” status for your connection:

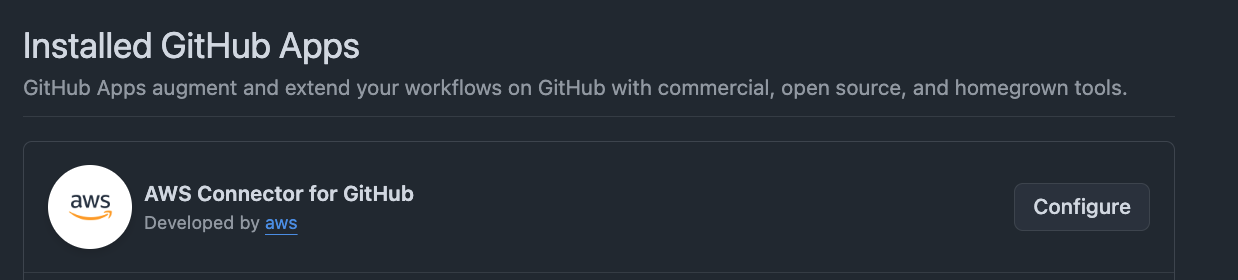

Verifying on GitHub

Now that we’ve completed the integration setup, let’s validate that our repo on GitHub has the GitHub App enabled. You can access it at: https://github.com/settings/installations/<INSTALLATION_ID>, in which you’ll see something like the below:

Great! Now we can start configuring the CodeBuild Project!

Creating the AWS CodeBuild Project

Now for this to work, we need to provision an AWS CodeBuild Project.

Setting the Default Source Credential

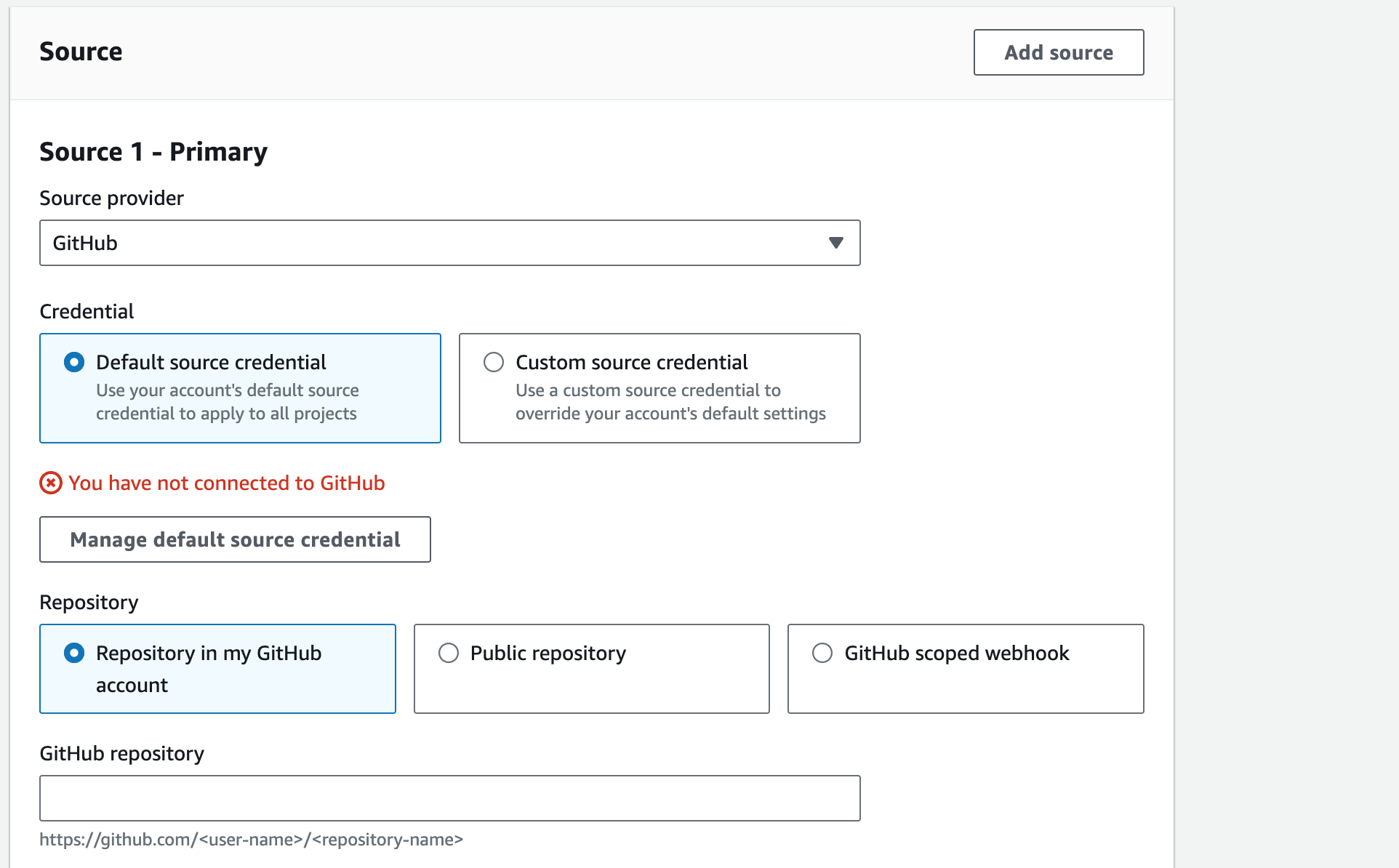

If we look at the console for creating a project, we can see the following:

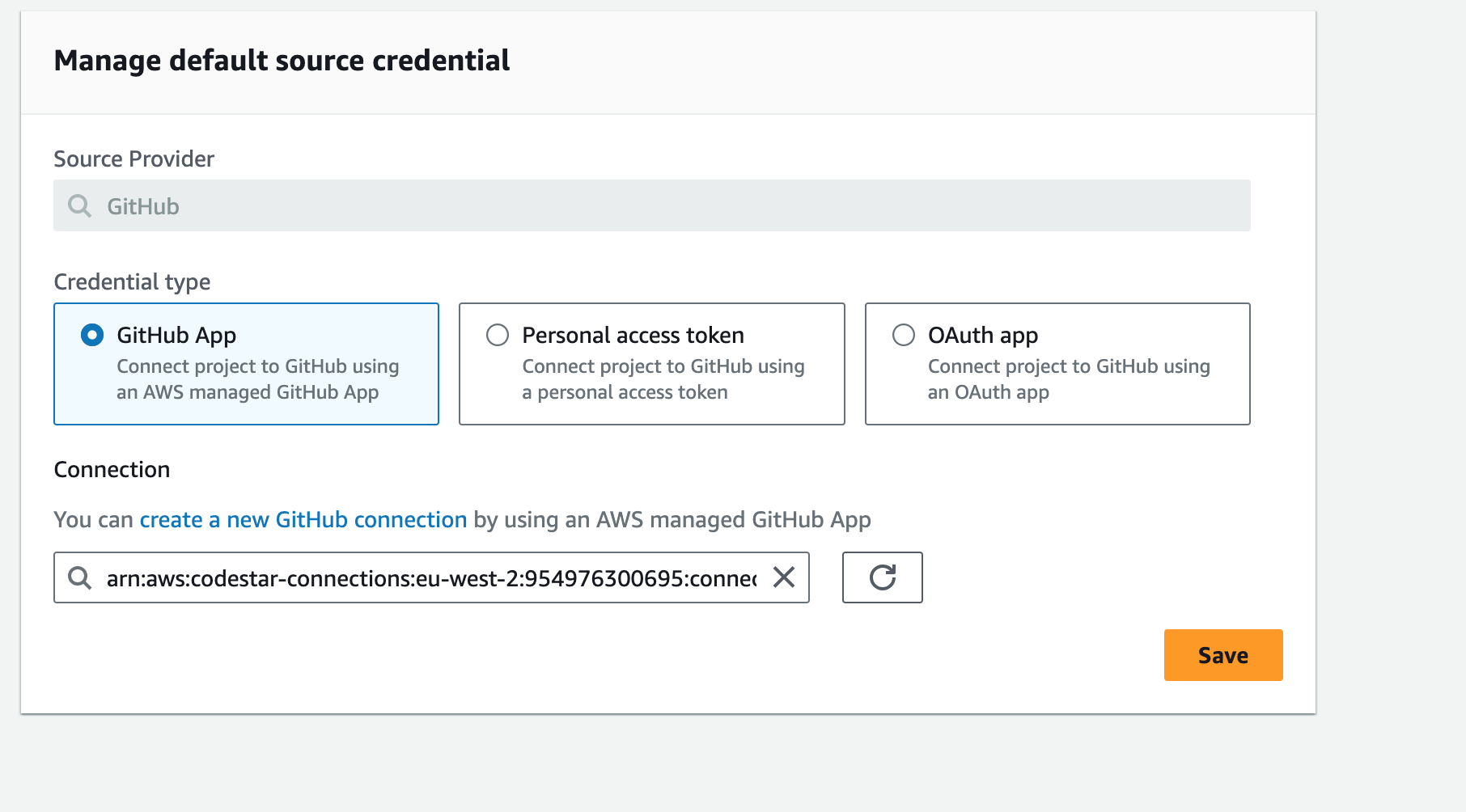

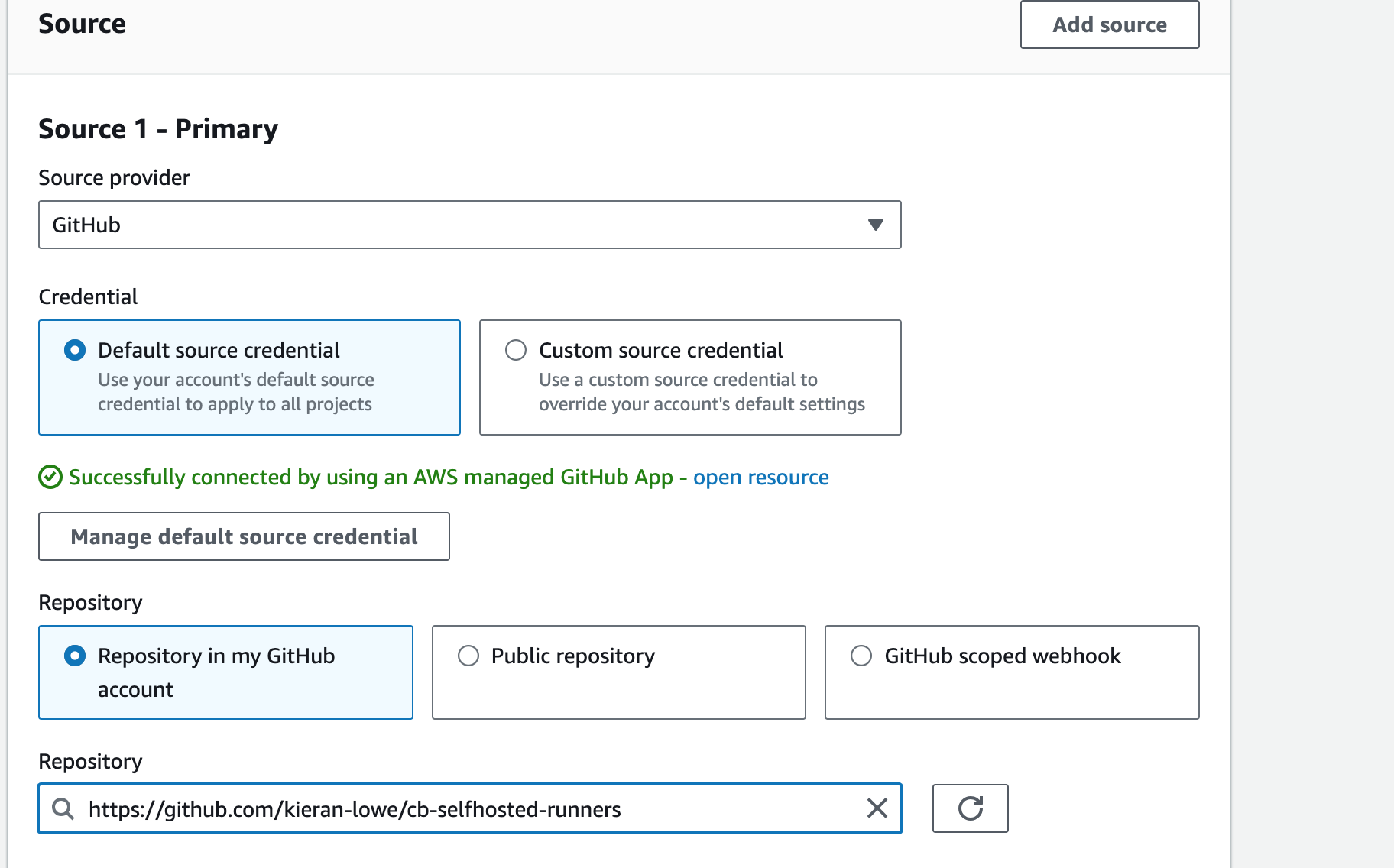

It tells us that we have not connected to GitHub, but if we select “Custom source credential”, it shows us our connection. I’ll expand on this further in a moment, but for now click on “Manage default source credential” and you’ll be taken to the following screen:

Here we can see our CodeConnection. Click on “Save” and then we should be good to go!

Now, why did we have to do this? As of the time of writing, the AWS Provider in OpenTofu/Terraform doesn’t support the “Custom source credential”. There has been a request for it, but to my knowledge no one is working on it.

Additionally, the aws_codebuild_source_credential resource doesn’t support the CodeConnection type, only PAT or OAuth. Hence why we did it via the console. You can also do it via the API:

# Replace <CODECONNECTION_ARN> with the ARN of your GitHub CodeConnection

aws codebuild import-source-credentials --token <CODECONNECTION_ARN> --server-type GITHUB --auth-type CODECONNECTIONS

Now we could technically run that command as part of the OpenTofu/Terraform workflow using some provisioners, but I wanted to keep it simple. If you are interested you could read more here: local-exec Provisioner | OpenTofu.

We need to configure this all first before we can get OpenTofu/Terraform to create the AWS CodeBuild project. If we don’t do this, we will get an error! Ensure you’ve set the default to the CodeConnection you have created before you continue.
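If you do want to automate that step, a minimal sketch using the local-exec provisioner could look like the below. It assumes the AWS CLI is installed and authenticated wherever you run OpenTofu/Terraform, that your CLI version supports the CODECONNECTIONS auth type, and that the connection has already been authorised on GitHub. The resource name default_source_credential is made up for illustration; the command itself is the same one shown above, pointed at the aws_codestarconnections_connection.github resource from earlier:

```hcl
# Hypothetical helper: sets the default source credential via the AWS CLI
resource "terraform_data" "default_source_credential" {
  # Re-run the command if the connection ARN ever changes
  triggers_replace = [aws_codestarconnections_connection.github.arn]

  provisioner "local-exec" {
    command = "aws codebuild import-source-credentials --token ${aws_codestarconnections_connection.github.arn} --server-type GITHUB --auth-type CODECONNECTIONS"
  }
}
```

Bear in mind provisioners only run when the resource is created. On a first apply the connection will still be “Pending”, so you would likely need to re-run this once the connection is “Available”, for example with tofu apply -replace=terraform_data.default_source_credential.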

Writing the Code

Let’s write the code to create our AWS CodeBuild project. As some settings must be set to configure the project accordingly, I’ve created a reusable module with the required resources:

# modules/terraform-aws-codebuild-action-runner/provider.tf
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 5.0.0, < 6.0.0"
    }
  }
}

# modules/terraform-aws-codebuild-action-runner/data.tf
data "aws_region" "current" {}

data "aws_caller_identity" "current" {}

data "aws_codestarconnections_connection" "connection" {
  name = var.github_connection_name
}

# modules/terraform-aws-codebuild-action-runner/locals.tf
locals {
  repo_name              = split("/", var.repo)[1]
  codebuild_project_name = "${local.repo_name}-action-runner"
}

# modules/terraform-aws-codebuild-action-runner/codebuild.tf
resource "aws_codebuild_project" "project" {
  name               = local.codebuild_project_name
  description        = "CodeBuild project to run as the compute for Actions running in repo: ${local.repo_name}"
  service_role       = aws_iam_role.role.arn
  project_visibility = "PRIVATE"
  badge_enabled      = false

  source {
    type     = "GITHUB"
    location = "https://github.com/${var.repo}"
  }

  artifacts {
    type = "NO_ARTIFACTS"
  }

  environment {
    compute_type = var.environment.compute_type
    type         = var.environment.type
    image        = var.environment.image
  }

  logs_config {
    cloudwatch_logs {
      status = "ENABLED"
    }
  }
}

resource "aws_codebuild_webhook" "webhook" {
  project_name = aws_codebuild_project.project.name
  build_type   = "BUILD"

  filter_group {
    filter {
      pattern = "WORKFLOW_JOB_QUEUED"
      type    = "EVENT"
    }
  }
}

# modules/terraform-aws-codebuild-action-runner/iam.tf
resource "aws_iam_role" "role" {
  name        = "${local.codebuild_project_name}-service-role"
  description = "IAM role for CodeBuild project: ${local.codebuild_project_name}"

  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [
      {
        Effect = "Allow"
        Principal = {
          Service = "codebuild.amazonaws.com"
        }
        Action = "sts:AssumeRole"
        Condition = {
          StringEquals = {
            "aws:SourceArn"     = "arn:aws:codebuild:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:project/${local.codebuild_project_name}"
            "aws:SourceAccount" = data.aws_caller_identity.current.account_id
          }
        }
      }
    ]
  })
}

resource "aws_iam_role_policy" "allow_cloudwatch" {
  name = "allow_cloudwatch"
  role = aws_iam_role.role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect   = "Allow"
        Action   = "logs:CreateLogGroup"
        Resource = "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/codebuild/${local.codebuild_project_name}:log-stream:"
      },
      {
        Effect = "Allow"
        Action = [
          "logs:CreateLogStream",
          "logs:PutLogEvents"
        ]
        Resource = "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/codebuild/${local.codebuild_project_name}:log-stream:*"
      }
    ]
  })
}

resource "aws_iam_role_policy" "allow_codeconnection" {
  name = "allow_codeconnection"
  role = aws_iam_role.role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = [
          "codeconnections:GetConnectionToken",
          "codeconnections:GetConnection"
        ]
        Resource = [
          data.aws_codestarconnections_connection.connection.arn,
          replace(data.aws_codestarconnections_connection.connection.arn, "codestar-connections", "codeconnections")
        ]
      }
    ]
  })

  lifecycle {
    precondition {
      condition     = data.aws_codestarconnections_connection.connection.connection_status == "AVAILABLE"
      error_message = "Your GitHub connection must be in the AVAILABLE status before you can use it with CodeBuild. Yours reports as: ${data.aws_codestarconnections_connection.connection.connection_status}"
    }
  }
}

# modules/terraform-aws-codebuild-action-runner/variables.tf
variable "repo" {
  description = "Name of the repository following the format: owner/repo"
  type        = string
  nullable    = false

  validation {
    condition     = can(regex("^[a-zA-Z0-9-]+/[a-zA-Z0-9-]+$", var.repo))
    error_message = "The repository name must be in the format: owner/repo"
  }
}

variable "environment" {
  description = "Environment configuration for the CodeBuild project"
  type = object({
    compute_type = string
    type         = string
    image        = string
  })
  nullable = false

  default = {
    compute_type = "BUILD_GENERAL1_SMALL"
    type         = "ARM_CONTAINER"
    image        = "aws/codebuild/amazonlinux2-aarch64-standard:3.0"
  }
}

variable "github_connection_name" {
  description = "Name of the GitHub connection to use from CodeConnections"
  type        = string
  nullable    = false
}

Breakdown

Let’s break some of this down:

CodeBuild Project

# modules/terraform-aws-codebuild-action-runner/locals.tf
locals {
  repo_name              = split("/", var.repo)[1]
  codebuild_project_name = "${local.repo_name}-action-runner"
}

# modules/terraform-aws-codebuild-action-runner/codebuild.tf
resource "aws_codebuild_project" "project" {
  name               = local.codebuild_project_name
  description        = "CodeBuild project to run as the compute for Actions running in repo: ${local.repo_name}"
  service_role       = aws_iam_role.role.arn
  project_visibility = "PRIVATE"
  badge_enabled      = false

  source {
    type     = "GITHUB"
    location = "https://github.com/${var.repo}"
  }

  artifacts {
    type = "NO_ARTIFACTS"
  }

  environment {
    compute_type = var.environment.compute_type
    type         = var.environment.type
    image        = var.environment.image
  }

  logs_config {
    cloudwatch_logs {
      status = "ENABLED"
    }
  }
}

One of CodeBuild’s primary purposes is building code and creating “artifacts”. We don’t need to create anything here; we just need the compute to run the GitHub Actions workflows. We set type = "NO_ARTIFACTS" to configure the project as such.

We use a local to derive the project name, which is opinionated: it’s the name of the repository itself followed by -action-runner. You can see this is based on local.repo_name, so what is that? var.repo requires the repository name to be in the format <owner>/<repo_name>, which CodeBuild needs so that the location in source is configured correctly. We don’t actually want the owner to be part of the project name, so we use split() on /, which produces:

[
  "kieran-lowe",
  "cb-selfhosted-runners"
]

so using [1] gets us just the repo name to be used for the project name.

The environment block configures the compute settings for the project, such as:

Architecture (x86, ARM)

Size (Power of the compute: cores, memory)

Image (Image for CodeBuild to run: AL2023, AL2, Ubuntu etc.)

To keep this within the AWS Free Tier, we have set some defaults that can be overridden if required:

environment = {
  compute_type = "BUILD_GENERAL1_SMALL"
  type         = "ARM_CONTAINER"
  image        = "aws/codebuild/amazonlinux2-aarch64-standard:3.0"
}

I prefer to use ARM where possible because, for most AWS services, ARM-based compute is:

Cheaper to run

Often quicker (workload dependent, of course)

More sustainable and energy efficient

Lastly we need to see the build logs, so we simply configure CloudWatch Logs!

CodeBuild Webhook

We also see another resource here: aws_codebuild_webhook. You might recall that when we configured the GitHub App, one of the permissions it requires is to manage web-hooks. This is, in fact, how CodeBuild knows when to start the project and therefore become a runner. The app configures a web-hook on the GitHub repository, specifically for the “Workflow jobs queued” event. Now we need CodeBuild to respond to that event:

resource "aws_codebuild_webhook" "webhook" {
  project_name = aws_codebuild_project.project.name
  build_type   = "BUILD"

  filter_group {
    filter {
      pattern = "WORKFLOW_JOB_QUEUED"
      type    = "EVENT"
    }
  }
}

Here we use the WORKFLOW_JOB_QUEUED event from the CodeBuild web-hook API. It exists specifically for using CodeBuild as a self-hosted runner, and responds to the web-hook created in the GitHub repository.

IAM

CodeBuild needs a “service role” so it can do things. Everything in AWS requires permissions, even when AWS is acting on your behalf. For our project to send logs to CloudWatch Logs and use our CodeConnection, we have to give it permission to do so:

resource "aws_iam_role" "role" {
  name        = "${local.codebuild_project_name}-service-role"
  description = "IAM role for CodeBuild project: ${local.codebuild_project_name}"

  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [
      {
        Effect = "Allow"
        Principal = {
          Service = "codebuild.amazonaws.com"
        }
        Action = "sts:AssumeRole"
        Condition = {
          StringEquals = {
            "aws:SourceArn"     = "arn:aws:codebuild:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:project/${local.codebuild_project_name}"
            "aws:SourceAccount" = data.aws_caller_identity.current.account_id
          }
        }
      }
    ]
  })
}

resource "aws_iam_role_policy" "allow_cloudwatch" {
  name = "allow_cloudwatch"
  role = aws_iam_role.role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect   = "Allow"
        Action   = "logs:CreateLogGroup"
        Resource = "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/codebuild/${local.codebuild_project_name}:log-stream:"
      },
      {
        Effect = "Allow"
        Action = [
          "logs:CreateLogStream",
          "logs:PutLogEvents"
        ]
        Resource = "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/codebuild/${local.codebuild_project_name}:log-stream:*"
      }
    ]
  })
}

resource "aws_iam_role_policy" "allow_codeconnection" {
  name = "allow_codeconnection"
  role = aws_iam_role.role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = [
          "codeconnections:GetConnectionToken",
          "codeconnections:GetConnection"
        ]
        Resource = [
          data.aws_codestarconnections_connection.connection.arn,
          replace(data.aws_codestarconnections_connection.connection.arn, "codestar-connections", "codeconnections")
        ]
      }
    ]
  })

  lifecycle {
    precondition {
      condition     = data.aws_codestarconnections_connection.connection.connection_status == "AVAILABLE"
      error_message = "Your GitHub connection must be in the AVAILABLE status before you can use it with CodeBuild. Yours reports as: ${data.aws_codestarconnections_connection.connection.connection_status}"
    }
  }
}

Here we reuse the local for the project name and append -service-role to it, so the role for each project is easily identifiable in the console.

If we are creating multiple projects, we could use one IAM role for them all, but you can argue this doesn’t follow the principle of least privilege. In our current implementation, we’d have to change the allow_cloudwatch policy each time to add a new log group, or make it generic using * in the Resource part of the IAM policy.

All AWS IAM roles have a “trust policy”. It’s a special type of IAM policy for IAM roles only, and it says which IAM principals can assume the role. As we only want AWS CodeBuild to assume this role, we specify codebuild.amazonaws.com. You can also see we specify two condition keys; in short, these mean CodeBuild can only assume this role on behalf of this CodeBuild project in this account. To be clear, this would work without these condition keys, but AWS recommends them to prevent something called the “confused deputy” problem. You can read the docs here for more information if you are interested: The confused deputy problem - AWS Identity and Access Management.

allow_cloudwatch

This policy gives our project permission to log to CloudWatch Logs, but only in the log group associated with the project. The CloudWatch Logs configuration is very basic. You can configure specific log groups and log stream prefixes if you like, but we’re keeping it simple. If no log group is configured, AWS CodeBuild will create the group as /aws/codebuild/<project_name>, and this is what our IAM policy matches.
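A log group that CodeBuild creates for you keeps its logs indefinitely unless a retention period is set. If you’d rather manage that yourself, one option is to create the log group up front using the same default name. A minimal sketch, assuming it lives inside the module so the local is available; the resource name runner and the 30 day retention are choices made for illustration and are not part of the module above:

```hcl
# Hypothetical addition to the module: pre-create the log group with a retention period
resource "aws_cloudwatch_log_group" "runner" {
  # Must match the default name CodeBuild uses, which is what allow_cloudwatch permits
  name              = "/aws/codebuild/${local.codebuild_project_name}"
  retention_in_days = 30
}
```

If a build has already run, the log group will already exist and would need importing into state before this applies cleanly.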

allow_codeconnection

This policy gives CodeBuild permission to use the GitHub CodeConnection we set up earlier. The name of the connection is passed in as a variable to the module. The aws_codestarconnections_connection data source then looks up the CodeConnection details, of which we use the ARN.

As of the time of writing, due to the service rename, we configure both the old service name prefix and the new service name prefix as part of the Resource in the IAM policy. This ensures we are covered permission-wise; however, at some point this won’t be required anymore.
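To make the two prefixes concrete, here is a small worked sketch of what that replace() call evaluates to. The ARN, region and account ID are made-up placeholders, and the local names are hypothetical:

```hcl
locals {
  # Made-up ARN using the old service prefix
  example_old_arn = "arn:aws:codestar-connections:eu-west-2:111122223333:connection/example-connection-id"

  # replace() swaps the literal substring, giving:
  # "arn:aws:codeconnections:eu-west-2:111122223333:connection/example-connection-id"
  example_new_arn = replace(local.example_old_arn, "codestar-connections", "codeconnections")
}
```

Both values end up in the Resource list, so the policy matches whichever form of the ARN the service evaluates.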

We are also using precondition here, which uses that data source to do a check ensuring the specified CodeConnection is actually “Available”.

Now we can call our module using the below:

resource "aws_codestarconnections_connection" "github" {
  name          = "kl-github-connection"
  provider_type = "GitHub"
}

module "self_hosted_runner" {
  source = "./modules/terraform-aws-codebuild-action-runner"

  repo                   = "kieran-lowe/cb-selfhosted-runners"
  github_connection_name = aws_codestarconnections_connection.github.name
}

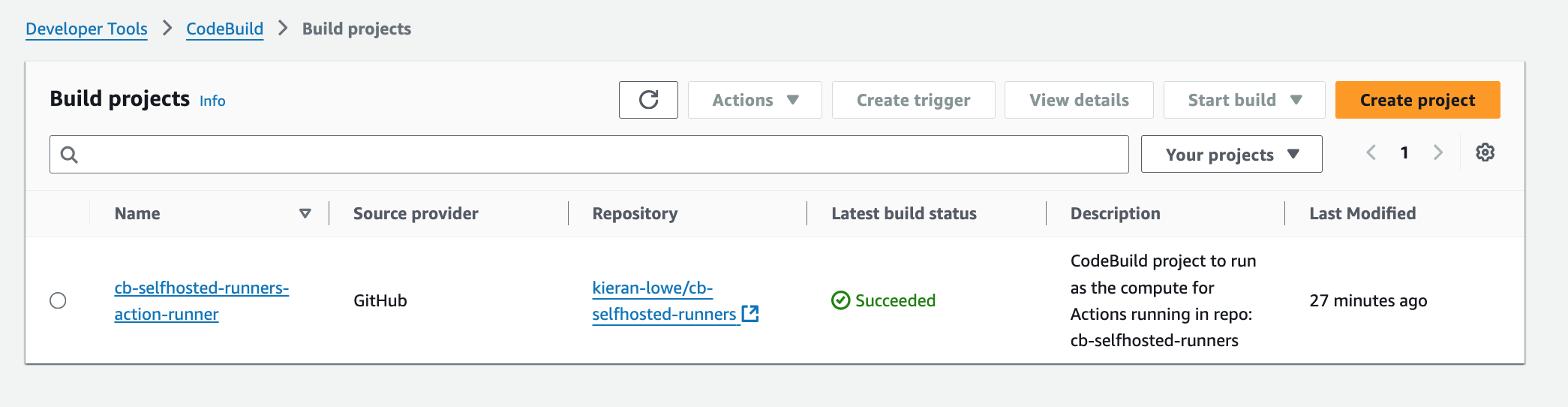

and now we have our project!
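The module call above relies on the ARM defaults. As a sketch of overriding them through the environment variable, the call below swaps in x86 on a medium instance. It would replace the module call above rather than sit alongside it, since both would try to create a project with the same name. The compute type and image are values I believe are valid at the time of writing, so check the CodeBuild documentation before relying on them:

```hcl
module "self_hosted_runner" {
  source = "./modules/terraform-aws-codebuild-action-runner"

  repo                   = "kieran-lowe/cb-selfhosted-runners"
  github_connection_name = aws_codestarconnections_connection.github.name

  # Overrides the module default (ARM, small) with x86 on a medium instance
  environment = {
    compute_type = "BUILD_GENERAL1_MEDIUM"
    type         = "LINUX_CONTAINER"
    image        = "aws/codebuild/amazonlinux2-x86_64-standard:5.0"
  }
}
```

Because environment is typed as an object, all three attributes have to be supplied together when overriding.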

Creating the GitHub Action Workflow

Now that we have the project ready to go, we need to create the workflow to use it! For our project to be started successfully, we need to configure our workflow as described in the AWS documentation: https://docs.aws.amazon.com/codebuild/latest/userguide/action-runner.html#sample-github-action-runners-update-yaml

Let’s create a basic workflow using the above:

name: Hello World
on: workflow_dispatch
jobs:
  hello_world:
    runs-on: codebuild-cb-selfhosted-runners-action-runner-${{ github.run_id }}-${{ github.run_attempt }}
    steps:
      - name: Hello World
        run: echo "Hello World"

We use workflow_dispatch so we can invoke it manually, and we use runs-on to specify which project to use for our runner. The AWS docs tell us to use the following convention:

runs-on: codebuild-<project_name>-${{ github.run_id }}-${{ github.run_attempt }}

It’s important the project name is exactly how it shows in the AWS Console. Otherwise the workflow in GitHub could hang and would need to be cancelled.
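One way to avoid typos here is to have the module hand the exact name back to you. A minimal sketch, assuming a new outputs.tf file in the module; the file and both output names are hypothetical and not part of the module as written above:

```hcl
# modules/terraform-aws-codebuild-action-runner/outputs.tf (hypothetical file)
output "codebuild_project_name" {
  description = "Name of the CodeBuild project, exactly as it appears in the AWS Console"
  value       = aws_codebuild_project.project.name
}

output "runs_on_prefix" {
  description = "Static prefix of the runs-on label for workflows targeting this project"
  value       = "codebuild-${aws_codebuild_project.project.name}"
}
```

From the root configuration you could then reference module.self_hosted_runner.runs_on_prefix and copy the value into your workflow, appending the run ID and run attempt as per the convention above.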

Running the GitHub Action Workflow

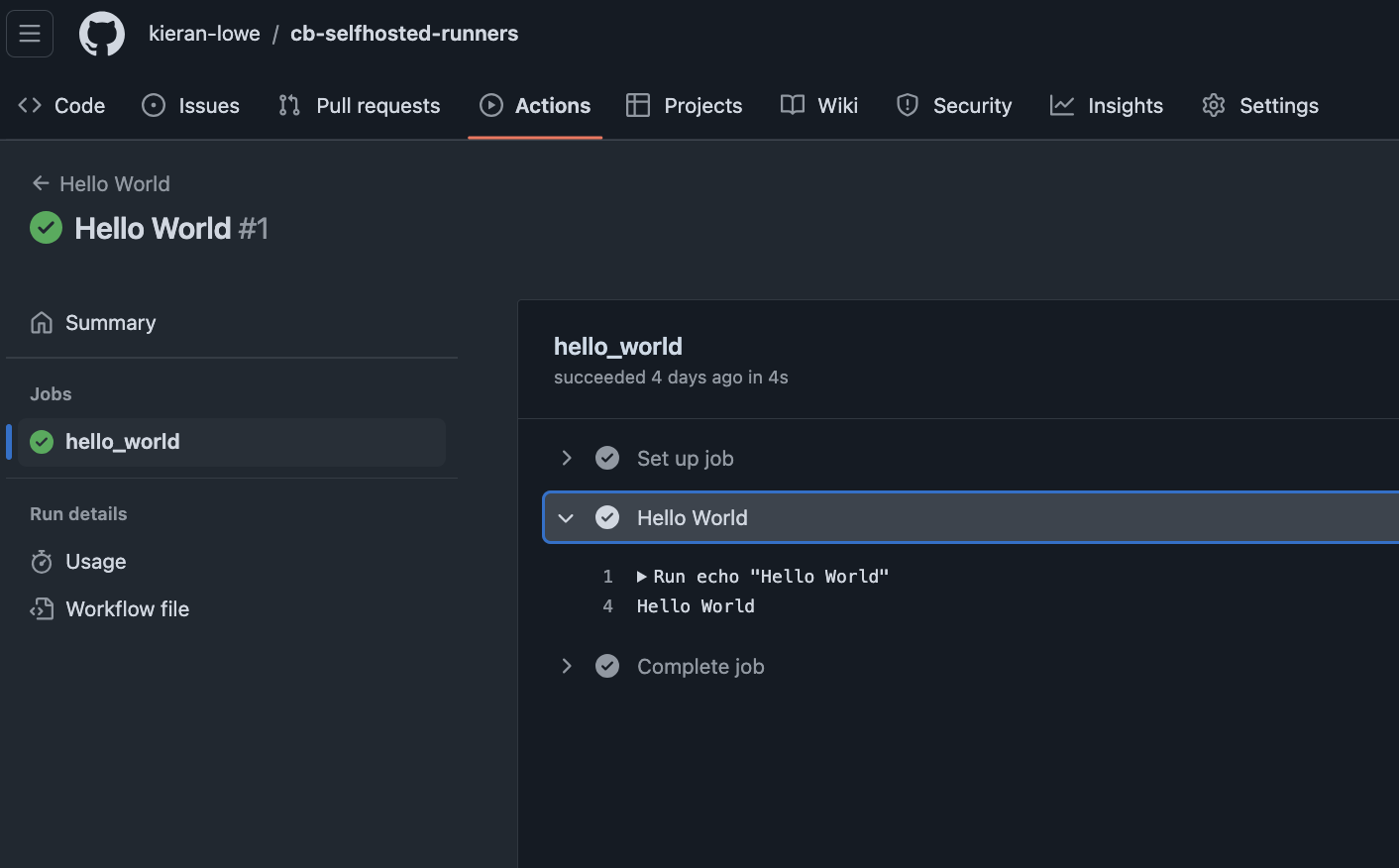

Now we have our workflow defined, we can run it!

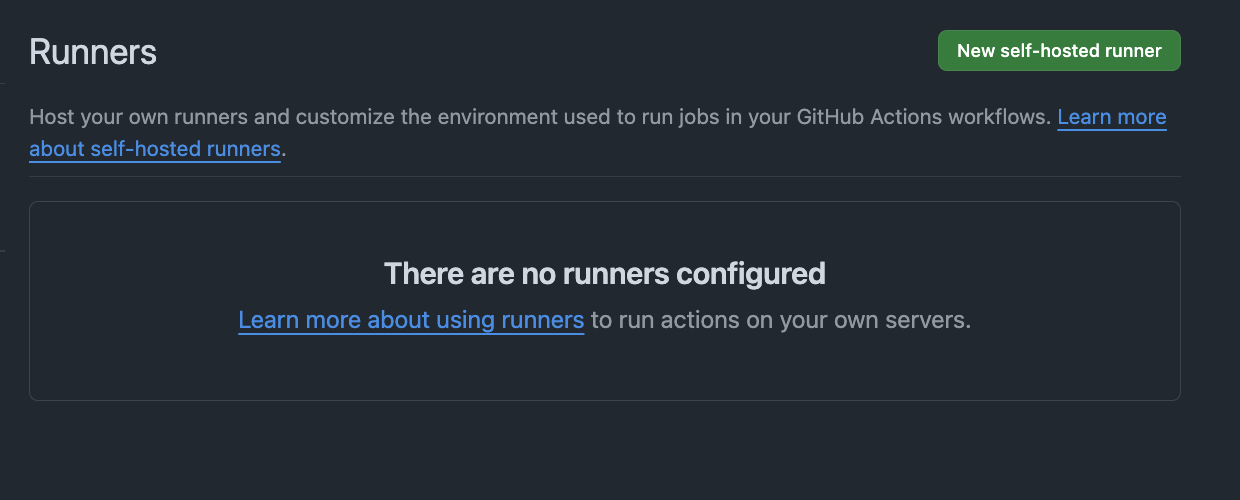

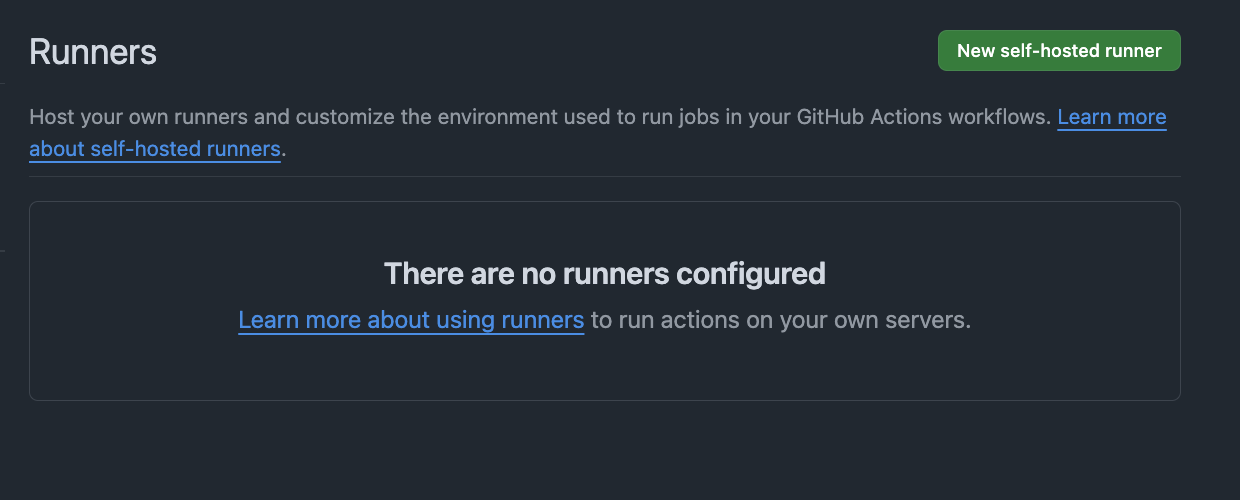

It worked! But how did it work behind the scenes? As the CodeBuild project starts, we can see there are no runners configured for the repo:

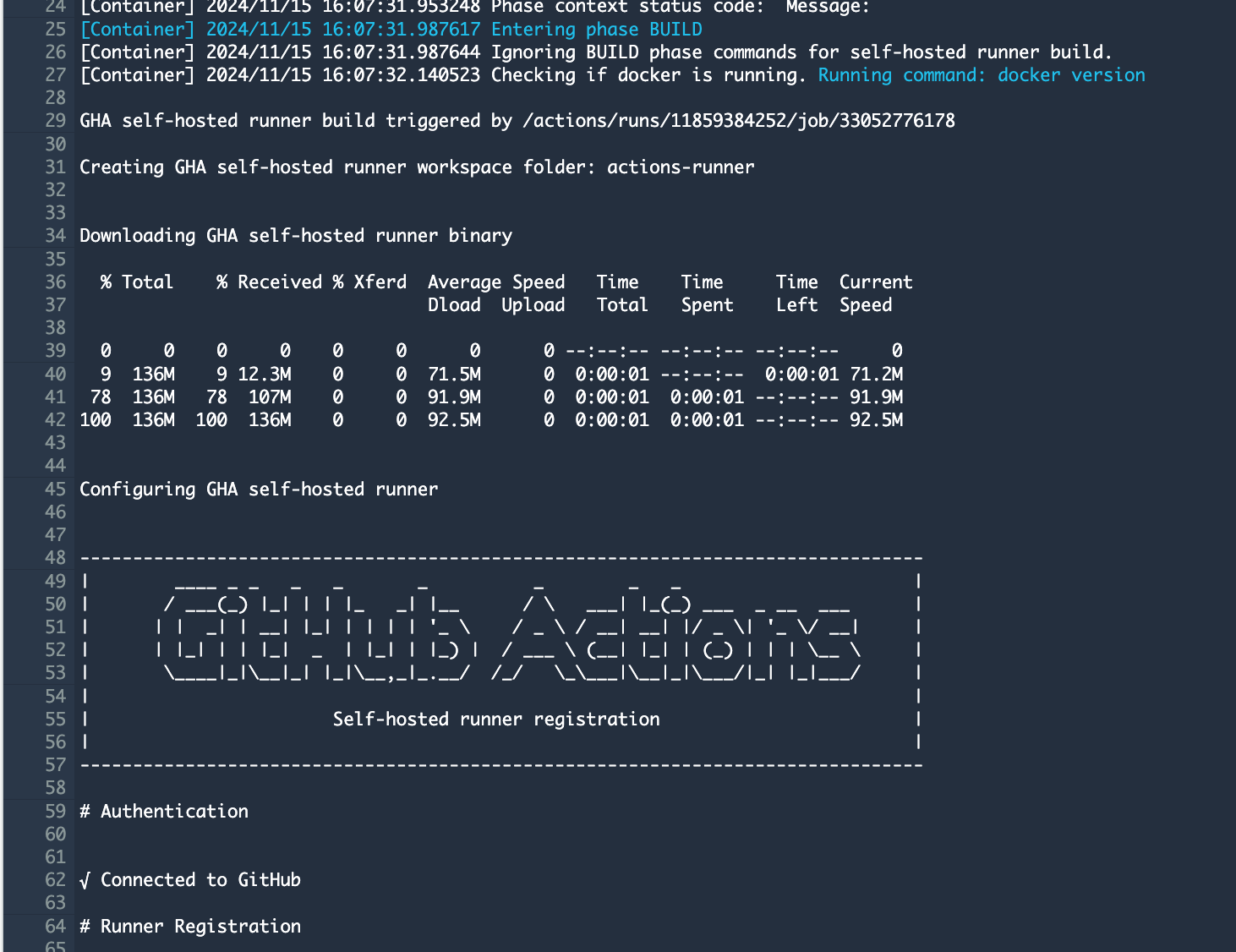

As the project starts, we can see in the build output it’s starting the process to register the runner with the repository:

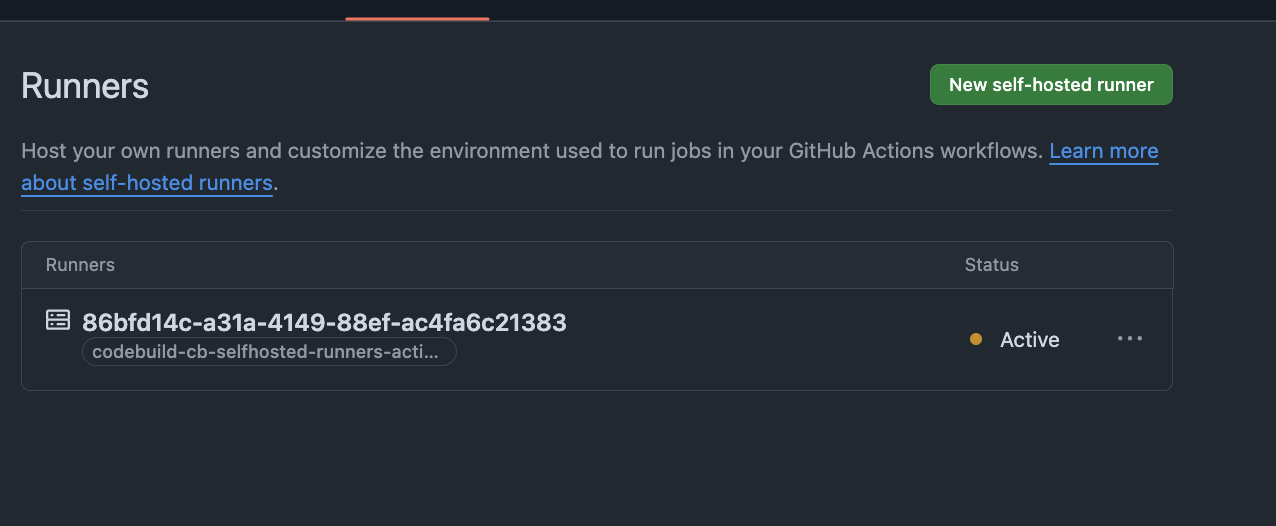

Now if we quickly check our runners in the repo again, we can see our CodeBuild project:

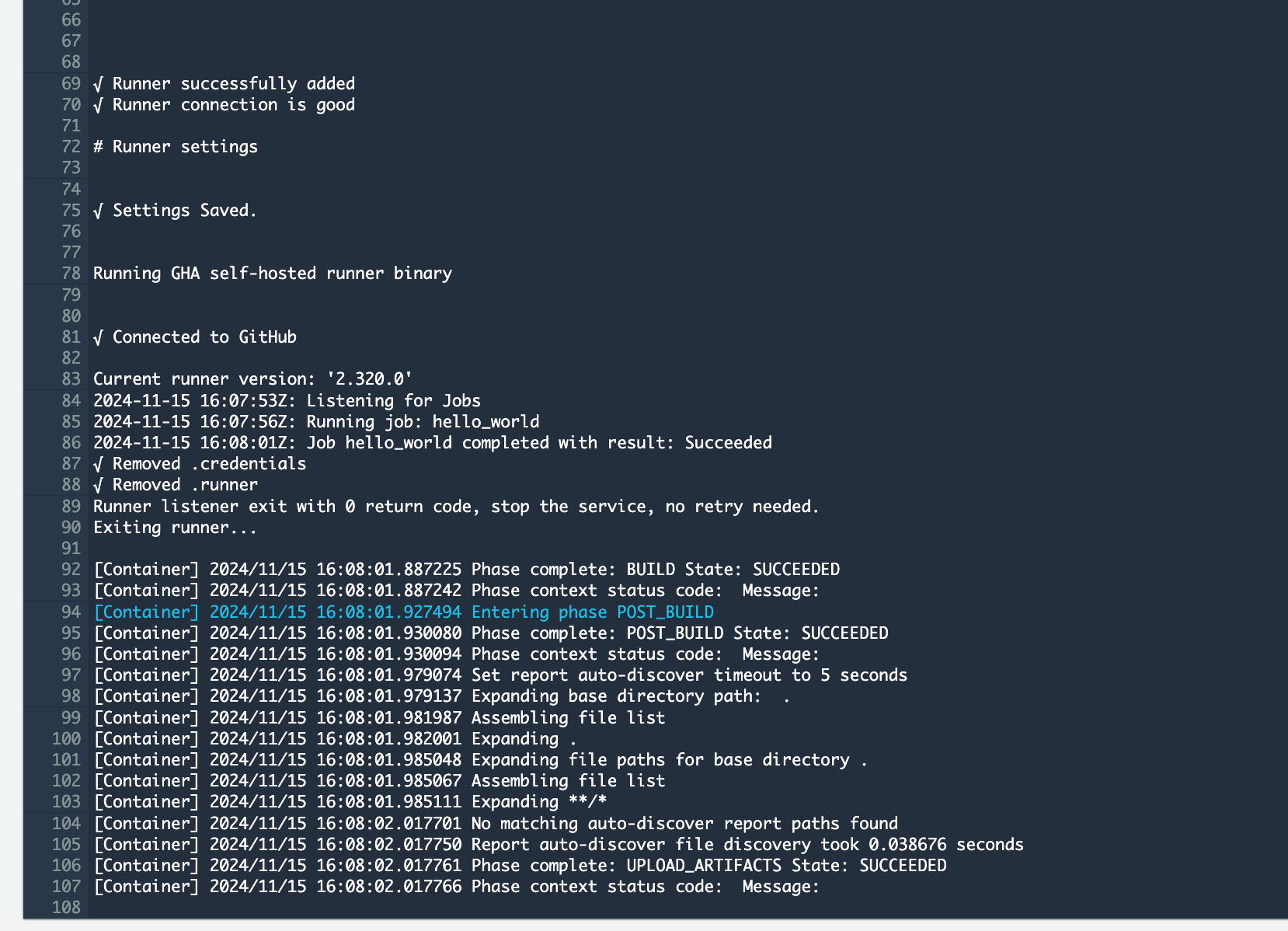

As the CodeBuild project starts, we can see it’s running our hello_world job we defined in our workflow:

If we check the runners for the repo again, we can see it has unregistered itself and no runners are available:

This is the “just-in-time” runner concept I mentioned towards the start of this article. A runner is registered only if a job matching our runs-on configuration is present. It runs the job and then unregisters itself.

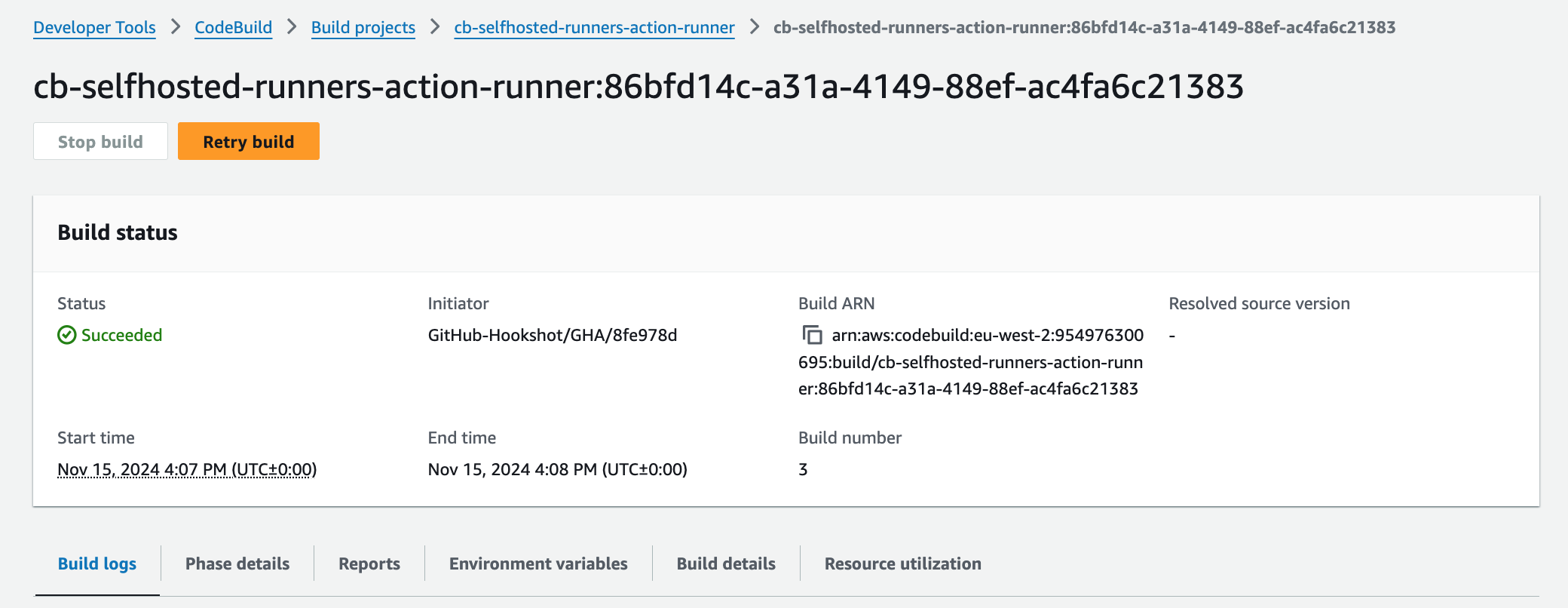

If we check our CodeBuild project, you can see our build has completed successfully!

Our allow_cloudwatch policy is correct!

There we go, you have successfully used AWS CodeBuild and CodeConnections to run self-hosted GitHub Action runners for your GitHub Action Workflows and repositories!

We can now run tofu destroy to clean up our resources!

Further Customisation

Overriding the Compute

I mentioned earlier that changing the variables for the compute size is not the only way to override environment settings. If your workflow requires a lot of compute, you don’t want to have to update the variables each time! Here is where it gets pretty cool: you can pass additional labels in your runs-on configuration to override the default compute settings on the CodeBuild project.

For this example, let’s say I need to run a workflow using x86 on Amazon Linux 2023 with 8 vCPUs and 16 GiB of memory. I define it using the below:

name: Hello World
on: workflow_dispatch
jobs:
  Hello-World-Job:
    runs-on:
      - codebuild-cb-selfhosted-runners-action-runner-${{ github.run_id }}-${{ github.run_attempt }}
      - image:linux-5.0
      - instance-size:large
    steps:
      - run: echo "Hello World!"

How about running Ubuntu 22.04 LTS x86 for an AI/Machine Learning based workflow using 32 vCPUs, 255 GiB of memory and 3.7TB SSD:

name: Hello World
on: workflow_dispatch
jobs:
  Hello-World-Job:
    runs-on:
      - codebuild-cb-selfhosted-runners-action-runner-${{ github.run_id }}-${{ github.run_attempt }}
      - image:ubuntu-7.0
      - instance-size:gpu_large
    steps:
      - run: echo "Hello World!"

What about running a workflow needing to use multiple operating systems? Such as an application build:

name: Hello World
on: workflow_dispatch
jobs:
  Hello-World-Job:
    runs-on:
      - codebuild-cb-selfhosted-runners-action-runner-${{ github.run_id }}-${{ github.run_attempt }}
      - image:${{ matrix.os }}
      - instance-size:${{ matrix.size }}
    strategy:
      matrix:
        include:
          - os: arm-3.0
            size: small
          - os: al2-5.0
            size: large
          - os: linux-5.0
            size: medium
    steps:
      - run: echo "Hello World!"

Want to use AWS Lambda for near instantaneous start up times for running something Python based?

name: Pip Freeze
on: workflow_dispatch
jobs:
  Hello-World-Job:
    runs-on:
      - codebuild-cb-selfhosted-runners-action-runner-${{ github.run_id }}-${{ github.run_attempt }}
      - image:arm-lambda-python3.12
      - instance-size:1GB
    steps:
      - run: pip freeze > requirements.txt

For all supported images, versions and sizes, please check out the AWS CodeBuild documentation.

Using the CodeBuild BuildSpec

Now CodeBuild uses something called a “buildspec” file. This basically tells CodeBuild what it needs to do and when. If you’re using CodeBuild for anything else, you will be using a buildspec file. When using CodeBuild for GitHub Action Runners, this file is completely ignored, however you can actually tell your workflow to use it:

name: Hello World
on: workflow_dispatch
jobs:
  Hello-World-Job:
    runs-on:
      - codebuild-cb-selfhosted-runners-action-runner-${{ github.run_id }}-${{ github.run_attempt }}
      - buildspec-override:true
    steps:
      - run: echo "Hello World!"

Setting this allows the build to run buildspec commands in the INSTALL, PRE_BUILD, and POST_BUILD phases.

Using CodeBuild Fleets

Do you use any CodeBuild fleets? You can use them here too!

name: Hello World
on: workflow_dispatch
jobs:
  Hello-World-Job:
    runs-on:
      - codebuild-cb-selfhosted-runners-action-runner-${{ github.run_id }}-${{ github.run_attempt }}
      - fleet:my-cb-fleet
    steps:
      - run: echo "Hello World!"

Using Organisation Level Runners

What we’ve configured above is using a CodeBuild Project per repository, this gives us the following benefits:

Logs are related to the same workflow

Easier IAM separation if you are needing to lock down who can see what logs in CloudWatch

Better adherence to the principle of least privilege

However, you can configure a CodeBuild project using organisation and global level GitHub web-hooks too! If you are interested you can read more on: GitHub global and organization webhooks - AWS CodeBuild
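As a rough, untested sketch of what an organisation-level web-hook might look like, the below adds a scope_config block to the web-hook resource. It assumes your AWS provider version supports scope_config on aws_codebuild_webhook, that the GitHub App has access at the organisation level, and that the project’s source location is set to CODEBUILD_DEFAULT_WEBHOOK_SOURCE_LOCATION as the AWS documentation describes for these web-hooks. The resource name org_webhook and the organisation name my-github-org are placeholders:

```hcl
# Hypothetical organisation-level variant of the web-hook from the module
resource "aws_codebuild_webhook" "org_webhook" {
  project_name = aws_codebuild_project.project.name
  build_type   = "BUILD"

  filter_group {
    filter {
      pattern = "WORKFLOW_JOB_QUEUED"
      type    = "EVENT"
    }
  }

  # Assumption: scope_config is available in your AWS provider version
  scope_config {
    name  = "my-github-org" # placeholder organisation name
    scope = "GITHUB_ORGANIZATION"
  }
}
```

Treat this as a starting point only, and check the provider and CodeBuild documentation for the exact requirements before using it.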
