Automating AWS Cost Reporting with Lambda and SNS

Amit Maurya


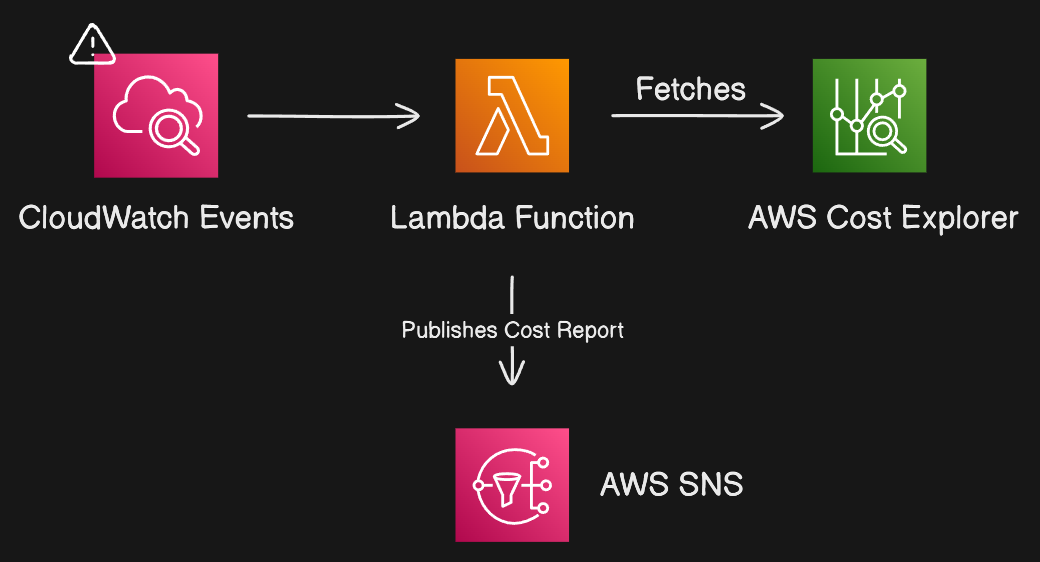

In this article, we are going to build a Lambda function with Python code that generates an AWS cost report for a specific region and sends it to your email through an SNS topic. We then automate this Lambda function with a CloudWatch Events rule that runs on a schedule on the 1st day of every month.

Before we get started, do read my latest blogs:

End-to-End DevOps for a Golang Web App: Docker, EKS, AWS CI/CD

Deploying Your Website on AWS S3 with Terraform

Learn How to Deploy Scalable 3-Tier Applications with AWS ECS

Overview of AWS Cost Management

In today's computing world, many companies and startups are moving from traditional servers to virtual servers in the cloud to reduce their server setup costs, and moving to AWS can reduce those costs by up to 50%.

But as DevOps or Cloud Engineers, we also have to monitor the cost of our AWS resources and keep in mind how to use them efficiently, so that unnecessary costs can be cut.

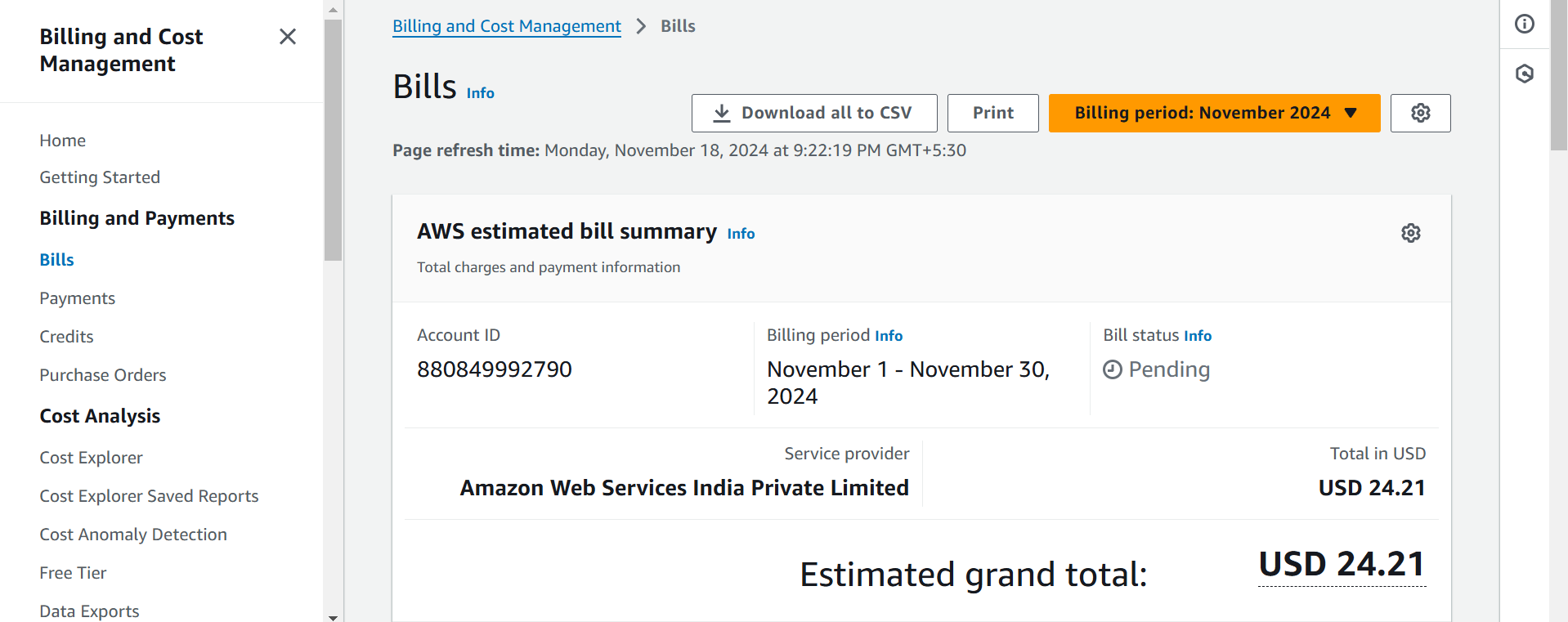

To see the cost of your AWS resources, navigate to the Billing and Cost Management dashboard (https://us-east-1.console.aws.amazon.com/billing/home#/bills).

Prerequisites

Set up an IAM (Identity and Access Management) role so that Lambda can communicate with other AWS services.

Write Python code for a specific region that fetches the cost and sends the report to email through SNS (Simple Notification Service).

Lambda Function

SNS (Simple Notification Service) Topic

CloudWatch Events (EventBridge) scheduled rule

Implementation

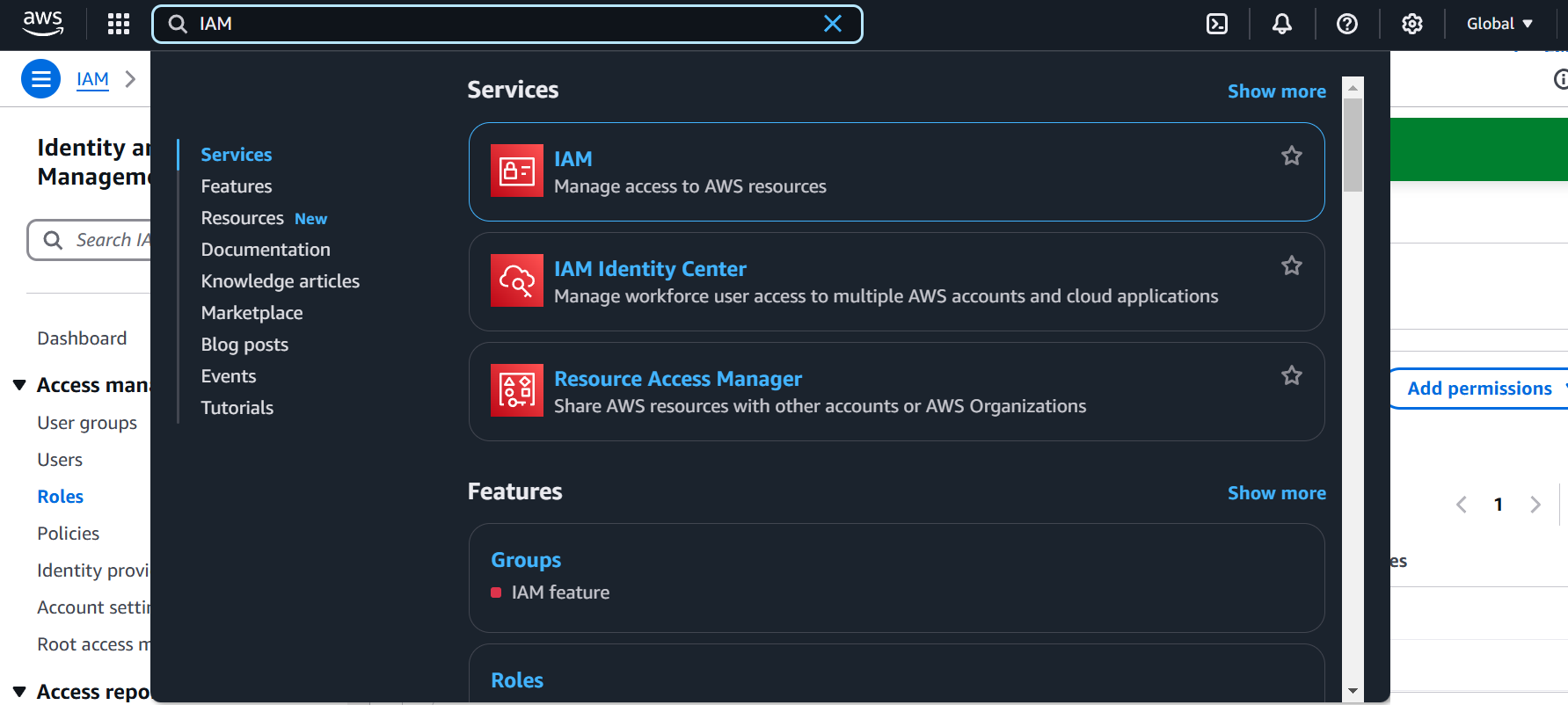

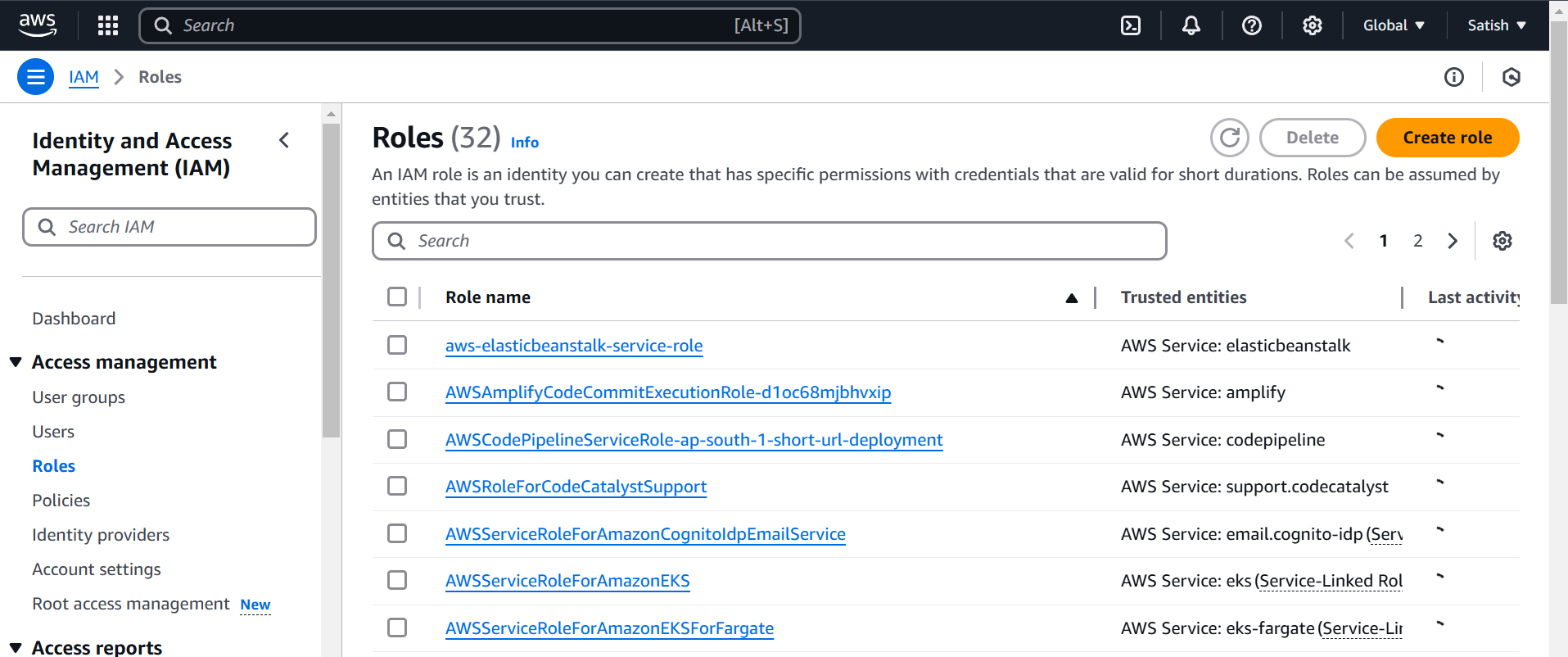

Create an IAM role for the Lambda function so that it can call Cost Explorer and SNS. Navigate to IAM, then select Roles.

Click Create role, select Lambda as the use case, then click Next. Now add the required permissions.
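Choosing Lambda as the use case makes the console attach a trust policy that lets the Lambda service assume the role. The sketch below writes that standard trust policy out as a Python dict so you can see what the console generates; the variable name `LAMBDA_TRUST_POLICY` is illustrative, not something the console exposes.

```python
import json

# Trust policy allowing the Lambda service to assume this role.
# The IAM console generates an equivalent document when "Lambda" is the use case.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

print(json.dumps(LAMBDA_TRUST_POLICY, indent=2))
```

If you script the role instead of using the console, this JSON string is what you would pass as `AssumeRolePolicyDocument` to IAM's `create_role` call.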

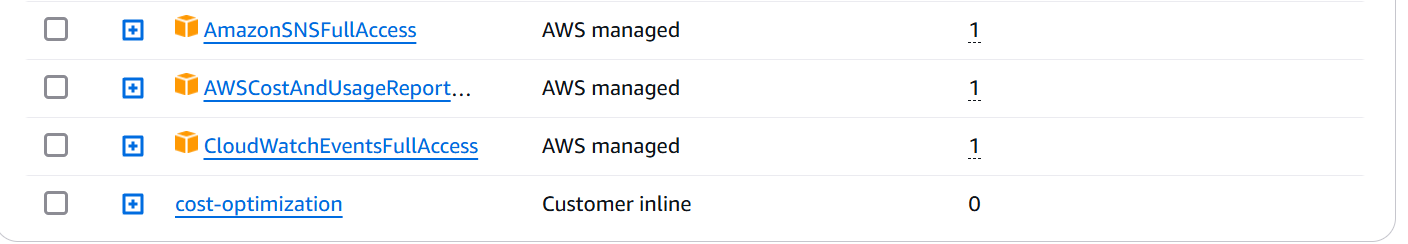

AmazonSNSFullAccess: SNS full access

AWSCostAndUsageReportAutomationPolicy: Cost Explorer access

CloudWatchEventsFullAccess: CloudWatch Events access to create rules

Then also add an inline policy that grants the Cost Explorer actions (such as ce:GetCostAndUsage), and name it cost-optimization:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ce:GetCostAndUsage",
        "ce:GetCostForecast",
        "ce:GetDimensionValues",
        "ce:GetUsageForecast"
      ],
      "Resource": "*"
    }
  ]
}
```
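AmazonSNSFullAccess grants much more than this function needs, since the code only calls `sns:Publish` on one topic and `ce:GetCostAndUsage`. As an optional alternative, here is a minimal sketch, assuming you prefer a narrower inline policy, that builds a document allowing only those two actions. `build_least_privilege_policy` is a hypothetical helper and the account ID in the example ARN is a placeholder. If you drop the managed policies, you would typically still attach AWSLambdaBasicExecutionRole so the function's `print()` output reaches CloudWatch Logs.

```python
import json


def build_least_privilege_policy(topic_arn):
    """Hypothetical helper: inline policy limited to what the cost report Lambda calls."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Cost Explorer queries are granted on Resource "*"
                "Effect": "Allow",
                "Action": ["ce:GetCostAndUsage"],
                "Resource": "*",
            },
            {
                # Publishing is limited to the single report topic
                "Effect": "Allow",
                "Action": ["sns:Publish"],
                "Resource": topic_arn,
            },
        ],
    }


# Placeholder account ID; use your own topic ARN
policy = build_least_privilege_policy("arn:aws:sns:ap-south-1:123456789012:CostOptimization")
print(json.dumps(policy, indent=2))
```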

Now that the IAM role is created, set up the AWS Lambda function to run our Python code.

AWS Lambda is a serverless compute service that lets us run our code without managing servers. It scales automatically with the number of requests, and you pay only for the compute time you use, which makes it cost-efficient. It follows an event-driven architecture: Lambda can be triggered by many kinds of events, for example from CloudWatch Events rules or other AWS services.

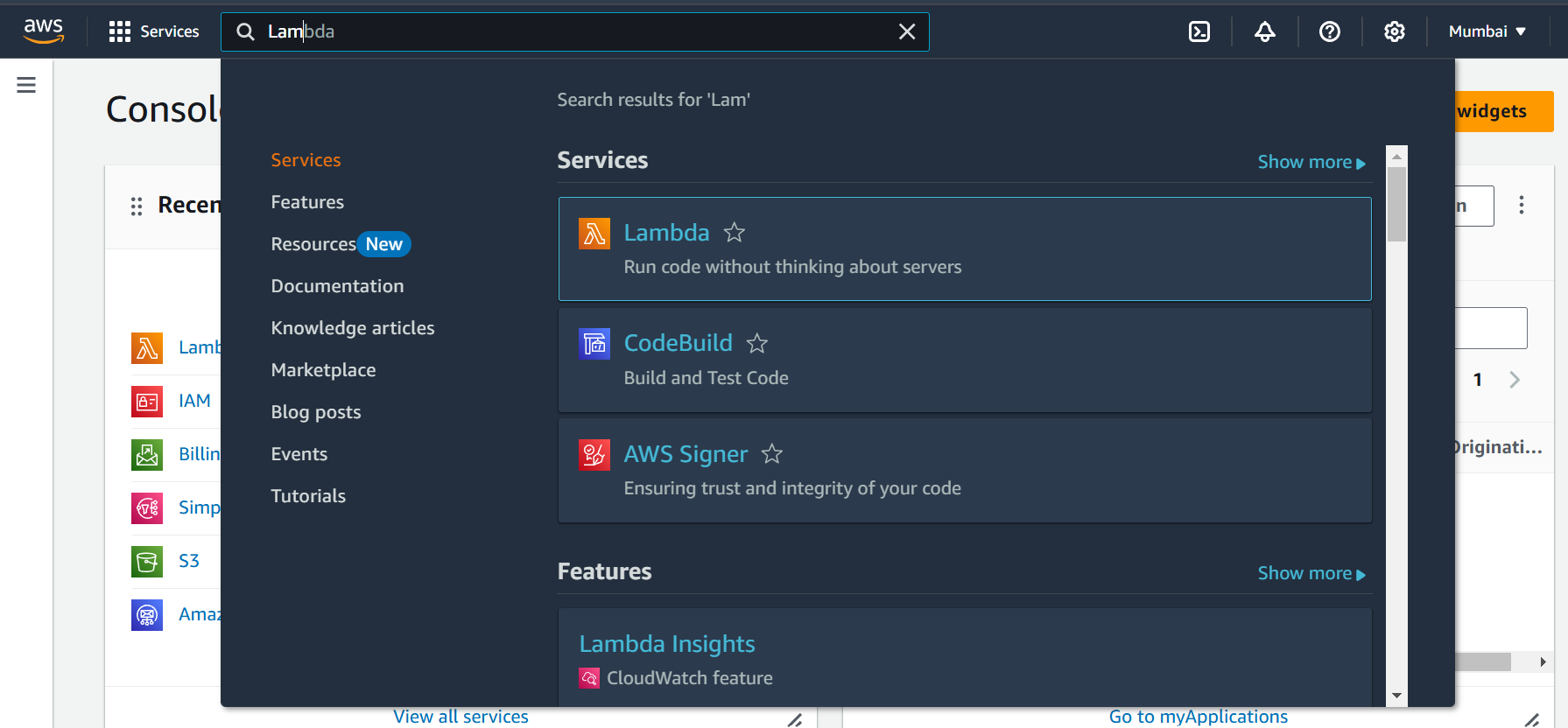

```python
import datetime

import boto3
from botocore.exceptions import ClientError

# Region whose costs are broken out separately (ap-south-1 = Mumbai)
REPORT_REGION = "ap-south-1"

# Initialize AWS Cost Explorer and SNS clients.
# Cost Explorer's API endpoint is in us-east-1; SNS must use the topic's region.
cost_explorer = boto3.client("ce", region_name="us-east-1")
sns = boto3.client("sns", region_name="ap-south-1")

# SNS topic ARN
sns_topic_arn = "arn:aws:sns:ap-south-1:880849992790:CostOptimization"  # Replace with your actual SNS topic ARN


def get_report_period(today):
    """Return (start, end) dates for Cost Explorer, where End is exclusive.

    When the scheduled rule fires on the 1st, a month-to-date window would be
    empty (Start == End is rejected), so report the whole previous month instead.
    """
    if today.day == 1:
        start_date = (today - datetime.timedelta(days=1)).replace(day=1)
    else:
        start_date = today.replace(day=1)
    return start_date, today


def format_breakdown(response, title):
    """Build a per-service cost listing and total from a get_cost_and_usage response."""
    total_cost = 0.0
    details = f"{title}\n\n"
    for result in response["ResultsByTime"]:
        for group in result["Groups"]:
            service_name = group["Keys"][0]
            cost_amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            total_cost += cost_amount
            details += f"{service_name}: ${cost_amount:.2f}\n"
    return details, total_cost


def lambda_handler(event, context):
    start_date, end_date = get_report_period(datetime.date.today())
    time_period = {
        "Start": start_date.strftime("%Y-%m-%d"),
        "End": end_date.strftime("%Y-%m-%d"),  # exclusive
    }
    period_label = f"{time_period['Start']} to {time_period['End']} (end date exclusive)"
    group_by = [{"Type": "DIMENSION", "Key": "SERVICE"}]

    try:
        # Query Cost Explorer for the cost breakdown per service in REPORT_REGION
        region_response = cost_explorer.get_cost_and_usage(
            TimePeriod=time_period,
            Granularity="MONTHLY",
            Metrics=["UnblendedCost"],
            GroupBy=group_by,
            Filter={"Dimensions": {"Key": "REGION", "Values": [REPORT_REGION]}},
        )
        # Query Cost Explorer for the cost breakdown per service across all regions
        all_regions_response = cost_explorer.get_cost_and_usage(
            TimePeriod=time_period,
            Granularity="MONTHLY",
            Metrics=["UnblendedCost"],
            GroupBy=group_by,
        )

        # Prepare the breakdown of costs for the selected region
        region_details, region_total = format_breakdown(
            region_response, f"Cost Breakdown for {REPORT_REGION}, {period_label}:"
        )
        region_details += f"\nTotal Cost for {REPORT_REGION}: ${region_total:.2f}\n\n"

        # Prepare the breakdown of costs for all regions
        all_details, all_total = format_breakdown(
            all_regions_response, f"Cost Breakdown for All Regions, {period_label}:"
        )
        all_details += f"\nTotal Cost for All Regions: ${all_total:.2f}"

        # Combine both reports into one message and publish it to SNS
        sns.publish(
            TopicArn=sns_topic_arn,
            Subject="AWS Cost Breakdown Report",
            Message=region_details + all_details,
        )
        return {
            "statusCode": 200,
            "body": f"Cost report for {REPORT_REGION} and all regions sent successfully via SNS!",
        }
    except ClientError as e:
        print(f"Error: {e}")
        return {"statusCode": 500, "body": f"Failed to send cost report: {e}"}
```

Search for AWS Lambda in the AWS Management Console search bar.

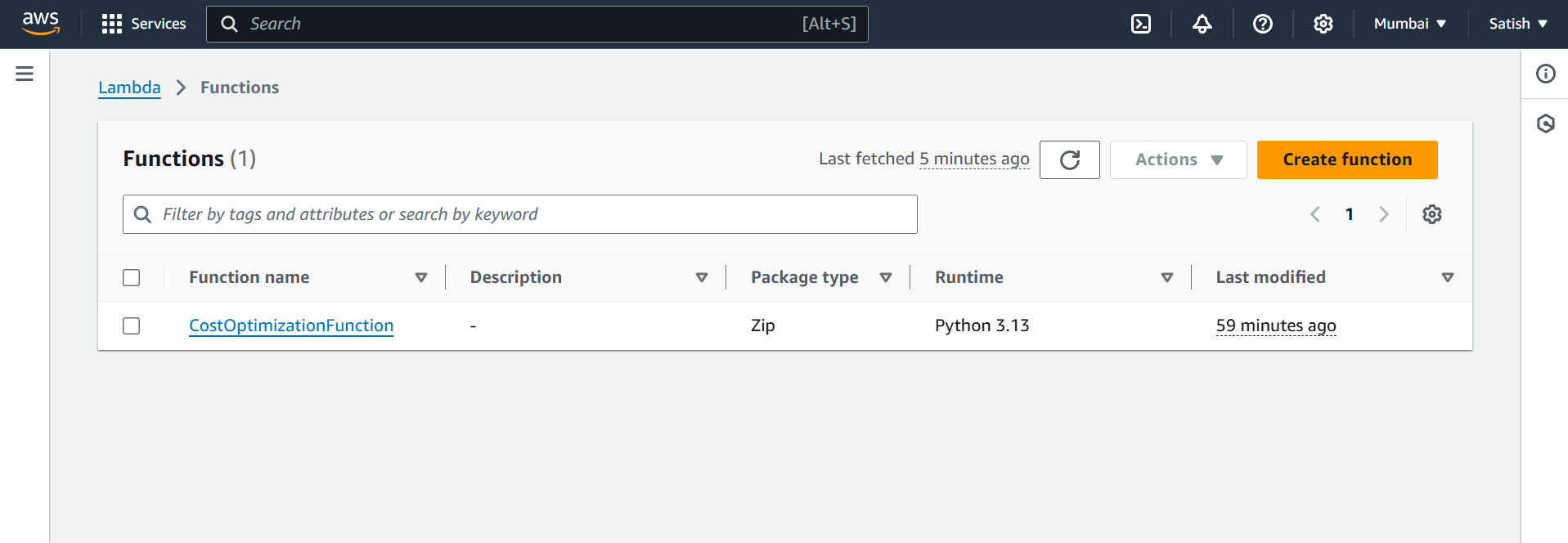

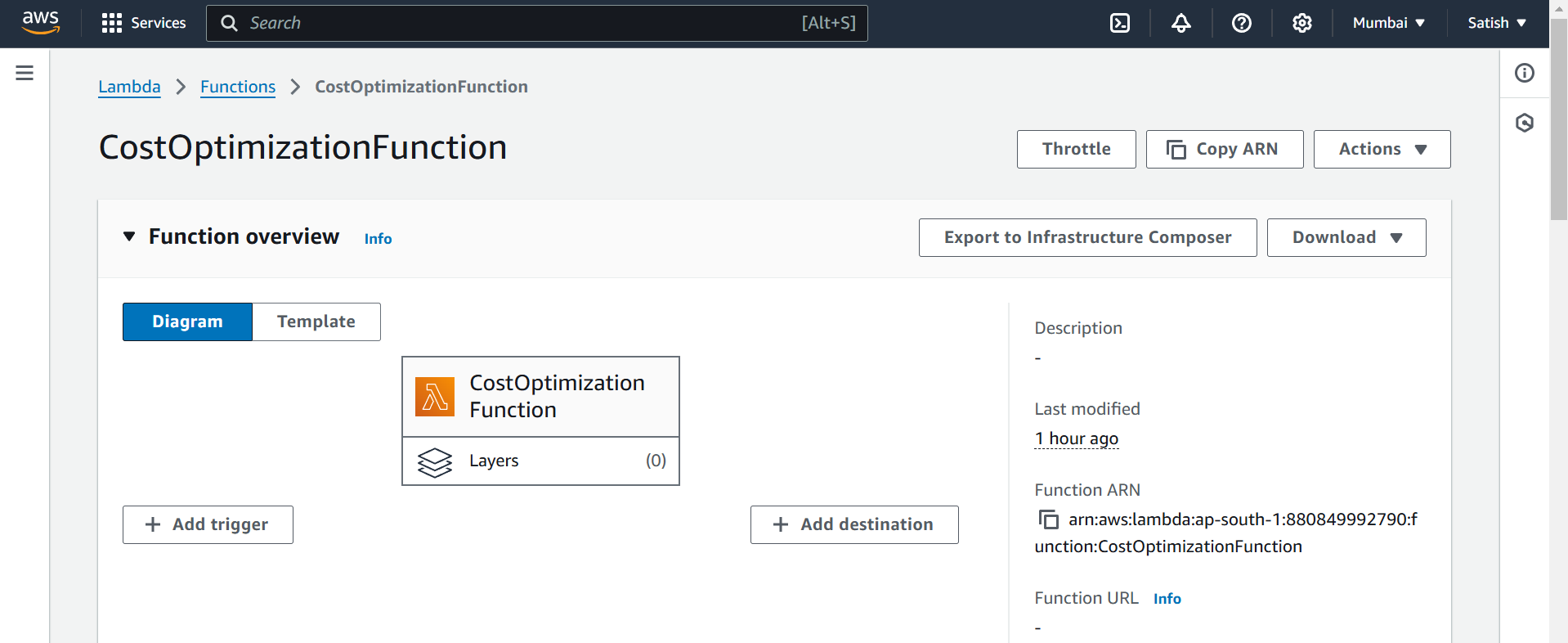

Click Create function, select Author from scratch, and name the function CostOptimizationFunction. Select a Python 3.x runtime, then open the Permissions section, select Use an existing role, choose the IAM role you created, and finally click Create function.

Scroll down, paste the source code into the editor, then click Test to check whether the program works properly.
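Before spending test runs in Lambda, the report-parsing logic can be checked locally against a hand-made dict shaped like a `get_cost_and_usage` response (`ResultsByTime`, then `Groups`, each with `Keys` and `Metrics`). This is a sketch: `summarize_costs` is a hypothetical stand-alone helper that mirrors the per-service loop in the handler, and the amounts are made-up sample values (Cost Explorer returns them as strings).

```python
def summarize_costs(response):
    """Sum UnblendedCost per service from a get_cost_and_usage-shaped dict."""
    costs = {}
    for result in response["ResultsByTime"]:
        for group in result["Groups"]:
            service = group["Keys"][0]
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            costs[service] = costs.get(service, 0.0) + amount
    return costs


# Made-up sample in the shape Cost Explorer returns
sample_response = {
    "ResultsByTime": [
        {
            "TimePeriod": {"Start": "2024-05-01", "End": "2024-06-01"},
            "Groups": [
                {
                    "Keys": ["Amazon Elastic Compute Cloud - Compute"],
                    "Metrics": {"UnblendedCost": {"Amount": "12.25", "Unit": "USD"}},
                },
                {
                    "Keys": ["Amazon Simple Storage Service"],
                    "Metrics": {"UnblendedCost": {"Amount": "0.5", "Unit": "USD"}},
                },
            ],
        }
    ]
}

costs = summarize_costs(sample_response)
for service, amount in costs.items():
    print(f"{service}: ${amount:.2f}")
print(f"Total: ${sum(costs.values()):.2f}")  # Total: $12.75
```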

But before running the program, navigate to Amazon SNS (Simple Notification Service) to create the topic and the subscription through which the report will be sent to your email.

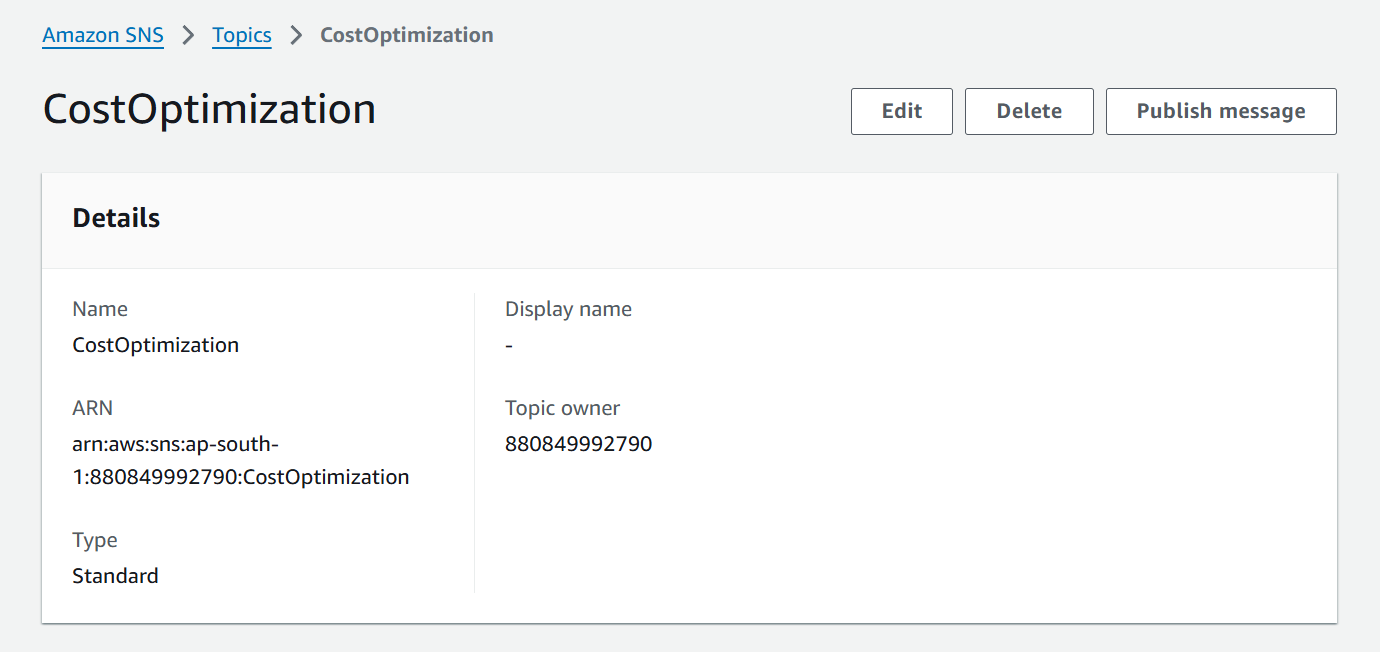

Name the topic

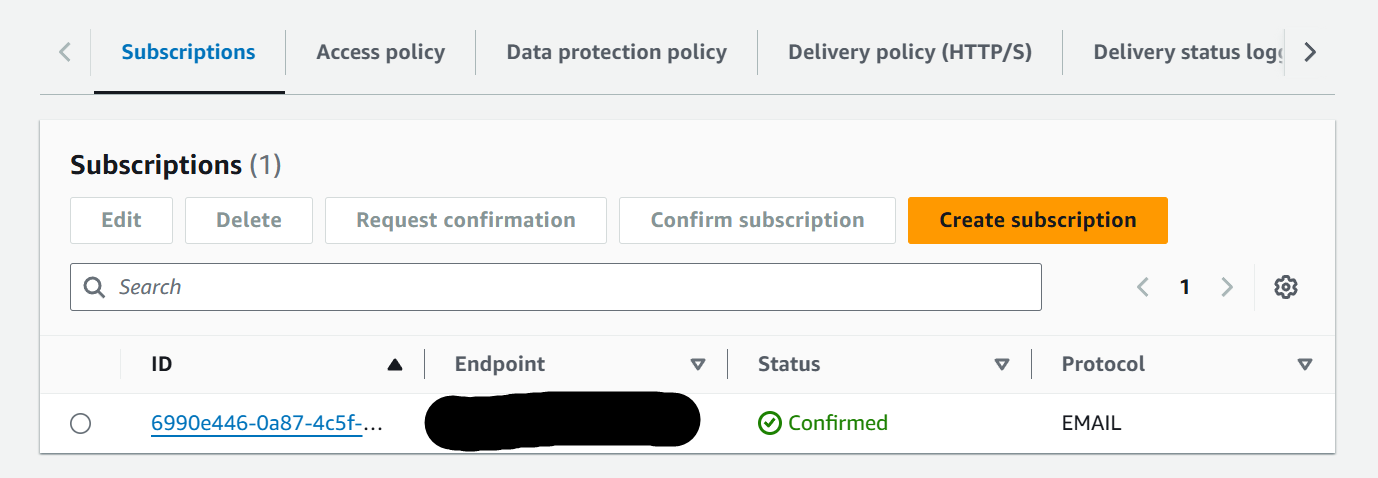

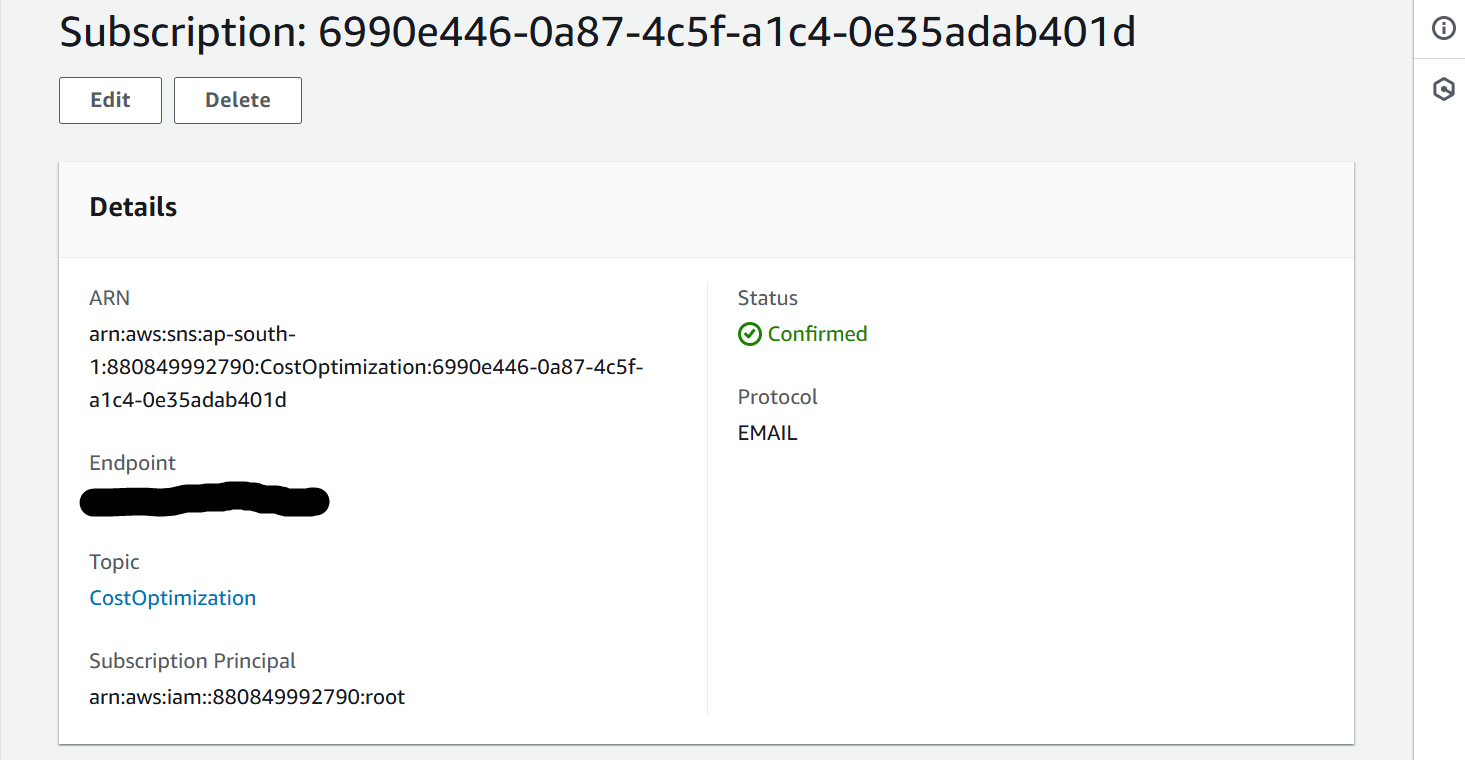

CostOptimizationand create the Subscription where you will verify the Email.

Click Create subscription, select "Email" as the protocol, and enter your email address as the endpoint.

Now, go to your email inbox to confirm the subscription endpoint. Click the confirmation link provided in the email, and the subscription will be confirmed.
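The same topic and email subscription can also be created from code. The sketch below assumes you pass in a boto3 SNS client yourself; `create_cost_report_topic` is a hypothetical helper name, while `create_topic` and `subscribe` are the real SNS client calls. An email subscription stays pending until the recipient clicks the confirmation link, exactly as in the console flow.

```python
def create_cost_report_topic(sns_client, topic_name, email):
    """Create (or reuse) an SNS topic and request an email subscription.

    Returns the topic ARN and the SubscriptionArn field, which for email is
    "pending confirmation" until the link in the confirmation email is clicked.
    """
    # create_topic is idempotent: an existing topic with this name is returned
    topic_arn = sns_client.create_topic(Name=topic_name)["TopicArn"]
    response = sns_client.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=email)
    return topic_arn, response.get("SubscriptionArn")
```

For example: `create_cost_report_topic(boto3.client("sns", region_name="ap-south-1"), "CostOptimization", "you@example.com")`, where the address is a placeholder and the region should match the one in the Lambda code's topic ARN.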

Now, copy the SNS Topic ARN and paste it into Lambda function in variable

sns_topic_arnand write the region from which you want the cost like I want the cost of region Mumbai I had written the region (ap-south-1).Create the Tests name

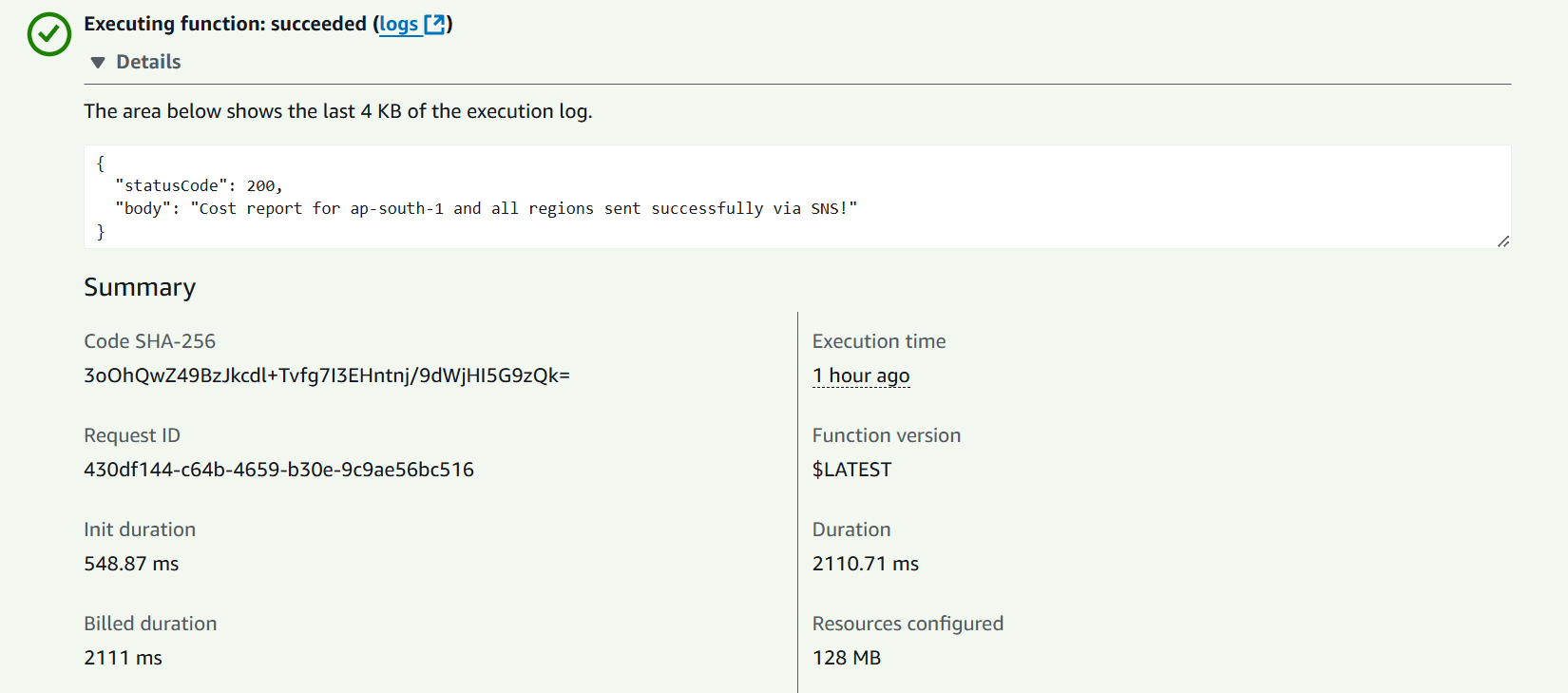

CostOptimizationFuncthen click on Test. It will deploy the template Hello World to deploy the changes of our Python program click on Deploy it will give the output in JSON.

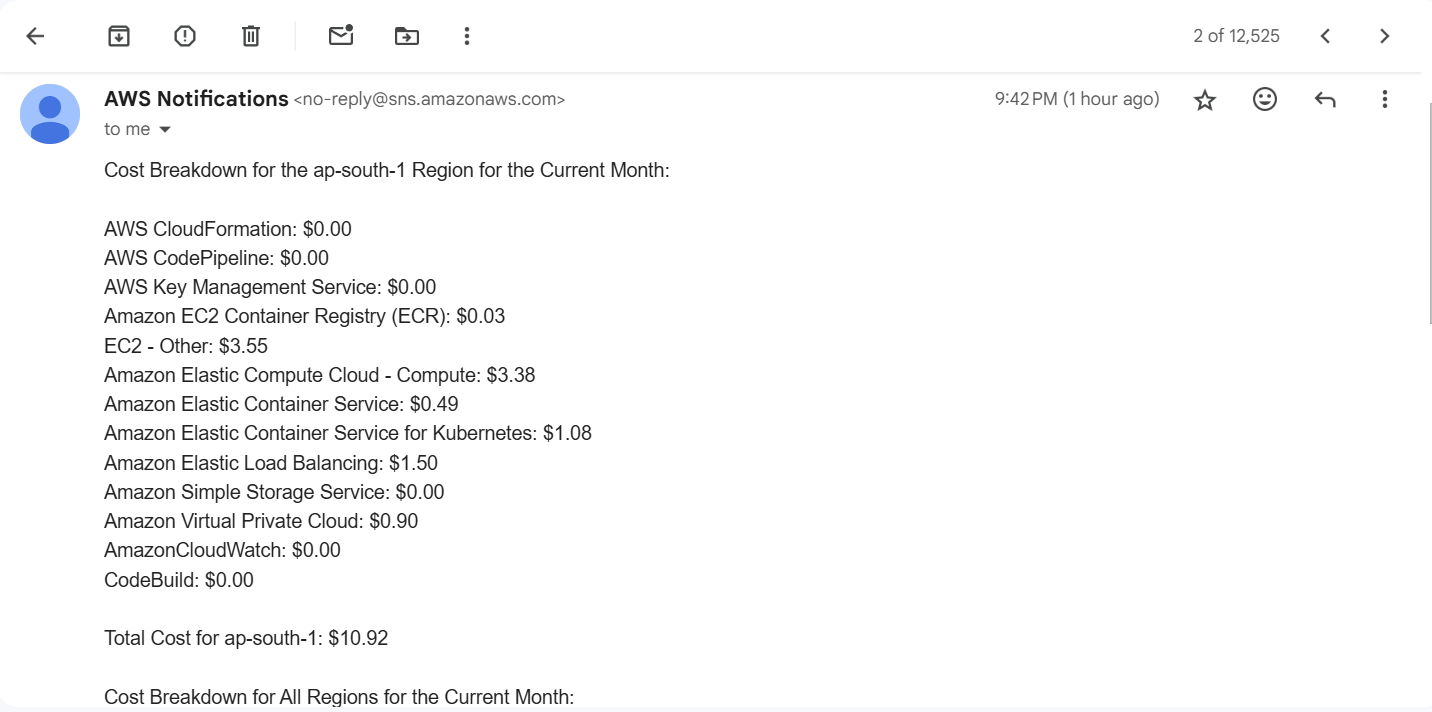

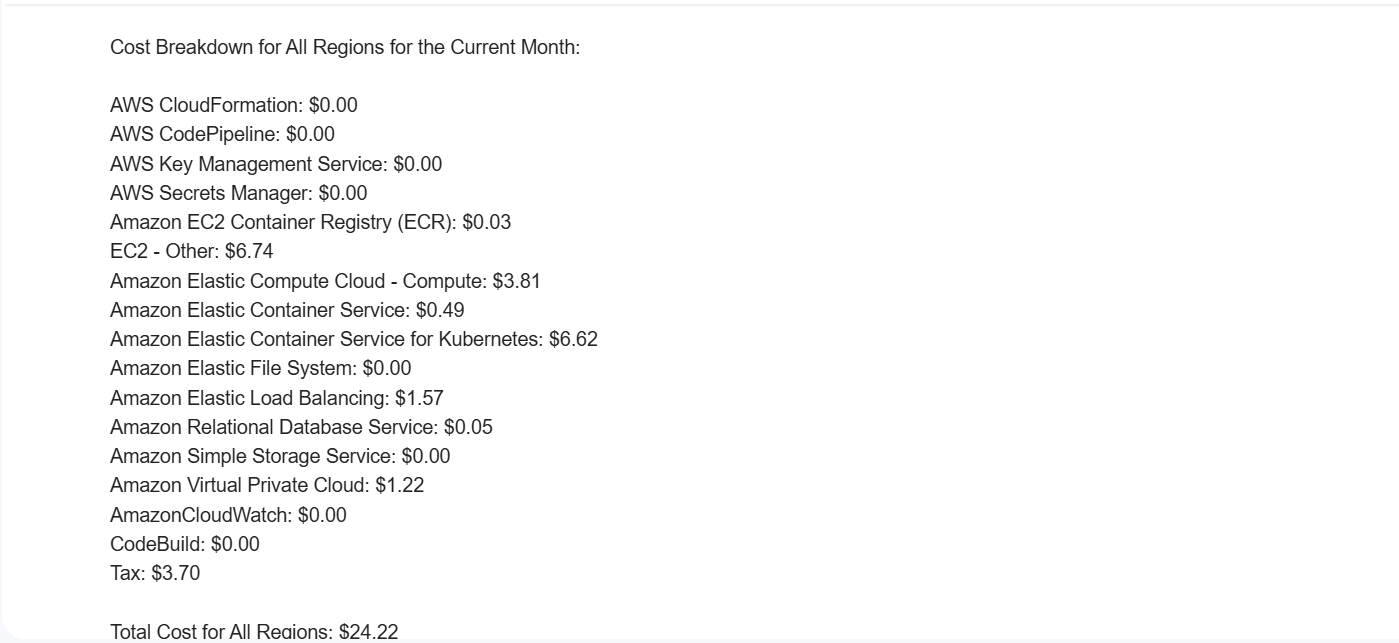

As we can see, the 200 status code means our program executed successfully. Now check your email inbox: you will receive the cost report for your specific region and for all regions.

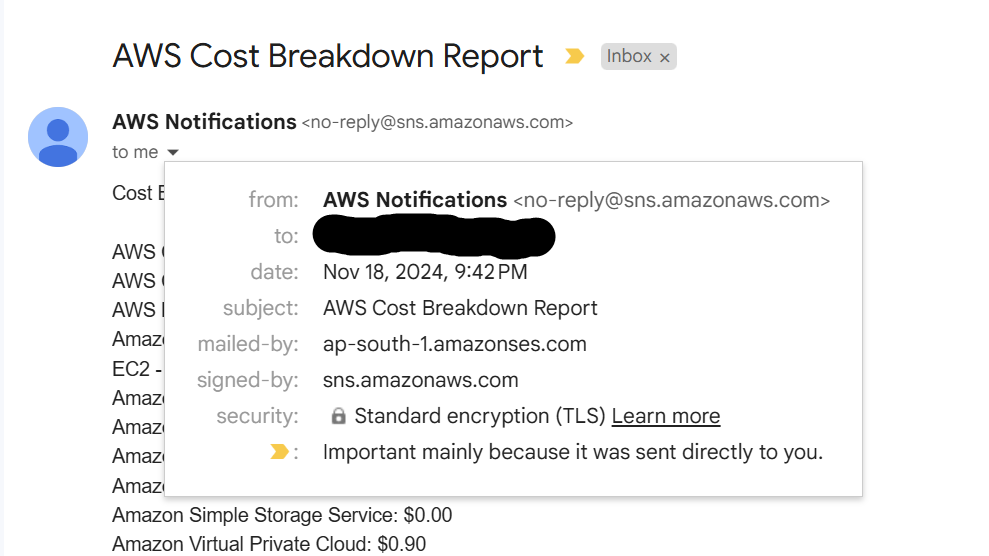

As we can see in the security details, the email is mailed by and signed by AWS.

Next, we will automate this so that we don't have to run the Lambda function manually. We will integrate CloudWatch with Lambda on a schedule of the 1st day of every month, so that the Lambda function is triggered automatically and the cost report is sent to email.
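When a scheduled rule fires, EventBridge invokes the function with a Scheduled Event payload whose `time` field records when the rule ran. Cost Explorer treats the End date as exclusive and rejects a window whose Start equals its End, so a month-to-date window computed on the 1st is empty. The minimal sketch below, assuming you want the reporting window tied to the event's `time` rather than to the current date, picks the previous full month on the 1st and month-to-date otherwise. `report_period_from_event` is a hypothetical helper, and the sample event uses placeholder account, ID, and rule values.

```python
import datetime

# Shape of an EventBridge Scheduled Event (placeholder values)
SAMPLE_SCHEDULED_EVENT = {
    "version": "0",
    "id": "00000000-0000-0000-0000-000000000000",
    "detail-type": "Scheduled Event",
    "source": "aws.events",
    "account": "123456789012",
    "time": "2024-06-01T00:00:00Z",
    "region": "ap-south-1",
    "resources": ["arn:aws:events:ap-south-1:123456789012:rule/CostOptimizationRule"],
    "detail": {},
}


def report_period_from_event(event):
    """Return (Start, End) ISO dates for Cost Explorer; End is exclusive."""
    if event.get("source") == "aws.events" and "time" in event:
        # Use the time the rule fired, so retries report the same window
        run_date = datetime.datetime.strptime(event["time"], "%Y-%m-%dT%H:%M:%SZ").date()
    else:
        # Manual test runs fall back to the current UTC date
        run_date = datetime.datetime.now(datetime.timezone.utc).date()
    if run_date.day == 1:
        # On the 1st the month-to-date window is empty: cover the previous month
        start = (run_date - datetime.timedelta(days=1)).replace(day=1)
    else:
        start = run_date.replace(day=1)
    return start.isoformat(), run_date.isoformat()


print(report_period_from_event(SAMPLE_SCHEDULED_EVENT))  # ('2024-05-01', '2024-06-01')
```

Inside the handler, the two strings would be passed as the `Start` and `End` of `TimePeriod`.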

Navigate to Amazon CloudWatch using the AWS Management Console search bar.

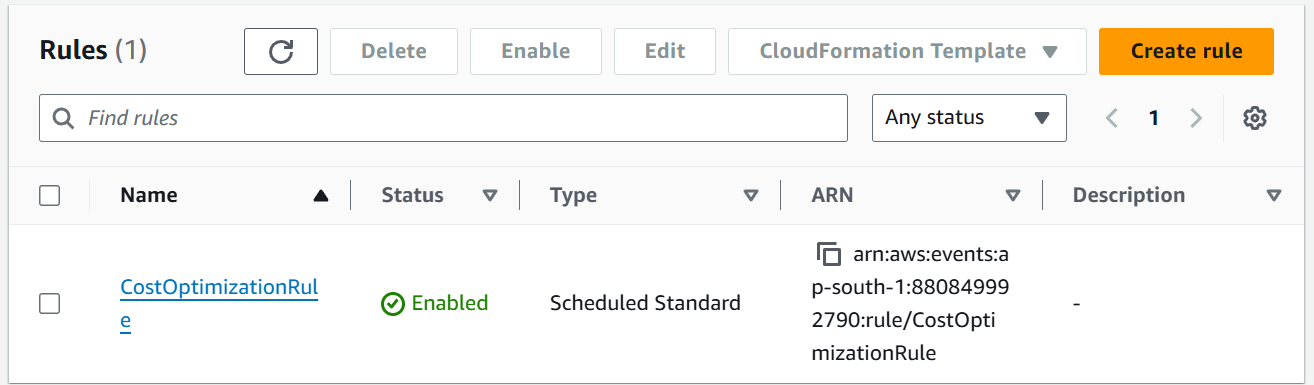

Now, in the left hand side we have to create Rules under Events section. Click on Rules, then select default EventBus name it

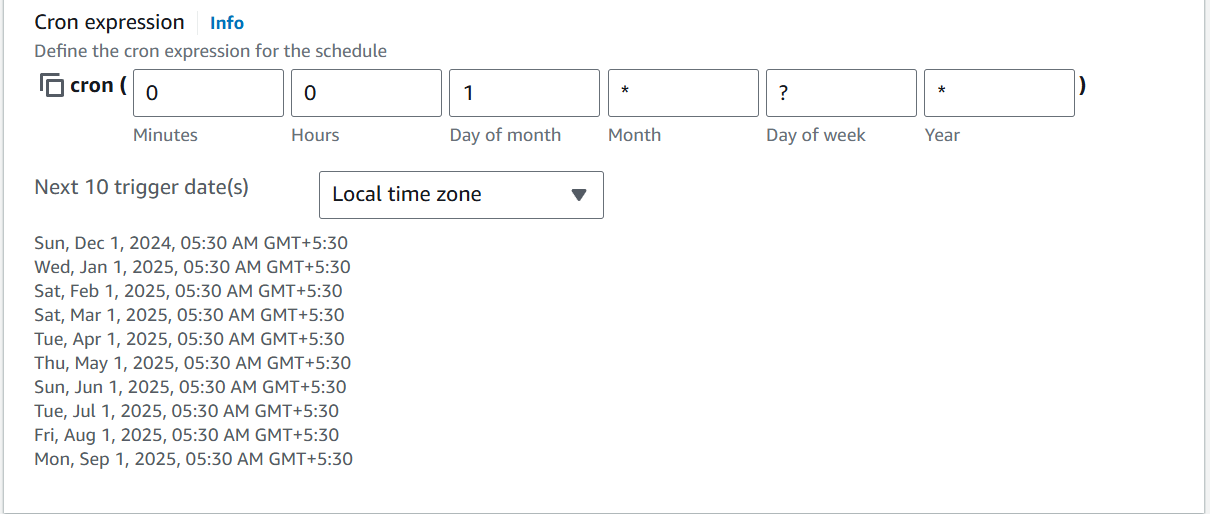

CostOptimizationRulethen select the Rule Type Schedule.Click on Continue to Create Rule fill the CRON expression (0 0 1 \ ? *)*

and select Local Time Zone. You will see the Next trigger dates means the Lambda will trigger on which date.
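EventBridge rule cron expressions have six fields (minutes, hours, day-of-month, month, day-of-week, year), and one of day-of-month or day-of-week must be `?`. The sketch below reproduces the console's Next trigger dates preview for this one expression only, `cron(0 0 1 * ? *)`; it is not a general cron parser, and `next_monthly_triggers` is a hypothetical helper.

```python
import datetime


def next_monthly_triggers(after, count=3):
    """Next `count` firings of cron(0 0 1 * ? *): 00:00 UTC on day 1 of each month.

    `after` must be a timezone-aware datetime; firings equal to it are skipped.
    """
    triggers = []
    year, month = after.year, after.month
    while len(triggers) < count:
        candidate = datetime.datetime(year, month, 1, tzinfo=datetime.timezone.utc)
        if candidate > after:
            triggers.append(candidate)
        # Advance to the first day of the following month
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    return triggers


now = datetime.datetime(2024, 11, 15, 9, 30, tzinfo=datetime.timezone.utc)
for trigger in next_monthly_triggers(now):
    print(trigger.isoformat())
# 2024-12-01T00:00:00+00:00
# 2025-01-01T00:00:00+00:00
# 2025-02-01T00:00:00+00:00
```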

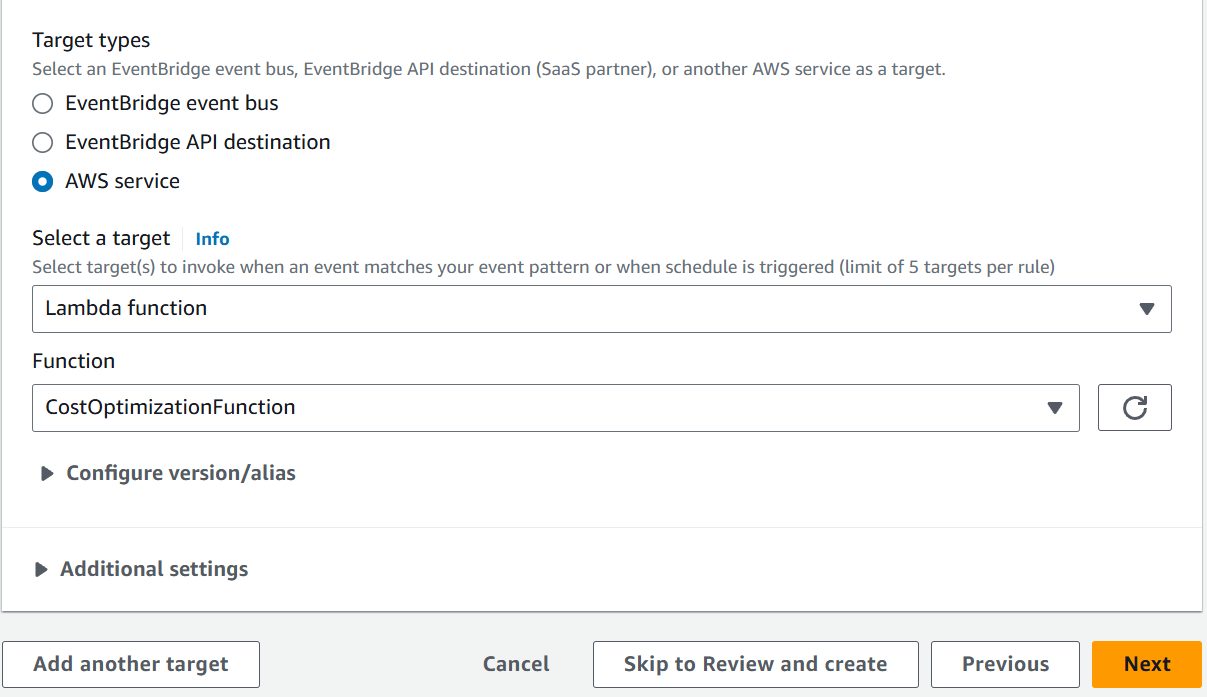

Click Next and select AWS service as the target type, then choose the service you want to trigger. In our case it is a Lambda function, so select Lambda function and then select our function, CostOptimizationFunction.

Click on Next and create the Rule.
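For reference, the console steps above correspond roughly to three API calls. This is a sketch that assumes you pass in boto3 `events` and `lambda` clients yourself; `schedule_monthly_report`, the target `Id`, and the `StatementId` are hypothetical names, while `put_rule`, `put_targets`, and `add_permission` are real client methods. The console adds the Lambda invoke permission for EventBridge automatically; from code you have to add it yourself.

```python
def schedule_monthly_report(events_client, lambda_client, function_arn,
                            rule_name="CostOptimizationRule"):
    """Create a monthly EventBridge rule that invokes the cost report Lambda."""
    # 00:00 UTC on the 1st day of every month
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression="cron(0 0 1 * ? *)",
        State="ENABLED",
    )["RuleArn"]

    # Point the rule at the Lambda function
    response = events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "cost-report-lambda", "Arn": function_arn}],
    )
    if response.get("FailedEntryCount", 0):
        raise RuntimeError(f"Failed to add target: {response['FailedEntries']}")

    # Allow EventBridge (only this rule) to invoke the function
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    return rule_arn
```

Running it twice will fail at `add_permission`, because a statement with the same `StatementId` already exists; `put_rule` and `put_targets` simply update the existing rule and target.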

Conclusion

In this article, we learned how to create a Lambda function that generates a cost report and sends it to email, and a CloudWatch rule that triggers it automatically. In upcoming blogs, you will see many more AWS services being created and automated with Terraform. Stay tuned for the next blog!

GitHub Code : https://github.com/amitmaurya07

Twitter : x.com/amitmau07

LinkedIn : linkedin.com/in/amit-maurya07

If you have any questions, drop me a message on LinkedIn or Twitter.


Written by

Amit Maurya


DevOps Enthusiast, Learning Linux