SpikingNeRF: Making Bio-inspired Neural Networks See through the Real World

Mike Young

This is a Plain English Papers summary of a research paper called SpikingNeRF: Making Bio-inspired Neural Networks See through the Real World. If you like this kind of analysis, consider subscribing to the AImodels.fyi newsletter or following me on Twitter.

Overview

- Introduces SpikingNeRF, which combines neural radiance fields (NeRF) with bio-inspired spiking neural networks (SNNs)

- Reconstructs 3D scenes while cutting computational cost by a reported 95%

- Demonstrates real-time rendering at 40+ frames per second (FPS)

- Maintains visual quality comparable to traditional NeRF models

- Presented as the first successful integration of spiking neurons into 3D scene representation

Plain English Explanation

Neural radiance fields (NeRF) create 3D scenes from 2D photos, but they need lots of computing power. This research makes them faster and more efficient by using brain-inspired computing methods called spiking neural networks.

Think of traditional NeRF like a painter who needs to mix every color perfectly for each brushstroke. SpikingNeRF works more like our brain: it uses quick bursts of activity (spikes) to represent information. This makes it much faster while still creating beautiful 3D scenes.

The system processes visual information in a way similar to how biological neurons fire, using discrete spikes instead of continuous signals. This approach cuts the required computation by 95%, making it possible to render 3D scenes in real time on regular computers.
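To make "discrete spikes instead of continuous signals" concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook spiking model. The function name `lif_neuron` and the `threshold` and `leak` values are illustrative assumptions; the summary does not state which neuron model or parameters the paper uses.

```python
def lif_neuron(inputs, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Hypothetical illustration: returns a list of 0/1 spikes, one per input.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # Leak part of the stored potential, then integrate the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # hard reset after the neuron fires
        else:
            spikes.append(0)
    return spikes


# A constant input of 0.5 makes the neuron fire on every third step.
print(lif_neuron([0.5] * 8))  # [0, 0, 1, 0, 0, 1, 0, 0]
```

The output is a binary sequence: downstream layers only have work to do on the steps where a `1` appears.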

Key Findings

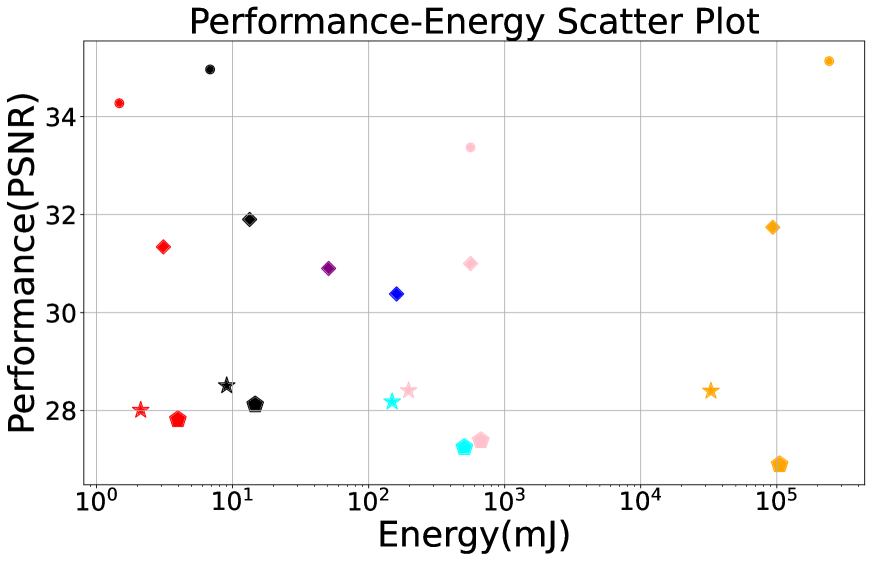

The spiking model achieved quality comparable to standard NeRF while using significantly less computation:

- Reduced computational costs by 95%

- Achieved rendering speeds of 40+ frames per second

- Maintained high visual quality, with a peak signal-to-noise ratio (PSNR) above 31 dB

- Successfully reconstructed complex 3D scenes from limited view angles

- Demonstrated effective handling of various lighting conditions
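For readers unfamiliar with the PSNR figure quoted above, the sketch below shows how the metric is conventionally computed from the mean squared error between a rendered image and a reference image. It assumes pixel intensities in the range [0, 1] stored as flat lists; the function name `psnr` is an illustrative choice, not code from the paper.

```python
import math


def psnr(rendered, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(rendered) != len(reference) or not rendered:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(rendered, reference)) / len(rendered)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Every pixel off by 0.1 (10% of the full range) gives about 20 dB.
print(round(psnr([0.1, 0.1, 0.1, 0.1], [0.0, 0.0, 0.0, 0.0]), 2))
```

Because the scale is logarithmic, a PSNR of 31 dB corresponds to a root-mean-square pixel error of roughly 2.8% of the full intensity range.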

Technical Explanation

The research implements a hybrid architecture that combines conventional neural networks with spiking neurons. Training follows a two-stage approach: a standard NeRF model is trained first, and its parameters are then mapped onto a spiking neural network.
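The summary does not say how the parameters are mapped during conversion. One widely used ingredient in generic network-to-spiking conversion is data-based weight normalization: each layer is rescaled so that its largest activation on a calibration set equals 1, which keeps values within the range a firing rate can represent. The sketch below illustrates that idea only, under loud assumptions: a bias-free ReLU network, inputs already in [0, 1], and hypothetical names `relu_layer` and `normalize_for_spiking`. It should not be read as the authors' procedure.

```python
def relu_layer(weights, inputs):
    """Apply one bias-free fully connected ReLU layer."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in weights]


def normalize_for_spiking(layers, calibration_inputs):
    """Rescale each layer so its largest calibration activation becomes 1.0.

    Returns the rescaled layers and the factor that restores the original
    output scale (multiply the normalized network's output by it).
    """
    normalized = []
    prev_scale = 1.0  # assumes network inputs are already in [0, 1]
    activations = calibration_inputs
    for weights in layers:
        # Activations of the ORIGINAL network on the calibration set.
        activations = [relu_layer(weights, x) for x in activations]
        cur_scale = max(max(a) for a in activations) or 1.0
        factor = prev_scale / cur_scale
        normalized.append([[w * factor for w in row] for row in weights])
        prev_scale = cur_scale
    return normalized, prev_scale


layers = [[[1.0, 2.0], [-1.0, 3.0]], [[2.0, 1.0]]]
calibration = [[1.0, 0.5], [0.2, 1.0]]
scaled_layers, output_scale = normalize_for_spiking(layers, calibration)
print(output_scale)
```

Because ReLU is positively homogeneous, the rescaled network computes the same function as the original up to the single factor `output_scale`.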

Spatial annealing techniques help maintain stability during the conversion process. The spiking neurons use a rate-based coding scheme, in which a continuous value is represented by how often a neuron fires over a time window, with careful attention to maintaining temporal coherence.
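A rate-based code can be sketched in a few lines. The example below encodes a value in [0, 1] as a spike train with a non-leaky integrate-and-fire unit and decodes it by averaging the spikes. The names `rate_encode` and `rate_decode` and the soft-reset rule are assumptions made for illustration, not details taken from the paper.

```python
def rate_encode(value, num_steps):
    """Encode a value in [0, 1] as a list of 0/1 spikes of length num_steps."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must lie in [0, 1]")
    potential = 0.0
    spikes = []
    for _ in range(num_steps):
        potential += value  # integrate the constant input
        if potential >= 1.0:
            spikes.append(1)
            potential -= 1.0  # soft reset keeps the leftover charge
        else:
            spikes.append(0)
    return spikes


def rate_decode(spikes):
    """Recover the encoded value as the fraction of steps with a spike."""
    return sum(spikes) / len(spikes)


train = rate_encode(0.25, 8)
print(train, rate_decode(train))  # [0, 0, 0, 1, 0, 0, 0, 1] 0.25
```

The decoding error shrinks roughly in proportion to one over the number of time steps, which is the basic trade-off of rate coding: more steps give more precision but also more computation.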

The architecture preserves the core NeRF principles while replacing dense matrix operations with sparse, event-driven computations. This dramatically reduces the computational overhead without significantly impacting the quality of the 3D reconstruction.
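The difference between a dense matrix operation and an event-driven one can be illustrated with a single layer. When inputs are binary spikes, multiplying by the weight matrix reduces to adding up the weight columns of the inputs that fired, and silent inputs cost nothing. The sketch below counts operations for both versions; the function names are hypothetical, and the counts are for this toy example only, not a reproduction of the reported savings.

```python
def dense_matvec(weights, inputs):
    """Standard matrix-vector product; returns (outputs, multiply_count)."""
    outputs = [sum(w * x for w, x in zip(row, inputs)) for row in weights]
    return outputs, len(weights) * len(inputs)


def event_driven_matvec(weights, spikes):
    """Add only the weight columns whose input spiked; returns (outputs, add_count)."""
    outputs = [0.0] * len(weights)
    adds = 0
    for j, spike in enumerate(spikes):
        if spike:  # inputs that did not fire are skipped entirely
            for i, row in enumerate(weights):
                outputs[i] += row[j]
                adds += 1
    return outputs, adds


weights = [[1.0, 2.0, 3.0, 4.0],
           [5.0, 6.0, 7.0, 8.0]]
spikes = [0, 1, 0, 0]

print(dense_matvec(weights, [float(s) for s in spikes]))  # ([2.0, 6.0], 8)
print(event_driven_matvec(weights, spikes))               # ([2.0, 6.0], 2)
```

In practice the saving depends on how sparse the spikes are and on how many time steps the network runs, since a spiking layer is evaluated once per step.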

Critical Analysis

The current implementation has some limitations:

- Requires initial training of a standard NeRF model

- May struggle with extremely complex scenes or unusual lighting

- The network conversion process requires manual parameter tuning

- Limited testing on diverse real-world scenarios

- Potential challenges in handling dynamic scenes

Further research could explore direct training of spiking networks without the conversion step and investigate methods for handling dynamic scenes.

Conclusion

SpikingNeRF represents a significant step toward making neural radiance fields practical for real-world applications. The dramatic reduction in computational requirements, achieved while maintaining high-quality output, suggests a promising future for bio-inspired approaches in computer vision and 3D reconstruction.

The success of this hybrid approach opens new possibilities for efficient neural rendering systems and demonstrates the potential of bringing neuromorphic computing principles to complex visual tasks.
