Unlock Llama 3–8b Zero-Shot Chat: Expert Tips and Techniques

NovitaAI

Key Highlights

Discover Llama 3–8b: The latest large language model offering impressive capabilities in text generation and question answering.

Zero-Shot Chat: Master the art of zero-shot chat with Llama 3–8b, enabling natural language interactions without prior training examples.

Advanced Prompting Techniques: Explore techniques like few-shot examples, task decomposition, and chain-of-thought prompting to enhance performance.

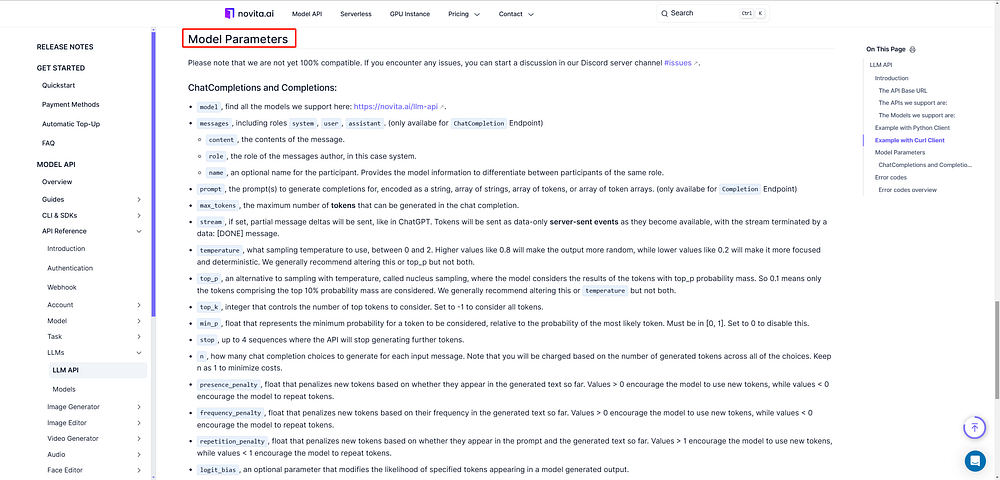

Performance Optimization: Adjust sampling parameters like temperature, top-k, and top-p to get the best output quality from Llama 3–8b.

API Call: Accessible through Novita AI’s API, simplifying deployment for developers with adjustable parameters like temperature and top-k.

Introduction

Llama 3 represents a significant advancement in large language models, trained on vast datasets with enhanced data quality and optimization. Its dialogue-focused “Instruct” models deliver high-performance conversational AI for various applications, all while maintaining manageable memory requirements. With strong zero-shot capabilities and adjustable inference parameters, Llama 3 adapts to diverse tasks. Developers can access and deploy it via Novita AI’s API, avoiding the complexities of local management while putting its creative and analytical potential to work.

What’s new with Llama 3?

The Llama 3 models were trained on about 8 times more data than Llama 2, using over 15 trillion tokens from various online sources with the help of 24,000 GPUs. This increase in data volume, together with better data quality, markedly improved model performance.

The new open LLM models, including Llama 3 Instruct, were customized for dialogue-based applications, integrating over 10 million human-annotated data samples via techniques like supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO).

Llama 3 has a permissive license allowing redistribution, fine-tuning, and derivative works. Unlike the Llama 2 license, the new license requires explicit attribution: derived models must start with “Llama 3” in their name, and works or services must mention “Built with Meta Llama 3”. For full details, please make sure to read the Llama 3 license.

Advanced Techniques for Enhancing Llama 3–8b Performance

Unlock Meta Llama 3–8b’s potential by mastering prompt engineering. Effective prompts are key to accurate, creative, and relevant responses from the model, because they provide the context it needs to produce the desired output.

Llama 3–8b is strong in zero-shot learning. This means it can perform a task from an instruction alone, without any worked examples in the prompt. However, using better prompting techniques can greatly improve how well it performs.
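To make the difference between zero-shot and few-shot prompting concrete, here is a minimal sketch that builds a chat message list in the common role/content format. The function name `build_messages` and the sentiment task are illustrative assumptions, not part of any Llama or Novita AI library.

```python
def build_messages(instruction, user_input, examples=()):
    """Build a chat message list; with no examples this is a zero-shot prompt."""
    messages = [{"role": "system", "content": instruction}]
    # Each example is an (input, expected_output) pair shown as an earlier turn.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": user_input})
    return messages


instruction = "Classify the sentiment of the review as positive or negative."
review = "The battery died after two days."

# Zero-shot: the instruction and the input, nothing else.
zero_shot = build_messages(instruction, review)

# Few-shot: the same request, preceded by one worked example.
few_shot = build_messages(
    instruction,
    review,
    examples=[("Great screen, fast delivery.", "positive")],
)
```

The zero-shot list contains only the instruction and the input, so it is the cheapest thing to try first; examples can be added later if the output format drifts.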

Parameter Tuning for Optimal Results

Adjusting Llama 3–8b’s inference settings is crucial for optimal results. The “temperature” setting is key, influencing the creativity and predictability of the model’s responses. A lower temperature makes responses more focused and predictable, while a higher temperature allows for more creative and surprising answers. For a deeper understanding of how to adjust the temperature parameter for optimal results, you can refer to “How to Adjust Temperature for Optimal Results.”

The “top-k” setting limits the model’s choice at each step to the k most likely next tokens. Selecting the right k value is key for maintaining output clarity and coherence. A smaller k provides focused answers, whereas a larger k fosters creativity and unexpected responses. The related “top-p” setting instead keeps the smallest set of tokens whose combined probability reaches p.

Testing different settings is vital to find the right balance of creativity, clarity, and accuracy. The ideal settings vary with the task, the desired output, and your primary goal.
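The following is a minimal, library-free sketch of how temperature, top-k, and top-p are commonly combined when picking the next token. It illustrates the general technique under simplifying assumptions (a plain list of logits, filters applied in this order); it is not the sampling code used by Llama 3 or by any particular API, and `sample_next_token` is a hypothetical name.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=0, top_p=1.0, rng=random):
    """Sample a token index from raw logits using temperature, top-k, and top-p."""
    if temperature <= 0:
        # Treat zero temperature as greedy decoding: always pick the best token.
        return max(range(len(logits)), key=lambda i: logits[i])

    # Temperature rescales logits: below 1 sharpens the distribution, above 1 flattens it.
    scaled = [value / temperature for value in logits]
    peak = max(scaled)
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    ranked = sorted(
        ((weight / total, index) for index, weight in enumerate(weights)),
        reverse=True,
    )

    # Top-k keeps only the k most probable tokens (0 means no limit).
    if top_k > 0:
        ranked = ranked[:top_k]

    # Top-p keeps the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for probability, index in ranked:
        kept.append((probability, index))
        cumulative += probability
        if cumulative >= top_p:
            break

    # Sample among the surviving tokens in proportion to their probabilities.
    probabilities = [probability for probability, _ in kept]
    indices = [index for _, index in kept]
    return rng.choices(indices, weights=probabilities, k=1)[0]
```

For example, `sample_next_token([2.0, 1.0, 0.1], top_k=1)` always returns index 0, while raising `temperature` and `top_k` lets the less likely tokens through more often.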

Understanding Llama 3–8b Zero-Shot Chat

Zero-shot chat is a big change in conversational AI. It allows models like Llama 3–8b to have natural language conversations without needing special training data for specific tasks. This amazing skill comes from the model’s large knowledge gained during its pre-training. During this phase, it learned from a lot of text data.

When Llama 3–8b gets a prompt, it uses its language understanding skills. It figures out what the user wants and creates a helpful response using what it has already learned.
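Under the hood, the Instruct models expect a prompt wrapped in special tokens that mark each turn. The sketch below renders a single-turn conversation in the prompt format Meta documents for Llama 3 Instruct. Hosted chat APIs typically apply this template for you, so treat it as an illustration of what the model receives; `format_llama3_prompt` is a hypothetical helper name.

```python
def format_llama3_prompt(user_message, system_message=None):
    """Render a single-turn conversation in the Llama 3 Instruct prompt format."""
    parts = ["<|begin_of_text|>"]
    if system_message:
        parts.append(
            "<|start_header_id|>system<|end_header_id|>\n\n"
            f"{system_message}<|eot_id|>"
        )
    parts.append(
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{user_message}<|eot_id|>"
    )
    # Ending with an open assistant header cues the model to write its reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


prompt = format_llama3_prompt(
    "Translate the following phrase into Spanish: Good morning",
    system_message="You are a helpful translator.",
)
```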

Crafting Effective Zero-Shot Prompts with Llama 3–8b

Crafting good zero-shot prompts is important for using Llama 3–8b to its fullest. When you give the model instructions, be clear, short, and specific. Keep in mind that Llama 3–8b can take a fair amount of context in the prompt (its context window is 8,192 tokens), which supports longer, deeper conversations.

Use Llama 3–8b’s strong language understanding by giving clear directions. For example, if you need a translation, say, “Translate the following phrase into Spanish” before sharing the text.

Also, try out different ways of writing prompts to see what works best for you. The great thing about zero-shot prompting is its flexibility. With a bit of creativity, you can guide Llama 3–8b to do many tasks.

Principles of Prompt Design

Effective prompt design is important. It plays a big role in how well large language models like Llama 3–8b respond. Prompts are like guides that help the model understand what to do. When you create prompts using different techniques, especially for the instruct versions of Llama 3–8b, keep these key ideas in mind:

Clarity and Specificity: Make your instructions clear so there is no confusion. Clearly define how you want the output to look, its length, and style.

Contextual Relevance: Give background information or examples if needed. This helps the model understand the context better.

Task Decomposition: Divide complex tasks into smaller parts. This can help the model provide more accurate and efficient answers.

If you follow these ideas, you can make prompts that share your intent well with Llama 3–8b. This leads to outputs that are accurate, relevant, and creative.
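The three principles above can be sketched as a small prompt builder: one slot for a specific task, one for context, one for an explicit output format, and an optional list of sub-steps for task decomposition. The function `build_prompt` and its section labels are illustrative assumptions, not a prescribed Llama 3 format.

```python
def build_prompt(task, context="", output_format="", steps=()):
    """Assemble a prompt from a task, optional context, sub-steps, and format."""
    sections = [f"Task: {task.strip()}"]
    if context:
        # Contextual relevance: background the model should rely on.
        sections.append(f"Context:\n{context.strip()}")
    if steps:
        # Task decomposition: spell out the smaller parts in order.
        numbered = "\n".join(f"{n}. {step}" for n, step in enumerate(steps, start=1))
        sections.append(f"Work through these steps in order:\n{numbered}")
    if output_format:
        # Clarity and specificity: state the shape, length, and style of the answer.
        sections.append(f"Output format: {output_format.strip()}")
    return "\n\n".join(sections)


prompt = build_prompt(
    task="Summarize the customer complaint and propose a reply.",
    context="The customer received a damaged laptop and wants a refund.",
    steps=["Summarize the complaint in one sentence.", "Draft a polite reply."],
    output_format="Two short paragraphs, under 120 words in total.",
)
```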

Examples of Zero-Shot Prompts

Let’s look at some simple examples of zero-shot prompts that show what Llama 3–8b can do from a single user message. For a creative writing task, you might ask the model: “Write a short story about a young musician who finds a magical instrument during his free time.” This type of open-ended request lets Llama 3–8b use its ability to generate text, creating a story that draws on its rich knowledge of characters and tales.

In another situation, suppose you need to summarize a long research article. You can use a zero-shot prompt like “Give a brief summary of the following research paper,” followed by the article text. This would help Llama 3–8b quickly pull out relevant information and make it easy to understand.

Llama 3–8b’s flexibility and the effectiveness of zero-shot prompts are evident in these examples. Given clear, specific prompts, and a generation setup that stops at the model’s end-of-sequence and end-of-turn tokens, this language model can tackle diverse tasks. It serves as a valuable tool across various fields and applications.
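As a rough illustration, the two example prompts can be written as single-message conversations, and raw generated text can be cut at the first terminator. `<|end_of_text|>` and `<|eot_id|>` are the Llama 3 end-of-sequence and end-of-turn tokens; the helper `trim_at_stop` is a hypothetical name, and most hosted APIs handle this step for you.

```python
# The two zero-shot prompts, each as a conversation with one user message.
story_request = [
    {
        "role": "user",
        "content": "Write a short story about a young musician who finds "
        "a magical instrument during his free time.",
    }
]


def summary_request(article_text):
    """Wrap an article in a zero-shot summarization request."""
    instruction = "Give a brief summary of the following research paper."
    return [{"role": "user", "content": f"{instruction}\n\n{article_text}"}]


# Terminators: generation should stop at whichever of these appears first.
STOP_STRINGS = ("<|eot_id|>", "<|end_of_text|>")


def trim_at_stop(text, stop_strings=STOP_STRINGS):
    """Cut raw generated text at the earliest terminator, if any is present."""
    positions = [text.find(stop) for stop in stop_strings if stop in text]
    return text[: min(positions)] if positions else text
```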

Why Use Llama 3–8B Zero-Shot Chat?

Llama 3–8b can chat without task-specific training, a significant advancement in AI. It enables rapid development of conversational AI systems for various tasks, automating work, sharing information, and enhancing user experiences.

Customer service chatbots now mimic human responses. Virtual assistants draft emails and create content. Zero-shot chat with Llama 3–8b is finding uses in fields ranging from electrical engineering to business problem-solving. As technology advances, we expect more diverse applications using natural language to improve work efficiency and computer interactions.

Future Directions for Llama 3–8b and Zero-Shot Learning

Looking ahead, the future of Llama 3–8b and zero-shot learning holds immense potential. Ongoing research and development are enhancing these models, leading to advancements in multilingual capabilities, personalized learning, and interactive knowledge exploration.

Developing stronger zero-shot learning techniques will enhance Llama 3–8b’s capabilities for handling tasks and generating code, especially as open-source models mature. Picture LLMs effortlessly adapting to new languages, personalizing responses, and tackling complex problems creatively.

These future paths highlight how Llama 3–8b and zero-shot learning can change artificial intelligence. They have the power to transform how we interact with computers.

Using Llama 3 8b on the Novita AI LLM API

To seamlessly apply Llama 3–8b’s capabilities, users can easily access and utilize zero-shot chat through the Novita AI LLM API, simplifying deployment and interaction with the model without the need for complex setup. Here’s how you can get started.

- Step 1: Sign up for a Novita AI account and log in.

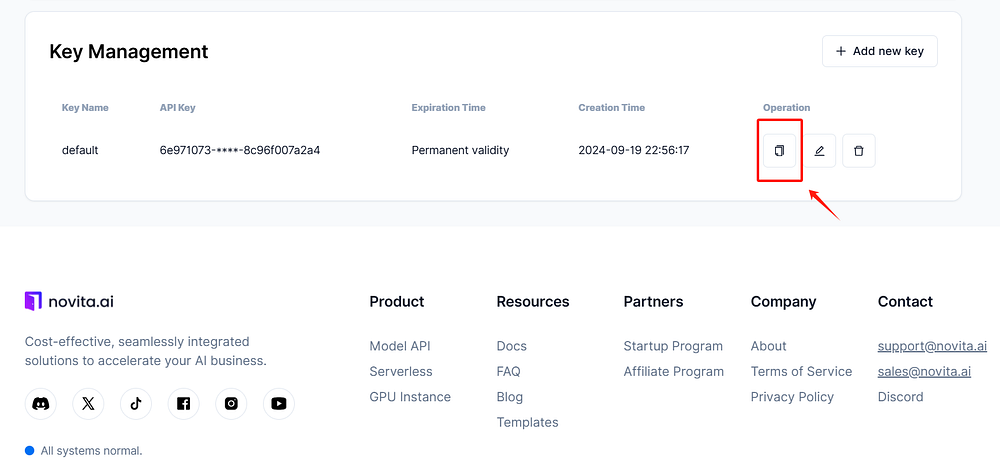

- Step 2: Go to “Key Management” in the settings to manage your API keys and use bearer authentication to verify API access.

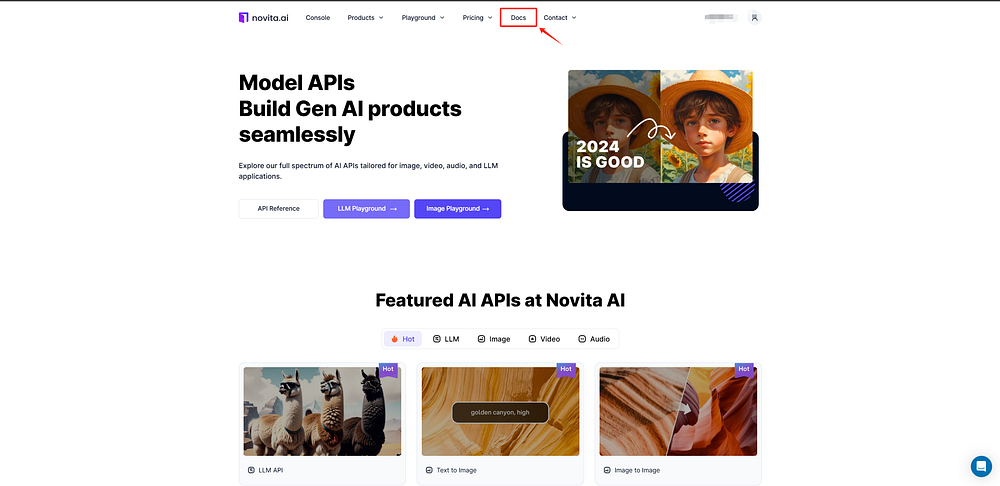

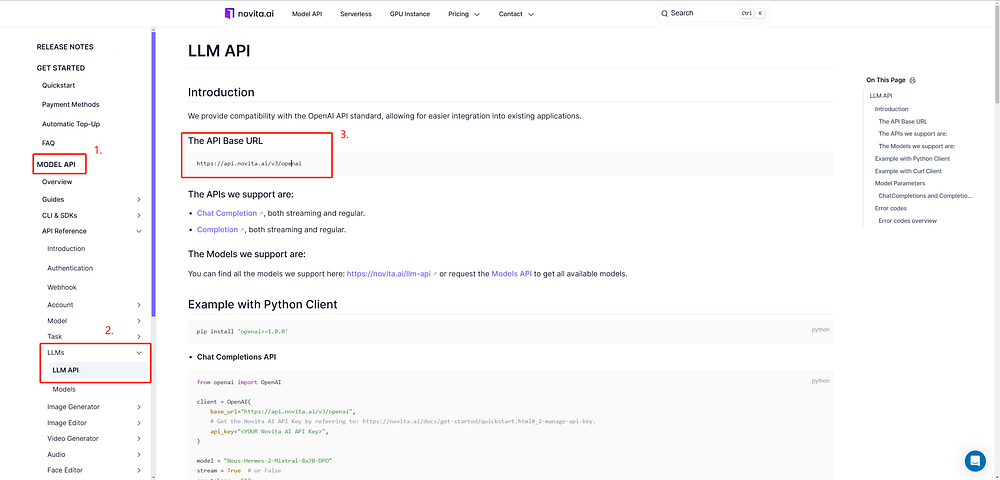

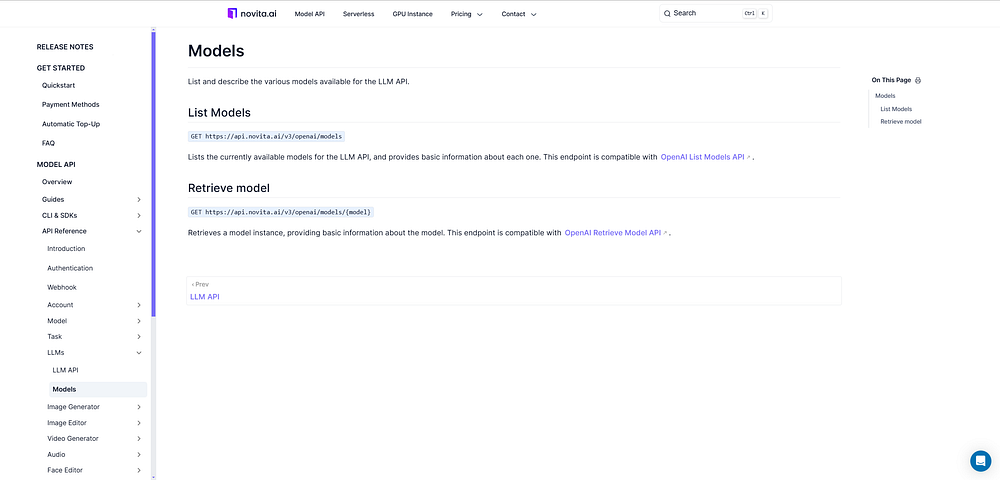

- Step 3: Go to the LLM API documentation to view the API base URL.

- Step 4: Select a model. Novita AI offers a range of model APIs, including Llama, Mistral, Mythomax, and more. To view the complete list of available models, you can visit the Novita AI LLM Models List.

You can choose the Llama 3 API to meet your needs.

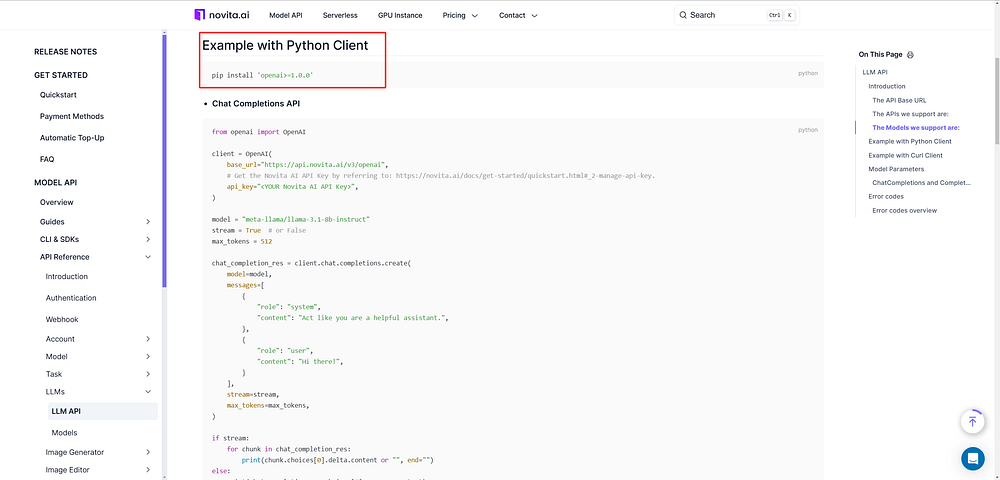

- Step 5: Install the required libraries and review important considerations for model parameters. Ensure your environment is properly set up to work seamlessly with the selected model.

Model parameters are crucial for your subsequent use of the model. It’s important to carefully review the documentation on Model Parameters, such as prompt, temperature, top_p, and others.

- Step 6: Run several tests to verify the API’s reliability.
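The steps above can be sketched with the Python standard library alone. This example assumes the LLM API exposes an OpenAI-style chat completions endpoint with bearer authentication; the base URL, the model ID, the environment variable name `NOVITA_API_KEY`, and the response shape are assumptions for illustration, so confirm each of them against the LLM API documentation and the models list before relying on them.

```python
import json
import os
import urllib.request

# Assumed values: copy the real base URL and model ID from the API documentation.
BASE_URL = "https://api.novita.ai/v3/openai"
MODEL_ID = "meta-llama/llama-3-8b-instruct"


def build_chat_request(api_key, prompt, temperature=0.7, top_p=0.9, max_tokens=256):
    """Build (but do not send) a chat completion request with bearer auth."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response (assumed shape)."""
    return response_body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    api_key = os.environ.get("NOVITA_API_KEY")  # hypothetical variable name
    if api_key:
        request = build_chat_request(api_key, "Translate into Spanish: Good morning")
        with urllib.request.urlopen(request, timeout=30) as response:
            print(extract_reply(json.load(response)))
```

Keeping request construction separate from sending makes it easy to check the payload in a quick test (Step 6) before spending any API credits.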

Testing the Llama 3.1 Demo on Novita AI

Novita AI does more than just give you access. It often offers a demo environment where you can try things out before fully calling the API.

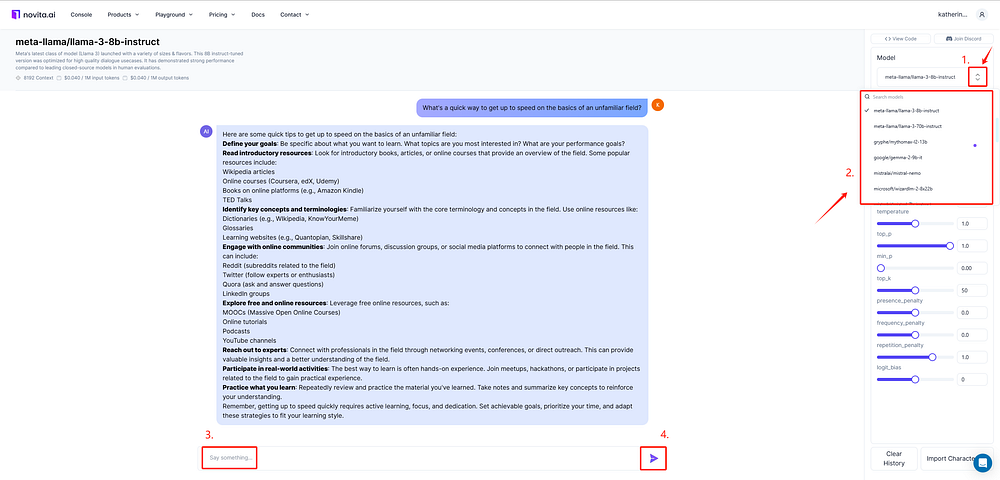

Let’s dive into the Novita AI LLM Demo

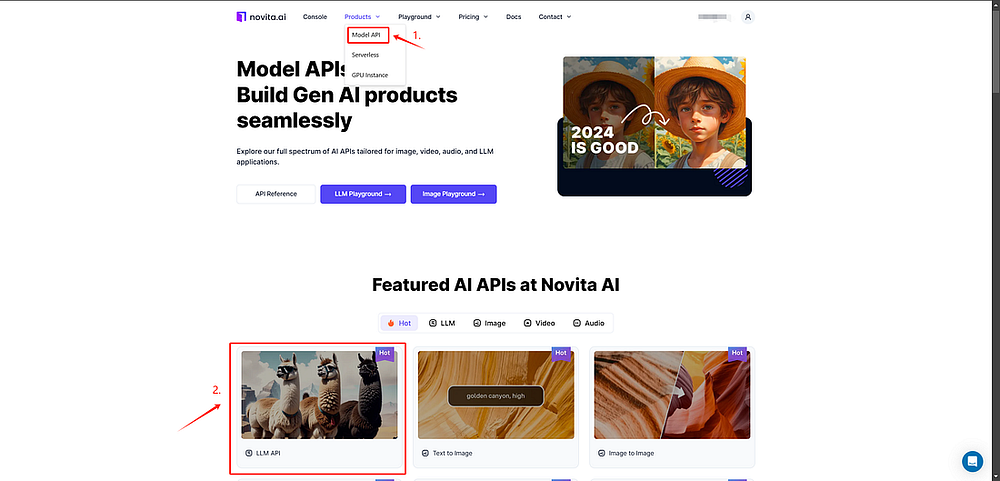

Step 1: Start with the demo: Navigate to the “Model API” section and select “LLM API” to explore the LLaMA 3.1 models.

Step 2: Choose the desired model, input your prompt in the provided field, and view the results.

Here’s what we offer for Llama 3

Conclusion

In conclusion, Llama 3–8b Zero-Shot Chat is a great tool that uses new methods for better performance. Knowing its details and making good prompts can improve how you use it. As zero-shot learning changes, Llama 3–8b shows a bright future ahead. You can try it on the Novita AI LLM API to see its potential up close. Stay on top in chat technology with the new features and abilities of Llama 3–8b. There are exciting possibilities with this amazing tool.

Frequently Asked Questions

How accurate is the Llama 3 8B?

The accuracy of Llama 3 8B, like that of other large language models, depends on several factors. These include how complex the task is and how good the prompt is.

How much VRAM to run a Llama 3 8B?

Running Llama 3 8B well usually needs a good amount of VRAM. At 16-bit precision the weights alone take about 16GB, so it is best to have at least 16GB to get the best performance. Quantized versions need less.
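The 16GB figure follows from simple arithmetic: parameters times bytes per parameter. The sketch below uses a round 8 billion parameters as an assumption and counts the weights only; activations and the KV cache add to the total, so treat the results as lower bounds.

```python
def weight_memory_gb(parameter_count, bits_per_parameter):
    """Memory needed to hold the weights alone, in gigabytes (10**9 bytes)."""
    return parameter_count * bits_per_parameter / 8 / 1e9


# Assumption: a round 8 billion parameters for the 8B model.
for label, bits in (("16-bit", 16), ("8-bit", 8), ("4-bit", 4)):
    print(f"{label}: about {weight_memory_gb(8e9, bits):.0f} GB for weights")
```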

What is Llama 3 instruct 8B?

Llama 3 instruct 8B is a type of language model. It is specially trained to follow instructions. This makes it a great choice for NLP tasks that require question answering and text generation.

Is the Llama 3 instruction tuned?

Llama 3 does have instruct-tuned versions. This means the AI model has received extra training. It is designed to improve its ability to follow instructions and do related tasks well.

How does Llama 3–8b zero-shot chat compare to other chatbot technologies?

Llama 3–8b can chat with people without needing to be trained on specific dialogues. This makes its conversations feel more like natural language, which is different from regular chatbots.

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
