Using Giskard: The Testing Framework for ML Models

Tanvi Ausare

Machine learning (ML) models are powerful tools, but keeping them reliable, fair, and robust is an ongoing challenge. Giskard, a testing framework designed specifically for ML models, offers a systematic way to tackle these issues. Running it on Cloud GPU infrastructure, an AI Cloud, or an AI Datacenter lets developers and businesses speed up and scale their ML testing. This article looks at Giskard, its features, and how it complements cloud-based environments such as Cloud GPU setups.

Why ML Model Testing is Critical

ML models are becoming integral to modern applications, but they aren't perfect. Some key challenges include:

Bias and Fairness: ML models can inadvertently inherit biases from training data. Testing helps verify that predictions are fair across diverse demographic groups.

Robustness: Real-world data often differs from training data. Testing evaluates how models handle edge cases and unexpected inputs.

Performance: Ensuring high accuracy, speed, and scalability in production environments is paramount.

Giskard simplifies these challenges, empowering teams to build trustworthy ML solutions efficiently.

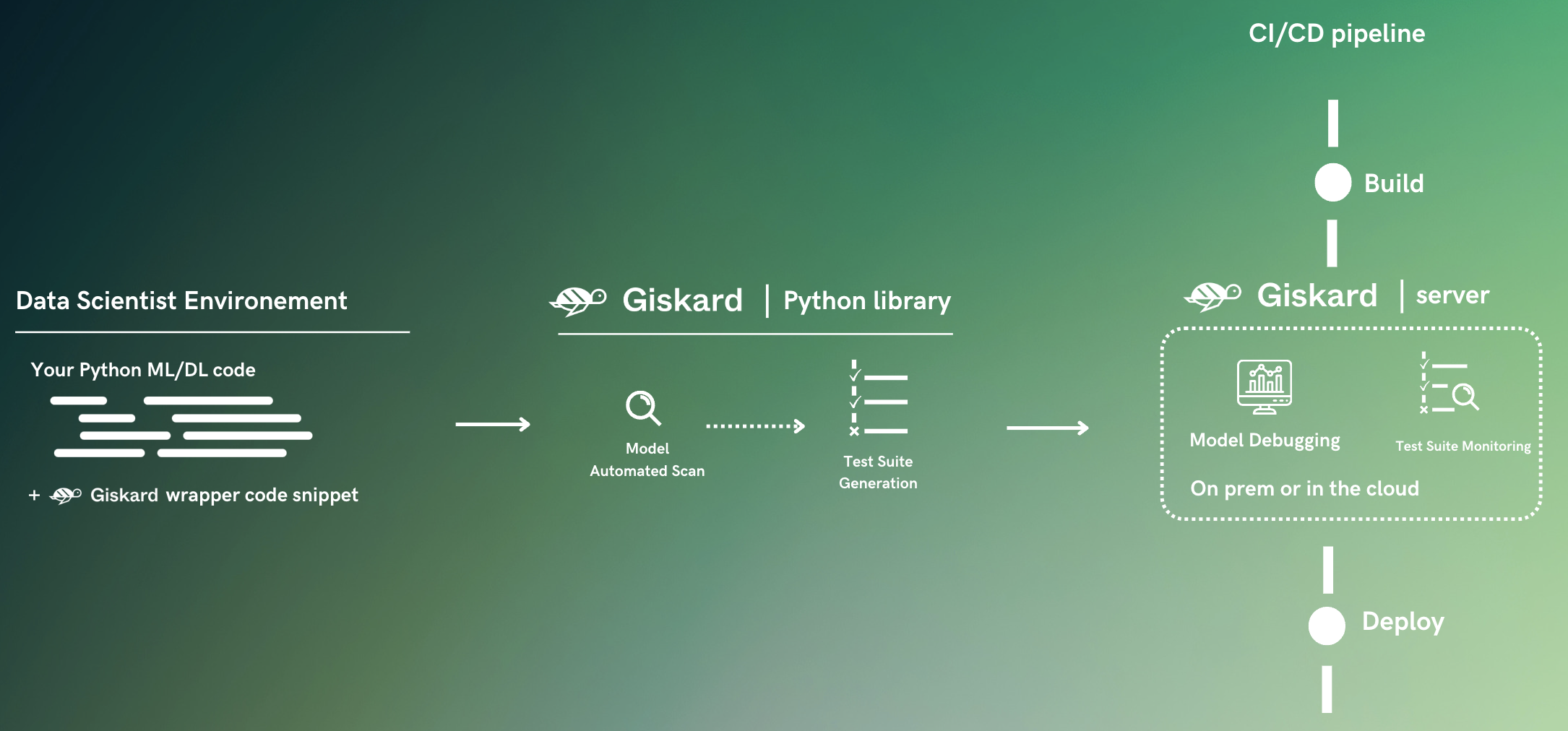

What is Giskard?

Giskard is an open-source framework tailored for testing ML models. It is designed to detect bugs, measure bias, and improve performance by identifying weaknesses before deployment. Some core features include:

Model Debugging: Identify hidden flaws that could impact production performance.

Bias Detection: Evaluate fairness across different demographic or categorical groups.

Automated Testing Pipelines: Integrate seamlessly with CI/CD workflows for continuous testing.

Interpretability: Gain insights into how models make predictions, enhancing transparency.

By running Giskard on Cloud GPU platforms, teams can perform resource-intensive testing at scale, which suits large models and large datasets.

Key Features of Giskard for ML Testing

1. Comprehensive Model Evaluation

Giskard offers tools to evaluate models beyond traditional metrics like accuracy or F1-score. Key evaluation features include:

Fairness Metrics: Assess and mitigate biases across sensitive attributes.

Robustness Checks: Simulate adversarial scenarios to test model resilience.

Explainability Tools: Visualize feature importance and understand decision-making processes.
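A robustness check of the kind described above can be sketched in plain Python. This is an illustration of the idea only, not Giskard's API: the `predict` function is a hypothetical stand-in model, and the 1% relative shift is an arbitrary assumption. The sketch nudges one numeric feature up and down and measures how many predictions stay unchanged.

```python
def predict(row):
    """Hypothetical stand-in model: flags transactions above a fixed amount."""
    return 1 if row["amount"] > 100.0 else 0


def perturbed_copies(row, feature, rel_shift=0.01):
    """Return copies of the row with `feature` shifted down and up by rel_shift."""
    copies = []
    for factor in (1.0 - rel_shift, 1.0 + rel_shift):
        shifted = dict(row)
        shifted[feature] = row[feature] * factor
        copies.append(shifted)
    return copies


def invariance_rate(rows, feature):
    """Fraction of rows whose prediction survives every small perturbation."""
    stable = 0
    for row in rows:
        base = predict(row)
        if all(predict(p) == base for p in perturbed_copies(row, feature)):
            stable += 1
    return stable / len(rows)


# Toy inputs; the third row sits just above the decision threshold.
rows = [{"amount": 10.0}, {"amount": 50.0}, {"amount": 100.5}, {"amount": 500.0}]
rate = invariance_rate(rows, "amount")
print(f"invariance rate: {rate:.2f}")
```

Rows close to a decision boundary are the ones that flip, which is why a low invariance rate is a useful signal that a model may be brittle on real-world data.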

2. Built-in Bias Detection

Bias detection ensures that ML models perform equitably across diverse populations. With Giskard, you can:

Identify biased predictions for subgroups within your data.

Create bias-specific tests to monitor fairness over time.

Generate detailed reports for compliance and audits.
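To make the idea of subgroup bias concrete, here is a minimal sketch that compares accuracy across the values of a sensitive attribute. It is written in plain Python rather than with Giskard's API, and the data, group labels, and function names are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} computed separately for each subgroup."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Largest accuracy difference between any two subgroups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Toy labels, predictions, and a sensitive attribute with two values.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)
print(per_group, gap)
```

A bias-specific test then amounts to asserting that `gap` stays below a threshold the team has agreed on, and re-running that assertion whenever the model or the data changes.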

3. Seamless CI/CD Integration

Giskard enables the automation of testing pipelines by integrating with existing CI/CD tools.

Automate regression testing for updates to your ML models.

Deploy Giskard on AI Cloud environments for parallel test execution.

Monitor test results and log data for continuous improvement.
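Automated regression testing in a CI/CD job usually comes down to comparing fresh metrics against a stored baseline and failing the build when they drop. The sketch below shows that gate in plain Python. The metric names, values, and tolerance are assumptions for illustration, and in practice the metrics would come from your test run rather than from literals.

```python
def find_regressions(baseline, candidate, tolerance=0.01):
    """Return metrics where the candidate is worse than baseline by more than tolerance."""
    regressions = {}
    for name, base_value in baseline.items():
        new_value = candidate.get(name)
        if new_value is None or new_value < base_value - tolerance:
            regressions[name] = (base_value, new_value)
    return regressions


def gate(baseline, candidate):
    """Print any regressions and return a process exit code (0 = pass, 1 = fail)."""
    regressions = find_regressions(baseline, candidate)
    for name, (old, new) in regressions.items():
        print(f"REGRESSION {name}: baseline={old} candidate={new}")
    return 1 if regressions else 0


# Hypothetical metrics for the deployed model and a new candidate.
baseline = {"accuracy": 0.91, "f1": 0.88}
candidate = {"accuracy": 0.92, "f1": 0.85}
exit_code = gate(baseline, candidate)
# A CI script would finish by passing exit_code to sys.exit().
```

Most CI systems treat a non-zero exit code as a failed step, so no tool-specific integration is needed for the gate itself.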

4. Robust API and SDK Support

For developers, Giskard provides:

Python SDK for integrating testing workflows into existing ML pipelines.

APIs to deploy and execute tests programmatically in AI Datacenters or on cloud-based GPUs.

5. Collaborative Testing

Collaboration is central to Giskard, allowing cross-functional teams to contribute:

Share test results with stakeholders using interactive dashboards.

Customize tests for domain-specific requirements.

Foster a culture of accountability by documenting testing standards.

Why Use Giskard with Cloud GPU Infrastructure?

Modern ML models, especially deep learning systems, require significant computational resources for testing. By leveraging Cloud GPU and AI Datacenter environments, Giskard users gain several advantages:

Scalability: Execute tests on large datasets and models without hardware constraints.

Speed: Harness the parallel processing power of GPUs to accelerate testing tasks.

Cost Optimization: Utilize resource pooling in cloud environments to test multiple models simultaneously.

Accessibility: Access high-performance resources from anywhere, enabling global collaboration.

How Giskard Fits into the AI Cloud Ecosystem

1. Optimized Workflows for Cloud-based GPUs

Deploy Giskard in AI Clouds to take advantage of scalable GPU resources.

Use containerized setups to streamline deployment across multiple environments.

Monitor GPU utilization for efficient resource management.
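One lightweight way to monitor GPU utilization on an NVIDIA host is to poll `nvidia-smi` in CSV mode and parse the result. The sketch below assumes the NVIDIA driver and the `nvidia-smi` tool are present on the machine. The sample text is made up so that the parser can be tried without a GPU.

```python
import subprocess

# Query flags supported by nvidia-smi's CSV output mode.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_csv(text):
    """Turn 'index, util, mem_used, mem_total' lines into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        index, util, used, total = [field.strip() for field in line.split(",")]
        gpus.append({
            "index": int(index),
            "util_pct": float(util),
            "mem_used_mib": float(used),
            "mem_total_mib": float(total),
        })
    return gpus


def read_gpu_stats():
    """Run nvidia-smi and parse its output; needs an NVIDIA driver on the host."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


# Made-up sample in the same format, so the parser runs without a GPU.
sample = "0, 37, 10240, 40960\n1, 5, 512, 40960\n"
stats = parse_gpu_csv(sample)
idle = [gpu["index"] for gpu in stats if gpu["util_pct"] < 10]
print("idle GPUs:", idle)
```

GPUs that stay idle during a test run are a hint that the instance is over-provisioned for the workload.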

2. Integrated Data Security

When deployed in AI Datacenters, a Giskard setup should follow the security protocols those environments require, such as:

Encryption of sensitive datasets during testing.

Secure API calls for interacting with cloud resources.

Compliance with industry standards like GDPR or HIPAA.

3. Enhanced Collaboration Across Teams

Cloud-based Giskard setups foster real-time collaboration:

Allow remote teams to run tests concurrently.

Share insights via cloud-native dashboards and reporting tools.

Maintain version control for test scripts and results.

How to Get Started with Giskard on the Cloud

Step 1: Set Up Giskard

Install Giskard via Python’s package manager (pip install giskard).

Configure the framework to connect with your testing datasets and ML models.
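As a rough sketch of what this configuration can look like, the helpers below wrap a prediction function and a pandas DataFrame using the open-source `giskard` Python package (2.x API), then run a scan and save an HTML report. The helper names, the `predict_proba` callable, and the report path are assumptions for illustration, and the calls should be checked against the version you install. The import is done lazily so the file loads even where the package is missing.

```python
def build_giskard_objects(predict_proba, df, target, labels):
    """Wrap a prediction function and a pandas DataFrame for Giskard.

    Assumes `predict_proba` maps a DataFrame of features to an array of
    class probabilities, and that `giskard` and pandas are installed.
    """
    import giskard  # lazy import: only needed when the helper is called

    feature_names = [col for col in df.columns if col != target]
    model = giskard.Model(
        model=predict_proba,
        model_type="classification",
        classification_labels=labels,
        feature_names=feature_names,
    )
    dataset = giskard.Dataset(df=df, target=target)
    return model, dataset


def scan_to_html(model, dataset, path="scan_report.html"):
    """Run Giskard's automated scan and write the findings to an HTML file."""
    import giskard

    report = giskard.scan(model, dataset)
    report.to_html(path)
    return report
```

A typical call would be `model, dataset = build_giskard_objects(clf_predict, df, "label", [0, 1])` followed by `scan_to_html(model, dataset)`, where `clf_predict`, `df`, and `"label"` stand in for your own model and data.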

Step 2: Leverage Cloud GPU for Scalability

Deploy Giskard on platforms like NeevCloud’s AI Datacenter, which offers dedicated Cloud GPUs.

Use Docker containers to manage dependencies and ensure portability.

Step 3: Define Your Testing Metrics

Create tests focusing on fairness, robustness, and accuracy.

Utilize Giskard’s built-in templates or customize your own.
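Whatever tool runs them, tests of this kind reduce to a metric plus a pass threshold. The sketch below defines that pairing in plain Python. The `MetricTest` class, the thresholds, and the toy data are hypothetical and are not part of Giskard's API.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class MetricTest:
    """A named metric with the minimum value required to pass."""
    name: str
    metric: Callable[[Sequence[int], Sequence[int]], float]
    threshold: float

    def run(self, y_true, y_pred):
        value = self.metric(y_true, y_pred)
        return {"name": self.name, "value": value, "passed": value >= self.threshold}


def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred):
    """Share of true positives (label 1) that the model predicted as 1."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(p == 1 for p in preds_for_positives) / len(preds_for_positives)


# Hypothetical thresholds; real ones depend on the project's requirements.
tests = [MetricTest("accuracy", accuracy, 0.6), MetricTest("recall", recall, 0.8)]

y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
results = [test.run(y_true, y_pred) for test in tests]
for result in results:
    print(result)
```

Keeping thresholds next to the metric definitions makes it easy to review and version them alongside the model code.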

Step 4: Automate Testing Pipelines

Integrate Giskard into your CI/CD pipelines using its API.

Schedule periodic testing to ensure model performance remains consistent.

Step 5: Analyze and Optimize

Review Giskard’s detailed reports to identify improvement areas.

Refine models based on insights and re-test iteratively.

Best Practices for Giskard in Cloud Environments

Prioritize Security: Use secure connections and encrypted storage for sensitive data.

Leverage GPUs Effectively: Match the GPU configuration to your model size to avoid over-provisioning.

Customize Tests: Tailor Giskard’s testing framework to address domain-specific challenges.

Collaborate Continuously: Encourage team participation to catch edge cases and biases early.

Monitor Results: Regularly update testing benchmarks to align with evolving project goals.

Case Study: Giskard on NeevCloud’s Cloud GPU Platform

The Challenge

A financial services company needed to test a fraud detection ML model for fairness and accuracy across diverse customer demographics.

The Solution

Deployed Giskard on NeevCloud’s Cloud GPU infrastructure.

Ran large-scale tests for bias detection and robustness using high-performance GPUs.

Automated the testing process with a CI/CD pipeline integrated with Giskard.

The Results

Reduced bias in predictions by 30%.

Increased overall model accuracy by 15%.

Decreased testing time by 50%, enabling faster deployment cycles.

Conclusion

Giskard offers a transformative approach to testing ML models, ensuring they are reliable, fair, and robust before deployment. By leveraging the power of Cloud GPU, AI Cloud, and AI Datacenters, teams can scale testing efforts and deliver high-performing models faster. Integrating Giskard into your ML pipeline not only enhances model quality but also fosters trust and transparency in AI applications.

With platforms like NeevCloud providing the infrastructure needed for Giskard, embracing this framework has never been easier. Start your journey toward robust ML testing today!
