Procedural Knowledge in Pretraining Drives Reasoning in Large Language Models

Mike Young

This is a Plain English Papers summary of a research paper called Procedural Knowledge in Pretraining Drives Reasoning in Large Language Models. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

• Research examines how large language models (LLMs) leverage procedural knowledge from pretraining data

• Study finds that reasoning abilities draw on procedural knowledge from pretraining data rather than on memorized answers

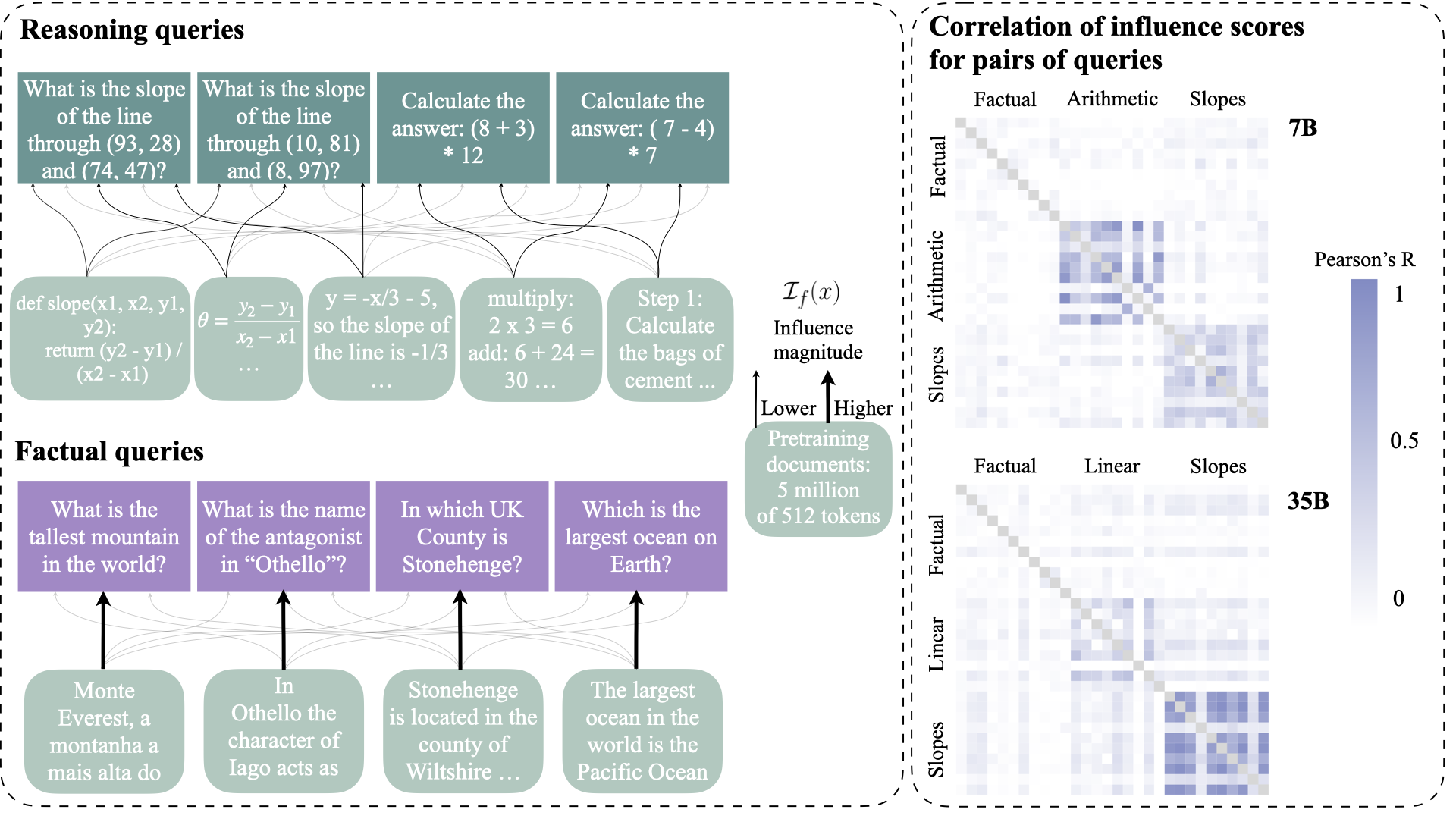

• Novel influence tracing method developed to analyze document impact on model outputs

• Findings show models rely heavily on procedural patterns learned during initial training

Plain English Explanation

Large language models learn fundamental reasoning skills during their initial training, similar to how humans learn basic problem-solving patterns early in life. Rather than memorizing specific answers, these models pick up general approaches for tackling problems.

The researchers developed a way to track how different training documents influence a model's reasoning abilities. Like tracing footprints in sand, this method reveals which training experiences shaped the model's problem-solving strategies.

Reasoning ability appears to emerge from exposure to many examples of logical thinking patterns. Just as a child learns to solve puzzles by seeing many examples, language models develop reasoning capabilities by processing millions of documents containing procedural knowledge.

Key Findings

The research found that procedural knowledge gained during pretraining plays a crucial role in model reasoning. Models don't simply memorize answers; they learn general problem-solving approaches.

Exposure to procedural texts appears to help models develop systematic reasoning abilities. The study found strong connections between the content of the pretraining data and downstream reasoning performance.

Models demonstrate better reasoning on tasks that align with procedural patterns encountered during training. This suggests that careful curation of training data could enhance reasoning capabilities.

Technical Explanation

The researchers developed an influence tracing methodology to analyze how pretraining documents affect model outputs. Each training document receives an influence score estimating how much it contributed to the model's output on a specific reasoning query.
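To make the idea of a per-document importance (influence) score concrete, here is a minimal sketch using the classic influence-function formula score(z, q) = ∇L(q)ᵀ H⁻¹ ∇L(z). In this formula, L(q) is the loss on a query, L(z) is the loss on a training example, and H is the Hessian of the training objective. The sketch assumes a tiny ridge-regression model, so H can be built and solved exactly. At LLM scale H has to be approximated, and this toy is not the paper's implementation. The function names are hypothetical.

```python
import numpy as np


def fit_ridge(X, y, lam):
    """Closed-form minimiser of mean(0.5 * (X @ w - y)**2) + 0.5 * lam * ||w||^2.

    Returns the fitted weights and the Hessian H of that training objective.
    """
    n, d = X.shape
    H = X.T @ X / n + lam * np.eye(d)
    w = np.linalg.solve(H, X.T @ y / n)
    return w, H


def per_example_grad(w, x, y):
    """Gradient of the squared loss 0.5 * (x . w - y)^2 with respect to w."""
    return (x @ w - y) * x


def influence_scores(X, y, x_q, y_q, lam=1e-3):
    """Score every training example by grad_L(q)^T H^{-1} grad_L(z).

    A positive score means upweighting that example would, to first order,
    lower the loss on the query (x_q, y_q): the example "helped".
    """
    w, H = fit_ridge(X, y, lam)
    g_q = per_example_grad(w, x_q, y_q)
    h_inv_g_q = np.linalg.solve(H, g_q)     # H is symmetric, so one solve per query suffices
    train_grads = (X @ w - y)[:, None] * X  # row i = gradient of example i, shape (n, d)
    return train_grads @ h_inv_g_q


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    true_w = np.array([1.0, -2.0, 0.5])
    X = rng.normal(size=(50, 3))
    y = X @ true_w + 0.1 * rng.normal(size=50)
    x_q = rng.normal(size=3)
    scores = influence_scores(X, y, x_q=x_q, y_q=x_q @ true_w)
    # Indices of the five training examples that most helped this query
    print(np.argsort(-scores)[:5])
```

Sorting the scores from highest to lowest gives a ranking of the training examples that most supported a given output. This is analogous to ranking pretraining documents by their influence on a reasoning query. The exact Hessian solve is only practical here because the model has three parameters.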

Procedural knowledge transfer occurs through exposure to step-by-step explanations, logical arguments, and problem-solving demonstrations in the training data. The study tracked how this knowledge impacts downstream task performance.

The methodology revealed that models rely heavily on procedural patterns learned during pretraining when tackling new reasoning challenges. This suggests that reasoning abilities emerge from exposure to structured thinking patterns rather than from retrieving memorized answers.
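One way to turn per-document influence scores into a statement like "models rely heavily on procedural patterns" is to count how often procedural documents appear among each query's top-ranked documents. That count can then be compared with procedural documents' share of the whole corpus. The sketch below assumes a matrix of influence scores (queries × documents) and a boolean label marking each document as procedural. Both the labels and the helper names are hypothetical, and the paper's own analysis may categorize and rank documents differently.

```python
import numpy as np


def procedural_share_of_top_k(scores, is_procedural, k):
    """Fraction of each query's k most influential documents labelled procedural.

    scores: float array of shape (num_queries, num_docs); higher = more helpful.
    is_procedural: bool array of shape (num_docs,) with hypothetical labels.
    Returns a float array of shape (num_queries,).
    """
    top_k = np.argsort(-scores, axis=1)[:, :k]  # top-k document indices per query
    return is_procedural[top_k].mean(axis=1)


def enrichment(scores, is_procedural, k):
    """Top-k procedural share divided by the corpus-wide procedural share.

    Values above 1 mean procedural documents are over-represented among the
    most influential documents for that query.
    """
    return procedural_share_of_top_k(scores, is_procedural, k) / is_procedural.mean()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    labels = rng.random(1000) < 0.2                     # toy corpus: ~20% procedural docs
    scores = rng.normal(size=(5, 1000)) + 1.0 * labels  # toy scores that favour procedural docs
    print(enrichment(scores, labels, k=50))
```

The toy scores are built to favour procedural documents, so the printed ratios say nothing about real models. They only show the mechanics of comparing top-k composition against a corpus baseline.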

Critical Analysis

Several limitations exist in the current study. The influence tracing method may not capture all relevant training influences, and the analysis focuses primarily on English-language content.

The research doesn't fully address how different types of procedural knowledge interact or how to optimize training data selection for enhanced reasoning capabilities. More work is needed to understand the precise mechanisms of knowledge transfer.

Beyond accuracy, questions remain about the reliability and consistency of model reasoning across different domains and task types.

Conclusion

This research demonstrates that procedural knowledge acquired during pretraining fundamentally shapes how language models reason. The findings suggest that careful curation of training data could lead to more capable and reliable AI systems.

The study opens new paths for improving model reasoning abilities through better understanding of knowledge transfer mechanisms. Future work should focus on optimizing training data selection and developing more robust evaluation methods for reasoning capabilities.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.
