A backend engineer lost in the DevOps world - Making a Kubernetes Operator with Go

Amr Elhewy

Introduction

Hello folks! This will be a series of articles where I dive into complex DevOps topics and simplify them for us backend engineers, so that each article can serve as a quick recap for whoever is interested. In this article we’ll dive into Kubernetes operators and build our first operator using Go (not from scratch: we’ll use a handy tool called kubebuilder that generates the boilerplate so we can focus only on what matters). Before moving forward, let’s talk about what operators even are.

Kubernetes Operators

A Kubernetes Operator is a method of automating the management of complex applications on Kubernetes. A typical operator consists of:

Controller: A program that watches and reacts to changes in Kubernetes resources (like Custom Resources) and takes actions to manage the application’s lifecycle (e.g., creating, updating, or deleting resources).

Custom Resource (CR): A custom-defined object that represents the application or service the operator manages. It defines the desired state (e.g., number of replicas, configuration) of that application.

It automates the management of complex applications by using a controller to watch a custom resource and ensure the application matches the desired state.

Now, if you were like me the first time reading this, you probably didn’t understand anything. It’s time to simplify this even further.

The operator is an umbrella for 2 main things:

A custom resource, which is a new type of object that Kubernetes doesn’t know about out of the box. For example, Pod is a built-in resource, while pod-stalker would be a custom resource because it isn’t natively installed in Kubernetes.

So we create new custom resources that have a defined schema. This will become much clearer when we implement the actual operator.

The controller, which is the brain of the operator and where the main code lives. The controller watches for changes in the custom resource and runs some logic based on what happened. This process is called reconciliation, where the goal is always to get from the current state back to the desired state. The schema of the custom resource is registered with the cluster through a Custom Resource Definition (CRD), and the desired state is specified in a custom resource manifest, which is just a YAML file that creates an instance of the custom resource by filling in that schema.
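To make reconciliation a bit more concrete, here is a minimal sketch in plain Go with no Kubernetes libraries. The `State` type, the `reconcile` and `apply` functions, and the action names are all hypothetical and exist only for illustration; a real controller talks to the Kubernetes API instead of mutating a struct.

```go
package main

import "fmt"

// State is a hypothetical stand-in for what a resource describes.
type State struct {
	Replicas int
}

// reconcile compares the desired state with the observed state and
// returns the actions needed to converge. Action names are illustrative.
func reconcile(desired, observed State) []string {
	var actions []string
	for i := observed.Replicas; i < desired.Replicas; i++ {
		actions = append(actions, "create")
	}
	for i := observed.Replicas; i > desired.Replicas; i-- {
		actions = append(actions, "delete")
	}
	return actions
}

// apply executes the actions against the observed state and returns the result.
func apply(observed State, actions []string) State {
	for _, a := range actions {
		switch a {
		case "create":
			observed.Replicas++
		case "delete":
			observed.Replicas--
		}
	}
	return observed
}

func main() {
	desired := State{Replicas: 3}
	observed := State{Replicas: 1}

	actions := reconcile(desired, observed)
	observed = apply(observed, actions)

	fmt.Println(actions, observed.Replicas) // prints: [create create] 3
}
```

The point of the sketch is that the controller never receives an instruction like "add two replicas". It only sees the desired and the current state and works out the difference itself.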

Enough with the theory; let’s walk through a cool project. We will create a custom resource, PodTracker, that names the pods it is interested in. The controller will monitor pod creations in the default namespace and, when a pod with the specified name is created, send a message to a Slack channel.

PodTracker Walkthrough

To get started, first install kubebuilder.

Kubebuilder is a framework for building Kubernetes Operators and Custom Controllers. It provides a set of tools and libraries to help you easily create, test, and manage Kubernetes Operators, which automate the management of complex applications on Kubernetes.

This will give us a great scaffold to start off of.

Once kubebuilder is installed, let’s run the commands to create the scaffold:

    # initialize a new kubebuilder project in Go
    kubebuilder init --domain lost.backend --repo lost.backend/pod-tracker

    # create the API responsible for our new Custom Resource
    kubebuilder create api --group pod-tracker --version v1 --kind PodTracker

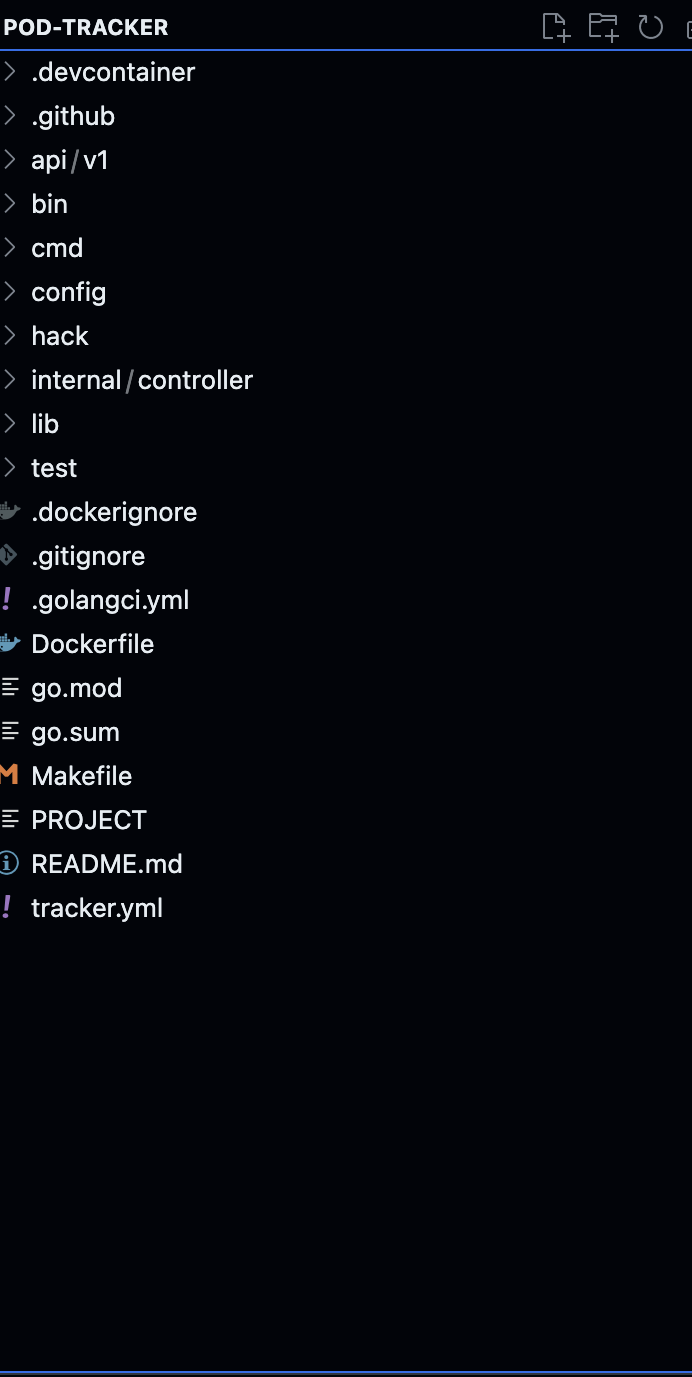

Once installed the file structure should look something like this

We’re only going to be concerned with two main files

`

api/v1/podtracker_types.go

Let’s start by defining our Custom Resource Schema first

Custom Resource Schema

Inside api/v1/podtracker_types.go

You’ll find a structure that looks like the following

    type PodTrackerSpec struct {
    }

    // PodTrackerStatus defines the observed state of PodTracker.
    type PodTrackerStatus struct {
    }

    // +kubebuilder:object:root=true
    // +kubebuilder:subresource:status

    // PodTracker is the Schema for the podtrackers API.
    type PodTracker struct {
        metav1.TypeMeta   `json:",inline"`
        metav1.ObjectMeta `json:"metadata,omitempty"`

        Spec   PodTrackerSpec   `json:"spec,omitempty"`
        Status PodTrackerStatus `json:"status,omitempty"`
    }

PodTrackerSpec holds the desired state of the pod tracker. We will have the following fields:

Name: a field that makes this pod tracker track pods with the given name. (This is for simplicity. Pod names are usually unique, so a better approach would be to add a deployment name and monitor changes for pods that belong to that deployment.)

Reporter: a struct that contains Kind, Key and Channel, describing the kind of reporting (in our case Slack), its API key, and the channel to post to.

PodTrackerStatus defines the current observed state of the PodTracker, which is usually updated during reconciliation (the controller updates it according to the events that occur). We’ll add a couple of fields to it, but the controller in this walkthrough doesn’t populate them yet.

Our types file should now look like this:

    type Reporter struct {
        Kind    string `json:"kind,omitempty"`
        Key     string `json:"key,omitempty"`
        Channel string `json:"channel,omitempty"`
    }

    type PodTrackerSpec struct {
        Name     string   `json:"name,omitempty"`
        Reporter Reporter `json:"reporter,omitempty"`
    }

    // PodTrackerStatus defines the observed state of PodTracker.
    type PodTrackerStatus struct {
        PodCount int    `json:"podCount,omitempty"`
        Status   string `json:"status,omitempty"`
    }

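NOTE that the json struct tags are essential: they define the field names used in the YAML manifest and in the generated CRD schema, and the CRD generation step is likely to fail for fields that lack them.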
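To see what the json struct tags actually do, here is a small sketch that uses only Go's standard `encoding/json` package. The two structs mirror the spec types above; the `decodeSpec` and `encodeSpec` helpers are hypothetical names introduced just for this example, and the channel value is the placeholder ID from this article.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Reporter and PodTrackerSpec mirror the spec types defined above.
type Reporter struct {
	Kind    string `json:"kind,omitempty"`
	Key     string `json:"key,omitempty"`
	Channel string `json:"channel,omitempty"`
}

type PodTrackerSpec struct {
	Name     string   `json:"name,omitempty"`
	Reporter Reporter `json:"reporter,omitempty"`
}

// decodeSpec parses the JSON form of a spec. The tags map the lowercase
// keys onto the exported Go fields.
func decodeSpec(raw string) (PodTrackerSpec, error) {
	var spec PodTrackerSpec
	err := json.Unmarshal([]byte(raw), &spec)
	return spec, err
}

// encodeSpec serializes a spec back to JSON using the tagged field names.
func encodeSpec(spec PodTrackerSpec) (string, error) {
	b, err := json.Marshal(spec)
	return string(b), err
}

func main() {
	spec, err := decodeSpec(`{"name":"nginx","reporter":{"kind":"slack","channel":"C0821GM4602"}}`)
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Println(spec.Name, spec.Reporter.Kind, spec.Reporter.Channel)

	out, err := encodeSpec(PodTrackerSpec{Name: "nginx"})
	if err != nil {
		fmt.Println("encode failed:", err)
		return
	}
	// omitempty drops empty strings, but a nested struct is still emitted.
	fmt.Println(out) // prints: {"name":"nginx","reporter":{}}
}
```

The keys in the manifest (`name`, `reporter`, `kind`, ...) are exactly the names given in the tags, not the Go field names.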

Now that we’ve defined our custom resource type, it’s time to write a manifest that creates an instance of it. Something like this:

    # tracker.yaml
    apiVersion: "pod-tracker.lost.backend/v1"
    kind: "PodTracker"
    metadata:
      name: pod-tracker
    spec:
      name: "nginx"
      reporter:
        kind: "slack"
        key: "slack-api-key (will post link on how to)"
        channel: "C0821GM4602 (channel ID)"

This is a manifest that we can apply with kubectl apply -f tracker.yaml to get our first pod-tracker object running!

Before running kubectl apply, we need to install the Custom Resource Definition in a local Kubernetes cluster. Make sure you have one running, using kind for example.

We can install the Custom Resource Definition by executing make install inside the project directory.

If we then execute the kubectl apply command above, we’ll find that we have a pod-tracker resource instance up and running.

Check with kubectl get podtracker.

However, it’s just a resource instance and it doesn't do anything useful (for now). Now it’s time to add the brain to this resource using the second important file we mentioned: our controller file!

Custom Controller

In internal/controller/podtracker_controller.go we should have a method called Reconcile that looks as follows:

    func (r *PodTrackerReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
        return ctrl.Result{}, nil
    }

Reconcile takes in what is known as a reconciliation request (basically a request to trigger this method) and executes the logic inside the method to reconcile the custom resource to the desired state.

It automatically gets called when events happen on the PodTracker resource (creation, update, deletion, etc.).

What triggers the Reconcile method is controlled by the second method we have here:

    func (r *PodTrackerReconciler) SetupWithManager(mgr ctrl.Manager) error {
        return ctrl.NewControllerManagedBy(mgr).
            For(&podtrackerv1.PodTracker{}). // Your primary resource
            Watches(&corev1.Pod{}, handler.EnqueueRequestsFromMapFunc(r.HandlePodEvents)).
            WithEventFilter(predicate.Funcs{
                CreateFunc: func(e event.CreateEvent) bool {
                    return true // Process only create events
                },
                UpdateFunc: func(e event.UpdateEvent) bool {
                    return false // Ignore updates
                },
                DeleteFunc: func(e event.DeleteEvent) bool {
                    return false // Ignore deletions
                },
                GenericFunc: func(e event.GenericEvent) bool {
                    return false // Ignore generic events
                },
            }).
            Complete(r)
    }

In this method we basically watch for changes both in the PodTracker and Pod resources. Only create events are allowed to get processed and we discard the rest.
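The filtering idea can be tried out in isolation. Below is a minimal sketch in plain Go; the `Event` and `EventType` types and the `createOnly` and `filterEvents` functions are hypothetical stand-ins for illustration and are not controller-runtime APIs.

```go
package main

import "fmt"

// EventType and Event are hypothetical stand-ins for the event types
// a controller receives; they exist only for this illustration.
type EventType string

const (
	Create  EventType = "create"
	Update  EventType = "update"
	Delete  EventType = "delete"
	Generic EventType = "generic"
)

type Event struct {
	Type EventType
	Kind string // "Pod" or "PodTracker"
	Name string
}

// createOnly plays the role of the predicate above: only create events pass.
func createOnly(e Event) bool {
	return e.Type == Create
}

// filterEvents returns the events that the predicate lets through.
func filterEvents(events []Event, keep func(Event) bool) []Event {
	kept := []Event{}
	for _, e := range events {
		if keep(e) {
			kept = append(kept, e)
		}
	}
	return kept
}

func main() {
	events := []Event{
		{Type: Create, Kind: "Pod", Name: "nginx"},
		{Type: Update, Kind: "Pod", Name: "nginx"},
		{Type: Create, Kind: "PodTracker", Name: "pod-tracker"},
		{Type: Delete, Kind: "Pod", Name: "nginx"},
	}
	for _, e := range filterEvents(events, createOnly) {
		fmt.Println(e.Kind, e.Name, e.Type)
	}
}
```

Notice that the filter looks only at the event type, not at the kind of object, so the creation of a PodTracker passes it just like the creation of a Pod.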

We pass the Pod events to a method, r.HandlePodEvents, which finds the matching PodTracker object and enqueues a reconciliation request for it. The reconciliation then does its job and sends a message to Slack saying that a new pod has been created.

This is what HandlePodEvents looks like:

    func (r *PodTrackerReconciler) HandlePodEvents(ctx context.Context, o client.Object) []ctrl.Request {
        // check if the object is a pod; if not, ignore it
        pod, ok := o.(*corev1.Pod)
        if !ok {
            return []ctrl.Request{}
        }

        // check if the pod lies in the default namespace; otherwise ignore it
        if pod.Namespace != "default" {
            return []ctrl.Request{}
        }

        // get the list of PodTracker objects
        podTrackerList := &podtrackerv1.PodTrackerList{}

        // if listing fails, ignore the event
        if err := r.List(ctx, podTrackerList); err != nil {
            return []ctrl.Request{}
        }

        ctrlRequests := []ctrl.Request{}

        // iterate over the list of PodTracker objects
        for _, podTracker := range podTrackerList.Items {
            // check if the PodTracker object is watching the pod
            if podTracker.Spec.Name == pod.Name {
                ctrlRequests = append(ctrlRequests, ctrl.Request{NamespacedName: client.ObjectKeyFromObject(&podTracker)})
            }
        }

        return ctrlRequests
    }

We simply get the PodTracker objects and check which of them is responsible for the newly created pod. When we find one, we enqueue a request to reconcile that specific PodTracker using NamespacedName, which identifies the object by its name and namespace and is what a reconciliation request carries.

ctrlRequests can contain several reconciliation requests, in which case the Reconcile method is invoked once for each of them.
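The matching logic can be sketched without any Kubernetes dependencies. In the plain-Go example below, `Pod`, `Tracker`, `NamespacedName` and `requestsForPod` are hypothetical simplified types and names used only for illustration; they just mimic the shape of the real objects.

```go
package main

import "fmt"

// NamespacedName, Pod and Tracker are hypothetical simplified types
// that mimic the shape of the real Kubernetes objects.
type NamespacedName struct {
	Namespace string
	Name      string
}

type Pod struct {
	Namespace string
	Name      string
}

type Tracker struct {
	Namespace string
	Name      string
	WatchName string // corresponds to Spec.Name in the article
}

// requestsForPod returns one request per tracker that watches the pod's name.
// Pods outside the default namespace produce no requests.
func requestsForPod(pod Pod, trackers []Tracker) []NamespacedName {
	requests := []NamespacedName{}
	if pod.Namespace != "default" {
		return requests
	}
	for _, t := range trackers {
		if t.WatchName == pod.Name {
			requests = append(requests, NamespacedName{Namespace: t.Namespace, Name: t.Name})
		}
	}
	return requests
}

func main() {
	trackers := []Tracker{
		{Namespace: "default", Name: "pod-tracker", WatchName: "nginx"},
		{Namespace: "default", Name: "redis-tracker", WatchName: "redis"},
		{Namespace: "default", Name: "second-nginx-tracker", WatchName: "nginx"},
	}

	// Two trackers watch "nginx", so two requests are produced.
	for _, req := range requestsForPod(Pod{Namespace: "default", Name: "nginx"}, trackers) {
		fmt.Println(req.Namespace + "/" + req.Name)
	}
}
```

With two trackers watching the same pod name, a single pod creation produces two requests, one per tracker.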

Now, in the main Reconcile method, I added this:

    func (r *PodTrackerReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
        fmt.Println("Reconciling PodTracker")

        podTracker := &podtrackerv1.PodTracker{}
        if err := r.Get(ctx, req.NamespacedName, podTracker); err != nil {
            return ctrl.Result{}, client.IgnoreNotFound(err)
        }

        // send to slack an update that a pod with the name was created
        lib.SlackSendMessage(podTracker.Spec.Reporter.Key, podTracker.Spec.Reporter.Channel, "Pod "+podTracker.Spec.Name+" was created")

        return ctrl.Result{}, nil
    }

I check whether the pod tracker to be reconciled exists. If it does, I send a Slack message using this function I made and return.

    // SlackSendMessage is just a simple function that sends a message to a channel.
    func SlackSendMessage(key string, channelID string, message string) {
        api := slack.New(key)
        _, _, err := api.PostMessage(channelID, slack.MsgOptionText(message, false))
        if err != nil {
            fmt.Println(err)
        }
    }

You provide the Slack credentials in the custom resource manifest we wrote earlier. To get these credentials, make sure you create a new Slack app and follow the instructions here:

Install the Go library for Slack, slack-go/slack.

Go to the Slack API Apps page.

Create a new app from scratch.

Navigate to "OAuth & Permissions" in your app's settings.

Add the necessary OAuth scopes for your app based on what it needs to do. In our case, chat:write.

Go to "Install App" under the Slack settings.

Click "Install App to Workspace".

Authorize the app with your workspace.

After installation, you’ll see an OAuth token in the "OAuth & Permissions" section.

The token starts with xoxb- (for bot tokens) or xoxp- (for user tokens). This will be your key.

To get the channel ID, check the channel details in your Slack app for the channel you want to write to. It should be at the very bottom of the channel details.
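Since the token prefix tells you which kind of token you copied, a tiny sanity check can catch a wrong paste before the operator tries to post anything. This is only a sketch: `tokenKind` is a hypothetical helper, not part of slack-go/slack, and it checks nothing beyond the two prefixes mentioned above.

```go
package main

import (
	"fmt"
	"strings"
)

// tokenKind classifies a Slack token by its prefix.
// It is a hypothetical helper that only knows the two prefixes
// mentioned in this article.
func tokenKind(token string) string {
	switch {
	case strings.HasPrefix(token, "xoxb-"):
		return "bot"
	case strings.HasPrefix(token, "xoxp-"):
		return "user"
	default:
		return "unknown"
	}
}

func main() {
	// The token values here are made-up placeholders.
	fmt.Println(tokenKind("xoxb-placeholder")) // prints: bot
	fmt.Println(tokenKind("xoxp-placeholder")) // prints: user
	fmt.Println(tokenKind("slack-api-key"))    // prints: unknown
}
```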

If you got all these steps right, execute make install again to regenerate and install the Custom Resource Definition, then make run to run the controller locally and test its logic.

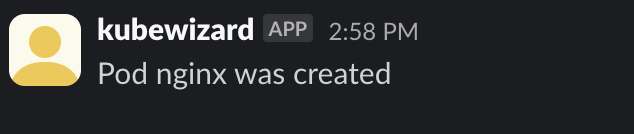

If we try to create an nginx pod using kubectl run nginx --image=nginx, we should get a Slack notification 🎉

However, our current code has a problem: if a new PodTracker is created, the Reconcile method will also run and send a Slack message for the PodTracker resource creation. We only want to track created pods, so this behavior is unwanted.

To solve this we can use annotations! That’s where their power comes in. We can annotate the PodTracker objects that need reconciliation because of a pod creation, and not because the pod tracker itself was created. We can update the HandlePodEvents and Reconcile methods as follows:

    func (r *PodTrackerReconciler) HandlePodEvents(ctx context.Context, o client.Object) []ctrl.Request {
        pod, ok := o.(*corev1.Pod)
        if !ok {
            return []ctrl.Request{}
        }

        if pod.Namespace != "default" {
            return []ctrl.Request{}
        }

        // get the list of PodTracker objects
        podTrackerList := &podtrackerv1.PodTrackerList{}
        if err := r.List(ctx, podTrackerList); err != nil {
            return []ctrl.Request{}
        }

        ctrlRequests := []ctrl.Request{}

        // iterate over the list of PodTracker objects
        for _, podTracker := range podTrackerList.Items {
            // check if the PodTracker object is watching the pod
            if podTracker.Spec.Name == pod.Name {
                if podTracker.Annotations == nil {
                    podTracker.Annotations = map[string]string{}
                }

                // add an annotation to check for in the reconciliation
                podTracker.Annotations["triggered-by"] = "pod"

                // update the PodTracker object in the cluster with the new annotation
                if err := r.Update(ctx, &podTracker); err != nil {
                    log.FromContext(ctx).Error(err, "Failed to update PodTracker with annotations")
                    continue
                }

                ctrlRequests = append(ctrlRequests, ctrl.Request{NamespacedName: client.ObjectKeyFromObject(&podTracker)})
            }
        }

        return ctrlRequests
    }

    func (r *PodTrackerReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
        fmt.Println("Reconciling PodTracker")

        podTracker := &podtrackerv1.PodTracker{}
        if err := r.Get(ctx, req.NamespacedName, podTracker); err != nil {
            return ctrl.Result{}, client.IgnoreNotFound(err)
        }

        // only send a message if the triggered-by annotation is present
        if podTracker.Annotations != nil && podTracker.Annotations["triggered-by"] == "pod" {
            // delete the annotation for cleanup
            delete(podTracker.Annotations, "triggered-by")
            if err := r.Update(ctx, podTracker); err != nil {
                return ctrl.Result{}, fmt.Errorf("failed to clear annotation: %w", err)
            }

            lib.SlackSendMessage(podTracker.Spec.Reporter.Key, podTracker.Spec.Reporter.Channel, "Pod "+podTracker.Spec.Name+" was created")
        }

        return ctrl.Result{}, nil
    }

And voilà! Now we only send Slack messages for newly created pods.

Summary

The main goal of an article like this is to target backend developers with little to no knowledge of operators. Because, let’s be honest, it’s something we might finish our careers without ever touching. It’s an attempt on my part to ease the understanding of concepts that I personally find myself struggling with. The goal was to build a simple use case that clearly explains the idea without diving too deep. Hopefully I delivered what I wanted. I might also turn this series into a YouTube series, so if anyone wants that, let me know! Till the next one.
