Data Architecture for Data Quality

Piotr Czarnas

The purpose of data quality validation

Data quality validation is the process of ensuring that data is accurate, complete, and suitable for its intended use. Just as a baker checks the freshness and quantity of ingredients before baking a cake, businesses must verify their data to make informed decisions, provide excellent customer service, and avoid costly mistakes.

Early detection of data problems is crucial to prevent them from escalating into larger issues that can result in wasted time, lost revenue, and even reputational damage. For instance, if a customer enters their email address incorrectly when placing an order, and this error is not caught, they will not receive order confirmations or shipping updates, leading to frustration and potentially lost business. Detecting this mistake early, while the customer is still on the website, allows for a quick fix. However, if the inaccurate data makes its way into other systems, it becomes much harder to correct. A data steward might have to manually track down the customer and update their information, consuming valuable time and resources.

Therefore, it is essential to incorporate data quality checks throughout the entire data journey, from the point of entry to storage and use.

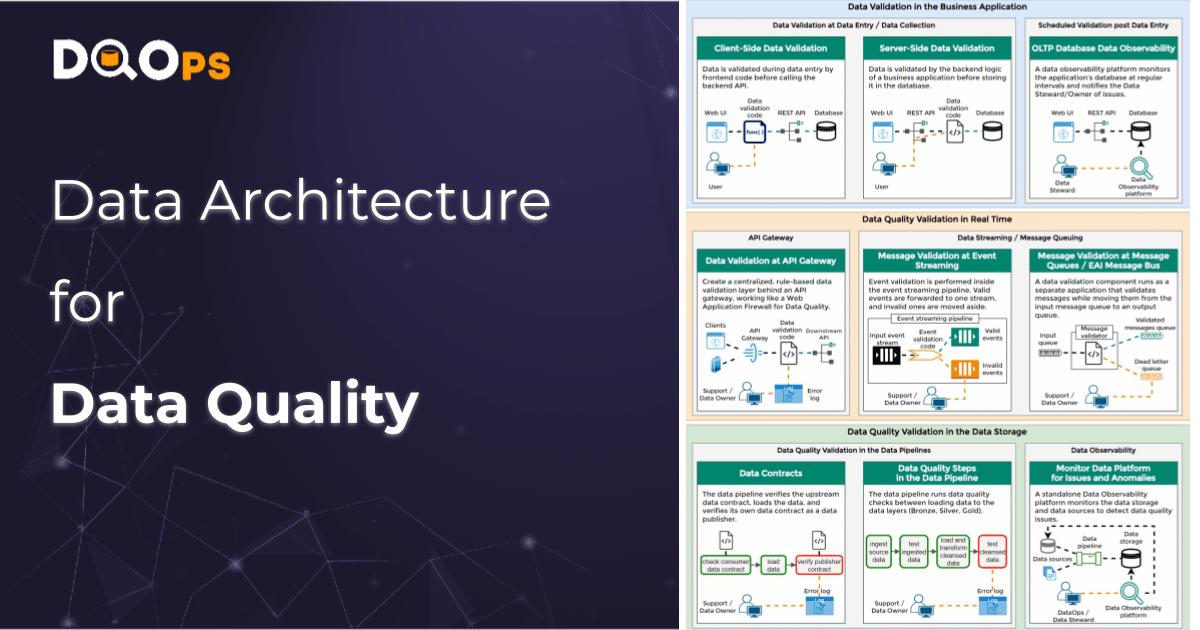

Types of data architectures

Think of data flowing through your systems like water moving through a network of pipes and filters. At different stages of this journey, the data passes through distinct layers, each offering unique opportunities to implement quality checks and ensure its cleanliness and reliability.

Here's a breakdown of these layers and how data quality validation fits in:

Business Application Layer: This is where users interact with your systems, such as websites or desktop applications. It encompasses both the frontend (user interface) and the backend (underlying logic). Here, validation happens in real time, catching errors as the user enters data, like the pop-up messages that flag an invalid email address or a missing field.

Data Communication Layer: This layer manages the communication between different parts of your system, often using APIs, event streaming, or message queues. Validation at this layer adds checks as data moves between services, ensuring consistency and accuracy across your applications.

Data Platform Layer: This is where your data is stored and processed, typically in a data lake or warehouse. Here, validation often involves batch processing or using data observability tools to monitor quality over time. This is critical because this data is often used for crucial business decisions, reporting, and analytics.

By implementing data quality checks at each of these layers, you create a robust system that maintains data integrity throughout its entire lifecycle.
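As a rough illustration of what a check at each layer might look like, here is a minimal Python sketch. Everything in it is an assumption made for the example: the field names (`customer_name`, `email`, `order_id`, `amount`), the function names, and the deliberately simple email pattern are hypothetical and do not come from any particular application or tool.

```python
import re

# Deliberately simple pattern: one "@", no whitespace, a dot in the domain.
# It is an illustrative assumption, not a complete email address validator.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_order_form(form: dict) -> list[str]:
    """Business application layer: return error messages to show the user."""
    errors = []
    for field in ("customer_name", "email"):
        if not str(form.get(field, "")).strip():
            errors.append(f"{field} is required")
    email = str(form.get("email", "")).strip()
    if email and not EMAIL_PATTERN.match(email):
        errors.append("email is not a valid address")
    return errors


# Hypothetical contract for messages exchanged between services.
REQUIRED_MESSAGE_FIELDS = {"order_id": int, "email": str, "amount": float}


def validate_message(message: dict) -> list[str]:
    """Data communication layer: check a message before it is passed on."""
    errors = []
    for name, expected_type in REQUIRED_MESSAGE_FIELDS.items():
        if name not in message:
            errors.append(f"missing field: {name}")
        elif not isinstance(message[name], expected_type):
            errors.append(f"wrong type for {name}")
    return errors


def null_rate(rows: list[dict], column: str) -> float:
    """Data platform layer: share of rows in a batch with a missing value."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(column) in (None, ""))
    return missing / len(rows)


if __name__ == "__main__":
    print(validate_order_form({"customer_name": "Ann", "email": "ann@example"}))
    print(validate_message({"order_id": 1, "email": "ann@example.com"}))
    print(null_rate([{"email": "ann@example.com"}, {"email": ""}], "email"))
```

The first function runs while the user is still on the page, the second runs as data moves between services, and the third runs later over a stored batch. The same email problem can be caught at any of the three points, but the cost of fixing it grows the later it is found.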

What is data observability

Real-time data quality validation isn't always feasible. You might be working with a pre-built application that's difficult to modify, or the sheer volume of data might make real-time checks impractical. In these situations, rigorous testing of all data quality requirements using dedicated checks becomes crucial.

Some data quality issues, known as data anomalies, can slip past even the most thorough validation checks. These are unexpected patterns or behaviors in the data that emerge over time and require advanced analysis to detect. For example, imagine a data transformation process that suddenly misinterprets decimal separators after a change in server settings. The value "1,001" (intended to be close to 1.0) might get loaded as "1001" (one thousand and one). The loaded value is still a valid number, so such errors would not trigger typical validation rules, yet they could severely distort any analysis relying on that data.
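The decimal separator case can be reproduced in a few lines of Python. This is only a sketch under stated assumptions: `parse_decimal` and `is_anomalous` are hypothetical helpers written for this example, and the factor-of-ten threshold against the historical median is an arbitrary choice, not a recommended setting.

```python
from statistics import median


def parse_decimal(text: str, decimal_separator: str) -> float:
    """Parse a number, treating the other separator as a thousands mark."""
    thousands_separator = "," if decimal_separator == "." else "."
    cleaned = text.replace(thousands_separator, "").replace(decimal_separator, ".")
    return float(cleaned)


def is_anomalous(value: float, history: list[float], max_ratio: float = 10.0) -> bool:
    """Flag a value more than max_ratio times above or below the historical median."""
    typical = median(history)
    if typical == 0 or value == 0:
        return value != typical
    ratio = abs(value / typical)
    return ratio > max_ratio or ratio < 1 / max_ratio


# Hypothetical history of the same measurement from earlier loads.
history = [0.998, 1.002, 1.001, 0.999]

intended = parse_decimal("1,001", decimal_separator=",")  # comma is the decimal mark
misread = parse_decimal("1,001", decimal_separator=".")   # comma treated as thousands mark

if __name__ == "__main__":
    print(intended, is_anomalous(intended, history))
    print(misread, is_anomalous(misread, history))
```

Both parsed values are well-formed floats, so a type or format rule accepts either one. Only a comparison with what the column usually contains reveals that the second value is out of line.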

This is where data observability platforms, such as DQOps, prove invaluable. They continuously monitor your data stores (databases, data lakes, data warehouses, even flat files) for anomalies, schema changes (like new columns or altered data types), and delays in data processing that might indicate skipped validation steps. By combining time-series analysis with AI, these platforms can identify unusual trends and alert you to potential data quality problems that might otherwise go unnoticed.
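To make the idea concrete, the following Python sketch shows two of the simplest checks of this kind: comparing schema snapshots and flagging an unusual daily row count. It is not how DQOps or any other platform is implemented. The function names, the snapshot format (column name mapped to data type), and the three-standard-deviation threshold are all assumptions for illustration, and the z-score test stands in for the more advanced time-series analysis such platforms use.

```python
from statistics import mean, stdev


def schema_changes(previous: dict[str, str], current: dict[str, str]) -> list[str]:
    """Compare two column-name -> data-type snapshots of the same table."""
    changes = []
    for column in sorted(current.keys() - previous.keys()):
        changes.append(f"column added: {column}")
    for column in sorted(previous.keys() - current.keys()):
        changes.append(f"column removed: {column}")
    for column in sorted(previous.keys() & current.keys()):
        if previous[column] != current[column]:
            changes.append(
                f"type changed: {column} {previous[column]} -> {current[column]}"
            )
    return changes


def is_outlier(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of the earlier observations."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]
    return abs(latest - mean(history)) / spread > threshold


if __name__ == "__main__":
    yesterday = {"id": "INT", "amount": "DECIMAL"}
    today = {"id": "INT", "amount": "VARCHAR", "channel": "VARCHAR"}
    print(schema_changes(yesterday, today))

    # Hypothetical daily row counts for one table.
    daily_row_counts = [1000, 1020, 990, 1010, 1005]
    print(is_outlier(daily_row_counts, latest=120))
```

A sudden drop in row count like the one above often points to a delayed or partially failed load, which is the kind of problem that rule-based validation of individual records cannot see.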

How to learn more

Check out DQOps, an open-source data quality and observability platform.

The full version of the Data Architecture for Data Quality article has a higher-resolution infographic.