The Bot Awakens

DataGeek


Introduction

Welcome to the first step of our Chatbot journey! 🚀 In this blog, we’ll build a simple yet engaging chatbot application—complete with a sleek UI and powered by a dash of Generative AI magic. ✨ Whether you're a coding enthusiast or just getting started, this project is designed to be fun, beginner-friendly, and packed with exciting features.

So, what are we waiting for… (oh yeah, the Prerequisites!! 🛠️📋)

Prerequisites

Basic Knowledge of LangChain and Streamlit 🛠️

A GROQ API Key 🔑 (Don't have one? No worries, you can generate one from your GroqCloud account.)

VS Code 💻 (or your favorite code editor)

Most importantly, a love for coding ❤️

By the end of this blog, you’ll have created a fully functional chatbot with a polished UI and the satisfaction of building something cool from scratch! 🎉

Implementing a Simple Chatbot

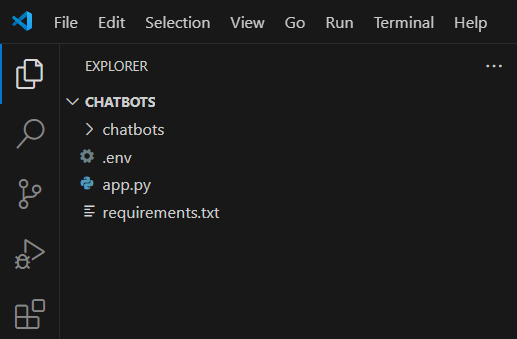

Step 1: Create the Project Directory

- First, let's get organized like a pro! Create a new folder for your chatbot project. Then, open it up in VS Code (or any IDE of your choice, we don't judge).

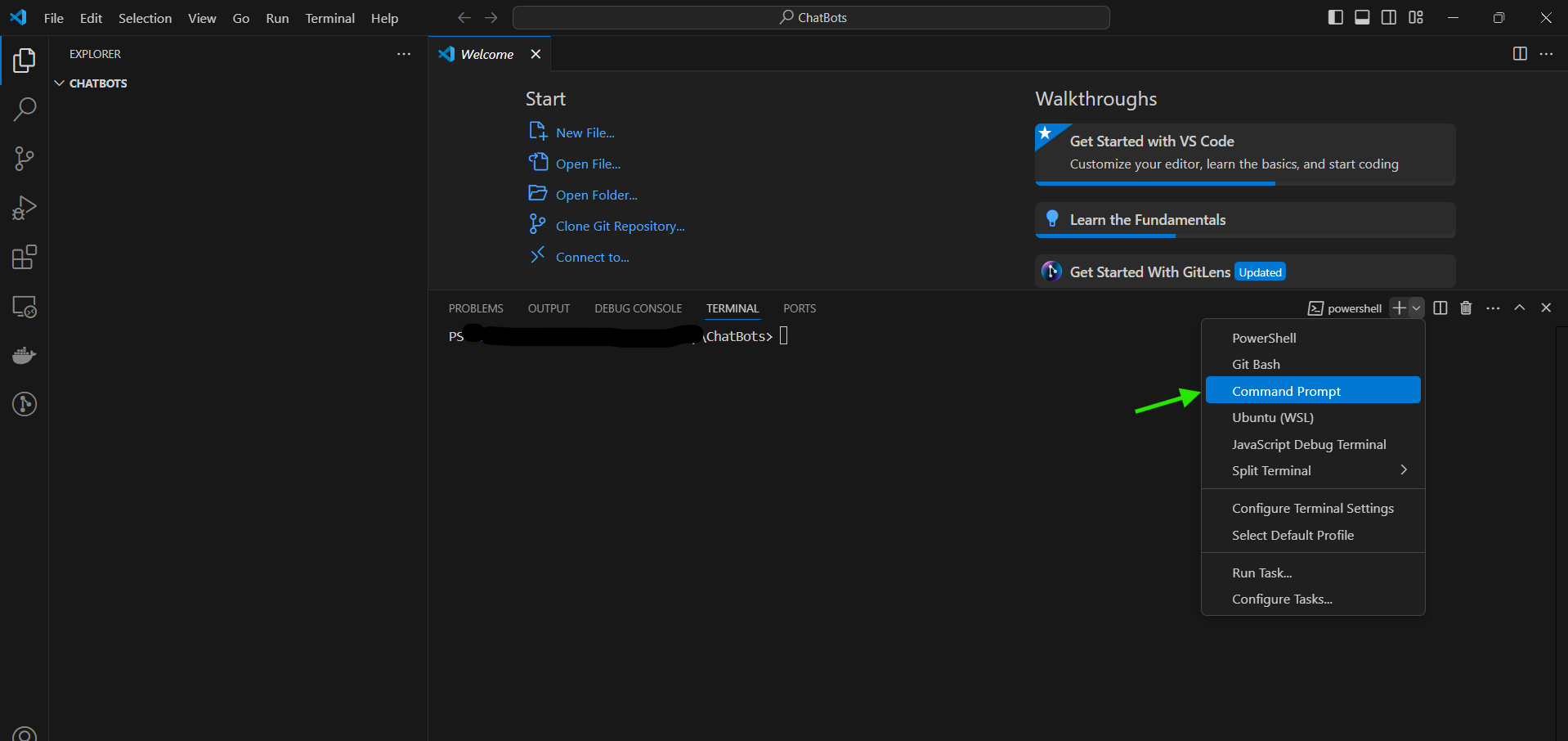

Next, open the terminal (just press Ctrl + `) and let's get to work.

Step 2: Creating a Virtual Environment

Why do we need a virtual environment? It’s like a clean, comfy home for your project, away from the mess of other projects. 🏠

To install Virtualenv

Run this in your terminal to install it:

pip install virtualenv

Create a Virtual Environment

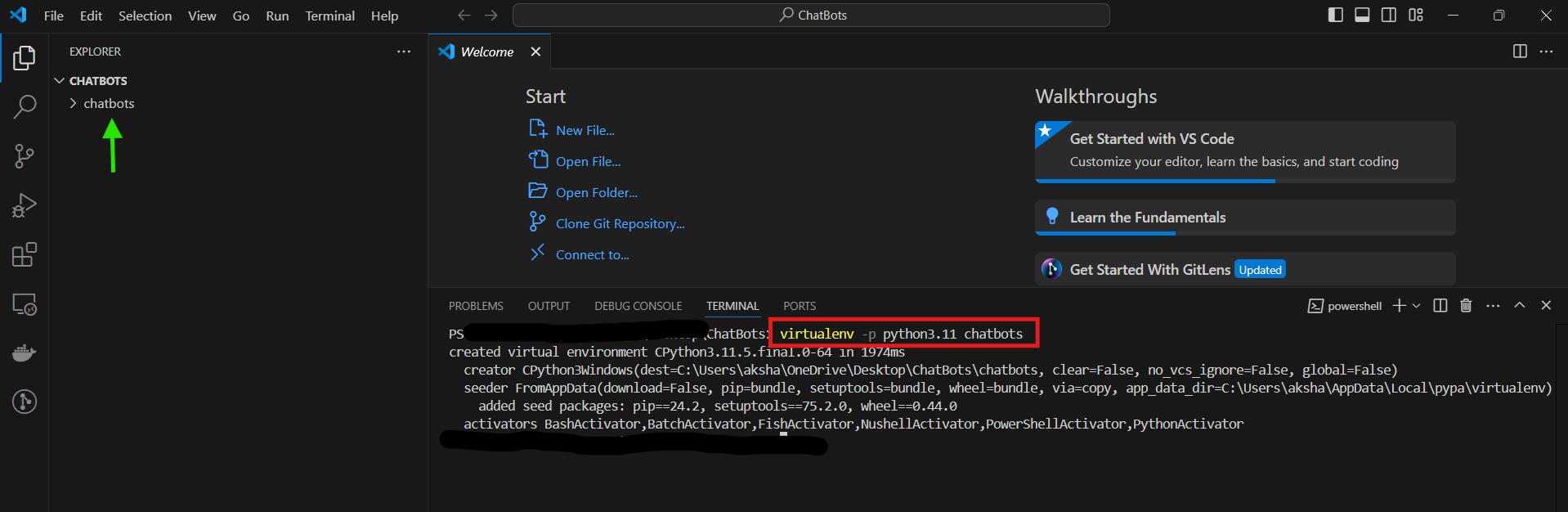

Now, let's create a virtual environment with a specific Python version (newer than 3.8), and make sure that version is installed on your machine.

virtualenv -p python3.11 {{env_name}}

Replace {{env_name}} with your own environment name. Be creative, it's your project!

After running the above command, a directory named {{env_name}} will appear in the root project directory.

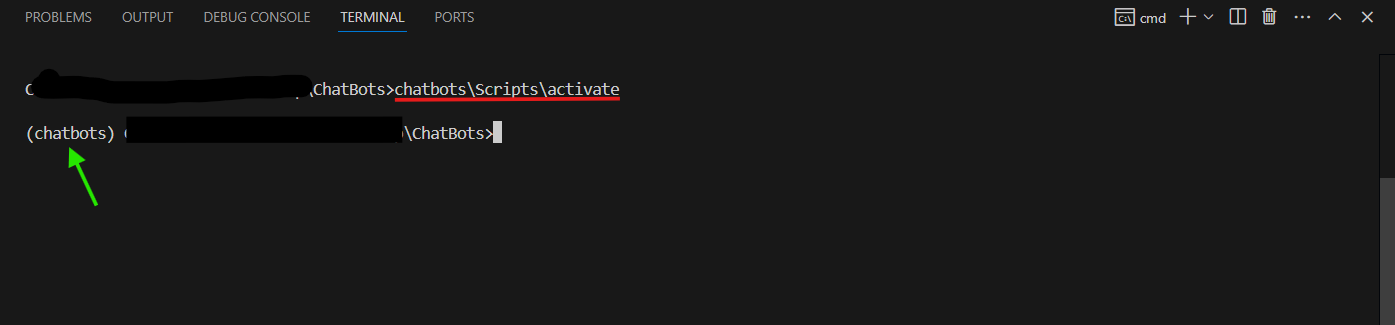

Activate Your Virtual Environment

To activate it on Windows, run:

{{env_name}}\Scripts\activate

On macOS or Linux, use source {{env_name}}/bin/activate instead. Remember to swap {{env_name}} with your cool environment name.
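Not sure whether the activation worked? Here is a minimal sketch for checking from Python itself. It relies on the standard-library behavior that, inside an environment created by venv or a recent virtualenv release, sys.prefix points at the environment folder while sys.base_prefix still points at the base installation. The helper name in_virtualenv is just an illustration, not part of any tool.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # Modern venv/virtualenv environments point sys.prefix at the env folder,
    # while sys.base_prefix still points at the base Python installation.
    # Very old virtualenv releases set sys.real_prefix instead.
    return sys.prefix != sys.base_prefix or hasattr(sys, "real_prefix")


if __name__ == "__main__":
    print("Virtual environment active:", in_virtualenv())
    print("Interpreter:", sys.executable)
```

Run it with python after activating; you should see True and an interpreter path inside your {{env_name}} folder.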

Step 3: Setting Up the Project Files

Now, let’s create some essential files for our chatbot's setup! ✨

.env → This file will hold your API key. (We’re all about security, right? 😎)

requirements.txt → This is where you’ll list all the dependencies your project needs.

app.py → This is the main working file where the magic happens! 🎩✨

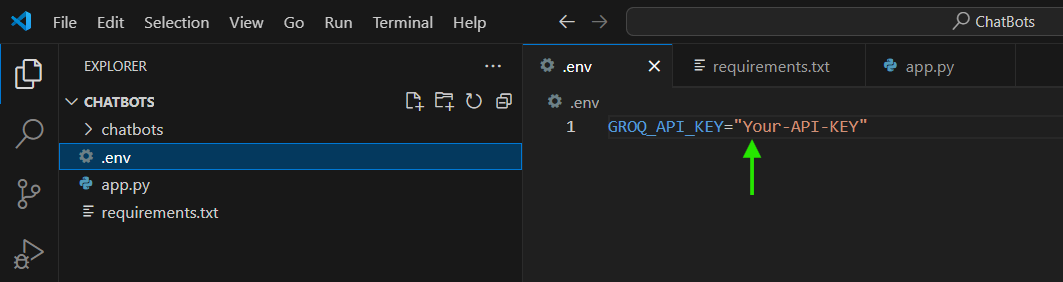

.env File Setup

Grab the GROQ API Key from your Groq Cloud account and add it to the .env file like this:

GROQ_API_KEY=your_groq_api_key_here

Save it and keep it safe. We don't want anyone snooping around. 🔒

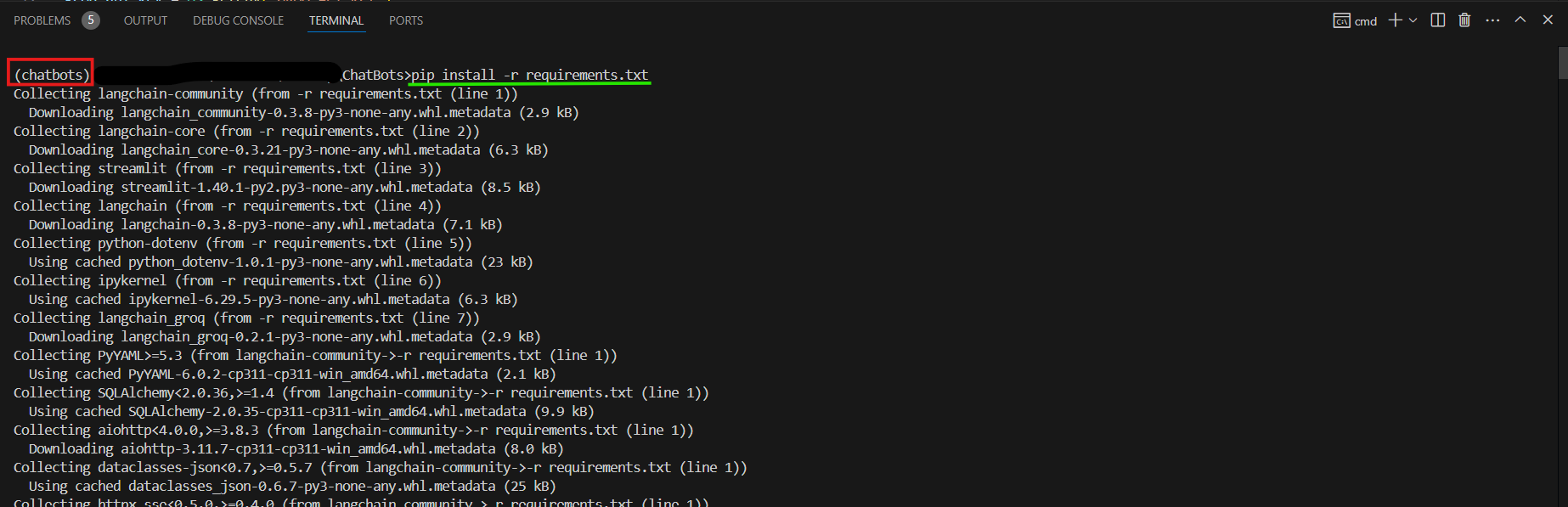
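Because os.getenv() quietly returns None when a key is missing, a typo in .env tends to surface later as a confusing authentication error. One option, sketched below, is a small fail-fast check. require_env is a hypothetical helper of our own, not part of python-dotenv or LangChain.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message."""
    value = os.getenv(name)
    # Treat a missing or blank value the same way: the app cannot work without it.
    if value is None or not value.strip():
        raise RuntimeError(
            f"{name} is not set. Add a line like {name}=your_key_here to your .env file "
            "and make sure load_dotenv() runs before this check."
        )
    return value


if __name__ == "__main__":
    try:
        key = require_env("GROQ_API_KEY")
        # Print only the length so the key itself never shows up in the terminal.
        print(f"GROQ_API_KEY found ({len(key)} characters)")
    except RuntimeError as err:
        print(err)
```

In app.py you could call require_env("GROQ_API_KEY") right after load_dotenv() instead of os.getenv("GROQ_API_KEY").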

requirements.txt File

Add the necessary dependencies to requirements.txt. Here's a sneak peek:

langchain-community   # Extends LangChain with community-built tools
langchain-core        # Core components for building LLM apps
streamlit             # Creates user-friendly web interfaces
langchain             # Enables LLM-based workflows and integrations
python-dotenv         # Manages environment variables securely
langchain_groq        # Integrates GROQ features with LangChain

After you've set that up, let's install all the goodies. Go ahead and run this in the terminal:

pip install -r requirements.txt

PS: Make sure your virtual environment is activated before running this. We want everything to live in its own little bubble! 🌈
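To confirm that everything landed inside the active environment (and not in your global Python), you can ask the standard library's importlib.metadata for each package's version. This is a sketch: the REQUIRED list simply mirrors the requirements.txt above, and running pip list gives you the same information by hand.

```python
from importlib import metadata
from typing import Dict, List, Optional

# Distribution names mirroring requirements.txt
REQUIRED = [
    "langchain-community",
    "langchain-core",
    "streamlit",
    "langchain",
    "python-dotenv",
    "langchain-groq",
]


def installed_versions(packages: List[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name:22} {version or 'NOT INSTALLED'}")
```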

Step 4: Creating the Main Working File → app.py

1.1 Importing Modules

import streamlit as st  # For developing a simple UI
from langchain_groq import ChatGroq  # To create a chatbot using Groq
from langchain_core.messages import HumanMessage, SystemMessage  # To specify system and human messages
from langchain_core.output_parsers import StrOutputParser  # To extract the response as plain text
import os  # To read environment variables
from dotenv import load_dotenv  # To access the GROQ_API_KEY
import time  # To introduce a delay for a more natural chatbot response

Why these imports? These libraries are the foundation of our chatbot. They handle the UI, chatbot brain, messages, secure API access, and response timing.

1.2 Loading the Environment Variables and Setting Up the Model

# Load environment variables from the .env file
load_dotenv()

# Retrieve Groq API key
groq_api_key = os.getenv("GROQ_API_KEY")

# Initialize the chatbot model
model = ChatGroq(model="Gemma2-9b-It", groq_api_key=groq_api_key)

# Define the output parser to format the chatbot's responses
parser = StrOutputParser()

We use load_dotenv() to load the .env file where our API key lives securely. groq_api_key is retrieved from the environment variables so our chatbot knows how to talk to Groq's AI brain 🧠.

The model, ChatGroq, is initialized with the key and a specific AI model (Gemma2-9b-It here). If you're feeling adventurous, you can swap the model name!

Then we initialize a StrOutputParser, which extracts the plain-text content from the model's reply so it is clear and user-friendly.

1.3 Creating the Streamlit UI

# Set the chatbot's title in the web app
st.title("ChatPal 🤖")

A friendly chatbot needs a friendly title! We use st.title() to set the chatbot's name as "ChatPal 🤖".

Streamlit makes it easy to add elements like titles, input boxes, and chat messages, so our chatbot feels alive!

1.4 session_state for Chat History

# Initialize chat history if not already set
if "history" not in st.session_state:
    st.session_state.history = []

# Display previous chat messages
for message in st.session_state.history:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

st.session_state ensures your chatbot remembers past conversations. Streamlit reruns the whole script every time you interact with the app, so without it, every rerun would be like talking to someone with short-term memory loss. 😅

If the history key doesn't exist, we initialize it as an empty list. This keeps all the chat messages neatly stored.

Displaying Messages: We loop through the stored messages so users can see the full conversation history.

In short, the history session_state variable stores the previous chats across UI reruns, and the loop below it displays those stored chats again after every rerun.
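Why is this needed? Because app.py runs again from top to bottom on every interaction. The toy simulation below (plain Python, with a dict named fake_session_state standing in for st.session_state) shows how an ordinary variable and session state behave across three reruns. It is a simplification: real session state belongs to one browser session, so refreshing the page starts with a fresh, empty history.

```python
# A toy model of Streamlit's execution: the whole script runs again on every
# interaction, so ordinary variables start fresh, while session state survives.
# fake_session_state is a plain dict standing in for st.session_state.
fake_session_state = {}


def run_script(user_message=None):
    """Simulate one rerun of the app script."""
    local_history = []  # an ordinary variable: recreated on every run
    if "history" not in fake_session_state:
        fake_session_state["history"] = []  # created only on the very first run
    if user_message is not None:
        local_history.append(user_message)
        fake_session_state["history"].append(user_message)
    return local_history, list(fake_session_state["history"])


run_script()                      # first page load, no input yet
run_script("Hi!")                 # user sends a message -> rerun
local, stored = run_script("How are you?")
print(local)   # ['How are you?']
print(stored)  # ['Hi!', 'How are you?']
```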

1.5 Chat Input and Prompt Handling

# Take user input and display it
if prompt := st.chat_input("Enter your text..."):
    st.chat_message("user").markdown(prompt)
    st.session_state.history.append({"role": "user", "content": prompt})

What is chat_input? (info)

Purpose: A user-friendly input box for chat applications.

Feature: Automatically handles "Enter to send" functionality, simplifying user interaction. It returns the submitted text on the rerun triggered by sending a message, and None otherwise.

What is chat_message? (info)

Purpose: A visual component to display chat messages in a conversation-style UI.

Feature: Differentiates between user and assistant roles ("user" or "assistant") and supports rich formatting with .markdown().

In short, this block takes the user's input via st.chat_input, stores it in the prompt variable, displays it as a chat message using st.chat_message, and appends it to st.session_state.history for persistence and reuse.
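The := (walrus) operator, available since Python 3.8, assigns a value and tests it in the same expression. Below is a sketch with a hypothetical fake_chat_input standing in for st.chat_input (which returns the submitted text or None), next to the equivalent long-hand version.

```python
from typing import Optional


def fake_chat_input(submitted: Optional[str]) -> Optional[str]:
    """Stand-in for st.chat_input: returns the text once submitted, else None."""
    return submitted


def handle(submitted: Optional[str]) -> str:
    # The walrus operator assigns to prompt and tests it in one expression.
    if prompt := fake_chat_input(submitted):
        return f"user said: {prompt}"
    return "waiting for input"


def handle_longhand(submitted: Optional[str]) -> str:
    # Equivalent version without :=
    prompt = fake_chat_input(submitted)
    if prompt:
        return f"user said: {prompt}"
    return "waiting for input"


print(handle(None))     # waiting for input
print(handle("Hello"))  # user said: Hello
```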

1.6 Building the Chatbot’s Response

    # (Still inside the if prompt := ... block)
    # Define system behavior and pass user input
    messages = [
        SystemMessage(content="You are a friendly chatbot. THINK carefully, respond politely, and answer precisely."),
        HumanMessage(content=prompt),
    ]

    # Invoke the model with user and system messages
    result = model.invoke(messages)

A SystemMessage tells the chatbot how to behave. Think of it as the chatbot's personality manual: friendly, polite, and precise.

A HumanMessage contains the user's query.

We send these messages to the AI model using model.invoke(). The model processes them and returns its reply as an AIMessage object.

1.7 Delaying the Response Display

    # (Still inside the if prompt := ... block)
    # Generate the bot's response with a natural delay
    def response_generator(result):
        response = parser.invoke(result)
        for word in response.split():
            yield word + " "
            time.sleep(0.05)

    # Display the response as it's generated
    with st.chat_message("assistant"):
        response = st.write_stream(response_generator(result))

    # Save the response in chat history
    st.session_state.history.append({"role": "assistant", "content": response})

Bots responding instantly can feel too... robotic. So, we add a delay to simulate a human typing.

The response_generator first parses the model's reply into plain text, then splits it into words and yields them one at a time with a slight pause (time.sleep(0.05)). This creates a natural flow, like the bot is really thinking. 🤔

st.write_stream() renders those words inside st.chat_message("assistant") as they arrive and returns the full response text once the stream ends.

Both the user's input and the bot's response are saved in st.session_state.history, so the whole conversation is displayed again after every rerun. (The model itself still only sees the latest message.)
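One caveat: str.split() discards newlines, so a multi-line answer (a bulleted list, a code snippet) arrives as one run-on paragraph. A possible alternative, sketched below, splits with re.split() and a capturing group so the whitespace itself is streamed too. stream_words is a hypothetical name, not a Streamlit or LangChain function.

```python
import re
import time
from typing import Iterator


def stream_words(text: str, delay: float = 0.05) -> Iterator[str]:
    """Yield text piece by piece, pausing between words, without losing whitespace."""
    # re.split with a capturing group keeps the separators (spaces, newlines)
    # in the result, so Markdown line breaks and lists survive streaming.
    for piece in re.split(r"(\s+)", text):
        if piece:  # skip the empty strings re.split can produce at the edges
            yield piece
            if not piece.isspace():
                time.sleep(delay)  # pause only after actual words


sample = "Here are two tips:\n- Stay hydrated\n- Sleep well"
print("".join(stream_words(sample, delay=0)) == sample)  # True
print(" ".join(sample.split()) + " ")  # what the split()-based generator streams instead
```

Inside app.py, response_generator could then end with yield from stream_words(parser.invoke(result)); st.write_stream() still receives string chunks, so nothing else needs to change.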

Complete Code

- Here’s the polished version of the entire chatbot code we’ve walked through step by step:

import streamlit as st  # For developing a simple UI
from langchain_groq import ChatGroq  # To create a chatbot using Groq
from langchain_core.messages import HumanMessage, SystemMessage  # To specify system and human messages
from langchain_core.output_parsers import StrOutputParser  # To extract the response as plain text
import os
from dotenv import load_dotenv  # To access the GROQ_API_KEY
import time

# Loading environment variables that are set in the .env file
load_dotenv()

# Retrieving the Groq API key from environment variables
groq_api_key = os.getenv("GROQ_API_KEY")

# Initializing the Groq-based model
# Replace the model name if you want to use a different one
model = ChatGroq(model="Gemma2-9b-It", groq_api_key=groq_api_key)

# Defining the output parser -> extracts the response text from the model's message
parser = StrOutputParser()

# Creating a Streamlit UI
st.title("ChatPal 🤖")

# Store the chat history in a "history" session_state variable so it survives UI reruns
if "history" not in st.session_state:
    st.session_state.history = []

# Display the chat history again after every rerun
for message in st.session_state.history:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

# Taking input from the user
# The := operator assigns the input to prompt and checks that the user actually sent something
# (st.chat_input returns None until a message is submitted)
if prompt := st.chat_input("Enter your text..."):
    # Display the input given by the user
    st.chat_message("user").markdown(prompt)
    # Append the user message to the history session_state variable
    st.session_state.history.append({"role": "user", "content": prompt})

    messages = [
        # Instructions given to the model -> can be used to customize its behavior
        SystemMessage(content="You are a friendly chatbot, also THINK and process the user question carefully and answer the questions asked by the user precisely and in a polite tone"),
        # Query asked by the user
        HumanMessage(content=prompt),
    ]

    # Invoking the model
    result = model.invoke(messages)

    # To add a bit of delay to the displayed response
    def response_generator(result):
        # Parsing the result into a readable response
        response = parser.invoke(result)
        for word in response.split():
            yield word + " "
            time.sleep(0.05)

    # Display the response given by the model
    with st.chat_message("assistant"):
        response = st.write_stream(response_generator(result))

    # Append the response message to the history session_state variable
    st.session_state.history.append({"role": "assistant", "content": response})

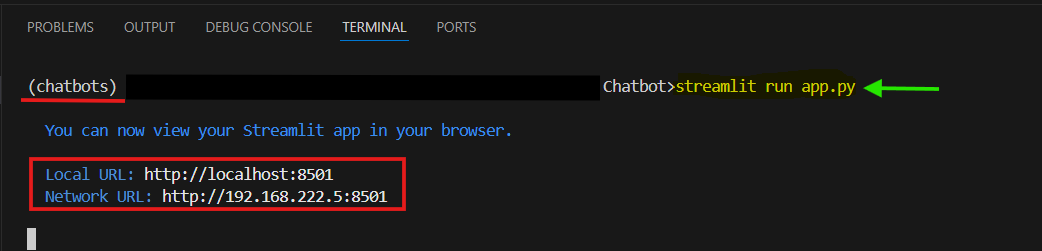

Step 5: Run and Access the Application

Now that you've finished building your chatbot 🎉, it's time to let it shine in action! 🚀

Run the Application

Open your terminal, ensure your virtual environment is active, and execute the following command to start the app:

streamlit run {{app_name.py}}

Replace {{app_name.py}} with the name of your main Python file (e.g., app.py).

Streamlit prints a local URL in the terminal (http://localhost:8501 by default). Open it in your web browser to access the application.

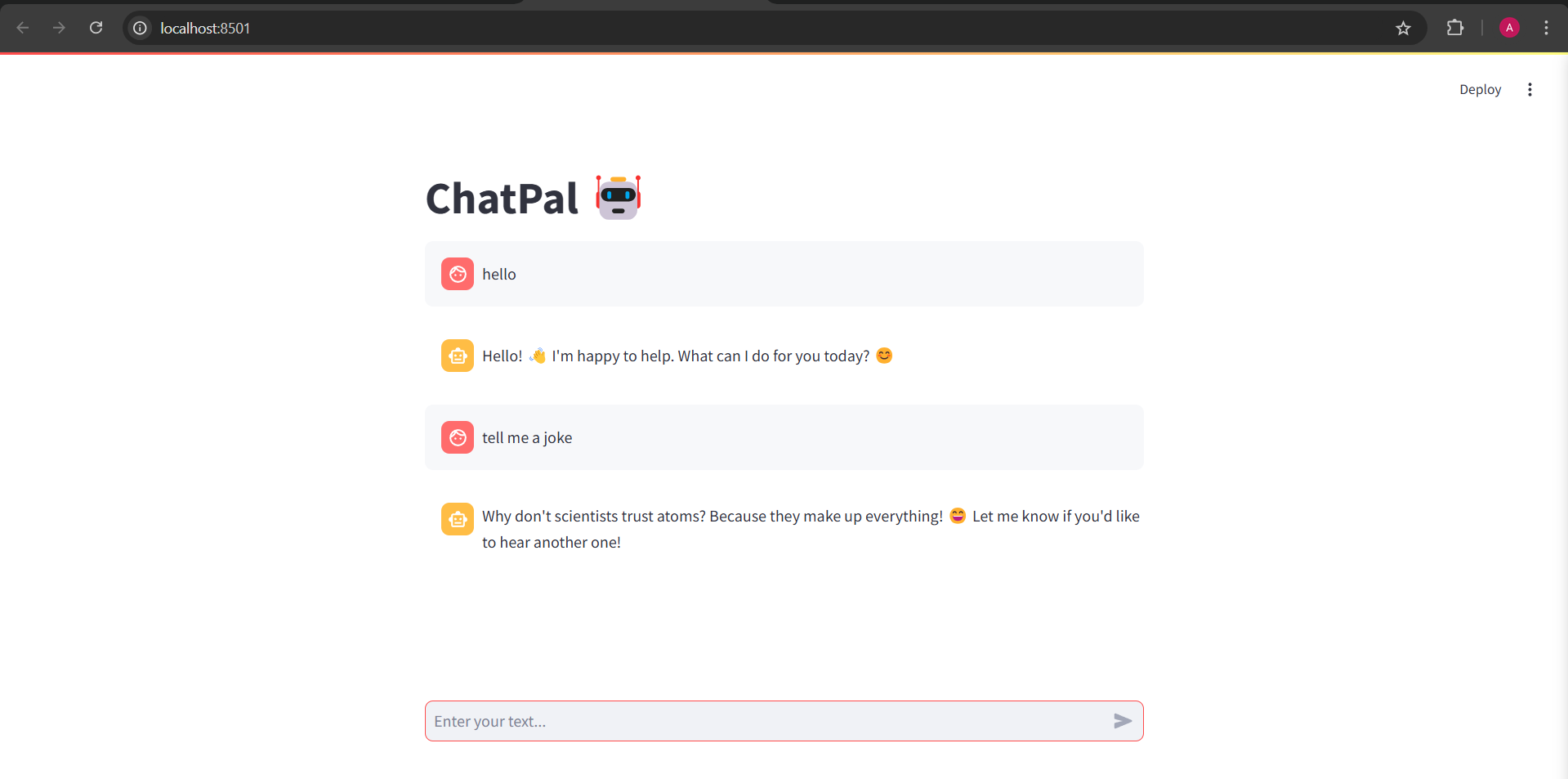

Demonstration

And that’s a wrap! 🎉

Congratulations, coder! You’ve officially stepped into the realm of Chatbots. Your chatbot is now alive, friendly, and ready to chat. Not to flex, but ChatPal is proud to call you its creator—and, honestly, so am I! 😄

What's Next?

While ChatPal can hold a chat like a pro, wouldn’t it be amazing if it remembered who you are, your past interactions, or even some preferences? 🤔

Coming up next: We'll dive into building a chatbot with memory, where your bot doesn't just respond, it also remembers! From recalling previous questions to maintaining context over long conversations, we're about to level up the chatbot game. 🚀

Stay tuned for the next blog, where we’ll teach ChatPal how to remember you better than your EX ever did! 😄


Written by

DataGeek


Data Enthusiast with proficiency in Python, Deep Learning, and Statistics, eager to make a meaningful impact through contributions. Currently working as an R&D Intern at PTC. I'm a passionate, energetic, and geeky individual whose desire to learn is endless.