Mastering Chatbot Memory: A Practical Guide

DataGeek


Introduction

Previously on ChatMania (dramatic music playing 🎶), we proudly introduced ChatPal, our shiny new chatbot. It could answer questions, handle small talk, and even fake politeness. But let’s face it—ChatPal had the memory of Dory from Finding Nemo 🐠.

Imagine telling ChatPal your favorite movie was Inception, only for it to forget seconds later and reply with, “What’s a movie?” 🎥🤦‍♀️

So, What’s Missing?

What it lacked was memory—the ability to remember past interactions and use them to provide contextually rich responses. Memory in chatbots isn’t just a fancy add-on; it’s the secret sauce 🥘 that makes conversations more meaningful, personal, and downright smarter 🤖✨.

Imagine chatting with a bot that remembers your preferences, prior questions, and even your terrible jokes. It’s not just a chatbot anymore—it’s your virtual buddy 🤝.

In this blog, we’ll dive into the world of chatbot memory. We’ll explore what it is, why it’s crucial, and how to implement it.

Specifically, we’ll take the first step by feeding ChatPal some Almonds... ha, just kidding! 😂 Instead, we’ll dive into In-Prompt Memory techniques using LangChain’s older memory classes to build a smarter, more reliable chatbot 🧠.

So, put on your coding goggles 👓 and prepare to add some brainpower 🧠 to ChatPal. By the time we’re done, your chatbot will go from forgetful to unforgettable 💡✨.

Types of Memory Implementation

When it comes to making chatbots remember, there’s no one-size-fits-all solution. The choice of memory implementation depends on your use case and the complexity of your bot. Broadly, we have two main approaches:

In-Prompt Memory (Quick and Temporary) 🧠

External Memory (Scalable and Persistent) 📂

1. In-Prompt Memory 🧩

This method involves storing memory directly within the chatbot's environment—like passing memory variables along with the user query in the LLM (Large Language Model) prompt. ✍️ It’s quick, easy to set up, and works well for simple tasks where long-term memory isn't needed.

In-Prompt Memory can be implemented using LangChain’s built-in memory classes. 🛠️ The older versions of LangChain include several of them; six commonly used ones are:

ConversationBufferMemory

Stores the entire conversation history in a buffer, retaining all messages.

ConversationBufferWindowMemory

Stores a fixed window of the conversation, keeping only the most recent k exchanges.

ConversationSummaryMemory

Summarizes the conversation and stores a brief summary instead of the full history.

ConversationSummaryBufferMemory

A hybrid approach: it keeps the most recent messages verbatim in a buffer and folds older messages into a running summary once the buffer exceeds a token limit.

Entity Memory (ConversationEntityMemory)

Focuses on storing specific entities (e.g., names, dates, locations) from the conversation for easy retrieval.

Graph Memory (ConversationKGMemory)

Uses a knowledge-graph structure to link entities and their relationships over the conversation.
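To make the differences between the first few classes concrete, here is a minimal, framework-free sketch of how the buffer, window, and summary-buffer strategies decide what gets sent to the model. It is not LangChain’s implementation, just an illustration under stated assumptions: the helper names are hypothetical, the window counts k exchanges as k human/AI pairs, the summary buffer trims by message count instead of tokens, and `fake_summarize` stands in for the LLM call that would write the summary.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (role, text), e.g. ("human", "Hi!")


def buffer_memory(messages: List[Message]) -> List[Message]:
    """Buffer: keep every message."""
    return list(messages)


def window_memory(messages: List[Message], k: int) -> List[Message]:
    """Window: keep only the last k exchanges (k human/AI pairs = 2k messages)."""
    return list(messages[-2 * k:]) if k > 0 else []


def summary_buffer_memory(
    messages: List[Message],
    max_recent: int,
    summarize: Callable[[List[Message]], str],
) -> List[Message]:
    """Summary buffer: keep the newest messages verbatim, fold older ones into a summary."""
    if max_recent < 1:
        raise ValueError("max_recent must be at least 1")
    if len(messages) <= max_recent:
        return list(messages)
    older, recent = messages[:-max_recent], messages[-max_recent:]
    return [("system", summarize(older))] + list(recent)


def fake_summarize(older: List[Message]) -> str:
    # Placeholder for the LLM call that would write a real summary
    return f"Summary of {len(older)} earlier messages"


chat = [
    ("human", "My favorite movie is Inception."),
    ("ai", "Great pick!"),
    ("human", "What about you?"),
    ("ai", "I like it too."),
    ("human", "Remind me of my favorite movie?"),
]
print(len(buffer_memory(chat)))                        # 5
print(window_memory(chat, k=1))                        # only the last 2 messages
print(summary_buffer_memory(chat, 2, fake_summarize))  # summary + last 2 messages
```

Note that with `k=2` the window already drops the message mentioning Inception, which is exactly the trade-off of the window approach: bounded prompt size in exchange for forgetting older details.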

In the newer version of LangChain, memory is simplified into three core classes:

BaseChatMessageHistory

An abstract base class for message history, allowing for custom storage backends.

ChatMessageHistory

A simple in-memory class to manage message history in a chat, focusing on storing and retrieving messages.

RunnableWithMessageHistory

Wraps a chain or model and automatically loads and saves the message history for each session, ideal for workflows where context is essential.

Workflow of In-Prompt Memory:

How It Works:

Step 1: The chatbot retains the history of the conversation in a variable.

Step 2: For every new user query, this memory is appended to the prompt and sent to the model.

Step 3: The model uses this contextual memory to craft a relevant response.
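The three steps above can be sketched in plain Python. This is a minimal illustration, not LangChain code: `build_prompt`, `chat_turn`, and `fake_llm` are hypothetical names, and `fake_llm` only pretends to be a model by searching the prompt it receives, so you can see the history doing the remembering.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (speaker, text) pairs kept between turns


def build_prompt(history: History, user_input: str) -> str:
    """Step 2: put the stored history in front of the new query."""
    lines = [f"{speaker}: {text}" for speaker, text in history]
    lines.append(f"Human: {user_input}")
    lines.append("AI:")
    return "\n".join(lines)


def chat_turn(history: History, user_input: str, llm: Callable[[str], str]) -> str:
    prompt = build_prompt(history, user_input)  # Step 2: history + new query
    reply = llm(prompt)  # Step 3: the model answers with that context
    history.append(("Human", user_input))  # Step 1: remember this exchange
    history.append(("AI", reply))
    return reply


def fake_llm(prompt: str) -> str:
    """Stand-in model: it 'remembers' only what is present in its prompt."""
    last_line = prompt.splitlines()[-2]  # the "Human: ..." line just before "AI:"
    if not last_line.endswith("?"):
        return "Noted!"
    return "Your favorite movie is Inception!" if "Inception" in prompt else "What's a movie?"


history: History = []
chat_turn(history, "My favorite movie is Inception.", fake_llm)
print(chat_turn(history, "What's my favorite movie?", fake_llm))
```

With an empty history, the same question gets “What’s a movie?”, which is the forgetful ChatPal from the introduction.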

2. External Memory 🗂️

For chatbots handling large-scale interactions or requiring memory persistence, external memory is the go-to approach. 🛠️ Here, the chatbot stores interaction history in a database, often a Vector Database 📂, and retrieves relevant information when needed.

External memory can be implemented using vector databases such as:

FAISS, ChromaDB, Pinecone, etc.

Workflow of External Memory:

How It Works:

Step 1: All interactions are stored in an external database.

Step 2: When a user query is received, relevant past interactions are retrieved using similarity search.

Step 3: This retrieved memory is added to the query context and used to generate a response.
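Here is a toy version of those three steps. It is only a sketch under loud assumptions: a plain Python list stands in for a vector database such as FAISS or ChromaDB, and a bag-of-words word count stands in for a real embedding model. `ToyMemoryStore`, `embed`, and `cosine` are hypothetical names, but the flow (store, similarity search, add to prompt) is the same.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (a real app would call an embedding model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyMemoryStore:
    """In-process list standing in for FAISS, ChromaDB, Pinecone, etc."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Step 1: store every interaction together with its vector
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> List[str]:
        # Step 2: rank stored interactions by similarity to the query
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = ToyMemoryStore()
store.add("User: My favorite movie is Inception.")
store.add("User: I live in Berlin.")
store.add("User: I prefer pineapple on pizza.")

query = "What is my favorite movie?"
context = store.search(query, k=1)
# Step 3: add the retrieved memory to the prompt sent to the model
prompt = "Relevant memory:\n" + "\n".join(context) + f"\n\nHuman: {query}\nAI:"
print(prompt)
```

Only the most relevant memory reaches the prompt, which is why this approach scales better than resending the whole conversation.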

Complete Types of Memory

Now that we’ve explored the two primary approaches, here’s a complete diagram summarizing the memory implementation landscape:

Implementing a Chatbot with Memory

Prerequisite

Before diving into this section, ensure you’ve gone through the previous blog to understand:

Basic chatbot structure: UI creation with Streamlit.

Setting up a language model: Using LangChain's ChatGroq.

Handling conversations: Managing user inputs, responses, and chat history.

This article builds on those fundamentals by introducing conversation memory for chatbots.

- In this section, we’ll take the foundation laid in steps 1 to 3 from the previous article and build upon it. Don’t worry, we’re not starting from scratch! All we need to do now is tweak the app.py file to bring ChatPal’s memory to life. 🧠✨

Step 4: Creating the Main Working File → app.py

1.1 Importing Modules

```python
import streamlit as st  # For developing a simple UI
from langchain_groq import ChatGroq  # To create a chatbot using Groq
from langchain_core.messages import HumanMessage, SystemMessage  # To specify System and Human messages
from langchain_core.output_parsers import StrOutputParser  # To view the output in proper format
import os  # To interact with the operating system
from dotenv import load_dotenv  # To access environment variables like GROQ_API_KEY
import time  # To add smooth delays in chatbot responses

# For managing different types of conversation memory
from langchain.memory import (
    ConversationBufferMemory,
    ConversationSummaryMemory,
    ConversationBufferWindowMemory,
    ConversationSummaryBufferMemory,
)
from langchain.chains import ConversationChain  # To manage the chatbot's conversation flow
```

New Imports:

Memory Modules: Manage how the chatbot remembers conversations.

ConversationChain: Facilitates seamless conversation flow with memory integration.

1.2 Loading the Environment Variables and Setting Up the Model

```python
load_dotenv()  # Load .env variables
groq_api_key = os.getenv("GROQ_API_KEY")  # Retrieve API key
model = ChatGroq(model="Gemma2-9b-It", groq_api_key=groq_api_key)  # Initialize the model
```

- This is the same setup as the previous chatbot. Ensure your .env file has the correct GROQ_API_KEY.
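If the key is missing, `os.getenv` silently returns `None`, and the problem may only surface later as a less obvious error from the Groq client. A small guard makes the failure explicit. This is a hypothetical helper, not part of python-dotenv or LangChain, and it assumes your `.env` file contains a line such as `GROQ_API_KEY=your_key_here`.

```python
import os


def require_env(name: str) -> str:
    """Hypothetical helper: return an environment variable or fail with a clear message."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(
            f"{name} is not set. Add a line like '{name}=your_key_here' to your .env file."
        )
    return value
```

In app.py you would call `groq_api_key = require_env("GROQ_API_KEY")` right after `load_dotenv()`.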

1.3 Memory Type Selection

- First, we let users choose their preferred memory type via a Streamlit sidebar:

```python
st.sidebar.title("Settings")
memory_type = st.sidebar.selectbox(
    "Select Memory Type",
    [
        "ConversationBufferMemory",
        "ConversationBufferWindowMemory",
        "ConversationSummaryMemory",
        "ConversationSummaryBufferMemory",
    ],
)
```

- Using a Streamlit sidebar, the user can select a memory type. This dropdown ensures flexibility, allowing the chatbot to adapt its memory strategy dynamically.

1.4 Memory Initialization

- Once the user picks their memory type, we initialize the corresponding memory module.

```python
if "memory" not in st.session_state or st.session_state.memory_type != memory_type:
    if memory_type == "ConversationBufferMemory":
        st.session_state.memory = ConversationBufferMemory(return_messages=True)
    elif memory_type == "ConversationBufferWindowMemory":
        st.session_state.memory = ConversationBufferWindowMemory(k=5, return_messages=True)
    elif memory_type == "ConversationSummaryMemory":
        st.session_state.memory = ConversationSummaryMemory(llm=model, return_messages=True)
    elif memory_type == "ConversationSummaryBufferMemory":
        st.session_state.memory = ConversationSummaryBufferMemory(llm=model, return_messages=True)
    st.session_state.memory_type = memory_type
```

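The memory selected by the user is stored in a session_state variable, so it survives Streamlit’s reruns (the script reruns on every interaction), though a full browser refresh starts a new session and clears it.

If you change the memory type mid-chat, a fresh memory object of the new type is created, so the chatbot starts remembering from that point onward; earlier turns stay visible in the chat window but are not carried into the new memory.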
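To see why switching types starts with an empty memory, here is a plain-dict simulation of the initialization logic above, runnable without Streamlit. `FakeMemory` and `get_memory` are hypothetical stand-ins for a LangChain memory object and the `if` block; the condition is the same one the app uses.

```python
class FakeMemory:
    """Hypothetical stand-in for a LangChain memory object."""

    def __init__(self, kind: str) -> None:
        self.kind = kind
        self.messages: list = []


def get_memory(state: dict, memory_type: str) -> FakeMemory:
    # Same condition as the app: create memory on first run or when the type changes
    if "memory" not in state or state["memory_type"] != memory_type:
        state["memory"] = FakeMemory(memory_type)
        state["memory_type"] = memory_type
    return state["memory"]


session_state: dict = {}
mem = get_memory(session_state, "ConversationBufferMemory")
mem.messages.append("Human: My favorite movie is Inception.")

# A rerun with the same selection keeps the same object...
print(get_memory(session_state, "ConversationBufferMemory") is mem)  # True
# ...but switching the dropdown creates a fresh, empty memory.
new_mem = get_memory(session_state, "ConversationSummaryMemory")
print(len(new_mem.messages))  # 0
```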

1.5 Building the Chat Flow

- The chatbot uses a ConversationChain to manage the interaction:

```python
conversation = ConversationChain(llm=model, verbose=True, memory=st.session_state.memory)
```

- This connects the large language model with the selected memory type, enabling the chatbot to provide context-aware responses.

What is ConversationChain?

ConversationChain is a LangChain utility that handles the flow of a chatbot conversation. It connects the selected memory type with the language model (LLM) to manage dialogue coherently. Here's what it does:

Integrates Memory: Utilizes the chosen memory type to store and retrieve context.

Processes Input: Takes user queries and provides AI-generated responses.

Maintains Flow: Ensures smooth conversation continuity by using stored memory effectively.

In essence, it acts as the bridge between user input, memory, and the chatbot's responses.
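The cycle ConversationChain runs on each `predict()` call can be sketched without LangChain: load the memory, format the prompt, call the LLM, then save the turn back into memory. `MiniMemory` and `MiniConversationChain` below are hypothetical simplifications, not the library’s classes. The key point is that saving happens inside `predict()` (the real chain calls its memory’s `save_context` after each run), so adding the same messages to memory by hand afterwards would store every turn twice.

```python
from typing import Callable, List


class MiniMemory:
    """Hypothetical, simplified stand-in for a buffer memory."""

    def __init__(self) -> None:
        self.messages: List[str] = []

    def load(self) -> str:
        return "\n".join(self.messages)

    def save_context(self, user_input: str, output: str) -> None:
        self.messages.append(f"Human: {user_input}")
        self.messages.append(f"AI: {output}")


class MiniConversationChain:
    """Hypothetical sketch of the load -> prompt -> LLM -> save cycle."""

    def __init__(self, llm: Callable[[str], str], memory: MiniMemory) -> None:
        self.llm = llm
        self.memory = memory

    def predict(self, user_input: str) -> str:
        prompt = f"{self.memory.load()}\nHuman: {user_input}\nAI:"  # history + new input
        output = self.llm(prompt)
        self.memory.save_context(user_input, output)  # the turn is saved automatically
        return output


memory = MiniMemory()
chain = MiniConversationChain(llm=lambda prompt: "Hello!", memory=memory)
chain.predict("Hi there")
print(memory.messages)  # ['Human: Hi there', 'AI: Hello!']
```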

1.6 Creating the Chat UI

```python
st.title("Synapse 🧠")  # Give your bot a new identity!

# Optionally display a chat summary
if memory_type in ["ConversationSummaryMemory"]:
    if st.sidebar.button("Show Chat Summary"):
        with st.sidebar:
            st.write("### Chat Summary")
            try:
                # `buffer` holds the running summary as plain text
                summary = st.session_state.memory.buffer or "No summary available."
                st.write(summary)
            except Exception as e:
                st.error(f"Error loading summary: {e}")
```

- Here, we set the chatbot’s name and, for the summary memory type (ConversationSummaryMemory), add a sidebar button that lets users view a summary of the conversation between the bot and the user.

1.7 Handling Chat History and User Input

```python
if "history" not in st.session_state:
    st.session_state.history = []  # Initialize history

# Display chat history -> ensures previous chats are displayed after each rerun.
for message in st.session_state.history:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

# Capture user input
if prompt := st.chat_input("Enter your text..."):
    st.chat_message("user").markdown(prompt)
    st.session_state.history.append({"role": "user", "content": prompt})
```

Here, the history session_state variable is initialized to store previous chats, so they persist across Streamlit’s reruns. A loop displays these stored chats, making the conversation feel continuous.

Next, the user’s input is captured using st.chat_input, stored in the prompt variable, displayed in the chat using st.chat_message, and appended to st.session_state.history for persistence and reusability.

1.8 Generating and Displaying Responses

```python
# (continues inside the `if prompt := ...` block from 1.7)
    result = conversation.predict(input=prompt)  # Get model response; this call also saves the turn to memory

    # Function to display the response in human typing format
    def response_generator(result_text):
        for word in result_text.split(" "):  # split(" ") keeps line breaks intact for Markdown
            yield word + " "
            time.sleep(0.05)  # Word-by-word delay

    # Display bot's response; st.write_stream renders chunks as they arrive and returns the full text
    with st.chat_message("assistant"):
        response = st.write_stream(response_generator(result))

    # Update the display history. No manual memory update is needed:
    # conversation.predict() already saved the user input and the reply.
    st.session_state.history.append({"role": "assistant", "content": response})
```

Here, the user’s input (prompt) is passed to conversation.predict(), which generates a context-aware reply based on the selected memory type.

Natural Flow: A response generator yields the reply word by word with a slight delay (time.sleep(0.05)), and st.write_stream (available in Streamlit 1.31 and later) renders each chunk as it arrives, simulating a typing effect for a human-like interaction. Simply joining the generator with "".join() would only add a pause before the full reply appears at once.

The assistant’s reply is shown using st.chat_message("assistant"), ensuring clarity in the conversation flow.

Finally, the reply is appended to history so it is redrawn after every rerun. There is no need to add the messages to the chatbot’s memory by hand: ConversationChain saves each user input and reply to its memory inside predict(), so adding them again would store every exchange twice.
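The typing effect can be tested on its own. Below is a standalone version of the response generator with the delay made configurable (the `delay` parameter is an addition for illustration). Splitting on single spaces keeps `\n` characters inside the chunks, so Markdown lists and paragraphs survive streaming, whereas `str.split()` with no arguments would merge everything onto one line.

```python
import time
from typing import Iterator


def response_generator(result_text: str, delay: float = 0.05) -> Iterator[str]:
    """Yield the text word by word; split(" ") preserves embedded line breaks."""
    for word in result_text.split(" "):
        yield word + " "
        time.sleep(delay)


text = "Top picks:\n- Inception\n- Interstellar"
streamed = "".join(response_generator(text, delay=0))
print(repr(streamed))
```

In the app, the generator is handed to `st.write_stream(...)`, which consumes it chunk by chunk.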

With this, our code implementation is complete—your chatbot is now memory-ready and good to go! 🚀

Complete Code

- Here's the final version of the chatbot code we just built together:

```python
import streamlit as st  # For developing a simple UI
from langchain_groq import ChatGroq  # To create a chatbot using Groq
from langchain_core.messages import HumanMessage, SystemMessage  # To specify System and Human messages
from langchain_core.output_parsers import StrOutputParser  # To view the output in proper format
import os  # To interact with the operating system
from dotenv import load_dotenv  # To access environment variables like GROQ_API_KEY
import time  # To add smooth delays in chatbot responses

# For managing different types of conversation memory
from langchain.memory import (
    ConversationBufferMemory,
    ConversationSummaryMemory,
    ConversationBufferWindowMemory,
    ConversationSummaryBufferMemory,
)
from langchain.chains import ConversationChain  # To manage the chatbot's conversation flow

# Load environment variables from .env file
load_dotenv()

# Retrieve Groq API key for accessing the language model
groq_api_key = os.getenv("GROQ_API_KEY")

# Initialize the ChatGroq model with specified configuration
model = ChatGroq(model="Gemma2-9b-It", groq_api_key=groq_api_key)

# Sidebar for memory type selection
st.sidebar.title("Settings")
memory_type = st.sidebar.selectbox(
    "Select Memory Type",
    [
        "ConversationBufferMemory",
        "ConversationBufferWindowMemory",
        "ConversationSummaryMemory",
        "ConversationSummaryBufferMemory",
    ],
)

# Initialize session state to manage memory selection
if "memory" not in st.session_state or st.session_state.memory_type != memory_type:
    # Choose memory type based on user selection
    if memory_type == "ConversationBufferMemory":
        st.session_state.memory = ConversationBufferMemory(return_messages=True)
    elif memory_type == "ConversationBufferWindowMemory":
        st.session_state.memory = ConversationBufferWindowMemory(k=5, return_messages=True)
    elif memory_type == "ConversationSummaryMemory":
        st.session_state.memory = ConversationSummaryMemory(llm=model, return_messages=True)
    elif memory_type == "ConversationSummaryBufferMemory":
        st.session_state.memory = ConversationSummaryBufferMemory(llm=model, return_messages=True)
    # Store selected memory type in session state
    st.session_state.memory_type = memory_type

# Set up a conversation chain using the selected memory
conversation = ConversationChain(llm=model, verbose=True, memory=st.session_state.memory)

# Streamlit UI Title
st.title("Synapse 🧠")

# Button to display chat summary for summary-based memories
if memory_type in ["ConversationSummaryMemory"]:
    if st.sidebar.button("Show Chat Summary"):
        with st.sidebar:
            st.write("### Chat Summary")
            try:
                # Retrieve and display the running summary (plain text in `buffer`)
                summary = st.session_state.memory.buffer or "No summary available."
                st.write(summary)
            except Exception as e:
                st.error(f"Error loading summary: {e}")

# Initialize chat history if not already present
if "history" not in st.session_state:
    st.session_state.history = []

# Display past messages from chat history
for message in st.session_state.history:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])


# Create a word-by-word response for better user experience
def response_generator(result_text):
    for word in result_text.split(" "):  # split(" ") preserves line breaks
        yield word + " "
        time.sleep(0.05)


# Capture user input and handle the chat flow
if prompt := st.chat_input("Enter your text..."):
    # Display user input in chat
    st.chat_message("user").markdown(prompt)
    st.session_state.history.append({"role": "user", "content": prompt})

    # Generate the model's response; ConversationChain also saves this turn to memory
    result = conversation.predict(input=prompt)

    # Stream the assistant's response into the chat
    with st.chat_message("assistant"):
        response = st.write_stream(response_generator(result))

    # Update the display history (memory was already updated by predict())
    st.session_state.history.append({"role": "assistant", "content": response})

    # Debugging memory (uncomment for testing)
    # st.write(f"Conversation memory size: {len(st.session_state.memory.chat_memory.messages)}")
```

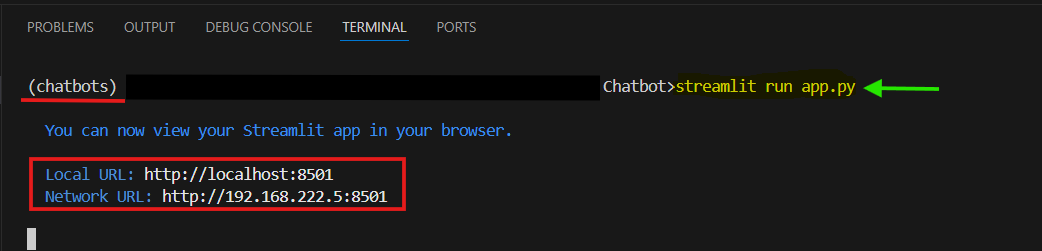

Step 5: Launch Your Chatbot 🚀

Congratulations! Your chatbot is ready to roll. 🎉 Let’s bring it to life:

- Run the Application:

Fire up your terminal, activate your virtual environment, and type this magical command:

streamlit run app.py

(Replace app.py with your file’s name if you chose something fancier! 🧐)

- Watch It in Action:

Your browser will pop open, and voilà—your chatbot is live, waiting to flex its conversational muscles. Test it out and bask in the glory of your creation!

- Demonstration

Problems with this Approach 🛑

Alright, imagine you're trying to build a super-powered chatbot using an old calculator—sounds like it could work, right? But as soon as things get complicated, it starts smoking, making strange noises, and possibly self-destructing. That’s what happens when you use the older LangChain memory classes. Let’s see why:

1. Limited Flexibility 🔒

- The older memory classes, like ConversationBufferMemory and ConversationSummaryMemory, were designed with simplicity in mind. However, they lack the flexibility needed for more complex workflows and customizations.

2. Scalability Issues 📈

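- Great for small chats, but they slow down as conversations grow. The buffer-based classes resend the stored history with every prompt, so token usage, cost, and latency climb with each turn, and a long enough chat can overflow the model’s context window. This is where External Memory shines in comparison.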
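A back-of-the-envelope calculation shows why full buffers get expensive. Assuming, for simplicity, that every turn adds a fixed number of tokens t to the buffer and that turn n resends roughly n turns’ worth, the total tokens sent over N turns is t·N(N+1)/2, which grows quadratically. The function names below are hypothetical.

```python
from typing import List


def prompt_tokens_per_turn(turns: int, tokens_per_turn: int) -> List[int]:
    # On turn n the whole buffer (about n turns' worth of tokens) is sent again
    return [n * tokens_per_turn for n in range(1, turns + 1)]


def total_tokens_sent(turns: int, tokens_per_turn: int) -> int:
    # Closed form t * N(N+1)/2: quadratic in the number of turns
    return tokens_per_turn * turns * (turns + 1) // 2


print(prompt_tokens_per_turn(5, 100))  # [100, 200, 300, 400, 500]
print(total_tokens_sent(100, 100))     # 505000
```

So a 100-turn chat at about 100 tokens per turn would push roughly half a million tokens through the model in total, even though the conversation itself is only about 10,000 tokens long.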

3. Lack of Persistence 💀

- Once the chat ends, so does the memory. These classes keep everything in the app’s process memory, so a new session or an app restart wipes it, making your chatbot feel like a goldfish every time you start a new conversation.
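The simplest workaround is to write the history somewhere durable. Below is a minimal sketch that saves the chat history (in the same `{"role", "content"}` format as `st.session_state.history`) to a JSON file and reloads it later. The helper names and file name are hypothetical, and this only restores the displayed history; the LangChain memory object would still need to be rebuilt from it, which is where External Memory becomes the cleaner option.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def save_history(history: List[Dict[str, str]], path: Path) -> None:
    """Write the chat history as UTF-8 JSON."""
    path.write_text(json.dumps(history, ensure_ascii=False, indent=2), encoding="utf-8")


def load_history(path: Path) -> List[Dict[str, str]]:
    """Read the history back; an empty list means nothing has been remembered yet."""
    if not path.exists():
        return []
    return json.loads(path.read_text(encoding="utf-8"))


history = [
    {"role": "user", "content": "My favorite movie is Inception."},
    {"role": "assistant", "content": "Great pick! 🎥"},
]
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "chat_history.json"  # hypothetical file name
    save_history(history, path)
    restored = load_history(path)  # e.g. in a later session
print(restored == history)  # True
```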

4. Limited Support for Complex Data 🧩

- They can't handle complex data like images or API calls. Want your bot to remember rich media or structured data? Good luck!

5. Lack of Real-Time Memory Updates ⚡

- In real-time applications, these classes can feel sluggish. The summary-based ones, for instance, make extra LLM calls to keep the summary up to date as the chat grows, which adds latency to each turn.

Using the older memory classes is like trying to charge your phone with a broken charger—frustrating, slow, and probably not going to work when you need it most. 📱⚡So, if you're ready to upgrade from “vintage” to “next-gen,” it's time to leave these old-school methods behind.

And That’s a Wrap! 🎬

We’ve officially taken ChatPal from “memoryless” to “mindful,” and it’s been quite the journey! From understanding the basics of memory in chatbots to implementing In-Prompt Memory with LangChain’s older classes (and seeing how External Memory works), you’ve now got the tools to make your chatbot not just speak, but remember. 🧠✨

While the older memory classes were fun to work with (back in the day), we’ve learned that the real magic happens when we upgrade to the newer LangChain classes. The future of chatbots isn’t about simply answering questions—it’s about building a chatbot that knows you, remembers your favorite pizza topping, and even brings it up the next time you chat. 🍕💬

As you move forward, keep in mind that chatbot memory is one of the most powerful features you can add. Whether you’re using In-Prompt Memory for quick tasks or External Memory for more advanced, scalable solutions, you’re equipped to take your chatbot to the next level.

Now go ahead, impress your users, and show them how smart your chatbot really is! 🚀

What’s Next? 🔮

In the next blog, we’ll tackle the next big step—upgrading your chatbot’s memory with LangChain’s shiny new tools. We’ll make sure your bot doesn’t just remember your name but also remembers that embarrassing thing you said three weeks ago (because who doesn’t love a chatbot that can roast you, right?). 😎

So stay tuned, because things are about to get even smarter—and a little more sassy! 🤖💥


Written by

DataGeek


Data Enthusiast with proficiency in Python, Deep Learning, and Statistics, eager to make a meaningful impact through contributions. Currently working as an R&D Intern at PTC. I’m a passionate, energetic, and geeky individual whose desire to learn is endless.