COPY command in Microsoft Fabric

Sachin Nandanwar

Sachin Nandanwar

Let's say you want to import data from an ADLS2 or any other external storage location to a Fabric Data Warehouse on the fly instead of the usual data pipeline approach. Something you would want to do through SQL that provides lightspeed data ingestion and provide the flexibility of making quick setting changes.

One option through which it can be achieved is by using the COPY command.

Yes you heard it right...the COPY command from Synapse Analytics can be used to move data into Microsoft Fabric warehouse but it works only for data warehouse and not for database or data lake in Fabric.

Lets see how it can be done.

SetUp

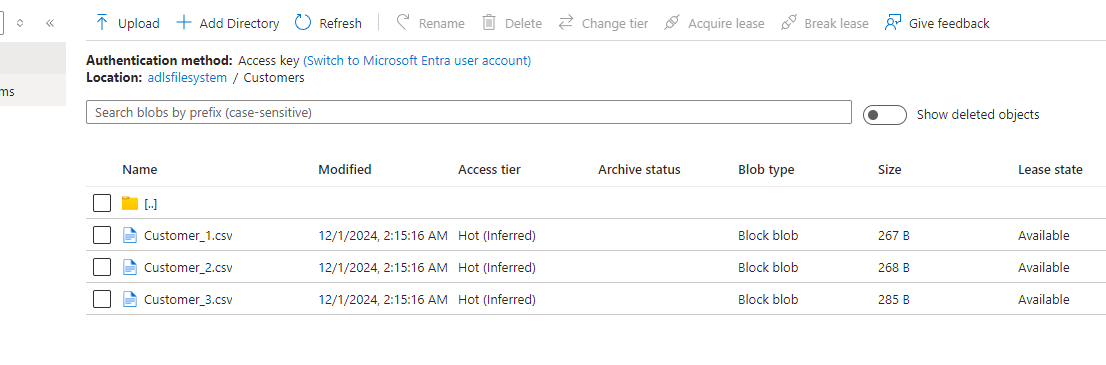

The given ADLS2 location is a secured location and has three csv files with each file having five records. In total fifteen records.

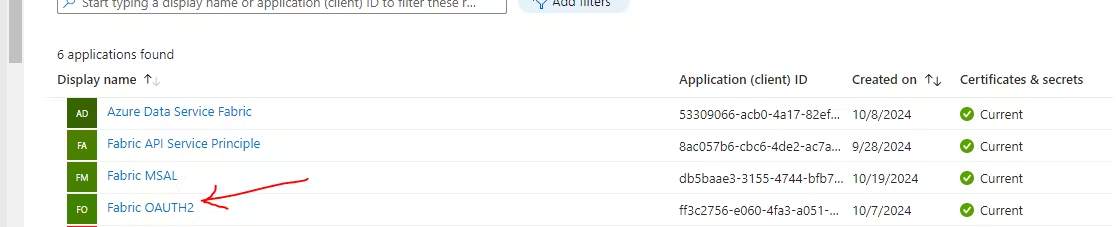

Select one of the existing service principal. We will use Fabric OAUTH2 service principal that exists in my Entra account.

We require ClientId, TenantId and Secret of the Service Principal.

Ensure that the Service Principal has atleast Storage Blob Data Contributor access to the ADLS2 location.

Create the destination table that matches the structure of the source data.

CREATE TABLE Customers

( [Customerid] [int] ,

[Name] [varchar](40) ,

[Email] [varchar](40) ,

[city] [varchar](40)

)

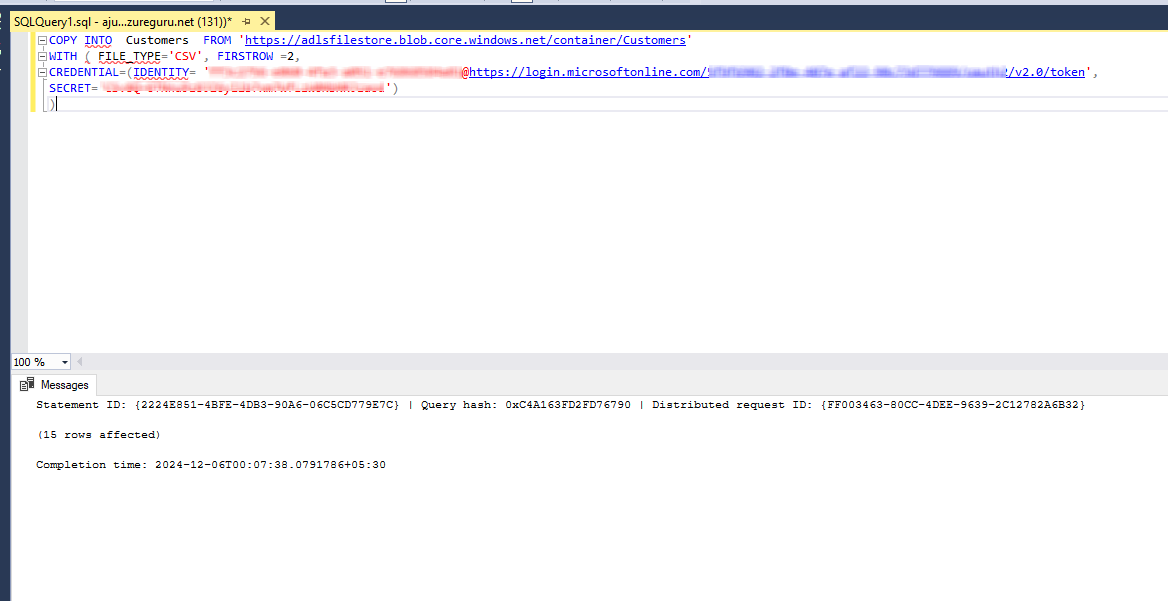

and then use the following COPY statement to move the data across.

COPY INTO Customers FROM 'https://storageaccount.blob.core.windows.net/container/Customers'

WITH ( FILE_TYPE='CSV', FIRSTROW =2,

CREDENTIAL=(IDENTITY= 'ClientId@https://login.microsoftonline.com/tenantId/oauth2/v2.0/token',

SECRET='secret')

Note that I have use https protocol with blob endpoints. You can use abfss protocols with dfs endpoint. Similar to use of COPY command on Synapse dedicated pool, there are no limitations wrt use of endpoints and protocols in Fabric for use of COPY command.

You can refer to this section of one of my article on Synapse dedicated pool for further details on COPY command in Synapse Analytics.

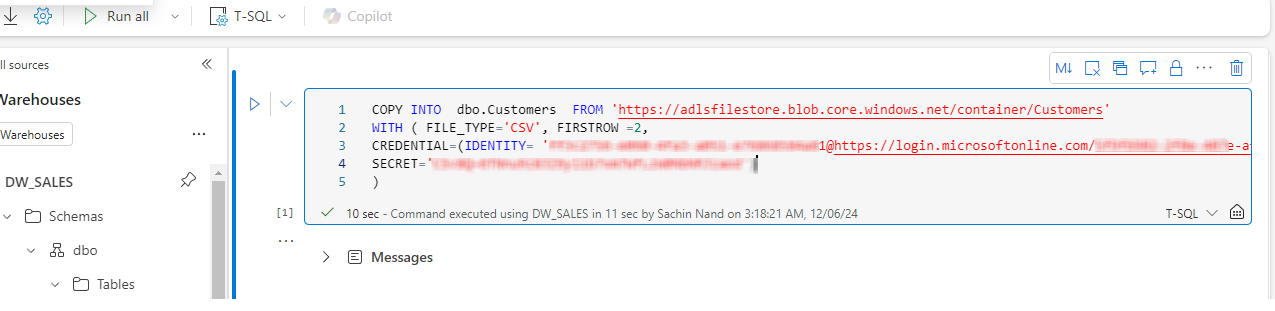

You can even run this command in TSQL Notebooks

Does COPY command work in Fabric Database ?

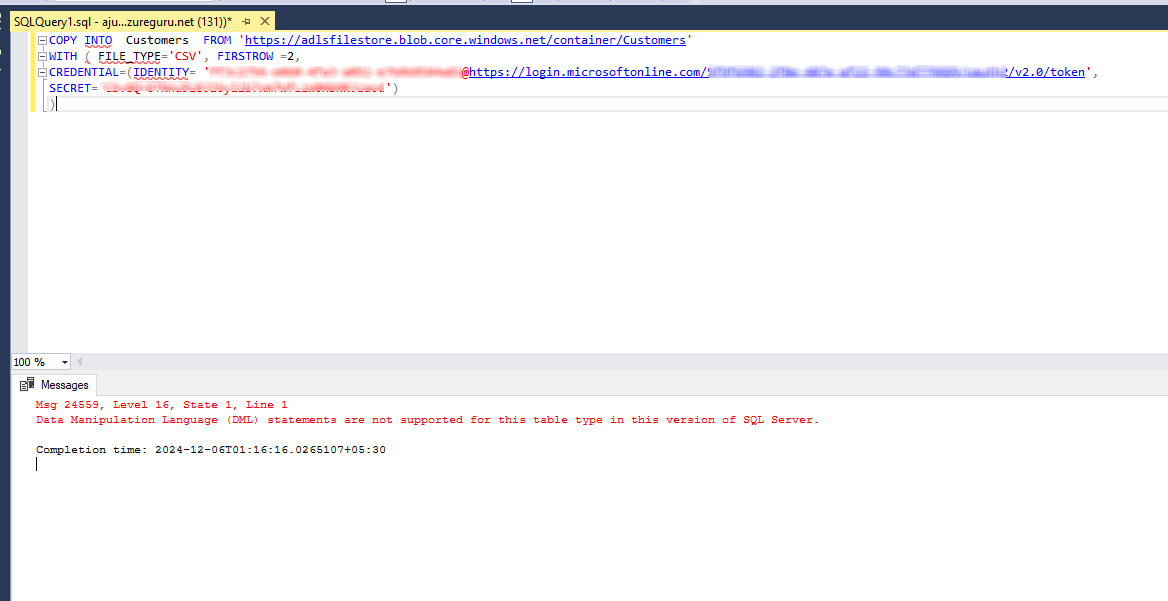

Unfortunately it doesn’t. When I execute the statement in SSMS it fails with this error message

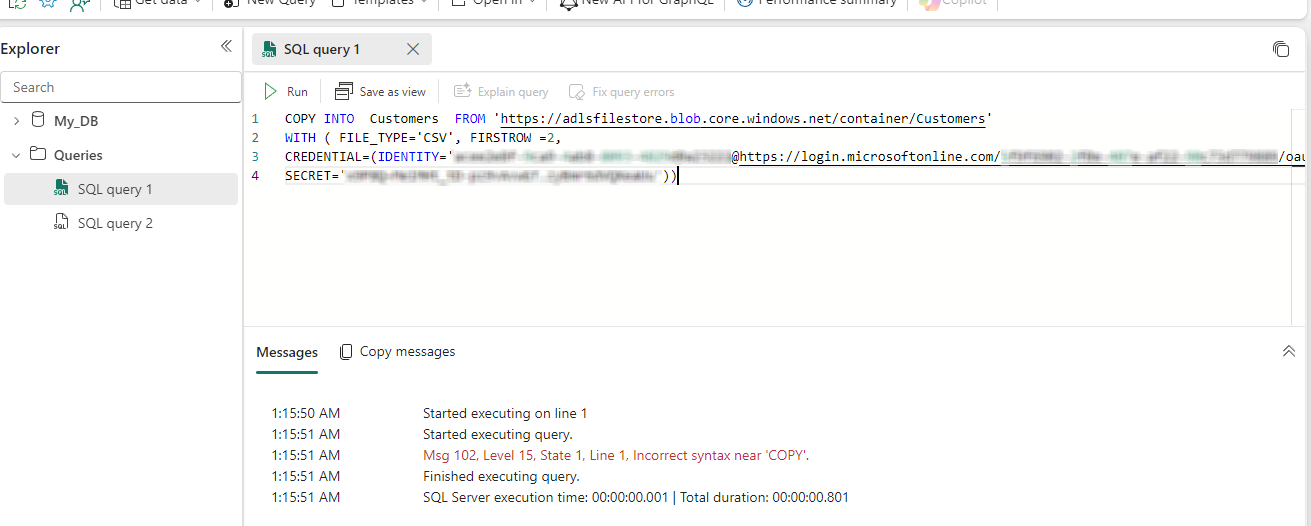

and running the same on Fabric UI fails with a different error message.

I came across this documentation of use of COPY command

https://learn.microsoft.com/en-us/fabric/data-warehouse/ingest-data-copy

But the article uses data that is publicly available and it does not mention how to use it with secured data sources.

Database scoped credentials and External data source

But then is it possible to use the other option database scoped credentials with external data source that works in Azure Synapse Analytics ?

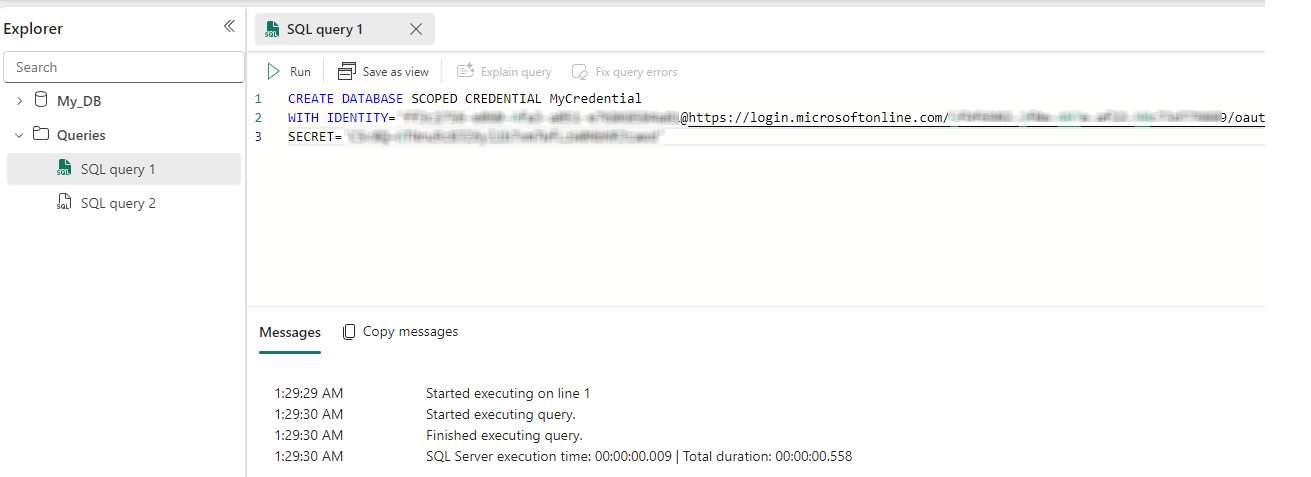

I tried creating them. Creation of Database Scoped Credentials and External Data Source do succeed.

Database Scoped Credentials

External Data Source

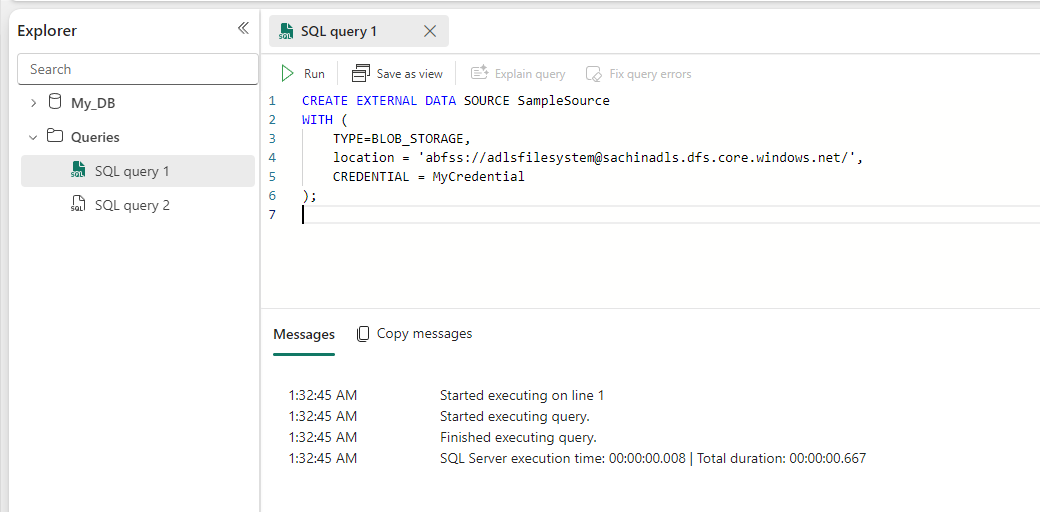

CREATE EXTERNAL DATA SOURCE SampleSource

WITH (

TYPE=BLOB_STORAGE,

location = 'abfss://adlsfilesystem@sachinadls.dfs.core.windows.net/',

CREDENTIAL = MyCredential

);

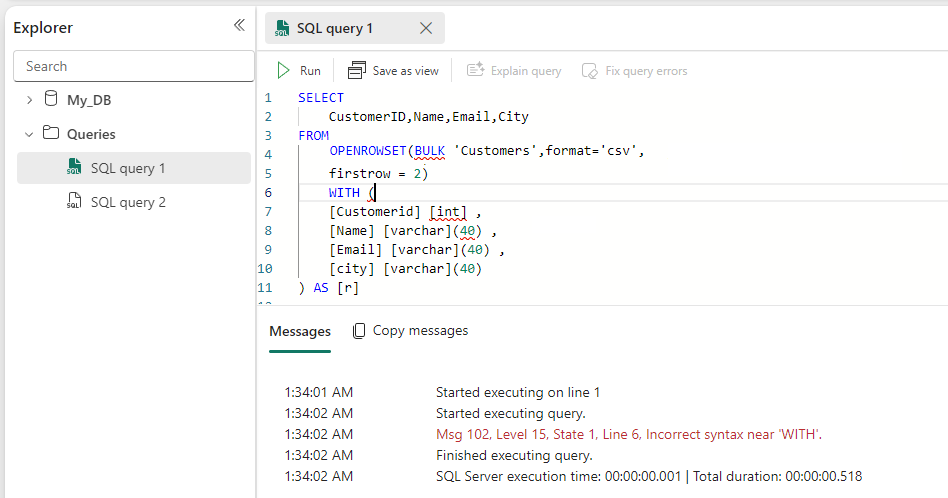

and when I use OPENROWSET to query the data that External Data Source points to, the query errors out

SELECT

CustomerID,Name,Email,City

FROM

OPENROWSET(BULK 'Customers',format='csv',

firstrow = 2)

WITH (

[Customerid] [int] ,

[Name] [varchar](40) ,

[Email] [varchar](40) ,

[city] [varchar](40)

) AS [r]

go

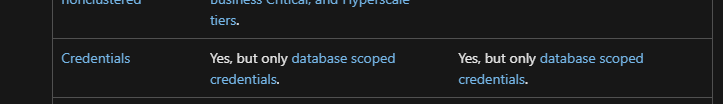

I checked the feature comparison between Azure SQL Database and Fabric SQL Database

and I found out that Database Scoped Credentials are indeed supported

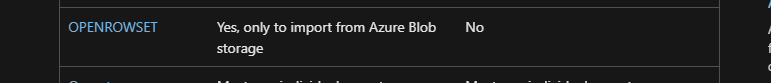

but OPENROWSET isn’t..similar to Synapse dedicated pool

and there is no mention if External Data Source is supported or not.

So I am quite unsure the purpose Database Scoped Credentials and External Data Source serve in Fabric Database.

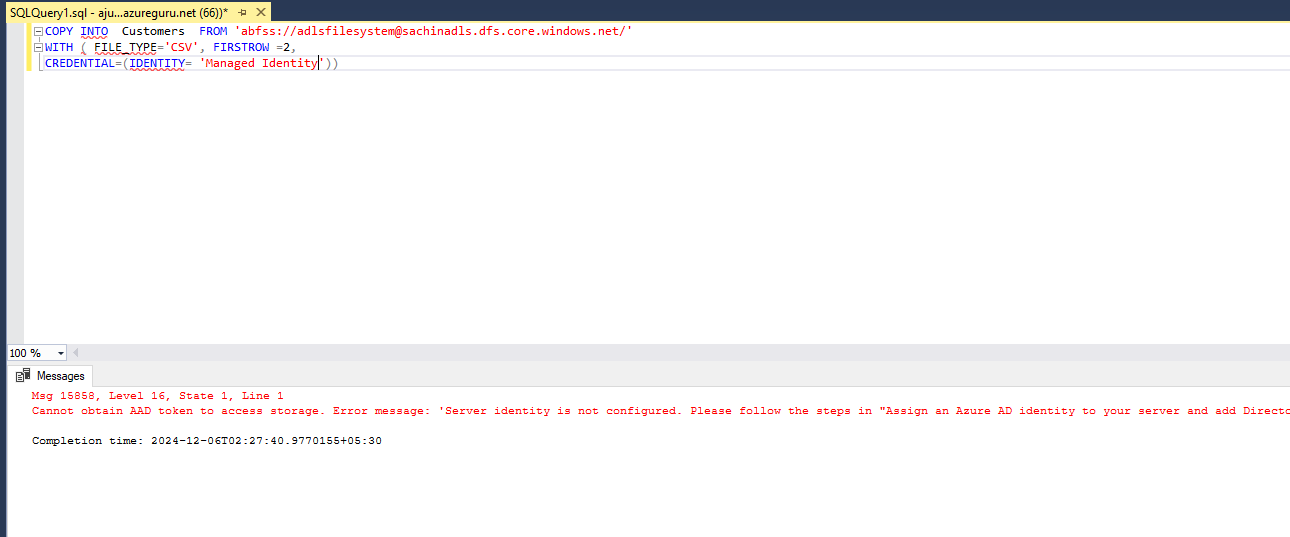

Just out of curiosity I tried using Managed Identity in the COPY command knowing that Managed Identity is now replaced with Workspace Identity in Fabric

COPY INTO Customers FROM 'abfss://adlsfilesystem@sachinadls.dfs.core.windows.net/'

WITH ( FILE_TYPE='CSV', FIRSTROW =2,

CREDENTIAL=(IDENTITY= 'Managed Identity')

and as expected it errored out with a never seen error message which was a first one for me.

Msg 15858, Level 16, State 1, Line 1 Cannot obtain AAD token to access storage. Error message: 'Server identity is not configured. Please follow the steps in "Assign an Azure AD identity to your server and add Directory Reader permission to your identity" (https://aka.ms/sqlaadsetup)'.

Risks with Use of COPY command

A major issue with the COPY command approach is that using it in your production environment is far from ideal. You definitely wouldn't want to hardcode the secret values in your code or share it with anyone in first place.

And since there is nothing equivalent to Azure Key Vault in Fabric there is no way to encrypt your secrets or securely store them.

The feature is on the roadmap to be released in Q1 2025.

https://learn.microsoft.com/en-us/fabric/release-plan/data-factory#data-source-identity-management-azure-key-vault

But then this would mean you have to store credentials on Azure Key vault and access them from Fabric and key vault wont be inherently a Fabric thing.Though it is possible to access Azure Key vault in a Fabric Notebook using the MSSPARKUTILS package.

As it's not possible to access key vaults from SQL. So if you don't want to risk exposing the secrets then it's prudent not to use COPY command in prod environment.

Conclusion

The COPY command is great command if you want to quickly move data across without going through the overhead of creating data pipeline and works well with all protocols and endpoints.

But given the limitations that comes with its use limited only to Data warehouse I would definitely like that it should be supported in Fabric Database as well.

Thanks for reading !!!

Subscribe to my newsletter

Read articles from Sachin Nandanwar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by