Upgrade Fabric Workspaces To The Latest GA Runtime

Sandeep Pawar

It’s always a good idea to use the latest GA runtime for the Spark pool in Fabric. Unless you change it manually, a workspace keeps using the runtime it was previously set to, even when a newer version is available. To identify the runtime each workspace is using and to upgrade multiple workspaces at once, use the code below, powered by Semantic Link.

Here I scrape the Microsoft docs to get the latest GA runtime and use the Fabric REST APIs to check and upgrade the runtimes.

import sempy.fabric as fabric
import requests
import pandas as pd

client = fabric.FabricRestClient()

def get_latest_ga_runtime(url=None):
    """
    Get the latest GA Fabric runtime
    ref : https://learn.microsoft.com/en-us/fabric/data-engineering/runtime
    """
    url = "https://learn.microsoft.com/en-us/fabric/data-engineering/lifecycle" if url is None else url
    # First table on the page lists the runtimes and their release stage
    df = pd.read_html(url)[0]
    versions = (df[df['Release stage'] == 'GA']['Runtime name']
                .str.extract(r'(\d+\.\d+)', expand=False)
                .dropna())
    # Compare numerically so that e.g. "1.10" ranks above "1.9"
    return max(versions, key=lambda v: tuple(int(p) for p in v.split('.')))

latest_rt = get_latest_ga_runtime()
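Picking the "latest" version depends on how the version strings are compared: as plain strings, "1.10" sorts before "1.9". The sketch below shows a numeric comparison on a few made-up runtime names. The helper names `parse_version` and `latest_version` and the sample values are hypothetical, for illustration only, and are not taken from the lifecycle page.

```python
import re

def parse_version(runtime_name):
    """Extract (major, minor) as integers from a string such as 'Runtime 1.3'."""
    match = re.search(r"(\d+)\.(\d+)", runtime_name)
    return (int(match.group(1)), int(match.group(2))) if match else None

def latest_version(runtime_names):
    """Return the highest 'major.minor' found, compared numerically."""
    parsed = [v for v in map(parse_version, runtime_names) if v is not None]
    if not parsed:
        raise ValueError("no version numbers found")
    major, minor = max(parsed)  # tuples compare element by element
    return f"{major}.{minor}"

# Hypothetical names, not the real list of Fabric runtimes
names = ["Runtime 1.9", "Runtime 1.10", "no version here"]
print(latest_version(names))   # numeric comparison -> 1.10
print(max(["1.9", "1.10"]))    # string comparison  -> 1.9
```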

def get_workspace_runtime(workspaceId=None):
    """
    Get current runtime of the default environment of a Fabric workspace
    """
    workspaceId = fabric.get_notebook_workspace_id() if workspaceId is None else workspaceId
    try:
        result = client.get(f"v1/workspaces/{workspaceId}/spark/settings")
        return result.json()['environment']['runtimeVersion']
    except (requests.RequestException, KeyError):
        return "na"

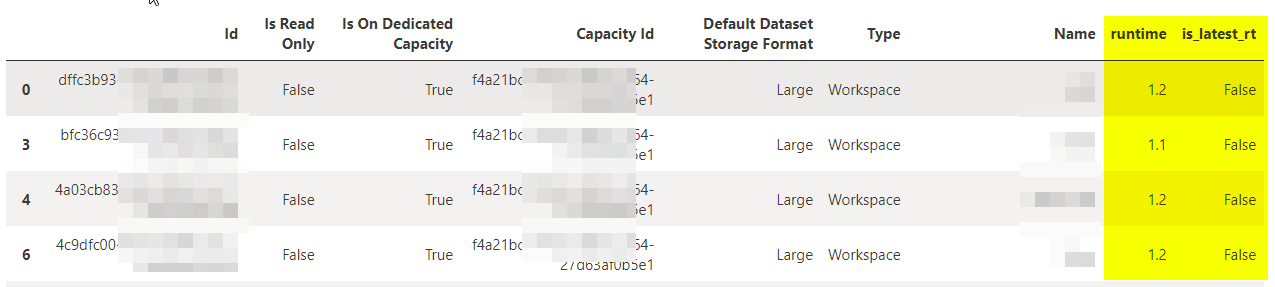

workspaces = (fabric.list_workspaces()
              .query('`Is On Dedicated Capacity`==True and `Name`!="Admin monitoring"')  # Only P or F
              .assign(runtime=lambda df: df['Id'].apply(get_workspace_runtime))
              .assign(is_latest_rt=lambda rt: rt['runtime'] == latest_rt))

workspaces

Outdated workspaces

You need to be a workspace admin to change the runtime version.

outdated_rt_ws = workspaces[~workspaces['is_latest_rt']]
display(outdated_rt_ws)

def upgrade_runtime(runtime: str, workspaceId=None):
    workspaceId = fabric.get_notebook_workspace_id() if workspaceId is None else workspaceId
    payload = {"environment": {"runtimeVersion": runtime}}
    return client.patch(f"/v1/workspaces/{workspaceId}/spark/settings", json=payload)

## Either iterate over the above outdated_rt_ws dataframe or upgrade the runtime for one specific workspace
## If workspaceId=None, the workspace of the notebook is used
upgrade_runtime(runtime=latest_rt, workspaceId=None)
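To upgrade several workspaces in one go, the call can be wrapped in a loop that records the outcome for each workspace, so one failure (for example, a workspace where you are not an admin) does not stop the rest. This is a minimal sketch: `upgrade_workspaces` is a hypothetical helper, and the fake response and stub function exist only so the example runs outside Fabric.

```python
def upgrade_workspaces(workspaces, runtime, upgrade_fn):
    """
    Call upgrade_fn for each workspace and collect the outcome.

    workspaces : iterable of (workspace_id, workspace_name) pairs
    upgrade_fn : callable accepting runtime=... and workspaceId=... and
                 returning an object with a status_code attribute
    """
    results = []
    for ws_id, ws_name in workspaces:
        try:
            response = upgrade_fn(runtime=runtime, workspaceId=ws_id)
            status = response.status_code
        except Exception as exc:  # keep going if one workspace fails
            status = f"failed: {exc}"
        results.append({"Id": ws_id, "Name": ws_name, "status": status})
    return results

# Stand-ins so the sketch runs without Fabric (made-up IDs and names)
class _FakeResponse:
    status_code = 200

def _fake_upgrade(runtime, workspaceId):
    if workspaceId == "ws-2":
        raise PermissionError("not a workspace admin")
    return _FakeResponse()

demo = upgrade_workspaces([("ws-1", "Sales"), ("ws-2", "Finance")], "1.3", _fake_upgrade)
for row in demo:
    print(row)
```

In the notebook, the same helper could be called as `upgrade_workspaces(zip(outdated_rt_ws['Id'], outdated_rt_ws['Name']), latest_rt, upgrade_runtime)`, assuming the PATCH call returns a standard response object with a `status_code`.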

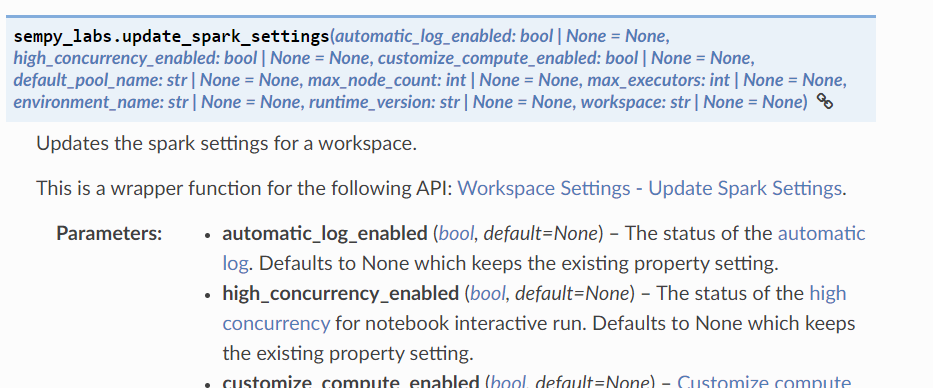

Update:

You can also use Semantic Link Labs to get and update the Spark settings. Thanks to Michael Kovalsky for the tip.
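A rough sketch of what that could look like is below. It assumes the `semantic-link-labs` package is installed (`%pip install semantic-link-labs`) and that its `get_spark_settings` and `update_spark_settings` functions accept the `workspace` and `runtime_version` arguments used here. Check the library's documentation for the exact signatures in your version. The wrapper name `update_runtime_with_labs` is hypothetical.

```python
def update_runtime_with_labs(runtime_version, workspace=None):
    """
    Read the current Spark settings, then set the runtime version.
    workspace=None is assumed to mean the workspace of the notebook.
    """
    # Imported inside the function so the sketch loads even when
    # semantic-link-labs is not installed
    import sempy_labs as labs

    before = labs.get_spark_settings(workspace=workspace)
    labs.update_spark_settings(runtime_version=runtime_version, workspace=workspace)
    return before  # settings as they were before the update

# Example call inside a Fabric notebook (not executed here):
# previous = update_runtime_with_labs(latest_rt)
```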

Needless to say, before changing the runtime version, make sure your Spark jobs can run on the target runtime.

