Use GraphQL API in Microsoft Fabric to query Cosmos DB

Sachin Nandanwar


At the beginning of December 2024, Microsoft released “Microsoft Fabric API for GraphQL™ for Azure Cosmos DB Mirroring.”

With this feature, you can mirror (replicate) your transactional Cosmos DB data to OneLake in near real time and then use a GraphQL API to query that same data in OneLake.

I have been a big fan of Cosmos DB. For me personally, it's one of the best NoSQL databases out there. Unfortunately, it's been some time since I last had the opportunity to work with it.

I remember that a few years ago, when I was working on a Cosmos DB project, Azure had no native support for exposing Cosmos DB data through GraphQL. I believe it was added only last year, through Data API builder:

https://devblogs.microsoft.com/cosmosdb/announcing-data-api-builder-for-azure-cosmos-db/

At that time, I had to write custom classes and use third-party GraphQL libraries like HotChocolate to expose the Cosmos DB data through GraphQL endpoints.

I have written an article along similar lines on how to create custom GraphQL objects from the underlying data.

You can check it out here: https://www.azureguru.net/graphql-with-azure-functions

I penned a few articles on Cosmos DB some time ago. Kindly go through them to learn more about Cosmos DB.

Getting started with Cosmos DB:

https://www.azureguru.net/getting-started-with-cosmos-db-on-azure

Data Operations In Cosmos DB - Part 1:

https://www.azureguru.net/crud-operations-in-cosmos-db-part1

Data Operations In Cosmos DB - Part 2:

https://www.azureguru.net/data-operations-in-cosmos-db-part-2

I have also posted a couple of articles on LLM implementations in Cosmos DB. You can check them out here and here.

I would also recommend going through my article on GraphQL in Microsoft Fabric: https://www.azureguru.net/graphql-in-microsoft-fabric

A video walkthrough of the approach used in this article is available here.

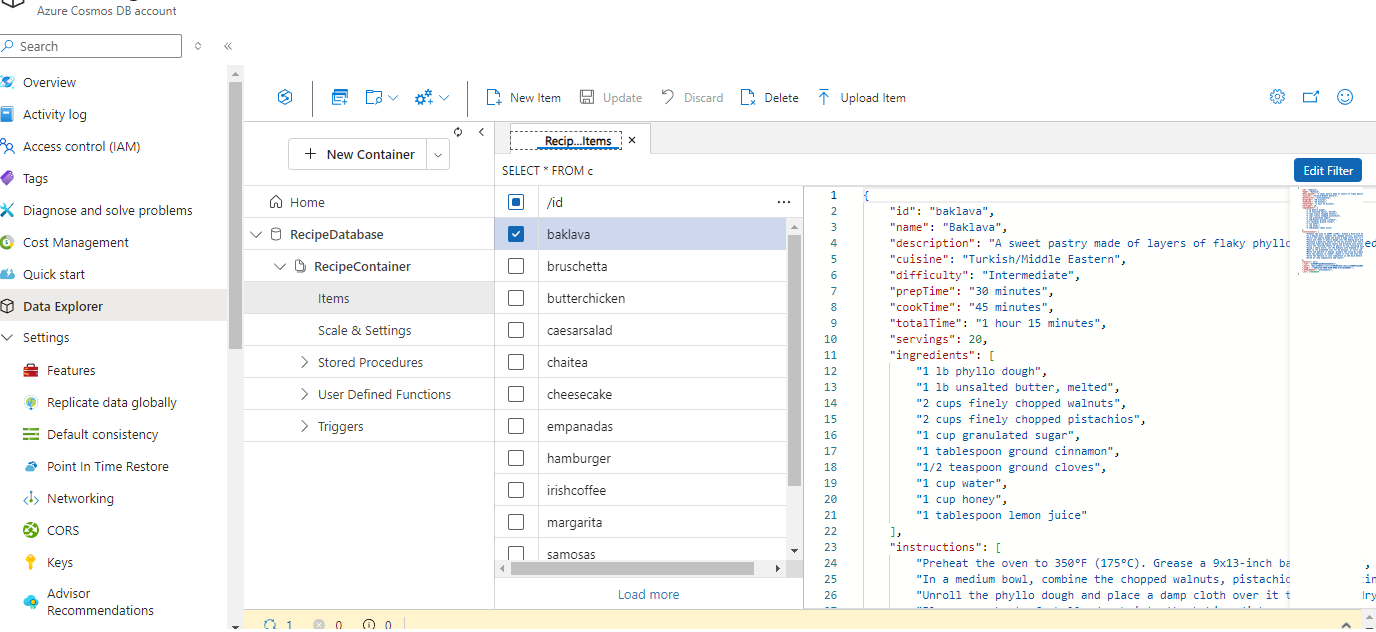

Cosmos DB Setup

To get started, I need to upload some data into Cosmos DB. I will use the same data that I used in one of my earlier articles on Cosmos DB:

https://www.azureguru.net/data-operations-in-cosmos-db-part-2#heading-the-setup

There are thirteen JSON files that I will upload to a Cosmos DB container. You can find the files here.

Below is the code that uploads all the files to the Cosmos DB container.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Threading.Tasks;
using Microsoft.Azure.Cosmos;
using Newtonsoft.Json;
using PartitionKey = Microsoft.Azure.Cosmos.PartitionKey;

namespace CosmosBulkOperations
{
    internal class BulkOperations
    {
        static CosmosClient cosmosclient;
        static Container container;

        public static async Task Main(string[] args)
        {
            // Replace the placeholders with your account URI and key.
            Set_Env_Variables("COSMOS_ENDPOINT", "Your Cosmos DB URI");
            Set_Env_Variables("COSMOS_KEY", "Your Cosmos DB Keys");

            cosmosclient = new(
                accountEndpoint: Environment.GetEnvironmentVariable("COSMOS_ENDPOINT"),
                authKeyOrResourceToken: Environment.GetEnvironmentVariable("COSMOS_KEY")
            );

            // Create the database and the container (partitioned on /id) if they don't exist.
            Database database = await cosmosclient.CreateDatabaseIfNotExistsAsync(id: "Recipe");
            container = await database.CreateContainerIfNotExistsAsync(id: "RecipeContainer", partitionKeyPath: "/id");

            // Upload the JSON files (this call was missing, so nothing was uploaded).
            await AddDocuments();
        }

        public static void Set_Env_Variables(string name, string value)
        {
            Environment.SetEnvironmentVariable(name, value, EnvironmentVariableTarget.Process);
        }

        // Reads every JSON file in the folder and derives the document id
        // (also the partition key) from the recipe name: lower-cased, spaces removed.
        public static List<Recipe> ParseDocuments(string Folderpath)
        {
            List<Recipe> ret = new List<Recipe>();

            foreach (string f in Directory.GetFiles(Folderpath, "*.json"))
            {
                string jsonString = File.ReadAllText(f);
                Recipe recipe = JsonConvert.DeserializeObject<Recipe>(jsonString);
                recipe.id = recipe.name.ToLower().Replace(" ", "");
                ret.Add(recipe);
            }

            return ret;
        }

        public static async Task AddDocuments()
        {
            List<Recipe> recipes = ParseDocuments("Your source files Path");
            int cnt = 0;

            foreach (Recipe recipe in recipes)
            {
                // Each item lives in its own logical partition (/id), so each
                // gets its own single-operation transactional batch.
                PartitionKey partitionKey = new(recipe.id);
                TransactionalBatch batch = container.CreateTransactionalBatch(partitionKey)
                    .CreateItem<Recipe>(recipe);

                using TransactionalBatchResponse response = await batch.ExecuteAsync();

                // ExecuteAsync reports a failed batch through its status instead of
                // throwing, so only count the batches that succeeded.
                if (response.IsSuccessStatusCode)
                    cnt += response.Count;
                else
                    Console.WriteLine($"Failed to upload '{recipe.id}': {response.StatusCode}");
            }

            Console.WriteLine("No of documents uploaded : " + cnt);
        }
    }

    public class Recipe
    {
        public string id { get; set; }
        public string name { get; set; }
        public string description { get; set; }
        public string cuisine { get; set; }
        public string difficulty { get; set; }
        public string prepTime { get; set; }
        public string cookTime { get; set; }
        public string totalTime { get; set; }
        public int servings { get; set; }
        public List<string> ingredients { get; set; }
        public List<string> instructions { get; set; }
    }
}
```

The code creates a database named Recipe and a container named RecipeContainer for you if they don't already exist.

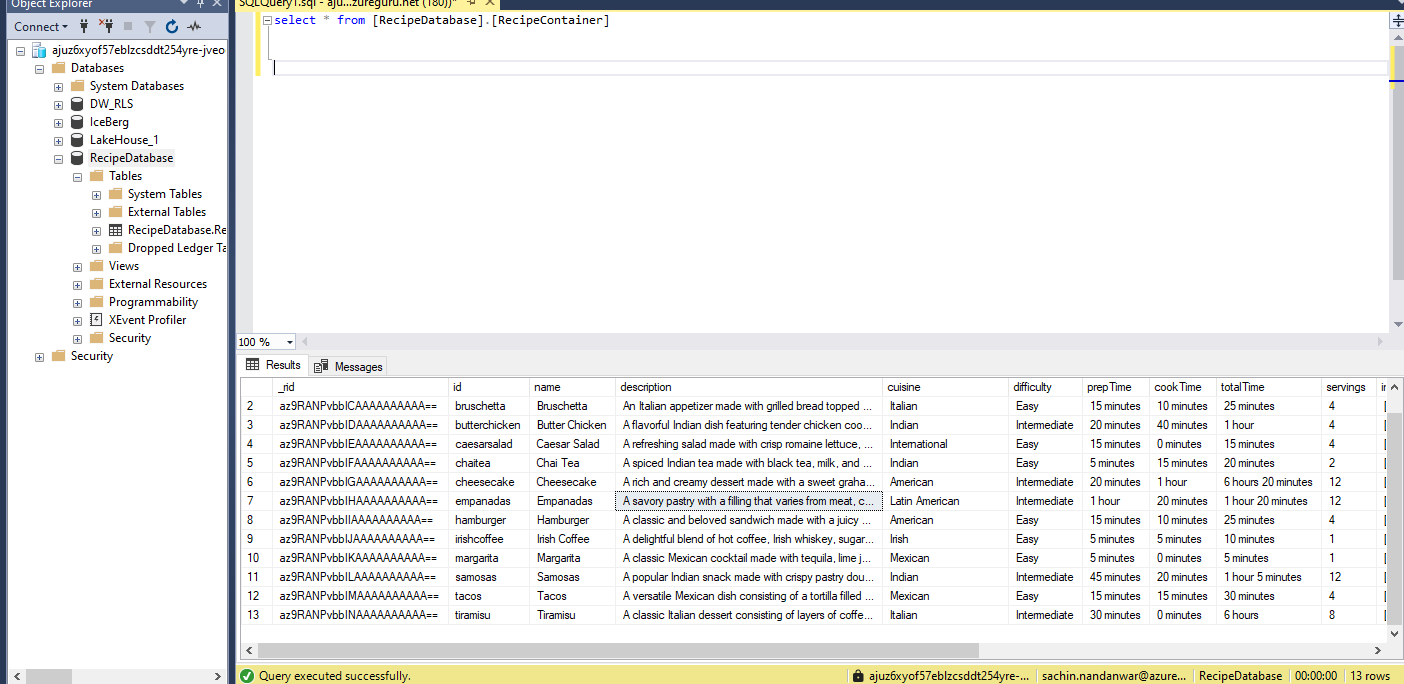

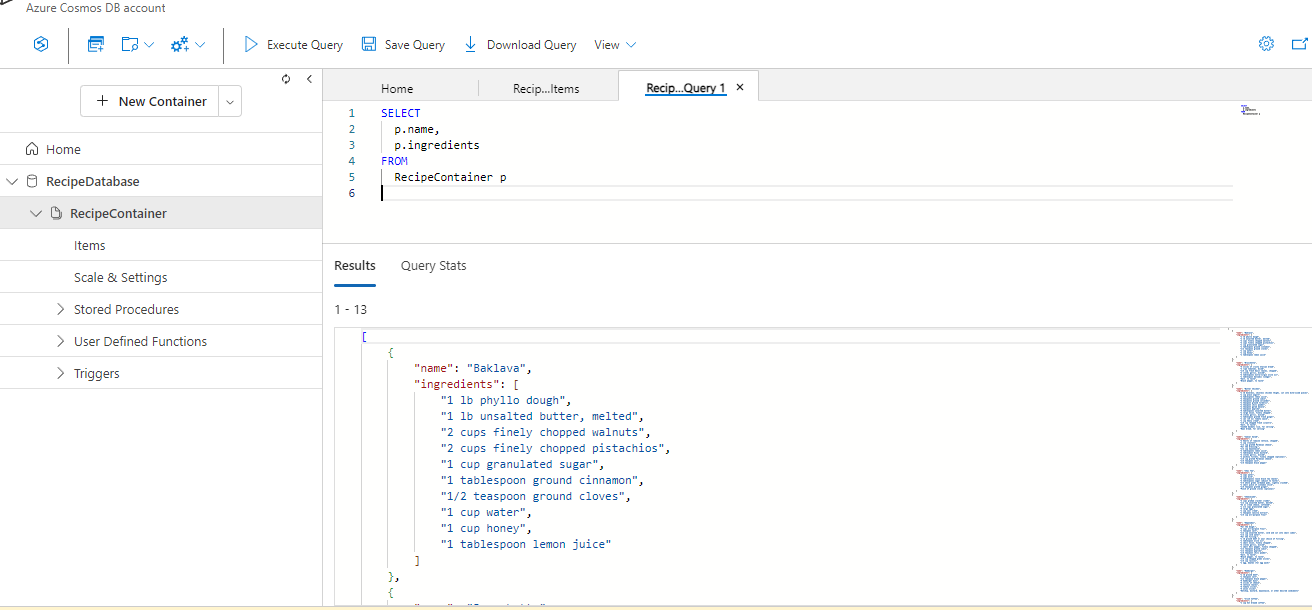

Once done, double-check the data in the container.

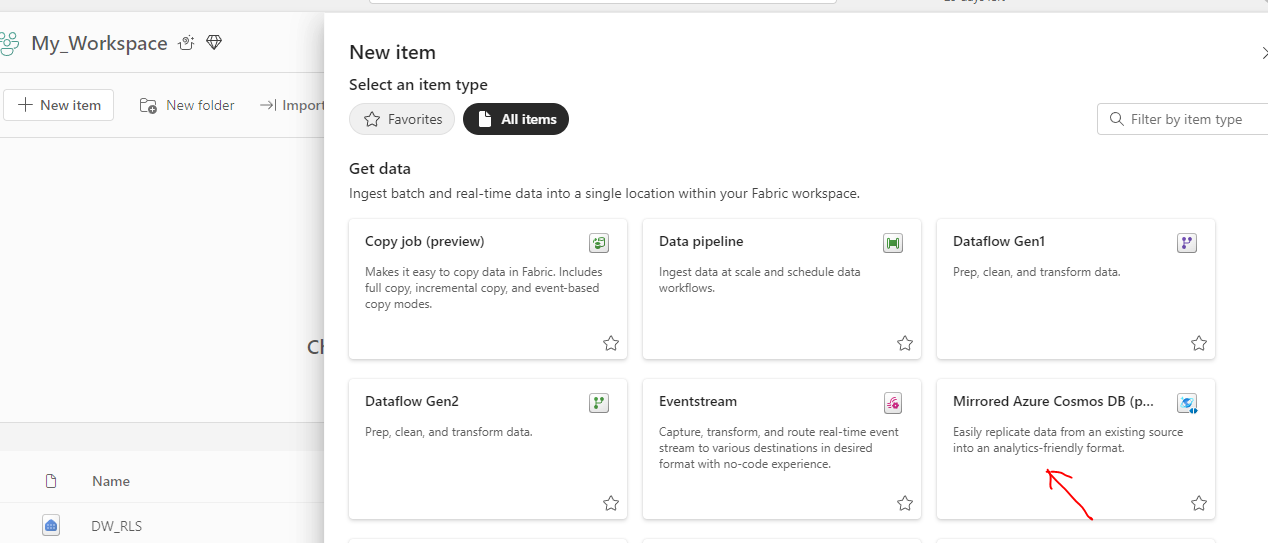
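One thing worth checking before uploading: the container is partitioned on `/id`, and `ParseDocuments` derives each `id` from the recipe name by lower-casing it and removing spaces. Two files whose names differ only in case or spacing would therefore map to the same id, and Cosmos DB rejects the second create as a conflict (HTTP 409); re-running the uploader against a populated container hits the same conflicts. The sketch below is a hypothetical pre-upload check (`RecipeIdCheck` is not part of the original code) that applies the same id rule and reports any collisions.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not part of the original uploader.
public static class RecipeIdCheck
{
    // Same rule as ParseDocuments: lower-case the name and remove spaces.
    public static string ToId(string name) => name.ToLower().Replace(" ", "");

    // Returns each derived id that more than one recipe name maps to,
    // together with the names that produce it. Empty means no collisions.
    public static Dictionary<string, List<string>> FindCollisions(IEnumerable<string> names)
    {
        return names
            .GroupBy(n => ToId(n))
            .Where(g => g.Count() > 1)
            .ToDictionary(g => g.Key, g => g.ToList());
    }
}
```

For example, `RecipeIdCheck.FindCollisions(ParseDocuments("Your source files Path").Select(r => r.name))` run before `AddDocuments` would list any recipes that cannot coexist in the container under the current id rule.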

Mirroring Setup between Cosmos DB and OneLake

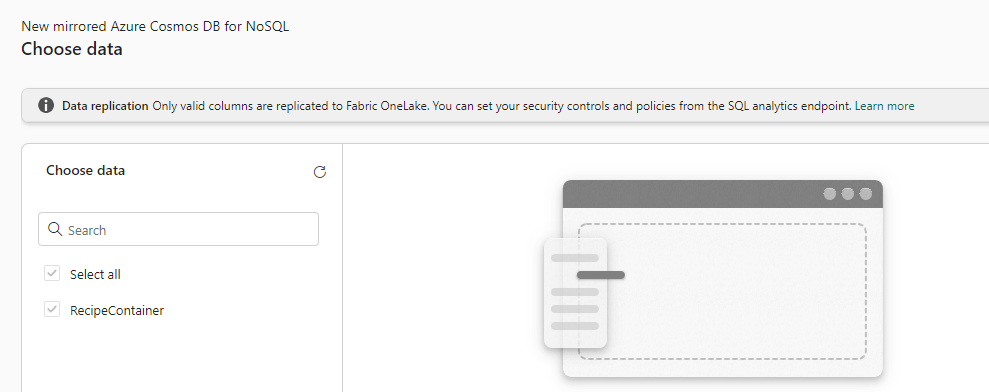

To set up mirroring between Cosmos DB and OneLake, go to the target workspace and select Mirrored Azure Cosmos DB (preview).

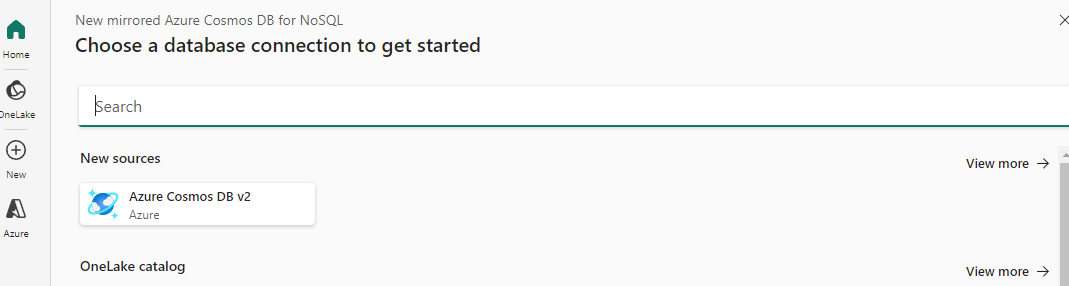

Select Azure Cosmos DB v2 as the source.

Enter the required details.

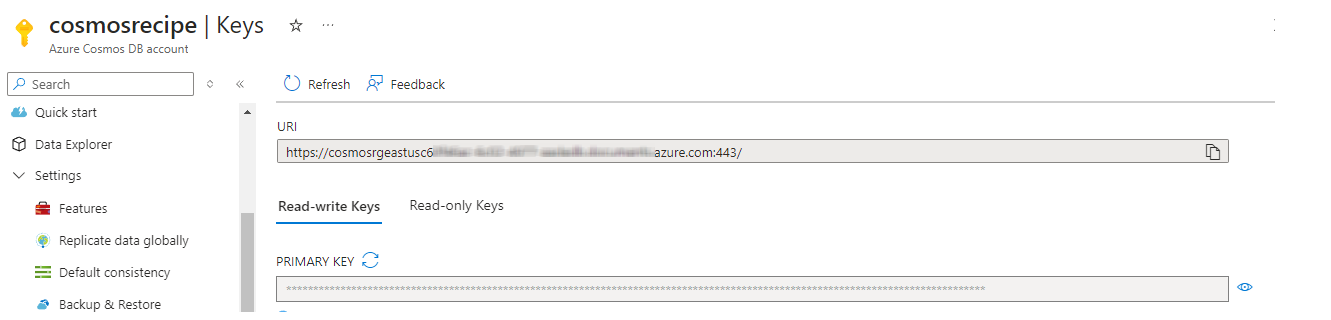

The Cosmos DB endpoint and the Account key can be grabbed from the Cosmos DB settings.

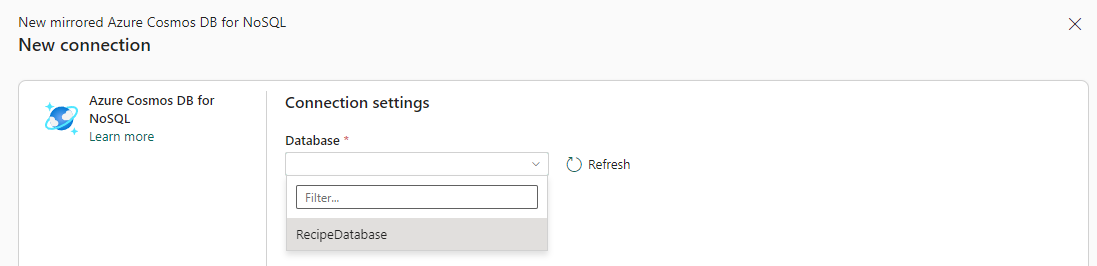

Next, select the Cosmos DB database from the source that needs to be replicated to OneLake.

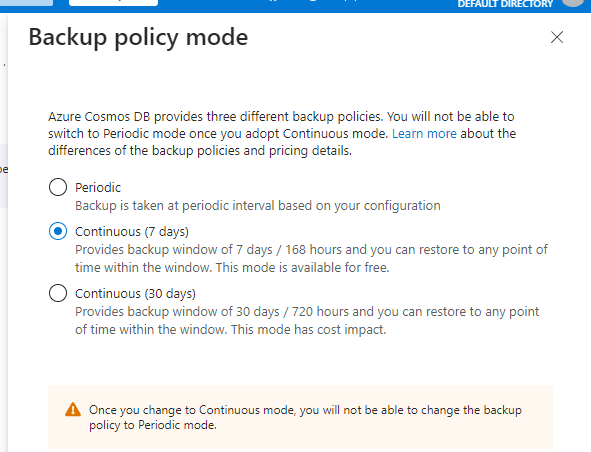

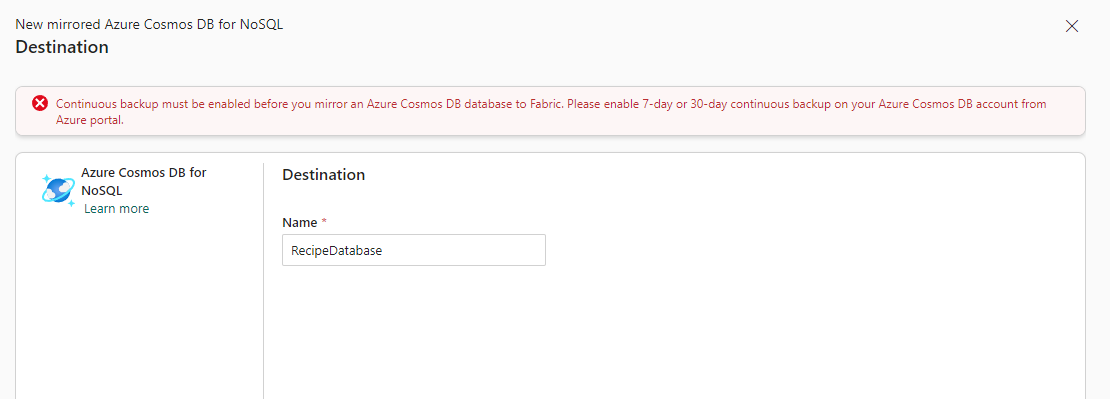

Note: Please ensure that you have set up continuous backup for your Cosmos DB account; otherwise, the mirroring setup will fail.

Once the backup setting is fixed, select the source database.

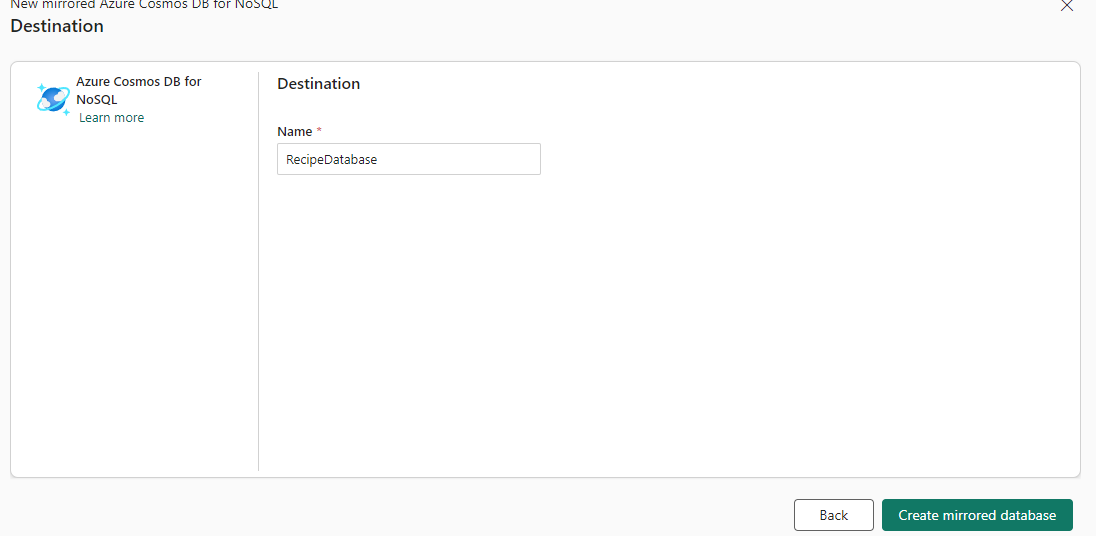

Then, name the mirrored database under Destination.

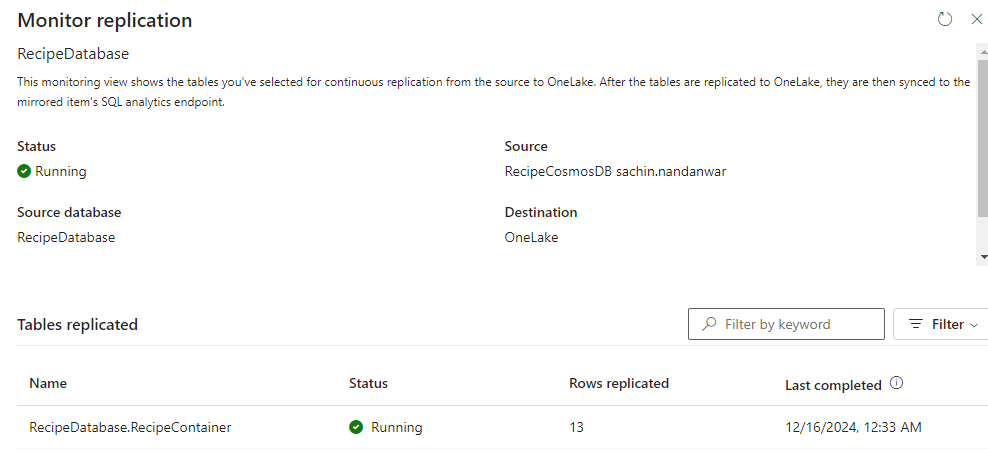

Once done, you can monitor the replication on the Monitor replication page.

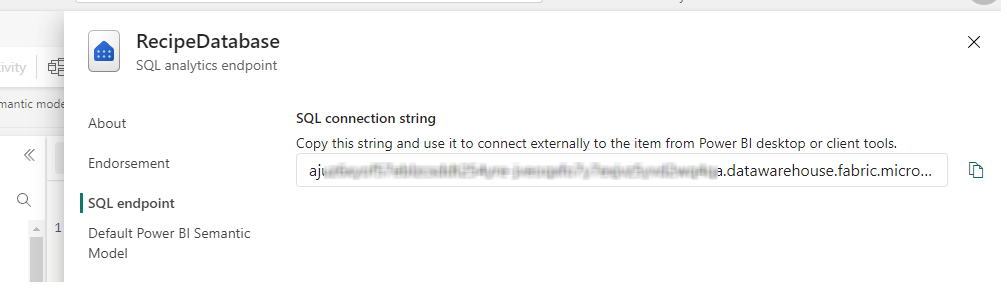

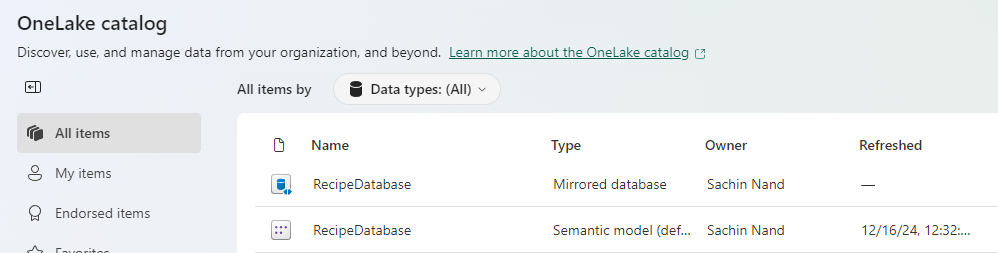

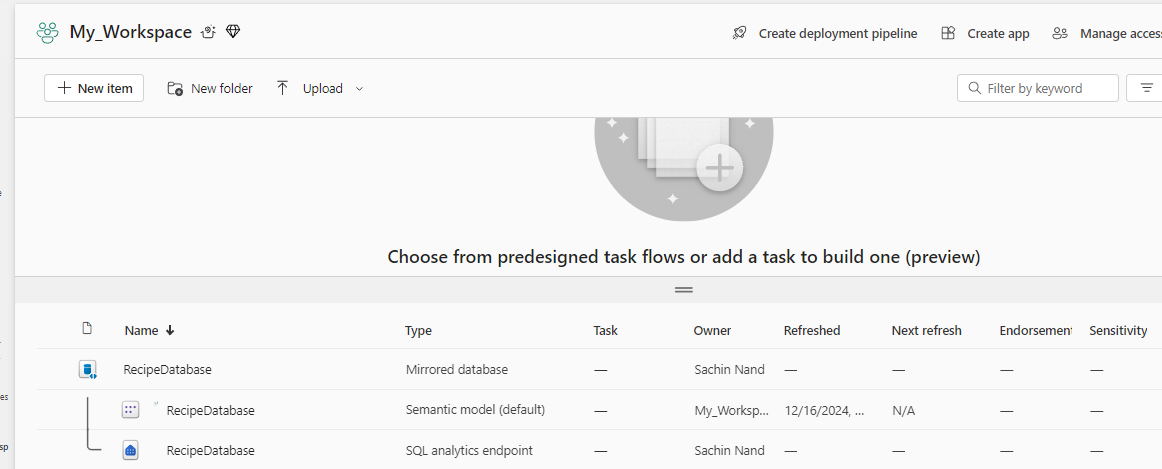

The mirrored database is exposed through a SQL analytics endpoint, through a semantic model in the OneLake catalog, and as an item in the workspace in which it was created.

SQL analytics endpoint:

OneLake catalog:

Workspace items:

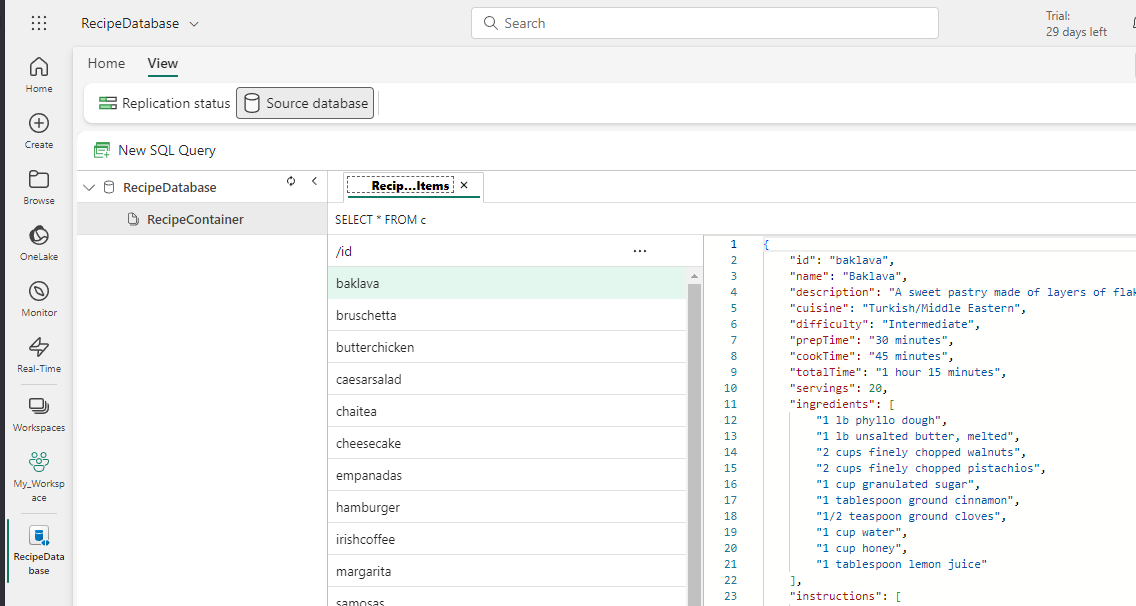

Through the mirrored database, you can now review the source data in the Fabric UI; otherwise, you would have to do it in the Azure portal. So there's no need to juggle between the Azure portal and the Fabric portal. Isn't that fantastic?

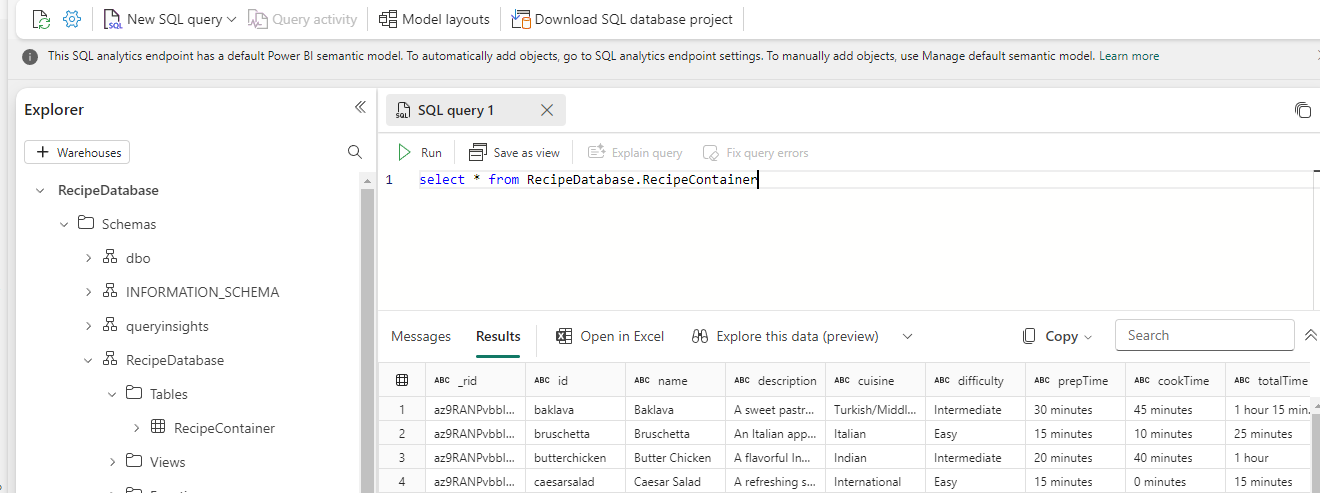

It's also possible to query the replicated data through the SQL analytics endpoint using T-SQL rather than the Cosmos DB flavor of SQL, which is syntactically a bit different from T-SQL.

Reference : https://learn.microsoft.com/en-us/azure/cosmos-db/nosql/query/

Another cool feature: because the mirrored database is accessible through the SQL analytics endpoint, you can use SSMS to query the replicated Cosmos DB data, and the output is displayed in tabular format, unlike the JSON output of a Cosmos DB query.

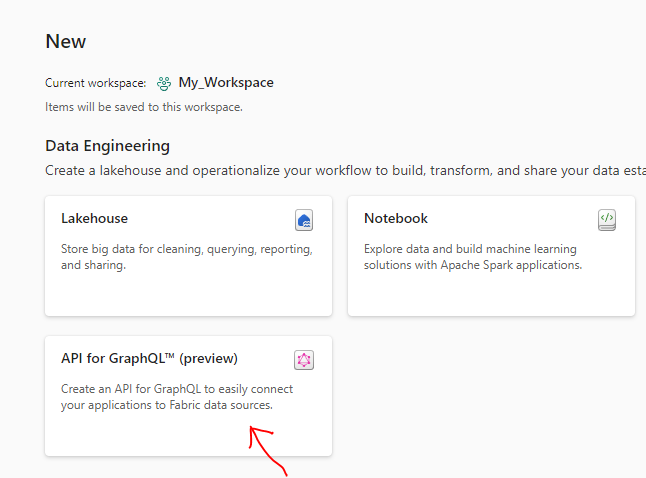

GraphQL Setup for Cosmos DB replicated data

Create a new GraphQL API item.

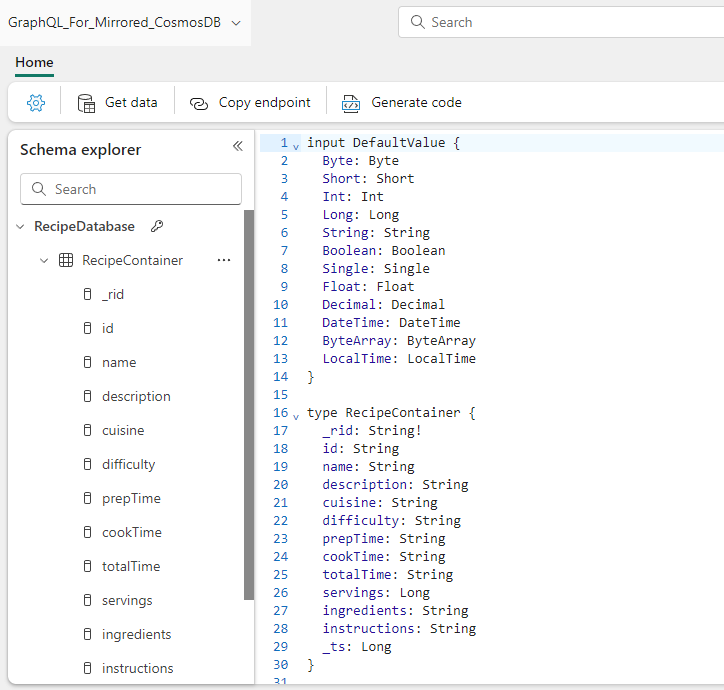

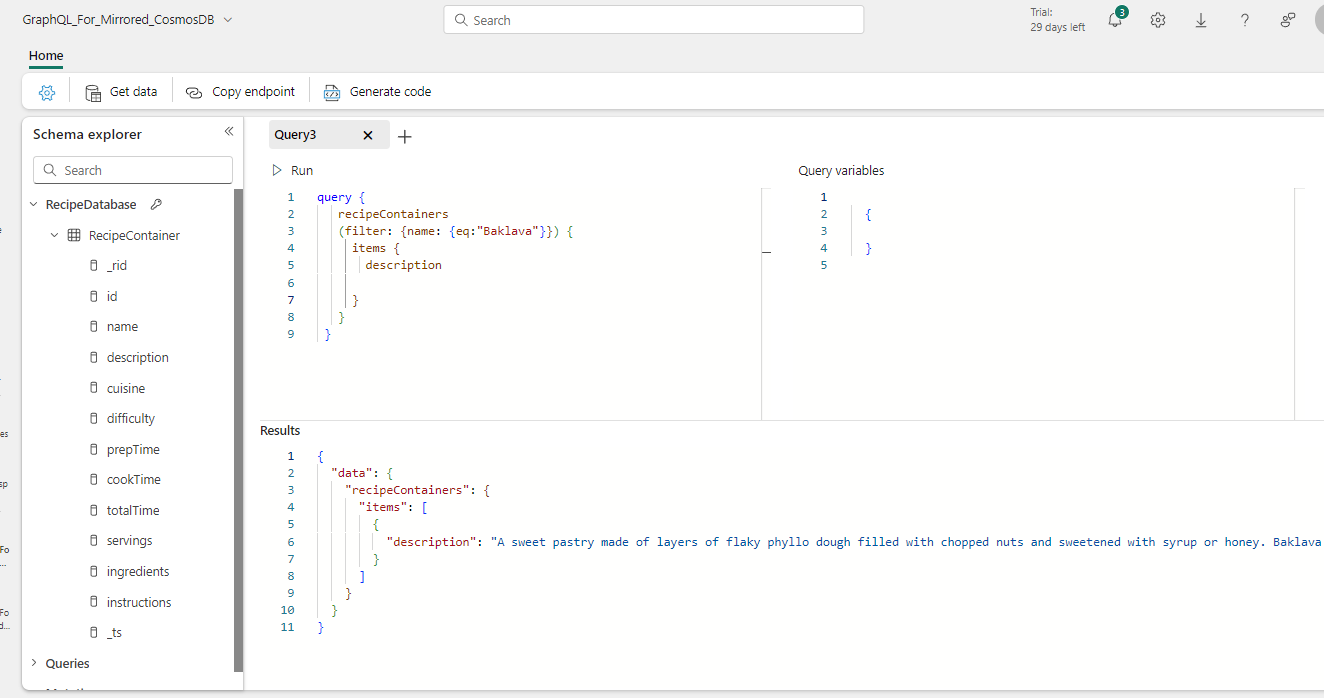

Set the data source of the GraphQL API to the mirrored Cosmos DB database. Once done, the attributes of the source container should be visible on the GraphQL page.

Here is a sample GraphQL query that retrieves data from the mirrored Cosmos DB database:

```graphql
query {
  recipeContainers (filter: { name: { eq: "Baklava" } })
  {
    items {
      id,
      name,
      description
    }
  }
}
```

Query in the Fabric GraphQL UI.
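The sample query hard-codes the recipe name. To reuse it from code with other names, the string literal must be quoted and escaped correctly. A JSON string literal is also a valid GraphQL string literal (both use double quotes and the same backslash escapes), so `System.Text.Json` can do the escaping. This is a minimal sketch assuming the field names from the sample query (`recipeContainers`, `name`, `id`, `description`); `RecipeQueries.ByName` is a hypothetical helper. GraphQL variables are the more common way to parameterize a query, but they need the filter input type name from your API's generated schema.

```csharp
using System.Text.Json;

// Hypothetical helper: builds the article's recipeContainers query for any name.
public static class RecipeQueries
{
    public static string ByName(string recipeName)
    {
        // Serializing a string yields a quoted, escaped JSON string literal,
        // which is also a valid GraphQL string literal.
        string literal = JsonSerializer.Serialize(recipeName);

        // {{ and }} are literal braces inside the interpolated string.
        return "query {\n" +
               $"  recipeContainers (filter: {{ name: {{ eq: {literal} }} }})\n" +
               "  {\n" +
               "    items {\n" +
               "      id,\n" +
               "      name,\n" +
               "      description\n" +
               "    }\n" +
               "  }\n" +
               "}";
    }
}
```

`RecipeQueries.ByName("Baklava")` produces the same query as above, and it can be assigned to `GraphQLHttpRequest.Query` in the console application.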

I will now use the GraphQL endpoint in a C# console application and use the same query to retrieve data from the mirrored data source.

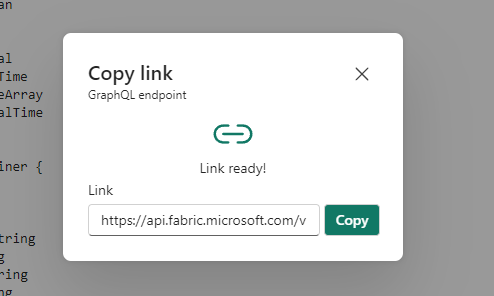

First, copy the GraphQL endpoint.

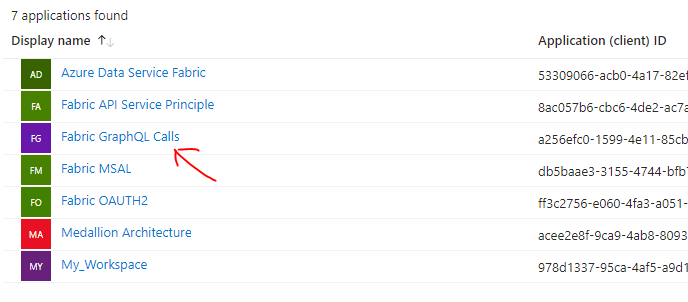

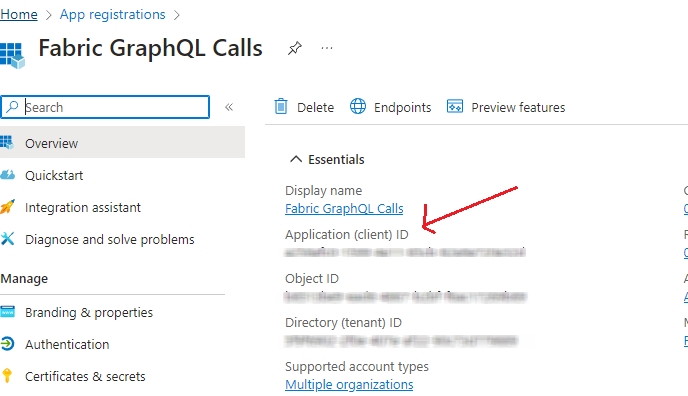

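I used an app registration (service principal) in my Microsoft Entra tenant to make the GraphQL calls. Because the code signs the user in interactively, only the app's Client ID is required.

With the endpoint and the Client ID in hand, use the following C# code to make the GraphQL API calls.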

```csharp
// Install the following NuGet packages:
// GraphQL.Client
// GraphQL.Client.Serializer.Newtonsoft
// Microsoft.Identity.Client

using System;
using System.Net.Http.Headers;
using System.Threading.Tasks;
using GraphQL.Client.Http;
using GraphQL.Client.Serializer.Newtonsoft;
using Microsoft.Identity.Client;

namespace CosmosMirrorGraphQL
{
    class Program
    {
        // Client ID of the app registration in Microsoft Entra ID.
        private const string ClientId = "This is the Client ID from your Entra account";
        private const string Authority = "https://login.microsoftonline.com/organizations";
        // GraphQL endpoint copied from the Fabric GraphQL API item.
        private const string GraphQLUri = "Fabric GraphQL endpoint URI";

        // private static string[] scopes = new string[] { "https://analysis.windows.net/powerbi/api/Item.Execute.All" };
        private static string[] scopes = new string[] { "https://api.fabric.microsoft.com/.default" };

        static async Task Main(string[] args)
        {
            try
            {
                // Interactive (delegated) sign-in: a browser window opens for the user.
                IPublicClientApplication publicClientApplication =
                    PublicClientApplicationBuilder.Create(ClientId)
                        .WithAuthority(Authority)
                        .WithRedirectUri("http://localhost")
                        .Build();

                AuthenticationResult result = await publicClientApplication
                    .AcquireTokenInteractive(scopes)
                    .ExecuteAsync()
                    .ConfigureAwait(false);

                // Attach the bearer token to every request the GraphQL client sends.
                var graphQLClient = new GraphQLHttpClient(GraphQLUri, new NewtonsoftJsonSerializer());
                graphQLClient.HttpClient.DefaultRequestHeaders.Authorization =
                    new AuthenticationHeaderValue("Bearer", result.AccessToken);

                // Only deserialize successful HTTP responses.
                graphQLClient.Options.IsValidResponseToDeserialize = httpResponse => httpResponse.IsSuccessStatusCode;

                var query = new GraphQLHttpRequest
                {
                    Query = @"query {
                        recipeContainers (filter: { name: { eq: ""Baklava""}})
                        {
                            items {
                                id,
                                name,
                                description
                            }
                        }
                    }"
                };

                Console.WriteLine((await graphQLClient.SendQueryAsync<dynamic>(query)).Data.ToString());
            }
            catch (Exception ex)
            {
                Console.WriteLine($"Error: {ex.Message}");
            }
        }
    }
}
```

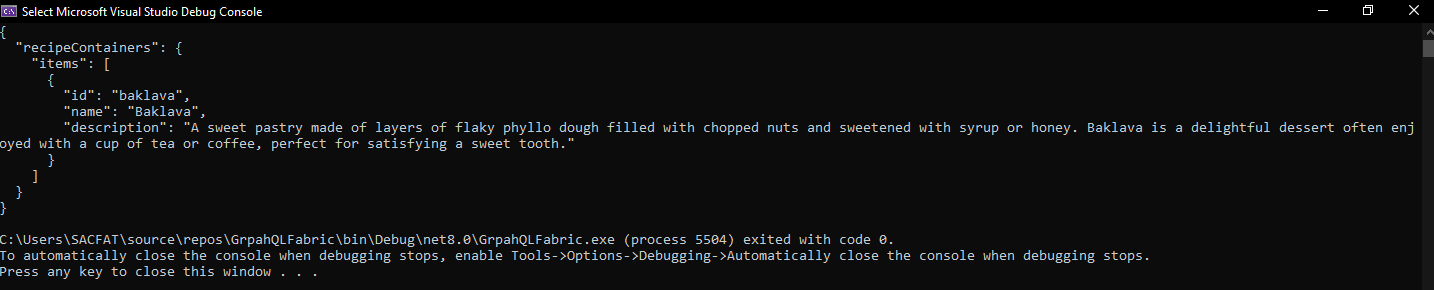

Executing the code should display the output in the console window.
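Under the hood, GraphQL.Client sends each query as an HTTP POST whose JSON body carries the query text in a `query` field, and the server answers with a JSON object containing `data`, plus an `errors` array when something goes wrong. If you would rather not take the GraphQL.Client dependency, the same call can be sketched with plain `HttpClient` and `System.Text.Json`. This is a minimal sketch assuming the response shape of the article's `recipeContainers` query; `RawGraphQL` and its methods are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;

// Hypothetical helper: the GraphQL call without the GraphQL.Client package.
public static class RawGraphQL
{
    // Builds (but does not send) a POST request with body {"query": "..."}.
    public static HttpRequestMessage BuildRequest(string endpoint, string accessToken, string query)
    {
        string body = JsonSerializer.Serialize(new { query });
        var request = new HttpRequestMessage(HttpMethod.Post, endpoint)
        {
            Content = new StringContent(body, Encoding.UTF8, "application/json")
        };
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
        return request;
    }

    // Reads the names from a response shaped like the article's query:
    // {"data": {"recipeContainers": {"items": [{"id": ..., "name": ..., "description": ...}]}}}
    public static List<string> ReadRecipeNames(string responseJson)
    {
        using JsonDocument doc = JsonDocument.Parse(responseJson);
        JsonElement root = doc.RootElement;

        // GraphQL reports failures in a top-level "errors" array; treat them as fatal here.
        if (root.TryGetProperty("errors", out JsonElement errors) &&
            errors.ValueKind == JsonValueKind.Array && errors.GetArrayLength() > 0)
        {
            throw new InvalidOperationException("GraphQL errors: " + errors.GetRawText());
        }

        var names = new List<string>();
        JsonElement items = root.GetProperty("data")
                                .GetProperty("recipeContainers")
                                .GetProperty("items");
        foreach (JsonElement item in items.EnumerateArray())
        {
            names.Add(item.GetProperty("name").GetString());
        }
        return names;
    }
}
```

In the console application, you would build the request with `result.AccessToken`, send it with `HttpClient.SendAsync`, and pass `await response.Content.ReadAsStringAsync()` to `ReadRecipeNames`. Treating any `errors` entry as fatal is a design choice: GraphQL can return partial `data` alongside `errors`, so logging them may suit some applications better.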

Walkthrough

Conclusion

In conclusion, integrating Microsoft Fabric API for GraphQL™ with mirrored Azure Cosmos DB data offers a robust solution for building near real-time analytical applications. By harnessing GraphQL’s efficient querying capabilities and the seamless data integration provided by Microsoft Fabric, developers can create responsive and scalable applications with ease. Whether you choose to connect through the SQL Analytics Endpoint or attach the mirrored database to a Fabric Warehouse, this approach enables efficient access to up-to-date data, empowering you to deliver rich insights and exceptional user experiences.

The above conclusion was copied from here. 😅

Thanks for reading!!!
