A Christmas Ramble

Samuel Drew


Let’s put a bow on this website-making project. We set out to create a website in Django and to host it in AWS. The primary goal was to learn the Django framework, because that was what we used at my workplace at the time. These days, however, I no longer need Python/Django, and my interests have shifted towards systems-level programming and away from website development.

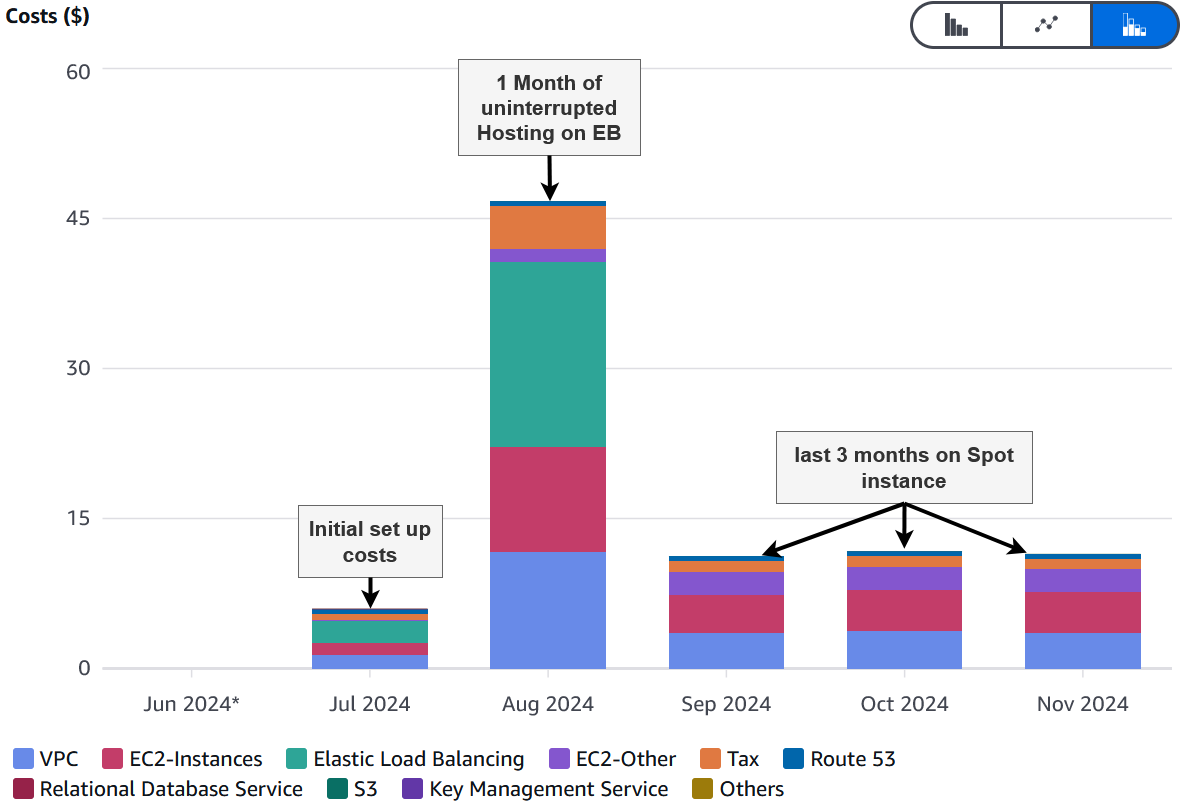

There are a few outstanding items I have to address in this post. So without further TODOs (I’m hilarious btw), let’s take a look at what we did to bring our AWS bill down to less than a quarter of our previous Elastic Beanstalk costs, and how the website has handled the shift.

Measurable Indifference

In my last post, I mentioned that we would be looking to move from Elastic Beanstalk to EC2 spot requests. EB was useful; initially, it was a great starting point. But after our foray into Elastic Beanstalk to get the website live, I've come to the following conclusion:

EB is for people with new projects that just want to get started and don't much care how anything works or how much it costs[^1].

I’m not pointing fingers, because this was me not too long ago. Something I’ve learned since leaving full-time work is that it pays to know how to put the infrastructure together yourself, so that you can cut out the bits you don't need and minimise whatever costs you can.

Here’s what I did…

In my last post, we distilled the dizzying morass that was our AWS billing page into 3 distinct actions we can take to reduce our total spending on the website.

Remove all static IPv4 addresses

Decommission the Network Load Balancer that was doing nothing but routing HTTPS traffic to HTTP and handling SSL for us

Move from Elastic Beanstalk to a spot fleet to host the Django app

The last three months of AWS bills have felt like a warm hug from my wallet.

Now you might be thinking, “well, yeah of course it costs less, we now have to do all the server management and scaling ourselves!” To which I say the short answer is yes… and no… twice…

Yes, it’s a bit more work: we will need to manage the server configuration now. But that’s good! We want to know exactly what’s running on our instance (instead of vaguely knowing that Django is handling HTTP requests somehow).

And “no”: The whole server configuration can be defined in a single script that gets run every time the server starts. No ongoing management required, just set and forget.

Yes, scaling is something to manage: if my website were under any real load, we would have to set up scaling rules.

But “no” because: Until we start seeing the thousands of readers and clients that my mum says I deserve, we can stick to the smallest morsel of compute power that AWS can charge for.

Server Configuration in one file:

First, we switched off the EB environment, which removed all the resources it had commissioned, including the expensive load balancer and the unnecessary static IP addresses.

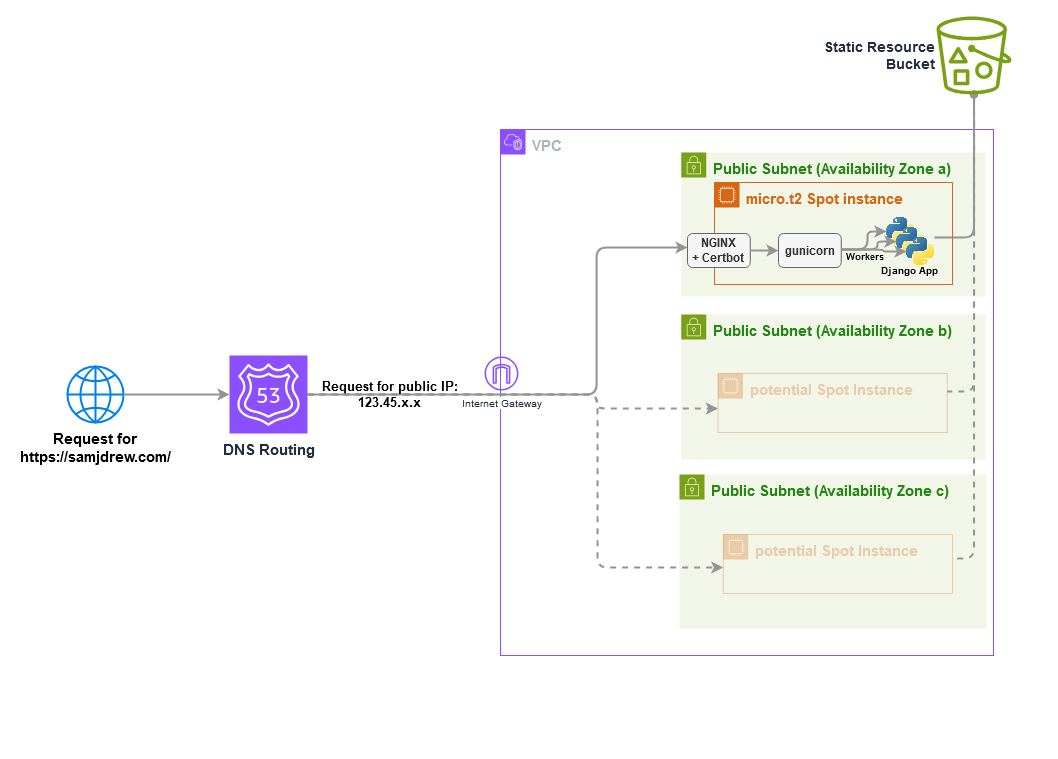

We then needed to define our own basic VPC. This can be just a standard public VPC with an internet gateway and 3 public subnets, one in each ap-southeast-2 availability zone (we have no backend database so public subnets will do just fine for our purposes).
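For the address planning, a sketch like the one below (Python, standard-library `ipaddress` module) carves a VPC range into one /24 public subnet per availability zone. The 10.0.0.0/16 range, the /24 subnet size and the AZ names are assumptions for illustration; the post doesn't specify them.

```python
import ipaddress

# Assumed values for illustration only; the post doesn't give a CIDR range.
VPC_CIDR = "10.0.0.0/16"
AZS = ["ap-southeast-2a", "ap-southeast-2b", "ap-southeast-2c"]


def plan_public_subnets(vpc_cidr: str, azs: list[str], new_prefix: int = 24) -> dict[str, str]:
    """Assign one non-overlapping /new_prefix subnet to each availability zone."""
    vpc = ipaddress.ip_network(vpc_cidr)
    # subnets() yields consecutive, non-overlapping blocks in address order.
    blocks = vpc.subnets(new_prefix=new_prefix)
    return {az: str(next(blocks)) for az in azs}


if __name__ == "__main__":
    for az, cidr in plan_public_subnets(VPC_CIDR, AZS).items():
        print(f"{az}: {cidr}")
```

In AWS itself, each subnet would still need a route to the internet gateway and public IP auto-assignment enabled; this snippet only does the address arithmetic.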

Next, we moved the server to a spot fleet request (‘fleets’ can be configured to be 1 instance). The request uses a configuration object called a ‘launch template’ containing all our capacity and compute preferences and configuration information.

The spot request will look for the cheapest of any t2.micro, t2.small, t3.micro, or t3.small available in any AZ in ap-southeast-2 and assign it to the appropriate subnet in our VPC. The instance is then automatically given a public IP address by our VPC.
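The "cheapest of any" behaviour can be modelled as a minimum over (instance type, AZ) offers. The Python sketch below assumes a lowest-price allocation strategy; the real selection happens inside AWS, and the `SpotOffer` type, the `pick_cheapest` helper and all prices are hypothetical.

```python
from typing import NamedTuple


class SpotOffer(NamedTuple):
    instance_type: str
    az: str
    price_per_hour: float  # USD; invented numbers for illustration


# The instance types listed in the post.
ALLOWED_TYPES = frozenset({"t2.micro", "t2.small", "t3.micro", "t3.small"})


def pick_cheapest(offers: list[SpotOffer], allowed: frozenset[str] = ALLOWED_TYPES) -> SpotOffer:
    """Return the lowest-priced offer among the allowed instance types."""
    candidates = [o for o in offers if o.instance_type in allowed]
    if not candidates:
        raise ValueError("no spot capacity for any allowed instance type")
    # min() keeps the first offer seen when prices tie.
    return min(candidates, key=lambda o: o.price_per_hour)


if __name__ == "__main__":
    offers = [
        SpotOffer("t3.micro", "ap-southeast-2a", 0.0041),
        SpotOffer("t3.micro", "ap-southeast-2b", 0.0039),
        SpotOffer("t2.small", "ap-southeast-2c", 0.0073),
        SpotOffer("m5.large", "ap-southeast-2a", 0.0030),  # cheaper, but not allowed
    ]
    print(pick_cheapest(offers))
```

Filtering before taking the minimum mirrors why the launch template lists specific instance types: a cheaper but unsuitable type never gets picked.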

The launch template also includes a "user data" section that is executed every time a new server instance starts up. If you’re more familiar with Docker, you can think of the ‘launch template’ as AWS lingo for a Dockerfile, and the confusingly named "user data" section as the entrypoint script your Dockerfile calls. The point is, all the code in “user data” gets run. First thing. As soon as our instance is running.

This is very important because AWS will interrupt spot instances semi-regularly (I've found mine are replaced at least once per day).

Every time our EC2 instance changes, our “user data” should perform the following actions:

Find the instance’s public IP address. (This step and the next are required because we now have no load balancer and therefore no static IP address to route traffic to. So whenever a new server starts, we have to edit the A record in our DNS settings directly to tell the internet that the website's host server is now here.)

Change our Route53 A record to set samjdrew.com to go to this new instance’s public IP.

Search our code packages S3 bucket for the latest version of the website and unzip it.

Install Python 3.11 and NGINX.

In the unzipped source code, activate the Python venv for the Django app and install its requirements.

Configure gunicorn daemon workers to run the Django app.

Start gunicorn and NGINX.

Use certbot's NGINX plugin (certbot-nginx) to obtain a free SSL certificate and set up HTTPS on NGINX [²].
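The first two steps in that list boil down to a single Route53 `UPSERT`. This Python sketch only builds the `ChangeBatch` request body, so it runs offline; the `build_a_record_upsert` helper, the 60-second TTL and the hosted zone ID are assumptions, and the boto3 call and instance-metadata lookup appear only as comments.

```python
import ipaddress


def build_a_record_upsert(domain: str, public_ip: str, ttl: int = 60) -> dict:
    """Build a Route53 ChangeBatch that points `domain` at `public_ip`.

    UPSERT creates the A record if it is missing and overwrites it otherwise,
    so the same request works on every fresh spot instance.
    """
    # Raises ValueError for anything that isn't a valid IPv4 address.
    ipaddress.IPv4Address(public_ip)
    return {
        "Comment": "Point the domain at the current spot instance",
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": domain,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": public_ip}],
                },
            }
        ],
    }


# On the instance, the IP would come from the metadata service
# (http://169.254.169.254/latest/meta-data/public-ipv4, with an IMDSv2 token),
# and the batch would be sent with something like:
#   boto3.client("route53").change_resource_record_sets(
#       HostedZoneId="Z_EXAMPLE_ZONE_ID",  # hypothetical zone ID
#       ChangeBatch=build_a_record_upsert("samjdrew.com", ip),
#   )

if __name__ == "__main__":
    batch = build_a_record_upsert("samjdrew.com", "203.0.113.10")
    print(batch["Changes"][0]["ResourceRecordSet"])
```

A short TTL matters in this setup: because the IP changes whenever the spot instance is replaced, a long TTL would keep resolvers pointing at a dead address for longer.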

The interested reader can check out the actual code over on my website repo.

Do diagrams help? In my head, it’s simpler than this makes it look.

This is everything we need, and that’s how I’ve left it for the past 3 months. Of course, I check in on the website now and again, as often as my ego prompts me to, but it’s been up every time I’ve checked. I half expected some disarray to manifest from my negligence, but it looks like we’ve written a good system.

Let’s briefly consider scalability

Scalability, the magic word of cloud platform engineering, is thrown around so much that you’d think it’s a requirement for any cloud-hosted solution. There’s a section on it in chapter one of Martin Kleppmann’s Designing Data-Intensive Applications:

[Scalability] is not a one-dimensional label that we can attach to a system: it is meaningless to say “X is scalable” or “Y doesn’t scale.” Rather, discussing scalability means considering questions like “If the system grows in a particular way, what are our options for coping with that growth?” and “How can we add computing resources to handle the additional load?”

The rest of the section goes on to point out that before we discuss scalability, we need a way to measure both the load on the system and its performance. We also need to know what our performance goals for the system are. Do we want page load times at the 99.9th percentile to be under 1 second? Is that doable? How much work will that take? What about the 99.99th percentile?
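To make the percentile question concrete, here's a small Python sketch that checks a batch of page load times against a "99.9th percentile under 1 second" target. It uses the nearest-rank percentile definition, which is one of several common definitions; the function names and sample data are made up.

```python
import math
from fractions import Fraction


def nearest_rank_percentile(samples: list[float], p: float) -> float:
    """Return the p-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    # Fraction(str(p)) keeps 99.9 exact, so float rounding can't bump the rank.
    rank = math.ceil(Fraction(str(p)) * len(ordered) / 100)
    return ordered[rank - 1]


def meets_slo(load_times_s: list[float], p: float = 99.9, limit_s: float = 1.0) -> bool:
    """True if the p-th percentile load time is under limit_s seconds."""
    return nearest_rank_percentile(load_times_s, p) < limit_s


if __name__ == "__main__":
    print(meets_slo([0.2] * 999 + [3.0]))       # one slow request in 1,000
    print(meets_slo([0.2] * 998 + [3.0, 3.0]))  # two slow requests in 1,000
```

With 1,000 requests, the 99.9th percentile tolerates exactly one slow outlier: the first call returns True, while two 3-second requests push the percentile to 3 seconds and the second call returns False.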

In a commercial setting, this makes a lot of sense. Having a way to reduce your cloud spending when traffic is low, and only increase it when demand for your service goes up, is the Great Cloud Dream™. Scaling up and/or out during peak periods and back down/in when traffic dies down is a neat, effective way to minimise wasted resources, and is one of the major selling points of the cloud over on-prem. Autoscaling is the sledgehammer approach and is only really appropriate when you’re dealing with unpredictable loads.

Scalability is a tool, or maybe a power tool, to use a more visual analogy. It can and should be used, but only AFTER:

Defining how you’ll measure performance and load

Using those definitions to set your Service Level Objectives (SLOs, plus SLAs if you’re working with a client)

Setting up billing alerts (which should be done as soon as you set up any cloud hosting account that’s tied to your wallet)

For this website, there’s no sense in implementing an autoscaling group with a maximum instance count greater than 1 because our 1 instance should be able to handle hundreds of requests per second.
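Here's the back-of-envelope arithmetic behind "hundreds of requests per second", as a Python sketch. The worker count uses gunicorn's documented starting point of (2 × CPU cores) + 1 sync workers; treating the 2 vCPUs of a t3.micro as cores and assuming a 20 ms average request time are illustrative choices, not measurements from my server.

```python
def gunicorn_workers(cpu_cores: int) -> int:
    """gunicorn's suggested starting point for sync workers: (2 * cores) + 1."""
    return 2 * cpu_cores + 1


def max_requests_per_second(workers: int, avg_request_s: float) -> float:
    """Each sync worker handles one request at a time, so throughput is
    roughly the worker count divided by the average request duration."""
    return workers / avg_request_s


if __name__ == "__main__":
    workers = gunicorn_workers(cpu_cores=2)                      # assumed: 2 vCPUs
    rps = max_requests_per_second(workers, avg_request_s=0.020)  # assumed: 20 ms
    print(f"{workers} workers -> about {rps:.0f} requests/second")
```

Five workers at 20 ms per request works out to roughly 250 requests per second. Sustained CPU on burstable t2/t3 instances is governed by CPU credits, so treat this as an optimistic ceiling rather than a benchmark.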

As far as we’re concerned, we can be content in the knowledge that the small handful of clients or employers who bother to click on our website are getting our website.

The left-overs

There were some issues beneath the surface that I haven’t addressed:

GitHub Actions was broken, since moving away from Elastic Beanstalk meant we had to come up with a new way of getting my source code onto the server (this was particularly embarrassing for me to ignore for so long, as someone who once had DevOps in his job title). This is fixed now: after merging to master, GitHub Actions sends a zipped package of the code to an S3 bucket, removes the “-latest” suffix from the old package and appends it to the new one, temporarily scales the spot fleet to 2 instances, and then terminates the old instance after 3 minutes. The new instance handles all the server setup and DNS updates described above, and a new version of the website is live about 4 minutes after we merge to master.

The pages on certifications and experience still haven’t been implemented, which is hilarious for a website that’s supposed to be a portfolio landing page.

We don’t talk about the photography website.
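The “-latest” rotation from the GitHub Actions fix, and the matching lookup the user data script does, can be sketched as plain key manipulation in Python. The `<name>-latest.zip` naming scheme and all helper names here are assumptions; the post only says the suffix moves from the old package to the new one.

```python
from typing import Optional

# Assumed naming scheme: "<name>.zip" for old packages, "<name>-latest.zip" for the live one.
ZIP_EXT = ".zip"
LATEST_SUFFIX = "-latest"
TAGGED_END = LATEST_SUFFIX + ZIP_EXT


def add_latest(key: str) -> str:
    """'site-abc123.zip' -> 'site-abc123-latest.zip'."""
    if not key.endswith(ZIP_EXT):
        raise ValueError(f"expected a .zip key, got {key!r}")
    return key[: -len(ZIP_EXT)] + TAGGED_END


def strip_latest(key: str) -> str:
    """'site-abc123-latest.zip' -> 'site-abc123.zip'; other keys are returned unchanged."""
    if key.endswith(TAGGED_END):
        return key[: -len(TAGGED_END)] + ZIP_EXT
    return key


def plan_rotation(existing_keys: list[str], new_key: str) -> list[tuple[str, str]]:
    """List the (from_key, to_key) renames that move the tag onto new_key.

    S3 has no rename operation, so each pair would be carried out as a copy
    followed by a delete (for example boto3's copy_object, then delete_object).
    """
    renames = [(k, strip_latest(k)) for k in existing_keys if k.endswith(TAGGED_END)]
    renames.append((new_key, add_latest(new_key)))
    return renames


def find_latest(keys: list[str]) -> Optional[str]:
    """What the user data script looks for: exactly one tagged package.

    Returns None if there is no tagged key, or more than one (e.g. mid-rotation).
    """
    tagged = [k for k in keys if k.endswith(TAGGED_END)]
    return tagged[0] if len(tagged) == 1 else None
```

Returning None on ambiguity is a deliberate choice in this sketch: if a new instance ever saw two tagged packages mid-rotation, it could wait and retry rather than guess.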

Jumping back into this project after three months of not thinking about or working on anything Python/Django, I found it daunting trying to remember how Django organises itself. Python/Django has taken a sharp nose-dive in my list of priorities and, unfortunately, the website has been in limbo because of that.

I’ll always be intrigued by cloud platforms and automating build systems. However, in my spare time, I’ve been trying to expand into a few new areas that I’m not familiar enough with.

In the past 3 months, I've:

learned to use CMake/Ninja to build large C projects

been re-learning C/C++ and assembly

learned to draw a triangle in OpenGL

been reading:

Martin Kleppmann's Designing Data-Intensive Applications, and

Thorsten Ball’s Writing an Interpreter in Go

been working on my own indie game in Godot 4

The point is, everything I learned about Django/Python and HTML/CSS while throwing this website together this year is buried under several dense layers of unrelated CS stuff that I had to sift through to write this. The Python/Django atrophy has begun, and I don’t want to strain myself strengthening those muscles again when they’re no longer something I need for my job and I have other priorities ahead of them.

This isn’t the post for discussing learning goals and professional growth[³]. I just wanted to wrap up the last outstanding thing I said I’d write about with regards to creating and hosting a website.

Some day I may start measuring data from my NGINX access logs and use it to make more informed decisions around scaling and traffic. But at the moment, I have other things I’m excited to learn about, and the website is up, so I’m not fussed.
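If that day comes, a first step could be as simple as counting requests per minute from the access log. This Python sketch assumes NGINX's default `combined` log format; the regex, helper name and sample lines are illustrative, not something running on the server.

```python
import re
from collections import Counter
from datetime import datetime

# Start of a line in NGINX's default "combined" format:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent ...
COMBINED_RE = re.compile(
    r'^(?P<addr>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" (?P<status>\d{3}) '
)

# Made-up example lines using documentation IP ranges.
SAMPLE_LINES = [
    '203.0.113.5 - - [10/Oct/2000:13:55:02 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '203.0.113.9 - - [10/Oct/2000:13:55:40 +0000] "GET /blog HTTP/1.1" 200 812 "-" "curl/8.0"',
    '198.51.100.7 - - [10/Oct/2000:13:56:01 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0"',
]


def requests_per_minute(lines: list[str]) -> Counter:
    """Count requests per minute, skipping lines that don't match the format."""
    counts: Counter = Counter()
    for line in lines:
        match = COMBINED_RE.match(line)
        if match is None:
            continue
        ts = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        counts[ts.replace(second=0)] += 1
    return counts


if __name__ == "__main__":
    for minute, n in sorted(requests_per_minute(SAMPLE_LINES).items()):
        print(minute.isoformat(), n)
```

Per-minute counts like these cover the "load" half of the measurement Kleppmann's section asks for; adding NGINX's `$request_time` variable to the log format would give the latency half.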

Just one more thing: my old domain mountainbean.online expired this week, and my domain registrar wanted me to pay $80 US to renew it, which was never going to happen. As a result, you may have noticed I’ve moved to samjdrew.com. If the savvy reader knows of a better domain registrar that doesn’t hold my domain to ransom every year, please write in and let me know. Feedback is also welcome.

[^1]: I had a whole analogy here where you ask your mate to buy groceries and cook dinner for you, but it turns out your mate gets kickbacks from the grocery store, so he buys you a bunch of stuff you don’t need to run up your grocery bill. It’s a bit cynical and the analogy wasn’t exactly relatable, so I decided not to force my up-and-coming novella “Stretchy-Legume Steve” upon you.

[²]: It’s worth noting somewhere that I don’t ever have to worry about renewing my certificates. My servers are rebooted around every 2 days and a new certificate is generated each time. (Edit: turns out this is a problem, because if I ever go over 5 certificates in a week, certbot stops giving me new certs for that week. I’ll have to work out a way to store, access and renew my certs.)

[³]: but if it were, it’d be inspirational af.
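For the certificate problem in footnote ², one possible approach, sketched in Python: keep the issued certificate and a log of issuance times somewhere that survives instance replacement (S3, say), reuse the stored certificate while it has more than 30 days left (certbot's default renewal threshold), and only request a new one if fewer than five were issued in the trailing seven days. The rolling-window check is a simplification of how Let's Encrypt actually enforces its limits, and every name below is hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

WEEKLY_LIMIT = 5                   # the figure quoted in the footnote
WINDOW = timedelta(days=7)         # simplified rolling window
RENEW_MARGIN = timedelta(days=30)  # certbot's default renewal threshold


def should_request_new_cert(issued_at: list[datetime], now: datetime,
                            limit: int = WEEKLY_LIMIT) -> bool:
    """True if fewer than `limit` certs were issued in the trailing 7 days."""
    recent = [t for t in issued_at if timedelta(0) <= now - t < WINDOW]
    return len(recent) < limit


def decide(stored_expiry: Optional[datetime], issued_at: list[datetime],
           now: datetime) -> str:
    """Pick 'reuse', 'request' or 'rate-limited' for a freshly booted instance."""
    if stored_expiry is not None and stored_expiry - now > RENEW_MARGIN:
        return "reuse"
    if should_request_new_cert(issued_at, now):
        return "request"
    return "rate-limited"


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    history = [now - timedelta(days=d) for d in (1, 2, 3)]  # illustrative issuance log
    print(decide(stored_expiry=None, issued_at=history, now=now))
```

In the rate-limited case, a stored certificate can still serve traffic until it actually expires, since the 30-day margin leaves plenty of slack.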


Written by

Samuel Drew


I am a developer from Brisbane, now living in Wellington. I've gone from a degree in software engineering to a career in traditional engineering, and then back again to software engineering as a DevOps and cloud infrastructure engineer. I love learning how things are made, and I try to simplify things down to understand them better. I'm using Hashnode as a blogging platform to practice my technical writing.