LLMs Unpacked: How They Actually Work

Himesh Parashar


Large Language Models (LLMs) are reshaping how we interact with technology, particularly in natural language processing. This post explains what LLMs are, how they work, and what they mean for our digital world.

Introduction to Large Language Models

At their core, LLMs are sophisticated mathematical functions that predict the next word in a sequence of text. They are trained on vast amounts of text, which lets them capture the patterns of language and generate human-like text. Imagine you find a movie script in which a character talks to an AI assistant, but the assistant’s replies have been torn off. With an LLM that predicts what the assistant would say, one word at a time, you could fill in the gaps so that the AI appears to respond sensibly.

When you interact with a chatbot powered by an LLM, the model repeatedly predicts the next word from the context so far and appends it to the text. Rather than committing to a single deterministic answer, it assigns a probability to every possible next word. Sampling from that distribution, and occasionally picking a less likely word, is what makes its responses varied and natural-sounding.
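To make "a probability for every possible next word" concrete, here is a minimal sketch in Python. It assumes the model has already produced a raw score (a logit) for each candidate word; the words and scores are made up for illustration, and `softmax` and `sample_next_word` are hypothetical helpers, not part of any library. Softmax turns the scores into probabilities, and a weighted random draw picks the next word, so the same prompt can produce different continuations.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(words, logits, temperature=1.0, rng=random):
    """Pick one word at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical scores a model might give after "The cat sat on the".
words = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]

print({w: round(p, 3) for w, p in zip(words, softmax(logits))})
rng = random.Random(0)  # fixed seed so the run is repeatable
print([sample_next_word(words, logits, rng=rng) for _ in range(5)])
```

The `temperature` parameter is an assumption of this sketch, though it mirrors a setting many LLM APIs expose: lowering it concentrates probability on the top word, while raising it spreads probability out and makes the output more varied.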

How LLMs Learn

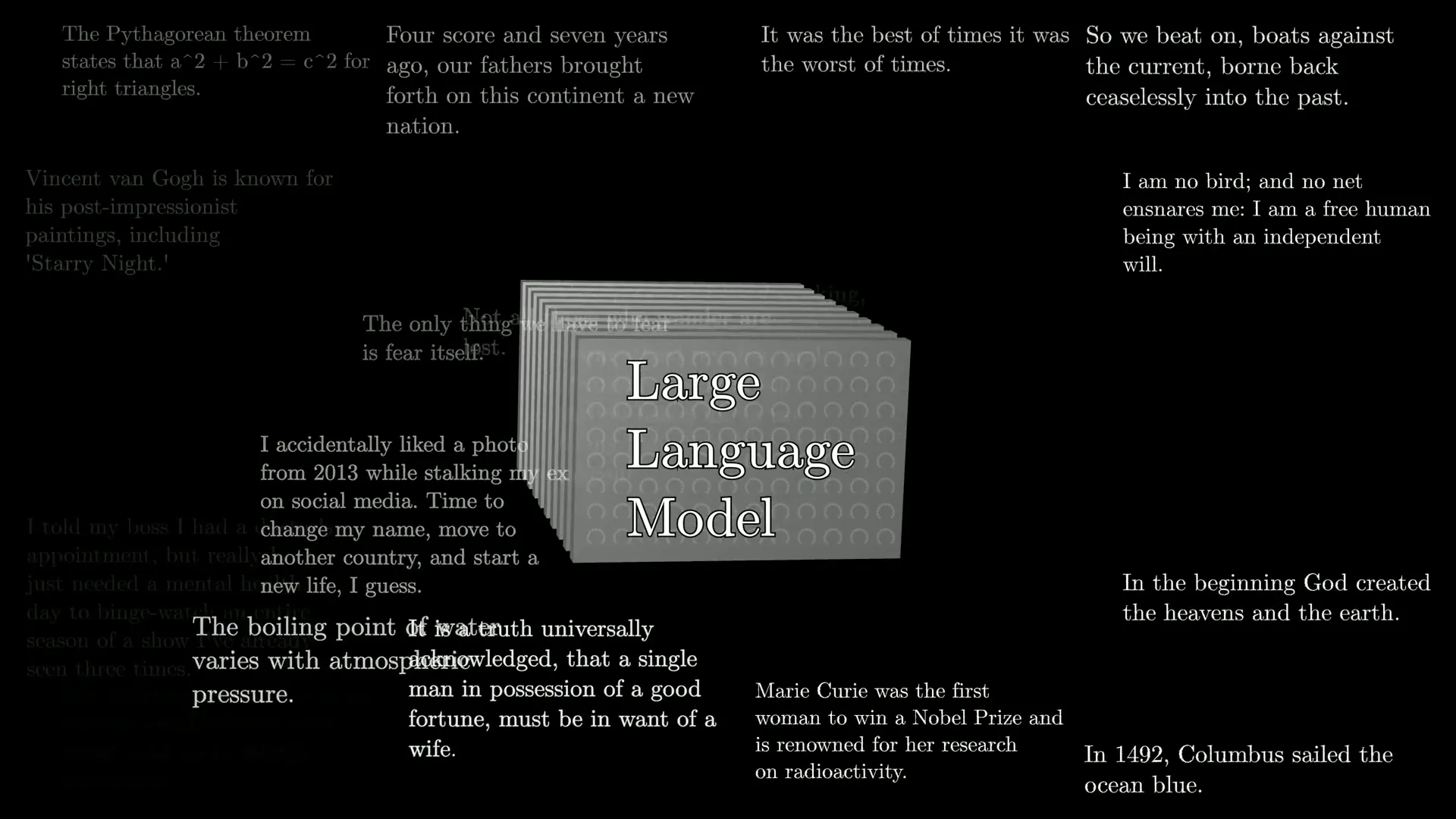

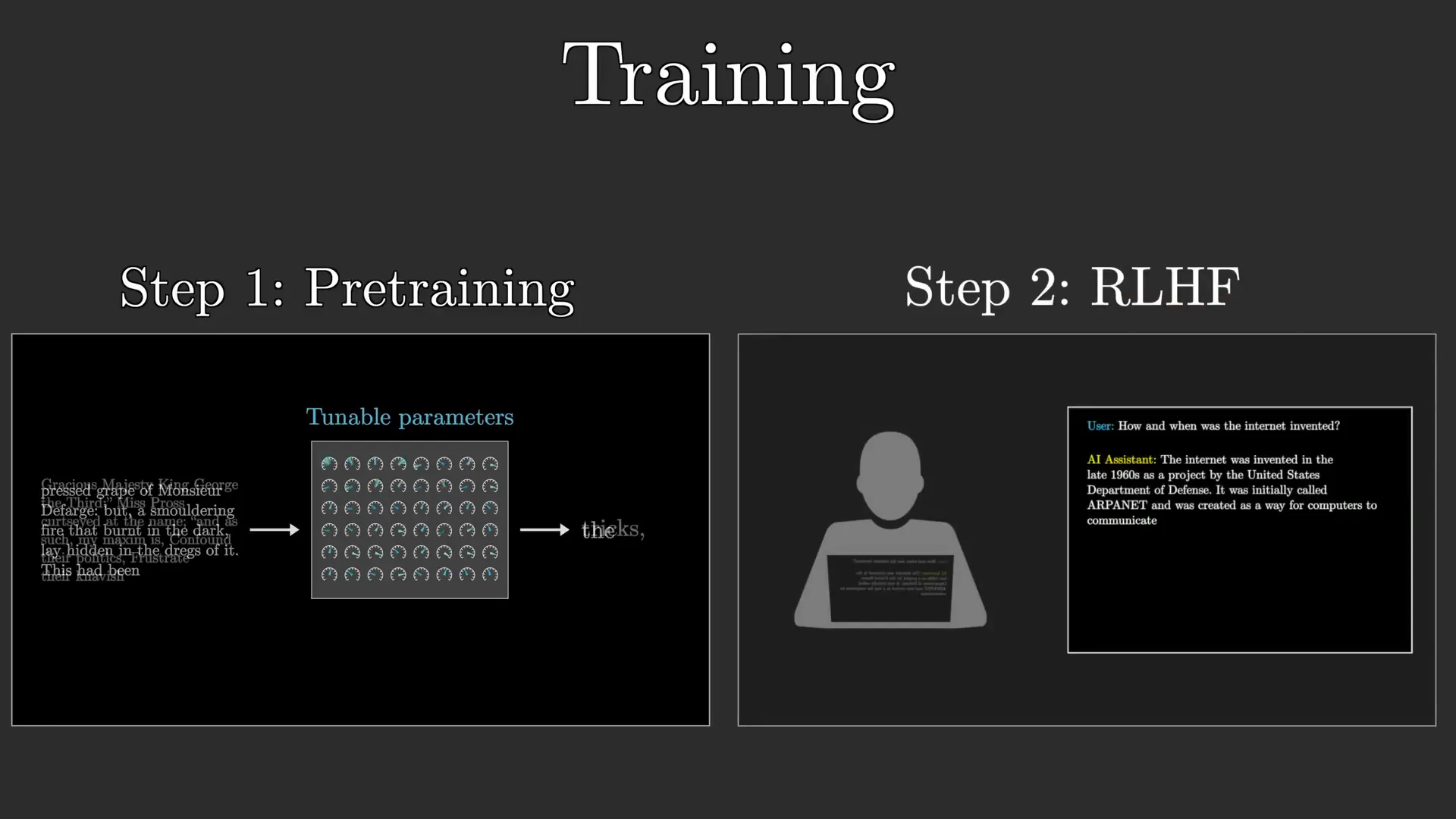

The learning process of LLMs can be broken down into two key phases: pre-training and fine-tuning. During pre-training, the model is exposed to a massive dataset, enabling it to learn the structure, grammar, and semantics of language. This stage is computationally intensive, requiring vast resources and time.

Pre-Training Phase

During pre-training, the model processes billions of sentences. To give a sense of scale, a human reading non-stop would need over 2,600 years to get through the amount of text used to train GPT-3. The model learns by adjusting its parameters, which start out as random values, in response to the text it encounters.

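Each training example is a passage of text with its last word held back. The model predicts that word, and its prediction is compared with the true word. An algorithm called backpropagation then works out how to adjust every parameter so that the model becomes slightly more likely to choose the true word and slightly less likely to choose the others. Repeated over an enormous number of examples, this process lets the model make increasingly accurate predictions, including on text it has never seen.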
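The predict, compare, and adjust loop can be shown at toy scale. The sketch below is not a transformer: it is a hypothetical bigram model whose only parameters are a table of scores (one row per previous word), trained on a single made-up sentence with an assumed learning rate of 0.5. Because the model is a single softmax layer, the gradient that backpropagation would compute can be written out by hand, and each step moves the scores a little in the direction that lowers the cross-entropy loss.

```python
import math
import random

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# A made-up one-sentence training corpus and its vocabulary.
text = "the cat sat on the mat".split()
vocab = sorted(set(text))
index = {w: i for i, w in enumerate(vocab)}
V = len(vocab)

# Parameters: one row of next-word scores per previous word, starting random.
rng = random.Random(42)
W = [[rng.uniform(-0.1, 0.1) for _ in range(V)] for _ in range(V)]

# Training examples: (previous word, true next word) pairs from the text.
pairs = list(zip(text, text[1:]))

def train_step(prev, target, lr=0.5):
    """Predict the next word, score the prediction with cross-entropy loss,
    and nudge every score in the direction that lowers that loss."""
    row = W[index[prev]]
    probs = softmax(row)
    t = index[target]
    loss = -math.log(probs[t])
    # For softmax + cross-entropy, d(loss)/d(score_j) = probs[j] - (1 if j == t else 0).
    # In a deep network, backpropagation computes this kind of gradient for every parameter.
    for j in range(V):
        row[j] -= lr * (probs[j] - (1.0 if j == t else 0.0))
    return loss

history = []
for epoch in range(50):
    history.append(sum(train_step(p, t) for p, t in pairs) / len(pairs))

print(f"average loss: epoch 1 = {history[0]:.3f}, epoch 50 = {history[-1]:.3f}")
print("P(sat | cat) =", round(softmax(W[index["cat"]])[index["sat"]], 3))
```

Because "the" is followed by both "cat" and "mat" in the training sentence, the model cannot become certain about what comes after "the"; it learns to split its probability between the two, which is the kind of uncertainty the next-word probabilities express.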

Fine-Tuning Phase

After pre-training, LLMs undergo fine-tuning, which adapts them to specific tasks such as acting as a helpful AI assistant. A widely used technique in this phase is reinforcement learning from human feedback (RLHF): human workers flag unhelpful or problematic predictions, and their corrections further adjust the model’s parameters so that it favors the responses people prefer.
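How does human judgement become a training signal? In one common formulation of RLHF, people compare pairs of responses, a separate reward model is trained to score the preferred response higher, and the LLM is then optimized against that reward model. The sketch below shows only the pairwise (Bradley-Terry style) loss used in that middle step; the reward scores are hypothetical numbers standing in for a reward model's outputs, and the reinforcement-learning step itself is omitted.

```python
import math

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss for training a reward model.

    Equals -log(sigmoid(reward_chosen - reward_rejected)): close to 0 when the
    response a human preferred gets the clearly higher score, large when the
    reward model ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # Two algebraically equal forms, chosen so that exp() never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical reward-model scores for two answers to the same prompt.
print(preference_loss(2.0, -1.0))   # preferred answer scored higher: small loss
print(preference_loss(-1.0, 2.0))   # preferred answer scored lower: large loss
```

Minimizing this loss over many human comparisons pushes the reward model to agree with people's rankings, which is what later lets it stand in for human raters at scale.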

The Power of Transformers

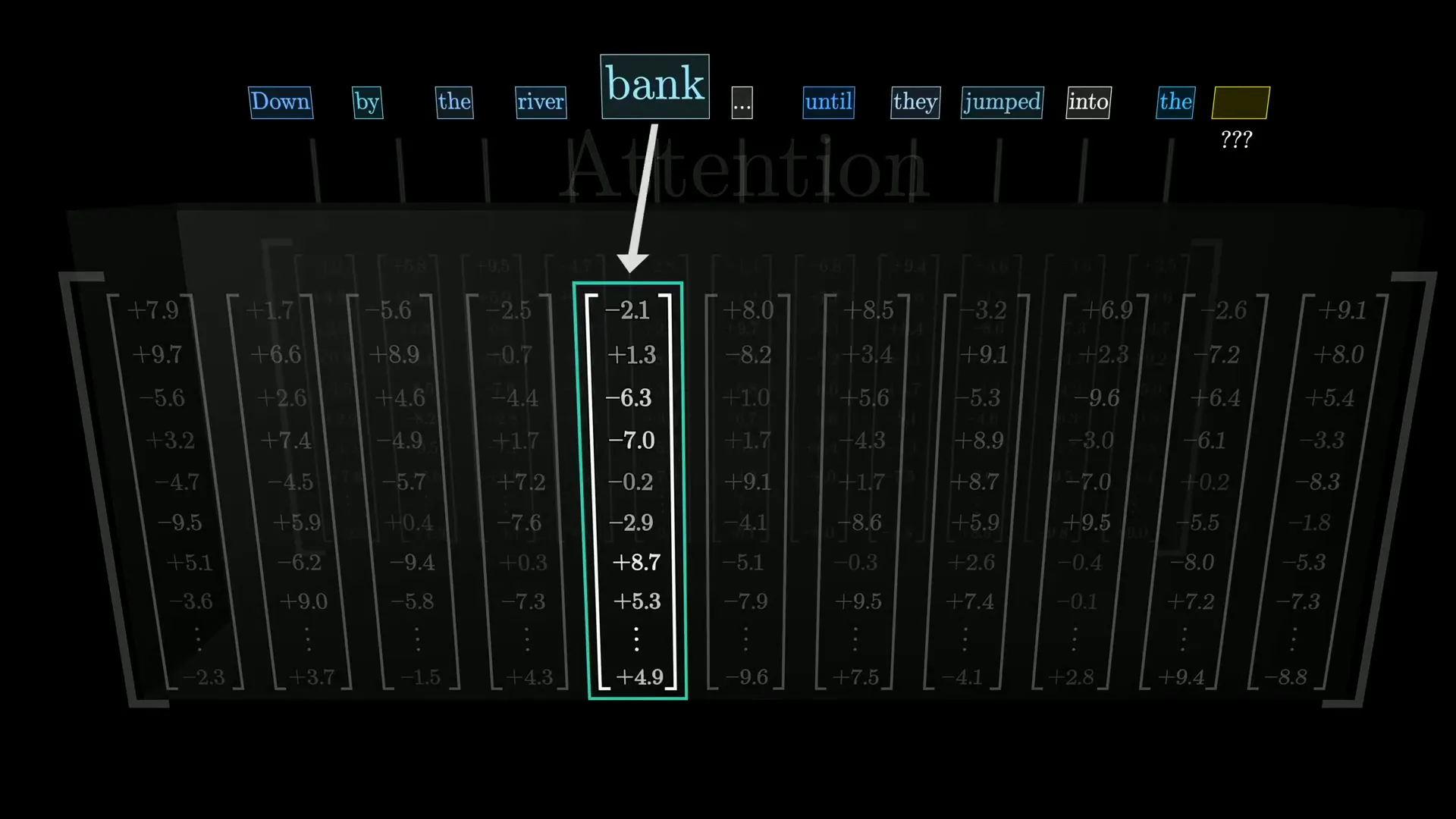

The transformer architecture, introduced by researchers at Google in 2017, revolutionized language modeling. Unlike earlier models that processed text one word at a time, transformers process all the words in a passage in parallel, which makes training far more efficient and helps them capture context.

Attention Mechanism

A defining feature of transformers is the attention mechanism, which lets the model focus on the most relevant parts of the input text. Through attention, the representations of words exchange information, so each word’s meaning is refined by its context. For example, the word "bank" can mean a financial institution or the side of a river; attention lets nearby words such as "river" or "deposit" pull its representation toward the right sense.
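Here is a minimal, single-head version of scaled dot-product self-attention, the form described in the original transformer paper, written in plain Python. Each word vector is mapped to a query, a key, and a value; each word's query is compared with every key, the scores become weights via softmax, and the word's new vector is the weighted mix of the values. The two-dimensional embeddings and identity weight matrices are invented for illustration (real models learn these, use thousands of dimensions, and run many attention heads). The mask that stops an LLM from attending to later words is also omitted; it would not change the result for "bank" here, because "bank" is the last word.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over a list of word vectors.

    Returns (outputs, weights): outputs[i] is word i's updated vector, and
    weights[i][j] is how much word i attends to word j.
    """
    Q = [matvec(Wq, x) for x in X]  # what each word is looking for
    K = [matvec(Wk, x) for x in X]  # what each word offers
    V = [matvec(Wv, x) for x in X]  # the information each word passes on
    scale = math.sqrt(len(K[0]))
    outputs, weights = [], []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in K]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w_j * v[d] for w_j, v in zip(w, V)) for d in range(len(V[0]))])
    return outputs, weights

# Hypothetical 2-D embeddings: axis 0 ~ "geography", axis 1 ~ "finance".
emb = {"the": [0.1, 0.1], "river": [1.0, 0.0], "money": [0.0, 1.0], "bank": [0.7, 0.7]}
identity = [[1.0, 0.0], [0.0, 1.0]]  # stands in for learned weight matrices

for sentence in (["the", "river", "bank"], ["the", "money", "bank"]):
    out, _ = self_attention([emb[w] for w in sentence], identity, identity, identity)
    print(" ".join(sentence), "-> bank becomes", [round(x, 2) for x in out[-1]])
```

The same input vector for "bank" comes out leaning toward the "geography" axis after "river" and toward the "finance" axis after "money", which is the context-dependent refinement described above.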

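In addition to attention, each transformer layer contains a feed-forward neural network that processes each word’s representation on its own, giving the model extra capacity to store language patterns learned during training. Data flows through many alternating rounds of attention and feed-forward operations, and after the final layer the representation at the last position is used to produce a probability distribution over the next word. This repeated refinement is what makes the predictions fluent and contextually appropriate.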
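A sketch of the feed-forward part of a transformer layer, under simplifying assumptions: the weights are tiny hand-picked numbers, the activation is ReLU (as in the original transformer; many newer models use variants such as GELU), and layer normalization is left out. What it shows is that the same small network transforms each word's vector independently, while attention is what mixes information between words. The output is added back onto the input (a residual connection), so each layer refines rather than replaces the representation.

```python
def relu(z):
    return max(0.0, z)

def feed_forward(x, W1, b1, W2, b2):
    """Two-layer network applied to ONE word vector: expand, ReLU, project back."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(W2, b2)]

def feed_forward_sublayer(X, params):
    """Run the same network on every position independently and add the result
    back to the input (a residual connection)."""
    return [[xi + yi for xi, yi in zip(x, feed_forward(x, *params))] for x in X]

# Hypothetical weights: 2-D vectors expanded to a 4-unit hidden layer and back.
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 1.0]]
b1 = [0.0, 0.0, 0.0, 0.0]
W2 = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]
b2 = [0.0, 0.0]
params = (W1, b1, W2, b2)

X = [[1.0, 2.0], [-1.0, 0.5]]
print(feed_forward_sublayer(X, params))  # [[3.0, 3.5], [-1.0, 1.5]]
```

In a full model this sub-layer follows the attention sub-layer, and the pair is stacked many times with different learned weights in each layer.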

Challenges and Considerations

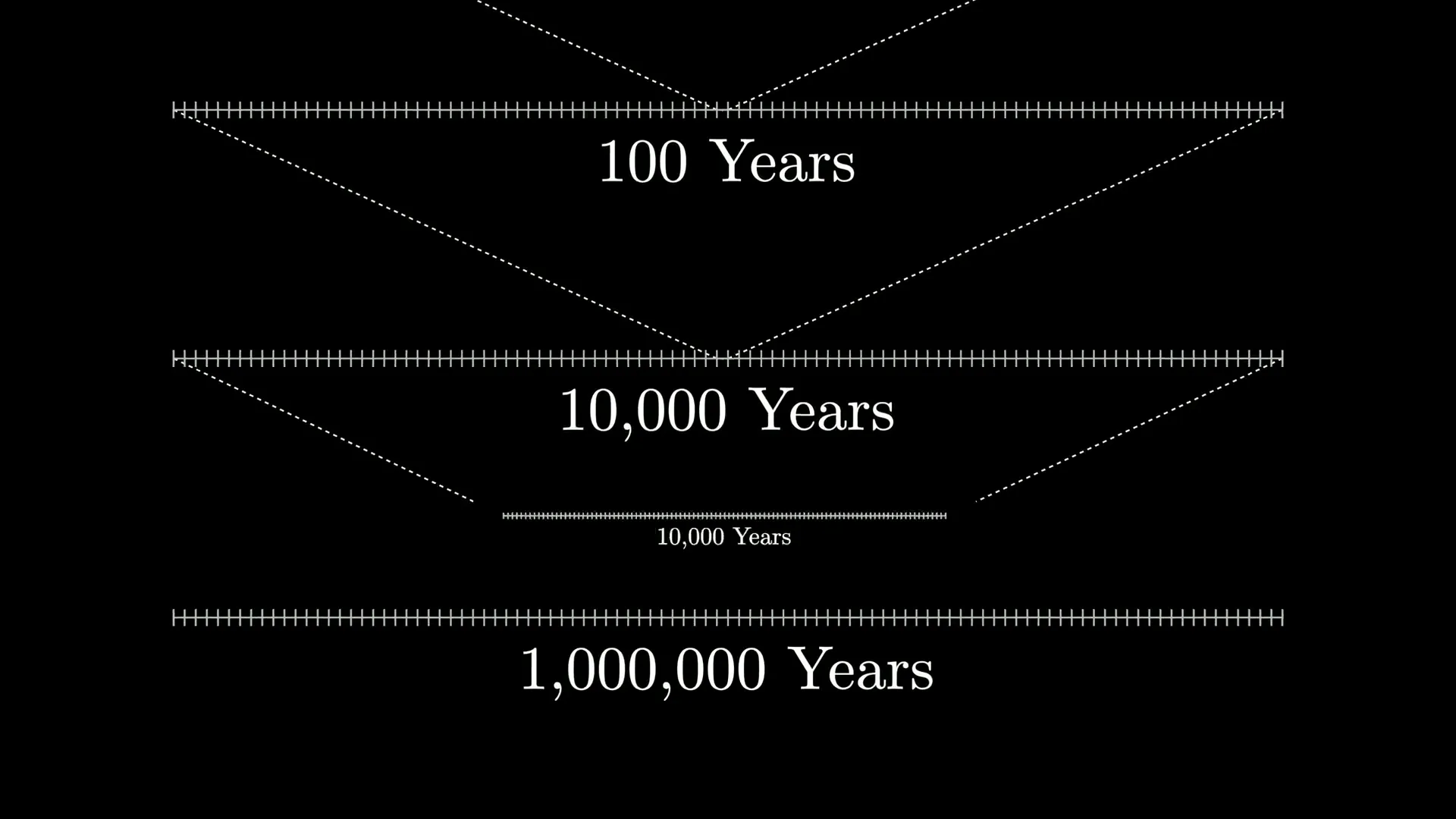

Despite these advances, LLMs face real challenges. The scale of computation required for training is staggering: even if you could perform one billion additions and multiplications every second, carrying out all the calculations needed to train the largest models would take over 100 million years.
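As a back-of-the-envelope check, the short calculation below works out how many operations those figures imply, assuming a 365.25-day year. It only multiplies the post's own numbers; it is not an independent estimate of any particular model's training cost.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # about 3.16e7 seconds
OPS_PER_SECOND = 1e9                       # one billion calculations per second
YEARS = 100e6                              # "over 100 million years"

# Lower bound on the operations implied by the figures in the text.
total_ops = OPS_PER_SECOND * SECONDS_PER_YEAR * YEARS
print(f"at least {total_ops:.2e} operations")  # prints: at least 3.16e+24 operations
```

That is on the order of 10^24 arithmetic operations, which is why training is spread across large clusters of specialized chips working in parallel rather than run on anything resembling a single processor.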

LLMs can also inadvertently absorb biases present in their training data, leading to problematic outputs. Researchers are actively working to mitigate these issues and to make LLMs more reliable and ethical in their applications.

Applications of Large Language Models

LLMs have a wide range of applications, including:

Chatbots and Virtual Assistants: LLMs can power conversational agents that provide customer support or personal assistance.

Content Generation: They can create articles, stories, and even poetry, making them valuable tools for writers.

Language Translation: LLMs can translate text between languages, often producing fluent, natural-sounding results.

Sentiment Analysis: Businesses can use LLMs to analyze customer feedback and sentiment from social media and reviews.

Conclusion

Large Language Models represent a significant leap in artificial intelligence, enabling machines to understand and generate human language with remarkable fluency. As technology continues to evolve, the potential applications of LLMs will expand, offering exciting possibilities for enhancing human-computer interaction.

If you're intrigued by the mechanics of LLMs and want to explore further, consider visiting the Computer History Museum to see related exhibits. For more technical depth, there are numerous resources online that cover transformers and attention mechanisms in detail.

Embrace the future of technology with an informed perspective on how LLMs are changing our world.
