AttriBERT - Session-based Product Attribute Recommendation with BERT

Abhay Shukla

In this paper, the authors propose an approach for recommending personalized refinements (or filters) in feeds, such as search results, based on in-session user interactions. The model uses the encoder-only architecture of BERT and the Masked Language Modelling (MLM) task for refinement prediction.

The full paper can be read here https://dl.acm.org/doi/10.1145/3539618.3594714.

The two proposed ways to model the personalized session-based refinement recommendation are:

Helping the Journey (HTJ): given a partially complete session, predict the dictionary of attribute-values that the user will explore next

Helping the Decision (HTD): given a partially complete session, directly predict the attribute-values of the product the user will purchase

Refinements Suggest Strong User Intent

The authors note that “search sessions with refinements applied have higher purchase rate compared to sessions without any refinement. Applying the right refinement earlier in the shopping mission can help customer find product of their choice quickly and in turn reduce session lengths and session abandonment.”

Personalized Refinement Recommendations

A session-based recommendation system (SBRS) can provide personalized refinement recommendations based on in-session user behavior. For example, when a user searches for "shoes", refinements such as Color:Black and Brand:Nike, or Color:Blue and Brand:Adidas, can be recommended depending on the preferences inferred from that user's recent interactions. A non-personalized system, by contrast, would recommend the same refinements to all users.

Product Representation Using Attributes

During a shopping journey, users are more likely to interact with products that have similar attributes. Hence, a product representation based on product attributes should be more informative for identifying which attributes to recommend next.

Problem Formulation

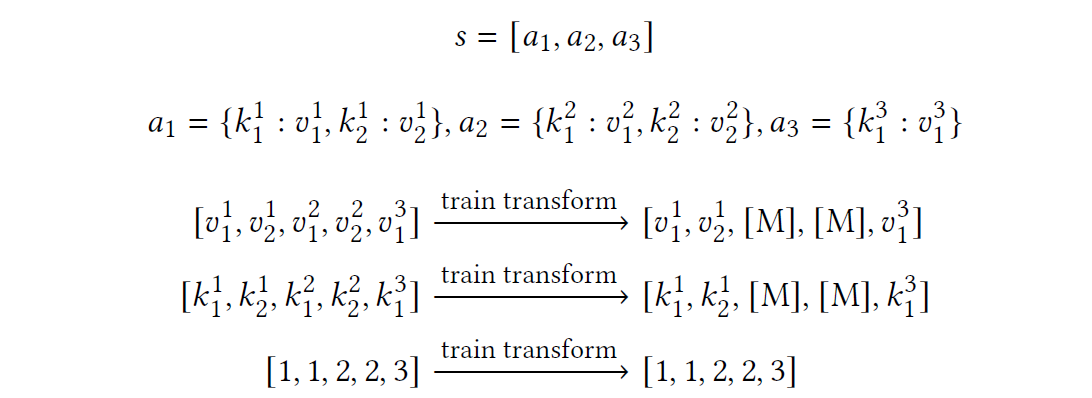

A user session is represented as \(S = [a_{1}, a_{2}, \ldots, a_{t}, \ldots, a_{n}]\), a chronological sequence of the products the user interacted with. Each product is represented as a dictionary of attribute-value pairs, \(a_i = \{k^{i}_{1}: v^{i}_{1}, \ldots, k^{i}_{n}: v^{i}_{n}\}\). The problem can then be formulated in two ways.

Helping the Journey (HTJ)

Predict the attribute-values of the next product the user will interact with, given their interaction history up to time step t, i.e.

$$P(a_{t+1} = a | a_1, ..., a_t)$$

Helping the Decision (HTD)

Predict the attribute-values of the product the user will purchase, given their interaction history up to time step t, i.e.

$$P(a_p = a | a_1, ..., a_t)$$

where \(a\) denotes the attribute-values of the product being predicted: the next product \(a_{t+1}\) for HTJ and the purchased product \(a_p\) for HTD.
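To make the two formulations concrete, here is a minimal sketch of how a session and its HTJ/HTD targets could be laid out. The session contents, the helper names `htj_example` and `htd_example`, and the choice to treat the last product as the purchase are all illustrative assumptions, not details from the paper.

```python
from typing import Dict, List, Tuple

Product = Dict[str, str]  # attribute -> value, e.g. {"Color": "Black"}

# Hypothetical session; we assume the last product is the purchased one.
session: List[Product] = [
    {"Brand": "Nike", "Color": "Black"},
    {"Brand": "Nike", "Color": "Blue"},
    {"Brand": "Adidas", "Color": "Black"},
    {"Brand": "Nike", "Color": "Black"},
]


def htj_example(session: List[Product], t: int) -> Tuple[List[Product], Product]:
    """Return the context a_1..a_t and the HTJ target a_{t+1} (t is 1-indexed)."""
    return session[:t], session[t]


def htd_example(
    session: List[Product], t: int, purchased: Product
) -> Tuple[List[Product], Product]:
    """Return the context a_1..a_t and the HTD target a_p."""
    return session[:t], purchased


context, next_product = htj_example(session, 2)
_, purchased_product = htd_example(session, 2, session[-1])
```

With the same two-product context, HTJ targets the third product while HTD targets the product that was eventually bought.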

AttriBERT

AttriBERT uses the BERT architecture for attribute prediction.

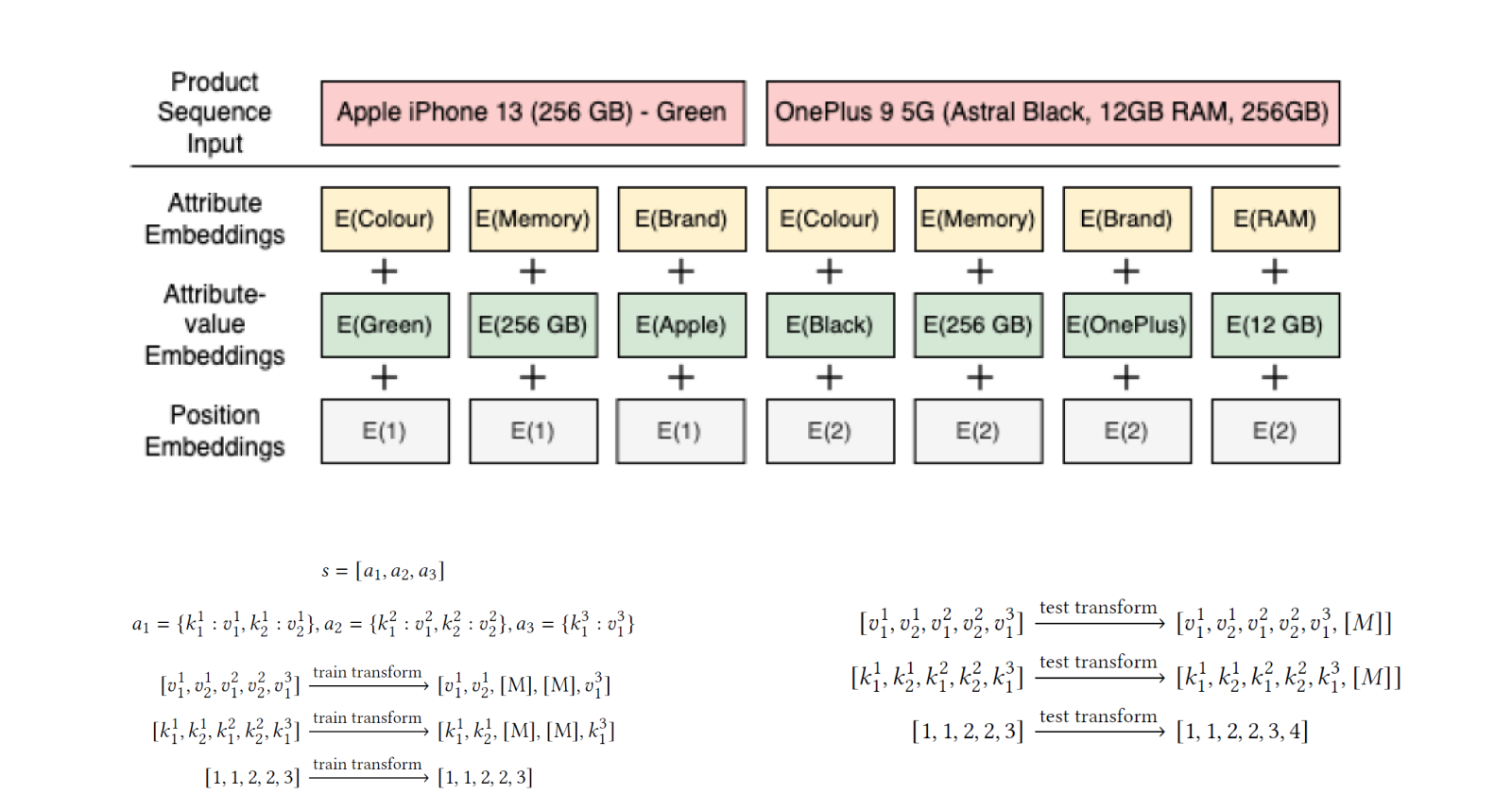

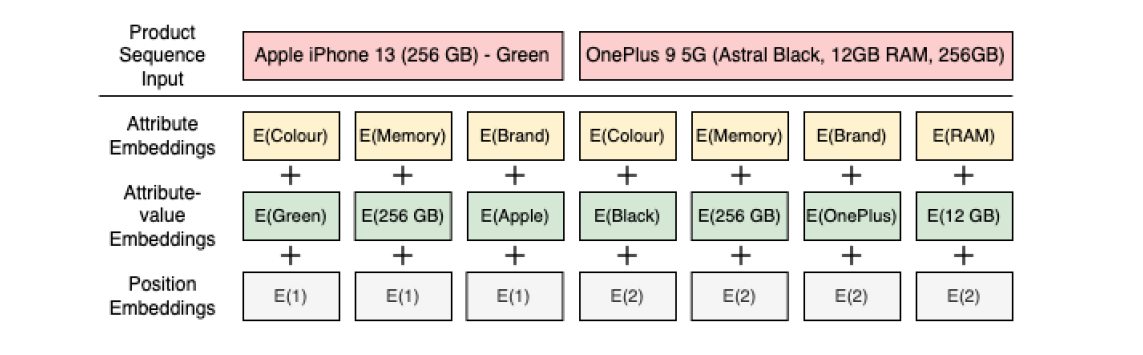

Product Representation

Two variants of the model are proposed:

AttriBERT: each product is represented only by its attribute-values, and

AttriBERT+: each product is represented by both its attributes and its attribute-values
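The difference between the two variants can be sketched as a tokenization choice. The token format below (an `attribute:value` string, optionally preceded by a separate attribute token) and the function name `tokenize_product` are assumptions made for illustration; the paper may combine attributes and values differently, for example at the embedding level.

```python
from typing import Dict, List


def tokenize_product(product: Dict[str, str], with_attributes: bool = False) -> List[str]:
    """Turn one product into a flat list of tokens.

    with_attributes=False mimics the AttriBERT idea (attribute-values only);
    with_attributes=True mimics the AttriBERT+ idea (attributes and attribute-values).
    """
    tokens: List[str] = []
    for attribute, value in product.items():
        if with_attributes:
            tokens.append(attribute)  # assumed separate attribute token
        tokens.append(f"{attribute}:{value}")  # attribute-value token
    return tokens


product = {"Brand": "Nike", "Color": "Black"}
plain_tokens = tokenize_product(product)
plus_tokens = tokenize_product(product, with_attributes=True)
```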

Positional Embedding

Each attribute-value is assigned a position index such that attribute-values of the i-th product are assigned position index of i.
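The position rule above can be sketched in a few lines: every token belonging to the i-th product shares the index i. The function name `position_indices` and the 1-based indexing are assumptions for illustration.

```python
from typing import List


def position_indices(session_tokens: List[List[str]]) -> List[int]:
    """Assign each token the (1-based) index of the product it belongs to."""
    positions: List[int] = []
    for i, tokens in enumerate(session_tokens, start=1):
        positions.extend([i] * len(tokens))
    return positions


session_tokens = [
    ["Brand:Nike", "Color:Black"],
    ["Brand:Adidas"],
    ["Brand:Nike", "Color:Blue", "Size:9"],
]
positions = position_indices(session_tokens)
```

Sharing one index per product means the order of attribute-values within a product carries no positional signal, only the order of products in the session does.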

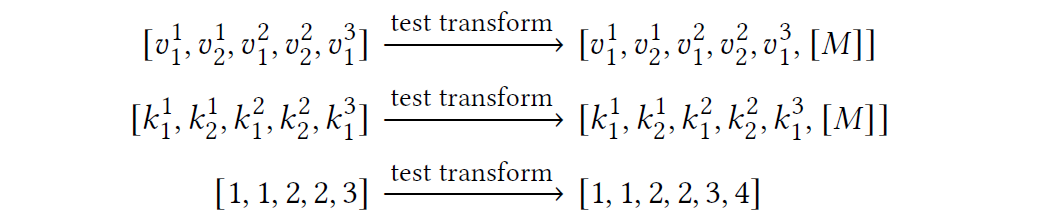

Training: Whole Product Attribute (WPA) Mask Modeling

For each product, with probability p, all of its attributes and attribute-values are masked (i.e., replaced with the special mask token "[M]"). During training, the model has to predict the original attribute-values corresponding to the masked tokens based on their left and right context.
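A minimal sketch of whole-product masking is shown below, assuming each product is already a list of tokens. The function name `wpa_mask`, the use of `None` for unmasked labels, and the passed-in random generator are illustrative choices, not the authors' implementation.

```python
import random
from typing import List, Optional, Tuple

MASK = "[M]"


def wpa_mask(
    session_tokens: List[List[str]], p: float, rng: random.Random
) -> Tuple[List[List[str]], List[List[Optional[str]]]]:
    """Mask every token of a product together, with probability p per product.

    Returns the masked session and, for each token, the original token as a
    training label (None where nothing was masked).
    """
    masked: List[List[str]] = []
    labels: List[List[Optional[str]]] = []
    for tokens in session_tokens:
        if rng.random() < p:
            masked.append([MASK] * len(tokens))  # hide the whole product
            labels.append(list(tokens))  # model must recover these
        else:
            masked.append(list(tokens))
            labels.append([None] * len(tokens))
    return masked, labels


session_tokens = [["Brand:Nike", "Color:Black"], ["Brand:Adidas", "Color:Blue"]]
masked, labels = wpa_mask(session_tokens, p=0.5, rng=random.Random(0))
```

Masking at the product level, rather than token by token, prevents the model from trivially copying one attribute-value of a product from its unmasked siblings.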

Output Projection

Given the output of the final encoder layer, and assuming \(v_t\) was masked, the masked product is predicted from the hidden state \(h_t\). A feedforward network with GELU activation is used to produce an output distribution over the target attribute-values:

$$P(v) = \mathrm{softmax}(\mathrm{GELU}(h_t^{\top} W + b) E^{\top} + b')$$

where \(W\) is the projection matrix, \(b\) and \(b'\) are bias terms, and \(E \in \mathbb{R}^{|V| \times d}\) is the embedding matrix for the attribute-value set \(V\) with embedding size \(d\).
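The projection can be traced numerically with a small pure-Python sketch. The toy dimensions (d = 2, |V| = 3), the parameter values, and the function names are assumptions chosen only to make the formula easy to follow; a real model would use a tensor library and learned parameters.

```python
import math
from typing import List


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def output_distribution(
    h: List[float],
    W: List[List[float]],
    b: List[float],
    E: List[List[float]],
    b_out: List[float],
) -> List[float]:
    """Compute softmax(GELU(h^T W + b) E^T + b') for one hidden state h."""
    d = len(h)
    # GELU(h^T W + b): a vector of size d
    projected = [
        gelu(sum(h[i] * W[i][j] for i in range(d)) + b[j]) for j in range(len(b))
    ]
    # Multiply by E^T (one row of E per attribute-value) and add b'
    logits = [
        sum(projected[j] * E[v][j] for j in range(len(projected))) + b_out[v]
        for v in range(len(E))
    ]
    return softmax(logits)


h = [1.0, -0.5]  # toy hidden state of the masked position
W = [[1.0, 0.0], [0.0, 1.0]]  # identity projection, for readability
b = [0.0, 0.0]
E = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 attribute-values, d = 2
b_out = [0.0, 0.0, 0.0]
probs = output_distribution(h, W, b, E, b_out)
```

Reusing the input embedding matrix \(E\) in the output layer means each attribute-value is scored by its similarity to the projected hidden state.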

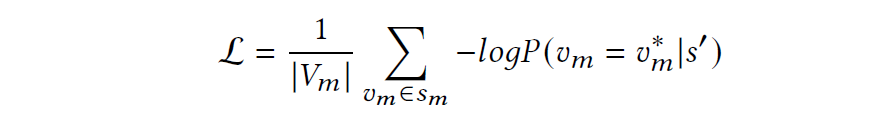

Loss

The loss is defined as the negative log-likelihood of the masked targets.
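The summary does not reproduce the exact formula, so the following is only a sketch of the usual masked-modelling form, as used in BERT4Rec. The notation is an assumption: \(S'\) is the masked session, \(M\) is the set of masked positions, and \(v_m^{*}\) is the original attribute-value at position \(m\).

$$\mathcal{L} = -\frac{1}{|M|} \sum_{m \in M} \log P(v_m = v_m^{*} \mid S')$$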

Inference/Prediction

As in BERT4Rec, the special token "[M]" is appended to the end of the sequence, and the attribute-values are then predicted from the final hidden representation of that "[M]" token.
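Building the inference input might look like the sketch below. It assumes a single appended "[M]" token that receives the position index of the next (unseen) product; the function name `build_inference_input` and the flat token layout are illustrative, not taken from the paper.

```python
from typing import List, Tuple

MASK = "[M]"


def build_inference_input(session_tokens: List[List[str]]) -> Tuple[List[str], List[int]]:
    """Flatten the session and append one [M] token for the product to predict."""
    tokens: List[str] = []
    positions: List[int] = []
    for i, product_tokens in enumerate(session_tokens, start=1):
        tokens.extend(product_tokens)
        positions.extend([i] * len(product_tokens))
    tokens.append(MASK)  # placeholder for the next product
    positions.append(len(session_tokens) + 1)  # assumed position index t + 1
    return tokens, positions


tokens, positions = build_inference_input(
    [["Brand:Nike", "Color:Black"], ["Brand:Nike", "Color:Blue"]]
)
```

The model's output distribution at the last position is then ranked to produce the recommended attribute-values.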

Data

Only sessions that end in a purchase are used to train the model, since training on non-purchase sessions could produce refinement recommendations that lead to session abandonment (non-conversion).

Metrics

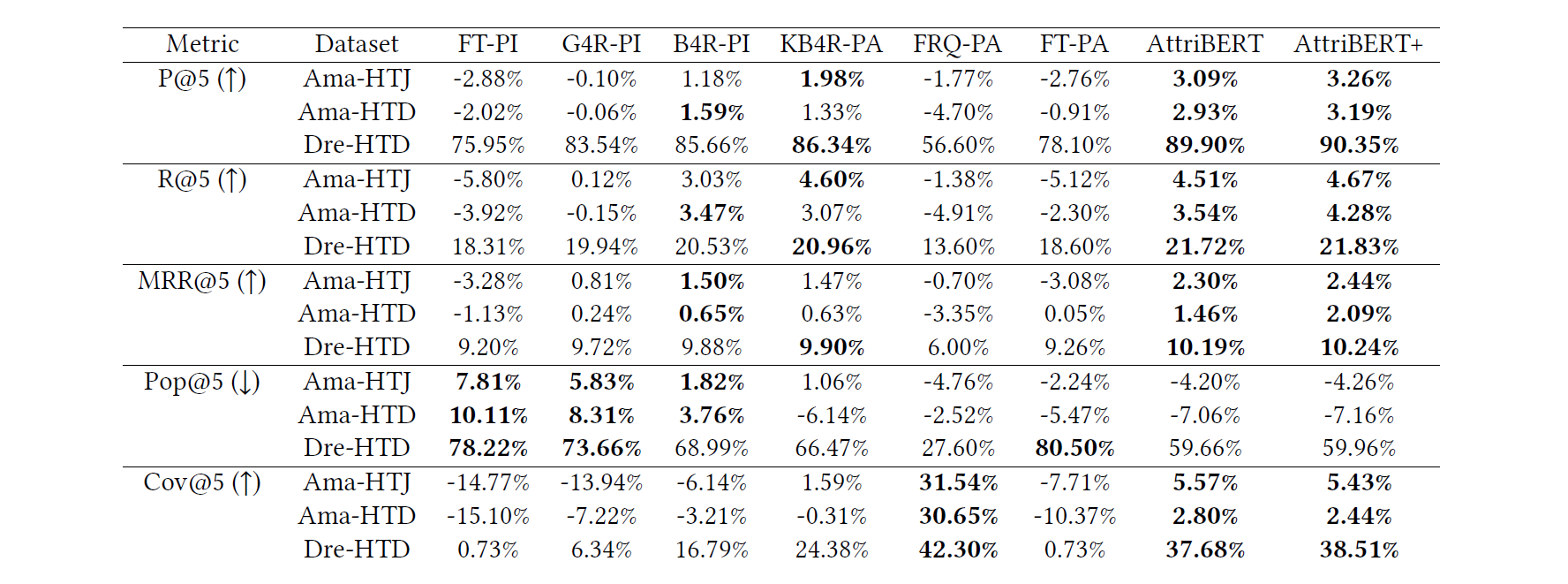

Three types of metrics are used for evaluation:

Customer behavior metrics: Precision, Recall and Mean Reciprocal Rank (MRR) quantify how well the model captures the attribute-value preferences of customers.

Diversity metrics: Coverage quantifies the percentage of unique attribute-values appearing in model predictions.

Popularity Bias (Pop): quantifies the tendency of the model to recommend popular (high-frequency) attribute-values.
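The customer behavior metrics and Coverage can be sketched with standard textbook definitions, shown below. The cutoff k, the function names, and the toy data are assumptions; the paper's exact definitions (including that of Pop, which is omitted here) may differ.

```python
from typing import Iterable, List, Set, Tuple


def precision_recall_at_k(ranked: List[str], relevant: Set[str], k: int) -> Tuple[float, float]:
    """Precision@k and Recall@k for one session's ranked attribute-values."""
    hits = sum(1 for v in ranked[:k] if v in relevant)
    return hits / k, hits / len(relevant)


def reciprocal_rank(ranked: List[str], relevant: Set[str]) -> float:
    """1 / rank of the first relevant item, or 0.0 if none is found.

    MRR is the mean of this value over all evaluated sessions.
    """
    for rank, v in enumerate(ranked, start=1):
        if v in relevant:
            return 1.0 / rank
    return 0.0


def coverage(all_predictions: Iterable[List[str]], vocabulary: Set[str]) -> float:
    """Fraction of the attribute-value vocabulary that appears in any prediction."""
    recommended = {v for preds in all_predictions for v in preds}
    return len(recommended & vocabulary) / len(vocabulary)


ranked = ["Color:Black", "Brand:Adidas", "Brand:Nike"]
relevant = {"Brand:Nike", "Color:Black"}
vocabulary = {"Color:Black", "Color:Blue", "Brand:Nike", "Brand:Adidas"}

precision, recall = precision_recall_at_k(ranked, relevant, k=2)
rr = reciprocal_rank(ranked, relevant)
cov = coverage([ranked[:2]], vocabulary)
```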

Results

The AttriBERT+ model outperforms the other models on most of the metrics.
