Order of fields in Structured output can hurt LLMs output

Dhaval Singh

Dhaval Singh

We at Seezo deal with structured output(SO) a lot, and hence I have a lot of interest in understanding what’s the best way to prompt LLMs, esp when constraining their output to JSON and how can it affects result.

Recently, I came across Let Me Speak Freely? A Study on the Impact of Format Restrictions on Performance of Large Language Models and a rebuttal blog Say What You Mean: A Response to 'Let Me Speak Freely' and another blog Structured outputs can hurt the performance of LLMs addressing the approach and questioning few things from both the paper and blog. These papers and blogs sparked a lot of discussions across the board and I found quite a bit of insightful comments on how folks deal with LLMs and SO. The one I found particulalry interesting was “Does the order of fields of your JSON affect the output?”.

While it does seem logically obvious, that if you allow the LLMs to reason before they answer, the results are much better, I couldn’t find any conclusive proof of it ie. Evals that supported this. So, i did a quick little eval, modifying the code shared in Structured outputs can hurt the performance of LLMs to verify the claims are real and if so, by how much.

The experiment

I picked 3 reasoning tasks:

GSM8K: A dataset from of grade school math word problems.

Last Letter: A dataset of simple word puzzles that require concatening the last letters of a list of names.

Shuffled Objects: A dataset that requires reasoning about the state of a system after a sequence of shuffling operations.

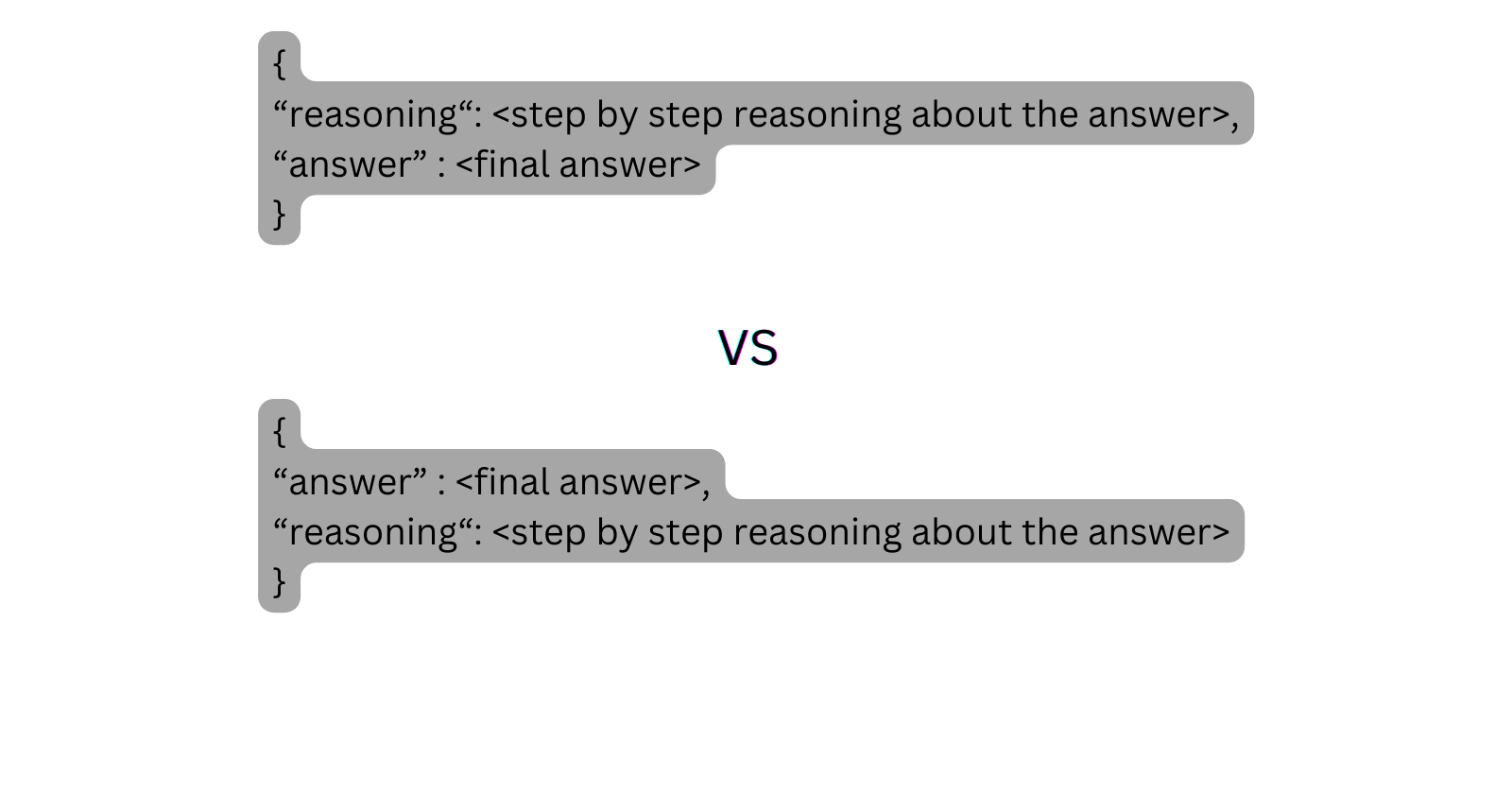

and all of the prompts ask the LLMs to respond in this format:

{

“reasoning“: <step by step reasoning about the answer>,

“answer” : <final answer>

}

I wanted to check what happens when you reverse the order ask the LLM to respond in

{

“answer” : <final answer>,

“reasoning“: <step by step reasoning about the answer>

}

For few-shot prompts, i changed the order of fields in the prompts as well in the reverse case.

Results

TLDR; Ordering the JSON fields in such a way that forces the LLM to reason first, improves the results by a huge margin!

GPT 4o-mini

| Dataset | Prompt Type | score_with_so_json_mode (Mean, CI) | score_with_so_json_mode_reverse (Mean, CI) |

| GSM8k | Zero-shot | 94.20% (92.15% - 96.25%) | 31.80% (27.71% - 35.89%) |

| GSM8k | Few-shot | 93.60% (91.45% - 95.75%) | 34.80% (30.62% - 38.98%) |

| Last Letter | Zero-shot | 90.00% (85.18% - 94.82%) | 2.67% (0.08% - 5.25%) |

| Last Letter | Few-shot | 91.33% (86.82% - 95.85%) | 6.00% (2.19% - 9.81%) |

| Shuffled Obj | Zero-shot | 79.67% (74.64% - 84.71%) | 14.23% (9.85% - 18.60%) |

| Shuffled Obj | Few-shot | 63.82% (57.80% - 69.84%) | 16.67% (12.00% - 21.33%) |

GPT 4o & GSM8K

Even for SOTA non-reasoning models, this has a significant difference!

| Dataset | Prompt Type | score_with_so_json_mode (Mean, CI) | score_with_so_json_mode_reverse (Mean, CI) |

| GSM8k | Zero-shot | 95.75% (94.67% - 96.84%) | 53.75% (51.06% - 56.44%) |

How to read the scores:

Mean Score: The proportion of correct predictions (e.g., if 90% of predictions are correct, the mean score is

0.9or90%).Confidence Interval (CI): A range that is likely to contain the true proportion of correct predictions, based on the sample data:

- Example: If the mean is

90%with a CI of[85%, 95%], you can be 95% confident the true performance lies between85%and95%.

- Example: If the mean is

Conclusion

While the data supports our intial hypothisis, which seemed obvious, the actual reason for me to do this experiment was to force myself(& others) to keep the ordering of JSON fields (which barely mattered before prompting) in mind when dealing with LLMs, esp in reasoning tasks.

These are not some simple gains, it’s massive.

You can check out the code i modified and used and change it to run your own evals. This also has an option to run it for llama-8b.

I had posted my intial results on reddit(which got some traction and u/fabiofumarola shared the paper: Order Matters in Hallucination: Reasoning Order as Benchmark and Reflexive Prompting for Large-Language-Models that talks about the same thing, in this comment!

There are even more things like ‘name of the JSON field‘, ‘What is the best generic order of fields for JSON‘, etc which can affect the results quite a bit. I plan on doing my own evals and sharing it in the future :)

I also share random things more frequently on my twitter, follow me if this seems interesting.

Subscribe to my newsletter

Read articles from Dhaval Singh directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by