How to Use Deepseek with a Private Ollama Server

Manas Singh

Introduction

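Ollama serves open models such as Deepseek-R1 on your own hardware, and Tailscale's VPN makes that server reachable from your other devices without exposing it to the internet. Together they give you a private AI model server that is both accessible and secure, for local chat, code assistance, and remote access from mobile devices.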

Ollama

Let's start by installing Ollama, which serves large language models locally. Install Ollama on the server machine using the official installer or your system's package manager, then pull a model that fits the machine's memory. The distilled Deepseek-R1 7B and 8B models run comfortably on a MacBook Pro with 16 GB of memory. After installation, make sure Ollama is working:

```bash
# Choose the model that can run on your hardware
ollama run deepseek-r1:7b
```
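To confirm the model was pulled without opening an interactive chat, you can filter the output of `ollama list`. This is a minimal bash sketch that assumes the default `ollama list` layout (a NAME/ID/SIZE/MODIFIED header row, then one model per line); `model_tags` is a hypothetical helper name, not part of Ollama.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print one model tag per line from `ollama list` output on stdin.
# Assumes the first line is the NAME/ID/SIZE/MODIFIED header and the tag is the first column.
model_tags() {
  awk 'NR > 1 && NF > 0 { print $1 }'
}

# Usage on the server (requires Ollama to be installed):
#   ollama list | model_tags | grep -qx 'deepseek-r1:7b' && echo "deepseek-r1:7b is installed"
# The same information is available as JSON from Ollama's REST API:
#   curl -s http://localhost:11434/api/tags
```

Reading from stdin keeps the helper independent of a running server, so the same filter also works on saved `ollama list` output.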

Local AI Code Assistant

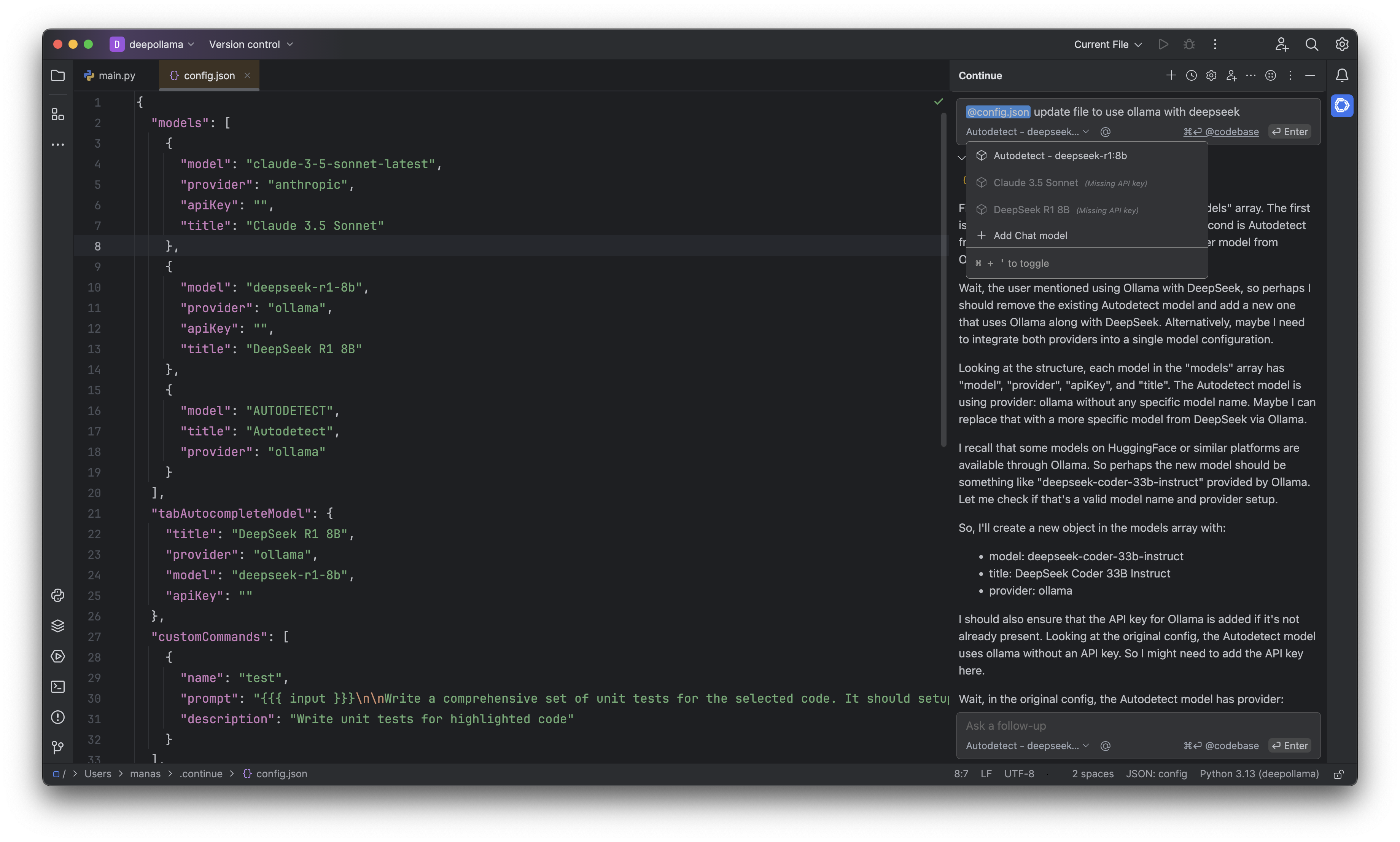

With the Ollama server running, you can use it for code assistance, for example through the open-source Continue extension for VS Code and JetBrains IDEs such as IntelliJ. To use the local model, configure Continue's config.json (by default ~/.continue/config.json on macOS and Linux).

Interestingly, you can use Deepseek to edit config.json itself: add config.json to the chat context, select the Deepseek model, and then enter the prompt:

update file to use ollama with deepseek
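For reference, a Continue model entry that points at Ollama looks roughly like the JSON printed below. The field names (`title`, `provider`, `model`, `apiBase`) follow Continue's config.json schema as far as I know; check them against your Continue version. `continue_ollama_entry` is a hypothetical bash helper that only prints the entry, so it never overwrites your existing config; paste its output into the `models` array yourself.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a Continue "models" entry for a model served by Ollama.
# $1 = Ollama model tag, $2 = Ollama base URL (defaults to the local server).
continue_ollama_entry() {
  local model="$1"
  local api_base="${2:-http://localhost:11434}"
  cat <<EOF
{
  "title": "Deepseek R1 ($model)",
  "provider": "ollama",
  "model": "$model",
  "apiBase": "$api_base"
}
EOF
}

# Usage: continue_ollama_entry deepseek-r1:7b
```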

Here’s a screenshot of the chat

Tailscale

Tailscale creates a private, WireGuard-based network (a "tailnet") between your devices. Unlike a tunneling service such as ngrok, it does not expose the server to the public internet, which makes it a more secure alternative. Install Tailscale on the server and on each client using your preferred method: the App Store or Homebrew on macOS, or a package manager or installer on Linux and Windows. Then log in with a supported identity provider, such as Google.

Connect Devices

If you prefer not to use the CLI, you can add devices from the Tailscale admin console. Apps are available for iOS, Android, and other operating systems.

Note the Tailscale IP address or MagicDNS hostname of the server machine and of the mobile devices.
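On the server, `tailscale ip -4` prints its Tailscale IPv4 address, and `tailscale status` lists the devices on your tailnet. Tailscale assigns IPv4 addresses from the 100.64.0.0/10 range, so a quick sanity check is that the address you noted falls inside it. This is a minimal bash sketch; `is_tailscale_ipv4` is a hypothetical helper and checks only the first two octets.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 looks like an IPv4 address in 100.64.0.0/10,
# the range Tailscale assigns addresses from. Only the first two octets are checked.
is_tailscale_ipv4() {
  local a b rest
  IFS=. read -r a b rest <<<"$1"
  [[ "$a" == "100" && "$b" =~ ^[0-9]{1,3}$ ]] || return 1
  # 100.64.0.0/10 covers second octets 64 through 127; 10# avoids octal parsing of e.g. "099".
  (( 10#$b >= 64 && 10#$b <= 127 ))
}

# Usage on the server:
#   ip=$(tailscale ip -4)
#   is_tailscale_ipv4 "$ip" && echo "Server Tailscale IP: $ip"
```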

Configure Ollama to Listen on the Tailscale Network

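```bash
# macOS example: make Ollama listen on the server's Tailscale address
launchctl setenv OLLAMA_HOST "<tailscale hostname>"

# Ollama uses port 11434 unless you append one, e.g. "<tailscale hostname>:11434".
# Restart the Ollama app afterwards so it picks up the new value.
```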
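Clients on the tailnet need a full URL rather than the bare OLLAMA_HOST value. This bash sketch turns a `host` or `host:port` value into a base URL, assuming Ollama's default port 11434 when none is given; `ollama_base_url` is a hypothetical helper and does not handle IPv6 addresses. `launchctl getenv` reads back the value set with `launchctl setenv`, and `/api/version` is Ollama's version endpoint.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn an OLLAMA_HOST-style value (host or host:port) into a base URL.
# Assumes IPv4 addresses or hostnames only; the port defaults to Ollama's 11434.
ollama_base_url() {
  local host="${1#http://}"   # tolerate a value that already starts with http://
  case "$host" in
    *:*) printf 'http://%s\n' "$host" ;;
    *)   printf 'http://%s:11434\n' "$host" ;;
  esac
}

# Usage on macOS, after restarting the Ollama app:
#   url=$(ollama_base_url "$(launchctl getenv OLLAMA_HOST)")
#   curl -s "$url/api/version"
```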

Access Models Across Devices

Ensure Ollama is running. The Ollama desktop app starts the server automatically; otherwise, start it manually:

```bash
ollama serve
```
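To check from another device on the tailnet that the server is reachable, you can query Ollama's root endpoint, which replies with the plain text "Ollama is running". This is a bash sketch using curl; `ollama_is_up` is a hypothetical helper, and the 3-second timeout is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if an Ollama server answers at base URL $1.
# Ollama's root endpoint returns the text "Ollama is running".
ollama_is_up() {
  local base_url="$1"
  curl --silent --max-time 3 "$base_url/" | grep -q "Ollama is running"
}

# Usage from any device on the tailnet:
#   ollama_is_up "http://<tailscale hostname>:11434" && echo "reachable" || echo "not reachable"
```

If this fails while the server responds locally, check that OLLAMA_HOST took effect (without it, Ollama listens only on 127.0.0.1) and that Tailscale is connected on both devices.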

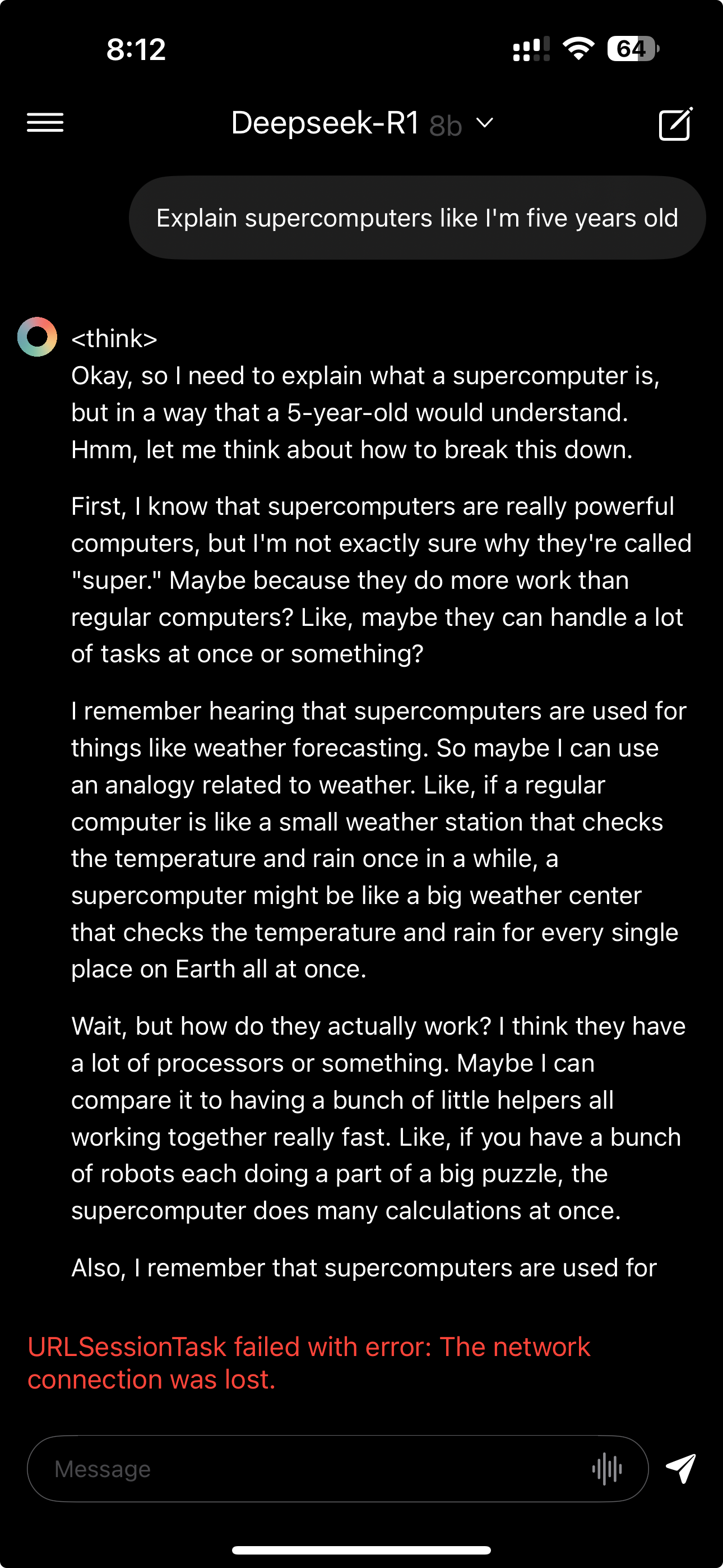

Next, install an Ollama-compatible app on the mobile device; Enchanted works well on iOS. Make sure Tailscale is connected on the phone, then in the app's settings set the Ollama server to the server's Tailscale IP or hostname on port 11434, for example http://<tailscale hostname>:11434.

You can now use the models on your private Ollama server from the mobile device.
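An Ollama client app talks to the server's REST API. This bash sketch sends a non-streaming request to Ollama's `/api/chat` endpoint with curl, which is handy for testing the remote setup from a laptop on the tailnet; it is not taken from Enchanted. `chat_payload` and `ollama_chat` are hypothetical helpers, and the escaping covers only double quotes and backslashes, so it assumes a single-line prompt.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a non-streaming /api/chat request body.
# Escapes backslashes and double quotes only, so the prompt must be a single line.
chat_payload() {
  local model="$1" prompt
  prompt=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"stream":false}' \
    "$model" "$prompt"
}

# Hypothetical helper: send one chat message to the Ollama server at base URL $1.
ollama_chat() {
  local base_url="$1" model="$2" prompt="$3"
  curl --silent "$base_url/api/chat" -d "$(chat_payload "$model" "$prompt")"
}

# Usage from a device on the tailnet:
#   ollama_chat "http://<tailscale hostname>:11434" deepseek-r1:7b "Explain what a tailnet is"
```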

Written by

Manas Singh

14+ Years in Enterprise Storage & Virtualization | Python Test Automation | Leading Quality Engineering at Scale