Understanding Embeddings: How AI Learns Meaning from Text, Images, and Data

Raghul M

Hey Everyone 👋🏻 !

In my previous blog, we explored how Transformers work and how they revolutionized modern AI, paving the way for major advancements.

Today, let's dive into embeddings—the foundation of Large Language Models (LLMs). We'll cover how embeddings work, different types of embeddings, and their applications. Let's get started!

What is an Embedding?

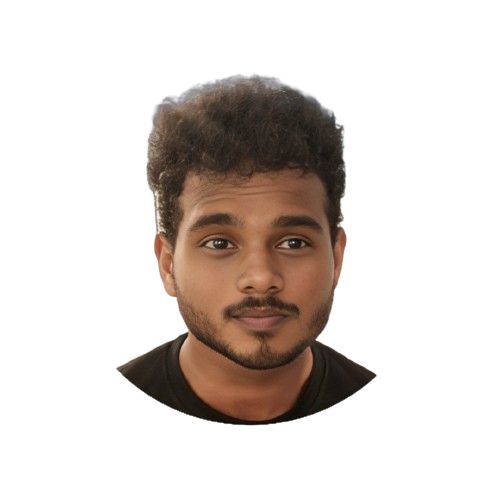

An embedding is a numerical representation of data (words, sentences, images, videos, audio, documents) in a vector format within a multidimensional space. These representations capture meaning and relationships between data points.

It is also known as a vector embedding.

📌 Example:

Image sources : towardsdatascience.com

Words with similar meanings—like "king" and "queen"—will have embeddings that are closer together in vector space.
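To make "closer together" concrete, here is a minimal sketch that compares vectors with cosine similarity, one common closeness measure. The three-dimensional vectors are made up by hand for illustration; real models learn vectors with hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, hand-picked for illustration only.
embeddings = {
    "king":  [0.90, 0.80, 0.10],
    "queen": [0.85, 0.90, 0.15],
    "apple": [0.10, 0.20, 0.95],
}

king_queen = cosine_similarity(embeddings["king"], embeddings["queen"])
king_apple = cosine_similarity(embeddings["king"], embeddings["apple"])

print(f"king vs queen: {king_queen:.3f}")
print(f"king vs apple: {king_apple:.3f}")
```

With these toy values, "king" scores much higher against "queen" than against "apple", which is the behavior you would expect from a trained model.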

Types of Embeddings

Word Embeddings

Represent individual words in a multi-dimensional space.

Capture relationships between words.

📌 Models: Word2Vec, GloVe, FastText (by Facebook)

📌 Use Cases: Machine translation, chatbots, search engines

Text Embeddings

- Represent longer texts (phrases, sentences, paragraphs, or documents) as vectors.

📌 Models: BERT, Doc2Vec (also known as Paragraph Vector)

📌 Use Cases: Text classification, sentiment analysis

Note:

Word embeddings focus on individual words.

Text embeddings capture the meaning of entire texts.

Sentence Embeddings

Represent entire sentences in vector form.

Capture both meaning and context.

Similar sentences have embeddings that are closer together.

📌 Models: Sentence-BERT (SBERT), Universal Sentence Encoder (USE, by Google), InferSent

📌 Use Cases: Semantic search, text retrieval

Note: Sentence embeddings are a type of text embedding that focuses specifically on whole sentences.
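One simple way to see how word vectors can become a sentence vector is mean pooling: average the vectors of the words in the sentence. This is only a baseline sketch, not how Sentence-BERT works internally, and the word vectors below are hypothetical values chosen by hand.

```python
# Hypothetical 2-dimensional word vectors, chosen by hand for illustration.
word_vectors = {
    "i": [0.1, 0.3],
    "love": [0.8, 0.6],
    "ai": [0.5, 0.9],
}

def sentence_embedding(sentence, vectors):
    """Average the vectors of the known words in a sentence (mean pooling)."""
    words = [w for w in sentence.lower().split() if w in vectors]
    if not words:
        raise ValueError("no known words in sentence")
    dim = len(next(iter(vectors.values())))
    return [sum(vectors[w][i] for w in words) / len(words) for i in range(dim)]

sentence_vector = sentence_embedding("I love AI", word_vectors)
print(sentence_vector)
```

Plain averaging ignores word order, so "dog bites man" and "man bites dog" get the same vector. That limitation is one reason dedicated sentence embedding models exist.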

Image Embeddings

Convert images into feature vectors.

Help with image similarity search and object recognition.

📌 Models: CNNs (ResNet, VGG), CLIP

📌 Use Cases: Image search, object detection

Graph Embeddings

Represent nodes, edges, or entire graphs as vectors.

Used in social networks, fraud detection, and recommendation systems.

📌 Models: Node2Vec, GraphSAGE

📌 Use Cases: Fraud detection, social network analysis
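Node2Vec learns node vectors from random walks over the graph, which are then treated like sentences for a Word2Vec-style model. The sketch below covers only the walk-generation step, and it uses plain uniform walks rather than Node2Vec's biased walks. The graph and the names in it are hypothetical.

```python
import random

# Hypothetical toy social graph as an adjacency list.
graph = {
    "alice": ["bob", "carol"],
    "bob": ["alice", "carol"],
    "carol": ["alice", "bob", "dave"],
    "dave": ["carol"],
}

def random_walk(graph, start, length, rng):
    """Visit up to `length` nodes from `start`, picking each next neighbor uniformly."""
    walk = [start]
    while len(walk) < length:
        neighbors = graph[walk[-1]]
        if not neighbors:
            break  # dead end: stop early
        walk.append(rng.choice(neighbors))
    return walk

rng = random.Random(0)  # fixed seed so the walks are repeatable
walks = [random_walk(graph, node, 5, rng) for node in graph]
for walk in walks:
    print(" -> ".join(walk))
```

Nodes that often appear near each other in these walks would end up with similar vectors once the walks are fed to the embedding model.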

Video Embeddings

- Convert both spatial (image) and temporal (motion) features into a meaningful vector sequence.

📌 Models: C3D, CLIP (for video)

📌 Use Cases: Video search, activity recognition

Audio Embeddings

Convert sound waves into vector representations.

Capture pitch, tone, and speech meaning.

📌 Models: Wav2Vec, OpenL3

📌 Use Cases: Speech recognition, music classification

Note: All these embeddings fall under vector embeddings.

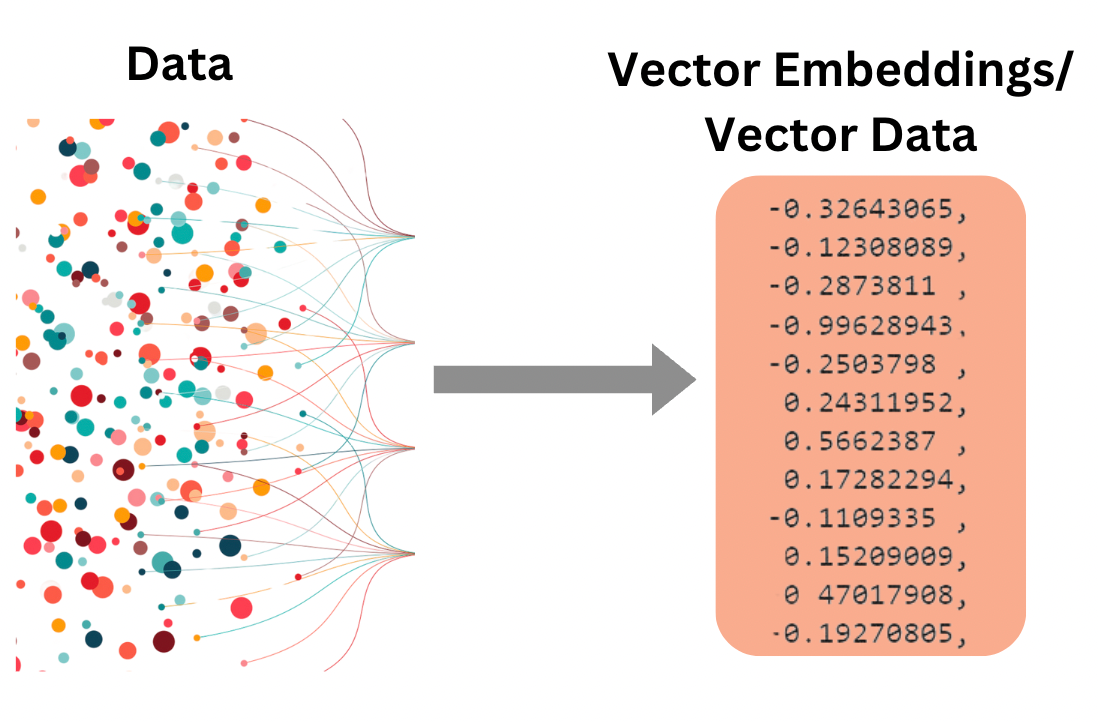

Shared Embedding Space

A shared embedding space is a common vector space where different types of data (e.g., text & images) are mapped close together if they are related.

Image sources : dailydoseofds.com

📌 Example: CLIP (Contrastive Language-Image Pretraining)

- Developed by OpenAI to create a shared embedding space for images & text.

- Allows images and textual descriptions to be compared directly.

Use Case: You can search for images using text descriptions!
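Here is a toy sketch of text-to-image search in a shared space. It assumes the text query and the images have already been encoded into the same vector space; the vectors and file names are hypothetical stand-ins, and no real CLIP model is called.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical image embeddings (in practice, produced by an image encoder).
image_embeddings = {
    "dog_on_beach.png": [0.85, 0.15, 0.75],
    "cat_on_sofa.png":  [0.10, 0.90, 0.05],
    "city_skyline.png": [0.10, 0.30, 0.90],
}

# Hypothetical embedding of the query "a dog playing on the beach"
# (in practice, produced by the matching text encoder).
query_embedding = [0.90, 0.10, 0.80]

# Rank images by similarity to the text query, best match first.
ranked = sorted(
    ((name, cosine_similarity(query_embedding, vec)) for name, vec in image_embeddings.items()),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, score in ranked:
    print(f"{name}: {score:.3f}")
```

Because both kinds of data live in one space, the same similarity function works across text and images.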

Applications of Embeddings

Image sources : lyzr.ai

Large Language Models (LLMs): Convert input tokens into token embeddings.

Semantic Search: Retrieves similar sentences to improve search relevance.

RAG (Retrieval-Augmented Generation): Uses sentence embeddings to retrieve relevant text.

Recommendations: Finds similar products using vector search.

Anomaly Detection: Identifies unusual patterns in data.
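As one example from the list above, anomaly detection can be sketched as "flag the vectors that sit far from everything else". The version below measures distance from the average vector (the centroid). The transaction embeddings and the threshold are assumptions picked for illustration, not values from a real system.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def centroid(vectors):
    """Component-wise average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

# Hypothetical embeddings of transactions; txn_4 is deliberately placed far away.
points = {
    "txn_1": [0.20, 0.30],
    "txn_2": [0.25, 0.28],
    "txn_3": [0.22, 0.35],
    "txn_4": [0.90, 0.95],
}

center = centroid(list(points.values()))
distances = {name: euclidean_distance(vec, center) for name, vec in points.items()}

threshold = 0.5  # assumed cutoff; in practice this is tuned on real data
anomalies = [name for name, dist in distances.items() if dist > threshold]
print(anomalies)
```

Only the outlying transaction exceeds the cutoff here. Semantic search and recommendations follow the opposite pattern: they look for the nearest vectors instead of the farthest ones.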

Famous Word Embedding Models

Image sources : medium.com

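Word2Vec → Learns word vectors by predicting a word from its surrounding words (CBOW) or the surrounding words from a word (skip-gram). Developed by Google.

GloVe → Learns word vectors from global word co-occurrence counts across the whole corpus instead of predicting words one context window at a time. Developed by Stanford.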
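The difference between the two is easier to see in the data each one starts from. The sketch below builds Word2Vec-style (context, target) training pairs and GloVe-style co-occurrence counts from one toy sentence. The window size is an assumption, raw counts are a simplification, and no training happens here.

```python
from collections import Counter

sentence = "the cat sat on the mat".split()
window = 1  # assumed context size: one word on each side

# Word2Vec (CBOW flavor): pairs of (context words, target word to predict).
cbow_pairs = []
for i, target in enumerate(sentence):
    context = sentence[max(0, i - window):i] + sentence[i + 1:i + 1 + window]
    cbow_pairs.append((context, target))

# GloVe-style input: how often each (word, neighbor) pair occurs within the window.
cooccurrence = Counter()
for context, target in cbow_pairs:
    for neighbor in context:
        cooccurrence[(target, neighbor)] += 1

print(cbow_pairs[1])                  # context and target for the word "cat"
print(cooccurrence[("the", "cat")])   # times "cat" appears next to "the"
```

Word2Vec would train a small network on pairs like these one at a time, while GloVe would fit vectors to the aggregated count table.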

Let's Understand Tokens

Image sources : edenai.co

What is a Token?

A token is a small unit of text used in NLP models. It can be:

A word (e.g., "cat")

A subword (e.g., "play" and "ing" in "playing")

A character (e.g., "C", "a", "t")

A symbol or punctuation (e.g., "!")

Example:

- Sentence: "I love AI!"

- Tokens: ["I", "love", "AI", "!"]
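The split above can be reproduced with a tiny word-and-punctuation tokenizer. This is a minimal sketch using a regular expression; the function name `simple_tokenize` is made up for this example.

```python
import re

def simple_tokenize(text):
    """Split text into runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

tokens = simple_tokenize("I love AI!")
print(tokens)  # ['I', 'love', 'AI', '!']
```

Tokenizers used by LLMs usually rely on a learned subword vocabulary instead, so they may split the same sentence differently.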

Token vs. Embedding

| Feature | Token | Embedding |
| --- | --- | --- |
| Definition | A unit of text (word, subword, character) | A numeric vector representing meaning |
| Format | Text | Numbers (vector) |
| Example | "cat" → Token | "cat" → [0.23, 0.87, -0.45, ...] |

Tokens help models read text.

Embeddings help models understand meaning.
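Here is a rough sketch of how a token turns into an embedding: each token gets an integer ID, and the ID selects a row in an embedding table. The table below is filled with random numbers as a stand-in; in a real model those values are learned during training, and the vocabulary is far larger.

```python
import random

tokens = ["I", "love", "AI", "!"]

# Step 1: build a tiny vocabulary that maps each distinct token to an integer ID.
vocab = {token: idx for idx, token in enumerate(sorted(set(tokens)))}

# Step 2: create an embedding table with one row per ID.
# Random values stand in for weights a real model would learn.
embedding_dim = 4  # assumed size, kept small for readability
rng = random.Random(42)
embedding_table = [
    [round(rng.uniform(-1, 1), 2) for _ in range(embedding_dim)]
    for _ in vocab
]

# Step 3: look up each token's vector by its ID.
token_ids = [vocab[token] for token in tokens]
token_embeddings = [embedding_table[token_id] for token_id in token_ids]

for token, token_id, vector in zip(tokens, token_ids, token_embeddings):
    print(f"{token!r} -> id {token_id} -> {vector}")
```

The lookup itself is just indexing. All of the "meaning" lives in the learned values of the table.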

Example code (how embeddings work): https://github.com/Raghul-M/GenAI/blob/main/Embedings/Token-Embeddings.ipynb

Conclusion

Embeddings have become a fundamental building block in modern AI, enabling machines to understand and represent complex data in a way that drives advancements in natural language processing, search, and more. Their ability to convert words, sentences, or documents into numerical vectors allows for more efficient and accurate tasks like similarity search and classification. As technology evolves, embeddings will continue to play a crucial role in shaping the future of AI.

Connect with me on LinkedIn: Raghul M

Written by

Raghul M

I'm the founder of CareerPod, a Software Quality Engineer at Red Hat, Python Developer, Cloud & DevOps Enthusiast, AI/ML Advocate, and Tech Enthusiast. I enjoy building projects, sharing valuable tips for new programmers, and connecting with the tech community. Check out my blog at Tech Journal.