Application Security in GKE Enterprise

Tim Berry

This is the ninth post in a series exploring the features of GKE Enterprise, formerly known as Anthos. GKE Enterprise is an additional subscription service for GKE that adds configuration and policy management, service mesh and other features to support running Kubernetes workloads in Google Cloud, on other clouds and even on-premises. If you missed the first post, you might want to start there.

Regardless of where our clusters run or how our applications are configured, security must be a fundamental consideration in any design. The power and flexibility of Kubernetes can bring an increased level of complexity, enlarging attack surfaces and making security considerations all the more important. In my last post, I explored how Cloud Service Mesh can help us to identify our workloads and then encrypt and control their communication. Even though we’ve completed our Service Mesh journey in this series, we’ll continue the topic of security in this post and learn about some of the most useful Kubernetes features that can help us keep our workloads secure. Heads up that some of these are specific to GKE, and some are generally available in Kubernetes.

The one thing we cannot afford to do is be complacent about security! A long time ago people used to make terrible mistakes like assuming an application was secure if it was “behind the firewall”, but as we know, the Internet is a zero-trust environment and should be treated as such. The Cloud Native Computing Foundation (CNCF) summarises this with its “4 Cs” that we should care about when it comes to security: Code, Container, Cluster and Cloud. It’s our job to secure each of these things to the best of our ability!

To achieve this, we’re going to learn the following things in this post:

Leveraging Workload Identity for your apps

Trusting container deployments with Binary Authorization

Integrating other Google Cloud security tools

Securing Traffic with Kubernetes Network Policies

Of course, we can’t cover every Kubernetes security topic in this post! We’re going to investigate some of these specialised areas, but you should already have a solid grounding in Kubernetes security concepts such as Role-Based Access Control (RBAC) and namespaces. If you need a quick brush up, I’d recommend reviewing the documentation here: https://kubernetes.io/docs/concepts/security/

Leveraging Workload Identity for your apps

I’ve mentioned Workload Identity a few times already in this series of posts, and chances are that you have it enabled by default in your fleet settings. Like most Google products, Workload Identity has a self-explanatory name, and provides your workloads with an identity. But why is that important?

One of the most fundamental security concepts you should embed in your system designs is the principle of least privilege. Simply put: this principle states that any component of a system should be granted only the permissions it requires to perform its function, and nothing greater. Okay, but why is that important?

You can consider any component in your system as a potential attack surface, vulnerable to being compromised and used as a jumping off point into other parts of your system. The permissions that a single component is granted will determine what other parts of your system it can access and to what extent (for example, read only or read and write). By reducing the permissions granted to each component you are therefore reducing the size of that attack surface.

Historically, when Google’s Cloud IAM was in its infancy, the principle of least privilege was difficult to achieve. All Google Cloud services run with a service account as an identity, and it was common for compute services (including GKE nodes) to run with the default Compute Engine service account. Some early Google Cloud engineer, in their naivety, figured that this service account should have the “Project Editor” role. The upshot was that any compromised service suddenly had complete access to everything in a project: all the other services, all the data, all the APIs.

Fast forward to today when we have an extremely well structured and powerful Cloud IAM service, and a set of carefully crafted predefined roles. Now when we need a workload to access something, we can define an IAM binding that grants a specific set of permissions only, therefore achieving the principle of least privilege. All we have to do is identify ourselves as the service account to which the IAM roles have been bound.

This used to mean downloading and managing service account keys, but this created an additional attack surface! If you accidentally left your keys lying around, someone could use them to essentially steal the identity of a service account and access any systems for which it had permissions. However, recently all of the compute platforms in Google Cloud have been improved so that manual keys are no longer required. Instead, the platform itself will manage a short-lived token to provide access to a service account.

Workload Identity Federation

This capability has now been extended to Kubernetes service accounts within GKE (note that these are Kubernetes objects inside your cluster, not Cloud IAM service accounts – although the two can be used together, more on that in a moment!)

When you run a Pod workload with a Kubernetes service account identity, you can now create an IAM binding for that identity to specific IAM roles and permissions. For example, if your Pod workload needs to access the Cloud Storage API to write to a specific bucket, you can grant the specific granular permission to allow this with an IAM binding, rather than using the default service account of the cluster node.

To do this, you reference the Kubernetes service account as a principal in the membership of the IAM policy binding. The membership identifier is a bit cumbersome however, as it contains your project number, the name of your workload identity pool, a namespace and the name of the Kubernetes service account.

Here’s an example where the project number is 123456123456 and the project ID is my-project, which makes the workload identity pool my-project.svc.id.goog. Assuming we have a namespace called frontend and a Kubernetes service account called fe-web-sa, this would make the identifier:

principal://iam.googleapis.com/projects/123456123456/locations/global/workloadIdentityPools/my-project.svc.id.goog/subject/ns/frontend/sa/fe-web-sa
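Because the identifier is so long, it can help to assemble it from its parts rather than typing it by hand. Here’s a minimal Python sketch of that idea. The function name gke_workload_principal is made up for this post, and it assumes the pool name always follows the <project-id>.svc.id.goog pattern described above:

```python
def gke_workload_principal(project_number: str, project_id: str,
                           namespace: str, service_account: str) -> str:
    """Build the IAM principal identifier for a Kubernetes service account.

    Hypothetical helper for illustration; not part of any Google library.
    """
    pool = f"{project_id}.svc.id.goog"  # workload identity pool name
    return (
        "principal://iam.googleapis.com/"
        f"projects/{project_number}/locations/global/"
        f"workloadIdentityPools/{pool}/"
        f"subject/ns/{namespace}/sa/{service_account}"
    )


# Values taken from the example in this post.
principal = gke_workload_principal(
    "123456123456", "my-project", "frontend", "fe-web-sa"
)
print(principal)
```

Notice that nothing in the identifier names a cluster: only the project, pool, namespace and service account name are involved.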

Federated identity works in all GKE clusters, but clusters in fleets have an additional advantage. Rather than an identity pool spanning a single cluster, all clusters attached to a fleet share the same fleet-wide pool. This means that you can create an IAM binding just once, and it will be applied to all clusters in the fleet where the specified namespace and service account name match (bonus points if you remember the concept of sameness that I talked about very early on in this series!)

Let’s walk through an example of creating a workload that accesses the Cloud Storage API to demonstrate some best practices. We’ll assume we have a storage bucket that contains some files, and a GKE cluster with Workload Identity enabled. In this example, we’ll add the IAM policy binding at the level of an individual bucket, not at the project level.

Following the examples we used earlier to explain the IAM principal membership, let’s go ahead and create a namespace called frontend and a Kubernetes service account called fe-web-sa:

kubectl create namespace frontend

kubectl -n frontend create serviceaccount fe-web-sa

Now that we’ve created a Kubernetes service account, we can simply reference it as the member (or principal) in an IAM policy binding. In this example, we’ll grant the Storage Object User role to a bucket called frontend-files. This predefined IAM role grants access to create, view, list, update and delete objects and their metadata, but it doesn’t grant the user any further permissions to manage ACLs or IAM policies. This is an example of just the right level of privilege for a use-case where a workload needs read-write access to objects in a bucket, without being able to change the bucket itself.

We’ll create the binding with this command:

gcloud storage buckets add-iam-policy-binding gs://frontend-files \
  --member=principal://iam.googleapis.com/projects/123456123456/locations/global/workloadIdentityPools/my-project.svc.id.goog/subject/ns/frontend/sa/fe-web-sa \
  --role=roles/storage.objectUser

Now, let’s create a workload that actually uses these permissions. In a real-world use case, we can perhaps imagine a front-end web server Pod that grabs its files from Cloud Storage on startup. For testing purposes, we’ll just spin up a Pod that contains the gcloud tool so we can test if our permissions work. We’ll define the Pod as follows:

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
  namespace: frontend
spec:
  serviceAccountName: fe-web-sa
  containers:
  - name: test-pod
    image: google/cloud-sdk:slim
    command: ["sleep","infinity"]

Once the container is up and running, we can test permissions by running some gcloud commands that should only be possible with the correct IAM binding. For example, we could list the contents of the bucket:

kubectl -n frontend exec -it test-pod -- gcloud storage ls gs://frontend-files

Or try to create a new file by copying one that already exists inside the bucket:

kubectl -n frontend exec -it test-pod -- gcloud storage cp gs://frontend-files/test1.txt gs://frontend-files/test2.txt

Of course, this isn’t the sort of thing your workloads are likely to be doing, but the key thing is that our Pod uses its service identity to get the permissions it needs, and only those permissions. Thanks to identity federation, we were able to treat our Kubernetes service account as a principal in an IAM binding just like we would with a Cloud IAM service account.

However, at the time of writing there were some limitations with specific APIs and the extent to which federated identities could be used with them. You can find these details documented here: https://cloud.google.com/iam/docs/federated-identity-supported-services

Identity with unsupported APIs

If you need to control access to a specific Google Cloud API in a way that is not supported by federated identity, you can still fall back to a legacy method that achieves the same thing. In this method, we create a Cloud IAM service account with the correct IAM bindings and permissions, then we allow the Kubernetes service account to impersonate the IAM service account.

There are a few more moving parts to this process. Assuming we have already created our Kubernetes service account fe-web-sa and our workload that uses that service account, we now need to create a matching Cloud IAM service account, which in this case we’ll call iam-fe-web-sa:

gcloud iam service-accounts create iam-fe-web-sa

In this scenario, we add the policy bindings to the Cloud IAM service account. So, following our previous example, we’ll grant it the Storage Object User role on the same bucket as before. Remember that Cloud IAM service accounts are identified by an email address in the format of <service-account-name>@<project-id>.iam.gserviceaccount.com, which makes our command:

gcloud storage buckets add-iam-policy-binding gs://frontend-files \
  --member=serviceAccount:iam-fe-web-sa@my-project.iam.gserviceaccount.com \
  --role=roles/storage.objectUser

Next, we need to create an IAM policy that allows the Kubernetes service account to impersonate the IAM service account:

gcloud iam service-accounts add-iam-policy-binding iam-fe-web-sa@my-project.iam.gserviceaccount.com \
  --role roles/iam.workloadIdentityUser \
  --member "serviceAccount:my-project.svc.id.goog[frontend/fe-web-sa]"

And finally, we annotate the Kubernetes service account so that GKE understands there is a link between the two service accounts:

kubectl annotate serviceaccount fe-web-sa \
  --namespace frontend \
  iam.gke.io/gcp-service-account=iam-fe-web-sa@my-project.iam.gserviceaccount.com

This essentially triggers the same process as identity federation and allows the cluster to obtain and use short-lived credentials automatically. These approaches may seem like extra effort, but once again: it cannot be stressed enough how important least privilege is as a security principle. In the unfortunate event of an attacker gaining access to a component of your system, these practices drastically reduce the further damage they can do.

So, we’ve covered regulating the API access that our workloads have when running in our clusters, but what about regulating what workloads will run in the first place?

Trusting container deployments with Binary Authorization

If you recall the 4 Cs we discussed earlier, the first and most important is “Code”. We can put all kinds of structural security in place, but we’re still trusting the code that runs inside our containers. It’s helpful then to have a way to guarantee that only trusted container images are allowed to run, and all other container images are blocked. This is the purpose of Binary Authorization.

Binary Authorization works with two main concepts: attestations and policies:

An attestation is essentially an electronic signature, attached to a container image, that confirms that the image has been signed by an attestor. An attestor can run at any stage in your container image build pipeline to provide important safeguards in the build process. For example, you may want to leverage Google’s Artifact Analysis service to scan the metadata of your images and check their vulnerability severity. Or you can use third-party scanning services like Kritis Signer or Voucher or use your own services to check containers as they go through the build process. The important thing is that at each stage, if the attestor is happy that the container is compliant, it creates a signed attestation.

A Binary Authorization policy then determines which attestations are required for a container image to be deployed. Optional rules can be added to a policy to control which clusters and service identities can deploy an image, and these can be scoped to a namespace if you wish. You can also choose if policies should just evaluate or enforce the rules you have set.

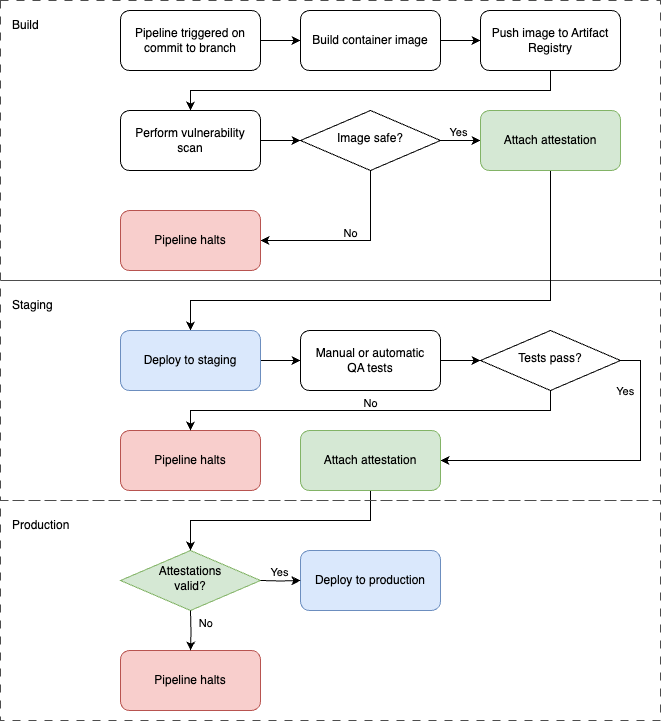

A typical CI/CD pipeline that embeds Binary Authorization would leverage an attestation at each environment stage, as shown below:

Here we can see the usual process of building and pushing an image on a commit. The build pipeline then triggers the vulnerability scan, and we determine if the output of the scan deems the image to be safe or not. For example, an image that contains no CVEs with a severity score greater than five could possibly be considered safe (but your mileage may vary!) At this point, a safe image can generate an attestation that can be checked later.

The pipeline can go on to deploy to a staging environment, and then run quality assurance tests to make sure the updated deployment works as expected. These tests could be automated or manually run by a member of a QA team, and if they are passed, we generate another attestation to say so.

Finally, when the image is due to be deployed to the production cluster, a Binary Authorization policy can ensure that both positive attestations are in place, and check that they have been signed by the relevant attestors. If they are not, the deployment will be denied, protecting the production environment.
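To make the logic of that pipeline concrete, here’s a toy Python model of the decision being made at each stage. This is only a sketch of the idea, not the Binary Authorization API: the Image class, both functions and the attestor names vulnerability-scan and qa-approved are all invented for illustration, and real attestations are cryptographic signatures rather than strings in a set.

```python
from dataclasses import dataclass, field


@dataclass
class Image:
    """A container image and the attestors that have signed it (toy model)."""
    digest: str
    attestations: set[str] = field(default_factory=set)


def scan_is_safe(cve_scores: list[float], max_severity: float = 5.0) -> bool:
    """Treat an image as safe if no CVE scores above the threshold."""
    return all(score <= max_severity for score in cve_scores)


def policy_allows(image: Image, required_attestors: set[str]) -> bool:
    """Allow deployment only if every required attestor has signed the image."""
    return required_attestors <= image.attestations


image = Image(digest="sha256:example")

# Stage 1: the vulnerability scan passes, so that attestor signs the image.
if scan_is_safe([3.1, 4.8]):
    image.attestations.add("vulnerability-scan")

required = {"vulnerability-scan", "qa-approved"}
print(policy_allows(image, required))  # False: QA attestation still missing

# Stage 2: QA tests pass in staging, so a second attestation is added.
image.attestations.add("qa-approved")
print(policy_allows(image, required))  # True: both attestations present
```

The key point is that the production policy doesn’t re-run the scan or the tests. It only checks that the required attestations exist.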

Unfortunately, configuring Binary Authorization in a CI/CD pipeline is a complex process which would warrant an entire blog post series of its own, so I can’t cover it here! If you want to try this out for yourself, Google’s own guide is recommended: https://cloud.google.com/binary-authorization/docs/cloud-build

Let’s jump ahead to the final C in the 4 we discussed at the start of this post. Even if our code, container and cluster are air-tight, surely there are some platform tools in Google Cloud that can help us?

Integrating other Google Cloud security tools

You may already be familiar with some of the tools available in Google Cloud that can generally help with platform security. In this section we’ll discuss two of them specifically – Cloud Armor and Cloud IAP – and how they can be integrated into the GKE Enterprise configurations we’ve been discussing so far. Let’s start with Cloud Armor!

Cloud Armor

Google’s Cloud Armor is essentially a Web Application Firewall (WAF), applied as a set of configuration policies which combine with Google’s different load balancing options to filter incoming Layer 7 network traffic and protect your workloads and backends. Cloud Armor policies can comprise multiple rules, and there are several types of rules you can use:

IP allowlist and denylist rules can filter traffic based on IP or CIDR, and can return a variety of HTTP response codes.

Source geography rules can exclude traffic from specific geolocations.

Preconfigured WAF rules can protect your workloads from common attacks, based on the Core Rule Set (CRS) maintained by the Open Worldwide Application Security Project (OWASP). These include attacks like SQL injection, cross-site scripting, PHP injection and many more.

Bot Management rules help you manage requests that may be coming from automated clients or bots. For example, you can force requests to identify themselves through a reCAPTCHA challenge.

Rate limiting rules can throttle requests or temporarily ban clients that exceed a predefined rate threshold.

You can also write custom rules using Google’s Common Expression Language (CEL). Cloud Armor works with any Google Cloud Load Balancer and is not limited to backends on GKE. However, if you’ve configured your cluster ingress using the GKE Gateway Controller you can easily associate a Cloud Armor policy with your Gateway to protect the services it exposes.

We do this by creating a GCPBackendPolicy object. This object contains details of the additional functionality that should be added to the load balancer in its spec, such as referencing a Cloud Armor policy. The GCPBackendPolicy then targets a specific Service, or in the case of a multi-cluster service, a ServiceImport.

Here’s an example where we have already created a Cloud Armor policy called web-security-policy, and we want to use it to protect a backend Service called store:

apiVersion: networking.gke.io/v1
kind: GCPBackendPolicy
metadata:
  name: webstore-backend-policy
  namespace: store
spec:
  default:
    securityPolicy: web-security-policy
  targetRef:
    group: ""
    kind: Service
    name: store

Note that the GCPBackendPolicy must exist in the same namespace as the Service (or ServiceImport) that it targets, and only a single GCPBackendPolicy object may be used per service. The targetRef points to our store Service; the group parameter is blank because Services belong to the core API group. If we had set up store as a multi-cluster service, we would simply change the targetRef section to refer to the ServiceImport object instead, like this:

targetRef:
  group: net.gke.io
  kind: ServiceImport
  name: store

At the time of writing, some limited aspects of the GCPBackendPolicy could be applied to the Gateway object itself, not just the service, which could potentially save you a lot of time if you want to apply the same security policy to lots of different services. It’s worth checking the documentation at https://cloud.google.com/kubernetes-engine/docs/how-to/configure-gateway-resources to see if this support has been expanded.

Identity Aware Proxy

Google’s Identity Aware Proxy (IAP) is an additional layer of protection that can be enabled for applications that are exposed via load balancers, in use cases where you only want to grant access to your own organization’s users. As part of Google’s “BeyondCorp” methodology, it provides a simple way to prove a user’s identity rather than having them connect to an internal application using a VPN.

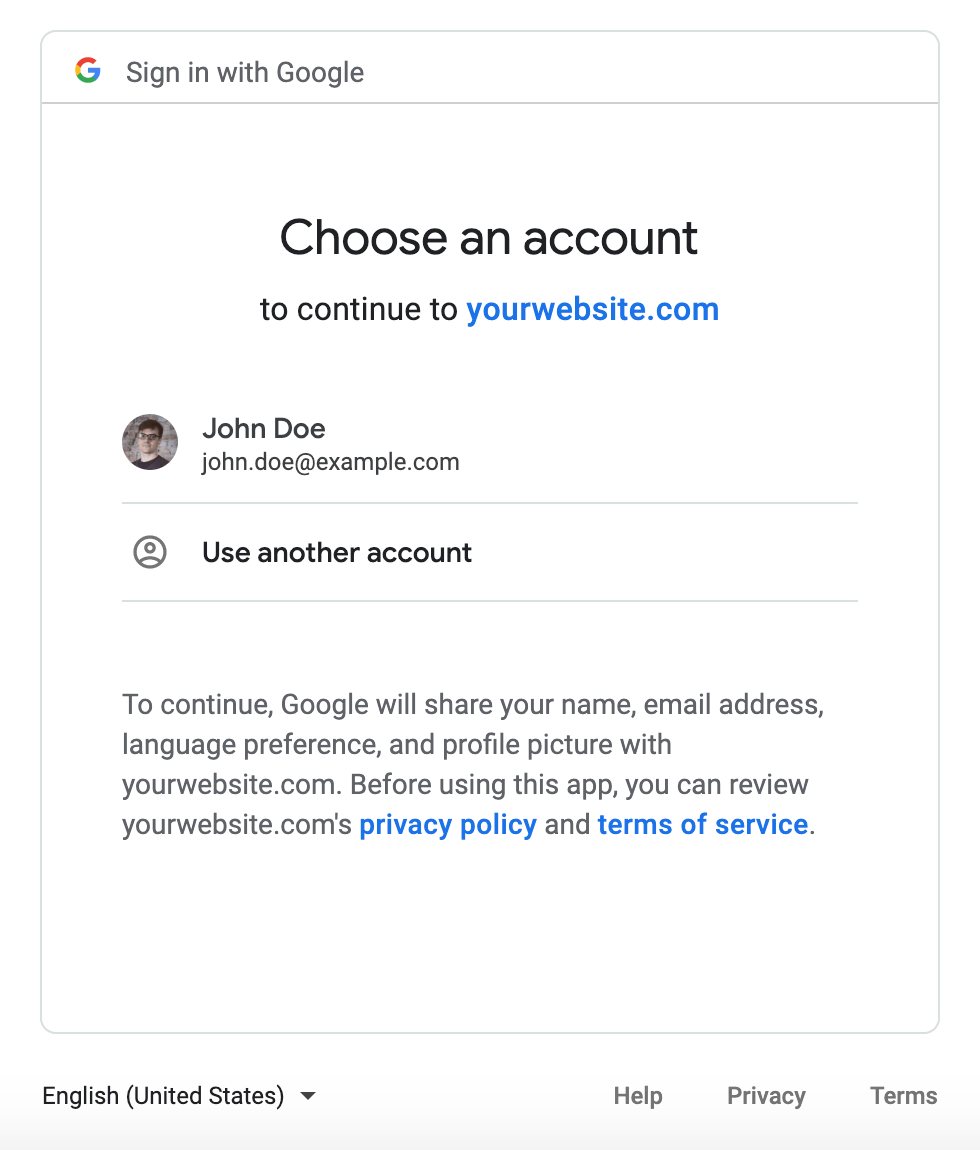

IAP works by intercepting requests that travel through a load balancer and redirecting users to the Google sign-in page. Here they must successfully authenticate themselves with Google’s identity services. This completes the authentication stage of IAP.

Next comes authorization. For a user’s request to be passed to the backend service, IAP checks that the correct IAM policy is in place to allow this. A user requires the IAP-Secured Web App User role assigned to them in the same Google Cloud project as the backend resource.

The benefit of this functionality is that IAP is easy to drop in to provide a highly secure method of authentication and authorization without having to modify any of your backend workloads. The downside is that it only works for users who actually have accounts within your Google organization (i.e. your Google domain), because you’ll need to be able to assign IAM roles to them within your project. Just in case this scenario is relevant to you, let’s discuss how we can add IAP to one of our GKE backend services.

Configuring the Consent Screen

The Consent Screen is the user interaction that appears when a user is asked to sign into their Google account to access your service, as shown below.

We’ve all seen this a hundred times; we’re logging in with our Google credentials, but a third-party application wants to know who we are. When you enable IAP for your Google Cloud project, you’ll need to configure a consent screen with the name of your application, some contact details and optionally an image for a logo. Then you’ll need to decide what scopes your consent screen is asking for.

The scopes in your consent screen determine what information you want Google to pass onto your application if authentication is successful. For example, if you’re trying to access a third-party application that needs to write files to your Google Drive, you would be presented with this information at the consent screen. An application can’t access scopes unless they are specifically authorized by the end-user in this way. It might be that all you need is a user’s email address to identify them in your own data, but there are dozens of other scopes you can include if they are necessary.

Once you’ve completed the Consent Screen setup, IAP is enabled on your project, but there’s still one more step to take. You’ll need to generate an OAuth 2.0 Client ID so that your IAP integration has access to the correct APIs. You can do this from the Credentials screen in the APIs section of the Cloud Console. Once you’ve created the OAuth 2.0 Client ID, download the credentials file and extract the client ID and secret. We’ll use these in a moment.

Configuring IAP for GKE backends

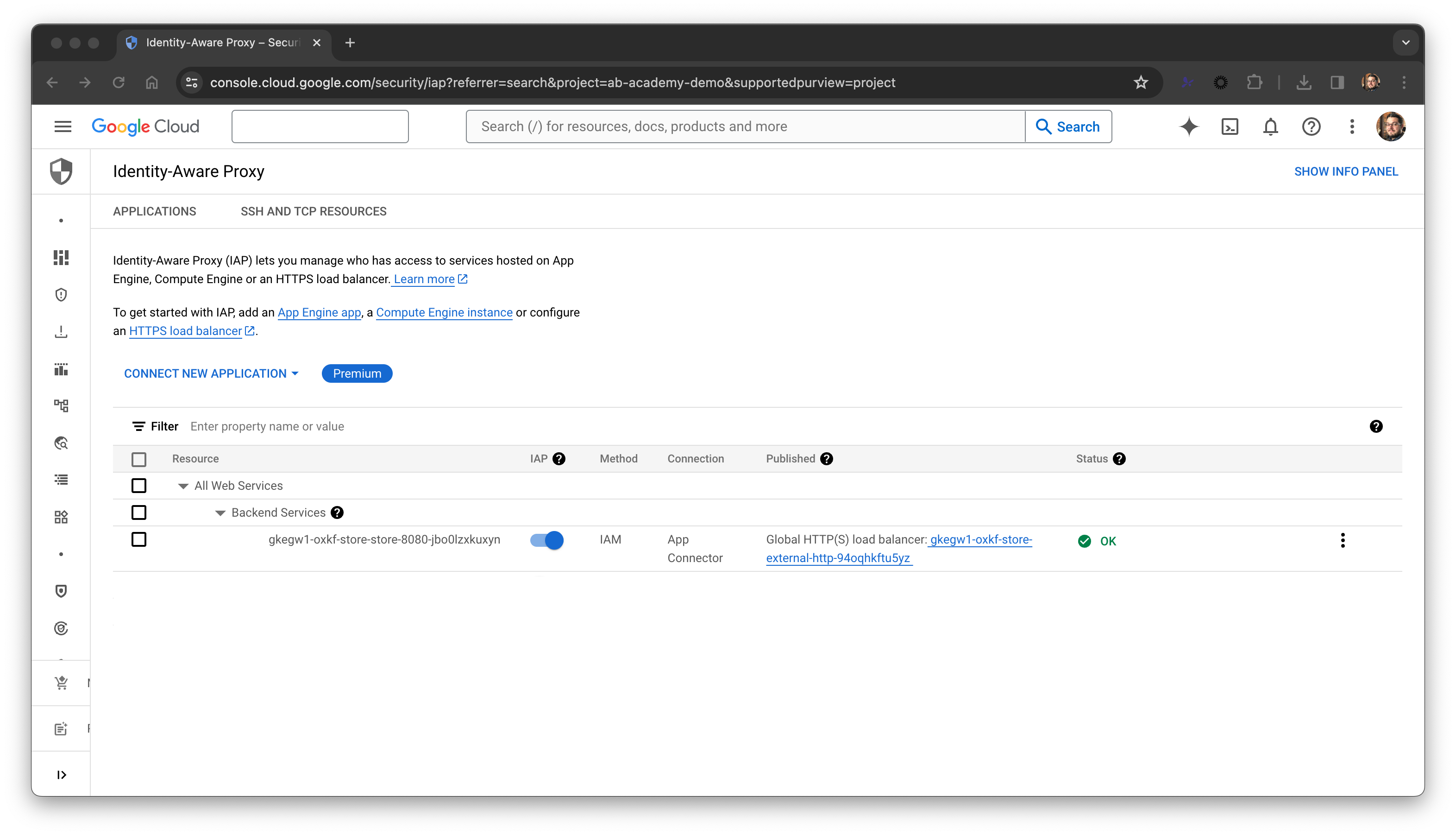

Any services running in your cluster should now be available as backends that can be secured by IAP (along with any services in your project from other Compute options, such as Compute Engine, App Engine or Cloud Run). You can see a list of these backends to confirm your GKE services are included with this command:

gcloud compute backend-services list

Don’t be concerned with the compute sub-command! Services exposed by the GKE Gateway controller will show up in that list. You can also see this list on the IAP page in the Cloud Console, although at this stage it will show that OAuth is not properly configured. We’ll fix that next!

First, we need to store the OAuth 2.0 secret in a Kubernetes Secret object. Take the secret that you extracted from the credentials file a moment ago and write it into a text file called secret.txt. Then we’ll store this in a Secret called oauth-secret:

kubectl -n store create secret generic oauth-secret --from-file=key=secret.txt

Now we can create the GCPBackendPolicy to attach IAP to our service. In this example, you’ll need to replace <CLIENT_ID> with the OAuth 2.0 client ID from your credentials file:

apiVersion: networking.gke.io/v1
kind: GCPBackendPolicy
metadata:
  name: iap-backend-policy
  namespace: store
spec:
  default:
    iap:
      enabled: true
      oauth2ClientSecret:
        name: oauth-secret
      clientID: <CLIENT_ID>
  targetRef:
    group: ""
    kind: Service
    name: store

Once again, we can change the targetRef like before if we’re using a ServiceImport rather than a Service. It will take a few minutes for the configuration to synchronize, but you can check on its status with this command:

kubectl -n store describe gcpbackendpolicy

This should show you a message like: Application of GCPGatewayPolicy "default/backend-policy" was a success. You should now also see that everything is okay in the IAP page of the Cloud Console:

External users who now try to access your application through the load balancer will be redirected to the Google sign-in process using the consent screen that you configured. That user will need to successfully authenticate with their Google credentials, and they’ll need the IAP-Secured Web App User role in your project’s IAM bindings to be granted access.

Other policies are available to help you configure your Gateway resources, but these two are the most useful regarding application security. For full details you can check out the documentation here: https://cloud.google.com/kubernetes-engine/docs/how-to/configure-gateway-resources

Securing Traffic with Kubernetes Network Policies

In the previous post we described using a collection of AuthorizationPolicy objects with our service mesh to control the flow of traffic between our various workloads. As we’ve already learned, the service mesh approach is very powerful and quite comprehensive. However, it can be difficult to apply control logic between your workloads and other points on the network that exist outside of your mesh. And after reading the last few posts in this series, you may have actually decided that you don’t want a mesh after all!

Thankfully, we still have a Kubernetes-native form of traffic control in the form of NetworkPolicies. While these objects do not require a service mesh, the network plugin for GKE must be updated to support them. You can use the --enable-network-policy argument when creating a new cluster, or enable network policy enforcement on an existing cluster in two steps. First enable the add-on, then enable enforcement on the nodes (which recreates the cluster’s node pools):

gcloud container clusters update my-cluster \
  --update-addons=NetworkPolicy=ENABLED

gcloud container clusters update my-cluster \
  --enable-network-policy

NetworkPolicies can look a little confusing at first, but once you understand their logic, they are quite easy to read. The primary components of the object are:

A podSelector: This element chooses which Pods should be affected by the policy.

Policy types: You can include Ingress and Egress rules in a policy, for traffic entering and leaving a Pod respectively.

The rules themselves: Inside your Ingress and Egress elements, you can specify rules that determine whether traffic is allowed. These rules are based on additional Pod selectors, namespace selectors or IP blocks to match the source of traffic for Ingress rules, or the destination of traffic for Egress rules.

Let’s walk through the example NetworkPolicy from the Kubernetes documentation:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - ipBlock:
        cidr: 172.17.0.0/16
        except:
        - 172.17.1.0/24
    - namespaceSelector:
        matchLabels:
          project: myproject
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:
    - protocol: TCP
      port: 5978

At the start of the spec is a podSelector, which determines which Pods will be affected by this policy by “selecting” them. You may see podSelectors in other parts of the policy, and this is why indentation levels in YAML are so important! In this example, all Pods that contain the label role with the value db in their metadata will be selected and affected.

If you don’t want to select specific Pod labels, you can instead affect an entire namespace. You can do this by specifying a namespace (either in the YAML or at the time of applying a manifest), and using an empty podSelector:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: all-pods-policy
  namespace: secure-namespace
spec:
  podSelector: {}

Going back to the example from the Kubernetes documentation, once we know which Pods are being affected, we then specify which policyTypes we’re going to include in our policy. Once again, we specify Ingress rules to control how traffic should be allowed into selected Pods, and Egress rules to control how traffic should be allowed out. Each set of rules then gets its own section in our YAML manifest. Incidentally, if you just want to create Ingress rules on their own, you can leave out the policyTypes section entirely. By default, Kubernetes will then expect you only to define the Ingress section.
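The defaulting behaviour for policyTypes is easy to get wrong, so here’s a small Python sketch of the rule as the Kubernetes documentation describes it: when policyTypes is omitted, Ingress is always assumed, and Egress is added only if the policy contains egress rules. The function name effective_policy_types is made up for this post, and the spec is just a plain dictionary standing in for the parsed YAML:

```python
def effective_policy_types(spec: dict) -> list[str]:
    """Return the policy types that apply for a NetworkPolicy spec (toy model)."""
    if spec.get("policyTypes"):
        # Explicitly listed types are used as-is.
        return list(spec["policyTypes"])
    types = ["Ingress"]        # always assumed when policyTypes is omitted
    if spec.get("egress"):     # added only if egress rules are present
        types.append("Egress")
    return types


print(effective_policy_types({"podSelector": {}}))
print(effective_policy_types({"podSelector": {}, "egress": [{}]}))
print(effective_policy_types({"podSelector": {}, "policyTypes": ["Egress"]}))
```

In practice, listing policyTypes explicitly is the safer habit, because it makes your intention obvious to whoever reads the manifest next.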

Next is our ingress section, where we specify the sources from which we will accept network traffic. Put another way, the Pods we selected a moment ago will only receive traffic from sources that match these rules. In our example, we have three different types of source:

The ipBlock specifies a network range of 172.17.0.0/16, but excludes the specific sub-range of 172.17.1.0/24.

The namespaceSelector uses labels to match the originating namespace of any Pod. In this case, Pods must come from a namespace that contains the metadata label project with a value of myproject for this rule to evaluate to true.

The podSelector, used as a source, matches Pods that contain the label role with the value of frontend.

It’s important to note that any of these sources can match for this rule to evaluate to true and for the ingress traffic to be allowed. However, we also have a ports section in our ingress rule. This specifies that only TCP traffic on port 6379 will be accepted. As you can see, this is a fairly restrictive policy.
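If the “any source, but only this port” logic feels slippery, it may help to see it written out. The Python sketch below is a toy evaluation of this one ingress rule, not how any network plugin actually implements it. The function names and the shape of the source dictionary are invented for illustration, and it assumes the source podSelector only matches Pods in the policy’s own namespace (default):

```python
import ipaddress


def ip_block_matches(source_ip: str, cidr: str, excluded: list[str]) -> bool:
    """True if source_ip is inside cidr but outside every excluded range."""
    ip = ipaddress.ip_address(source_ip)
    if ip not in ipaddress.ip_network(cidr):
        return False
    return all(ip not in ipaddress.ip_network(block) for block in excluded)


def ingress_allowed(source: dict, protocol: str, port: int) -> bool:
    """Evaluate the single ingress rule from the example policy."""
    # The three "from" entries are ORed together: any one match is enough.
    from_matches = (
        ip_block_matches(source["ip"], "172.17.0.0/16", ["172.17.1.0/24"])
        or source["namespace_labels"].get("project") == "myproject"
        or (source["namespace"] == "default"
            and source["pod_labels"].get("role") == "frontend")
    )
    # The ports section is ANDed with the sources.
    port_matches = protocol == "TCP" and port == 6379
    return from_matches and port_matches


frontend_pod = {"ip": "10.8.0.12", "namespace": "default",
                "namespace_labels": {}, "pod_labels": {"role": "frontend"}}
excluded_host = {"ip": "172.17.1.20", "namespace": "other",
                 "namespace_labels": {}, "pod_labels": {}}

print(ingress_allowed(frontend_pod, "TCP", 6379))   # True: source and port match
print(ingress_allowed(frontend_pod, "TCP", 8080))   # False: wrong port
print(ingress_allowed(excluded_host, "TCP", 6379))  # False: IP is in the except range
```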

Finally, we have an egress section. We could use the same variety of sources and selectors as we used for ingress, but in this example, we simply specify an ipBlock and ports parameter. This means that Pods selected by this policy will only be allowed to connect to IP addresses within the specified CIDR range on TCP port 5978.

Designing network policies

So how should we design our network policies? They can quickly become complex and cumbersome, so it's important to understand some basic logic of how they work. As we’ve already seen, network policies can apply two types of restrictions: Ingress for incoming connections and Egress for outgoing connections.

In both cases, if a Pod is not selected by any policy, a connection is allowed (in other words, it is not isolated or restricted). Remember that a Pod can be selected by matching a label-based podSelector, or simply by living in a namespace where a policy uses an empty podSelector. But if no Ingress policies select a Pod, all inbound connections for that Pod will be allowed. Likewise, if no Egress policies select a Pod, all outbound connections from that Pod will be allowed.

If a Pod is selected by a policy, then only the traffic allowed by the rules of that policy will be allowed. Ingress and Egress are evaluated separately, however. Return traffic for any allowed connection is also implicitly allowed.

When deciding how to design our policies, remember that they are additive. Once a policy applies to a Pod, we’re starting with nothing being allowed and adding exceptions with our rules. This means that rules can’t conflict with each other, and the ordering of rules doesn’t matter.
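Here’s a toy Python model of that additive behaviour for ingress. It’s a deliberately simplified sketch: the IngressPolicy class, the ingress_permitted function and the source_role field are all invented for illustration, and real policies match on labels, namespaces, IP blocks and ports rather than a single role string.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class IngressPolicy:
    """Toy stand-in for a NetworkPolicy with policyTypes: [Ingress]."""
    selects: Callable[[dict], bool]  # does this policy select the Pod's labels?
    allow_rules: list[Callable[[dict], bool]] = field(default_factory=list)


def ingress_permitted(pod_labels: dict, conn: dict,
                      policies: list[IngressPolicy]) -> bool:
    selecting = [p for p in policies if p.selects(pod_labels)]
    if not selecting:
        return True  # not isolated: no policy selects this Pod
    # Isolated: allowed if ANY rule in ANY selecting policy matches.
    return any(rule(conn) for p in selecting for rule in p.allow_rules)


# Two single-intention policies that both select the db Pods.
db_from_frontend = IngressPolicy(
    selects=lambda labels: labels.get("role") == "db",
    allow_rules=[lambda c: c["source_role"] == "frontend"],
)
db_from_backup = IngressPolicy(
    selects=lambda labels: labels.get("role") == "db",
    allow_rules=[lambda c: c["source_role"] == "backup"],
)
policies = [db_from_frontend, db_from_backup]

db = {"role": "db"}
print(ingress_permitted(db, {"source_role": "frontend"}, policies))  # True
print(ingress_permitted(db, {"source_role": "backup"}, policies))    # True
print(ingress_permitted(db, {"source_role": "batch"}, policies))     # False
print(ingress_permitted({"role": "web"}, {"source_role": "batch"}, policies))  # True
```

Because the result is just a union of allow rules, reversing the list of policies gives exactly the same answers, and there is no way to write a rule that takes access away.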

We can create multiple policies that may apply to a single Pod or group of Pods. For this reason, it's recommended to create a policy per single intention. A connection from a frontend to a backend would qualify as a single intention. A backend may also accept connections from elsewhere, but these could be separated into additional policies.

Some sensible defaults

In most environments you will probably select specific groups of Pods for specific connection types, but you may still wish to apply defaults to all the other Pods that don’t have their own unique rules. We can use the logic of the NetworkPolicy object to apply some sensible defaults quite easily.

For example, when you’ve finished creating specific ingress rules for some workloads, you might want to make sure that all other Pods are denied Ingress traffic, even if they aren’t specifically selected. This policy will do that:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
  - Ingress

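This works because the empty podSelector selects every Pod in the namespace where the policy is created. NetworkPolicy is a namespaced object, so the policy only covers that one namespace, and you will need to apply it in each namespace you want to protect. Listing Ingress under policyTypes without supplying any ingress rules tells Kubernetes that we want to control ingress, but that nothing is allowed. This policy will have no effect on Egress traffic.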

Conversely, maybe you want to explicitly allow all ingress connections. The policy is similar, but now we supply an empty ingress rule, thereby matching all incoming traffic:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-ingress
spec:
  podSelector: {}
  ingress:
  - {}
  policyTypes:
  - Ingress

Remember what we said earlier about policies being additive and never conflicting? What do you think would happen if we added another ingress policy in the same namespace that selected some Pods and tried to control their inbound traffic? The answer is: it would have no effect. With this blanket policy in place, no incoming network traffic to Pods in that namespace can be denied.

Once again, controlling ingress like this has no effect on egress traffic. However, you can create the egress equivalent of these rules simply by changing the entry under policyTypes from Ingress to Egress, and swapping the empty ingress rule for an empty egress rule on the allow-all policy.
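For reference, the egress versions of the two defaults might look like the sketch below. The policy names are just suggestions, and you would normally apply one or the other in a namespace, not both, since the allow-all policy would cancel out the deny.

```yaml
# Deny all outbound traffic from every Pod in this namespace
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-egress
spec:
  podSelector: {}
  policyTypes:
  - Egress
---
# Explicitly allow all outbound traffic from every Pod in this namespace
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-egress
spec:
  podSelector: {}
  egress:
  - {}        # an empty rule matches all destinations and ports
  policyTypes:
  - Egress
```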

Finally, if you want a blanket rule to deny all traffic except for what is specified in other policies, you can deny everything in one go with this policy:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
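One practical thing to watch for when you deny all egress is that Pods also lose the ability to resolve DNS names, because lookups to the cluster DNS service are outbound connections too. A common companion policy re-allows just that traffic. The sketch below assumes the cluster DNS Pods run in kube-system and carry the k8s-app: kube-dns label; check the labels in your own cluster before relying on it, as setups that use a node-local DNS cache may need different rules.

```yaml
# Allow every Pod in this namespace to reach cluster DNS.
# The namespace and Pod labels below are assumptions about the cluster.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - to:
    # namespaceSelector and podSelector in the same list item are ANDed:
    # Pods labelled k8s-app=kube-dns inside the kube-system namespace
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kube-system
      podSelector:
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53
```

Because policies are additive, this sits happily alongside the deny-all policy: everything is still blocked except DNS and whatever your other policies allow.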

NetworkPolicies may seem like an additional chore, but you should simply consider them as an extension of any other configuration you create for your workload, alongside such standard objects as Deployment and Service. Doing the hard work up front means that in the unfortunate event that a workload is compromised or starts misbehaving, its potential to cause further damage inside your system is considerably reduced.

Summary

In this post I’ve tried to cover some of the most essential security topics you need to know in addition to the security aspects of service mesh we already learned about. We’ve revisited Workload Identity, introduced Binary Authorization and discussed integrations with Cloud Armor and Cloud IAP. Finally, we’ve looked at NetworkPolicies and tried to normalize them as part of the standard configuration for any workload. In doing so, we’ve tried to emphasize that steps to improve security are a fundamental part of the way you design and operate your systems and applications. They’re not a “nice to have”; you ignore them at your peril. Do the work now and your future self will thank you!

But of course, we’ve barely scratched the surface. Hopefully I’ve convinced you that this topic is important, but the whole world of Kubernetes security is too big to squeeze into a blog series that is ostensibly about operating GKE. I would strongly recommend researching Pod Security Admission and Admission Controllers in general, Role Based Access Control (RBAC), and the various hardening guides available from the Kubernetes project and others.

The next post will be the last in this series (for now)! We may have learned a lot about GKE Enterprise and how to deploy its many features, but so far, we’ve been doing the work manually for the most part. Manual work doesn’t scale and is liable to human error, so to finish the series we’ll learn about automation and how modern DevOps tools and GitOps patterns can help us deploy securely again and again.

See you next time!

Written by