Natural language boosts LLM performance in coding, planning, and robotics

PixelProgrammer


Large language models (LLMs) are increasingly useful for programming and robotics tasks, but on complex reasoning problems they still lag well behind humans. Unlike people, these systems cannot readily learn new concepts, so they struggle to form good abstractions: high-level representations of complex ideas that leave out less important details. As a result, LLMs have difficulty with more sophisticated tasks.

Fortunately, researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have found a wealth of abstractions in natural language. In three papers to be presented at the International Conference on Learning Representations (ICLR) this month, the team shows how everyday words give language models valuable context. That context helps the models build better overall representations for tasks such as code synthesis, AI planning, and robotic navigation and manipulation.

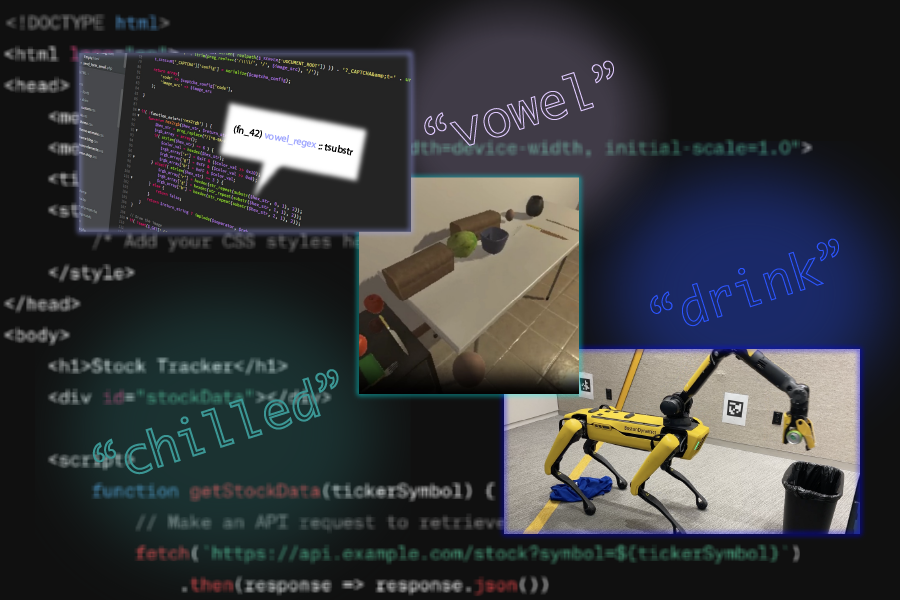

Each of the three frameworks builds a library of abstractions for its own domain: LILO (Library Induction from Language Observations) synthesizes, compresses, and documents code; Ada (Action Domain Acquisition) targets sequential decision-making for AI agents; and LGA (Language-Guided Abstraction) helps robots understand their environments so they can make more practical plans. All three take a neurosymbolic approach, a type of AI that combines neural networks with logical, program-like components.

LILO: A neurosymbolic framework for coding

Large language models can quickly write solutions for small coding tasks but cannot yet create entire software libraries like human software engineers. To enhance their software development abilities, AI models need to refactor code into libraries of concise, readable, and reusable programs.

Refactoring tools such as Stitch, an MIT-led algorithm, can identify abstractions automatically. In a nod to the Disney movie “Lilo & Stitch,” CSAIL researchers combined these algorithmic refactoring methods with LLMs. Their neurosymbolic method, LILO, uses a standard LLM to write code and then pairs it with Stitch to find abstractions, which are documented comprehensively in a library.
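To give a feel for what "finding abstractions" in a body of code means, here is a toy, greedy compression step in Python. It is a simplified stand-in, not Stitch's algorithm: Stitch searches for parameterized abstractions (functions with holes), whereas this sketch only extracts identical, parameter-free subtrees. The tuple-based program format, the `CORPUS` of turtle-graphics programs, and the placeholder name `fn_0` are all hypothetical.

```python
from collections import Counter
from typing import Iterator, List, Optional, Tuple, Union

# Hypothetical toy program representation: a leaf is a string, and an inner
# node is a tuple (operator, child1, child2, ...).
Expr = Union[str, tuple]


def size(e: Expr) -> int:
    """Count the nodes in an expression tree (operator plus all children)."""
    if isinstance(e, str):
        return 1
    return 1 + sum(size(c) for c in e[1:])


def subtrees(e: Expr) -> Iterator[tuple]:
    """Yield every non-leaf subtree of e, including e itself."""
    if isinstance(e, tuple):
        yield e
        for c in e[1:]:
            yield from subtrees(c)


def best_abstraction(programs: List[Expr]) -> Tuple[Optional[tuple], int]:
    """Return the repeated subtree whose extraction saves the most nodes overall."""
    counts = Counter(t for p in programs for t in subtrees(p))
    best, best_saving = None, 0
    for tree, n in counts.items():
        # Each of the n copies shrinks to a 1-node reference, but the
        # definition itself must be stored once in the library.
        saving = n * (size(tree) - 1) - size(tree)
        if n > 1 and saving > best_saving:
            best, best_saving = tree, saving
    return best, best_saving


def rewrite(e: Expr, target: tuple, name: str) -> Expr:
    """Replace every occurrence of target inside e with a reference to name."""
    if e == target:
        return name
    if isinstance(e, str):
        return e
    return (e[0],) + tuple(rewrite(c, target, name) for c in e[1:])


# Three hypothetical turtle-graphics programs that share a drawing step.
CORPUS = [
    ("repeat", "6", ("seq", ("forward", "1"), ("turn", "60"))),
    ("repeat", "3", ("seq", ("forward", "1"), ("turn", "60"))),
    ("repeat", "12", ("seq", ("forward", "1"), ("turn", "60"))),
]

if __name__ == "__main__":
    tree, saving = best_abstraction(CORPUS)
    print("new library function fn_0 =", tree, "| nodes saved:", saving)
    for program in CORPUS:
        print(rewrite(program, tree, "fn_0"))
```

Run as a script, this picks the shared `seq` step as `fn_0` and reports 7 nodes saved (21 nodes before; 14 after, counting the stored definition), and each program shrinks to a form like `('repeat', '6', 'fn_0')`. In LILO the extracted abstractions are then documented; here `fn_0` is only a placeholder name.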

Because LILO pays close attention to natural language, it can handle tasks that require human-like commonsense knowledge, such as identifying and removing all the vowels from a string and drawing a snowflake. In both cases, the CSAIL system outperformed standalone LLMs as well as DreamCoder, an earlier MIT library-learning algorithm, a sign that it understands the words in its prompts more deeply. These promising results suggest that LILO could help with tasks such as writing programs that manipulate documents like Excel spreadsheets, helping AI answer questions about images, and drawing 2D graphics.
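To make the vowel-removal example concrete, here is a hypothetical Python sketch (not actual LILO output) of the kind of program such a task calls for: a generic, reusable character filter combined with the commonsense notion of a vowel. Treating only a, e, i, o, and u (in either case) as vowels is an assumption of this sketch, and `filter_chars` is an illustrative helper name.

```python
# Assumption: only a, e, i, o, u (in either case) count as vowels; "y" is kept.
VOWELS = frozenset("aeiouAEIOU")


def filter_chars(keep, text: str) -> str:
    """Hypothetical reusable library helper: keep characters where keep(c) is True."""
    return "".join(c for c in text if keep(c))


def remove_vowels(text: str) -> str:
    # The word "vowel" supplies the commonsense knowledge the task needs;
    # the program just maps that concept onto the generic filter.
    return filter_chars(lambda c: c not in VOWELS, text)


if __name__ == "__main__":
    print(remove_vowels("Lilo and Stitch"))  # prints: Ll nd Sttch
```

The split mirrors the idea of a library: `filter_chars` is general enough to reuse across many string-editing tasks, while the task-specific knowledge lives in a single, readable definition.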
