Serving Stable Diffusion XL on Google Cloud

Steven J. Munn

Serving outputs from generative AI models is notoriously resource-intensive[1]. At Space Runners, we focus primarily on diffusion models for generating or modifying images, which we apply to fashion items. We want visitors to be excited to create with our platform, so our models need to be customized (through fine-tuning or network architecture modifications) to produce results that stand out. At the same time, we need to serve model outputs quickly[2].

Setting up generative AI for Space Runners involves many steps and technologies. A previous blog post on our tech stack covers some of the tools we use at the code level. Today, we will focus on the MLOps/DevOps side: how things work in Google Kubernetes Engine (GKE). We will first explain why we chose Kubernetes over other GCP products for hosting our models, and then dive into the specifics of setting up generative AI infrastructure with GKE.

Model Serving with Google Cloud Platform

Google offers three major products for creating images with generative AI[3]. In order from least to most customizable, they are:

Google Imagen/Gemini

Vertex AI

Google Kubernetes Engine (GKE)

At the time we set up our Ablo website, Imagen/Gemini did not produce images of sufficient quality for our use case in fashion. The images contained artifacts, did not adhere closely to prompts, and could not be styled with training data. This may change in the future, but for the time being, it’s not a viable option for us.

Vertex AI vs Manual Setup in GKE

Although Vertex AI is a powerful platform that abstracts away much of GKE’s complexity, it still has some severe limitations, and we ultimately chose to set up our models manually in GKE. For quick reference, here is a list of pros and cons that summarizes our experience with the two services.

Vertex AI Pros

Fully managed node scaling

Built-in latency monitoring

Automatic request queue handling

Vertex AI Cons

Poor visibility into debugging logs

Arbitrary and non-configurable timeouts and size limits

GKE Pros

Proven track record, with 10 years of general availability

Popular, with extensive community support

Versatile

GKE Cons

Relatively complex

Requires a lot of manual setup and monitoring

The Vertex AI documentation does not make it very clear that deploying custom Docker containers for inference is an option; the relevant documentation is in the "custom training" section.

For our use cases, the biggest limitation we ran into with Vertex AI was that we could not configure readiness checks and timeouts to give our Docker containers enough time to load the neural network checkpoint data (see the next section for more on that topic). Given the poor visibility into Vertex AI’s logs, rather than going back and forth with GCP support, we switched to GKE.
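In GKE, the time a container is given to load its checkpoints is configured per container through Kubernetes probes. The Python sketch below builds those probe fields, assuming the model server exposes a hypothetical /healthz endpoint on port 8080 that returns 200 only once the weights are loaded. The kubelet holds off readiness and liveness checks until the startup probe succeeds, and restarts the container after failureThreshold consecutive startup failures, so the allowed loading window is roughly failureThreshold * periodSeconds.

```python
import json
import math


def model_server_probes(path: str, port: int, max_startup_s: int, period_s: int = 10) -> dict:
    """Probe fields for a container spec that tolerate a slow checkpoint load.

    The kubelet restarts the container if the startup probe fails
    failureThreshold times in a row, so the startup window is roughly
    failureThreshold * periodSeconds seconds.
    """
    failure_threshold = math.ceil(max_startup_s / period_s)
    http_get = {"path": path, "port": port}
    return {
        "startupProbe": {
            "httpGet": http_get,
            "periodSeconds": period_s,
            "failureThreshold": failure_threshold,
            "timeoutSeconds": 5,
        },
        # Readiness checks begin only after the startup probe has succeeded;
        # from then on they decide whether the pod receives traffic.
        "readinessProbe": {
            "httpGet": dict(http_get),
            "periodSeconds": period_s,
        },
    }


if __name__ == "__main__":
    # Hypothetical budget: allow up to 15 minutes to download and load weights
    probes = model_server_probes("/healthz", 8080, max_startup_s=900)
    print(json.dumps(probes, indent=2))
```

The output can be merged into the container entry of a Deployment manifest, since kubectl accepts JSON as well as YAML.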

Google Kubernetes Setup

Storage

Checkpoints for our SDXL models, including ControlNets, LoRAs for styling, IP-Adapters, and other components, range from 15 GB to 70 GB. Including these files in a Docker image results in long build, push, and startup times. The best approach is to store all the checkpoints in a folder, add that folder to the .dockerignore file, and add code that loads the checkpoints from a storage bucket once the Docker container starts up in GKE or another environment.

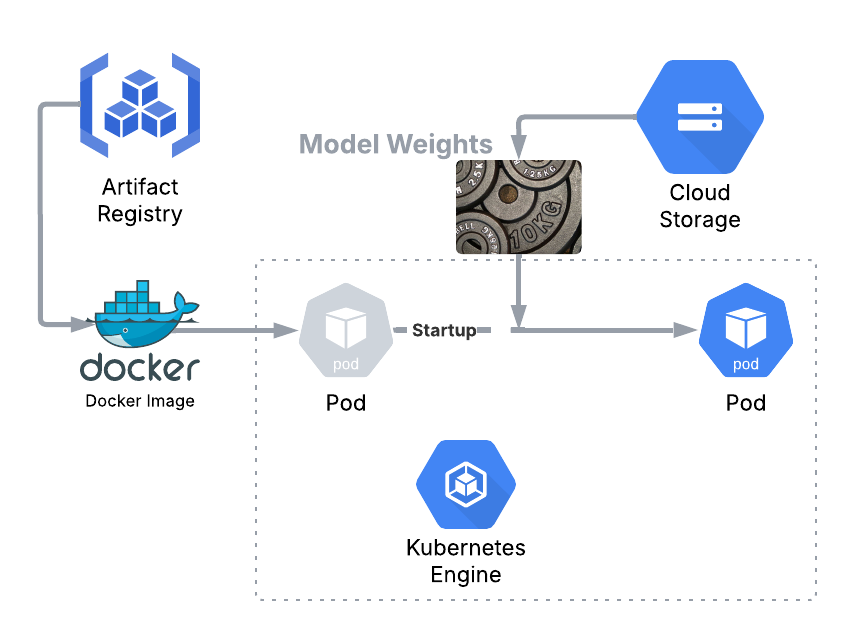

At a high level the process looks like the figure below,

The GCP Artifact Registry stores the Docker images for the model servers. GKE pulls these images to spin up pods. Once a pod has started, it fetches model weights and other data from Cloud Storage using the gcloud storage cp command.
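A minimal sketch of that startup step in Python, assuming the container image includes the gcloud CLI and the pod’s service account can read the bucket. The bucket URI, local directory, and marker-file convention are hypothetical. The marker file keeps a restarted container from downloading the weights again, provided the directory lives on a volume that survives container restarts within the pod (an emptyDir does).

```python
import subprocess
from pathlib import Path

# Hypothetical locations; substitute your own bucket and mount path
CHECKPOINT_URI = "gs://example-model-bucket/sdxl"
LOCAL_DIR = Path("/models")


def download_command(src_uri: str, dest: Path) -> list[str]:
    """gcloud command that recursively copies the checkpoint prefix into dest."""
    return ["gcloud", "storage", "cp", "--recursive", src_uri, str(dest)]


def ensure_checkpoints(src_uri: str, dest: Path, run=subprocess.run) -> bool:
    """Download checkpoints unless a previous run already finished.

    Returns True if a download was performed. The marker file is written
    only after gcloud exits successfully, so an interrupted copy is retried
    on the next start.
    """
    marker = dest / ".download_complete"
    if marker.exists():
        return False
    dest.mkdir(parents=True, exist_ok=True)
    run(download_command(src_uri, dest), check=True)  # raises if gcloud fails
    marker.touch()
    return True
```

At container startup, the server would call ensure_checkpoints(CHECKPOINT_URI, LOCAL_DIR) before loading the pipeline and only then start passing its health checks.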

Request Flow

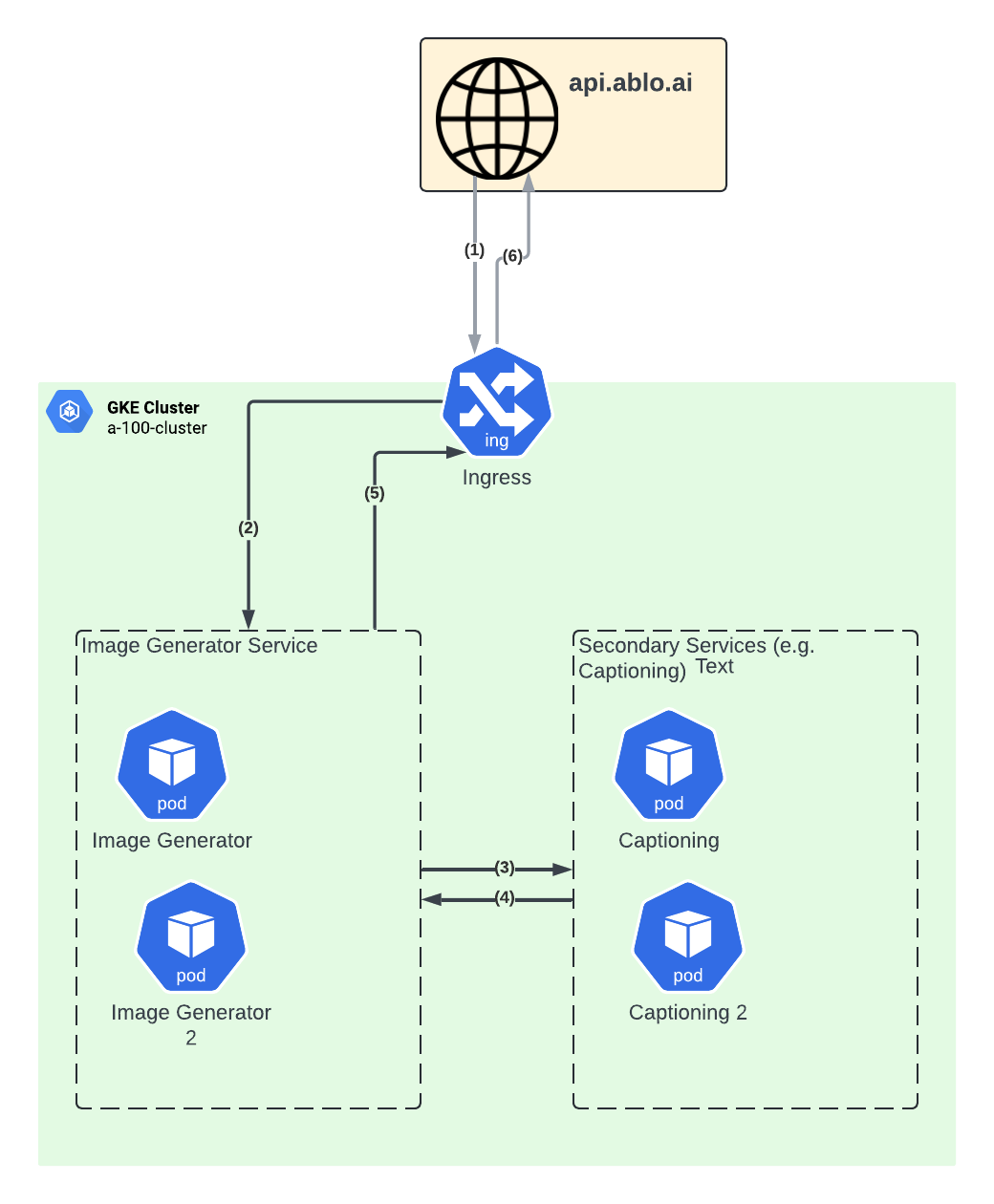

Information about image styles, and the prompt engineering that goes with them, needs to be easy to edit. The backend API service fetches this information from our Postgres database (see our tech stack post), then builds a request and passes it along to GKE.
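A minimal sketch of the request assembly on the backend, assuming a hypothetical style record with a prompt template, a negative prompt, and a LoRA name. The field names and payload shape are illustrative, and the database query itself is omitted.

```python
import json
from dataclasses import dataclass


@dataclass
class StylePreset:
    """Hypothetical shape of a style row loaded from Postgres."""
    name: str
    prompt_template: str  # must contain a {prompt} placeholder and no other braces
    negative_prompt: str
    lora_name: str


def build_generation_request(style: StylePreset, user_prompt: str, seed: int) -> str:
    """Assemble the JSON body the backend sends to the inference service on GKE.

    All prompt engineering happens here, on the backend machines, so the
    GPU pod receives a ready-to-run request.
    """
    payload = {
        "prompt": style.prompt_template.format(prompt=user_prompt.strip()),
        "negative_prompt": style.negative_prompt,
        "lora": style.lora_name,
        "seed": seed,
    }
    return json.dumps(payload)


if __name__ == "__main__":
    style = StylePreset("watercolor", "{prompt}, watercolor illustration",
                        "blurry, low quality", "watercolor-v1")
    print(build_generation_request(style, "a denim jacket", seed=7))
```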

The ML serving instances on GKE are also much more expensive to run, at $5+ per hour, than the machines that run the backend services, so we want to minimize compute time on the ML instances as much as possible.
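The back-of-the-envelope arithmetic behind that, as a small Python helper. The $5 per hour rate comes from the text and the 10-second inference time is the target discussed in the hardware section; the utilization factor is an assumption added to account for billed time the GPU spends idle.

```python
def gpu_cost_per_image(hourly_rate_usd: float, seconds_per_image: float,
                       utilization: float = 1.0) -> float:
    """Approximate GPU cost of one image.

    utilization is the fraction of billed time the GPU spends generating;
    idle time is spread across the images that are actually served.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_rate_usd * seconds_per_image / 3600 / utilization


if __name__ == "__main__":
    print(f"${gpu_cost_per_image(5.0, 10):.4f} per image at full utilization")
    print(f"${gpu_cost_per_image(5.0, 10, utilization=0.5):.4f} per image at 50% utilization")
```

At $5 per hour, each extra second of work on a GPU node adds roughly $0.0014 per image, so work that does not need the GPU, such as database lookups, is better kept on the cheaper backend machines.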

In the diagram above, the numbers inside the arrows indicate the order of execution. Some workflows, such as our photo transformer, rely on secondary services like an image captioning model. Since these are only ever called by the generative AI service, they are not exposed outside of GKE.
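Inside the cluster, a Kubernetes Service of the default ClusterIP type gets a stable DNS name that is reachable only from within the cluster, which suits internal helpers like the captioner. A minimal Python sketch, assuming a hypothetical Service named image-captioner in a namespace ml, listening on port 8080, with a /caption endpoint that returns JSON such as {"caption": "..."}. The names, port, and response shape are illustrative, not our actual setup.

```python
import json
import urllib.request


def cluster_service_url(service: str, namespace: str, port: int, path: str) -> str:
    """In-cluster DNS name of a Kubernetes Service (default cluster domain)."""
    return f"http://{service}.{namespace}.svc.cluster.local:{port}{path}"


def caption_image(image_bytes: bytes, timeout_s: float = 30.0) -> str:
    """Call the hypothetical captioning service from the generative AI pod."""
    url = cluster_service_url("image-captioner", "ml", 8080, "/caption")
    request = urllib.request.Request(
        url,
        data=image_bytes,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return json.loads(response.read())["caption"]
```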

Observability and Alerting

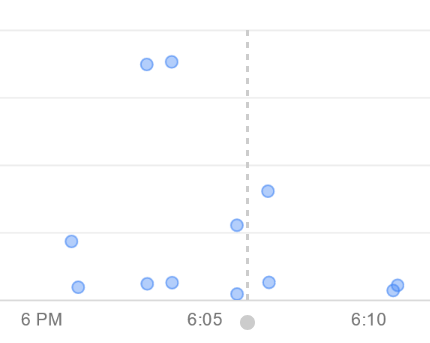

GKE integrates with versatile observability and alerting tools. For machine learning inference, the most useful so far have been Cloud Trace and log-based metrics. Cloud Trace lets us look at the run time of each request and then investigate all the logs associated with it. To achieve this, we use the OpenTelemetry library for Python.
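One way logs get tied to traces is structured logging: on GKE, JSON lines written to stdout are parsed by the logging agent, and Cloud Logging links an entry to a trace when it carries the special logging.googleapis.com/trace and logging.googleapis.com/spanId fields. The stdlib-only sketch below formats such a line. In a real service, the IDs would come from the active OpenTelemetry span via opentelemetry.trace.get_current_span().get_span_context(); the project ID and extra fields shown are hypothetical.

```python
import json


def trace_log_line(message: str, project_id: str, trace_id: int, span_id: int,
                   severity: str = "INFO", **fields) -> str:
    """Format one JSON log line that Cloud Logging can attach to a Cloud Trace span.

    trace_id and span_id are the integers OpenTelemetry exposes on a span
    context; Cloud Logging expects them as 32- and 16-digit hex strings.
    """
    record = {
        "severity": severity,
        "message": message,
        "logging.googleapis.com/trace": f"projects/{project_id}/traces/{trace_id:032x}",
        "logging.googleapis.com/spanId": f"{span_id:016x}",
        **fields,
    }
    return json.dumps(record)


if __name__ == "__main__":
    # With OpenTelemetry installed, the IDs would come from:
    #   ctx = opentelemetry.trace.get_current_span().get_span_context()
    #   trace_log_line("denoising finished", "my-project", ctx.trace_id, ctx.span_id)
    print(trace_log_line("denoising finished", "my-project", 0xABC, 0x1F, steps=30))
```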

Log-based metrics help us set up dashboards and watch the distribution of inference times. The 95th and 99th percentiles give us an idea of inference times in the worst-case scenarios.
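For intuition about what those dashboard lines mean, here is how the same tail percentiles can be computed from raw inference times with Python’s statistics module. This is a sketch for offline analysis, not how Cloud Monitoring computes them: a log-based distribution metric buckets the extracted values into a histogram, so the percentiles on the dashboard are estimates from those buckets.

```python
import statistics


def tail_latencies(inference_seconds: list[float]) -> dict[str, float]:
    """Median, 95th, and 99th percentile of a list of inference times.

    Needs at least two samples. method="inclusive" treats the samples as
    including the most extreme values, which suits a log of every request.
    """
    cuts = statistics.quantiles(inference_seconds, n=100, method="inclusive")
    # cuts[k - 1] is the cut point for the k-th percentile
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}
```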

Choosing the Right Hardware

Our goal is to get inference times on the order of 10 seconds, the point at which most people lose focus and turn to something else[2]. Running SDXL, or any large diffusion model without distillation, in that time frame requires a GPU or more specialized hardware, such as TPUs or FPGAs. However, our customization requirements make it very difficult to compile our workflows for more specialized hardware, which makes Nvidia GPUs our hardware of choice. This is also part of the reason we chose GCP over AWS: GCP offers more options for mid-range data center GPUs.

SDXL, with all of the ControlNets, LoRAs, and IP-Adapters that we load, uses anywhere from 20 to 40 GB of GPU video RAM (VRAM). So the GPUs we have to choose from are:

Nvidia L4 (24 GB VRAM)

Nvidia A100 (40 GB VRAM)

Nvidia A100 “Ultra” (80 GB VRAM, offered on GCP as the A2 Ultra machine series)

The L4 is much cheaper than the A100, especially in cost per GB of VRAM. Anything we can fit on an L4 runs on an L4; however, at least two of our major workflows require more VRAM.

Nvidia GPU sharing comes in handy here because it lets multiple GKE pods share a single graphics card. For the extra-large workflows that do not fit on an L4, we use A100 “Ultra” GPUs configured to be shared by two pods, each with 40 GB of VRAM.
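A sketch of how this routing can be expressed in pod specs, written as a Python helper that returns manifest fragments. GKE offers two kinds of GPU sharing, time-sharing and multi-instance GPU (MIG); only MIG splits memory into isolated slices, so this sketch assumes the A100 80GB nodes are partitioned into two 3g.40gb MIG instances. The node-label keys and accelerator names follow GKE’s conventions; the 24 GB and 40 GB thresholds come from the GPU list above, and in practice some headroom should be left below them.

```python
import json

# GKE node labels used to steer pods onto specific GPU node pools
ACCELERATOR_LABEL = "cloud.google.com/gke-accelerator"
PARTITION_LABEL = "cloud.google.com/gke-gpu-partition-size"


def gpu_scheduling(vram_needed_gb: float) -> dict:
    """Pick the GPU option described above for a workflow's VRAM needs.

    nodeSelector belongs in the pod spec; resources belongs in the
    container spec. Each container asks for one GPU, which on a
    MIG-partitioned node means one partition.
    """
    if vram_needed_gb <= 24:
        node_selector = {ACCELERATOR_LABEL: "nvidia-l4"}
    elif vram_needed_gb <= 40:
        # Half of an A100 80GB: one of two 3g.40gb MIG partitions (assumption)
        node_selector = {ACCELERATOR_LABEL: "nvidia-a100-80gb", PARTITION_LABEL: "3g.40gb"}
    else:
        raise ValueError(f"{vram_needed_gb} GB does not fit any configured option")
    return {
        "nodeSelector": node_selector,
        "resources": {"limits": {"nvidia.com/gpu": 1}},
    }


if __name__ == "__main__":
    for workflow_gb in (20, 36):
        print(workflow_gb, json.dumps(gpu_scheduling(workflow_gb)))
```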

Conclusion

Setting up a generative AI inference pipeline in Google Kubernetes Engine was a challenge that spanned several weeks (followed by months of maintenance and refinement). It has proven to be a powerful and reliable platform, giving us all the options we need to generate images for Ablo.

With the architecture and systems for our workflows decided, the next challenge is writing code and building Docker containers. We will examine this in a future blog post, so stay tuned!

References

[1] Hugo Huang, Harvard Business Review. What CEOs Need to Know About the Costs of Adopting GenAI.

[2] Jakob Nielsen, UX Tigers Blog. The Need for Speed in AI.

[3] Google Cloud. AI and machine learning products.

Written by

Steven J. Munn

Steven Munn is a Machine Learning Engineer at Space Runners, where he builds training and deployment pipelines for generative AI. The Space Runners platform, Ablo, enables users to create and customize fashion items while earning royalties. Steven manages generative AI tools that facilitate the design process through user prompts and input images. Previously, Steven worked on diverse machine learning applications including customer service chatbots at Linc Global, manufacturing defect detection at Samsung, air traffic control simulation and management at Airbus, and social media research at Hughes Research Labs (HRL). Steven is well-versed in production-grade, end-to-end deployment of machine learning and AI systems, and eager to tackle the challenges of scaling larger generative AI models.