Lift and Shift Application Workload (AWS Project)

Divya vasant satpute

About this project

Multi-Tier Web Application Stack [VProfile]

Host and run on AWS Cloud for production

Lift and shift strategy

Before getting started, let's understand what the Refactoring strategy is.

Refactoring is one of the strategies for migrating applications to AWS. It involves re-architecting workloads to support AWS cloud-native capabilities from the ground up. This strategy requires a significant investment in effort and resources but is considered the most future-proof migration approach. The outcome of refactoring is a cloud-native application that fully exploits cloud innovation.

Benefits of migrating an application using the Refactoring strategy

Some benefits of refactoring include long-term cost reduction by matching resource consumption to demand and eliminating waste. This can result in a better return on investment compared to less cloud-native applications. Refactoring can also increase resilience by decoupling application components and using highly available AWS-managed services. Additionally, refactored applications can respond faster to business events and take advantage of new AWS capabilities.

Introduction

Briefly introduce the concept of a Multi-Tier Web Application.

Explain the Lift and Shift Strategy and why it’s useful for migrating applications to AWS.

Introduce VProfile as the application you are migrating.

📌 Scenario Overview

You have an application stack running in your on-premises data center, utilizing a mix of physical and virtual machines. The stack includes:

🔹 Windows Server – Hosting various enterprise applications

🔹 DNS – Providing name resolution services

🔹 Oracle Database – Managing structured data

🔹 LAMP Stack – Running PHP-based applications

🔹 Java & Tomcat – Backend microservices

🔹 NGINX+ – Load balancing and reverse proxy

🔹 PHP – Web-based applications

To modernize and improve scalability, security, and cost-efficiency, we will migrate this workload to AWS using the Lift and Shift approach.

☁️ Migration Strategy: Lift and Shift to AWS

The Lift and Shift strategy moves applications to AWS without refactoring them, which makes the migration faster and simpler than re-architecting.

Problems and Challenges

⚠️ Challenges of On-Premises Infrastructure

Organizations running applications on on-premises physical/virtual machines often face several operational bottlenecks. Let’s explore the key challenges:

🚧 Problems with On-Premises Infrastructure

🔴 Complex Management

Managing multiple servers, databases, and networking components is time-consuming and requires manual intervention.

Dependency tracking and troubleshooting are difficult in a distributed environment.

🔴 Scalability Limitations

Scaling infrastructure up or down based on demand is complex and expensive.

Requires manual hardware provisioning and capacity planning to avoid under/overutilization.

🔴 High Upfront & Operational Costs

Capital Expenditure (CapEx): Heavy initial investment in hardware, networking, and storage.

Operational Expenditure (OpEx): Recurring costs for maintenance, electricity, cooling, and IT personnel.

🔴 Manual & Time-Consuming Processes

Setting up new servers, deploying applications, and maintaining infrastructure is manual and error-prone.

Lack of automation leads to slower deployment cycles and inconsistent configurations.

🔴 Difficult to Automate & Maintain

Legacy applications often require custom scripts for deployment.

Monitoring, patching, and backup processes require manual effort.

Solution

✅ Solution: Lift and Shift to AWS

GitHub repo: https://github.com/divyasatpute/vprofile-awsliftshift-project

Migrating to AWS using a Lift and Shift approach resolves these challenges while ensuring scalability, security, and cost optimization.

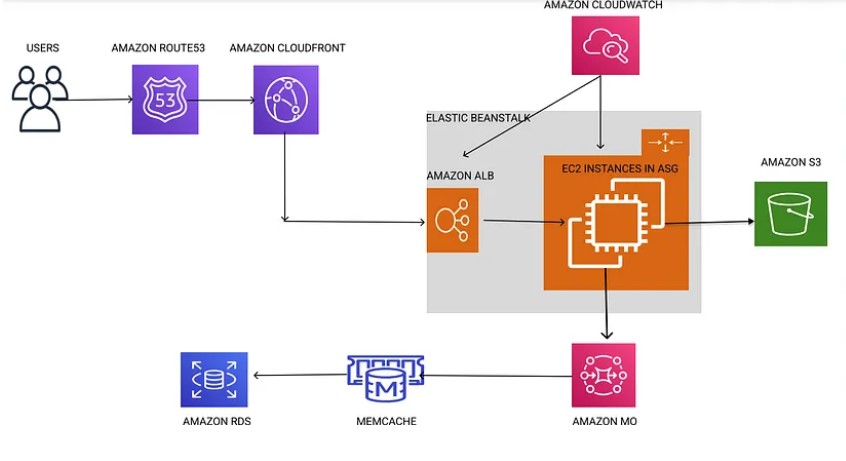

AWS Cloud Setup (After Migration)

🔹 Compute Layer (EC2 & Scaling)

✅ Amazon EC2 for running Tomcat, RabbitMQ, MySQL, Memcached

✅ Auto Scaling Groups for dynamic VM scaling

✅ Elastic Load Balancer (ELB) for distributing traffic

🔹 Storage & Data Layer

✅ Amazon EFS (Elastic File System) for shared storage across VMs

✅ Amazon S3 for storing logs, backups, and assets

✅ Amazon RDS (MySQL) for relational database migration

🔹 Networking & Security

✅ Amazon Route 53 for Private DNS Service and domain resolution

✅ NGINX Replacement with AWS ELB for traffic balancing

✅ Security Groups & IAM Roles for controlled access

FLOW OF EXECUTION

Log in to the AWS account

Create a key pair

Create security groups

Launch instances with user data [Bash scripts]

Update the IP-to-name mapping in Route 53

Build the application from source code

Upload the artifact to an S3 bucket

Download the artifact to the Tomcat EC2 instance

Set up the ELB with HTTPS [certificate from AWS Certificate Manager]

Map the ELB endpoint to the website name in Hostinger DNS

Verify

Build an Auto Scaling group for the Tomcat instances

Step-by-Step Guidance

Create a security group for the load balancer

Open the EC2 console

Go to Security Groups

Give it a name and description

Allow HTTP:80 from anywhere (IPv4 and IPv6)

Allow HTTPS:443 from anywhere (IPv4 and IPv6)

Click Create security group

Second security group: app (Tomcat)

Open the EC2 console

Go to Security Groups

Give it a name and description

Allow Custom TCP 8080 with the load balancer security group created above as the source

Allow Custom TCP 22 (SSH) with the source set to My IP

Click Create security group

Third security group: backend (MySQL, Memcached, RabbitMQ)

Open the EC2 console

Go to Security Groups

Give it a name and description

Allow MySQL/Aurora (TCP 3306) with the app security group created above as the source

Allow Custom TCP 11211 (Memcached) with the app security group as the source

Allow Custom TCP 5672 (RabbitMQ) with the app security group as the source

Allow Custom TCP 22 (SSH) with the source set to My IP

Click Create security group

After the group is created, edit its inbound rules again:

Allow All traffic with the backend security group itself as the source, so the backend services can talk to each other
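If you prefer scripting to clicking, the three security groups can also be created with the AWS CLI. The functions below are a minimal sketch, assuming AWS CLI v2 with credentials configured, an existing VPC, and hypothetical group names (`vprofile-ELB-SG`, `vprofile-app-SG`, `vprofile-backend-SG`). The rules mirror the console steps above, including the self-referencing backend rule, which can only be added once the group exists.

```bash
#!/bin/bash
# Sketch: create the three vprofile security groups with the AWS CLI.
# Assumes AWS CLI v2 is configured; the group names are hypothetical.

# Create a security group in the given VPC and print its ID.
create_sg() {
  local name=$1 desc=$2 vpc_id=$3
  aws ec2 create-security-group --group-name "$name" --description "$desc" \
    --vpc-id "$vpc_id" --query GroupId --output text
}

# Open a TCP port to the whole internet over IPv4 and IPv6 (ELB only).
allow_public() {
  local sg=$1 port=$2
  aws ec2 authorize-security-group-ingress --group-id "$sg" --ip-permissions \
    "IpProtocol=tcp,FromPort=$port,ToPort=$port,IpRanges=[{CidrIp=0.0.0.0/0}],Ipv6Ranges=[{CidrIpv6=::/0}]"
}

# Open a TCP port only to instances in another security group.
allow_from_sg() {
  local sg=$1 port=$2 source_sg=$3
  aws ec2 authorize-security-group-ingress --group-id "$sg" \
    --protocol tcp --port "$port" --source-group "$source_sg"
}

# Open SSH (22) to a single IPv4 address, i.e. "My IP" in the console.
allow_ssh() {
  local sg=$1 my_ip=$2
  aws ec2 authorize-security-group-ingress --group-id "$sg" \
    --protocol tcp --port 22 --cidr "$my_ip/32"
}

# Allow all traffic between members of the same group.
allow_self_all() {
  local sg=$1
  aws ec2 authorize-security-group-ingress --group-id "$sg" \
    --ip-permissions "IpProtocol=-1,UserIdGroupPairs=[{GroupId=$sg}]"
}

setup_security_groups() {
  local vpc_id=$1 my_ip=$2 elb_sg app_sg backend_sg port
  elb_sg=$(create_sg vprofile-ELB-SG "vprofile load balancer" "$vpc_id") || return 1
  allow_public "$elb_sg" 80
  allow_public "$elb_sg" 443

  app_sg=$(create_sg vprofile-app-SG "vprofile Tomcat app" "$vpc_id") || return 1
  allow_from_sg "$app_sg" 8080 "$elb_sg"
  allow_ssh "$app_sg" "$my_ip"

  backend_sg=$(create_sg vprofile-backend-SG "vprofile MySQL, Memcached, RabbitMQ" "$vpc_id") || return 1
  for port in 3306 11211 5672; do   # MySQL, Memcached, RabbitMQ
    allow_from_sg "$backend_sg" "$port" "$app_sg"
  done
  allow_ssh "$backend_sg" "$my_ip"
  allow_self_all "$backend_sg"
}
```

Usage, with a placeholder VPC ID and your own public IP: `setup_security_groups vpc-0abc1234 203.0.113.10`.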

Note: if you are not sure where these ports come from, check the GitHub repo. The file vprofile-project/src/main/resources/application.properties lists all of them.
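A quick way to list those hostnames and ports is to filter the file. This is a small sketch that assumes the keys follow the `jdbc.url`, `*.host`, `*.address` and `*.port` naming used in vprofile-project; check your copy if the output looks incomplete.

```bash
#!/bin/bash
# Sketch: print the endpoint settings (hosts and ports) from the properties
# file. Assumes the vprofile key names (jdbc.url, *.host, *.address, *.port).
show_endpoints() {
  local props=${1:-src/main/resources/application.properties}
  grep -E '^(jdbc\.url|[A-Za-z.]*\.(host|address|port))=' "$props"
}
```

Run it from the project root with no arguments, or pass another path to the properties file.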

Now create a key pair

Go to Key Pairs in the EC2 console

Give it a name and choose the .pem format (works with OpenSSH clients such as Git Bash)

Click Create key pair
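The same key pair can be created from the AWS CLI. This sketch assumes a configured CLI and a hypothetical key name; AWS returns the private key only once, so it is written straight to a file and locked down.

```bash
#!/bin/bash
# Sketch: create the key pair from the CLI. The key name is hypothetical.
# AWS returns the private key only once, so it is saved straight to disk.
create_key_pair() {
  local name=$1 out=${2:-$1.pem}
  aws ec2 create-key-pair --key-name "$name" --key-type rsa --key-format pem \
    --query KeyMaterial --output text > "$out" || return 1
  chmod 400 "$out"   # ssh refuses private keys that other users can read
}
```

For example, `create_key_pair vprofile-prod-key` writes `vprofile-prod-key.pem`, which you can then use with `ssh -i` (the default login user is `ec2-user` on Amazon Linux and `ubuntu` on Ubuntu).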

Setting up EC2 instances

Now we are going to set up four EC2 instances: one for MySQL, a second for Memcached, a third for RabbitMQ, and a fourth for the Tomcat server.

We will provision these instances and set up all the services with user data scripts.

While launching them, make sure each instance goes into the right security group.

The three backend instances (MySQL, Memcached, RabbitMQ) go into the backend security group. The Tomcat instance goes into the app security group.

First, clone the source code:

```bash
git clone https://github.com/hkhcoder/vprofile-project.git
```
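Each launch below follows the same pattern (AMI, t2.micro, key pair, security group, user data script), so it can also be expressed as one AWS CLI call. This is a sketch with placeholder AMI, security group and key values; AMI IDs differ per Region, so look up the Amazon Linux 2023 or Ubuntu 24.04 ID for yours. The extra `Project` tag is a hypothetical naming choice.

```bash
#!/bin/bash
# Sketch: launch one t2.micro instance with a user data script.
# AMI ID, security group ID, key name and script path are placeholders.
launch_instance() {
  local name=$1 ami=$2 sg=$3 key=$4 userdata=$5
  # file:// makes the CLI read the script from disk; it base64-encodes it.
  aws ec2 run-instances --image-id "$ami" --count 1 --instance-type t2.micro \
    --key-name "$key" --security-group-ids "$sg" \
    --user-data "file://$userdata" \
    --tag-specifications "ResourceType=instance,Tags=[{Key=Name,Value=$name},{Key=Project,Value=vprofile}]" \
    --query 'Instances[0].InstanceId' --output text
}
```

For example: `launch_instance vprofile-db01 ami-xxxxxxxx sg-backend-id vprofile-prod-key mysql.sh` prints the new instance ID.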

Launch an instance for MySQL

Go to your AWS account and open the EC2 console

Click Launch instance

Give it a name and add tags, so you follow consistent naming standards

Select the Amazon Linux 2023 AMI

Instance type: t2.micro

Select the key pair created earlier

In Network settings, select the backend security group

In Advanced details, go to the User data section and paste the script below (mysql.sh, also available under userdata in the GitHub repo)

```bash
#!/bin/bash
# User data for db01 (Amazon Linux 2023): install MariaDB and restore the vprofile database.
DATABASE_PASS='admin123'

sudo dnf update -y
sudo dnf install git zip unzip -y
sudo dnf install mariadb105-server -y

# Start MariaDB and enable it at boot
sudo systemctl start mariadb
sudo systemctl enable mariadb

# Clone the source code, which contains the database dump
cd /tmp/
git clone -b main https://github.com/hkhcoder/vprofile-project.git

# Set the root password and remove anonymous users, remote root logins and the test database
sudo mysqladmin -u root password "$DATABASE_PASS"
sudo mysql -u root -p"$DATABASE_PASS" -e "ALTER USER 'root'@'localhost' IDENTIFIED BY '$DATABASE_PASS'"
sudo mysql -u root -p"$DATABASE_PASS" -e "DELETE FROM mysql.user WHERE User='root' AND Host NOT IN ('localhost', '127.0.0.1', '::1')"
sudo mysql -u root -p"$DATABASE_PASS" -e "DELETE FROM mysql.user WHERE User=''"
sudo mysql -u root -p"$DATABASE_PASS" -e "DELETE FROM mysql.db WHERE Db='test' OR Db='test\_%'"
sudo mysql -u root -p"$DATABASE_PASS" -e "FLUSH PRIVILEGES"

# Create the application database and the admin user the app connects as
sudo mysql -u root -p"$DATABASE_PASS" -e "create database accounts"
sudo mysql -u root -p"$DATABASE_PASS" -e "grant all privileges on accounts.* TO 'admin'@'localhost' identified by 'admin123'"
sudo mysql -u root -p"$DATABASE_PASS" -e "grant all privileges on accounts.* TO 'admin'@'%' identified by 'admin123'"

# Restore the dump file for the application
sudo mysql -u root -p"$DATABASE_PASS" accounts < /tmp/vprofile-project/src/main/resources/db_backup.sql
sudo mysql -u root -p"$DATABASE_PASS" -e "FLUSH PRIVILEGES"
```

Click Launch instance
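Once the instance is running, you can SSH in and confirm the dump was restored. User data output goes to `/var/log/cloud-init-output.log`, which is the first place to look if it was not. This is a sketch that reuses the `admin` credentials from the script above.

```bash
#!/bin/bash
# Sketch: run on db01 once user data has finished, to confirm the dump was
# restored into the accounts database. Uses the admin user from the script.
verify_accounts_db() {
  local tables
  # -N drops the column header so only table names are printed
  tables=$(mysql -u admin -padmin123 -N -e 'SHOW TABLES' accounts) || return 1
  if [ -z "$tables" ]; then
    echo "accounts is empty; check /var/log/cloud-init-output.log" >&2
    return 1
  fi
  echo "accounts tables: ${tables//$'\n'/ }"
}
```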

Launch an instance for Memcached

Go to your AWS account and open the EC2 console

Click Launch instance

Give it a name and add tags, so you follow consistent naming standards

Select the Amazon Linux 2023 AMI

Instance type: t2.micro

Select the key pair created earlier

In Network settings, select the backend security group

In Advanced details, go to the User data section and paste the script below (memcache.sh, also available under userdata in the GitHub repo)

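```bash
#!/bin/bash
# User data for mc01 (Amazon Linux 2023): install Memcached and accept remote connections.
sudo dnf install memcached -y
sudo systemctl start memcached
sudo systemctl enable memcached

# Listen on all interfaces instead of localhost only, so app01 can connect on 11211
sudo sed -i 's/127.0.0.1/0.0.0.0/g' /etc/sysconfig/memcached
sudo systemctl restart memcached
sudo systemctl status memcached --no-pager
```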

Click Launch instance
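To confirm Memcached accepts connections from the app tier, you can send it the plain-text `version` command from app01. This is a sketch relying on bash's `/dev/tcp` feature and coreutils `timeout`; the default host assumes the `mc01.vprofile.in` record created in Step 2, so pass the private IP instead if that record does not exist yet.

```bash
#!/bin/bash
# Sketch: from app01, ask memcached for its version over the plain-text
# protocol. The default host assumes the mc01 record from Step 2.
memcached_version() {
  local host=${1:-mc01.vprofile.in} port=${2:-11211} reply
  # bash treats /dev/tcp/HOST/PORT as a TCP socket; timeout stops the probe
  # from hanging when a security group silently drops the connection.
  reply=$(timeout 5 bash -c \
    'exec 3<>"/dev/tcp/$0/$1" && printf "version\r\n" >&3 && head -n 1 <&3' \
    "$host" "$port") || return 1
  [ -n "$reply" ] || return 1
  printf '%s\n' "${reply%$'\r'}"   # memcached answers like "VERSION 1.6.x"
}
```

A non-zero exit status usually points at the security group rule for 11211 or at Memcached still listening only on localhost.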

Launch an instance for RabbitMQ

Go to your AWS account and open the EC2 console

Click Launch instance

Give it a name and add tags, so you follow consistent naming standards

Select the Amazon Linux 2023 AMI

Instance type: t2.micro

Select the key pair created earlier

In Network settings, select the backend security group

In Advanced details, go to the User data section and paste the script below (the RabbitMQ script, also available under userdata in the GitHub repo)

```bash
#!/bin/bash
# User data for rmq01 (Amazon Linux 2023): install Erlang and RabbitMQ.

## primary RabbitMQ signing key
rpm --import 'https://github.com/rabbitmq/signing-keys/releases/download/3.0/rabbitmq-release-signing-key.asc'
## modern Erlang repository
rpm --import 'https://github.com/rabbitmq/signing-keys/releases/download/3.0/cloudsmith.rabbitmq-erlang.E495BB49CC4BBE5B.key'
## RabbitMQ server repository
rpm --import 'https://github.com/rabbitmq/signing-keys/releases/download/3.0/cloudsmith.rabbitmq-server.9F4587F226208342.key'
curl -o /etc/yum.repos.d/rabbitmq.repo https://raw.githubusercontent.com/hkhcoder/vprofile-project/refs/heads/awsliftandshift/al2023rmq.repo
dnf update -y
## install these dependencies from standard OS repositories
dnf install socat logrotate -y
## install RabbitMQ and zero dependency Erlang
dnf install -y erlang rabbitmq-server
systemctl enable rabbitmq-server
systemctl start rabbitmq-server

# Remove the localhost-only login restriction (by default it applies to guest), then create the app's user
sudo sh -c 'echo "[{rabbit, [{loopback_users, []}]}]." > /etc/rabbitmq/rabbitmq.config'
sudo rabbitmqctl add_user test test
sudo rabbitmqctl set_user_tags test administrator
rabbitmqctl set_permissions -p / test ".*" ".*" ".*"
sudo systemctl restart rabbitmq-server
```

Click Launch instance
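After boot, a quick check on rmq01 shows whether the script created the application user. This sketch assumes the `test` user from the script above and greps the `name<TAB>[tags]` rows that `rabbitmqctl list_users` prints.

```bash
#!/bin/bash
# Sketch: run on rmq01 to confirm the "test" user from the script exists
# and carries the administrator tag.
verify_rabbit_user() {
  local user=${1:-test}
  # -q suppresses the "Listing users ..." banner; rows are "name<TAB>[tags]"
  sudo rabbitmqctl -q list_users | grep -qE "^${user}[[:space:]].*administrator"
}
```

`verify_rabbit_user && echo ok` prints `ok` when the user is in place.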

Launch an instance for the Tomcat server

Go to your AWS account and open the EC2 console

Click Launch instance

Give it a name and add tags, so you follow consistent naming standards

Select the Ubuntu Server 24.04 LTS AMI

Instance type: t2.micro

Select the key pair created earlier

In Network settings, select the app security group

In Advanced details, paste the following script into the User data section:

```bash
#!/bin/bash
# User data for app01 (Ubuntu 24.04): install Java 17, Tomcat 10 and git.
sudo apt update
sudo apt upgrade -y
sudo apt install openjdk-17-jdk -y
sudo apt install tomcat10 tomcat10-admin tomcat10-docs tomcat10-common git -y
```

Click Launch instance
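Tomcat can take a while to come up after the packages install. This sketch polls port 8080 on app01 with `curl` and treats any 2xx or 3xx status as "up" (the deployed app may redirect `/` to a login page). The retry count and 5-second delay are arbitrary choices.

```bash
#!/bin/bash
# Sketch: on app01, poll Tomcat on port 8080 (the port the target group will
# health-check) until it answers. Retry count and delay are arbitrary.
wait_for_tomcat() {
  local url=${1:-http://localhost:8080/} tries=${2:-30} code i
  for ((i = 1; i <= tries; i++)); do
    # curl prints 000 when it cannot connect at all
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
    case $code in
      2??|3??) echo "Tomcat is up ($code from $url)"; return 0 ;;
    esac
    sleep 5
  done
  echo "Tomcat did not answer at $url" >&2
  return 1
}
```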

Step 2

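DNS: Route 53

Create a hosted zone

Open the Route 53 service and go to the Hosted zones section. Create a private hosted zone (this guide uses vprofile.in) and associate it with the VPC your instances run in.

Use the same names that the application.properties file expects. If you change a name here, change it in application.properties as well.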

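Create an A record for db01 pointing to the private IP of the MySQL instance

Create an A record for mc01 pointing to the private IP of the Memcached instance

Create an A record for rmq01 pointing to the private IP of the RabbitMQ instance

Create an A record for app01 pointing to the private IP of the Tomcat instance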
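The same records can be created with `aws route53 change-resource-record-sets`, which takes a JSON change batch. This sketch assumes you already have the hosted zone ID and the instances' private IPs (both placeholders here); the TTL of 300 seconds is a choice, not a requirement, and `UPSERT` creates the record or updates it if it already exists.

```bash
#!/bin/bash
# Sketch: create (or update) an A record with the AWS CLI.
# The hosted zone ID and private IPs are placeholders to fill in.

# Print a Route 53 change batch that upserts one A record.
change_batch() {
  local fqdn=$1 ip=$2
  printf '{"Changes":[{"Action":"UPSERT","ResourceRecordSet":{"Name":"%s","Type":"A","TTL":300,"ResourceRecords":[{"Value":"%s"}]}}]}\n' "$fqdn" "$ip"
}

upsert_a_record() {
  local zone_id=$1 fqdn=$2 ip=$3
  aws route53 change-resource-record-sets --hosted-zone-id "$zone_id" \
    --change-batch "$(change_batch "$fqdn" "$ip")"
}
```

For example: `upsert_a_record Z0EXAMPLE db01.vprofile.in 172.31.10.5`, then repeat for mc01, rmq01 and app01.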

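Then SSH into the app01 instance and check that name resolution works:

```bash
ping -c 4 db01.vprofile.in
```

The first line of output shows the IP the name resolves to. The replies themselves may time out, because the backend security group does not allow ICMP from app01. Verify every record you created the same way.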
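A check that also exercises the security group rules resolves each name and opens a TCP connection to the service port. This is a sketch for app01 that uses `getent` (the system resolver, so it sees the private hosted zone), bash's `/dev/tcp`, and coreutils `timeout`; the host:port pairs are the ones this guide uses.

```bash
#!/bin/bash
# Sketch: from app01, check that each backend name resolves and that its
# port accepts TCP connections. Arguments are host:port pairs.
check_backends() {
  local entry host port ip failed=0
  for entry in "$@"; do
    host=${entry%:*} port=${entry##*:}
    # getent uses the system resolver, so it sees the private hosted zone
    ip=$(getent hosts "$host" | awk '{print $1; exit}')
    if [ -z "$ip" ]; then
      echo "FAIL $host does not resolve"
      failed=1
      continue
    fi
    # bash opens a TCP socket for /dev/tcp/HOST/PORT; timeout caps the wait
    if timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$ip" "$port" 2>/dev/null; then
      echo "OK   $host ($ip) port $port"
    else
      echo "FAIL $host ($ip) port $port unreachable"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage: `check_backends db01.vprofile.in:3306 mc01.vprofile.in:11211 rmq01.vprofile.in:5672`. A resolvable name with an unreachable port points at the backend security group rules.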

Step 3

Build and Deploy the Artifact

Create a bucket

Go to Amazon S3

Click Create bucket (bucket names must be globally unique)

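Go to the IAM service

Create a user

Give it a name, attach the AmazonS3FullAccess policy, and create the user

Create an access key for this user; you will use it to configure the AWS CLI on your own machine (aws configure)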

Create a role

Go to the IAM service

Click Create role and choose AWS service > EC2 as the trusted entity

Attach the AmazonS3FullAccess policy

Give the role a name and click Create role

Now attach this role to the app01 EC2 instance

Go to EC2 Instances and select vprofile-app01

Click Actions

Click Security

Click Modify IAM role

Select the role you created and click Update IAM role
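For reference, the role steps map to a handful of IAM CLI calls. This is a sketch with a hypothetical role name and a placeholder instance ID; unlike the console, the CLI does not create the instance profile for you, so it is created explicitly before being associated with app01.

```bash
#!/bin/bash
# Sketch: create the S3 role and attach it to app01 from the CLI.
# The role name and instance ID are hypothetical placeholders.
attach_s3_role() {
  local role=$1 instance_id=$2 trust
  # Trust policy that lets EC2 assume the role
  trust='{"Version":"2012-10-17","Statement":[{"Effect":"Allow","Principal":{"Service":"ec2.amazonaws.com"},"Action":"sts:AssumeRole"}]}'
  aws iam create-role --role-name "$role" --assume-role-policy-document "$trust" || return 1
  aws iam attach-role-policy --role-name "$role" \
    --policy-arn arn:aws:iam::aws:policy/AmazonS3FullAccess || return 1
  # EC2 receives a role through an instance profile; the console creates one
  # for you, the CLI does not.
  aws iam create-instance-profile --instance-profile-name "$role" || return 1
  aws iam add-role-to-instance-profile --instance-profile-name "$role" --role-name "$role" || return 1
  aws iam wait instance-profile-exists --instance-profile-name "$role" || return 1
  aws ec2 associate-iam-instance-profile --instance-id "$instance_id" \
    --iam-instance-profile Name="$role"
}
```

For example: `attach_s3_role vprofile-s3-role i-0123456789abcdef0`.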

In your copy of the source code, update src/main/resources/application.properties so the hostnames match the Route 53 records you created

Save the file
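One way to make that edit from the command line is a small `sed` script. This sketch assumes the host settings live in `jdbc.url`, `memcached.active.host` and `rabbitmq.address`, as in vprofile-project (check your copy first). It rewrites them to `db01`, `mc01` and `rmq01` under the zone you pass, leaves every other line alone, and keeps a `.bak` backup.

```bash
#!/bin/bash
# Sketch: point application.properties at the Route 53 names. Assumes the
# vprofile keys jdbc.url, memcached.active.host and rabbitmq.address.
use_route53_names() {
  local props=${1:-src/main/resources/application.properties} zone=${2:-vprofile.in}
  # -i.bak edits in place and keeps a backup (works with GNU and BSD sed)
  sed -i.bak -E \
    -e "s#^(jdbc\.url=jdbc:mysql://)[^:/]+#\1db01.${zone}#" \
    -e "s#^(memcached\.active\.host=).*#\1mc01.${zone}#" \
    -e "s#^(rabbitmq\.address=).*#\1rmq01.${zone}#" \
    "$props"
}
```

Running it twice is harmless, since each substitution sets the value outright rather than appending to it.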

Install Maven 3.9.9 on your system

Install Java 17 on your system (adjust the version if your project needs a different one)

Install the AWS CLI

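Package the application from the project root:

```bash
mvn clean package
```

Copy the resulting WAR into Tomcat's webapps directory as ROOT.war (writing there requires root, so use sudo):

```bash
sudo cp /home/ubuntu/vprofile-project/target/vprofile-v2.war /var/lib/tomcat10/webapps/ROOT.war
```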
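The flow of execution hands the artifact over through S3: upload from your machine, download on the Tomcat instance. This sketch uses a hypothetical bucket name, assumes the AWS CLI is installed on app01 (its credentials come from the attached IAM role), and removes Tomcat's default ROOT application so the new ROOT.war is what gets served at `/`.

```bash
#!/bin/bash
# Sketch of the S3 hand-off. The bucket name is a placeholder; use your
# own globally unique bucket.

# On your workstation (AWS CLI configured with the IAM user's access key):
upload_artifact() {
  local bucket=$1 war=${2:-target/vprofile-v2.war}
  aws s3 cp "$war" "s3://$bucket/"
}

# On app01, run as root (e.g. inside "sudo -i"). WEBAPPS can override the
# Tomcat webapps directory.
deploy_artifact() {
  local bucket=$1 war=${2:-vprofile-v2.war}
  local webapps=${WEBAPPS:-/var/lib/tomcat10/webapps}
  aws s3 cp "s3://$bucket/$war" "/tmp/$war" || return 1
  systemctl stop tomcat10
  # Remove the default ROOT app so the new ROOT.war is deployed at "/"
  rm -rf "$webapps/ROOT"
  cp "/tmp/$war" "$webapps/ROOT.war" || return 1
  systemctl start tomcat10
}
```

For example, `upload_artifact my-vprofile-artifacts` locally, then `deploy_artifact my-vprofile-artifacts` on app01.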

Create a target group

In the EC2 console, open Target Groups and click Create target group

Choose Instances as the target type, give it a name, and set the port to 8080

Under Advanced health check settings, override the health check port to 8080

Click Next

Select the app01 instance

Click Include as pending below

Verify that the port is 8080 and click Create target group
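The target group steps translate directly to two `elbv2` calls. This sketch uses a hypothetical target group name and placeholder VPC and instance IDs, and prints the target group ARN, which the load balancer listener needs next.

```bash
#!/bin/bash
# Sketch: create the Tomcat target group and register app01 in it.
# Names, VPC ID and instance ID are placeholders.
create_app_target_group() {
  local vpc_id=$1 instance_id=$2 tg_arn
  tg_arn=$(aws elbv2 create-target-group --name vprofile-app-TG \
    --protocol HTTP --port 8080 --vpc-id "$vpc_id" --target-type instance \
    --health-check-port 8080 \
    --query 'TargetGroups[0].TargetGroupArn' --output text) || return 1
  aws elbv2 register-targets --target-group-arn "$tg_arn" \
    --targets "Id=$instance_id,Port=8080" >/dev/null || return 1
  echo "$tg_arn"
}
```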

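Create a load balancer

Click Create load balancer

Choose Application Load Balancer

Give it a name

Select all Availability Zones

Remove the default security group and select the ELB security group

Under Listeners and routing, forward traffic to the target group created above (for HTTPS, add a 443 listener with your ACM certificate)

Click Create load balancer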
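Scripted, the load balancer takes one call plus one per listener. This sketch assumes you already have the ELB security group ID, the target group ARN, an ACM certificate ARN and one subnet per Availability Zone (all placeholders); the load balancer name is hypothetical. It finishes by printing the ALB's DNS name, which is the endpoint to map to your website name in Hostinger DNS, for example as a CNAME record.

```bash
#!/bin/bash
# Sketch: create the ALB and its HTTP/HTTPS listeners with the AWS CLI.
# Subnet IDs, security group ID and ARNs are placeholders.
create_app_lb() {
  local sg=$1 tg_arn=$2 cert_arn=$3; shift 3   # remaining args: subnet IDs
  local lb_arn
  lb_arn=$(aws elbv2 create-load-balancer --name vprofile-prod-elb \
    --type application --scheme internet-facing \
    --subnets "$@" --security-groups "$sg" \
    --query 'LoadBalancers[0].LoadBalancerArn' --output text) || return 1
  # HTTP:80 forwards to the Tomcat target group
  aws elbv2 create-listener --load-balancer-arn "$lb_arn" --protocol HTTP --port 80 \
    --default-actions "Type=forward,TargetGroupArn=$tg_arn" >/dev/null || return 1
  # HTTPS:443 terminates TLS with the ACM certificate
  aws elbv2 create-listener --load-balancer-arn "$lb_arn" --protocol HTTPS --port 443 \
    --certificates "CertificateArn=$cert_arn" \
    --default-actions "Type=forward,TargetGroupArn=$tg_arn" >/dev/null || return 1
  aws elbv2 describe-load-balancers --load-balancer-arns "$lb_arn" \
    --query 'LoadBalancers[0].DNSName' --output text
}
```

For example: `create_app_lb sg-elb-id "$TG_ARN" "$CERT_ARN" subnet-aaa subnet-bbb subnet-ccc`.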

✅Result

Let’s build an amazing DevOps & Cloud community together! 💡👩💻

🔗 Connect with Me Everywhere! 🌍

📌 LinkedIn: Divya Satpute

📌 Instagram (DevOps Content): @teacode1122

📌 GitHub: https://github.com/divyasatpute/vprofile-awsliftshift-project

📌 Hashnode (Technical Blogs): https://learnwithdivya.hashnode.dev/

📌 YouTube (Teacode - DevOps Learning): https://www.youtube.com/@Teacode-1122

💬 Let’s connect, collaborate, and grow together in the DevOps & Cloud world! 🚀✨


Written by

Divya vasant satpute

I'm a seasoned DevOps engineer 🛠️ with a knack for optimizing software development lifecycles and infrastructure operations. 💡 Specializing in cutting-edge DevOps practices and proficient in tools like Docker, Kubernetes, Ansible, and more, I'm committed to driving digital transformation and empowering teams to deliver high-quality software with speed and confidence. 💻