Understanding CNN with Project

Omkar Kasture

In this blog, we’ll understand the complete working of CNNs and their application to a real-world problem.

Understanding Convolutional Neural Networks (CNNs)

Motivation Behind CNNs

Why do we need CNNs over traditional artificial neural networks (ANNs)? CNNs excel in tasks like image and speech recognition because they consider spatial relationships between pixels, unlike ANNs, which treat each pixel as an independent input. CNNs have even surpassed human accuracy in some image recognition tasks!

The Limitation of Artificial Neural Networks

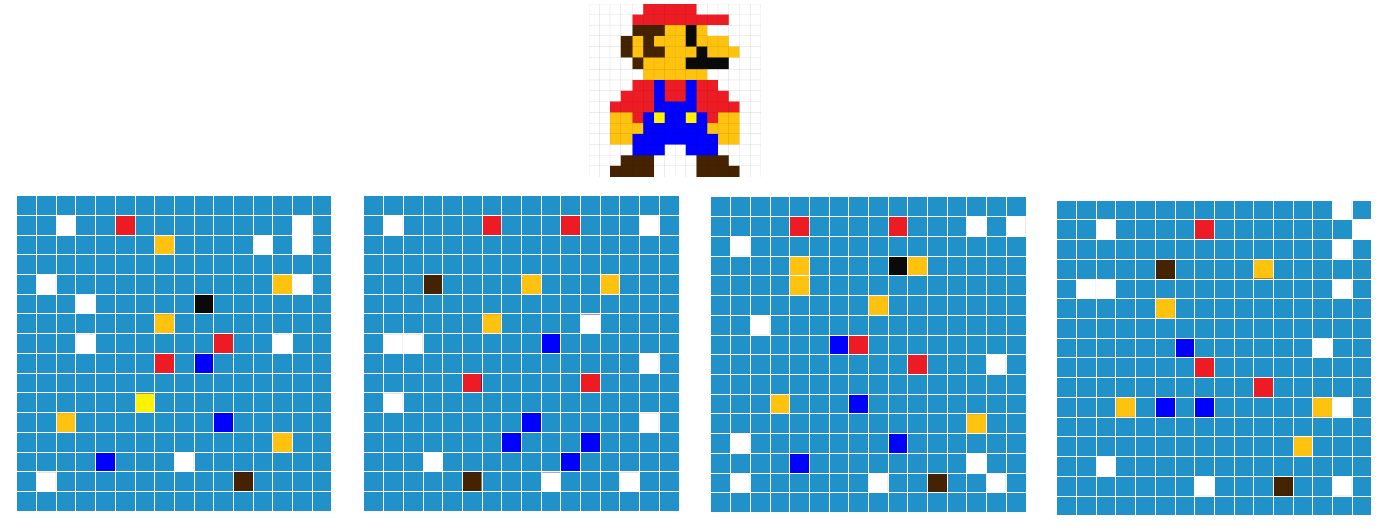

In a traditional ANN, every pixel of an image is fed into the first layer as an individual feature. For a 16x16 pixel image, this means 256 inputs. However, without considering the relationship between neighboring pixels, the model struggles to recognize objects. Imagine trying to identify Mario if only 25 random pixels are revealed—it’s nearly impossible because we lose critical spatial information!
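A short plain-Python sketch of what "feeding every pixel as an individual feature" means: the 16x16 grid is flattened into a 256-element vector. The pixel values are made-up placeholders. Pixels that touch vertically on the grid end up 16 positions apart in the vector, and a fully connected layer has no built-in notion that they were ever neighbours.

```python
# A 16x16 grayscale image stored as a list of rows (values are placeholders).
SIZE = 16
image = [[row * SIZE + col for col in range(SIZE)] for row in range(SIZE)]

# An ANN's first layer sees the image as one flat vector of features.
flat = [pixel for row in image for pixel in row]

def flat_index(row, col, width=SIZE):
    """Position of pixel (row, col) in the flattened vector (row-major order)."""
    return row * width + col

print(len(flat))                              # 256 inputs
# Horizontal neighbours stay adjacent...
print(flat_index(5, 8) - flat_index(5, 7))    # 1
# ...but vertical neighbours end up 16 positions apart.
print(flat_index(6, 7) - flat_index(5, 7))    # 16
```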

How CNNs Overcome This Limitation

CNNs mimic the human visual system by detecting patterns in small groups of pixels rather than treating each pixel independently. Our brain processes visual information hierarchically—some neurons detect edges, others recognize shapes, and higher-level neurons combine these features into a complete object. CNNs use a similar hierarchical approach.

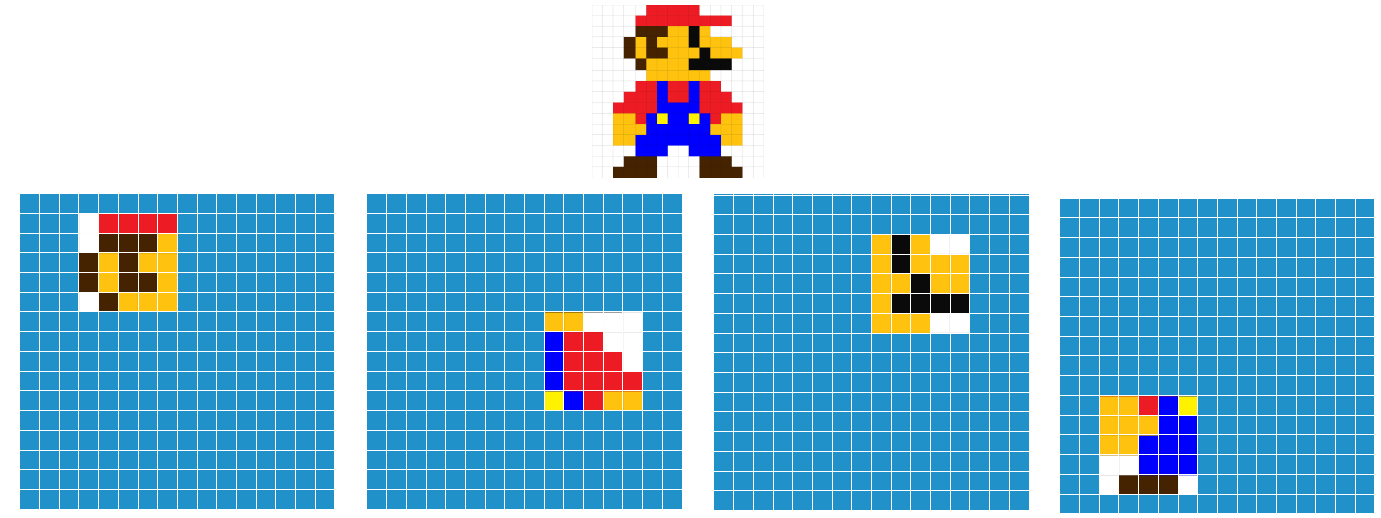

Instead of analyzing individual pixels, CNNs scan groups of pixels. This allows the network to recognize features like Mario’s eyes, mustache, and outfit. Unlike randomly chosen pixels, grouped pixels provide meaningful visual cues, improving the network’s ability to classify objects accurately.

Convolutional Layer

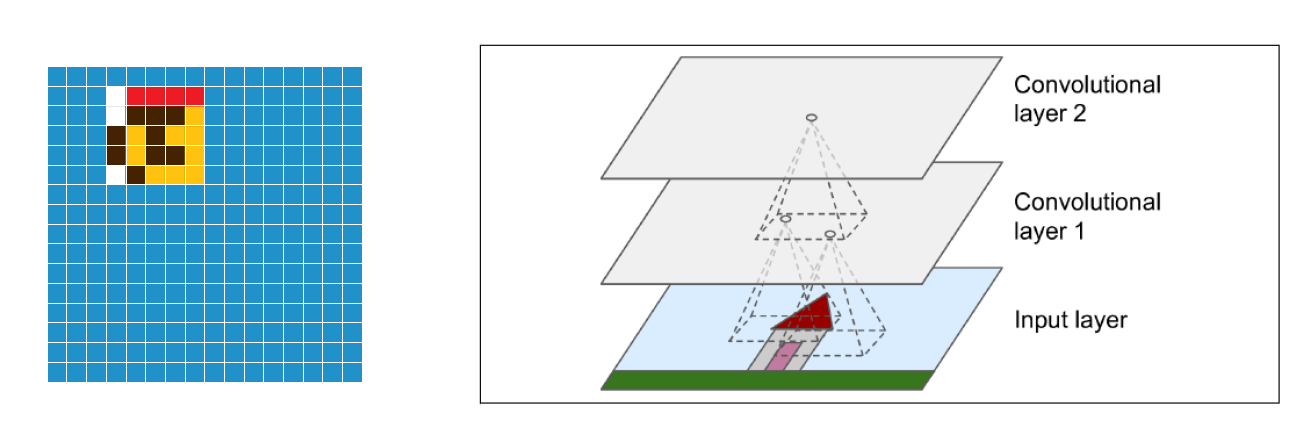

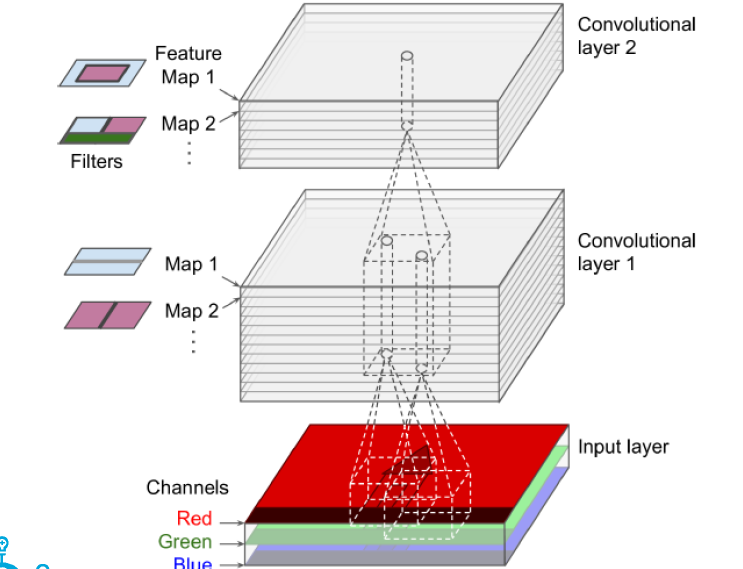

The key component of CNNs is the convolutional layer, which applies filters (small matrices) to scan regions of an image and extract features like edges, textures, or complex patterns. Each neuron in a convolutional layer focuses on a small receptive field (a region of pixels) and processes information from that region.

For example:

A 5x5 receptive field captures a small portion of an image.

A 3x3 receptive field is commonly used for detecting finer details.

These filters slide across the image, creating a feature map that highlights important characteristics.

So in the first convolutional layer (Convolutional Layer 1), the first neuron receives information only from the pixels inside the first rectangular box, and the second neuron receives information only from the pixels inside the second rectangle.

Similarly, each neuron in the second convolutional layer (Convolutional Layer 2) takes as input the outputs of all the Layer 1 neurons inside a small rectangle.

This architecture allows the network to concentrate on lower-level features in the first layer and then assemble these features into larger, higher-level features in the next hidden layer and so on.
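To make the "larger, higher-level features" idea concrete, here is a small calculation sketch of how many input pixels one neuron can "see" as convolutional layers are stacked. The layer configurations are illustrative assumptions, not taken from a specific model; each layer is described only by its window (kernel) size and stride.

```python
def receptive_fields(layers):
    """Return the receptive field (in input pixels) of one neuron after each layer.

    `layers` is a list of (kernel_size, stride) pairs, one per convolutional layer.
    """
    field, jump = 1, 1  # start from a single pixel; adjacent inputs are 1 pixel apart
    sizes = []
    for kernel, stride in layers:
        # A new neuron spans `kernel` neurons of the previous layer, which are
        # `jump` input pixels apart, so its field grows by (kernel - 1) * jump.
        field += (kernel - 1) * jump
        jump *= stride
        sizes.append(field)
    return sizes

print(receptive_fields([(3, 1), (3, 1), (3, 1)]))  # [3, 5, 7]
print(receptive_fields([(3, 2), (3, 2)]))          # [3, 7]
```

Three stacked 3x3 layers with stride 1 already cover a 7x7 patch of the original image, which is how small windows in early layers build up to larger features deeper in the network.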

Stride

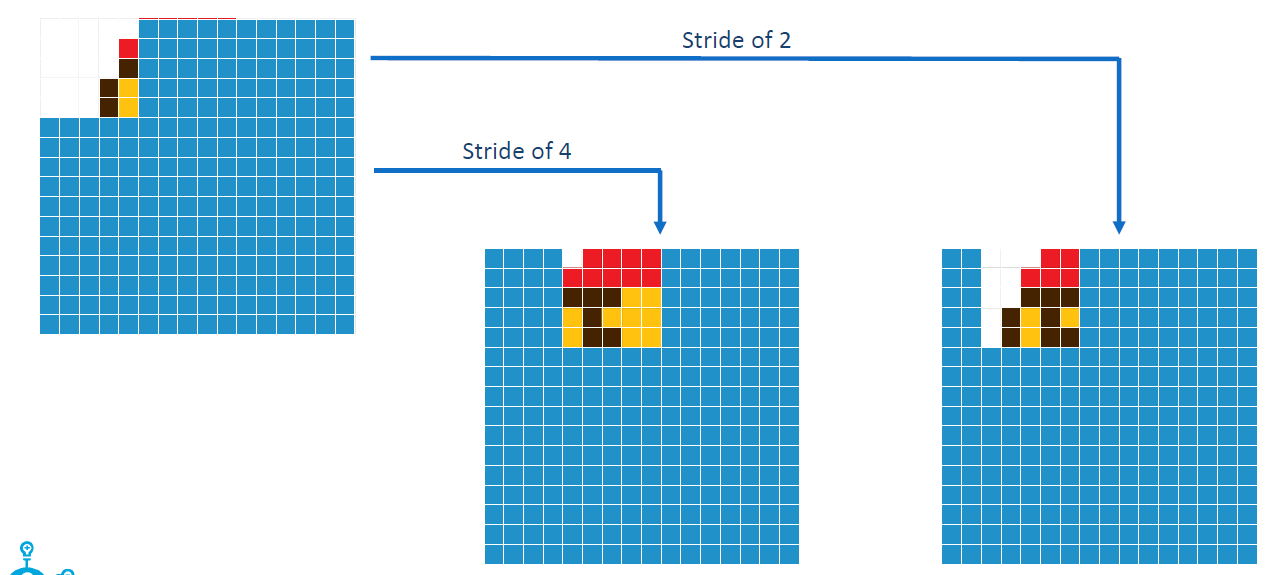

The first neuron in the convolutional layer looks at a specific set of pixels. The next neuron in the convolutional layer will have a slightly shifted receptive field. This shift in the view from one neuron to another is called the stride.

For example, if the next neuron's window is shifted two pixels to the right, we say the stride is two: every neuron in the convolutional layer has its window shifted two pixels to the right of the previous one. Similarly, a stride of four means each window jumps four pixels from the previous one.

Using a small stride results in significant overlap between receptive fields, while a larger stride reduces overlap. A small stride leads to more neurons in the upper layer, whereas a larger stride requires fewer neurons.

Thus, the stride determines the size of the upper layer, the amount of overlap between neighboring receptive fields, and how those receptive fields are spaced across the image.
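A one-dimensional sketch of that trade-off, assuming no padding (covered in the next section) and an illustrative 16-pixel-wide row scanned by a 5-pixel window. The helper name `conv_output_length` is hypothetical.

```python
def conv_output_length(input_len, kernel, stride):
    """Number of window positions (neurons) along one dimension, with no padding."""
    return (input_len - kernel) // stride + 1

width, kernel = 16, 5
for stride in (1, 2, 4):
    neurons = conv_output_length(width, kernel, stride)
    overlap = max(kernel - stride, 0)  # pixels shared by neighbouring windows
    print(f"stride={stride}: {neurons} neurons per row, overlap={overlap} px")
```

For these values, strides of 1, 2 and 4 give 12, 6 and 3 neurons per row, with 4, 3 and 1 overlapping pixels respectively: a larger stride means less overlap and a smaller upper layer.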

Padding

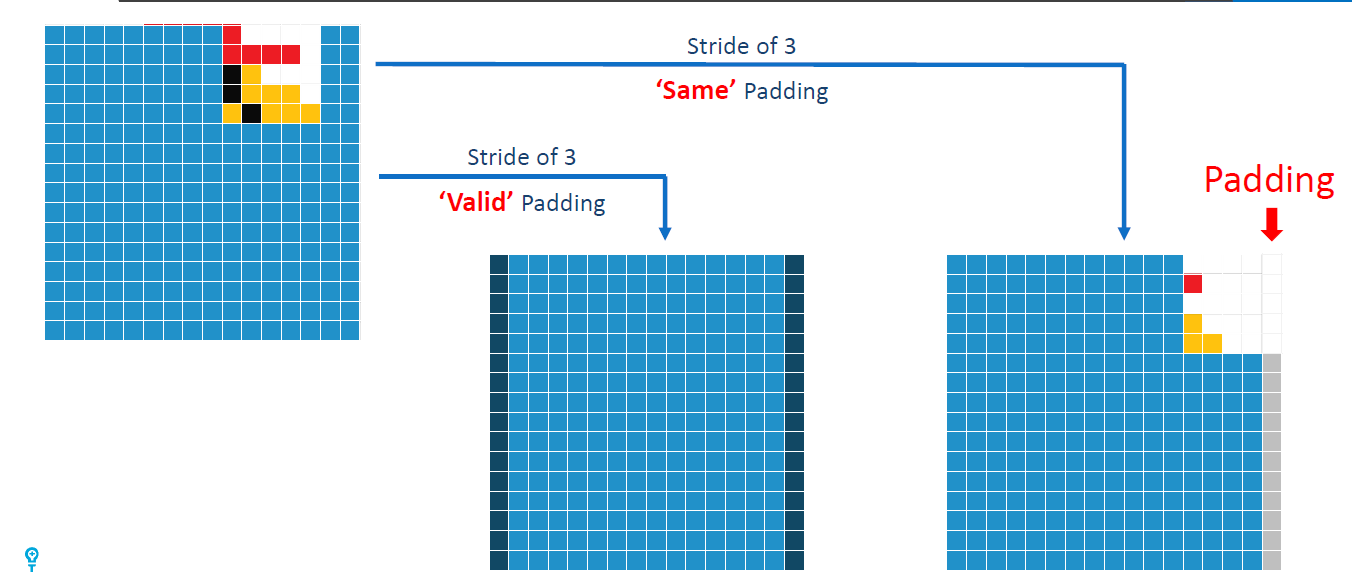

One problem that arises with large stride values is that we may not be able to cover the entire image.

For example, if we use a 5×5 window moving with a stride of 3, at some point, there may not be enough remaining pixels to fully accommodate the window.

To address this, we have two options:

Valid Padding: We ignore extra pixels at the border that cannot fit within the window, effectively reducing the image size.

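Same Padding: We add extra rows and columns of dummy pixels (usually zeros) around the border so that the window can cover the entire image and no border pixels are dropped. With a stride of 1, this keeps the output the same width and height as the input, which is where the name comes from.

Many libraries default to "valid" padding (Keras's Conv2D, for example, uses padding="valid" unless told otherwise). If border pixels are important, "same" padding can be used to retain those features.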
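A sketch of the arithmetic behind the two options for one dimension, using the 5×5 window and stride of 3 from the example above on an assumed 16-pixel-wide input. The "same" formula follows the TensorFlow/Keras convention (output size is ceil(n / stride), and any odd leftover padding goes on the right/bottom); other libraries may specify padding differently, for example as an explicit number of pixels. The function names are hypothetical.

```python
import math

def valid_padding(n, kernel, stride):
    """'valid': only full windows. Returns (outputs, ignored border pixels)."""
    outputs = (n - kernel) // stride + 1
    covered = (outputs - 1) * stride + kernel  # pixels reached by some window
    return outputs, n - covered

def same_padding(n, kernel, stride):
    """'same' (TensorFlow-style). Returns (outputs, pad_before, pad_after)."""
    outputs = math.ceil(n / stride)
    total = max((outputs - 1) * stride + kernel - n, 0)
    return outputs, total // 2, total - total // 2

# The 5x5 window with stride 3 from the text, on a 16-pixel-wide row.
print(valid_padding(16, 5, 3))  # (4, 2): 4 windows, last 2 pixels ignored
print(same_padding(16, 5, 3))   # (6, 2, 2): 6 windows after 2 zeros per side
```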

Filters

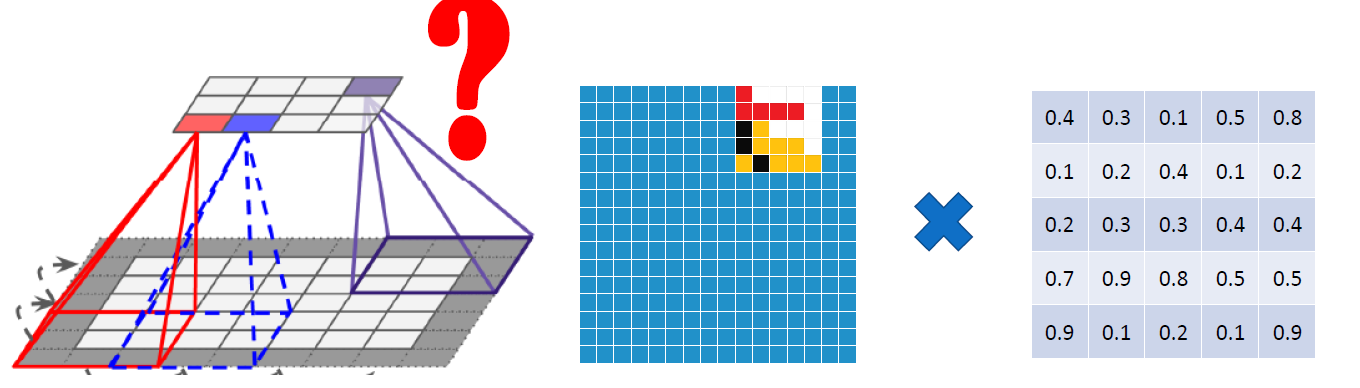

So far, we've discussed that a cell in a Convolutional layer receives information from a group of cells in the previous layer. For example, a specific cell (marked in red) in the Convolutional layer gathers data from nine neighboring cells within a highlighted red region. But what does this process actually mean?

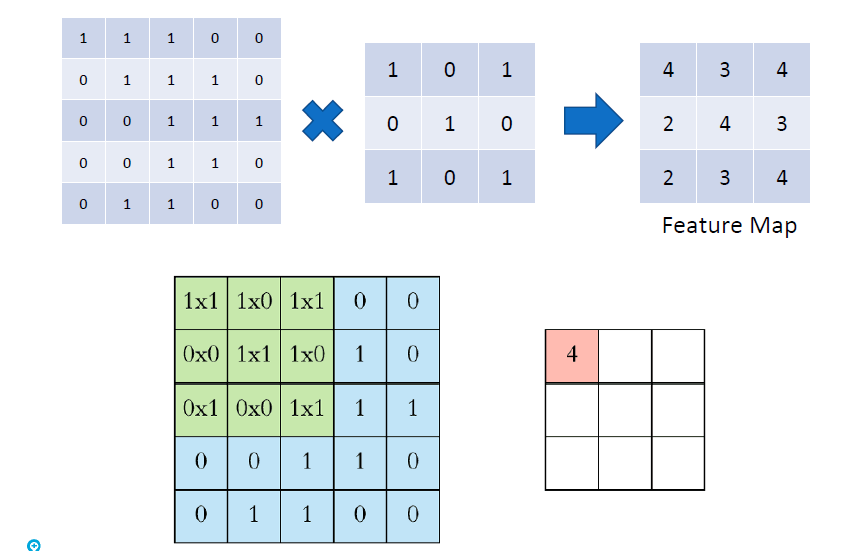

Since each cell in a Convolutional layer represents multiple pixels from the original image, we need a way to condense those pixel values into a single representative value. This is done using a filter (also called a kernel): a matrix with the same dimensions as the receptive field.

For instance, if the receptive window is 5×5, the filter will also be 5×5. Similarly, a 3×3 window will have a 3×3 filter.

How Do Filters Work?

Each pixel within the receptive window is multiplied by the corresponding value in the filter. These products are then summed up to produce a single value that represents the entire window.

This process is repeated across the entire image to create a feature map—a transformed representation of the input where certain features are emphasized.
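A minimal plain-Python sketch of the multiply-and-sum step, assuming a square filter, a stride of 1 and "valid" padding. The 3x3 vertical-edge filter is hand-picked purely for illustration; a trained network learns its own values. Like most deep-learning libraries, this computes cross-correlation, meaning the filter is not flipped before sliding.

```python
def convolve2d(image, kernel):
    """Slide a square `kernel` over `image` (stride 1, 'valid') and return the feature map."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply each pixel in the window by the matching filter value, then sum.
            total = sum(image[i + a][j + b] * kernel[a][b]
                        for a in range(k) for b in range(k))
            row.append(total)
        feature_map.append(row)
    return feature_map

# A 5x5 image: dark (0) on the left, bright (9) on the right.
image = [[0, 0, 9, 9, 9] for _ in range(5)]

# A hand-picked 3x3 vertical-edge filter.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

for row in convolve2d(image, vertical_edge):
    print(row)  # prints [27, 27, 0] on each of the three rows
```

The large values (27) appear wherever the 3x3 window straddles the dark-to-bright boundary, and the output drops to 0 where the window sits entirely inside the uniform bright region: the feature map highlights the edge.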

Learning Filter Values

Now, an important question arises: How do we determine the values inside the filter?

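The great news is that we don’t have to choose them manually! The neural network learns these values during training: the filter values typically start out random and are adjusted step by step, via backpropagation and gradient descent, so that the network's predictions improve. As the model processes more data, the filters become tuned to recognize useful patterns.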
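As a toy-scale illustration of "learning" a filter, the sketch below hides a known 3-tap filter inside some random training data and lets plain gradient descent on the mean squared error recover it, starting from all zeros. Real CNNs do the same thing at far larger scale, in 2-D, with gradients computed by backpropagation through many layers. The 1-D signal, learning rate and step count are arbitrary choices for this demo.

```python
import random

def conv1d(signal, weights):
    """'Valid' 1-D cross-correlation: one output per full window."""
    k = len(weights)
    return [sum(signal[i + j] * weights[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

random.seed(0)
signal = [random.uniform(-1, 1) for _ in range(200)]
true_filter = [-1.0, 0.0, 1.0]        # the "unknown" filter we hope to recover
target = conv1d(signal, true_filter)  # training targets

weights = [0.0, 0.0, 0.0]             # start from an uninformative filter
learning_rate = 0.1
for step in range(500):
    output = conv1d(signal, weights)
    errors = [o - t for o, t in zip(output, target)]
    # Gradient of the mean squared error with respect to each filter weight.
    grads = [2 * sum(e * signal[i + j] for i, e in enumerate(errors)) / len(errors)
             for j in range(len(weights))]
    weights = [w - learning_rate * g for w, g in zip(weights, grads)]

print([round(w, 3) for w in weights])  # close to the hidden filter [-1, 0, 1]
```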

Layers of Feature Extraction

A Convolutional layer consists of several feature maps. These maps are then passed to the next layer, where higher-level features are extracted by combining information from all previous feature maps.

For example, a cell in Convolutional Layer 2 does not just receive data from a single feature map—it processes all feature maps from the previous layer. This allows the network to recognize more complex patterns by combining different features.
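A minimal plain-Python sketch of how one neuron in Convolutional Layer 2 combines several Layer 1 feature maps, assuming stride 1, no padding and made-up values. The filter has one 2-D slice per input map; each slice is applied to its own map, and the results are added into a single number. Real layers usually also add a learned bias, which is omitted here.

```python
def conv_single(fmap, kernel):
    """Multiply-and-sum a square kernel over one feature map (stride 1, 'valid')."""
    k = len(kernel)
    return [[sum(fmap[i + a][j + b] * kernel[a][b] for a in range(k) for b in range(k))
             for j in range(len(fmap[0]) - k + 1)]
            for i in range(len(fmap) - k + 1)]

def conv_multi(feature_maps, kernels):
    """One output map: apply each kernel slice to its own input map, then add them up."""
    per_map = [conv_single(f, k) for f, k in zip(feature_maps, kernels)]
    return [[sum(m[i][j] for m in per_map) for j in range(len(per_map[0][0]))]
            for i in range(len(per_map[0]))]

# Two 3x3 feature maps produced by a previous layer (illustrative values).
map_a = [[1, 2, 0],
         [0, 1, 3],
         [2, 0, 1]]
map_b = [[0, 1, 1],
         [1, 0, 2],
         [3, 1, 0]]

# One 2x2 slice of the filter per input map.
kernel_a = [[1, 0], [0, 1]]
kernel_b = [[0, 1], [1, 0]]

print(conv_multi([map_a, map_b], [kernel_a, kernel_b]))  # [[4, 6], [3, 5]]
```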

Channels

Images can be either grayscale (black and white) or colored. In a grayscale image, each pixel holds a single value representing brightness, typically ranging from 0 (black) to 255 (white), with shades of gray in between.

In contrast, a colored image has three values per pixel—Red (R), Green (G), and Blue (B). By mixing them in different intensities (ranging from 0 to 255), various colors are created.

A color image therefore consists of three separate layers, known as channels: one for red, one for green, and one for blue.
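Because a color image has three channels, each filter in the first convolutional layer also gets a depth of three: a 3×3 filter over an RGB image is really 3×3×3 weights, one 3×3 slice per channel, combined the same way as the multiple feature maps described above. The sketch below counts the resulting trainable parameters. The 32-filter layer is an illustrative choice, and the formula assumes one bias per filter, which is the common default (for example, Keras's Conv2D has use_bias=True by default).

```python
def conv_params(kernel_size, in_channels, filters, use_bias=True):
    """Trainable parameters in a 2-D convolutional layer with square kernels.

    Each filter has kernel_size x kernel_size weights per input channel,
    plus one bias if use_bias is True.
    """
    per_filter = kernel_size * kernel_size * in_channels + (1 if use_bias else 0)
    return per_filter * filters

print(conv_params(3, in_channels=1, filters=32))  # grayscale input: 320
print(conv_params(3, in_channels=3, filters=32))  # RGB input: 896
```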

Pooling Layer

A pooling layer in a CNN reduces the size of feature maps to lower computational cost and help reduce overfitting. It does this by summarizing regions of the input using functions like:

Max Pooling: Takes the highest value in each region, preserving dominant features.

Average Pooling: Computes the average of values in each region, smoothing the features.

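Pooling typically uses a 2×2 window with a stride of 2, which halves the feature map’s width and height. This lowers memory usage and computation, and reduces the number of trainable parameters in the layers that follow (the pooling layer itself has no trainable parameters). Max pooling is the more common choice because it keeps the strongest activation in each region while still reducing complexity.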
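A plain-Python sketch of both pooling functions with a 2×2 window and a stride of 2, applied to a made-up 4×4 feature map. The `pool2d` helper is hypothetical; like "valid" padding, it drops any partial windows at the border.

```python
def pool2d(fmap, size=2, stride=2, mode="max"):
    """Max or average pooling over size x size windows; partial windows are dropped."""
    out = []
    for i in range(0, len(fmap) - size + 1, stride):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[i + a][j + b] for a in range(size) for b in range(size)]
            if mode == "max":
                row.append(max(window))                 # keep the dominant value
            elif mode == "average":
                row.append(sum(window) / len(window))   # smooth the region
            else:
                raise ValueError(f"unknown pooling mode: {mode}")
        out.append(row)
    return out

fmap = [[1, 3, 2, 0],
        [5, 6, 1, 2],
        [0, 2, 9, 4],
        [1, 1, 3, 8]]

print(pool2d(fmap, mode="max"))      # [[6, 2], [2, 9]]
print(pool2d(fmap, mode="average"))  # [[3.75, 1.25], [1.0, 6.0]]
```

The 4×4 map shrinks to 2×2 in both cases. Max pooling keeps the strong responses (6 and 9) intact, while average pooling dilutes them with their weaker neighbours.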

Building a CNN Model for Fashion Categorization

Refer:

CNN project from scratch- Cats Vs Dogs Classification

Written by

Omkar Kasture

MERN Stack Developer, Machine learning & Deep Learning