Autogenerate semantic model from a OLTP source using Tabular Object Model (TOM) for a Fabric workspace

Sachin Nandanwar

Sachin Nandanwar

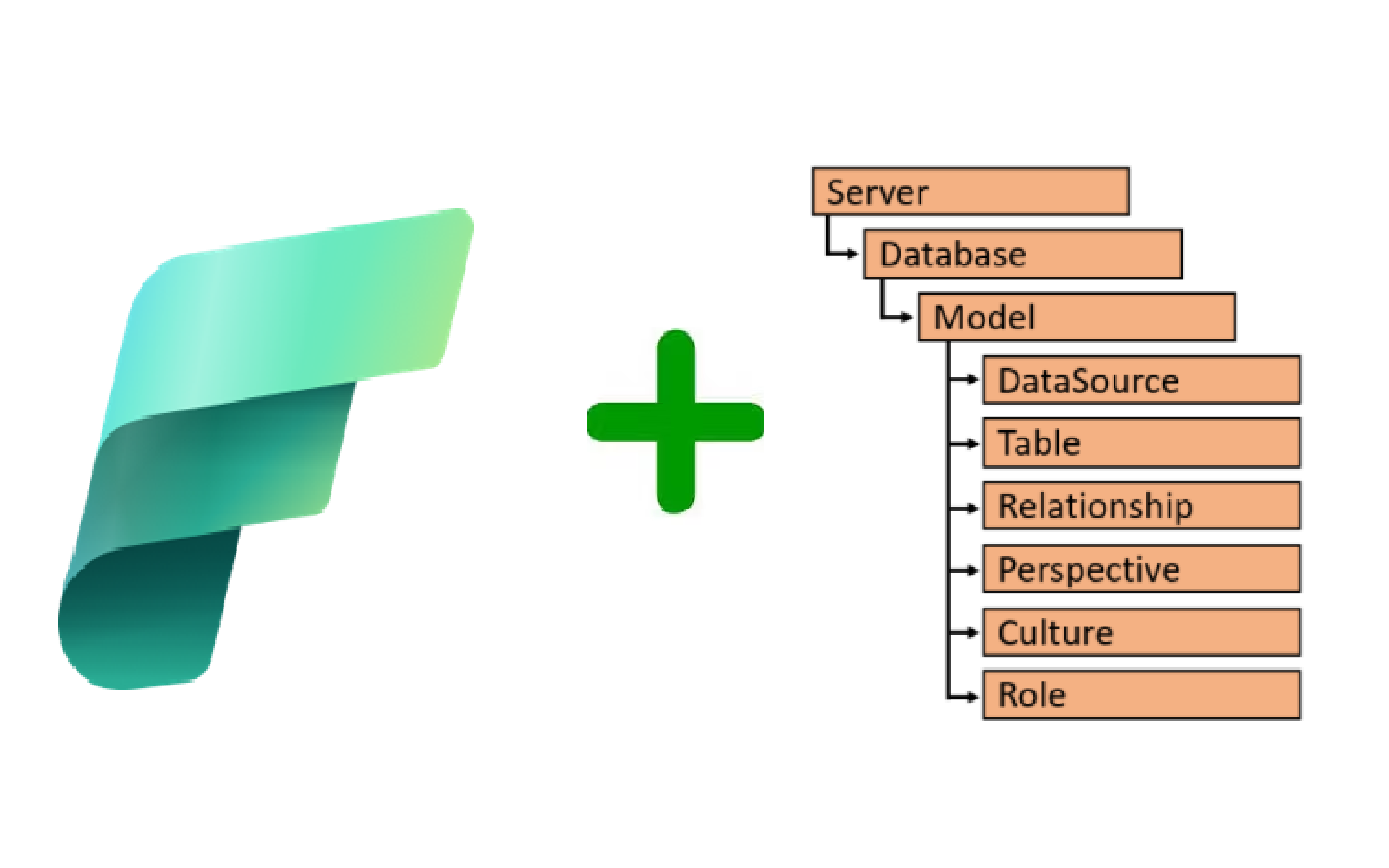

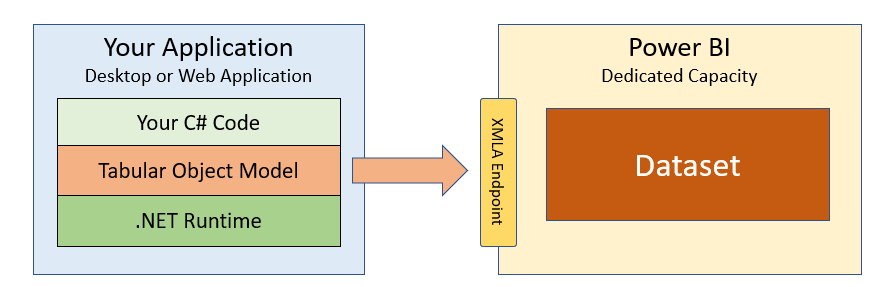

The Tabular Object Model (TOM) is a programmatic model used for interacting with tabular data models in Microsoft’s data tools such as SQL Server Analysis Services (SSAS), Azure Analysis Services and Power BI. It provides the fundamental building blocks for creating, defining and managing your semantic model in Power BI and Microsoft Fabric.

Its a .NET library that provides an abstract layer on top of the XMLA endpoint through which you can create and modify models directly in the Power BI Service/Fabric.

Image credit : Mircosoft

In essence with TOM, developers can create, manage, and manipulate tabular data structures like tables, columns, measures, hierarchies, relationships, and calculated columns. This entire process can be seamlessly automated.

In this article I will demonstrate on how to create a semantic model using star schema modelling technique from an AdventureWorks OLTP database. I will create relationship across the different entities, create hierarchies, measures and calculated columns all through TOM libraries and once the model is generated, the code will the auto deploy it to a Fabric workspace.

If you are a Python fan there are Python wrappers around the TOM object model in the sempy libraries. You can find them here.

In the upcoming articles I will demonstrate how to leverage TOM libraries to modify, validate and serialize TMSL and TMDL metadata of the semantic model.

If you want to skip the writeup you can watch the walkthrough here.

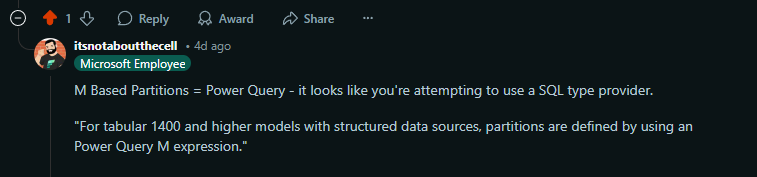

A brief note on the context of this article : So, I was facing an issue while coding the semantic model and no matter what I did, I could not resolve it. I ended up posting the issue on the Fabric’s Reditt forum. You can find the post here.

I would like to give a big shout out to the following response I received to the post on the Reddit forum which thankfully helped me resolve the issue.

So, essentially, what the above comment means is that for tabular versions above 1400you HAVE TO use M query to fetch the data from the source and the partitions have to be M based. SQL queries will NOT WORK. Also, Premium workspaces dont work for tabular versions 1400and below.

SetUp

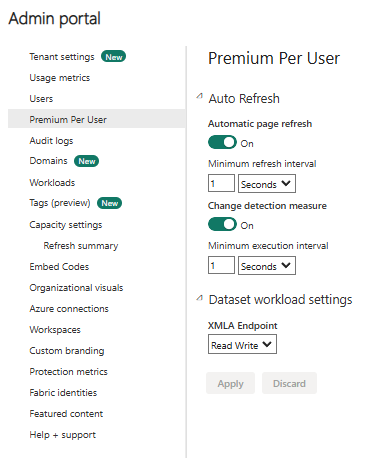

To get started, you will require your Fabric workspace where you intent to deploy the semantic model to run on a Premium license.

Once that is in place, enable the XMLA Read Write option. I am using a Premium Per User license.

To enable XMLA read write for Premium Per User license, goto Admin portal and select Premium per User option and then, under Data Workload settings select the Read Write option and Apply.

Next, create a new console application and Install the following Nuget packages

dotnet add package Microsoft.AnalysisServices.NetCore.retail.amd64 --version 19.61.3

dotnet add package Spectre.Console --version 0.47.0

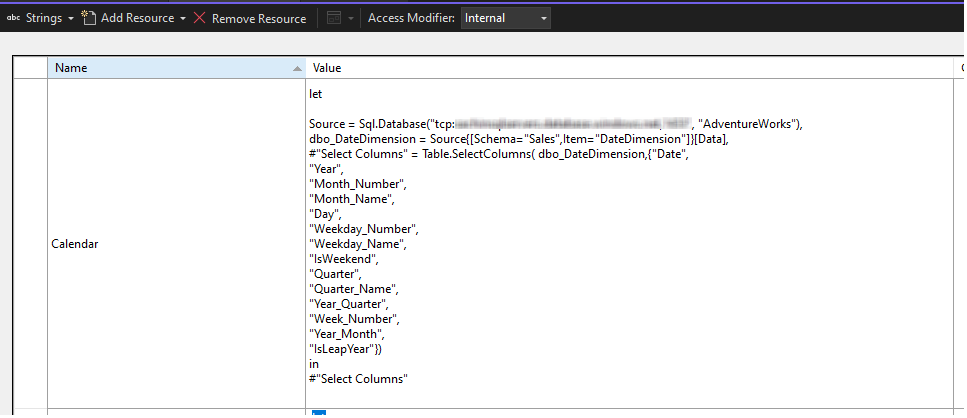

In the next step, use M queries to fetch the data from the your underlying source. As I was using a C# console application I leveraged the use of Resource files.

Basically resource files are physical dictionary objects that can store data in Key Value pair in XML format.

The image below is an example of an M query stored in a resource file of the console application that I used to fetch data from a Calendar table of the Adventureworks OLTP database.

I will be using the following tables from the OLTP database to create the star schema model

Sales.CustomerProduction.ProductProduction.ProductCategoryProduction.ProductSubCategorySales.SalesTerritorySales.SalesOrderDetailSales.SalesOrderHeaderSales.DateDimension

The first five tables above will serve as dimension tables.SalesOrderHeader and SaledOrdeDetail as fact tables.Sales.DateDimension is a calendar table that I created using the following script, that generates date ranges from the year 2005 to 2035.

CREATE TABLE [Sales].[DateDimension](

[Date] [datetime] NOT NULL,

[Year] [int] NULL,

[Month_Number] [int] NULL,

[Month_Name] [varchar](20) NULL,

[Day] [int] NULL,

[Weekday_Number] [int] NULL,

[Weekday_Name] [varchar](20) NULL,

[IsWeekend] [bit] NULL,

[Quarter] [int] NULL,

[Quarter_Name] [varchar](2) NULL,

[Year_Quarter] [varchar](10) NULL,

[Week_Number] [int] NULL,

[Year_Month] [varchar](7) NULL,

[IsLeapYear] [bit] NULL,

CONSTRAINT [PK__DateDime__77387D069AD224A3] PRIMARY KEY CLUSTERED

(

[Date] ASC

)WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF) ON [PRIMARY]

) ON [PRIMARY]

GO

WITH CTE AS (

SELECT CAST('2005-01-01' AS DATE) AS Date

UNION ALL

SELECT DATEADD(DAY, 1, Date)

FROM DateCTE

WHERE Date < '2035-12-31'

)

INSERT INTO [Sales].[DateDimension]

SELECT

Date,

YEAR(Date) AS Year,

MONTH(Date) AS Month_Number,

DATENAME(MONTH, Date) AS Month_Name,

DAY(Date) AS Day,

DATEPART(WEEKDAY, Date) AS Weekday_Number,

DATENAME(WEEKDAY, Date) AS Weekday_Name,

CASE

WHEN DATEPART(WEEKDAY, Date) IN (1, 7) THEN 1

ELSE 0

END AS IsWeekend,

DATEPART(QUARTER, Date) AS Quarter,

'Q' + CAST(DATEPART(QUARTER, Date) AS VARCHAR) AS Quarter_Name,

CAST(YEAR(Date) AS VARCHAR) + '-Q' + CAST(DATEPART(QUARTER, Date) AS VARCHAR) AS Year_Quarter,

DATEPART(WEEK, Date) AS Week_Number,

FORMAT(Date, 'yyyy-MM') AS Year_Month,

CASE

WHEN (YEAR(Date) % 4 = 0 AND YEAR(Date) % 100 <> 0) OR (YEAR(Date) % 400 = 0) THEN 1

ELSE 0

END AS IsLeapYear

FROM CTE

OPTION (MAXRECURSION 0);

Note: You can find repository of the entire code used in this article on Github here.

Code

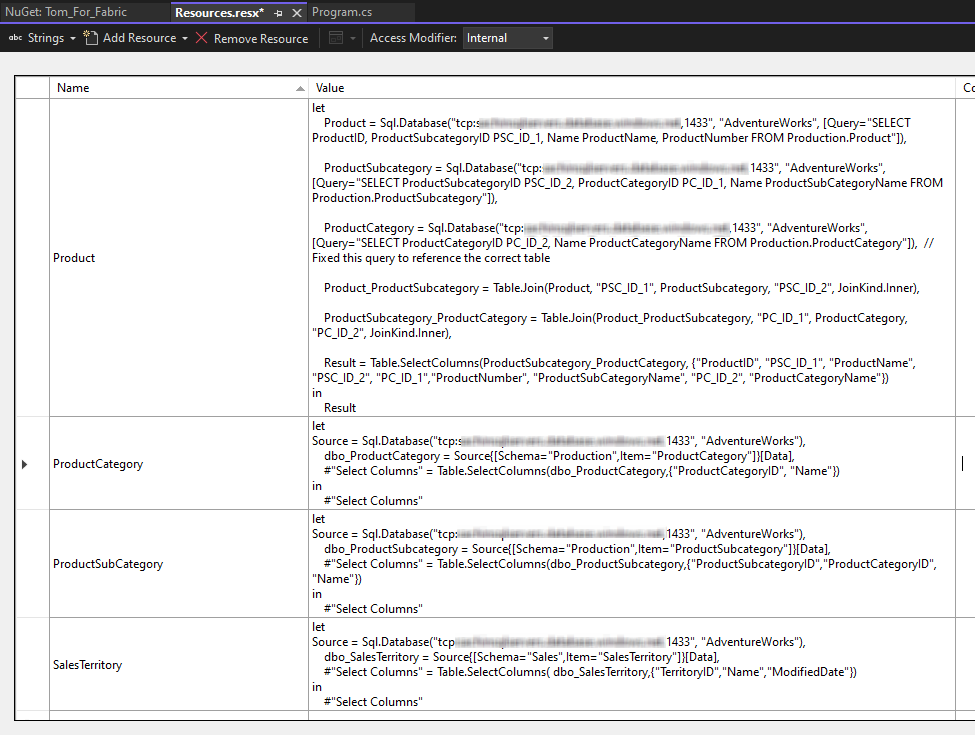

I used the following M queries to fetch the data from the source. These queries are stored in the Resource file(.resx) file of the console application.

Please replace yourserver in tcp:yourserver,1433 settings that you see below with your SQL server instance and the port number.

Resource File»

//Calendar table

let

Source = Sql.Database("tcp:yourserver,1433", "AdventureWorks"),

dbo_DateDimension = Source{[Schema="Sales",Item="DateDimension"]}[Data],

#"Select Columns" = Table.SelectColumns( dbo_DateDimension,{"Date",

"Year",

"Month_Number",

"Month_Name",

"Day",

"Weekday_Number",

"Weekday_Name",

"IsWeekend",

"Quarter",

"Quarter_Name",

"Year_Quarter",

"Week_Number",

"Year_Month",

"IsLeapYear"})

in

#"Select Columns"

//Customer table

let

SalesCustomer = Sql.Database("tcp:yourserver,1433", "AdventureWorks",

[Query="SELECT CustomerID,AccountNumber FROM Sales.Customer"]),

PersonPerson = Sql.Database("tcp:yourserver,1433", "AdventureWorks",

[Query="SELECT BusinessEntityID,FirstName,LastName FROM Person.Person"]),

JoinTables = Table.Join(SalesCustomer, "CustomerID", PersonPerson, "BusinessEntityID",

JoinKind.Inner),

Result = Table.SelectColumns(JoinTables, {"CustomerID","AccountNumber", "FirstName", "LastName"})

in

Result

//Fact Sales

let

SalesOrderHeader = Sql.Database("tcp:yourserver,1433", "AdventureWorks",

[Query="SELECT SalesOrderID SalesOrderId_H,CustomerID,SalesOrderNumber,

PurchaseOrderNumber,OrderDate,DueDate,ShipDate,TerritoryID FROM Sales.SalesOrderHeader"]),

SalesOrderDetails = Sql.Database("tcp:yourserver,1433", "AdventureWorks",

[Query="SELECT SalesOrderID SalesOrderId_D,ProductID, OrderQty,CarrierTrackingNumber,OrderQty,

UnitPrice,UnitPriceDiscount FROM Sales.SalesOrderDetail"]),

JoinTables = Table.Join(SalesOrderHeader, "SalesOrderId_H", SalesOrderDetails, "SalesOrderId_D",

JoinKind.Inner),

Result = Table.SelectColumns(JoinTables, {"SalesOrderId_H","CustomerID","ProductID","TerritoryID",

"SalesOrderNumber","PurchaseOrderNumber","OrderDate","DueDate","ShipDate","CarrierTrackingNumber",

"OrderQty","UnitPrice","UnitPriceDiscount"})

in

Result

//Product Table

let

Product = Sql.Database("tcp:yourserver,1433", "AdventureWorks",

[Query="SELECT ProductID, ProductSubcategoryID PSC_ID_1, Name ProductName,

ProductNumber FROM Production.Product"]),

ProductSubcategory = Sql.Database("tcp:yourserver,1433", "AdventureWorks",

[Query="SELECT ProductSubcategoryID PSC_ID_2, ProductCategoryID PC_ID_1,

Name ProductSubCategoryName FROM Production.ProductSubcategory"]),

ProductCategory = Sql.Database("tcp:yourserver,1433", "AdventureWorks",

[Query="SELECT ProductCategoryID PC_ID_2, Name ProductCategoryName FROM Production.ProductCategory"]),

Product_ProductSubcategory = Table.Join(Product, "PSC_ID_1", ProductSubcategory, "PSC_ID_2", JoinKind.Inner),

ProductSubcategory_ProductCategory = Table.Join(Product_ProductSubcategory, "PC_ID_1", ProductCategory, "PC_ID_2", JoinKind.Inner),

Result = Table.SelectColumns(ProductSubcategory_ProductCategory, {"ProductID", "PSC_ID_1",

"ProductName", "PSC_ID_2", "PC_ID_1","ProductNumber", "ProductSubCategoryName",

"PC_ID_2", "ProductCategoryName"})

in

Result

//Product Category table

let

Source = Sql.Database("tcp:yourserver,1433", "AdventureWorks"),

dbo_ProductCategory = Source{[Schema="Production",Item="ProductCategory"]}[Data],

#"Select Columns" = Table.SelectColumns(dbo_ProductCategory,{"ProductCategoryID", "Name"})

in

#"Select Columns"

//Product Sub Category table

let

Source = Sql.Database("tcp:yourserver,1433", "AdventureWorks"),

dbo_ProductSubcategory = Source{[Schema="Production",Item="ProductSubcategory"]}[Data],

#"Select Columns" = Table.SelectColumns(dbo_ProductSubcategory,{"ProductSubcategoryID",

"ProductCategoryID", "Name"})

in

#"Select Columns"

// SalesTerritory table

let

Source = Sql.Database("tcp:yourserver,1433", "AdventureWorks"),

dbo_SalesTerritory = Source{[Schema="Sales",Item="SalesTerritory"]}[Data],

#"Select Columns" = Table.SelectColumns( dbo_SalesTerritory,{"TerritoryID","Name"})

in

#"Select Columns"

Snippet of the resource file

Next, create the methods to define different entities of the semantic model.

Note : I have removed all the customized informational messages across all methods posted through Spectre.Console to make the code more readable.

Customers »

public static Table CreateCustomers(Database database, Server server)

{

Table tableCustomers = new Table()

{

Name = database.Model.Tables.GetNewName("Dim_Customers"),

Description = "Customer Details",

Partitions = {

new Partition() {

Name = "All Customers",

Source = new MPartitionSource() {

Expression = Properties.Resources.Customer

}

}

},

Columns =

{

new DataColumn() {

Name = "CustomerID",

DataType = DataType.Int64,

SourceColumn = "CustomerID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "AccountNumber",

DataType = DataType.String,

SourceColumn = "AccountNumber",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "FirstName",

DataType = DataType.String,

SourceColumn = "FirstName",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "LastName",

DataType = DataType.String,

SourceColumn = "LastName",

SummarizeBy = AggregateFunction.None

}

}

};

return tableCustomers;

}

Products »

Note: Here we have created hierarchy across ProductCategory,ProductSubCategory and ProductCategory entities. The hierarchy levels are defined through the ordinal property of the Hierarchy class.

public static Table CreateProducts(Database database, Server server)

{

Table tableProduct = new Table()

{

Name = database.Model.Tables.GetNewName("Dim_Products"),

Description = "Product Details",

Partitions = {

new Partition() {

Name = "All Products",

Source = new MPartitionSource() {

Expression = Properties.Resources.Product

}

}

},

Columns =

{

new DataColumn() {

Name = "ProductID",

DataType = DataType.Int64,

SourceColumn = "ProductID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "ProductSubcategoryID",

DataType = DataType.Int64,

SourceColumn = "PSC_ID_1",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Product",

DataType = DataType.String,

SourceColumn = "ProductName",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "ProductNumber",

DataType = DataType.String,

SourceColumn = "ProductNumber",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "ProductCategoryID",

DataType = DataType.String,

SourceColumn = "PC_ID_1",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "ProductSubCategory",

DataType = DataType.String,

SourceColumn = "ProductSubCategoryName",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "ProductCategory",

DataType = DataType.String,

SourceColumn = "ProductCategoryName",

SummarizeBy = AggregateFunction.None

},

},

};

tableProduct.Hierarchies.Add(

new Hierarchy()

{

Name = "Product Hierarchy",

Levels = {

new Level() { Ordinal=0, Name="ProductCategory", Column=tableProduct.Columns["ProductCategory"] },

new Level() { Ordinal=1, Name="ProductSubCategory", Column=tableProduct.Columns["ProductSubCategory"] },

new Level() { Ordinal=2, Name="Product", Column=tableProduct.Columns["Product"] }

}

}

);

return tableProduct;

}

SalesTerritory »

public static Table CreateSalesTerritory(Database database, Server server)

{

Table tableSalesTerritory = new Table()

{

Name = database.Model.Tables.GetNewName("Dim_SalesTerritory"),

Description = "SalesTerritory Details",

Partitions = {

new Partition() {

Name = "All SalesTerritory",

Source = new MPartitionSource() {

Expression = Properties.Resources.SalesTerritory

}

}

},

Columns =

{

new DataColumn() {

Name = "TerritoryID",

DataType = DataType.Int64,

SourceColumn = "TerritoryID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Name",

DataType = DataType.String,

SourceColumn = "Name",

SummarizeBy = AggregateFunction.None

}

,

new DataColumn() {

Name = "ModifiedDate",

DataType = DataType.DateTime,

SourceColumn = "ModifiedDate",

SummarizeBy = AggregateFunction.None

}

}

};

return tableSalesTerritory;

}

ProductCategory »

public static Table CreateProductCategory(Database database, Server server)

{

Table tableProductCategory = new Table()

{

Name = database.Model.Tables.GetNewName("Dim_ProductCategory"),

Description = "ProductCategory Details",

Partitions = {

new Partition() {

Name = "All ProductCategory",

Source = new MPartitionSource() {

Expression = Properties.Resources.ProductCategory

}

}

},

Columns =

{

new DataColumn() {

Name = "ProductCategoryID",

DataType = DataType.Int64,

SourceColumn = "ProductCategoryID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Name",

DataType = DataType.String,

SourceColumn = "Name",

SummarizeBy = AggregateFunction.None

},

}

};

return tableProductCategory;

}

ProductSubcategory»

public static Table CreateProductSubcategory(Database database, Server server)

{

Table tableProductSubcategory = new Table()

{

Name = database.Model.Tables.GetNewName("Dim_ProductSubCategory"),

Description = "ProductSubcategory Details",

Partitions = {

new Partition() {

Name = "All ProductSubcategory",

Source = new MPartitionSource() {

Expression = Properties.Resources.ProductSubCategory

}

}

},

Columns =

{

new DataColumn() {

Name = "ProductSubcategoryID",

DataType = DataType.Int64,

SourceColumn = "ProductSubcategoryID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "ProductCategoryID",

DataType = DataType.Int64,

SourceColumn = "ProductCategoryID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Name",

DataType = DataType.String,

SourceColumn = "Name",

SummarizeBy = AggregateFunction.None

},

}

};

return tableProductSubcategory;

}

FactSales »

Note: Here we added two measures CustomerCount and Total Quantity

public static Table CreateFactSales(Database database, Server server)

{

Table tableFactSales = new Table()

{

Name = database.Model.Tables.GetNewName("FactSales"),

Description = "Sales Details",

Partitions = {

new Partition() {

Name = "All SalesOrders",

Source = new MPartitionSource() {

Expression = Properties.Resources.FactSales

}

}

},

Columns =

{

new DataColumn() {

Name = "SalesOrderID",

DataType = DataType.Int64,

SourceColumn = "SalesOrderID_H",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "CustomerID",

DataType = DataType.Int64,

SourceColumn = "CustomerID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "ProductID",

DataType = DataType.Int64,

SourceColumn = "ProductID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "TerritoryID",

DataType = DataType.Int64,

SourceColumn = "TerritoryID",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "SalesOrderNumber",

DataType = DataType.String,

SourceColumn = "SalesOrderNumber",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "PurchaseOrderNumber",

DataType = DataType.String,

SourceColumn = "PurchaseOrderNumber",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "OrderDate",

DataType = DataType.DateTime,

SourceColumn = "OrderDate",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "DueDate",

DataType = DataType.DateTime,

SourceColumn = "DueDate",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "ShipDate",

DataType = DataType.DateTime,

SourceColumn = "ShipDate",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "CarrierTrackingNumber",

DataType = DataType.String,

SourceColumn = "CarrierTrackingNumber",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "OrderQty",

DataType = DataType.Int64,

SourceColumn = "OrderQty",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "UnitPrice",

DataType = DataType.Decimal,

SourceColumn = "UnitPrice",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "UnitPriceDiscount",

DataType = DataType.Decimal,

SourceColumn = "UnitPriceDiscount",

SummarizeBy = AggregateFunction.None

},

},

};

tableFactSales.Measures.Add(new Measure { Name = "CustomerCount", Expression = "Count(FactSales[CustomerID])" });

tableFactSales.Measures.Add(new Measure { Name = "Total Quantity", Expression = "Sum(FactSales[OrderQty])", FormatString = @"\$#,0;(\$#,0);\$#,0" });

return tableFactSales;

}

Calendar »

Note: Here we have created hierarchy across Year,Quarter and Month attributes .As was the case with the Product hierarchy ,the hierarchy level here is defined through the ordinal property of the Hierarchy class

public static Table CreateCalendarTable(Database database, Server server)

{

Table tableCalendarDB = new Table()

{

Name = database.Model.Tables.GetNewName("Dim_Calendar"),

Description = "SalesTerritory Details",

Partitions = {

new Partition() {

Name = "All CalendarDate",

Source = new MPartitionSource() {

Expression = Properties.Resources.Calendar

}

}

},

Columns =

{

new DataColumn() {

Name = "Date",

DataType = DataType.DateTime,

SourceColumn = "Date",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Year",

DataType = DataType.Int64,

SourceColumn = "Year",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Month",

DataType = DataType.Int64,

SourceColumn = "Month_Number",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Month Name",

DataType = DataType.String,

SourceColumn = "Month_Name",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Day",

DataType = DataType.Int64,

SourceColumn = "Day",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Weekday",

DataType = DataType.Int64,

SourceColumn = "Weekday_Number",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Day Name",

DataType = DataType.String,

SourceColumn = "Weekday_Name",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "IsWeekend",

DataType = DataType.Boolean,

SourceColumn = "IsWeekend",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Quarter",

DataType = DataType.Int64,

SourceColumn = "Quarter",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Quarter Name",

DataType = DataType.String,

SourceColumn = "Quarter_Name",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Quarter Year",

DataType = DataType.String,

SourceColumn = "Year_Quarter",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Week Number",

DataType = DataType.Int64,

SourceColumn = "Week_Number",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Year Month",

DataType = DataType.String,

SourceColumn = "Year_Month",

SummarizeBy = AggregateFunction.None

},

new DataColumn() {

Name = "Is Leap Year",

DataType = DataType.Boolean,

SourceColumn = "IsLeapYear",

SummarizeBy = AggregateFunction.None

}

}

};

tableCalendarDB.Hierarchies.Add(new Hierarchy()

{

Name = "Calendar Hierarchy",

Levels = {

new Level() { Ordinal = 0, Name = "Year", Column = tableCalendarDB.Columns["Year"] },

new Level() { Ordinal = 1, Name = "Quarter", Column = tableCalendarDB.Columns["Quarter Name"] },

new Level() { Ordinal = 2, Name = "Month", Column = tableCalendarDB.Columns["Month Name"] }

}

});

return tableCalendarDB;

}

In the next step we add the tables to the model and define relationships across different entities. The CompatibilityLevel used here is 1520.

public static string CreateSemanticModel(string DatabaseName, Server server)

{

try

{

string newDatabaseName = server.Databases.GetNewName(DatabaseName);

var database = new Database()

{

Name = newDatabaseName,

ID = newDatabaseName,

CompatibilityLevel = 1520,

StorageEngineUsed = Microsoft.AnalysisServices.StorageEngineUsed.TabularMetadata,

Model = new Microsoft.AnalysisServices.Tabular.Model()

{

Name = DatabaseName + "AdventureWorks",

Description = "AdventureWorks start schema using Tabular Object Model(TOM)"

}

};

Table tableCustomers = CreateCustomers(database, server);

database.Model.Tables.Add(tableCustomers);

Table tableProduct = CreateProducts(database, server);

database.Model.Tables.Add(tableProduct);

Table tableProductCategory = CreateProductCategory(database, server);

database.Model.Tables.Add(tableProductCategory);

Table tableProductSubcategory = CreateProductSubcategory(database, server);

database.Model.Tables.Add(tableProductSubcategory);

Table tableSalesTerritory = CreateSalesTerritory(database, server);

database.Model.Tables.Add(tableSalesTerritory);

Table tableCalendarDB = CreateCalendarTable(database, server);

database.Model.Tables.Add(tableCalendarDB);

Table tableFactSales = CreateFactSales(database, server);

database.Model.Tables.Add(tableFactSales);

database.Model.Relationships.Add(new SingleColumnRelationship

{

Name = "ProductCategory to ProductSubcategory",

ToColumn = tableProductCategory.Columns["ProductCategoryID"],

ToCardinality = RelationshipEndCardinality.One,

FromColumn = tableProductSubcategory.Columns["ProductCategoryID"],

FromCardinality = RelationshipEndCardinality.Many

});

database.Model.Relationships.Add(new SingleColumnRelationship

{

Name = "Customers to FactSales",

ToColumn = tableCustomers.Columns["CustomerID"],

ToCardinality = RelationshipEndCardinality.One,

FromColumn = tableFactSales.Columns["CustomerID"],

FromCardinality = RelationshipEndCardinality.Many

});

database.Model.Relationships.Add(new SingleColumnRelationship

{

Name = "Products to FactSales",

ToColumn = tableProduct.Columns["ProductID"],

ToCardinality = RelationshipEndCardinality.One,

FromColumn = tableFactSales.Columns["ProductID"],

FromCardinality = RelationshipEndCardinality.Many

});

database.Model.Relationships.Add(new SingleColumnRelationship

{

Name = "SalesTerritory to FactSales",

ToColumn = tableSalesTerritory.Columns["TerritoryID"],

ToCardinality = RelationshipEndCardinality.One,

FromColumn = tableFactSales.Columns["TerritoryID"],

FromCardinality = RelationshipEndCardinality.Many

});

database.Model.Relationships.Add(new SingleColumnRelationship

{

Name = "Product to ProductSubcategory",

ToColumn = tableProductSubcategory.Columns["ProductSubcategoryID"],

ToCardinality = RelationshipEndCardinality.One,

FromColumn = tableProduct.Columns["ProductSubcategoryID"],

FromCardinality = RelationshipEndCardinality.Many

});

database.Model.Relationships.Add(new SingleColumnRelationship

{

Name = "Date to SalesOrderDate",

FromColumn = tableFactSales.Columns["OrderDate"],

FromCardinality = RelationshipEndCardinality.Many,

ToColumn = tableCalendarDB.Columns["Date"],

ToCardinality = RelationshipEndCardinality.One,

IsActive = true

});

database.Model.Relationships.Add(new SingleColumnRelationship

{

Name = "Date to SalesDueDate",

FromColumn = tableFactSales.Columns["DueDate"],

FromCardinality = RelationshipEndCardinality.Many,

ToColumn = tableCalendarDB.Columns["Date"],

ToCardinality = RelationshipEndCardinality.One,

IsActive = false

});

database.Model.Relationships.Add(new SingleColumnRelationship

{

Name = "Date to SalesShipDate",

FromColumn = tableFactSales.Columns["ShipDate"],

FromCardinality = RelationshipEndCardinality.Many,

ToColumn = tableCalendarDB.Columns["Date"],

ToCardinality = RelationshipEndCardinality.One,

IsActive = false

});

server.Databases.Add(database);

server.Execute(Microsoft.AnalysisServices.Tabular.JsonScripter.ScriptCreate(database));

database.Model.RequestRefresh(Microsoft.AnalysisServices.Tabular.RefreshType.Full);

return "Success";

}

catch (Exception ex)

{

return ex.Message;

}

}

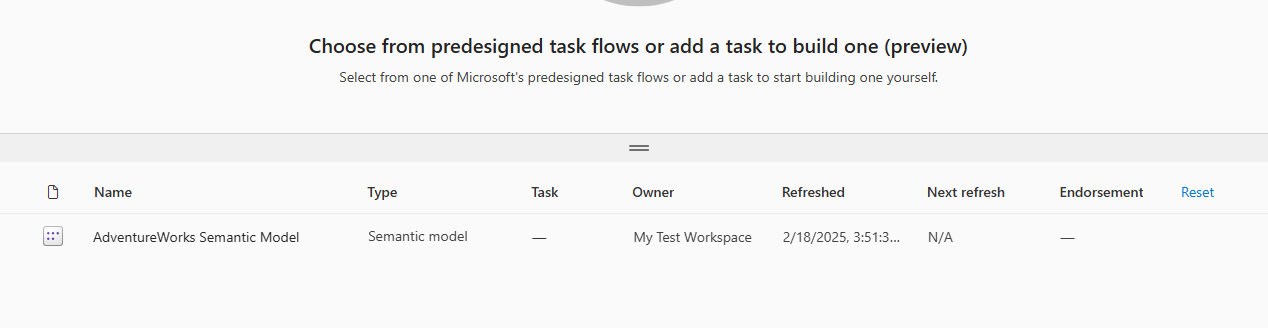

In the Main method of the Console application, we first connect to our workspace and then deploy the semantic model.

The workspace used below is MyTestWorkspace. Replace it with your workspace name in the workspaceConnection variable.

static void Main(string[] args)

{

string workspaceConnection = "powerbi://api.powerbi.com/v1.0/myorg/My%20Test%20Workspace";

string connectString = $"DataSource={workspaceConnection};";

Server server = new Server();

server.Connect(connectString);

string status = CreateSemanticModel("AdventureWorks Semantic Model", server);

if (status == "Success")

{

AnsiConsole.MarkupLine($"Successfully created and deployed the [Red]AdventureWorks Semantic Model[/]");

AnsiConsole.MarkupLine("");

AnsiConsole.MarkupLine($"Please re-enter the datasource credentials to fetch the data from the source and then refresh the model");

}

else

{

AnsiConsole.MarkupLine("");

AnsiConsole.MarkupLine($"Deployment of [Red]AdventureWorks Semantic Model[/] failed with error [Red]{status}[/]");

}

}

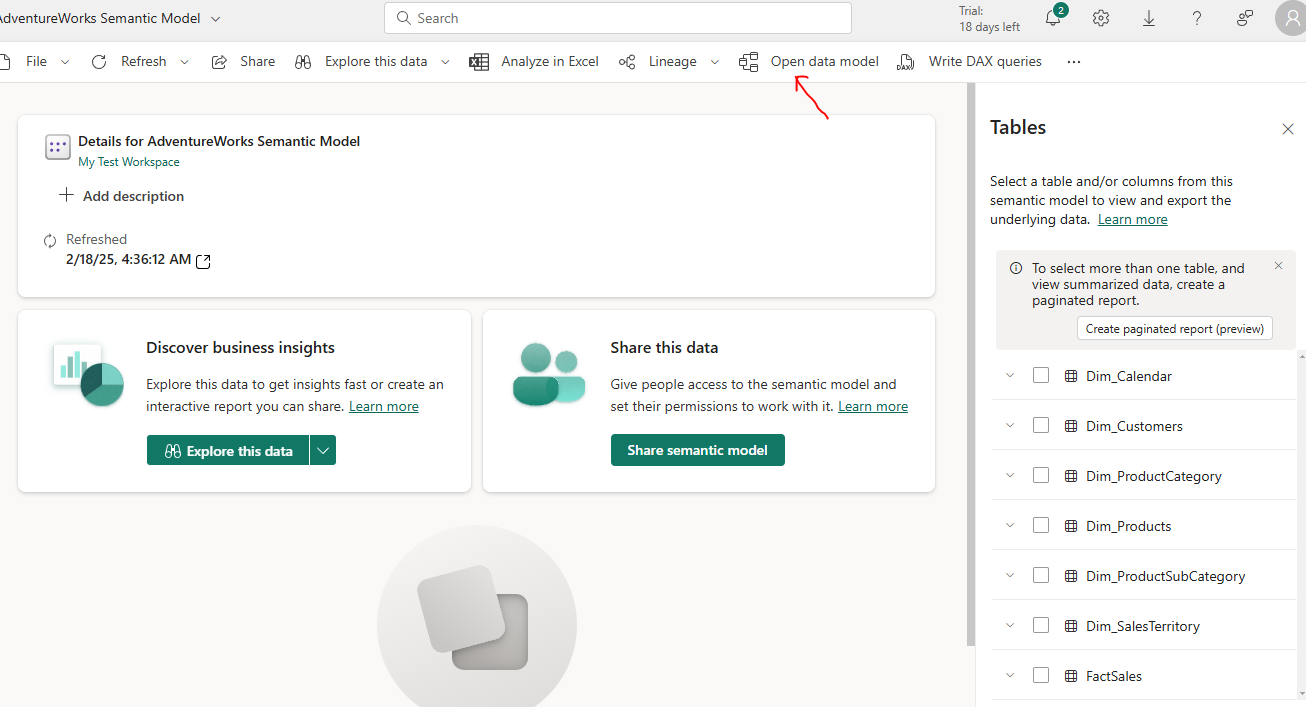

Once the model is deployed successfully you should see it under the workspace

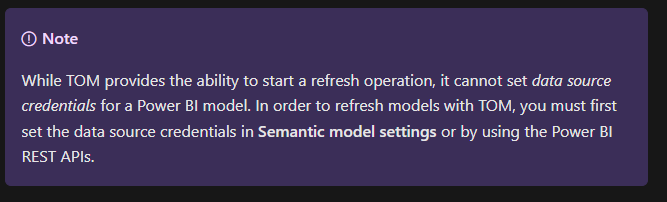

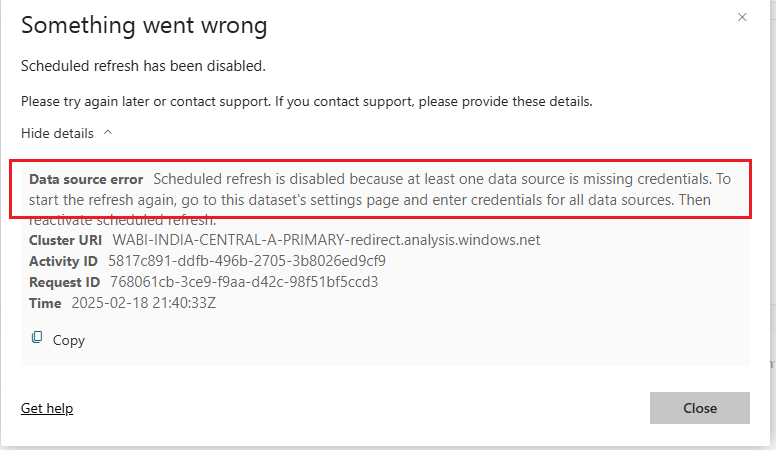

Note : You will have to set the semantic model data source credentials manually or through REST API’s.

or else you will end up with the following error when you refresh the model.

In a follow up article I will demonstrate on how to set up the data source credentials through Power BI REST APIs so that the complete end to end process is fully automated.

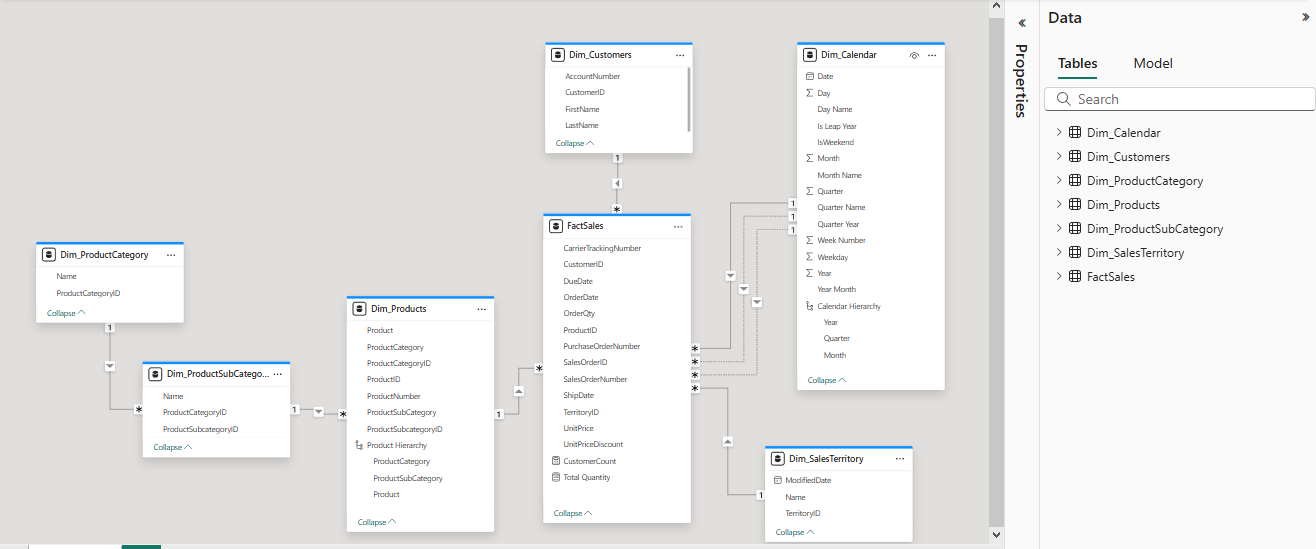

Once everything executes successfully, open the model and under the Data Model option

you can view the schematic of the model

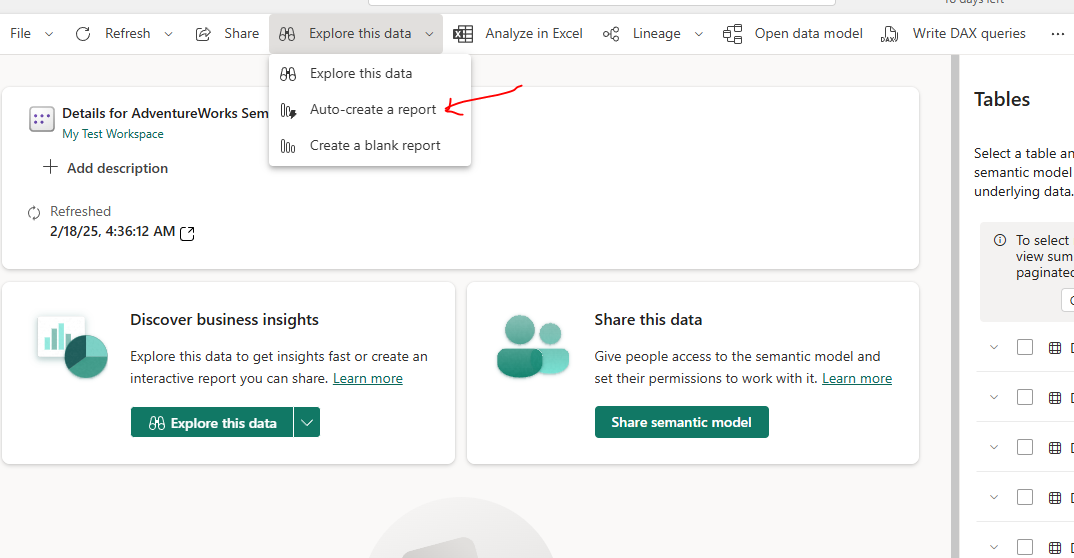

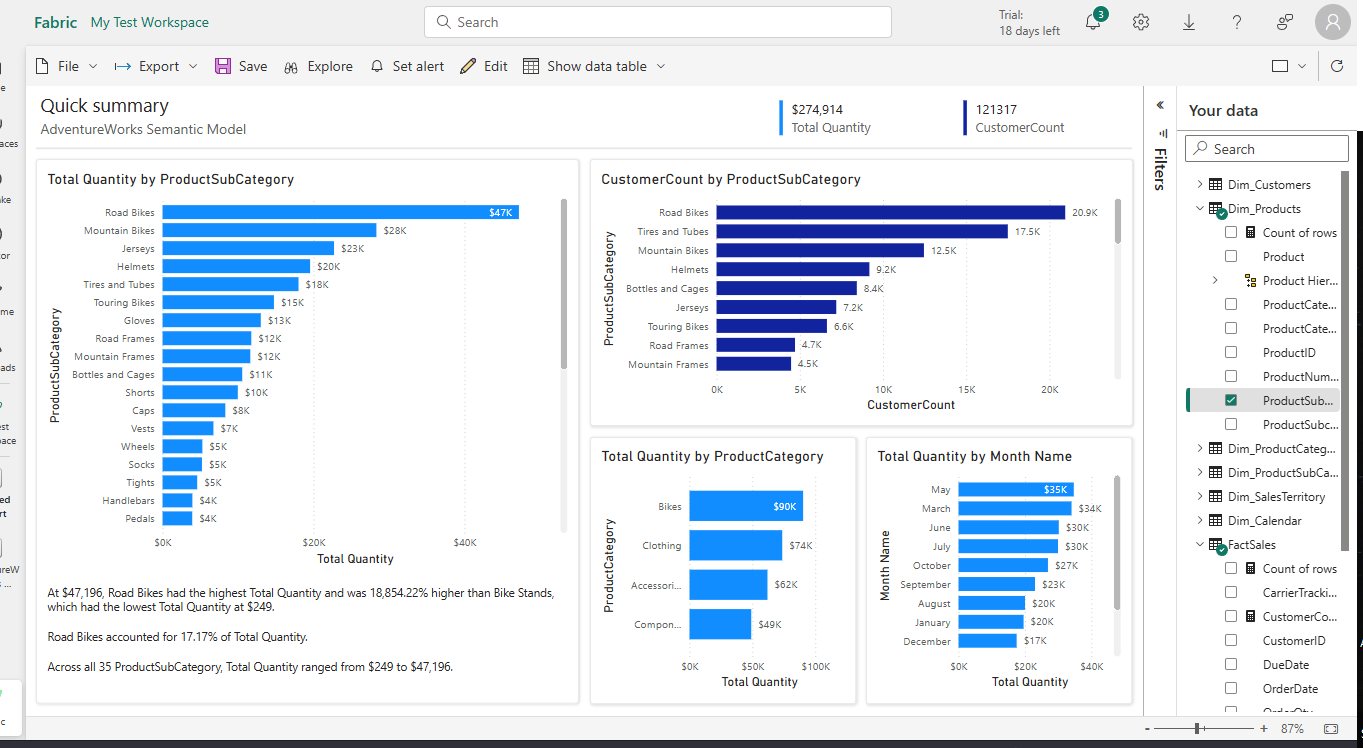

Selecting the “Auto-create a report” option

will generate a Quick summary report based on the underlying data and the structures across each entities involved.

Congragulation’s, you have successfully auto created and deployed a semantic model to a Fabric workspace.

Walkthrough

Conclusion

In conclusion the Tabular Object Model (TOM) is a powerful framework that enables efficient management, automation and customization of semantic models. By providing programmatic access through APIs, TOM allows users to create, modify and optimize data models dynamically.

Its integration with TMSL and TMDL structures enhances flexibility with respect to semantic model automation.

Thanks for reading !!!

Subscribe to my newsletter

Read articles from Sachin Nandanwar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by