Lost and Found...

Adelle Hasford

Imagine you have just finished giving a presentation on the benefits of using AWS cloud services instead of maintaining on-premises infrastructure. You join the networking session, move around the venue, and then head back to your office. Now you are thinking of getting lunch and reach for your phone, but it is nowhere to be found. So, how do you backtrack to find your missing gadget?

Problem

With the advancement of technology, a fast-paced lifestyle has emerged to support the needs of growing populations. With this increased busyness comes an increase in lost items, a problem that is costly in both time and money (Tan & Chong, 2023).

For this project, we focus on Ashesi University, which, like most other universities, has lost-and-found collection points where students and staff can claim their belongings. However, many members of the community remain unaware of these locations and of the other tools in use (RepoApp, 201). As the university community and campus grow, physically searching the premises for lost items becomes impractical.

Before delving into the solution, let us look at the system currently in place.

How does Ashesi track lost and found items?

Reporting lost items

Students/staff need to submit a form with the following details:

Personal information: full name, school email and phone number

Item details: name, description and any additional context

Last seen: location, time and event

Users may also upload a picture of the lost item to help speed up the search process.

Student welfare chairpersons (or staff officers) then broadcast an email to the university population concerning the missing item.

Reporting found items

Students/staff can drop off the item at one of the collection points; this is usually the case for everyday items.

The finder can hand it over to the authorities (library officer, coordinator or student welfare officer), which is usually the case for expensive items.

The finder can also reply to the email about the lost item to report that it has been found, or contact the student welfare chairperson directly.

Retrieving lost but found items

Meet the officers for identification and collection

Go to the collection point and retrieve the item

Student welfare chairs contact the reporter of the missing item

The process outlined above is the traditional method used by Ashesi University; it involves manual record-keeping and cumbersome retrieval. Officers need to match reports of found items to reports of lost items before reaching out to facilitate their return. This leads to inefficiencies and delays in item recovery.

Hence, there is a need for a more efficient way to recover lost items. The widespread availability of technology makes it possible to use new methods for lost-and-found tracking. By leveraging AWS's infrastructure, we can build a more efficient, automated system to track and recover lost items, reducing the number of unclaimed belongings and helping users locate their property quickly.

Proposed Solution

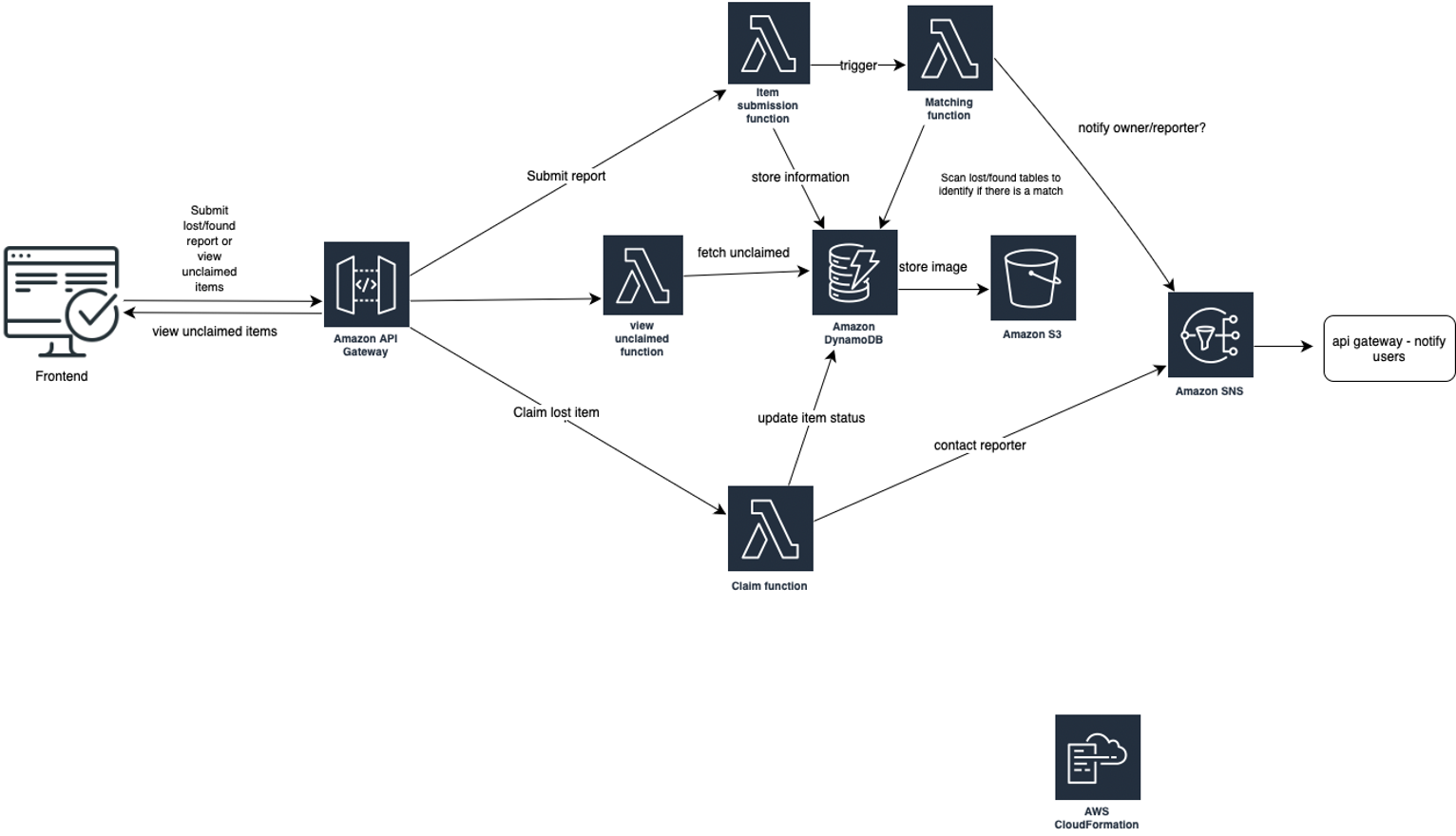

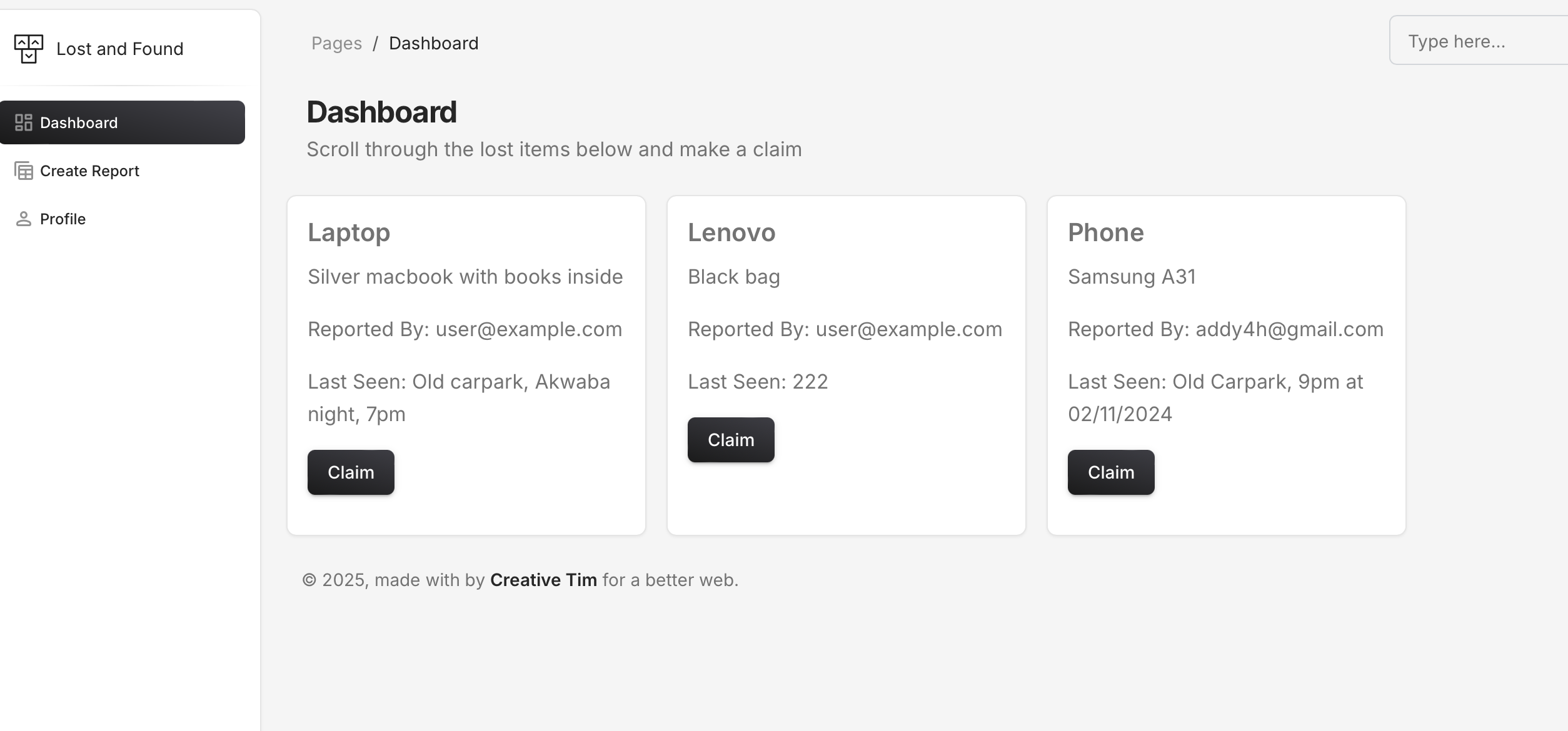

The proposed solution utilises AWS services to produce a simple web app that users may interact with.

Core Features

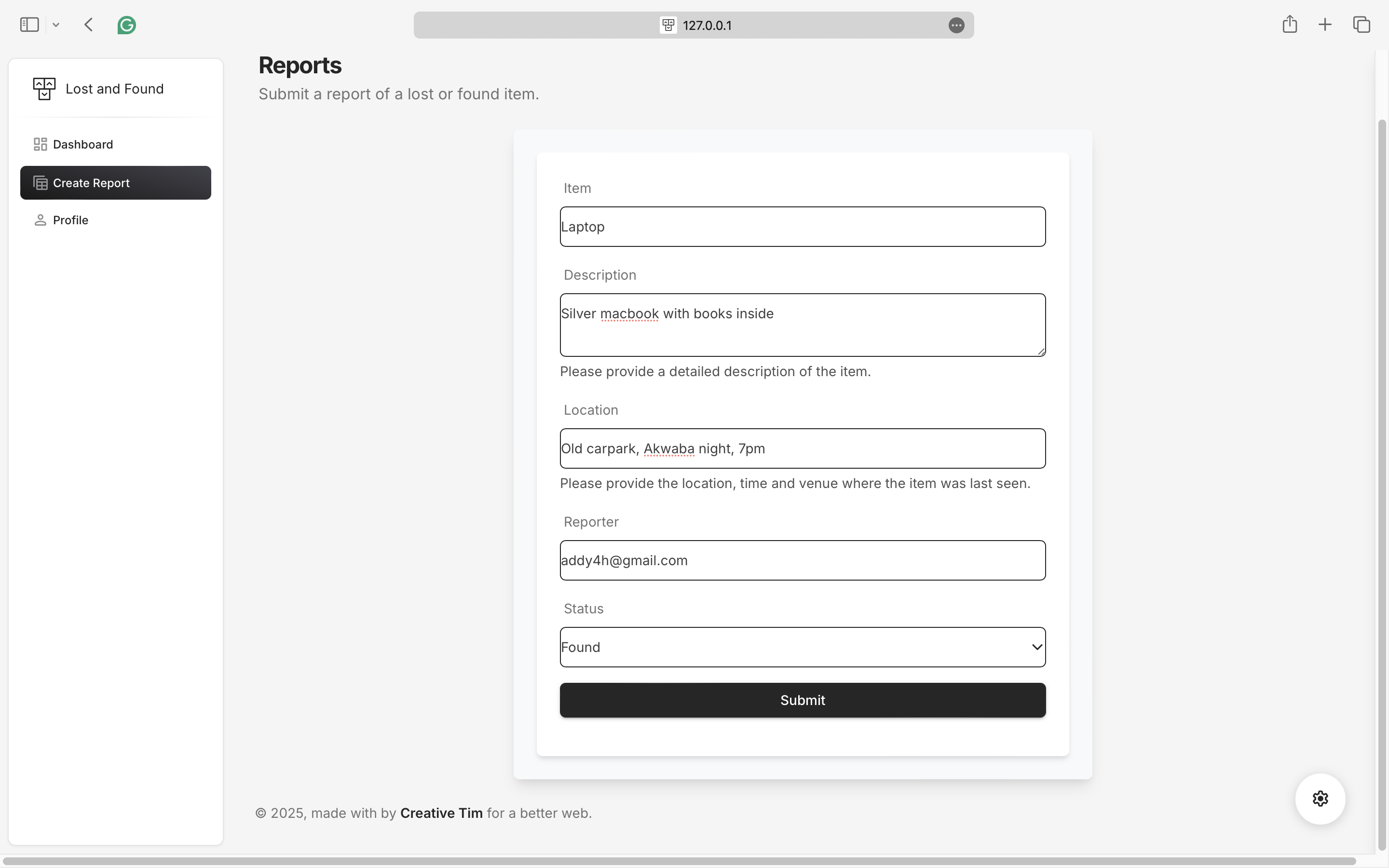

User Submission – Users can submit reports for lost and found items.

Matching System – Automatically match lost items with found reports based on keywords, date, and location.

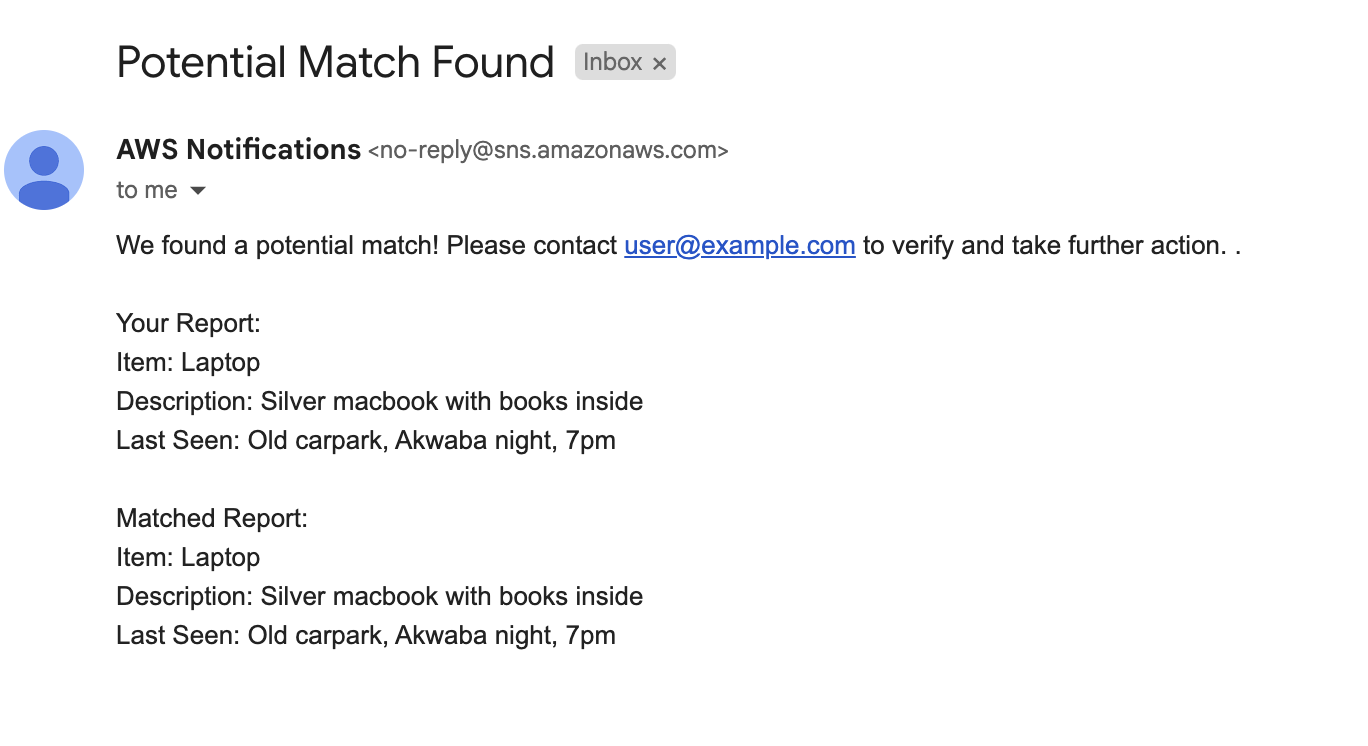

Notifications – Notify users when a match is found.

AWS Service Breakdown

S3 – Store Lambda function packages and CloudFormation templates

DynamoDB – Store reports and user info

API Gateway + AWS Lambda – Handle API requests for item submissions, matches, claims, and notifications.

CloudFormation – Automate infrastructure setup.

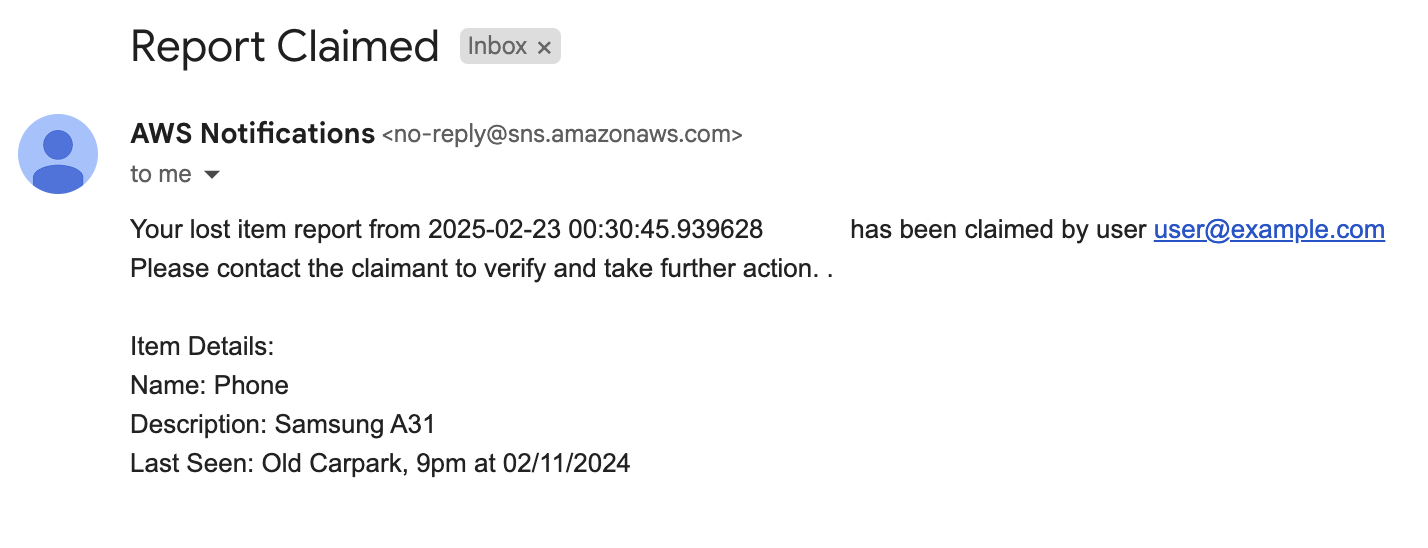

SNS – Send email notifications for matches and claims

Here’s a basic rundown of how the proposed system would work:

Implementation

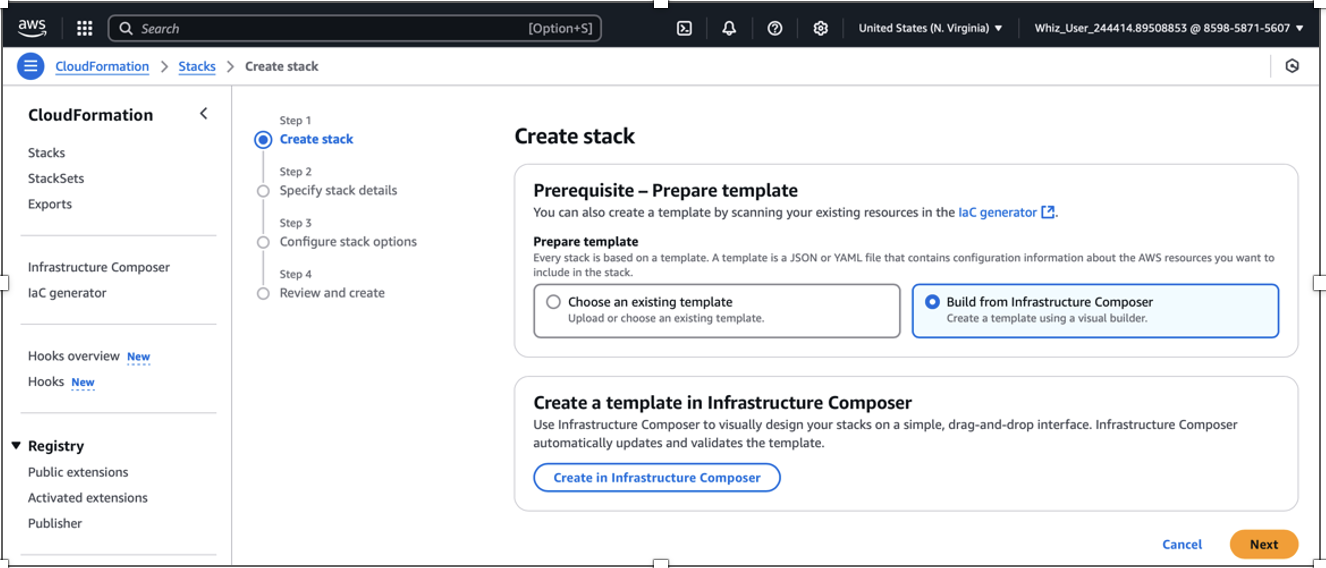

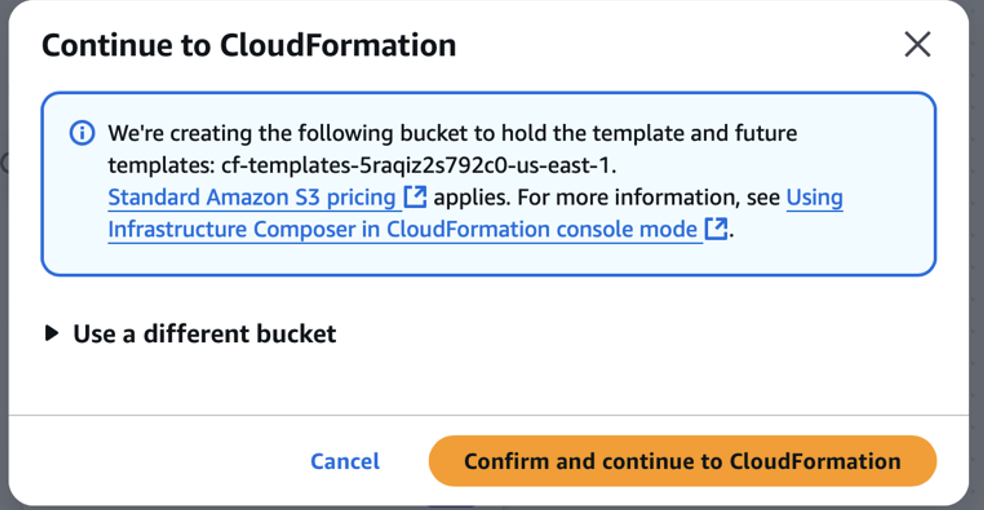

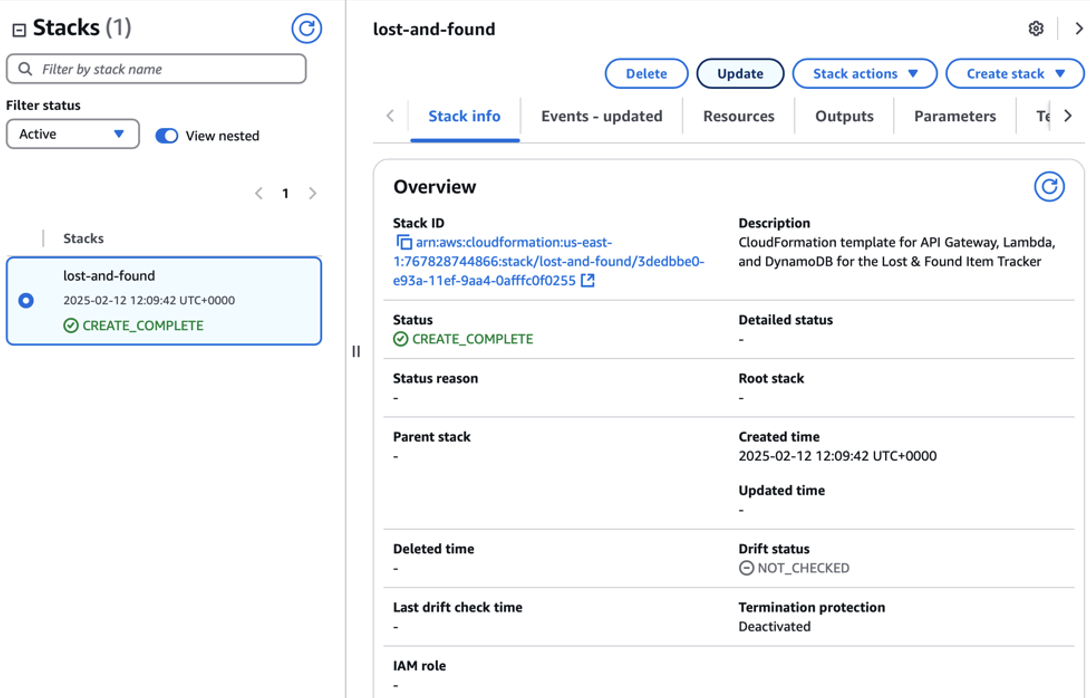

For this project, we use AWS CloudFormation for quick deployment and reusability.

To create a stack:

Navigate to the AWS Management Console > CloudFormation

Select Stacks in the navigation pane

Choose Create stack

Select Create in Infrastructure Composer

Navigate to Template on the canvas

Copy the contents of this YAML file. It creates the tables we will be using for the project:

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Description: CloudFormation template for API Gateway, Lambda, and DynamoDB for the Lost & Found Item Tracker
Resources:
  # DynamoDB Table
  LostAndFound:
    Type: 'AWS::DynamoDB::Table'
    Properties:
      TableName: 'LostandFound'
      AttributeDefinitions:
        - AttributeName: report_id
          AttributeType: S
      BillingMode: PAY_PER_REQUEST
      KeySchema:
        - AttributeName: report_id
          KeyType: HASH
      # The stream feeds newly inserted reports to the matching Lambda
      StreamSpecification:
        StreamViewType: NEW_AND_OLD_IMAGES
    # No DependsOn on LambdaExecutionRole: the role is only added in a later
    # template update, and a table does not need it in order to be created.

  # Users Table
  Users:
    Type: 'AWS::DynamoDB::Table'
    Properties:
      TableName: 'Users'
      AttributeDefinitions:
        - AttributeName: user_id
          AttributeType: S
      BillingMode: PAY_PER_REQUEST
      KeySchema:
        - AttributeName: user_id
          KeyType: HASH
```

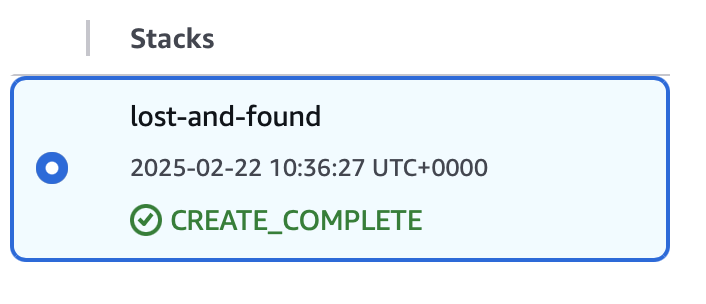

Provide a stack name.

Under Configure stack options, set the deletion policy to delete all resources, regardless of their retention policy.

Acknowledge the required capabilities and transforms, then create the stack.
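The console steps above can also be scripted. The sketch below is an assumption-laden alternative, not part of the original walkthrough: it assumes boto3 is installed and credentials are configured, and the stack name `lost-and-found`, the file name `template.yaml` and both helper functions are hypothetical. The capabilities correspond to the console acknowledgements: `CAPABILITY_IAM` for the IAM role added later, and `CAPABILITY_AUTO_EXPAND` for the template's `Transform` line.

```python
from pathlib import Path


def create_lost_and_found_stack(cfn_client, stack_name, template_path):
    """Create a CloudFormation stack from a local template file (hypothetical helper).

    cfn_client is a boto3 CloudFormation client, e.g. boto3.client("cloudformation").
    Returns the new stack's ID.
    """
    template_body = Path(template_path).read_text()
    response = cfn_client.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        # Equivalent to ticking the acknowledgement boxes in the console.
        Capabilities=["CAPABILITY_IAM", "CAPABILITY_AUTO_EXPAND"],
    )
    return response["StackId"]


def create_and_wait(stack_name="lost-and-found", template_path="template.yaml"):
    """Create the stack with a real client and block until it finishes (needs AWS credentials)."""
    import boto3

    client = boto3.client("cloudformation")
    stack_id = create_lost_and_found_stack(client, stack_name, template_path)
    # The waiter raises an error if the stack ends up in a failed state.
    client.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return stack_id
```

Passing the client in as a parameter keeps `create_lost_and_found_stack` testable with a stand-in object; `create_and_wait()` is the entry point you would call from a machine with AWS access.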

A successful creation appears with this message:

Lambda functions

In this project, we have three main Lambda functions:

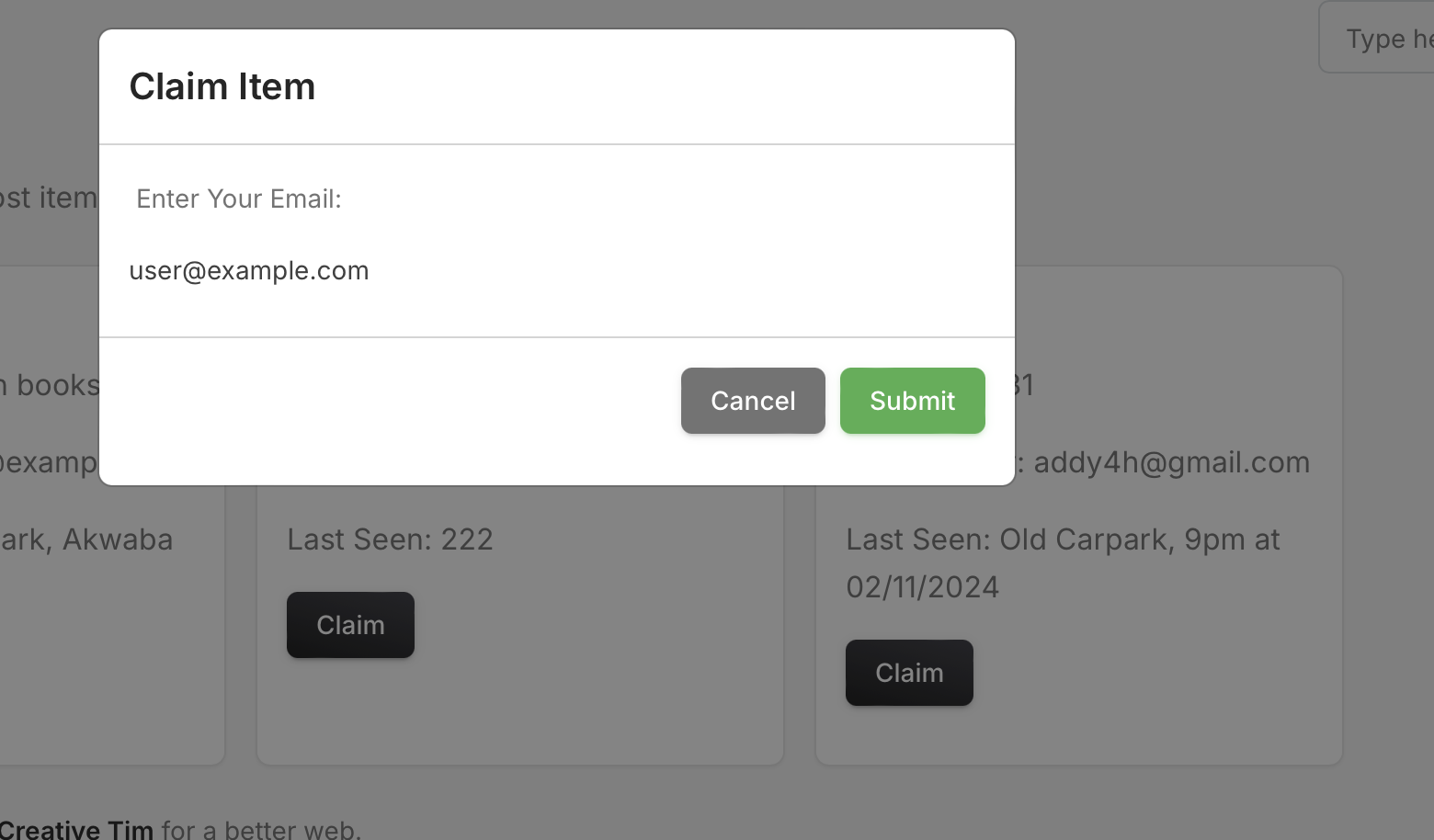

Reports Lambda: This function manages the creation, retrieval, and claiming of reports. Users can create a lost or a found report. Retrieval returns only lost items, and when an item is claimed, the user who filed the report is notified.

Matching Lambda: This function looks for a match between lost and found reports and notifies the user when one is found. It uses fuzzy string matching (via the thefuzz library) on the item name and description.

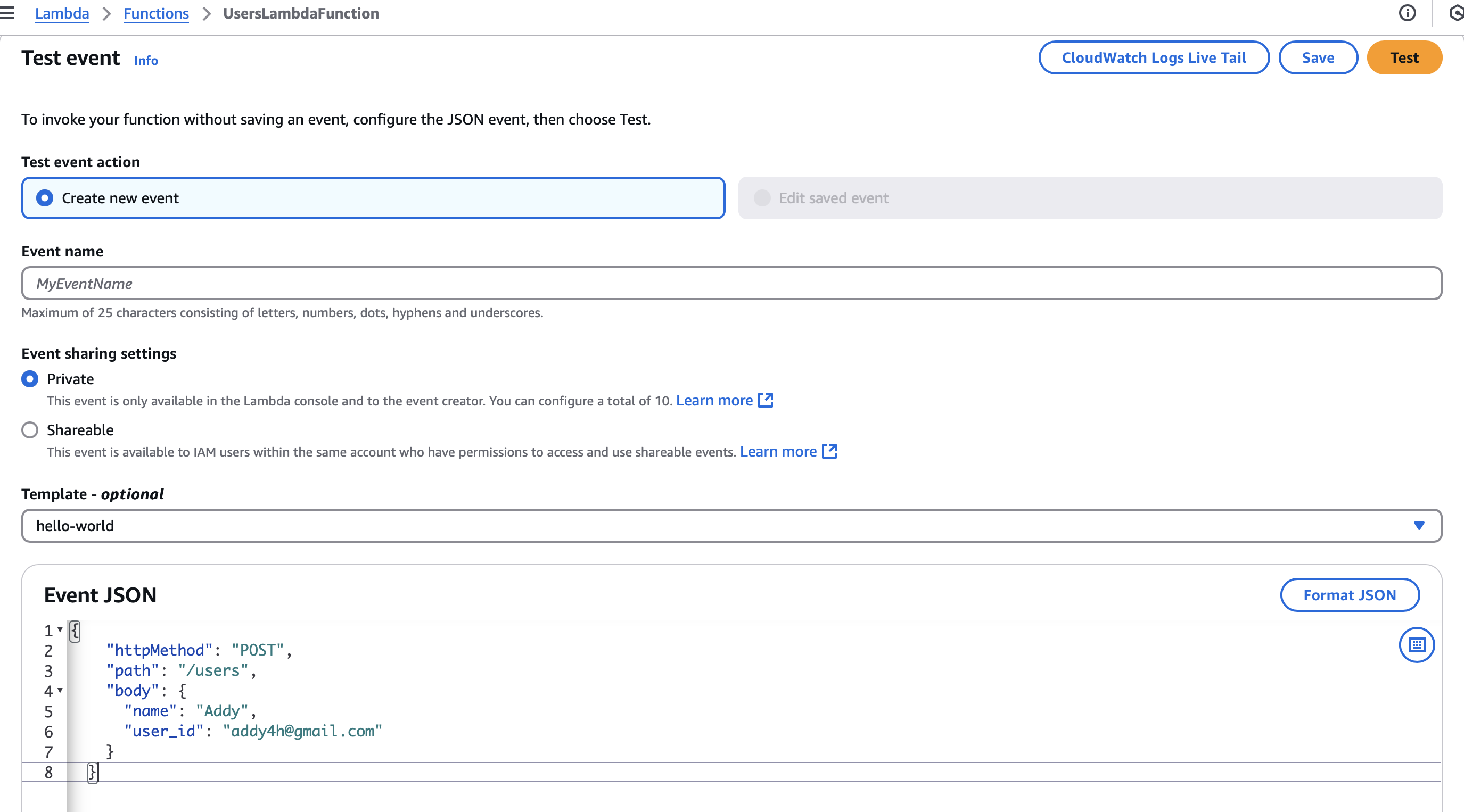

Users Lambda: This function manages the creation and retrieval of users. It serves as a basic stand-in for a managed user service such as Amazon Cognito. It is also responsible for subscribing users to the SNS topic.

The code for each of these functions can be found below.

NB: the headers in each response are there to support CORS, which we discuss later.

```python
# REPORT LAMBDA
import boto3
import json
import uuid
import datetime
import os

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('LostandFound')
sns_client = boto3.client('sns')
SNS_TOPIC = os.getenv('NOTIFS')  # SNS topic ARN, set as an environment variable by CloudFormation

# handling reports: route each request by HTTP method and path
def lambda_handler(event, context):
    http_method = event['httpMethod']
    path = event['path']

    if http_method == 'POST' and path == '/reports':
        return create_report(event)
    elif http_method == 'GET' and path == '/reports':
        return get_reports(event)
    elif http_method == 'PATCH' and path.startswith('/reports/'):
        return make_claim(event)
    else:
        return {
            'statusCode': 404,
            "headers": {
                "Access-Control-Allow-Origin": "*",
                "Content-Type": "application/json"
            },
            'body': json.dumps('Invalid route')
        }

def create_report(event):
    # API Gateway sends the body as a JSON string; direct test invocations may pass a dict
    body = event['body'] if isinstance(event['body'], dict) else json.loads(event['body'])

    reportId = str(uuid.uuid4())  # random unique id
    user_email = body.get('reported_by')
    item_name = body.get('name')
    item_description = body.get('description')
    item_location = body.get('last_seen')
    item_status = body.get('item_status')  # track if it's a lost or found report

    item = {
        'report_id': reportId,
        'reported_by': user_email,
        'name': item_name,
        'description': item_description,
        'last_seen': item_location,
        'item_status': item_status,
        'created_at': str(datetime.datetime.now())
    }
    table.put_item(Item=item)  # this INSERT also triggers the matching Lambda via the stream

    return {
        'statusCode': 200,
        "headers": {
            "Access-Control-Allow-Origin": "*",
            "Content-Type": "application/json"
        },
        'body': json.dumps('Item created successfully')
    }

def get_reports(event):
    # return only reports that are still marked as lost
    # (a single scan reads at most 1 MB; larger tables would need pagination)
    response = table.scan(
        FilterExpression="item_status = :status",
        ExpressionAttributeValues={":status": "lost"}
    )
    return {
        "statusCode": 200,
        "headers": {
            "Access-Control-Allow-Origin": "*",
            "Content-Type": "application/json"
        },
        "body": json.dumps(response["Items"])
    }

def make_claim(event):
    reportId = event['pathParameters']['report_id']
    body = event['body'] if isinstance(event['body'], dict) else json.loads(event['body'])
    user_email = body.get('user_id')  # email of the user making the claim

    # notify the original reporter before the status changes
    send_claim_notif(reportId, user_email)

    # Update report status to 'claimed'
    table.update_item(
        Key={'report_id': reportId},
        UpdateExpression='SET item_status = :status',
        ExpressionAttributeValues={':status': 'claimed'}
    )

    return {
        "statusCode": 200,
        "headers": {
            "Access-Control-Allow-Origin": "*",
            "Content-Type": "application/json"
        },
        "body": json.dumps({"message": "Report status updated to claimed"})
    }

# set up SNS function to notify users
def send_claim_notif(report_id, user_email):
    response = table.get_item(Key={'report_id': report_id})
    report = response['Item']

    sns_message = (
        f"Your {report['item_status']} item report from {report['created_at']} "
        f"has been claimed by user {user_email}.\n"
        "Please contact the claimant to verify and take further action.\n\n"
        "Item Details:\n"
        f"Name: {report['name']}\n"
        f"Description: {report['description']}\n"
        f"Last Seen: {report['last_seen']}\n"
    )

    sns_client.publish(
        TopicArn=SNS_TOPIC,
        Message=sns_message,
        Subject="Report Claimed",
        # the subscription filter policy delivers this only to the original reporter
        MessageAttributes={
            'user_id': {'DataType': 'String', 'StringValue': report['reported_by']}
        }
    )
```
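To exercise the handlers locally (for example from a unit test), it helps to know what API Gateway's proxy integration passes in. The helper below is a hypothetical sketch that builds a minimal event containing only the fields the Reports and Users Lambdas read (`httpMethod`, `path`, `pathParameters`, `body`); real proxy events carry many more fields, and the report ID and email shown are made up.

```python
import json


def make_proxy_event(method, path, body=None, path_parameters=None):
    """Build a minimal API Gateway (REST, AWS_PROXY) event for local testing.

    Only the keys read by the Lost & Found handlers are included. API Gateway
    delivers the body as a JSON string, which is why the handlers call json.loads.
    """
    return {
        "httpMethod": method,
        "path": path,
        "pathParameters": path_parameters,
        "body": json.dumps(body) if body is not None else None,
    }


# Example: the claim request handled by make_claim (values are made up).
claim_event = make_proxy_event(
    "PATCH",
    "/reports/1234",
    body={"user_id": "claimant@example.com"},
    path_parameters={"report_id": "1234"},
)
```

Passing `claim_event` to the Reports Lambda's `lambda_handler` would route it to `make_claim`, although the call would still reach DynamoDB and SNS unless those are mocked.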

```python
# MATCHING LAMBDA
import boto3
import json
import string
import os
from thefuzz import fuzz  # third-party: must be packaged in the function's zip

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('LostandFound')
sns_client = boto3.client('sns')
SNS_TOPIC = os.getenv('NOTIFS')

# handling matches: invoked by the LostandFound DynamoDB stream
def lambda_handler(event, context):
    # process new reports only; MODIFY and REMOVE records are ignored
    for record in event['Records']:
        if record['eventName'] == 'INSERT':
            # stream format, e.g. {"name": {"S": "Black phone"}}
            new_report = record['dynamodb']['NewImage']
            best_match = find_best_match(new_report)
            if best_match:
                # set up SNS for notifying users
                print(f"Best match found: {best_match}")
                send_match_notif(best_match, new_report)

    return {
        "statusCode": 200,
        "body": json.dumps("Match process completed")
    }

def preprocess_text(text):
    # lowercase and strip punctuation so formatting differences don't affect scores
    if not text:
        return ""
    text = text.lower()
    text = text.translate(str.maketrans('', '', string.punctuation))
    return text.strip()

def find_best_match(new_report):
    item_status = new_report["item_status"]["S"]
    if item_status not in ["lost", "found"]:
        return None

    # determine opposite status for matching
    target_stats = "found" if item_status == "lost" else "lost"

    # retrieve reports with target stats (the resource API returns plain Python values)
    response = table.scan(
        FilterExpression="item_status = :status",
        ExpressionAttributeValues={":status": target_stats}
    )
    reports = response.get("Items", [])
    if not reports:
        return None

    best_match = None
    best_score = 0
    for report in reports:
        score = compute_match_score(new_report, report)
        if score > best_score:
            best_score = score
            best_match = report

    # only report a match above the confidence threshold
    return best_match if best_score > 80 else None

def compute_match_score(new_report, old_report):
    # preprocess both reports (new_report is in stream format, old_report is a plain dict)
    newr_name = preprocess_text(new_report["name"]["S"])
    newr_desc = preprocess_text(new_report["description"]["S"])
    oldr_name = preprocess_text(old_report.get("name", ""))
    oldr_desc = preprocess_text(old_report.get("description", ""))

    # calculate fuzz ratio for name and description
    name_score = fuzz.token_sort_ratio(newr_name, oldr_name)
    desc_score = fuzz.token_set_ratio(newr_desc, oldr_desc)*0.65 + fuzz.partial_ratio(newr_desc, oldr_desc)*0.4

    # with every ratio at 100 the weighted total is 40 + 52.5 = 92.5
    return (name_score*0.4) + (desc_score*0.5)

def send_match_notif(match, original):
    sns_message = (
        f"We found a potential match! Please contact {match['reported_by']} to verify and take further action.\n\n"
        "Your Report:\n"
        f"Item: {original['name']['S']}\n"
        f"Description: {original['description']['S']}\n"
        f"Last Seen: {original['last_seen']['S']}\n\n"
        "Matched Report:\n"
        f"Item: {match['name']}\n"
        f"Description: {match['description']}\n"
        f"Last Seen: {match['last_seen']}\n\n"
    )

    response = sns_client.publish(
        TopicArn=SNS_TOPIC,
        Message=sns_message,
        Subject="Potential Match Found",
        # deliver only to the user who filed the new report
        MessageAttributes={
            'user_id': {'DataType': 'String', 'StringValue': original['reported_by']['S']}
        }
    )
    print(f"Notification sent, message ID: {response['MessageId']}")
```
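The weights in `compute_match_score` decide how similar two reports must be to clear the threshold of 80. The small sketch below repeats the same weighted sum on plain numbers (no thefuzz needed) to make the arithmetic visible; `combined_score` is a hypothetical name. With perfect ratios the total is 40 + 52.5 = 92.5, and even with identical names the combined description score must exceed 80 for a match. Note that the two description weights (0.65 and 0.4) sum to 1.05.

```python
def combined_score(name_score, token_set_score, partial_score):
    """Same weighting as compute_match_score, applied to precomputed fuzz ratios (0-100)."""
    desc_score = token_set_score * 0.65 + partial_score * 0.4
    return name_score * 0.4 + desc_score * 0.5


THRESHOLD = 80  # the cut-off used in find_best_match

# Perfect agreement on every measure gives the highest possible score.
print(combined_score(100, 100, 100))  # 92.5
# Identical names but weaker descriptions fall short of the threshold.
print(combined_score(100, 70, 60) > THRESHOLD)  # False
```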

```python
# USERS LAMBDA
import boto3
import json
import os
import datetime

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('Users')
sns = boto3.client('sns')
SNS_TOPIC = os.getenv('NOTIFS')

# CORS headers included in every response (see the CORS section)
CORS_HEADERS = {
    "Access-Control-Allow-Origin": "*",
    "Content-Type": "application/json"
}

# lambda function to handle user details
def lambda_handler(event, context):
    http_method = event['httpMethod']
    path = event['path']

    if http_method == 'POST' and path == '/users':
        return create_user(event)
    elif http_method == 'GET' and path.startswith('/users/'):
        return get_user(event)
    else:
        return {'statusCode': 404, "headers": CORS_HEADERS, 'body': json.dumps('Invalid route')}

def create_user(event):
    body = event['body'] if isinstance(event['body'], dict) else json.loads(event['body'])
    user_email = body.get('user_id')  # the email address doubles as the user ID
    user_name = body.get('name')

    # check if the user already exists
    if check_user_exist(user_email):
        return {'statusCode': 400, "headers": CORS_HEADERS, 'body': json.dumps('User already exists')}

    user = {
        'user_id': user_email,
        'name': user_name,
        'created_at': str(datetime.datetime.now())
    }
    table.put_item(Item=user)

    # return the error instead of reporting success if the SNS subscription fails
    error_response = subscribe_user(user_email)
    if error_response:
        return error_response

    return {'statusCode': 200, "headers": CORS_HEADERS, 'body': json.dumps('User created successfully')}

def check_user_exist(user_email):
    response = table.get_item(Key={'user_id': user_email})
    return 'Item' in response

def get_user(event):
    user_email = event['pathParameters']['user_id']
    response = table.get_item(Key={'user_id': user_email})
    if 'Item' not in response:
        return {"statusCode": 404, "headers": CORS_HEADERS, "body": json.dumps("User not found")}
    else:
        return {"statusCode": 200, "headers": CORS_HEADERS, "body": json.dumps(response['Item'])}

def subscribe_user(user_email):
    try:
        # subscribe user to SNS topic; SNS emails a confirmation link that must be accepted
        print('Subscribing user: ', user_email)
        sns.subscribe(
            TopicArn=SNS_TOPIC,
            Protocol='email',
            Endpoint=user_email,
            # deliver only messages whose user_id attribute equals this email
            Attributes={"FilterPolicy": json.dumps({"user_id": [user_email]})}
        )
        print(f"Subscribed user: {user_email}")
        return None
    except Exception as e:
        print(f"Failed to subscribe {user_email}: {e}")
        return {"statusCode": 500, "headers": CORS_HEADERS, "body": json.dumps(str(e))}
```

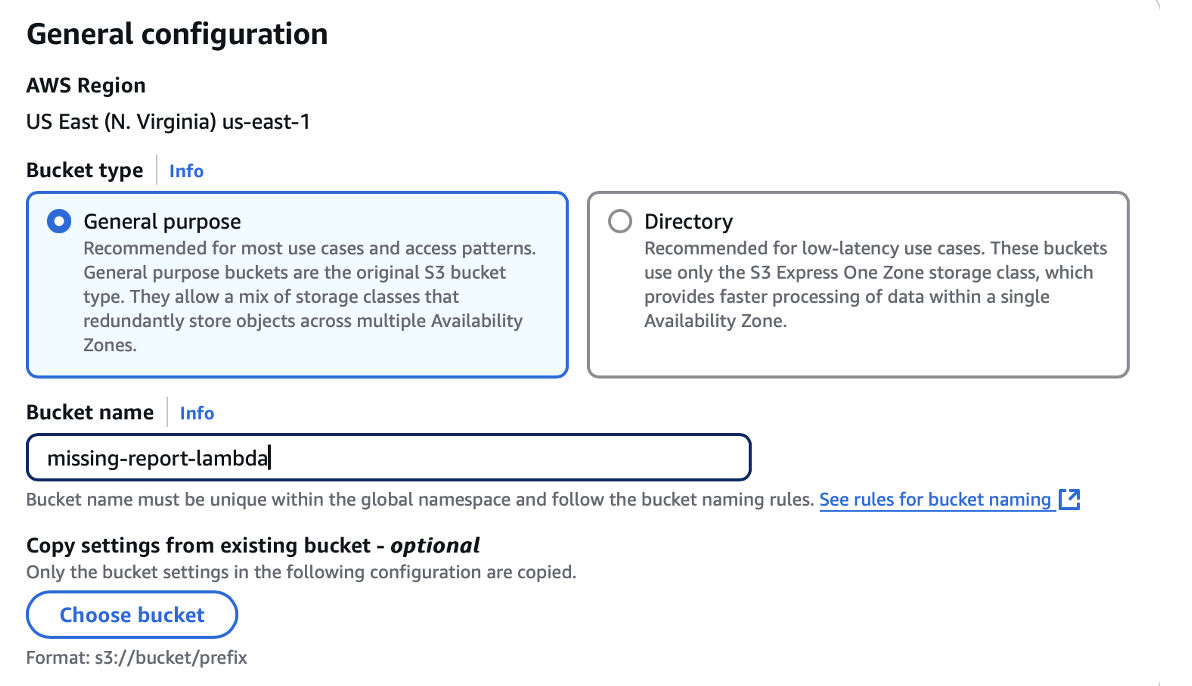

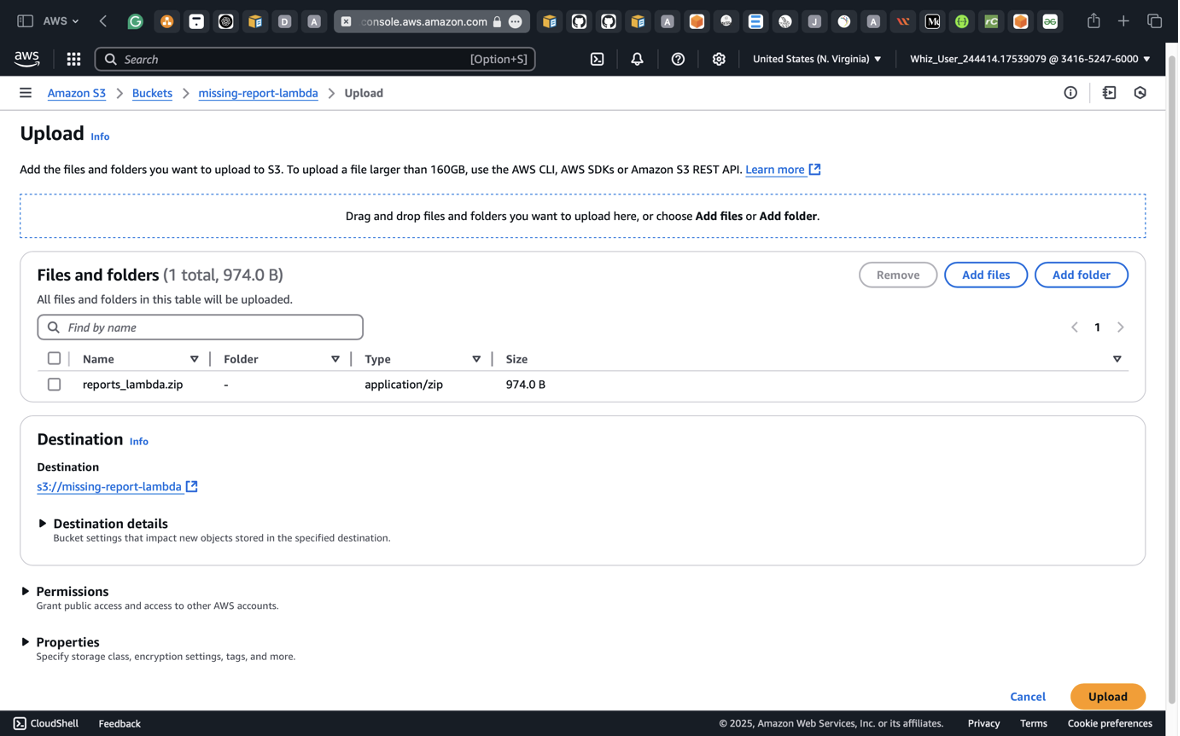

To use these functions and deploy them with our CloudFormation template, we need to zip each function (together with any dependencies it needs, such as thefuzz for the matching function) and upload the zip files to an S3 bucket.

Navigate to S3 on the console

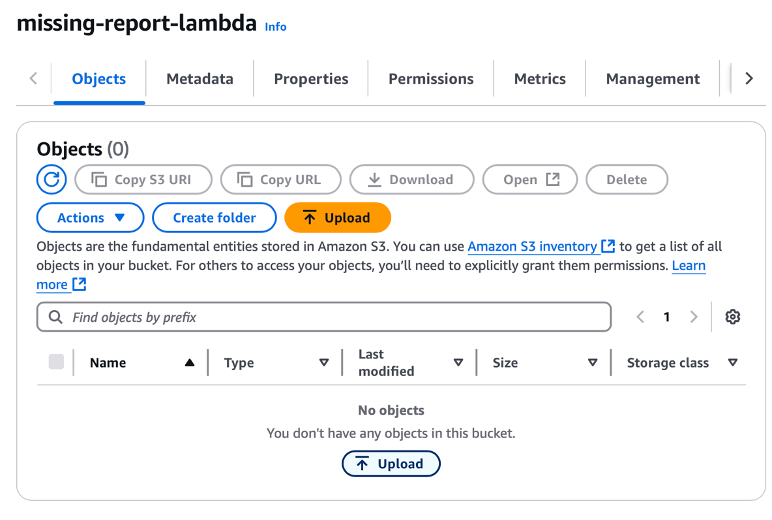

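Create a bucket. The template below uses the name missing-report-lambda; since bucket names are globally unique, you may need to choose your own and update the S3Bucket values to match.

Leave all other settings at their defaults and choose Create bucket

Click the new bucket’s name and upload the zip files into it

- Click Upload and then proceed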
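The packaging and upload can also be scripted. This is a minimal sketch under several assumptions: the handler files are named `users.py`, `reports.py` and `matches.py` (to match the `Handler` values in the template below), thefuzz has been installed into a local folder called `matching_deps` (a hypothetical name, e.g. via `pip install thefuzz -t matching_deps`), and boto3 credentials are configured. If a dependency includes compiled code, install a build that targets Lambda's Linux environment.

```python
import os
import zipfile


def build_zip(handler_file, zip_path, dependency_dir=None):
    """Zip a handler (at the archive root) plus any locally installed dependencies."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        # Lambda resolves "users.lambda_handler" to users.py at the archive root.
        archive.write(handler_file, arcname=os.path.basename(handler_file))
        if dependency_dir:
            for root, _dirs, files in os.walk(dependency_dir):
                for name in files:
                    full_path = os.path.join(root, name)
                    # Paths relative to the dependency folder put packages at the root.
                    archive.write(full_path, arcname=os.path.relpath(full_path, dependency_dir))
    return zip_path


def package_and_upload(bucket="missing-report-lambda"):
    """Build the three packages and upload them (needs boto3 and AWS credentials)."""
    import boto3

    s3 = boto3.client("s3")
    packages = [
        (build_zip("users.py", "users_lambda.zip"), "users_lambda.zip"),
        (build_zip("reports.py", "reports_lambda.zip"), "reports_lambda.zip"),
        (build_zip("matches.py", "matching_lambda.zip", "matching_deps"), "matching_lambda.zip"),
    ]
    for local_path, key in packages:
        s3.upload_file(local_path, bucket, key)
```

The S3 keys match the `S3Key` values in the template, so after `package_and_upload()` runs, the stack update can find each package.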

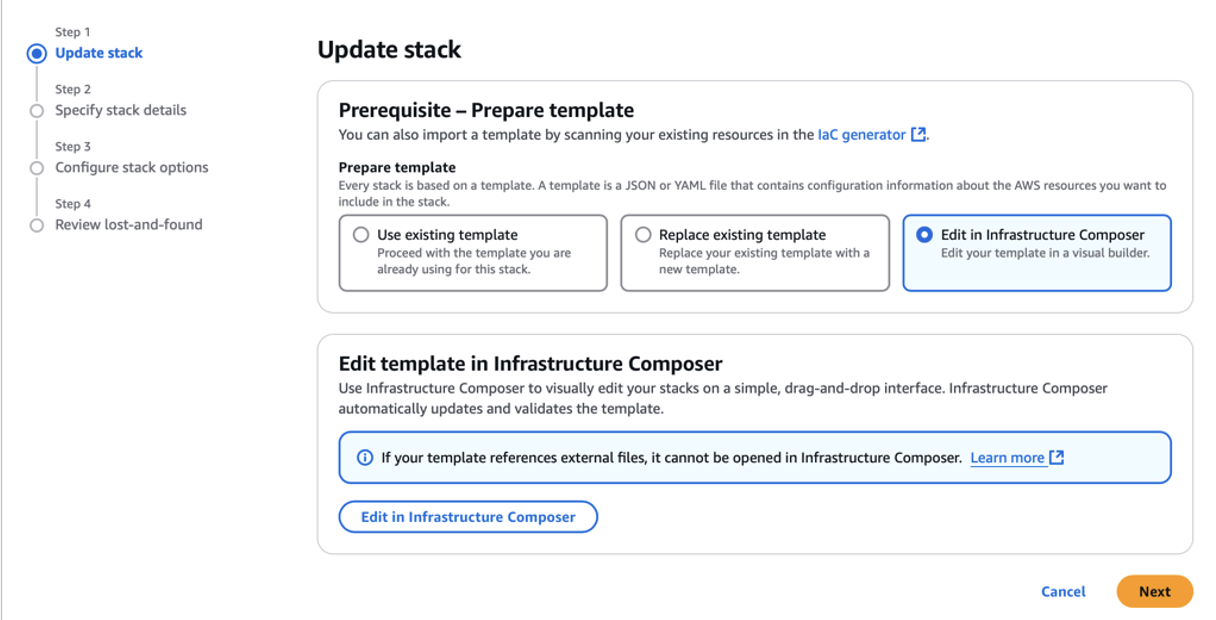

Next, we update our CloudFormation template:

- Select the stack, choose Update, and edit the template in Infrastructure Composer

Next, edit the Template part of the canvas, then validate

Copy and paste the following resources under Resources:

```yaml
  # IAM role for Lambda - access DynamoDB, CloudWatch Logs and SNS
  LambdaExecutionRole:
    Type: 'AWS::IAM::Role'
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - lambda.amazonaws.com
            Action:
              - 'sts:AssumeRole'
      Policies:
        - PolicyName: LFAllAccess
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow
                Action:
                  - 'dynamodb:PutItem'
                  - 'dynamodb:GetItem'
                  - 'dynamodb:UpdateItem'
                  - 'dynamodb:DeleteItem'
                  - 'dynamodb:Scan'
                  - 'dynamodb:Query'
                  - 'dynamodb:BatchGetItem'
                  - 'dynamodb:BatchWriteItem'
                  - 'dynamodb:TagResource'
                  - 'dynamodb:UntagResource'
                  # stream permissions used by the matching Lambda's event source mapping
                  - 'dynamodb:GetRecords'
                  - 'dynamodb:GetShardIterator'
                  - 'dynamodb:DescribeStream'
                  - 'dynamodb:ListStreams'
                Resource: '*'
              - Effect: Allow
                Action:
                  - 'logs:CreateLogGroup'
                  - 'logs:CreateLogStream'
                  - 'logs:PutLogEvents'
                Resource: "*"
              - Effect: Allow
                Action:
                  - 'sns:Publish'
                  - 'sns:Subscribe'
                Resource: "*"

  # Lambda function - users
  UsersLambda:
    Type: 'AWS::Lambda::Function'
    Properties:
      FunctionName: UsersLambdaFunction
      Runtime: python3.9
      Handler: users.lambda_handler   # users.py inside users_lambda.zip
      Code:
        S3Bucket: "missing-report-lambda"
        S3Key: "users_lambda.zip"
      Role: !GetAtt LambdaExecutionRole.Arn
      Timeout: 30
      Environment:
        Variables:
          NOTIFS: !Ref LFsnsTopic   # Ref on an SNS topic returns its ARN
    DependsOn:
      - Users
      - LFsnsTopic

  # Lambda function - reports
  ReportsLambda:
    Type: 'AWS::Lambda::Function'
    Properties:
      FunctionName: ReportsLambdaFunction
      Runtime: python3.9
      Handler: reports.lambda_handler
      Code:
        S3Bucket: "missing-report-lambda"
        S3Key: "reports_lambda.zip"
      Role: !GetAtt LambdaExecutionRole.Arn
      Timeout: 30
      Environment:
        Variables:
          NOTIFS: !Ref LFsnsTopic
    DependsOn:
      - LostAndFound
      - LFsnsTopic

  # Lambda function - matching
  MatchingLambda:
    Type: 'AWS::Lambda::Function'
    Properties:
      FunctionName: MatchingLambdaFunction
      Runtime: python3.9
      Handler: matches.lambda_handler
      Code:
        S3Bucket: "missing-report-lambda"
        S3Key: "matching_lambda.zip"
      Role: !GetAtt LambdaExecutionRole.Arn
      Timeout: 30
      Environment:
        Variables:
          NOTIFS: !Ref LFsnsTopic
    DependsOn:
      - LostAndFound
      - LFsnsTopic

  # Connects the LostandFound table's stream to the matching Lambda
  MatchingLambdaEvent:
    Type: 'AWS::Lambda::EventSourceMapping'
    Properties:
      EventSourceArn: !GetAtt LostAndFound.StreamArn
      FunctionName: !GetAtt MatchingLambda.Arn
      StartingPosition: TRIM_HORIZON
      BatchSize: 1
    DependsOn:
      - LostAndFound
```

This code creates the Lambda functions, pointing each one at its zip file in the S3 bucket, and creates an execution role that gives the functions access to DynamoDB, CloudWatch (for logs and monitoring) and SNS notifications.

The MatchingLambdaEvent resource (an event source mapping) lets the matching function be triggered by the LostandFound table's DynamoDB stream. The stream delivers every change to the table, but the function only acts on INSERT events, since an insert signifies the creation of a new report.
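To see what the matching function receives, the hypothetical helper below wraps a plain report in the shape of a DynamoDB stream record, with each string attribute as `{"S": value}`, which is why the matching code reads values like `new_report["name"]["S"]`. It mimics only the fields that `lambda_handler` uses; real stream records contain more (event IDs, keys, sequence numbers and so on), and the report values are made up.

```python
def to_stream_insert_event(report):
    """Wrap a plain dict of string attributes as a one-record DynamoDB stream INSERT event."""
    new_image = {key: {"S": str(value)} for key, value in report.items()}
    return {"Records": [{"eventName": "INSERT", "dynamodb": {"NewImage": new_image}}]}


def inserted_images(event):
    """Return the new images of INSERT records only, as the matching handler does."""
    return [
        record["dynamodb"]["NewImage"]
        for record in event["Records"]
        if record["eventName"] == "INSERT"
    ]


event = to_stream_insert_event({
    "report_id": "abc-123",
    "reported_by": "student@example.com",
    "name": "Black iPhone 13",
    "description": "Black phone in a clear case",
    "last_seen": "Library",
    "item_status": "lost",
})
print(inserted_images(event)[0]["item_status"])  # {'S': 'lost'}
```

A claim, which updates `item_status`, produces a MODIFY record rather than an INSERT, so it does not trigger a new matching run.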

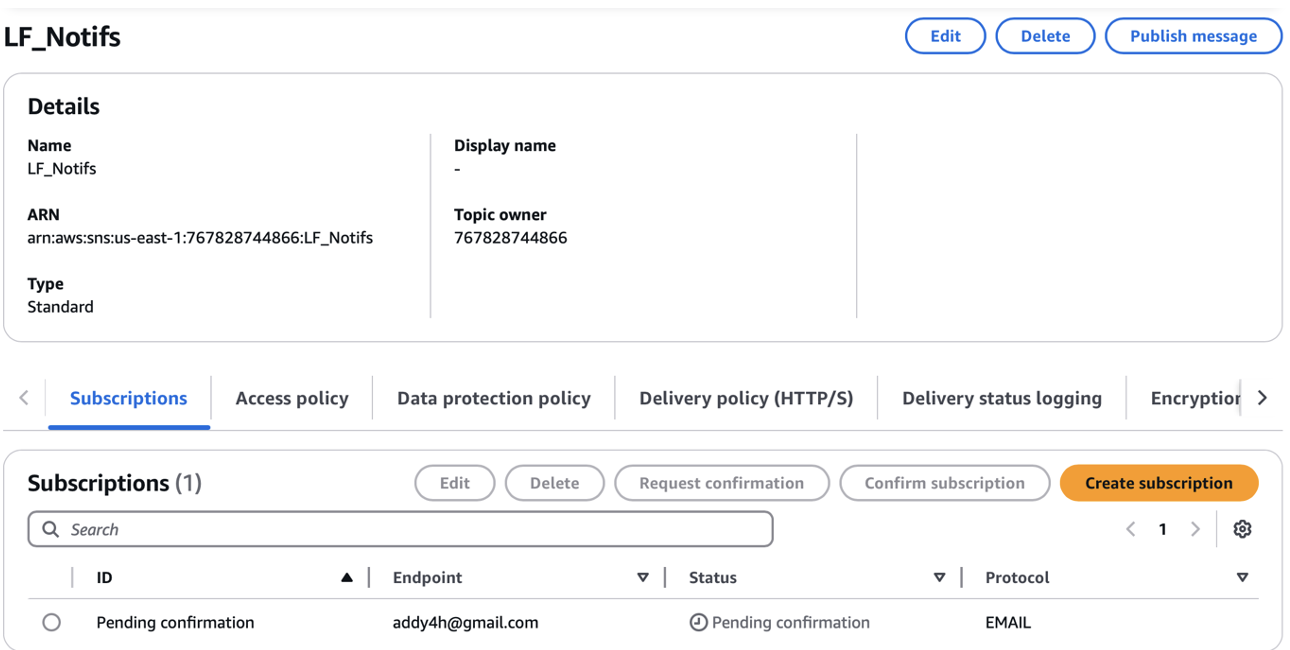

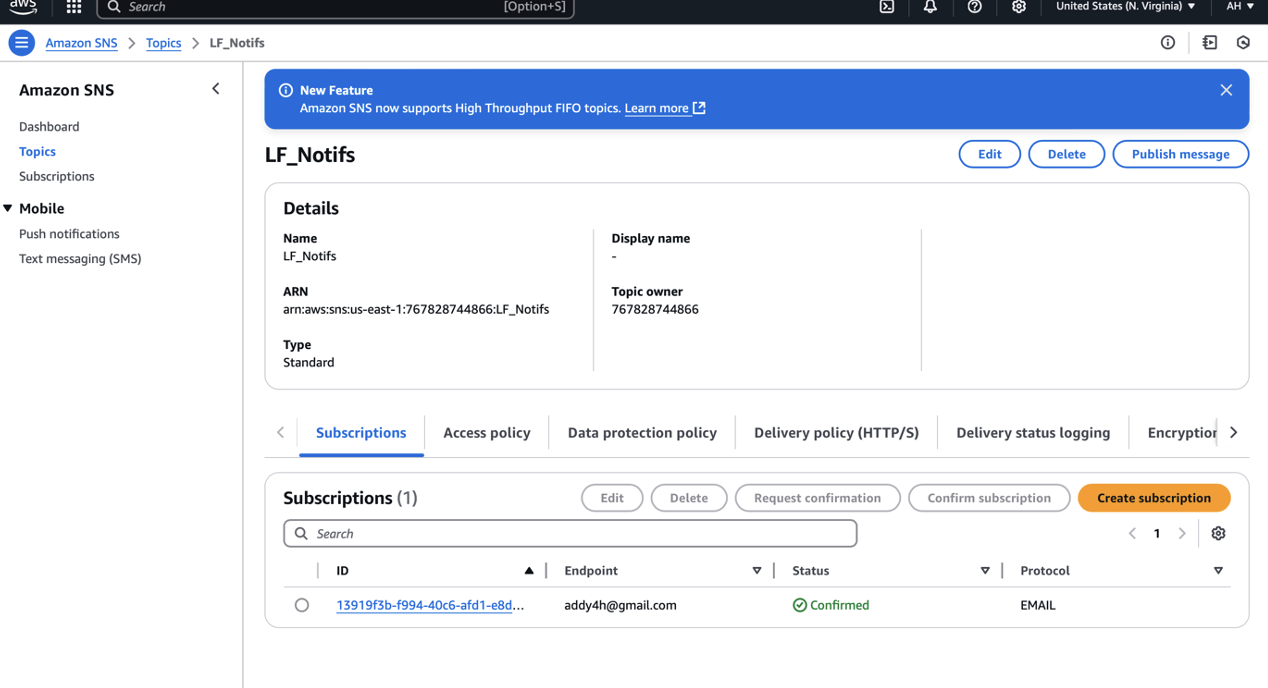

SNS notifications

In our CloudFormation template, we created the notification topic this way:

```yaml
  # SNS topic for emails
  LFsnsTopic:
    Type: 'AWS::SNS::Topic'
    Properties:
      TopicName: 'LF_Notifs'
```

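The subscribe_user helper, called from create_user, subscribes each user with a filter policy based on user_id (the user's email). Together with the user_id message attribute set in the claiming and matching functions, this ensures that each user only receives notifications relevant to them. Note that SNS sends each new email subscriber a confirmation message, and nothing is delivered until the subscription is confirmed.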
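The filtering works by comparing each subscription's policy with the attributes on a published message: a subscriber created with `{"user_id": ["alice@example.com"]}` only receives messages whose `user_id` attribute equals that address. The function below is a deliberately simplified local model of that exact-string matching, not an AWS API (SNS itself supports further operators such as prefix and numeric matching); `policy_accepts` and the addresses are hypothetical.

```python
import json


def policy_accepts(filter_policy_json, message_attributes):
    """Simplified model of SNS attribute filtering for exact string values.

    Every key in the policy must be present in the message attributes, and the
    attribute's StringValue must be one of the allowed values for that key.
    """
    policy = json.loads(filter_policy_json)
    for key, allowed_values in policy.items():
        attribute = message_attributes.get(key)
        if attribute is None or attribute.get("StringValue") not in allowed_values:
            return False
    return True


# Policy as set by subscribe_user for a (made-up) address.
policy = json.dumps({"user_id": ["alice@example.com"]})
for_alice = {"user_id": {"DataType": "String", "StringValue": "alice@example.com"}}
for_bob = {"user_id": {"DataType": "String", "StringValue": "bob@example.com"}}
print(policy_accepts(policy, for_alice), policy_accepts(policy, for_bob))  # True False
```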

The topic's ARN is passed to our Lambda functions as the NOTIFS environment variable.

API Gateway

Under API Gateway, we have four major things to consider:

Creation of the API and its methods

Lambda invocation permissions

Enabling CORS

Deployment

Once again, all of this is done using CloudFormation.

Creation of API and methods

We have two main paths, /users and /reports, so the template must define a resource for each.

We also have sub-resources (sub-paths), e.g. /users/{user_id}, which is defined by UserIdResource:

```yaml
  # API gateway
  LostAndFoundAPI:
    Type: 'AWS::ApiGateway::RestApi'
    Properties:
      Name: LostAndFoundApi
      Description: API for Lost and Found tracker
    DependsOn:
      - ReportsLambda
      - MatchingLambda
      - UsersLambda

  # define path for users
  UsersResource:
    Type: 'AWS::ApiGateway::Resource'
    Properties:
      RestApiId: !Ref LostAndFoundAPI
      ParentId: !GetAtt LostAndFoundAPI.RootResourceId
      PathPart: 'users'
    DependsOn:
      - LostAndFoundAPI

  # define path for user_id
  UserIdResource:
    Type: AWS::ApiGateway::Resource
    Properties:
      RestApiId: !Ref LostAndFoundAPI
      ParentId: !Ref UsersResource
      PathPart: "{user_id}"
    DependsOn:
      - UsersResource

  # define path for reports
  ReportsResource:
    Type: 'AWS::ApiGateway::Resource'
    Properties:
      RestApiId: !Ref LostAndFoundAPI
      ParentId: !GetAtt LostAndFoundAPI.RootResourceId
      PathPart: 'reports'
    DependsOn:
      - LostAndFoundAPI

  # define path for report_id
  ReportIdResource:
    Type: AWS::ApiGateway::Resource
    Properties:
      RestApiId: !Ref LostAndFoundAPI
      ParentId: !Ref ReportsResource
      PathPart: "{report_id}"
    DependsOn:
      - ReportsResource
```

Next, we create the methods for the resources; here are two examples. The PATCH method on /reports/{report_id} requires the report ID to be passed as a path parameter, so we declare it as a method request parameter and map it in the integration request parameters.

```yaml
  # POST method - users
  UsersMethodPOST:
    Type: 'AWS::ApiGateway::Method'
    Properties:
      RestApiId: !Ref LostAndFoundAPI
      ResourceId: !Ref UsersResource
      HttpMethod: POST
      AuthorizationType: NONE
      Integration:
        Type: AWS_PROXY
        IntegrationHttpMethod: POST   # Lambda integrations are always invoked with POST
        Uri:
          Fn::Sub: "arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${UsersLambda.Arn}/invocations"
    DependsOn:
      - UsersResource

  # PATCH method - reports
  ReportsMethodPATCH:
    Type: 'AWS::ApiGateway::Method'
    Properties:
      RestApiId: !Ref LostAndFoundAPI
      ResourceId: !Ref ReportIdResource
      HttpMethod: PATCH
      AuthorizationType: NONE
      RequestParameters:
        method.request.path.report_id: true   # declare the path parameter as required
      Integration:
        Type: AWS_PROXY
        IntegrationHttpMethod: POST
        Uri:
          Fn::Sub: "arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${ReportsLambda.Arn}/invocations"
        RequestParameters:
          integration.request.path.report_id: "method.request.path.report_id"
    DependsOn:
      - ReportIdResource
```

For this project, the methods map to the Lambda functions as follows:

| Resource | Method | Lambda Integration |
| --- | --- | --- |
| /users | POST | create_user (Users Lambda) |
| /users/{user_id} | GET | get_user (Users Lambda) |
| /reports | POST | create_report (Reports Lambda) |
| /reports | GET | get_reports (Reports Lambda) |
| /reports/{report_id} | PATCH | make_claim (Reports Lambda) |
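Once deployed, the endpoints in the table can be called from any HTTP client. A minimal sketch using only the standard library follows; `build_request` is a hypothetical helper, the base URL is a placeholder for the stack's API URL output, and the example values are made up. The JSON field names (`user_id`, `name`, `reported_by`, `description`, `last_seen`, `item_status`) are the ones the Lambda functions read.

```python
import json
import urllib.request


def build_request(base_url, method, path, payload=None):
    """Build (but do not send) a JSON request to the Lost & Found API."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=data,
        headers={"Content-Type": "application/json"},
        method=method,
    )


BASE_URL = "https://<api-id>.execute-api.<region>.amazonaws.com/prod"  # placeholder

requests_to_send = [
    build_request(BASE_URL, "POST", "/users", {"user_id": "alice@example.com", "name": "Alice"}),
    build_request(BASE_URL, "POST", "/reports", {
        "reported_by": "alice@example.com",
        "name": "Black iPhone 13",
        "description": "Black phone in a clear case",
        "last_seen": "Library, after the AWS talk",
        "item_status": "lost",
    }),
    build_request(BASE_URL, "GET", "/reports"),
]

# To actually send one (after replacing the placeholder URL):
# with urllib.request.urlopen(requests_to_send[0]) as response:
#     print(response.status, response.read().decode())
```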

Lambda Invocation permissions

```yaml
  ReportLambdaInvokePermission:
    Type: 'AWS::Lambda::Permission'
    Properties:
      Action: 'lambda:InvokeFunction'
      FunctionName: !GetAtt ReportsLambda.Arn
      Principal: apigateway.amazonaws.com
      # any stage, method and path of this API may invoke the function
      SourceArn: !Sub "arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${LostAndFoundAPI}/*"
    DependsOn:
      - Deployment
```

API Gateway needs permission to invoke every Lambda function that backs an endpoint. This is the permission for the Reports Lambda function; the Users Lambda needs an equivalent resource.
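The `SourceArn` follows the execute-api ARN format `arn:aws:execute-api:region:account-id:api-id/stage/method/resource-path`, where `*` acts as a wildcard. The template grants the whole API with `/*`; the hypothetical helper below composes the segments explicitly, which shows how a narrower permission could be written. The region, account and API ID are made up.

```python
def execute_api_arn(region, account_id, api_id, stage="*", method="*", resource_path="*"):
    """Compose an execute-api ARN; '*' wildcards any stage, method or path."""
    return f"arn:aws:execute-api:{region}:{account_id}:{api_id}/{stage}/{method}/{resource_path}"


# Any stage, method and path of the API.
print(execute_api_arn("us-east-1", "123456789012", "abc123"))
# Only PATCH calls to /reports/{report_id} on the prod stage.
print(execute_api_arn("us-east-1", "123456789012", "abc123", "prod", "PATCH", "reports/*"))
```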

Enabling CORS

To enable CORS with CloudFormation, we have to create an OPTIONS method for every resource (e.g. /reports) and sub-resource (e.g. /users/{user_id}). This is the example for /reports/{report_id}:

```yaml
  ReportIdOptionsMethod:
    Type: 'AWS::ApiGateway::Method'
    Properties:
      RestApiId: !Ref LostAndFoundAPI
      ResourceId: !Ref ReportIdResource
      HttpMethod: OPTIONS
      AuthorizationType: NONE
      Integration:
        Type: MOCK   # API Gateway answers the preflight itself; no Lambda is called
        IntegrationResponses:
          - StatusCode: "200"
            ResponseParameters:
              method.response.header.Access-Control-Allow-Headers: "'Content-Type,X-Amz-Date,Authorization,X-Api-Key,X-Amz-Security-Token'"
              method.response.header.Access-Control-Allow-Methods: "'OPTIONS,PATCH'"
              method.response.header.Access-Control-Allow-Origin: "'*'"
        RequestTemplates:
          application/json: "{\"statusCode\":200}"
      MethodResponses:
        - StatusCode: "200"
          ResponseParameters:
            method.response.header.Access-Control-Allow-Headers: true
            method.response.header.Access-Control-Allow-Methods: true
            method.response.header.Access-Control-Allow-Origin: true
```

The Access-Control-Allow-Origin header is set to the wildcard '*' so that any domain can access the API. Under ResponseParameters, Access-Control-Allow-Methods lists the methods that belong to that resource. The report_id sub-resource only has the PATCH method (plus OPTIONS itself), so that is all we include.

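The OPTIONS method answers the browser's preflight request: before sending a cross-origin request such as a PATCH, or a request with a JSON Content-Type, the browser first asks the server whether that request is allowed.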
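To make the preflight exchange concrete: the browser sends OPTIONS with `Origin` and `Access-Control-Request-Method` headers, and proceeds only if the response allows that origin and method. The function below is a simplified, hypothetical model of that check against the headers configured in ReportIdOptionsMethod; it ignores requested headers, credentials, and the rule that GET, HEAD and POST are always permitted.

```python
def preflight_allows(response_headers, origin, method):
    """Simplified model of a browser's CORS preflight check (origin and method only)."""
    allowed_origin = response_headers.get("Access-Control-Allow-Origin", "")
    allowed_methods = {
        m.strip().upper()
        for m in response_headers.get("Access-Control-Allow-Methods", "").split(",")
        if m.strip()
    }
    origin_ok = allowed_origin in ("*", origin)
    return origin_ok and method.upper() in allowed_methods


# Headers returned by the OPTIONS method on /reports/{report_id}.
options_headers = {
    "Access-Control-Allow-Headers": "Content-Type,X-Amz-Date,Authorization,X-Api-Key,X-Amz-Security-Token",
    "Access-Control-Allow-Methods": "OPTIONS,PATCH",
    "Access-Control-Allow-Origin": "*",
}
print(preflight_allows(options_headers, "https://frontend.example.com", "PATCH"))   # True
print(preflight_allows(options_headers, "https://frontend.example.com", "DELETE"))  # False
```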

Because our methods use the AWS_PROXY integration type, API Gateway passes the request to the Lambda function as-is and returns the function's response unchanged. The function itself must therefore set Access-Control-Allow-Origin to the wildcard in its response headers; without it, browsers block the API's responses to pages served from any other domain.
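Since every proxy response must carry the CORS header, the repeated response dictionaries in the functions could be produced by a single helper. This is an optional refactor sketch, not part of the original code; `build_response` is a hypothetical name.

```python
import json

# Headers every proxy response needs so browsers on other origins can read it.
CORS_HEADERS = {
    "Access-Control-Allow-Origin": "*",
    "Content-Type": "application/json",
}


def build_response(status_code, body):
    """Return an API Gateway proxy response with CORS headers and a JSON body."""
    return {
        "statusCode": status_code,
        "headers": dict(CORS_HEADERS),  # copy so callers cannot mutate the shared dict
        "body": json.dumps(body),
    }


# e.g. inside get_user:  return build_response(404, "User not found")
print(build_response(404, "User not found")["body"])  # "User not found"
```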

Deployment

# API gateway deployment

Deployment:

Type: AWS::ApiGateway::Deployment

DependsOn:

- ReportsMethodPOST

- ReportsMethodGET

- ReportsMethodPATCH

- UsersMethodPOST

- UsersMethodGET

- UsersOptionsMethod

- UserIdOptionsMethod

- ReportsOptionsMethod

- ReportIdOptionsMethod

Properties:

RestApiId: !Ref LostAndFoundAPI

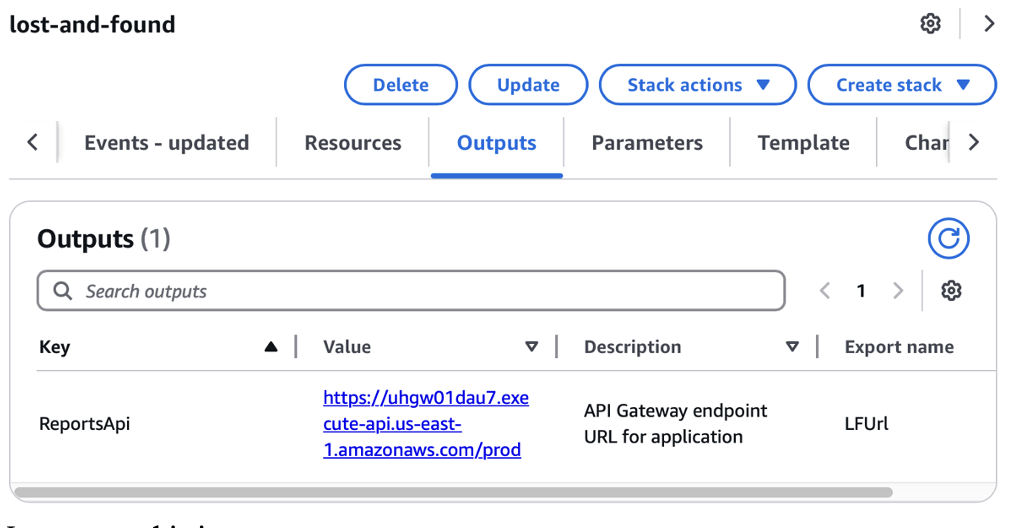

StageName: prod

# outputs

Outputs:

ReportsApi:

Description: "API Gateway endpoint URL for application"

Value:

Fn::Sub: "https://${LostAndFoundAPI}.execute-api.${AWS::Region}.amazonaws.com/prod"

Export:

Name: "LFUrl"

Transform: AWS::Serverless-2016-10-31

Deploy the updated stack, then open the stack's Outputs tab to retrieve the API link.
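The same URL can also be read programmatically. The sketch assumes boto3 and a stack named `lost-and-found` (a hypothetical name); `describe_stacks` returns each output as an `OutputKey`/`OutputValue` pair, and `ReportsApi` is the key defined in the Outputs section. Both helper names are hypothetical.

```python
def find_output(describe_stacks_response, output_key):
    """Return the value of one stack output from a describe_stacks response, or None."""
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output["OutputKey"] == output_key:
                return output["OutputValue"]
    return None


def get_api_url(stack_name="lost-and-found"):
    """Look up the deployed API URL (needs boto3 and AWS credentials)."""
    import boto3

    response = boto3.client("cloudformation").describe_stacks(StackName=stack_name)
    return find_output(response, "ReportsApi")
```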

Testing

All tests were successful!

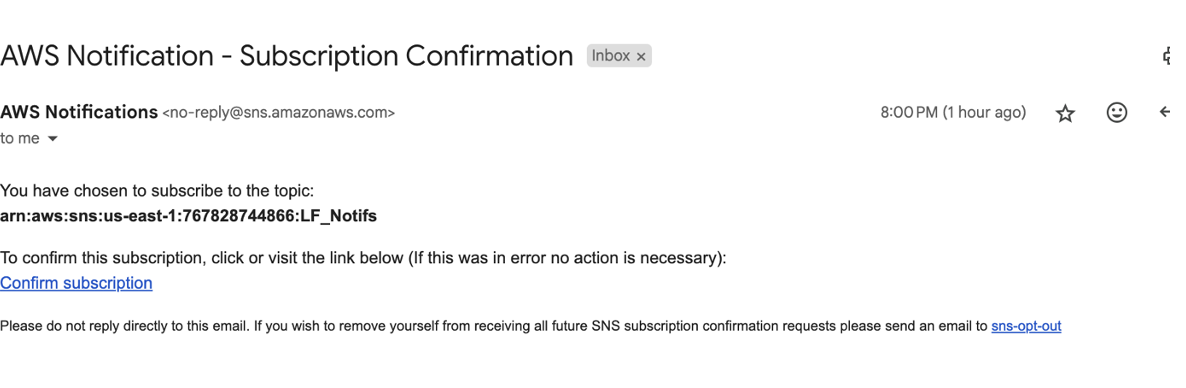

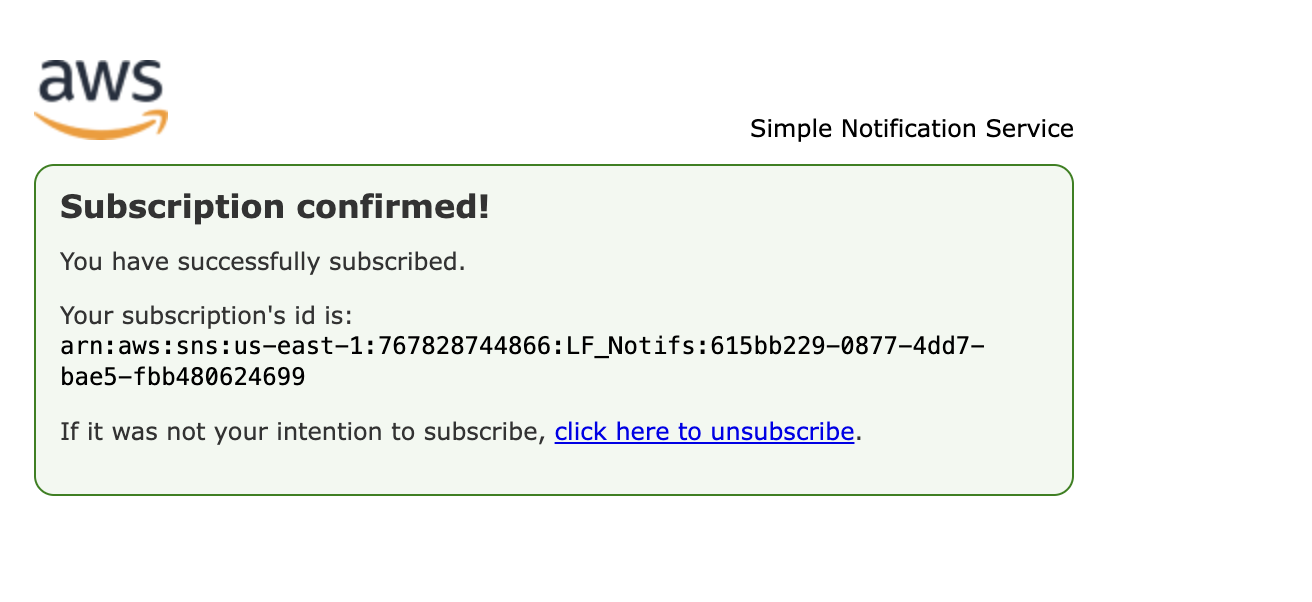

The user was able to log in and subscribe to the SNS topic

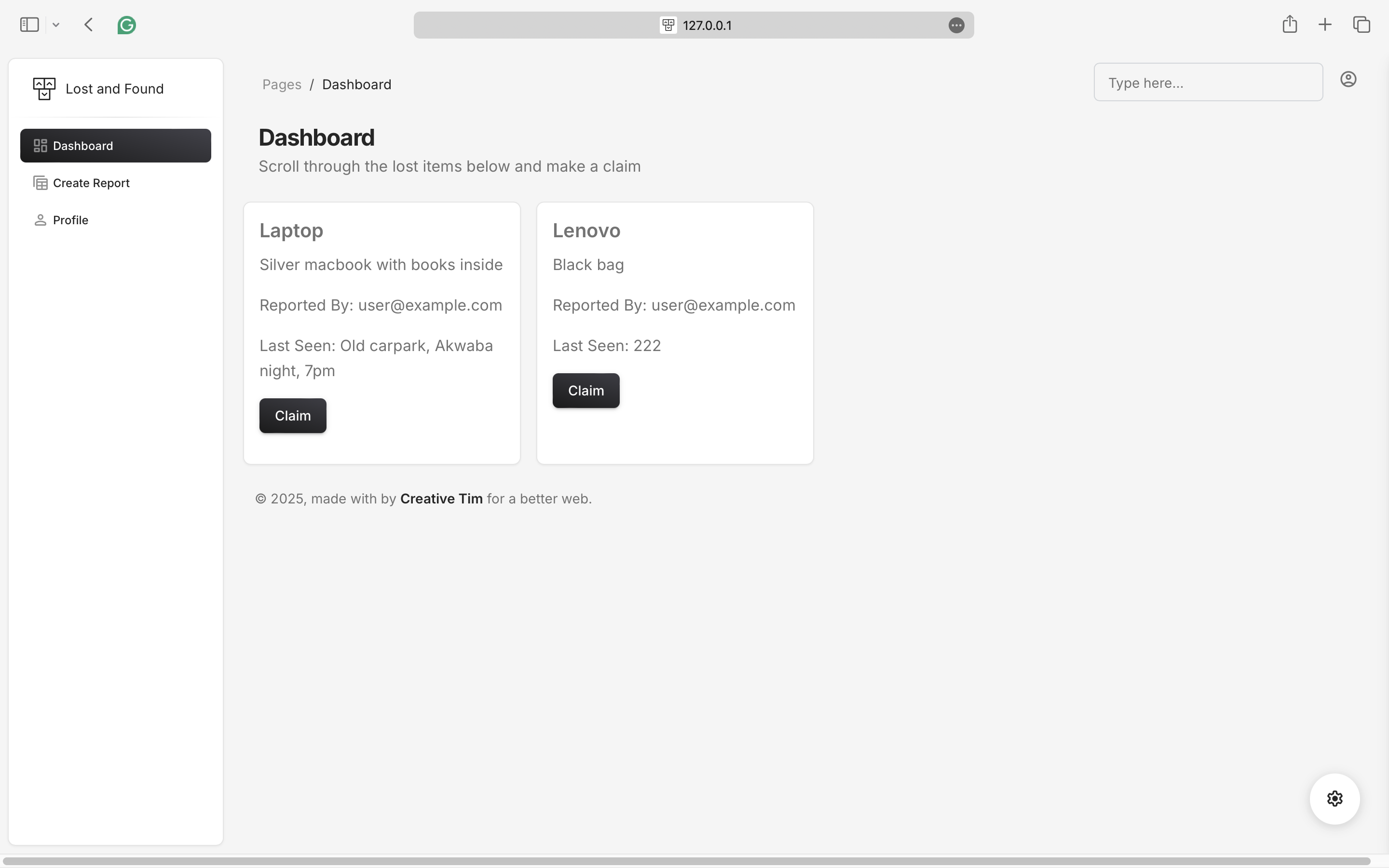

The user successfully created a lost-item report

The dashboard displays only lost reports, including the newly created one

Users can claim reports

The system finds a potential match

Conclusion

In this small example, we have shown how AWS can automate everyday tasks that we usually do by hand. Future work may include validating or authenticating claimants to enhance safety in the community. Another direction is using Amazon Cognito to handle user registration, and adding a check so that claimed items are cleared only after they have actually been retrieved.

Here is the link to the full back end for the system

Thank you for reading. I hope you've found your phone by now!
