Build a simple web scraper with Bun, Hono and Cheerio

Judge-Paul Ogebe

In this article, we’ll walk through how to build a simple web scraper using Bun, Hono, and Cheerio. For this example, we’ll build a web server with endpoints that return crypto prices without using an external API, by scraping the data directly from a website’s HTML, in our case CoinGecko (https://www.coingecko.com). I’ll also show you how to use ChatGPT (or any LLM of your choice) to write the code that extracts the details you need. I’m using ChatGPT over Claude because it let me paste large files: the HTML content I got was close to 1MB, and Claude couldn’t handle it.

What You’ll Need

Bun: A modern JavaScript runtime built for speed.

Hono: A lightweight web framework/router to be used with Bun.

Cheerio: A fast, jQuery-like library for parsing and traversing HTML on the server.

Setting Up Your Project

Install Bun by following the steps outlined in its docs.

Set up a new Hono project with Bun.

bun create hono@latest web-scraper
# select package manager and install dependencies
✔ Using target directory … web-scraper
? Which template do you want to use? bun
? Do you want to install project dependencies? yes
? Which package manager do you want to use? bun

Install cheerio and start dev server.

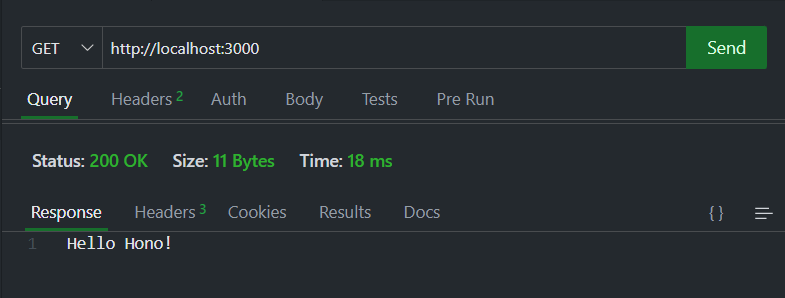

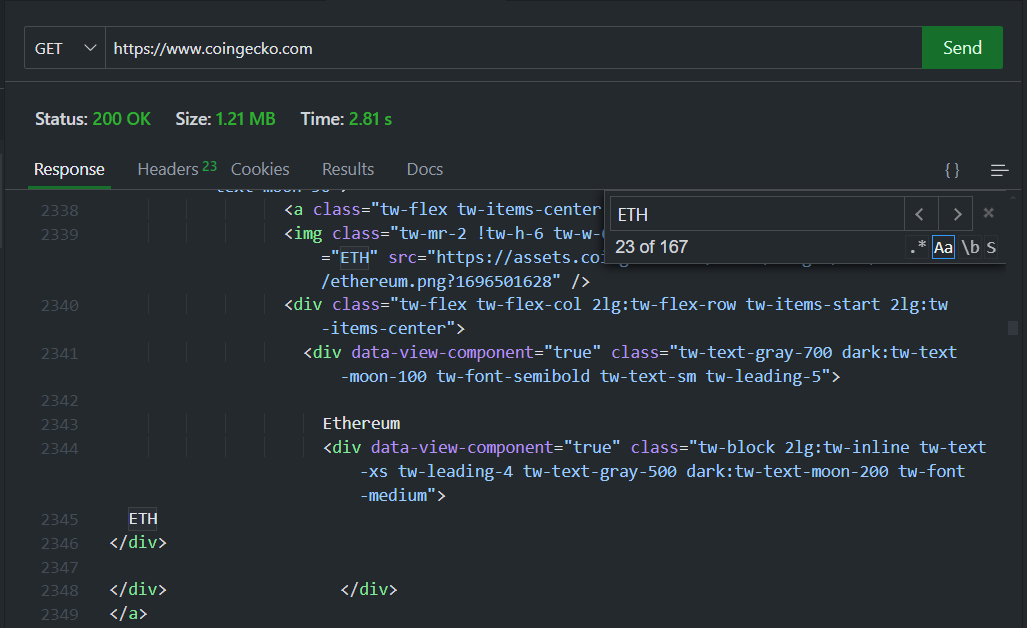

bun add cheerio
bun dev

You should get this response without an error if everything was done right.

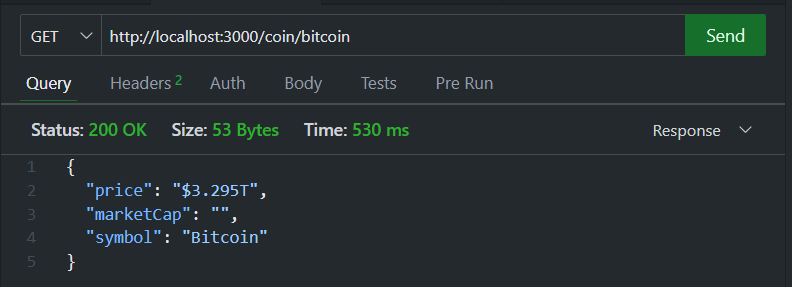

Using Thunder Client for HTTP testing.

Create the endpoints

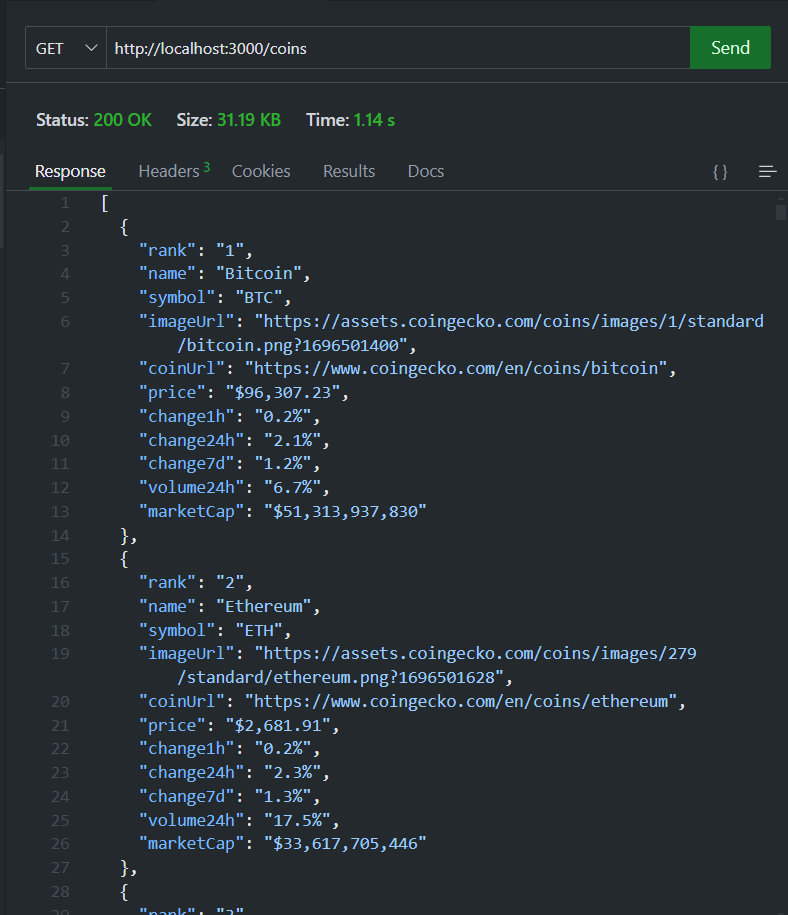

Now, we'll create two endpoints:

/coins: Retrieves a list of top cryptocurrencies along with their data, including prices.

/coin/:name: Retrieves the same data for a single cryptocurrency using its name as the identifier.

import { Hono } from "hono";

const app = new Hono();

const cryptoData = [
  // dummy data used for testing
];

app.get("/coins", (c) => {
  return c.json(cryptoData);
});

app.get("/coin/:name", (c) => {
  const name = c.req.param("name");
  const coin = cryptoData.find((crypto) => crypto.name === name);
  if (coin) {
    return c.json(coin);
  } else {
    return c.json({ error: "Coin not found" }, 404);
  }
});

export default app;

Testing for Static vs. Dynamic Content

Before scraping any website using this method, it's essential to determine whether the content you wish to extract is rendered statically in the HTML source or dynamically using client-side frameworks like those powering Single Page Applications (SPAs).

Why It Matters

Cheerio parses static HTML content. However, if the data you're trying to scrape is dynamically loaded in the browser—such as when using a SPA framework like React or fetching data from an external API—it won't be present in the initial HTML response. In such cases, Cheerio may not find the expected content, and you might need a headless browser (e.g., Puppeteer or Playwright) to execute JavaScript.

How to Test the Target Website

Make a request to the exact URL you’re trying to scrape.

Search the returned HTML for the data you’re interested in. If you can find it, it’s statically rendered.
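The two steps above can also be scripted. The following is a minimal sketch, not part of the original project: the helper names isInStaticHtml and checkUrl are hypothetical, and the two HTML snippets are made up so the check can be tried offline.

```javascript
// Returns true when every expected snippet appears in the raw HTML string,
// which suggests the data is server-rendered rather than injected by JS.
function isInStaticHtml(html, expectedSnippets) {
  const haystack = html.toLowerCase();
  return expectedSnippets.every((s) => haystack.includes(s.toLowerCase()));
}

// Hypothetical helper: fetches a page and runs the check above on its body.
async function checkUrl(url, expectedSnippets) {
  const res = await fetch(url);
  if (!res.ok) throw new Error(`Request failed with status ${res.status}`);
  return isInStaticHtml(await res.text(), expectedSnippets);
}

// Offline demonstration with two made-up HTML snippets.
const staticPage = "<table><tr><td>Bitcoin</td><td>BTC</td></tr></table>";
const spaShell = '<div id="root"></div><script src="/bundle.js"></script>';

console.log(isInStaticHtml(staticPage, ["Bitcoin", "BTC"])); // true
console.log(isInStaticHtml(spaShell, ["Bitcoin", "BTC"])); // false
```

Against a live site you would call something like checkUrl("https://www.coingecko.com", ["Bitcoin"]) and treat a false result as a hint that a headless browser may be needed.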

Updating the endpoints

Update the endpoint to retrieve the HTML, then use the load method to parse the HTML string into a Cheerio object. This object allows you to navigate the DOM and manipulate the data as needed.

import { Hono } from "hono";
import { load } from "cheerio";

const app = new Hono();

app.get("/coins", async (c) => {
  const res = await fetch("https://www.coingecko.com");
  const html = await res.text();
  const $ = load(html);
  return c.json(html);
});

Next, we can save the entire HTML content as a file—or just the relevant section if the full HTML is too long—and attach it to ChatGPT. Then, we can ask it to generate Cheerio code to extract the required data.

After generating the code, be sure to test it thoroughly in case the LLM produces inaccurate results.

write cheerio code to loop through this table and extract necessary data like coin name symbol, price market cap e.t.c

// ChatGPT output had some minor mistakes I corrected but this was my final result

app.get("/coins", async (c) => {
  const res = await fetch("https://www.coingecko.com");
  const html = await res.text();
  const $ = load(html);
  const coins = [];

  $("tbody tr").each((index, element) => {
    const row = $(element);
    const rank = row.find("td:nth-child(2)").text().trim();
    const coinElement = row.find("td:nth-child(3) a");
    const name = coinElement
      .find("div.tw-font-semibold")
      .text()
      .trim()
      .split(" ")[0]
      .trim();
    const symbol = coinElement.find("div.tw-text-xs").text().trim();
    const imageUrl = coinElement.find("img").attr("src");
    const coinUrl = "https://www.coingecko.com" + coinElement.attr("href");
    const price = row.find("td[data-sort]").first().text().trim();
    const change1h = row.find("td:nth-child(6)").text().trim();
    const change24h = row.find("td:nth-child(7)").text().trim();
    const change7d = row.find("td:nth-child(8)").text().trim();
    const volume24h = row.find("td:nth-child(9)").text().trim();
    const marketCap = row.find("td:nth-child(10)").text().trim();

    coins.push({
      rank,
      name,
      symbol,
      imageUrl,
      coinUrl,
      price,
      change1h,
      change24h,
      change7d,
      volume24h,
      marketCap,
    });
  });

  return c.json(coins);
});
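Because LLM-generated selectors can silently match nothing and leave you with empty strings, one way to test the result is a quick sanity check on the scraped rows. This is a sketch under assumptions: findScrapeProblems is a hypothetical helper, the required field names mirror the ones used in the /coins endpoint, and the sample rows are made up.

```javascript
// Returns a list of human-readable problems found in scraped rows.
// Field names mirror the ones used in this article's /coins endpoint.
function findScrapeProblems(coins, requiredFields = ["name", "symbol", "price"]) {
  const problems = [];
  if (coins.length === 0) problems.push("no rows were scraped");
  coins.forEach((coin, i) => {
    for (const field of requiredFields) {
      const value = coin[field];
      // A selector that matched nothing typically yields "" or undefined.
      if (typeof value !== "string" || value.trim() === "") {
        problems.push(`row ${i}: missing ${field}`);
      }
    }
  });
  return problems;
}

// Made-up sample: the second row has an empty name.
const sample = [
  { name: "Bitcoin", symbol: "BTC", price: "$100.00" },
  { name: "", symbol: "ETH", price: "$5.00" },
];

console.log(findScrapeProblems(sample)); // one problem, reported for row 1
```

Running a check like this on the array before returning it makes a broken selector visible right away instead of surfacing later as blank fields in the response.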

We can repeat the process for the /coin/:name endpoint using the following prompt:

Take a look at this webpage and write Cheerio code to scrape the necessary coin-related data for that particular coin (e.g., price, market cap, symbol, etc.).

This generated the following response:

app.get("/coin/:name", async (c) => {
  const name = c.req.param("name");
  const res = await fetch(`https://www.coingecko.com/en/coins/${name}`);
  const html = await res.text();
  const $ = load(html);

  const price = $('[data-price-target="price"]').first().text().trim();
  const marketCap = $('[data-price-target="market_cap"]').first().text().trim();
  const symbol = $('meta[property="og:title"]').attr("content").split(" ")[0];

  return c.json({ price, marketCap, symbol });
});

However, when I tested it, it didn’t work as expected.

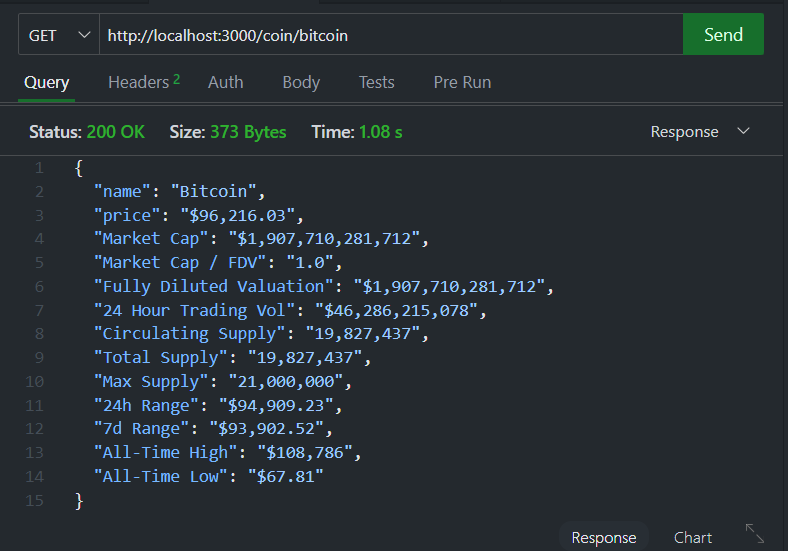

I had to manually inspect the response to find the data I was looking for. I noticed that the <span> element containing the coin’s price had a unique identifier: the attribute data-converter-target="price". Additionally, other essential data, such as market cap and total supply, were within tables. All the tables contained useful data except for the last one.

To extract the necessary information, I retrieved all tables, looped through them, extracted the headers and corresponding data, cleaned them, and ultimately arrived at the following solution:

app.get("/coin/:name", async (c) => {
  const name = c.req.param("name");
  const res = await fetch(`https://www.coingecko.com/en/coins/${name}`);
  const html = await res.text();
  const $ = load(html);

  // Every table except the last one holds useful key/value rows
  const tables = $("table").slice(0, -1);
  const data = {};

  // each() rather than map(): we only need the side effect of filling data
  tables.each((tableIndex, table) => {
    $(table)
      .find("tr")
      .each((rowIndex, row) => {
        // Keep only the first line of each cell's text
        const key = $(row).find("th").text().trim().split("\n")[0];
        const value = $(row).find("td").text().trim().split("\n")[0];
        if (key && value) {
          data[key] = value;
        }
      });
  });

  const coin = {
    // Optional chaining avoids a crash when the og:title meta tag is missing
    name: $('meta[property="og:title"]').attr("content")?.split(" ")[0],
    price: $('[data-converter-target="price"]').first().text().trim(),
    ...data,
  };

  if (coin.name) {
    return c.json(coin);
  } else {
    return c.json({ error: "Coin not found" }, 404);
  }
});

Conclusion

In this article, we explored how to build a simple web scraper using Bun, Hono, and Cheerio to retrieve cryptocurrency prices directly from CoinGecko without relying on an external API. We covered setting up a Hono server, handling static vs. dynamic content, and using Cheerio to extract relevant data from the website’s HTML. Full code available here
