DevOps, GitOps and CI/CD with GKE

Tim Berry

This is the tenth post in a series exploring the features of GKE Enterprise, formerly known as Anthos. GKE Enterprise is an additional subscription service for GKE that adds configuration and policy management, service mesh and other features to support running Kubernetes workloads in Google Cloud, on other clouds and even on-premises. If you missed the first post, you might want to start there.

Learning how complex systems work is often an exploratory procedure. We need to slowly and methodically explore new features, learn their dependencies, and understand how they operate holistically with other components. This ultimately provides us with the knowledge to fully understand a system and to design architectures that use it. And that’s basically what I’ve been trying to do in this blog series!

But working manually is not a recommended way to manage production systems once they have been deployed. When organisations rely on critical infrastructure, we need build and deployment methods that are reliable, repeatable and auditable, and none of these things apply to iterative manual work. And that’s why the concept of Infrastructure as Code has long been established in IT to achieve these goals.

We can define infrastructure in code and rely on that code to be deterministically repeatable. We can even audit that code, as it provides a living documentation of what’s being built. Extending those principles to “Everything as Code”, the way we deploy applications can also be automated using many of the same approaches and tools. Giving us faster and safer deployment methods means we can iterate on software rapidly but reliably.

Software lifecycles are now managed almost exclusively through CI/CD pipelines. This approach combines Continuous Integration (CI), where developers synchronize code changes in a central repository as frequently as possible, and Continuous Delivery (CD), where new updates are released into a production environment. We’ve come a long way from the 6-month release cycles of a decade ago, to many cloud-native organisations deploying small code changes hundreds of times a day.

So in this post, we’re going to learn about the GKE tooling that we can use to achieve this sort of developer agility. We’ll be looking at:

An overview of DevOps and GitOps practices

Building a basic CI/CD architecture with Cloud Build and Cloud Deploy

Some considerations for private build infrastructure

By the end of this post, you should feel comfortable automating the complete lifecycle of your workloads on GKE!

Just one quick note before we get started: This post covers native CI/CD tools for GKE to help you build a software delivery framework – but we’re talking about your workloads here, not your clusters. It is also recommended that your GKE infrastructure is automated through Infrastructure as Code, as I touched on at the start of this series. We can’t get side-tracked into a large Terraform tutorial here though, so if you need some further reading on this topic, take a look at: https://cloud.google.com/docs/terraform

An overview of DevOps and GitOps practices

There’s a lot of debate about the true definition of the term “DevOps” (a contraction of Development and Operations). In a nutshell, DevOps is a methodology. It’s a change to the way we work – the processes we follow and the tools we use – that introduces elements of the software engineering world into how we build and maintain infrastructure. GitOps is simply an extension of DevOps that implements automation based around a git repository as a single source of truth.

But why do we need DevOps? Why are automation and speed so important? While there’s definitely a business case for improving developer agility and getting new features to market faster than your competitors, speed is really just a benefit of doing DevOps properly – it's not a requirement for doing it in the first place. If you can trust your platform to allow you to iterate quickly, this means that it must be a reliable platform, and a reliable platform is much easier to fix when things go wrong.

So how does automation give us a reliable platform? Once again, it’s about removing the human variable from the equation. Historically, production computer systems could be considered to be in a fairly fragile state. Operators would dread having to update applications, not least because the updated code had probably been thrown over a wall by a development team with little knowledge of the actual server where it was intended to end up. Installing an update meant changing the state of a system that had been established by a hundred different manual interactions over the previous years. Even worse, in the event of a failure or disaster recovery scenario, how do you get that server back to that unknowable state?

As mentioned way back at the start of this series, the move to declarative configuration was a paradigm shift in the way we manage systems. If we trust that our declarative tools can maintain a deterministic state of a system, suddenly we’re not afraid to change that state. Rolling back a failed update or recovering from a failure simply means referring to an earlier documented state. And now we have modern tooling that achieves this model across infrastructure and application deployments.

A software delivery framework

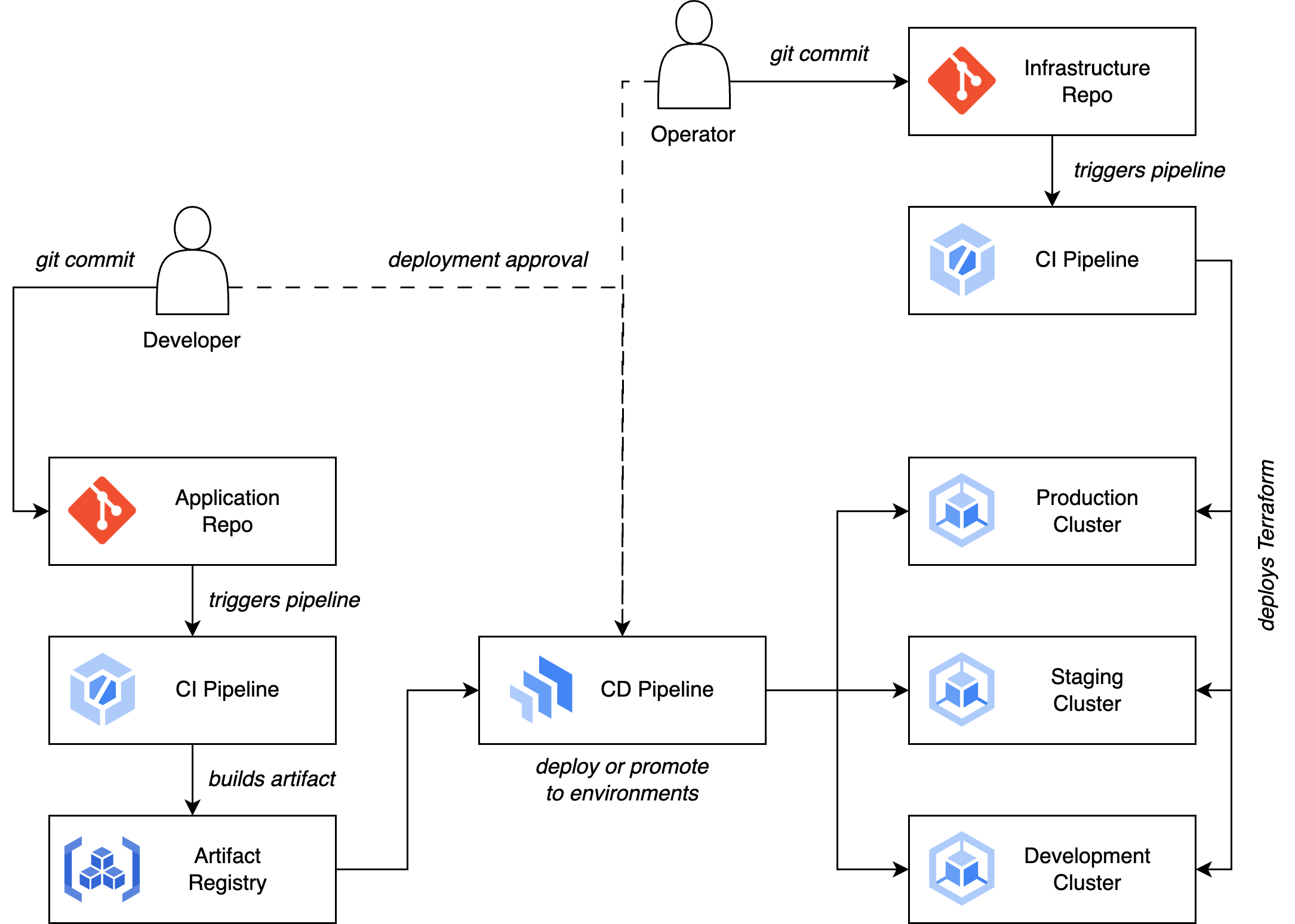

While real-life implementations will vary considerably based on team sizes, workloads and even organisation policies, we can describe an ideal architecture for a software delivery framework as illustrated in the diagram above. Later on we’ll walk through actually building this reference architecture.

The foundation for the architecture is using git as a source of truth and basing all actions within the architecture (deploying or updating infrastructure or applications) as actions that are triggered by events in those git repositories. Manual changes are expressly forbidden by design, and this is achieved by restricting permissions in production environments to service accounts operated by the pipelines only. It’s worth noting that within those git repositories there are still many different options for how to collaborate and construct your workflows. That level of detail is outside our scope here, but if you’re interested in comparing git workflows such as Feature Branch and Forking, a good resource is Atlassian’s documentation here: https://www.atlassian.com/git/tutorials/comparing-workflows

Our architecture is separated into two distinct roles: Developer and Operator (these days, Operators might be called Platform Engineers). The Developer is responsible for developing and building applications and maintaining them in the Application Repo. The Operator is responsible for building infrastructure and maintaining this code in the Infrastructure Repo. Of course, depending on the size and layout of your teams, these could also be the same people in a single SRE team, but the important thing is the separation of duties in the respective pipelines. Let’s walk through them!

The Developer commits code that represents a new version of an application to a git repo (each application or microservice is likely to have its own repo).

This commit triggers a CI build pipeline.

The CI system will build a new artifact from the updated code (usually a Docker container).

Tests can and should be run at various stages of this process as well, from tests on the uncompiled code to tests on the final container image. If tests pass successfully, the image can be pushed into an artifact registry.

This in turn triggers the CD system, which deploys the application to a development cluster, or updates an existing deployment in place with the new container.

Using the CD system, Developers or Operators can monitor the state of deployments and choose to promote them through environments – from development to staging, and from staging to production.

Each environment uses its own specific configuration, also retrieved from the git source repository.

Meanwhile, the Operator is responsible for building and maintaining the cluster infrastructure itself, rather than the applications that run on it.

Infrastructure code belongs in its own git repo, and changes to infrastructure are handled through a different CI process.

Normally it's sufficient to use a CI tool such as Cloud Build to manage changes to infrastructure code (like Terraform).

Now we’ve discussed all of the theory, let’s go ahead and put all of this infrastructure in place!

Building a basic CI/CD architecture with Cloud Build and Cloud Deploy

We'll now walk through building a simple reference architecture that uses a GitOps approach to building an application (or updating an existing build), and then automatically deploying that application to a staging environment, where it can be manually promoted to a production environment. All the sample code below can be found in my repo here: https://github.com/timhberry/gke-cicd-demo

We’re going to concentrate on the Application Repo part of the diagram we looked at earlier. So to follow along you’ll need to have two GKE clusters set up and ready to go. In our code we refer to them as staging-cluster and prod-cluster, and both run in us-central1-c. Keep an eye out for these references in the code if you need to change them for your own environment. You will also need a git repo to host your application code. In the walkthrough we’ll use Github, but Bitbucket is also supported.

Before we get started, let’s look at an overview of the layout we want to build for our application repo. For demonstration purposes, our app will be a simple “Hello World” web server. To provide the CI/CD automation we need, we’ll bundle the following files along with our code in our repo:

cloudbuild.yaml: The configuration for Cloud Build. This will define how the CI pipeline builds our application and triggers the next stage.

clouddeploy.yaml: The configuration for Cloud Deploy. This file defines how our CD pipelines should work, managing deployments to our staging and production clusters.

skaffold.yaml: The configuration for Skaffold, an automation framework for Kubernetes used by Cloud Deploy (don’t worry, we’ll explain this in a moment!)

k8s/deployment.yaml and k8s/service.yaml: Definitions for the Kubernetes objects our application needs.

Dockerfile: The container manifest for building our image.

We’ll build up the repo piece by piece in the following sections.

Setting up permissions for Cloud Build

Before we can start using our repo, we need to provide some permissions to the Cloud Build service account. In the following commands, you’ll need your numeric project number, as it forms part of the service account name. You can find your project number on the dashboard of the Cloud Console, or by using the following command (replacing <PROJECT-NAME> with your project ID):

    gcloud projects describe <PROJECT-NAME> \
      --format="value(projectNumber)"

In the following commands, we’ll use the fake project number 5556667778, so just make sure to substitute yours (along with your <PROJECT-NAME>). First, we give Cloud Build permission to create releases in Cloud Deploy:

    gcloud projects add-iam-policy-binding <PROJECT-NAME> \
      --member="serviceAccount:5556667778@cloudbuild.gserviceaccount.com" \
      --role="roles/clouddeploy.releaser"

Next, we need to make sure that Cloud Build can act as the default Compute Engine service account. This requires roles to act as a service account and create service account tokens:

    gcloud projects add-iam-policy-binding <PROJECT-NAME> \
      --member="serviceAccount:5556667778@cloudbuild.gserviceaccount.com" \
      --role="roles/iam.serviceAccountTokenCreator"

    gcloud iam service-accounts add-iam-policy-binding \
      5556667778-compute@developer.gserviceaccount.com \
      --member="serviceAccount:5556667778@cloudbuild.gserviceaccount.com" \
      --role="roles/iam.serviceAccountUser"
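All three bindings above are built from the same project number, so a typo in one of them is easy to miss. As a rough sketch only (the helper names are hypothetical and are not part of gcloud or the demo repo), the two account strings used in those commands can be derived like this:

```python
def cloud_build_member(project_number: str) -> str:
    """IAM member string for the Cloud Build service account used above."""
    return f"serviceAccount:{project_number}@cloudbuild.gserviceaccount.com"


def default_compute_account(project_number: str) -> str:
    """Email address of the default Compute Engine service account."""
    return f"{project_number}-compute@developer.gserviceaccount.com"


if __name__ == "__main__":
    number = "5556667778"  # the fake project number used in this post
    print(cloud_build_member(number))
    print(default_compute_account(number))
```

Note that the member string needs the serviceAccount: prefix with no space after the colon, otherwise gcloud will reject the binding.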

Note that this isn’t exactly a best practice – ideally, we would create IAM policy bindings with the least privileges required for our pipelines, and not use the default service account. However, we’re trying to learn Cloud Deploy, and pages and pages of IAM instructions would probably distract from that!

With IAM out of the way, we can now start to set up our repo.

Creating a sample app with automated builds

To demonstrate our CI/CD pipeline, we’ll build a very simple application that will be easy to update so we can experiment with the update process. All the cool kids are using Golang these days, so I’ve built a small app in Python which you can find in the repo as server.py. In addition, we have a Dockerfile that specifies how to build the container image, a simple HTML template.html, and a requirements.txt file that Python uses to install the libraries it needs.

If you’ve checked out the repo locally, you can test the image on your own machine by building it and running it with Docker (or Podman):

    docker build -t sample-app .
    docker run -d -p 8080:8080 sample-app

When the container is running, try to load http://localhost:8080 in your browser and you should see a “Hello World!” message.
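The real server.py lives in the repo and isn’t reproduced in this post. If you just want a feel for the shape of such an app, here is a minimal stand-in that uses only the Python standard library. Everything in it is an assumption for illustration: the inline template replaces template.html, the colour value is made up, and the actual app may well use a web framework installed from requirements.txt.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical values: the real app loads template.html and may differ.
bgcolor = "#4285f4"
TEMPLATE = (
    "<html><body style='background-color: {bgcolor}'>"
    "<h1>Hello World!</h1></body></html>"
)


def render_page(color: str = bgcolor) -> str:
    """Fill the inline template with the chosen background colour."""
    return TEMPLATE.format(bgcolor=color)


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Serve the same page for every path.
        body = render_page().encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port: int = 8080) -> None:
    """Start the server; port 8080 matches the docker run mapping above."""
    HTTPServer(("0.0.0.0", port), HelloHandler).serve_forever()
```

Calling run() blocks until the process is interrupted, which is what a container entrypoint would want.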

In our pipeline, Cloud Build will be responsible for building our image, tagging it, and storing it in the registry. Let’s create a basic cloudbuild.yaml file to do this for us:

    steps:
    - id: Build container image
      name: 'gcr.io/cloud-builders/docker'
      args: ['build', '-t', 'gcr.io/$PROJECT_ID/sample-app:$COMMIT_SHA', '.']
    - id: Push to Artifact Registry
      name: 'gcr.io/cloud-builders/docker'
      args: ['push', 'gcr.io/$PROJECT_ID/sample-app:$COMMIT_SHA']

Once you have this file and your app code committed to your repo, it’s time to set up a Cloud Build trigger. This enables Cloud Build to run automatically whenever a new commit is made to your repo.

By default, Cloud Build will watch the main branch of your repo. In a real-world situation, you may want to build from feature branches or use PRs to merge to the main branch before a build is triggered. This will depend on your chosen Git workflow, and once again, I’m trying to keep this walkthrough as simple as possible!

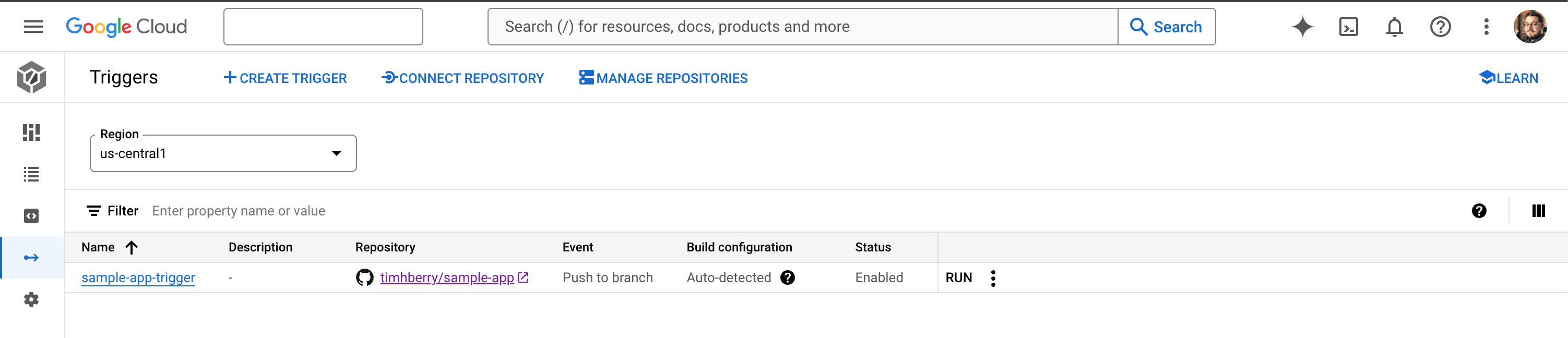

From the Cloud Build page in the Cloud Console, select Triggers and Create Trigger. You’ll need to give your trigger a name and then choose a repository. You'll have the option to connect a new repository here, which will forward you to Github to provide permission to connect Cloud Build. Once your repository is selected, we can leave everything else about the trigger at a default and click Create.

You should now see your trigger configured, as shown below. Note that the event is “Push to branch”, meaning that a push to the specified branch will trigger Cloud Build. The Build configuration is set to “Auto-detected”, which means that Cloud Build will look for a cloudbuild.yaml file, but if it can’t find one it will also look for a Dockerfile or a buildpacks configuration. A cloudbuild.yaml file will always take priority.

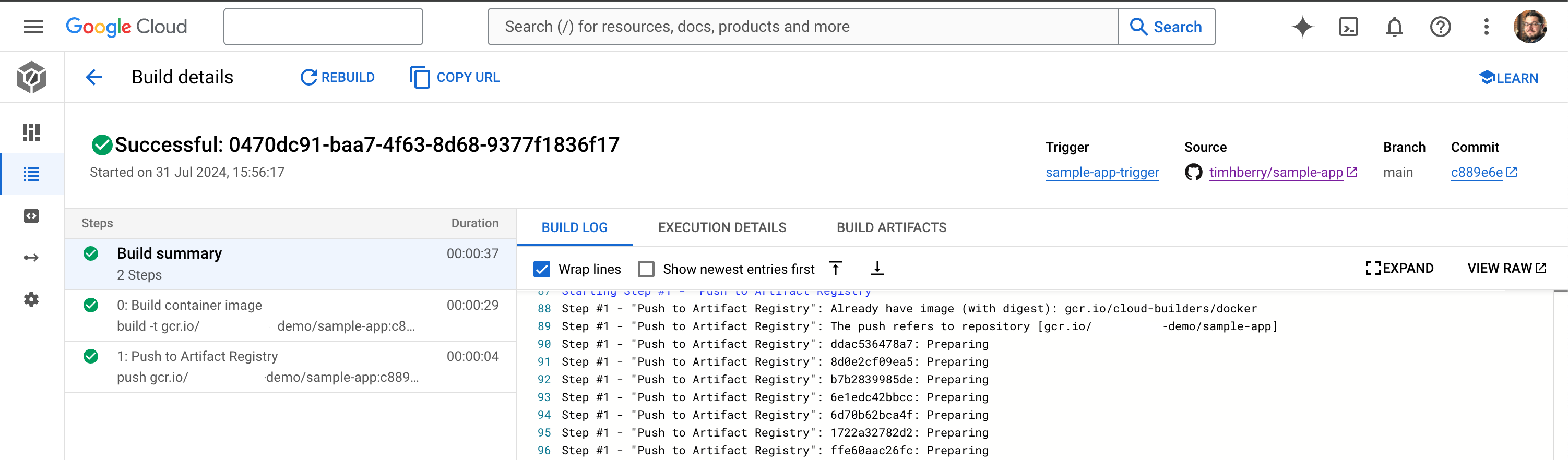

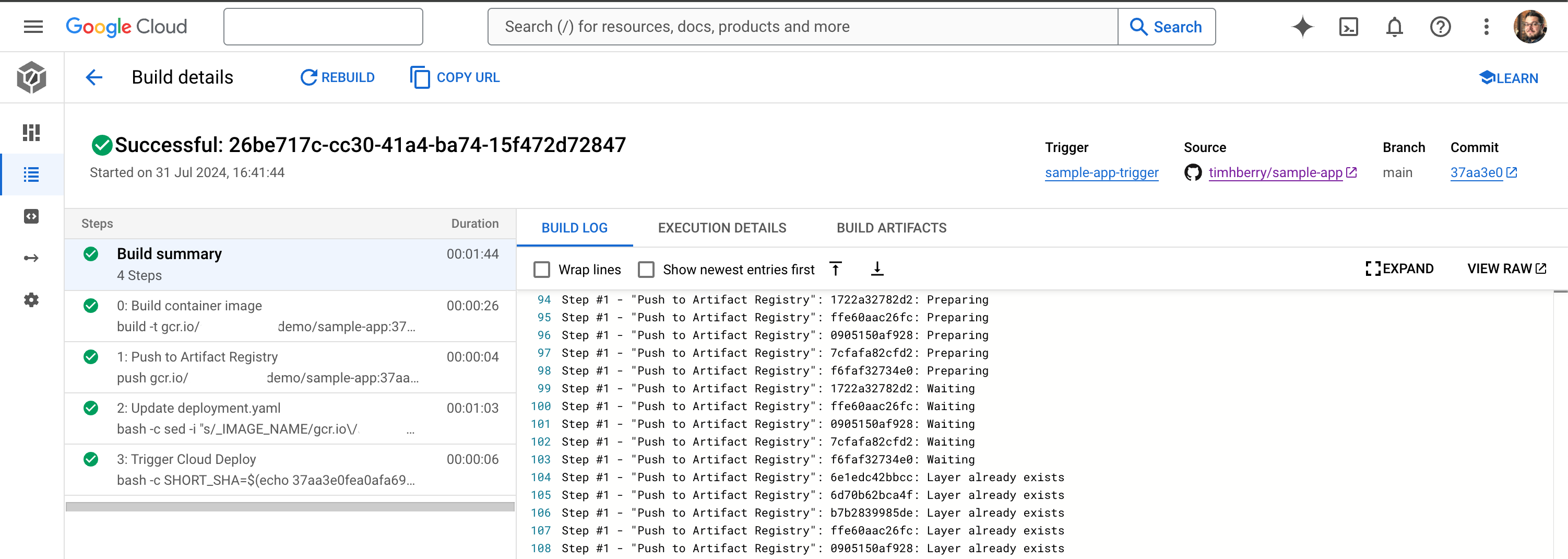

You can now go ahead and commit your code to your repo. Remember this should include server.py, Dockerfile, template.html, requirements.txt and cloudbuild.yaml. If you go back to your Cloud Build dashboard, you should now see that a build has been triggered. A short time later, you’ll see that Cloud Build has completed the steps that we defined: it has built your container image and stored it in the registry. You can select the build to see the completed build steps, as shown below:

We’ve now achieved the CI in CI/CD, as we have an automated workflow that continuously integrates changes to our code and automatically generates a new container image every time we do so. Next, we’ll add our Kubernetes objects and configure a pipeline to continuously deploy our changes as well.

Adding Kubernetes manifests

Our application will ultimately get deployed to our GKE clusters, so we need to add some Kubernetes objects to our repo. In a sub-directory called k8s/ we’ll create a deployment.yaml and service.yaml file. The service.yaml file is very straightforward and simply creates a LoadBalancer service for our app, exposing HTTP port 80:

    apiVersion: v1
    kind: Service
    metadata:
      name: sample-app
    spec:
      selector:
        app: sample-app
      ports:
      - name: http
        protocol: TCP
        port: 80
        targetPort: 8080
      type: LoadBalancer

The deployment.yaml is also very straightforward at first glance:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: sample-app
      name: sample-app
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: sample-app
      template:
        metadata:
          labels:
            app: sample-app
        spec:
          containers:
          - image: _IMAGE_NAME
            name: sample-app
            imagePullPolicy: Always

Note the container image name however: _IMAGE_NAME. This is a placeholder! In a moment, we’ll update our Cloud Build steps so that the deployment YAML is overwritten with the latest build reference. Add these manifest files to your repo.

Let’s keep this simple! There are lots of moving parts here and we’re trying to keep the focus on Cloud Deploy. Overwriting a deployment YAML in our CI process is a very simplistic approach, but there’s nothing wrong with a bit of simplicity! Cloud Deploy also supports more complex approaches with tools like Kustomize and Helm, if you’re already using these to build and deploy your applications. For more information see https://cloud.google.com/deploy/docs/using-skaffold/managing-manifests

Configuring Cloud Deploy pipelines and releases

Now we can add the configuration required for Cloud Deploy. There are two distinct concepts in Cloud Deploy we need to understand:

A pipeline is the configuration of how our CD process should run. It defines the targets we deploy to, what parameters they should use and other variables such as approval stages.

A release specifies a single run through the pipeline CD process that releases artifacts to targets. This is usually triggered by the end of a CI process.

We’ll create the pipeline manually first (although technically you could do this with infrastructure as code too!), and in a moment we’ll rely on Cloud Build to run a command that triggers a release. Here’s our clouddeploy.yaml file:

    apiVersion: deploy.cloud.google.com/v1
    kind: DeliveryPipeline
    metadata:
      name: sample-app
    description: sample app pipeline
    serialPipeline:
      stages:
      - targetId: staging
        profiles: []
      - targetId: prod
        profiles: []
    ---
    apiVersion: deploy.cloud.google.com/v1
    kind: Target
    metadata:
      name: staging
    description: staging cluster
    gke:
      cluster: projects/PROJECT_ID/locations/us-central1-c/clusters/staging-cluster
    ---
    apiVersion: deploy.cloud.google.com/v1
    kind: Target
    metadata:
      name: prod
    description: production cluster
    gke:
      cluster: projects/PROJECT_ID/locations/us-central1-c/clusters/prod-cluster

In this file we define a pipeline with two stages: staging and production. We then create and associate targets with each stage, and within each target we reference our GKE clusters (make sure you update your zones and references to PROJECT_ID). This is a simple example, but we could expand on it to provide differing values or parameters to be applied to each target environment.
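Forgetting to swap out PROJECT_ID is an easy mistake to make before applying the file. As a small, optional sanity check (a sketch only; the function name is hypothetical and the check looks purely for the literal placeholder used in this post), you could scan the file for leftover placeholders before applying it:

```python
import re

# Matches the literal placeholder used in the clouddeploy.yaml from this post.
PROJECT_PLACEHOLDER = re.compile(r"projects/PROJECT_ID/")


def unresolved_lines(text: str) -> list[int]:
    """Return 1-based line numbers that still contain the placeholder."""
    return [
        number
        for number, line in enumerate(text.splitlines(), start=1)
        if PROJECT_PLACEHOLDER.search(line)
    ]


if __name__ == "__main__":
    sample = (
        "gke:\n"
        "  cluster: projects/PROJECT_ID/locations/us-central1-c/clusters/staging-cluster\n"
    )
    print(unresolved_lines(sample))
```

An empty list means every cluster reference has been updated with a real project ID.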

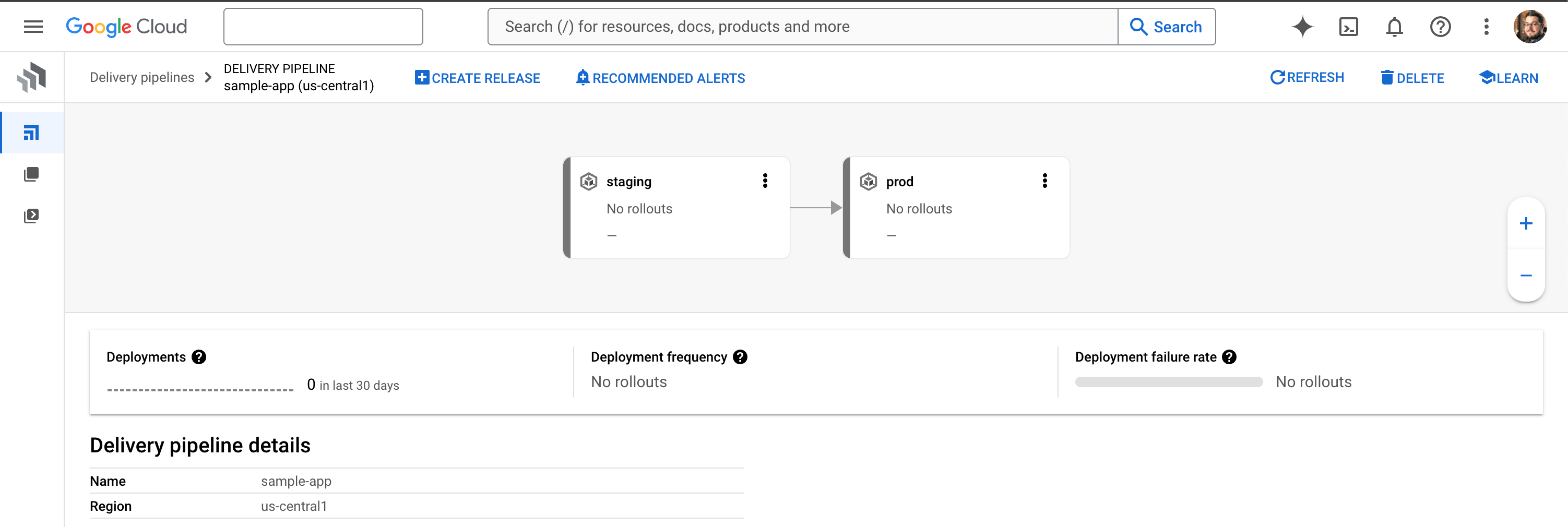

We can now create the pipeline with this command:

    gcloud deploy apply --file=clouddeploy.yaml \
      --region=us-central1

In the Cloud Deploy section of the Cloud Console, we should now see an empty delivery pipeline, as shown below. At this stage we’ve configured the pipeline, but nothing has run through it yet. But we’re nearly there!

Under the hood, Cloud Deploy leverages an automation framework called Skaffold. This framework manages Kubernetes manifests for Cloud Deploy, rendering them into their required state for deployment. So, we need to add a very simple skaffold.yaml to our repo:

    apiVersion: skaffold/v4beta7
    kind: Config
    metadata:
      name: sample-app
    manifests:
      rawYaml:
      - k8s/*
    deploy:
      kubectl: {}

This file simply configures Skaffold to look in the k8s/ directory in our repo for our manifest files and process them with kubectl. Once again, there are many other things Skaffold can do for you, and it even functions as its own automation system outside of Cloud Deploy, so if you’d like to learn more then check out https://skaffold.dev/

Finally, we’ll add two more steps to our cloudbuild.yaml file, after the existing steps. Here’s the first one:

    - id: Update deployment.yaml
      name: 'gcr.io/google.com/cloudsdktool/cloud-sdk'
      entrypoint: 'bash'
      args:
      - '-c'
      - |
        sed -i "s/_IMAGE_NAME/gcr.io\/$PROJECT_ID\/sample-app:$COMMIT_SHA/g" k8s/deployment.yaml

This is the step we use to update the placeholder in deployment.yaml. When our application is deployed, we want to make sure we’re using the latest build of our container image, so we use sed to update the file in place with the full image name including the SHA hash from the commit.
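If sed isn’t your thing, it may help to see the same substitution spelled out in Python. This is only an illustration of what the step does, not something the pipeline runs, and the function names are hypothetical:

```python
IMAGE_PLACEHOLDER = "_IMAGE_NAME"


def image_reference(project_id: str, commit_sha: str) -> str:
    """Full image name, tagged with the commit SHA as in cloudbuild.yaml."""
    return f"gcr.io/{project_id}/sample-app:{commit_sha}"


def render_manifest(manifest: str, project_id: str, commit_sha: str) -> str:
    """Replace every placeholder occurrence, as sed's trailing /g does."""
    return manifest.replace(
        IMAGE_PLACEHOLDER, image_reference(project_id, commit_sha)
    )


if __name__ == "__main__":
    line = "      - image: _IMAGE_NAME\n"
    print(render_manifest(line, "my-project", "abc123"), end="")
```

Because the replacement happens inside the build workspace, the copy of deployment.yaml in your repo keeps its placeholder and every build starts from the same template.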

The final step we add to cloudbuild.yaml actually triggers a release in Cloud Deploy:

    - id: Trigger Cloud Deploy
      name: 'gcr.io/google.com/cloudsdktool/cloud-sdk'
      entrypoint: 'bash'
      args:
      - '-c'
      - |
        SHORT_SHA=$(echo $COMMIT_SHA | cut -c1-7)
        gcloud deploy releases create "release-$SHORT_SHA" \
          --delivery-pipeline=sample-app \
          --region=us-central1 \
          --images=sample-app=gcr.io/$PROJECT_ID/sample-app:$COMMIT_SHA

Note that we reference the name of the pipeline we created earlier with --delivery-pipeline. We also provide a name for the release, which we construct by creating a shorter version of the commit SHA.
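To make the naming scheme concrete, here is a sketch of how the release name and the full command line fit together. It mirrors the build step above rather than replacing it; the function names are hypothetical, and the pipeline name and region are the ones used in this walkthrough.

```python
def release_name(commit_sha: str) -> str:
    """Mirror `cut -c1-7`: keep the first seven characters of the SHA."""
    return f"release-{commit_sha[:7]}"


def release_command(project_id: str, commit_sha: str) -> list[str]:
    """Argument list equivalent to the gcloud call in the build step."""
    return [
        "gcloud", "deploy", "releases", "create", release_name(commit_sha),
        "--delivery-pipeline=sample-app",
        "--region=us-central1",
        f"--images=sample-app=gcr.io/{project_id}/sample-app:{commit_sha}",
    ]


if __name__ == "__main__":
    sha = "0123456789abcdef0123456789abcdef01234567"
    print(" ".join(release_command("my-project", sha)))
```

Tying the release name to the commit means you can always trace a release in Cloud Deploy back to the exact change that produced it.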

With all of these pieces now in place, push your changes to your repo and the following magic will happen:

Your Cloud Build pipeline will be triggered by your git repo changes

Cloud Build will build your application, tag it and push it to the registry

Cloud Build will also update your deployment.yaml with the new container image tag

It will then trigger a release in your Cloud Deploy pipeline

The Cloud Deploy pipeline will deploy your new application and the Kubernetes manifests to your staging cluster

Observing the CI/CD pipeline

First, your Cloud Build pipeline will run, only now it will contain the extra stages you defined. When it is complete, you should see that Cloud Deploy has been triggered, as shown below:

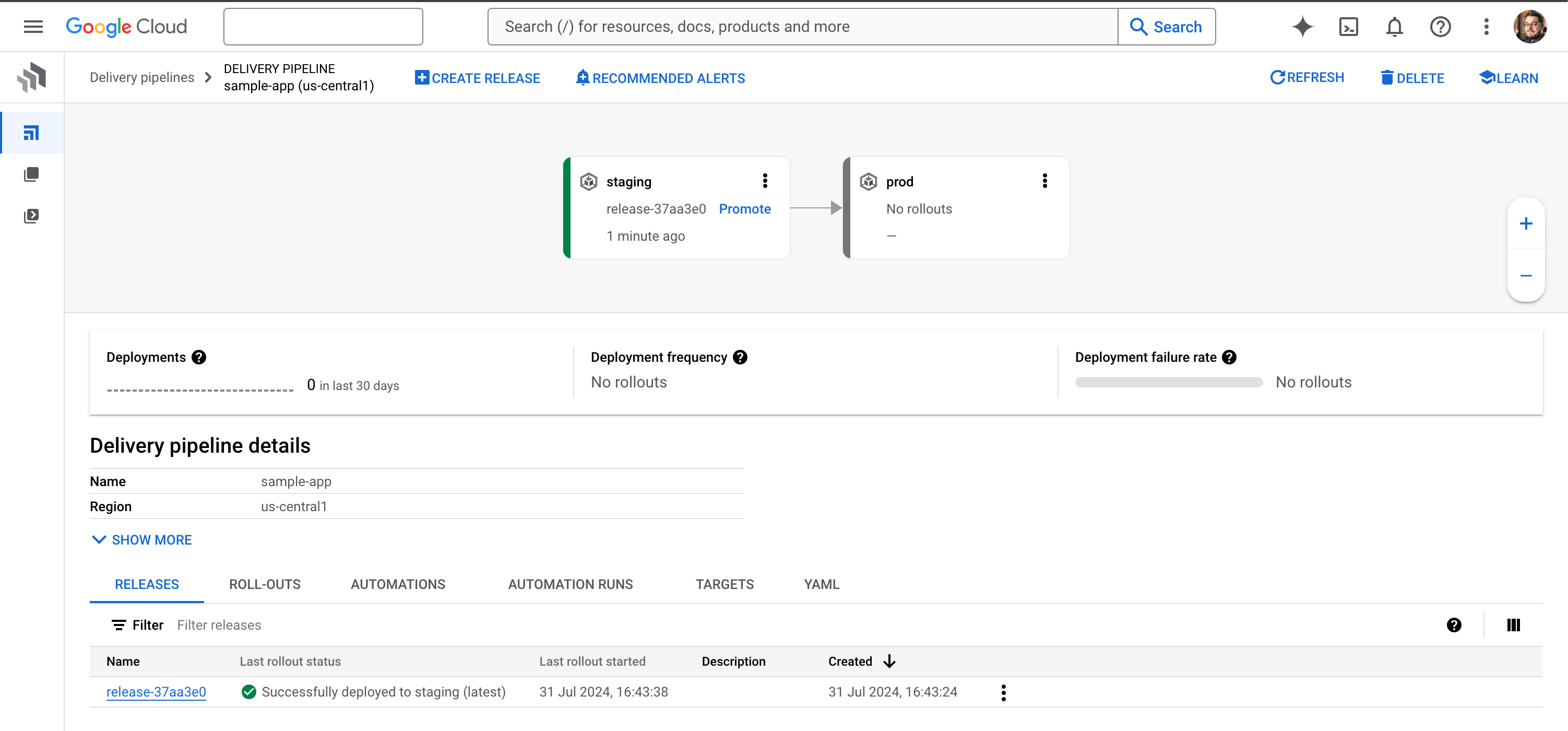

Now if you hop over to the Cloud Deploy dashboard in the Cloud Console, you should see that your pipeline has a new release. A successful release also creates a roll-out, which is simply when the desired artifacts get deployed to their target. In other words, your application has now been deployed to your staging cluster!

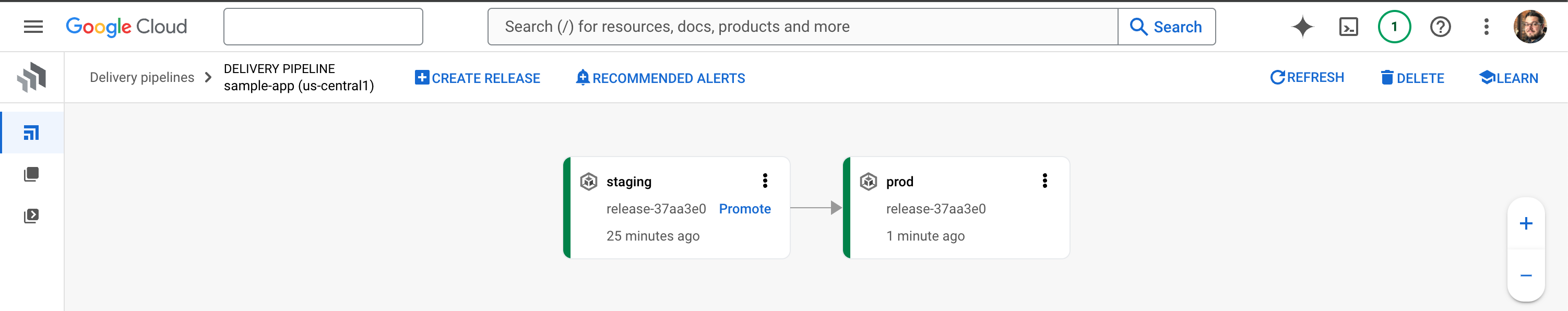

You can see this from the pipeline view in Cloud Deploy, as shown below:

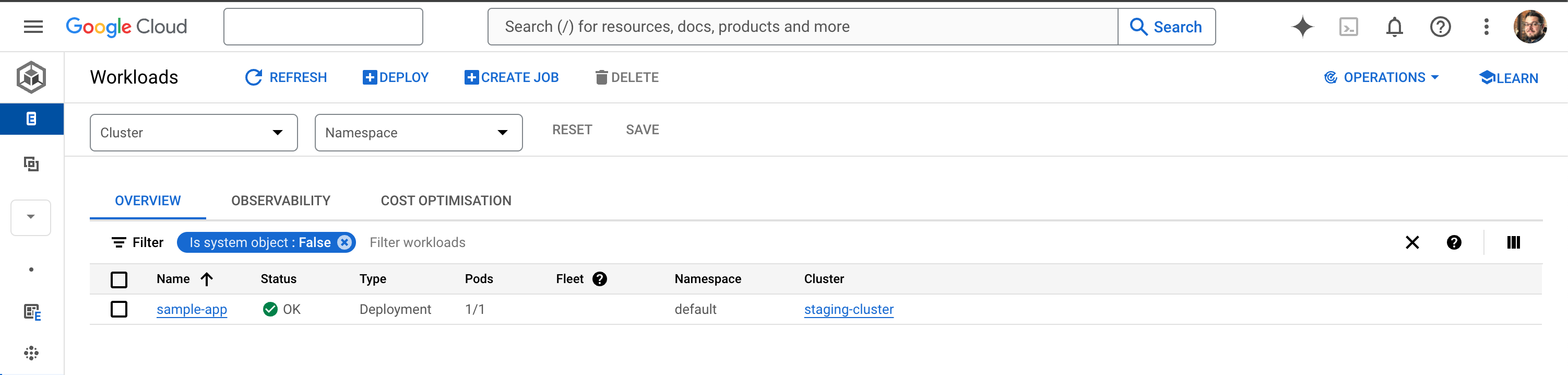

From the Kubernetes section in the Cloud Console, you can also look at the deployed workloads and see that your sample app is running on the staging cluster:

In the details of the deployment, you should be able to locate the external IP of the LoadBalancer service and load that in your browser. You should be greeted with the friendly blue page of a very boring Hello World web app:

In our sample developer workflow, tests could now be run on the latest version of the application in our staging environment. Once we are happy with the updated application, we can promote it to production directly from Cloud Deploy.

From the Cloud Deploy pipeline dashboard, we simply select Promote. This prompts us to confirm the promotion target (which in this case is our production cluster) and provides us with a summary of the actions that the system will take once we start the promotion. Go ahead and click Promote again and you can watch what happens:

The current release creates a new roll-out, this time targeting the production cluster.

The application artifact and Kubernetes manifests are rendered to this new target.

You’ll now have 2 workloads running – your sample app in each cluster:

Updating apps through the CI/CD workflow

Now we’ve finished the initial roll-out of the application, you can simulate the process for changes as well. Everything is now in place so that next time code is pushed to the main branch of the repo, the CI/CD process runs again. The new version of the app will be built and automatically pushed to the staging cluster, ready for you to test and approve for promotion to production.

You can try this for yourself by making a simple change – I would suggest altering the background colour of the app to something more appealing! You can find the bgcolor variable defined in server.py.

In this walkthrough, we’ve built a reference CI/CD architecture for Cloud Build and Cloud Deploy. But of course, we’ve only just scratched the surface of what these tools can do. For example:

Cloud Deploy can automatically promote rollouts for you, based on specific rules and targets.

Cloud Deploy also supports complex deployment patterns like canary testing and deploying to multiple targets at once.

Skaffold configuration can further customise each target, for example by defining a specific number of Pod replicas per target.

Now you understand the basics of these systems, you may be ready for some more advanced approaches. An even more in-depth tutorial that includes frameworks for different programming languages can be found at https://cloud.google.com/kubernetes-engine/docs/tutorials/modern-cicd-gke-reference-architecture

Some considerations for private build infrastructure

Cloud Build and Cloud Deploy are fantastic tools for automating the various build and deploy stages of your CI and CD pipelines. Each step of your Cloud Build pipeline runs as an isolated and containerised process with access to a shared workspace for the pipeline's duration. Under the hood, when Cloud Deploy executes steps in your CD pipelines it also uses workers in Cloud Build.

However, by default these workers are running in a shared pool as part of their respective services. In many cases this might not be desirable: you may have regulatory requirements that mean all compute processes have to stay within certain network boundaries, or you may have components that only accept private connections within your VPC that are required for steps in your pipelines.

Thankfully, Cloud Build allows you to configure private pools, which are pools of worker resources that run within a dedicated private VPC. This service producer VPC is still Google-managed and does not exist within your project; however, you can peer with it directly for a private connection that only requires internal IP addresses.

Alternatively, if you don’t want to configure VPC peering, you can use a dedicated private pool but allow it to use public endpoints to communicate with resources in your VPC. You might choose this option if your VPC already has public endpoints, and you just want a private pool to give you more options over the configuration of the Cloud Build workers.

You can create a private pool using the following command. There are lots of parameter placeholders here, which I’ll explain in a moment:

    gcloud builds worker-pools create <PRIVATEPOOL_ID> \
      --project=<PROJECT_ID> \
      --region=<REGION> \
      --peered-network=<PEERED_NETWORK> \
      --worker-machine-type=<MACHINE_TYPE> \
      --worker-disk-size=<DISK_SIZE> \
      --no-public-egress

Let’s walk through those parameters:

PRIVATEPOOL_ID is the unique name that you assign to your private pool.

PROJECT_ID is the name of the project that you wish to use with this pool.

REGION is your chosen region where the private pool should run.

PEERED_NETWORK is the VPC network in your project that should be peered with Google’s private service producer network. Note that you’ll need to specify the long resource name for this parameter, for example: projects/my-project/global/networks/my-vpc.

MACHINE_TYPE allows you to choose the machine type for your workers. Several types of Compute Engine instance type are supported, and if you don’t specify a preference, an e2-standard-2 will be used. Note that this parameter can also be overridden at runtime by passing the --machine-type option to gcloud builds submit.

DISK_SIZE lets you adjust the disk size of the worker instances, which must be between 100 and 4000 GB. Once again this is optional; Cloud Build will default to a size of 100GB. This can also be overridden at runtime with the --disk-size option.

The --no-public-egress option ensures that workers are created without an external IP address.
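A couple of these parameters have constraints that are easy to trip over: the long resource name for the peered network, and the 100 to 4000 GB disk range. The sketch below checks both before assembling the argument list. It is illustrative only; the function name is hypothetical, the checks cover just the rules described above, and passing the size with an explicit GB suffix is my assumption about the safest way to write the value.

```python
import re

# Long resource name format, e.g. projects/my-project/global/networks/my-vpc
NETWORK_PATTERN = re.compile(r"^projects/[^/]+/global/networks/[^/]+$")


def worker_pool_args(
    pool_id: str,
    project_id: str,
    region: str,
    peered_network: str,
    disk_size_gb: int = 100,
) -> list[str]:
    """Validate the inputs, then build the gcloud argument list."""
    if not NETWORK_PATTERN.match(peered_network):
        raise ValueError("peered network must use the long resource name")
    if not 100 <= disk_size_gb <= 4000:
        raise ValueError("disk size must be between 100 and 4000 GB")
    return [
        "gcloud", "builds", "worker-pools", "create", pool_id,
        f"--project={project_id}",
        f"--region={region}",
        f"--peered-network={peered_network}",
        f"--worker-disk-size={disk_size_gb}GB",  # assumed unit suffix
        "--no-public-egress",
    ]
```

The machine type is left out here so that the default applies; add --worker-machine-type to the list if you need something larger.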

Note that these parameters can also be passed in a configuration file which defines a private pool schema. The layout of this schema is documented at https://cloud.google.com/build/docs/private-pools/private-pool-config-file-schema

Once the private pool has been created, you can choose to use it any time Cloud Build executes a pipeline. If you’re submitting a build job manually, you can specify the name of the worker pool on the command line. For example:

    gcloud builds submit --config=cloudbuild.yaml \
      --worker-pool=projects/my-project/locations/us-central1/workerPools/my-privatepool

You can also specify the private pool directly in your cloudbuild.yaml, which helps if your builds are being triggered by a git commit. Simply add the pool configuration to an options section at the bottom of your cloudbuild.yaml file like this:

    steps:
    - name: 'bash'
      args: ['echo', 'Previous steps up to this point']
    options:
      pool:
        name: 'projects/my-project/locations/us-central1/workerPools/my-privatepool'

As we mentioned earlier, all Cloud Deploy steps also run within Cloud Build workers, so we can also specify a Cloud Build private pool to be used in our Cloud Deploy pipelines. Cloud Build is used for rendering manifests (using Skaffold) as well as deploying to clusters (using kubectl), and we can specify which worker pool to use for each of these stages.

We do this by updating clouddeploy.yaml and adding an executionConfigs section to the object definition for each Target (i.e. each cluster). Here’s an example using the production cluster from our earlier walkthrough:

    apiVersion: deploy.cloud.google.com/v1
    kind: Target
    metadata:
      name: prod
    description: production cluster
    gke:
      cluster: projects/PROJECT_ID/locations/us-central1-c/clusters/prod-cluster
    executionConfigs:
    - privatePool:
        workerPool: "projects/my-project/locations/us-central1/workerPools/my-privatepool"
      usages:
      - RENDER
      - DEPLOY

Using private pool configurations like this means that you can still leverage Cloud Build and Cloud Deploy, even if you have completely private infrastructure. This is helpful if you’re using hybrid connections between your on-premises networks or other clouds via your VPC, or if you’re just using private GKE clusters.

Summary

In this post I’ve hopefully established a compelling argument for using automation tools and a CI/CD architecture for your software development lifecycle. As I stated earlier, while these tools and practices can enable rapid iteration and development, that’s normally not the goal in and of itself. In order to support fast but safe deployments, all of these additional mechanisms work together to make your deployments more reliable. This means you’ll have much more confidence changing the state of your workloads or fixing them if something goes wrong.

And that’s a wrap! (for now…)

Ten posts on a single subject seems like a good milestone! I’ve found it fascinating digging deep into the world of GKE Enterprise, and I hope through these posts I’ve made it a bit more accessible for anyone out there trying to learn more about these topics.

Stay tuned to my blog for more general Kubernetes and cloud writing, and maybe some other completely different stuff too! 😄
