RAG vs. Fine Tuning: Understanding Key Differences

TechKareer


Large Language Models are one of the greatest technical breakthroughs of this decade. It is no surprise that the field of AI itself has started moving at breakneck speed, too fast for even the most dedicated researchers to keep up with. Amid all of this, the two terms most commonly associated with business applications of LLMs are Retrieval Augmented Generation (RAG) and fine-tuning. So what is the difference between the two, really?

Let’s start by discussing how an LLM traditionally works. Skip ahead to “Why bother with RAG or fine-tuning?” if you’re already familiar with an LLM’s inner workings.

Architecture

Mathematically, we can think of an LLM as a way to convert one sequence of words into another. If you’ve taken any classes in higher engineering mathematics, you will have come across ‘transforms’ in linear algebra. This is similar. We take the input words (the prompt) and break them down into smaller pieces that machines can work with (tokens, each roughly four characters long on average for English text).

These are then passed through the heart of the LLM, the transformer (remember transforms from linear algebra). The transformer is trained such that each token is represented as a vector (see here for what a vector is). This allows us to perform a lot of matrix mathematics on these vectors and map the result back to a set of words, which lets us predict the next token and hence gives the appearance of talking.

The aforementioned matrix maths involves the weights of the model. These are layers of tensors (multi-dimensional arrays of numbers, a generalization of matrices) that determine how the model behaves. The weights can be thought of as defining the function that transforms an input (prompt) into an output (response). The “pre-trained” part of GPT (Generative Pre-trained Transformer) refers to the process of finding the best weights (function).
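To make the "tokens become vectors, weights turn vectors into a next-token prediction" idea concrete, here is a deliberately tiny sketch. The vocabulary, embedding values, and weight matrix are all made up for illustration, and the sketch skips attention and the many stacked layers of a real transformer entirely.

```python
import math

# Toy vocabulary; every number below is hypothetical, a real model learns them in pre-training.
VOCAB = ["the", "cat", "sat", "mat"]

# One 2-dimensional embedding vector per token.
EMBEDDINGS = {
    "the": [1.0, 0.0],
    "cat": [0.0, 1.0],
    "sat": [1.0, 1.0],
    "mat": [-1.0, 0.5],
}

# A single weight matrix: one row per vocabulary entry, mapping a vector to a score (logit).
WEIGHTS = [
    [0.1, 0.2],   # row that scores "the"
    [0.3, -0.5],  # row that scores "cat"
    [-0.2, 2.0],  # row that scores "sat"
    [0.5, 0.1],   # row that scores "mat"
]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_probs(token):
    """Embed the token, multiply by the weights, and normalise the scores."""
    vec = EMBEDDINGS[token]
    logits = [sum(w * x for w, x in zip(row, vec)) for row in WEIGHTS]
    return dict(zip(VOCAB, softmax(logits)))

probs = next_token_probs("cat")
prediction = max(probs, key=probs.get)  # the most likely next token under these toy weights
```

Changing the numbers in `WEIGHTS` changes which token wins, which is all that "training" and, later, fine-tuning amount to at this level of abstraction.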

Why bother with RAG or fine-tuning?

If you’ve ever asked a frontier LLM about recent news, it will usually mention a cut-off date. These massive models are trained on vast but fixed datasets, which inherently limits their knowledge of recent events. A training dataset is, by necessity, slow moving: day-to-day data cannot be incorporated, because the training process is far too expensive to repeat that often.

These models may also appear highly knowledgeable at the surface level, but going into any depth on a single topic reveals the cracks quite easily. RAG and fine-tuning are two ways to resolve these problems of knowledge and specificity.

What is RAG?

Retrieval Augmented Generation, RAG for short, is a way to improve the knowledge of an LLM by providing it with information it may not otherwise have access to. It is, in essence, a retriever attached to the LLM. When the user sends a prompt, a retriever function attempts to fetch the data most relevant to the user’s query and passes it to the LLM as context before the model formulates a final response. One of the simplest use cases of RAG is chatting with textbooks, long research papers, or large corpora of semi-structured/unstructured data.

However, a “chat-with-unstructured-data” RAG is incredibly simple. Let’s scale it up and solve business problems with it. This is what I did at Proxima Mumbai while building Torch. We had to find people in a database purely with semantic search, while handling fast-moving data as users signed up. RAG fits this use case perfectly, as incorporating new data is hardly an issue.
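The retrieve-then-generate flow described above can be sketched in a few lines. This is only an illustration: `retrieve` and `build_prompt` are hypothetical helper names, the retriever is a naive word-overlap ranker standing in for a real one, and the final call to an LLM is left out.

```python
def tokenize(text):
    """Lowercase the text, strip basic punctuation, and split into a set of words."""
    return set(text.lower().replace("?", "").replace(".", "").split())

def retrieve(query, chunks, top_k=1):
    """Rank chunks by how many words they share with the query (stand-in for a real retriever)."""
    q = tokenize(query)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:top_k]

def build_prompt(query, chunks):
    """Put the retrieved chunks in front of the question, ready to send to an LLM."""
    context = "\n".join(retrieve(query, chunks))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer using only the context above."

# Example knowledge base, invented for this sketch.
CHUNKS = [
    "The library opens at 9 am on weekdays.",
    "Parking is free on Sundays.",
    "Membership costs 500 rupees per year.",
]

prompt = build_prompt("When does the library open?", CHUNKS)
```

Adding new knowledge is just a matter of appending to `CHUNKS`; the model itself never changes, which is why RAG copes well with fast-moving data.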

There are downsides, however.

Retriever bottleneck: If your retrieval function is not good, the LLM will never get the data that is relevant to the question! It’s very important to be careful when designing your retriever component.

Code complexity: Retrieval code deployed to production can quickly balloon in size, as many different retrieval techniques might be employed in the same service, so developers need to be careful when building RAG applications.

Scale: Building a RAG system that scales well, especially with millions of stored chunks, is difficult. You have to deal with vector stores and the hassle of storing vectors properly while keeping access times low.

In-depth RAG Techniques

This section discusses some modern RAG techniques in depth, along with their advantages and drawbacks.

At its core, RAG needs to retrieve data. There are many ways to go about it, the most common being similarity search using vector embeddings. However, methods like TF-IDF or even SQL search are also common.

The standard RAG model

The standard RAG model works by storing your documents in small chunks as vector embeddings. These vector embeddings are a way to represent text as a vector in a high-dimensional space.

Source: https://miro.medium.com/v2/resize:fit:1400/1*h4VYQt1trrrJjNHaD4Utjg.png


Developing an intuition for vector embeddings

How do embeddings turn words into numbers? To understand this intuitively, let’s imagine a 3D space where, instead of X, Y, and Z, we associate one of the axes with “food”.

In this example, a vector associated with “Pizza” might look like this:

We see that pizza, in some vague sense, is associated with food.

Let’s say we draw another vector, for “Burger”

We see that in this 3D space, where axes are associated with concepts like “Food”, “pizza” and “burger” end up quite close to each other. This shows that the two vectors are closely associated: they are quite similar in some sense.

Now if we look at cockroach,

Cockroach is very far from being considered food, so we see that it’s quite far from all the other vectors.

From this we can see that words and sentences that represent similar things end up being closer to each other. This is the heart of the standard RAG model.

Now, instead of a 3D space, we can generalize this to an abstract vector space with hundreds or thousands of dimensions. The individual axes may not be meaningful to humans, but the geometry still captures meaning, which lets us express human language as vectors remarkably well.

Thus, we can use cosine similarity, which measures the angle between two vectors, to retrieve the chunks of data most closely associated with our question.

Figure: A visual explanation of Cosine Similarity. Source: https://www.learndatasci.com/glossary/cosine-similarity/
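As a small worked example of the pizza, burger, and cockroach intuition, the sketch below computes cosine similarity by hand. The three vectors are invented 3D values (the first axis plays the role of “food”); real embeddings come from an embedding model and have far more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings: [food-ness, second axis, third axis].
pizza = [0.9, 0.3, 0.1]
burger = [0.8, 0.4, 0.2]
cockroach = [-0.7, 0.2, 0.6]

sim_pizza_burger = cosine_similarity(pizza, burger)        # close to 1: very similar
sim_pizza_cockroach = cosine_similarity(pizza, cockroach)  # negative: pointing away
```

A retriever built on this idea embeds the question, computes its similarity to every stored chunk, and returns the highest-scoring chunks.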

Other common RAG methods

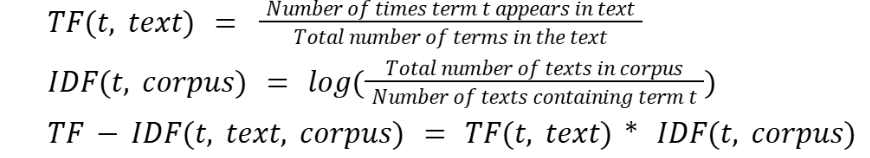

Term Frequency-Inverse Document Frequency (TF-IDF):

Term Frequency (TF): Measures how often a term appears in a text. It is calculated as the number of occurrences of a term divided by the total number of terms in the text.

Inverse Document Frequency (IDF): Measures the rarity of a term across all texts in the collection. It is calculated as the logarithm of the total number of texts divided by the number of texts containing the term.

TF-IDF: The product of TF and IDF, giving a higher weight to terms that are frequent in a text but rare in the corpus (the entire body of text data that you are analyzing).
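The three definitions above translate almost directly into code. The sketch below uses a made-up three-document corpus and plain whitespace splitting; production systems typically add smoothing to the IDF term and better tokenization, which are left out here.

```python
import math

# Tiny example corpus, invented for this sketch.
DOCS = [
    "pizza is food",
    "burger is food",
    "cockroach is an insect",
]

def tf(term, doc):
    """Occurrences of the term divided by the total number of terms in the text."""
    words = doc.split()
    return words.count(term) / len(words)

def idf(term, docs):
    """Log of (number of texts / number of texts containing the term)."""
    containing = sum(1 for d in docs if term in d.split())
    return math.log(len(docs) / containing) if containing else 0.0

def tf_idf(term, doc, docs):
    return tf(term, doc) * idf(term, docs)

score_is = tf_idf("is", DOCS[0], DOCS)        # "is" appears in every document
score_pizza = tf_idf("pizza", DOCS[0], DOCS)  # "pizza" appears in only one
```

Because “is” occurs in every document, its IDF is log(1) = 0 and it contributes nothing, while the rarer “pizza” gets a positive weight.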

Structured Search: Use LLMs to search for relevant content by constructing SQL-like queries. Happenstance has an interesting way to go about it.

Newer upcoming RAG improvements:

Retrieval Interleaved Generation (RIG): A method of retrieval, where documents are dynamically retrieved at generation time rather than being retrieved before generation. It’s RAG that asks itself: “Do I even need to retrieve? If so, what do I really need to retrieve?”

RIG becomes incredibly useful when you need to retrieve different documents at different points in an agent’s workflow. The best example is Cursor Compose, which dynamically decides which files to fetch and which files to refer to, and does so by reading and writing to them multiple times over the course of a prompt.

Reasoning Augmented Generation (ReAG): ReAG extends RAG (Retrieval Augmented Generation) by incorporating feedback loops, multi-stage retrieval, and iterative refinement to enhance both the retrieval and generation processes. Unlike standard RAG, which retrieves documents once and uses them in a single generation pass, ReAG continuously refines both retrieval and generation. Reinforcement learning may also be used in the iterative refinement step.

ReAG, while new, may become useful in medical fields, where the model draws an inference at some point and, if it is not confident in the result, can choose to retrieve further sources.

The table below gives a quick comparison of the three:

| Feature | RAG (Static Retrieval) | RIG (Dynamic Retrieval) | ReAG (Iterative Refinement) |
| --- | --- | --- | --- |
| Retrieval Timing | Before generation | During generation | Before & dynamically during |
| Feedback Loop | No | Limited | Reinforcement-based |
| Best For | Static knowledge-based responses | Dynamic, evolving responses | Self-improving AI systems |
| Adaptability | Limited | Adjusts retrieval per token | Self-refining with confidence scoring |
| Use Case | Customer support chatbots | Code generation (Cursor) | Medical AI, Legal AI |

What is fine tuning?

Fine-tuning is a way to directly alter the weights of the model. What does this mean? You might have seen an LLM’s size described by the number of parameters it has: Llama 3 70B, Llama 3.1 405B, InstructGPT 1.3B, and so on. Those parameters are the weights, and fine-tuning directly alters these matrices (the transformation function of the model).

Now, messing with the weights of a model can be risky but super useful. Often, we change only the final few layers of the model, which lets us retain most of its generality while still making it ultra-smart for the specific use cases it will serve. Think of it as an aftermarket part for an existing car.

Fine-tuning does come with its own set of downsides:

Data quality: Unclean data, especially data with too many links, will lead to a bad fine-tune and can actually worsen model performance.

Hosting: Hosting a fine-tuned model can prove challenging and less cost-effective than using frontier LLM service providers.

Expensive iteration: Retraining a model, even a small 7-8B one, can cost more than it is worth, especially for solopreneurs and small startups.

Fine-Tuning Techniques

Standard Fine-tuning: This is what we can call “full” fine-tuning. We take an existing pre-trained LLM and continue training it further on a smaller dataset. In this case, all the weights of the model are updated. The learning rate is kept lower than pre-training to prevent catastrophic forgetting (losing the general knowledge the model already learned).

Pros:

- Potentially the highest performance, as all parameters are adapted to the new task.

Cons:

- Computationally expensive
- Storage intensive
- Catastrophic forgetting: If not done carefully, the model can "forget" much of its original pre-trained knowledge.
- Overfitting

Parameter-Efficient Fine-Tuning (PEFT): This is a general term for a set of techniques that aim to avoid the expense of standard fine-tuning. The core idea is to avoid training all the weights. The general principle is to do one of the following:

Add a small number of additional parameters, freeze original weights

Modify a small subset of the existing parameters

A combination of both of the above

There are many techniques in PEFT. Some of them include:

Low Rank Adaptation (LoRA): LoRA has gained great popularity of late due to its parameter efficiency. It has been characterised as “learning less, but forgetting less.” The original weights are frozen, and a pair of small low-rank matrices (of rank r) is injected alongside selected large weight matrices, commonly the attention projections, which are expensive to fine-tune directly. Only these small matrices are trained, and their product is added to the frozen weight. They are much smaller than the matrices used in the model itself.
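To see why LoRA is so parameter-efficient, here is a minimal pure-Python sketch of the core update, W + (alpha / r) · B · A, where W stays frozen and only the small matrices A and B would be trained. The sizes, the values in `A`, and the single hand-edited "training step" are assumptions made for illustration; real implementations apply this inside a deep learning framework.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(a, s):
    return [[x * s for x in row] for row in a]

d_out, d_in, r, alpha = 4, 4, 1, 2.0

# Frozen pre-trained weight (the identity matrix here, purely for readability).
W = [[1.0 if i == j else 0.0 for j in range(d_in)] for i in range(d_out)]

# Trainable low-rank factors: B is (d_out x r), A is (r x d_in).
B = [[0.0] for _ in range(d_out)]  # zero-initialised, so the adapted model starts out equal to W
A = [[0.1, 0.2, 0.3, 0.4]]

def effective_weight(W, A, B):
    """Frozen weight plus the scaled low-rank update."""
    return add(W, scale(matmul(B, A), alpha / r))

W_before = effective_weight(W, A, B)  # identical to W because B is all zeros

B[0][0] = 0.5                         # pretend a training step updated B
W_after = effective_weight(W, A, B)   # only the first row of the weight shifts

full_params = d_out * d_in            # what full fine-tuning would train
lora_params = r * (d_out + d_in)      # what LoRA trains instead
```

With these toy sizes the saving is only 16 versus 8 parameters, but for a 4096 x 4096 matrix and r = 8 the same formula gives roughly 16.8 million versus about 65 thousand.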

Prefix Tuning: In this method, we add a prefix of trainable vectors in front of the original input while keeping the model’s weights frozen. The embeddings of the input tokens themselves do not change, but the trainable prefix that precedes them acts as a learned “instruction” that every token can attend to. In the original formulation, these prefix vectors are added at every transformer layer; the closely related prompt tuning method adds them only at the input embedding layer, so the modification happens right at the start.

P-Tuning: Like Prefix Tuning, P-Tuning efficiently adapts LLMs by adding trainable prompts. However, P-Tuning produces its "soft prompts" (continuous, non-word vectors) through a small neural network, the prompt encoder, and inserts them at the input embedding level. While P-Tuning can discover more nuanced prompts and potentially achieve better performance, Prefix Tuning remains popular due to its simplicity and often "good enough" performance for many tasks. Prefix Tuning is easier to implement and computationally lighter, making it a practical choice when the ultimate performance gains from P-Tuning are not essential.

Miscellaneous Fine-Tuning Techniques:

Distillation: It is a technique that creates a smaller, more efficient version of an LLM. Consider the process of education. A student can learn from textbooks and independent study, but instruction from an expert teacher accelerates and enhances learning. Similarly, in model distillation, a smaller "student" model is trained not only on data, but also guided by a larger, more proficient "teacher" model. This process allows the student to achieve a significant portion of the teacher's performance in a more compact and efficient form.
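One common way to let the teacher "guide" the student is to compare their output distributions after softening both with a temperature, and to penalise the student for diverging. The sketch below shows that soft-target loss on made-up logits; the function names and numbers are illustrative, and in practice this term is usually combined with the ordinary loss on the training labels.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher temperatures give a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence between the teacher's and the student's softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]  # roughly agrees with the teacher
poor_student = [0.5, 4.0, 1.0]  # prefers a different class

loss_good = distillation_loss(good_student, teacher)
loss_poor = distillation_loss(poor_student, teacher)
loss_same = distillation_loss(teacher, teacher)  # zero when the distributions match
```

Training the student to minimise this loss pushes its predictions toward the teacher’s, including the teacher’s relative preferences among the wrong answers.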

Quantization: Quantization works by reducing the numerical precision of a model’s weights. For example, if a model’s weights are stored in 32-bit floating point (FP32), each weight takes 32 bits. You can reduce this to 8-bit floating point (FP8), or map the weights to low-bit integers such as INT8 or INT4, in the extreme case down to a single bit. This reduces model size significantly with a relatively small loss in quality.
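As a rough illustration of the idea, the sketch below applies simple symmetric 8-bit integer quantization to a handful of made-up weights and then restores them. It uses one shared scale for the whole list and assumes at least one weight is non-zero; real quantization schemes are more elaborate (per-channel scales, calibration data, and so on).

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    scale = max(abs(w) for w in weights) / 127  # assumes at least one non-zero weight
    q = [round(w / scale) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]

# Hypothetical FP32 weights.
weights = [0.12, -0.5, 0.33, 1.27, -1.0]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs 8 bits instead of 32, a 4x reduction in storage, at the cost of the small rounding error measured by `max_error`.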

Summary - when to use what?

We can consult the given table for a general guide on when to use what.

| RAG | Fine-tuning |
| --- | --- |
| Adds a retriever function | Fundamentally changes model weights |
| Useful for fast moving data | Useful for slow moving data |
| Retriever function is the bottleneck | Data quality and money is the bottleneck |
| Scaling is difficult | Hosting is not as straightforward |

For most general-purpose applications, RAG works best, as you can dynamically fetch the relevant data and provide it to the model as context. However, with changing times even RAG’s relevance is shifting. Some recent Gemini models have context windows of up to 2M tokens, so you can push medical textbooks with 2000(!!) pages directly into the chat and, in many knowledge-intensive chat applications, not have to worry about RAG or fine-tuning at all.

While the future remains unseen, both of these techniques are useful in their own way, and play a significant role in modern AI applications.

Cover Credits : https://visionx.io/blog/rag-vs-fine-tuning/
