Convolutional Neural Networks (CNN): A Detailed Guide

Tushar Pant

Introduction

In deep learning, Convolutional Neural Networks (CNNs) have changed how we approach computer vision tasks. From image classification to object detection and video analysis, CNNs underpin many state-of-the-art solutions.

What is a Convolutional Neural Network (CNN)?

A Convolutional Neural Network (CNN) is a type of deep neural network primarily used for image processing and computer vision tasks. Unlike traditional fully connected networks, CNNs are designed to automatically and adaptively learn spatial hierarchies of features from input images.

Key Characteristics:

Local Receptive Fields: CNNs focus on small regions of the input image, capturing local patterns like edges and textures.

Parameter Sharing: The same convolutional kernel (filter) is used across the image, reducing the number of parameters.

Spatial Hierarchies: Lower layers learn simple patterns (e.g., edges), while deeper layers learn complex features (e.g., objects).

Why Use CNNs for Image Processing?

Translation Invariance: Because the same filters are applied at every position, CNNs can detect a pattern wherever it appears in the image; pooling adds tolerance to small shifts.

Parameter Efficiency: By sharing weights, CNNs require fewer parameters than fully connected networks.

Hierarchical Feature Learning: CNNs learn features at multiple levels of abstraction, from edges to complete objects.

Improved Performance: On image benchmarks such as ImageNet, CNNs have outperformed traditional machine learning pipelines built on hand-crafted features.
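To make the parameter-efficiency point concrete, here is a rough back-of-the-envelope comparison. The sizes are illustrative assumptions (a 32x32 RGB input and 32 filters of size 3x3), not figures from a specific model. The sketch compares one convolutional layer with a fully connected layer that produces the same number of outputs.

```python
# Illustrative sizes (assumptions): a 32x32 RGB image and 32 filters of size 3x3.
height, width, channels = 32, 32, 3
num_filters, kernel = 32, 3

# Convolutional layer: each filter has kernel*kernel*channels weights plus one bias,
# and the same filter is reused at every position of the image.
conv_params = (kernel * kernel * channels + 1) * num_filters

# Fully connected layer producing the same number of outputs:
# 30x30 positions (3x3 filter, no padding) times 32 feature maps.
out_h, out_w = height - kernel + 1, width - kernel + 1
dense_inputs = height * width * channels
dense_outputs = out_h * out_w * num_filters
dense_params = dense_inputs * dense_outputs + dense_outputs  # weights + biases

print(f"conv params:  {conv_params:,}")   # 896
print(f"dense params: {dense_params:,}")  # 88,502,400
```

The gap of several orders of magnitude comes entirely from weight sharing and local connectivity.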

How Do CNNs Work?

CNNs consist of a series of layers that transform an input image into class probabilities or other desired outputs. The main layers are:

Convolutional Layer: Extracts features using filters.

Activation Function (ReLU): Introduces non-linearity.

Pooling Layer: Reduces the spatial dimensions.

Fully Connected Layer: Combines features for classification.

Example Architecture

Input Image → Conv Layer → ReLU → Pooling Layer → Fully Connected Layer → Output

Key Components of CNNs

1. Convolutional Layer

The Convolutional Layer is the core building block of a CNN. It extracts features from the input image by performing convolution operations using learnable filters (kernels).

Filter (Kernel): A small matrix that slides over the input image to compute feature maps.

Stride: The step size by which the filter moves across the image.

Padding: Adding zeros around the input image to control the output size.

Same Padding: Enough zeros are added that, with a stride of 1, the output size matches the input size.

Valid Padding: No padding, leading to smaller output dimensions.
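How filter size, stride, and padding interact is easiest to see with the standard output-size formula, floor((n + 2p - k) / s) + 1, applied along one spatial dimension. The helper name `conv_output_size` below is made up for this illustration.

```python
def conv_output_size(input_size: int, kernel_size: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one spatial dimension of a convolution."""
    return (input_size + 2 * padding - kernel_size) // stride + 1

# Valid padding (no zeros added): the output shrinks.
print(conv_output_size(32, 3))             # 30

# Padding of 1 with a 3x3 filter and stride 1 keeps the size ("same" padding).
print(conv_output_size(32, 3, padding=1))  # 32

# A stride of 2 roughly halves the output size.
print(conv_output_size(32, 3, stride=2))   # 15
```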

Example

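A 3x3 filter detects edges by calculating a weighted sum of pixel values. The filter below subtracts the top row of each patch from the bottom row, so it responds strongly to horizontal edges.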

Filter:

[[-1, -1, -1],

[ 0, 0, 0],

[ 1, 1, 1]]

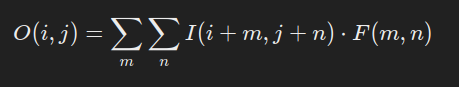

Mathematical Operation

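For an input image I and a filter F, the convolution operation is:

O(i, j) = Σ_m Σ_n I(i + m, j + n) · F(m, n)

where O(i,j) is the output feature map and the sums run over the rows m and columns n of the filter.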

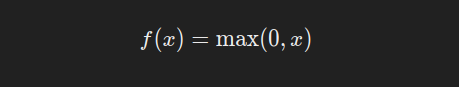
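The sliding weighted sum can be sketched in a few lines of NumPy. This is a minimal, unoptimized illustration that assumes a single-channel image, stride 1, and no padding. Like most deep learning libraries, it does not flip the filter (strictly speaking, this is cross-correlation). The function name `conv2d_valid` and the toy image are made up for the example; the filter is the edge filter shown earlier.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide the kernel over the image (stride 1, no padding) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]      # local receptive field
            output[i, j] = np.sum(patch * kernel)  # weighted sum
    return output

edge_filter = np.array([[-1, -1, -1],
                        [ 0,  0,  0],
                        [ 1,  1,  1]])

# Toy image: dark (0) in the top two rows, bright (1) in the bottom three.
image = np.array([[0, 0, 0, 0, 0],
                  [0, 0, 0, 0, 0],
                  [1, 1, 1, 1, 1],
                  [1, 1, 1, 1, 1],
                  [1, 1, 1, 1, 1]])

feature_map = conv2d_valid(image, edge_filter)
print(feature_map)
```

The output is 3 wherever the filter straddles the dark-to-bright boundary and 0 in the uniform region, which is the sense in which this filter "detects" a horizontal edge.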

2. Activation Function (ReLU)

ReLU (Rectified Linear Unit) is the most commonly used activation function in CNNs. It introduces non-linearity, allowing the network to learn complex patterns.

Function

f(x) = max(0, x)

Key Properties

Non-linear: Enables learning of non-linear relationships.

Efficient Computation: Simple and fast to compute.

Prevents Saturation: Unlike sigmoid or tanh, ReLU does not saturate for positive inputs.
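A minimal NumPy sketch of ReLU applied element-wise; the input values are arbitrary and chosen only for illustration.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    """Element-wise ReLU: negative values become 0, positive values pass through."""
    return np.maximum(0, x)

values = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])
activated = relu(values)
print(activated)  # negatives are clipped to 0; 1.5 and 3.0 are unchanged
```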

3. Pooling Layer

The Pooling Layer reduces the spatial dimensions of feature maps, preserving important features while reducing computational complexity.

Types of Pooling

Max Pooling: Returns the maximum value in each patch.

Average Pooling: Returns the average value in each patch.

Example

Max Pooling with a 2x2 filter and stride of 2:

Input:

[[1, 3, 2, 4],

[5, 6, 7, 8],

[9, 2, 3, 1],

[4, 6, 5, 8]]

Max Pooled Output:

[[6, 8],

[9, 8]]
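The example above can be reproduced with a short NumPy sketch. It assumes non-overlapping patches (stride equal to the pool size) and an input whose height and width are divisible by that size; the helper name `pool2d` is made up for this illustration.

```python
import numpy as np

def pool2d(x: np.ndarray, size: int = 2, mode: str = "max") -> np.ndarray:
    """Non-overlapping pooling; assumes height and width are divisible by `size`."""
    h, w = x.shape
    # Split the map into (h/size) x (w/size) patches of shape size x size.
    patches = x.reshape(h // size, size, w // size, size)
    if mode == "max":
        return patches.max(axis=(1, 3))
    if mode == "average":
        return patches.mean(axis=(1, 3))
    raise ValueError(f"unknown pooling mode: {mode}")

feature_map = np.array([[1, 3, 2, 4],
                        [5, 6, 7, 8],
                        [9, 2, 3, 1],
                        [4, 6, 5, 8]])

max_pooled = pool2d(feature_map, mode="max")
avg_pooled = pool2d(feature_map, mode="average")
print(max_pooled)  # [[6 8], [9 8]]
print(avg_pooled)  # [[3.75 5.25], [5.25 4.25]]
```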

Benefits

Spatial Invariance: Makes the output less sensitive to small shifts in where a feature appears.

Dimensionality Reduction: Reduces computation and overfitting.

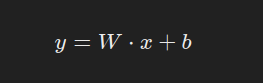

4. Fully Connected Layer

The Fully Connected Layer connects every neuron in one layer to every neuron in the next layer. It integrates the features extracted by convolutional and pooling layers for the final classification or regression task.

Function

y = Wx + b

where y is the output vector and:

W = Weights

x = Input vector

b = Bias
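As a small sketch, assuming the layer computes the affine map Wx + b with the symbols listed above, a fully connected layer is a single matrix-vector product plus a bias. The numbers are arbitrary, and no activation function is applied here.

```python
import numpy as np

def dense(x: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Fully connected layer: every output depends on every input."""
    return W @ x + b

W = np.array([[1.0, 0.0, -1.0],   # weights: 2 outputs x 3 inputs
              [2.0, 1.0,  0.0]])
x = np.array([1.0, 2.0, 3.0])     # input vector (e.g. flattened features)
b = np.array([0.5, -0.5])         # one bias per output

y = dense(x, W, b)
print(y)  # [-1.5  3.5]
```

In a classifier, the last such layer is typically followed by a softmax to turn the outputs into class probabilities, as in the Keras example later in this article.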

Popular CNN Architectures

LeNet-5: One of the earliest CNNs, designed for handwritten digit recognition.

AlexNet: Popularized deep CNNs by winning the 2012 ImageNet challenge, using ReLU and GPU acceleration.

VGGNet: Used deep networks with small 3x3 filters.

Inception (GoogLeNet): Introduced inception modules with different filter sizes.

ResNet: Mitigated vanishing gradient problems with skip connections, enabling much deeper networks.

Applications of CNNs

Image Classification: Identifying objects in images (e.g., cats vs. dogs).

Object Detection: Locating objects within an image (e.g., YOLO, SSD).

Semantic Segmentation: Classifying each pixel in an image.

Video Analysis: Action recognition and object tracking.

Medical Image Analysis: Detecting diseases from X-rays or MRIs.

Facial Recognition: Security and authentication systems.

Advantages and Limitations

Advantages

High accuracy in computer vision tasks.

Automatically extracts relevant features.

Reduces the need for manual feature engineering.

Limitations

Data Requirement: Requires large datasets for training.

Computational Cost: Needs powerful GPUs for training.

Lack of Interpretability: Difficult to understand decision-making.

Implementation Example in Python (TensorFlow/Keras)

import tensorflow as tf
from tensorflow.keras import layers, models

# Load and preprocess dataset (scale pixel values to the range [0, 1])
(X_train, y_train), (X_test, y_test) = tf.keras.datasets.cifar10.load_data()
X_train, X_test = X_train / 255.0, X_test / 255.0

# Define CNN architecture
model = models.Sequential([
    layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Flatten(),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax')
])

# Compile model (labels are integer class IDs, hence the sparse loss)
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train model, reporting accuracy on the test split after each epoch
model.fit(X_train, y_train, epochs=10, validation_data=(X_test, y_test))
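To see how the layers above transform the data, the following pure-Python sketch traces output shapes and parameter counts by hand. It assumes the Keras defaults used in the model (no padding and stride 1 for Conv2D, stride equal to pool size for MaxPooling2D). The `layers_spec` list is a hypothetical description written for this illustration, not a Keras object.

```python
# Hand-written description of the model's layers (hypothetical helper structure).
layers_spec = [
    ("conv", 32, 3),   # Conv2D(32, (3, 3))
    ("pool", 2),       # MaxPooling2D((2, 2))
    ("conv", 64, 3),   # Conv2D(64, (3, 3))
    ("pool", 2),       # MaxPooling2D((2, 2))
    ("flatten",),      # Flatten()
    ("dense", 64),     # Dense(64)
    ("dense", 10),     # Dense(10)
]

shape = (32, 32, 3)    # CIFAR-10 input: height, width, channels
total_params = 0
trace = []

for spec in layers_spec:
    kind = spec[0]
    params = 0
    if kind == "conv":
        filters, k = spec[1], spec[2]
        h, w, c = shape
        params = (k * k * c + 1) * filters       # weights + one bias per filter
        shape = (h - k + 1, w - k + 1, filters)  # valid padding, stride 1
    elif kind == "pool":
        size = spec[1]
        h, w, c = shape
        shape = (h // size, w // size, c)        # no learnable parameters
    elif kind == "flatten":
        h, w, c = shape
        shape = (h * w * c,)
    elif kind == "dense":
        units = spec[1]
        params = (shape[0] + 1) * units          # weights + one bias per unit
        shape = (units,)
    total_params += params
    trace.append((kind, shape, params))
    print(f"{kind:8s} -> {str(shape):14s} params: {params:,}")

print(f"total params: {total_params:,}")  # 167,562
```

Most of the parameters sit in the first dense layer, which is typical for small CNNs of this shape. Calling `model.summary()` on the Keras model gives the same kind of breakdown.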

Conclusion

Convolutional Neural Networks have revolutionized computer vision and image processing. Their ability to learn spatial hierarchies of features makes them the go-to choice for image classification, object detection, and more.
