Maximize AWS Savings: Automate Snapshot Cleanup on AMI Deregistration

Pradeep AB

Introduction

When you create an Amazon Machine Image (AMI) from an EC2 instance, AWS automatically generates a snapshot for each attached EBS volume, capturing its data at that moment.

However, deregistering an AMI does not automatically remove these associated snapshots. Over time, the orphaned snapshots accumulate, increasing storage costs and cluttering your AWS environment.

Manually identifying and deleting these snapshots is both tedious and inefficient. To streamline this process and optimize storage costs, I’ve built a serverless automation solution that ensures the immediate deletion of orphaned snapshots whenever an AMI is deregistered.

In this article, I'll guide you through setting up an automated workflow using Python (Boto3) and Terraform, triggered by Amazon EventBridge, to handle snapshot cleanup without manual effort.

You can find the complete solution on GitHub: delete-snapshots-on-ami-deregister-main.

This article assumes prior knowledge of setting up Terraform, the AWS CLI, an S3 bucket for remote state storage, and a DynamoDB table for state locking, so those steps are not repeated here.

Step 1: Setting the Stage with Terraform

Terraform is an Infrastructure as Code (IaC) tool that allows you to define and deploy cloud infrastructure declaratively. In this setup, we’ll use Terraform to provision the necessary AWS components, including a Lambda function, an EventBridge rule, IAM roles, and policies.

Terraform Initialization: Versions and Providers

First, configure Terraform and the AWS provider. Specify your provider version to ensure compatibility and set up the backend for remote state storage using an S3 bucket. This setup will keep the state file consistent across team members.

Change the required_version value as per the terraform -version output.

Update the bucket, key, and dynamodb_table values for the S3 backend to match what you have created.

terraform {
  required_version = ">=1.5.6"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.72.0"
    }
  }

  backend "s3" {
    bucket         = "terraform-state-files-0110"
    key            = "delete-snapshot-on-ami-deregister/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "tf_state_file_locking"
  }
}

provider "aws" {
  region = "us-east-1"
}

Step 2: Creating an IAM Role and Policy for the Lambda Function

To grant our Lambda function permission to describe and delete snapshots, create an IAM role with an attached policy. The role lets Lambda query and delete snapshots and write its logs to CloudWatch.

resource "aws_iam_role" "lambda_role" {
  name               = "terraform_delete_snapshot_on_ami_deregister_role"
  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Principal": { "Service": "lambda.amazonaws.com" },
      "Effect": "Allow"
    }
  ]
}
EOF
}

resource "aws_iam_policy" "iam_policy_for_lambda" {
  name   = "terraform_delete_snapshot_on_ami_deregister_policy"
  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "arn:aws:logs:*:*:*"
    },
    {
      "Effect": "Allow",
      "Action": ["ec2:Describe*", "ec2:DeleteSnapshot"],
      "Resource": "*"
    }
  ]
}
EOF
}

resource "aws_iam_role_policy_attachment" "attach_iam_policy_to_iam_role" {
  role       = aws_iam_role.lambda_role.name
  policy_arn = aws_iam_policy.iam_policy_for_lambda.arn
}
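The policy above allows ec2:DeleteSnapshot on every resource. As a rough sketch of a narrower variant, the Python snippet below builds a policy document that limits deletion to snapshots in a single region; its JSON output could replace the heredoc body of the policy. The helper name build_policy and the region argument are illustrative assumptions, and the describe action stays on "*" because EC2 Describe calls do not support resource-level restrictions.

```python
import json


def build_policy(region: str) -> dict:
    """Return an IAM policy document scoped to snapshots in one region.

    `region` is an illustrative parameter, not something the article defines.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "arn:aws:logs:*:*:*",
            },
            {
                # Describe calls cannot be limited to specific resources.
                "Effect": "Allow",
                "Action": ["ec2:DescribeSnapshots"],
                "Resource": "*",
            },
            {
                # Snapshot ARNs leave the account field empty.
                "Effect": "Allow",
                "Action": ["ec2:DeleteSnapshot"],
                "Resource": f"arn:aws:ec2:{region}::snapshot/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_policy("us-east-1"), indent=2))
```

Only ec2:DescribeSnapshots is kept here because it is the one describe call the Lambda code in this article makes.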

Step 3: Deploying the Lambda Function with Terraform

With our IAM role and policy set up, we’ll deploy the Lambda function. First, we use the archive_file data source to package the Python script, and then configure Lambda to use this ZIP file as the code source.

data "archive_file" "lambda_zip" {
  type        = "zip"
  source_file = "${path.module}/python/delete-snapshot-on-ami-deregister.py"
  output_path = "${path.module}/python/delete-snapshot-on-ami-deregister.zip"
}

resource "aws_lambda_function" "lambda_function" {
  filename      = data.archive_file.lambda_zip.output_path
  function_name = "delete-snapshot-on-ami-deregister"
  role          = aws_iam_role.lambda_role.arn
  handler       = "delete-snapshot-on-ami-deregister.lambda_handler"
  runtime       = "python3.12"
  timeout       = 30
  depends_on    = [aws_iam_role_policy_attachment.attach_iam_policy_to_iam_role]
}

Step 4: EventBridge Rule to Trigger Lambda on AMI Deregistration

To detect when an AMI is deregistered, we configure an EventBridge rule. This rule triggers the Lambda function whenever an AMI changes state to “deregistered.”

resource "aws_cloudwatch_event_rule" "rule" {
  name          = "ami-termination-event-capture"
  description   = "Capture AMI termination event"
  event_pattern = <<EOF
{
  "source": ["aws.ec2"],
  "detail-type": ["EC2 AMI State Change"],
  "detail": { "State": ["deregistered"] }
}
EOF
}

resource "aws_cloudwatch_event_target" "target" {
  rule      = aws_cloudwatch_event_rule.rule.name
  target_id = "delete-snapshot-on-ami-deregister"
  arn       = aws_lambda_function.lambda_function.arn
}

resource "aws_lambda_permission" "trigger" {
  statement_id  = "AllowExecutionFromEventBridge"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.lambda_function.function_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.rule.arn
}
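To make the rule's behaviour concrete, here is a minimal Python sketch of how the event pattern above selects events. It only mimics exact-value matching on the three fields used in this rule, not EventBridge's full matching semantics, and the sample events are made up for illustration (the ImageId field follows what the Lambda code in Step 5 expects to find).

```python
# The same pattern as in the Terraform rule, written as a Python dict.
PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 AMI State Change"],
    "detail": {"State": ["deregistered"]},
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: every pattern key must exist in the event, and the
    event value must be one of the listed values (nested dicts recurse)."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


# Hypothetical events; the IDs are placeholders.
deregistered = {
    "source": "aws.ec2",
    "detail-type": "EC2 AMI State Change",
    "detail": {"ImageId": "ami-0123456789abcdef0", "State": "deregistered"},
}
available = {
    "source": "aws.ec2",
    "detail-type": "EC2 AMI State Change",
    "detail": {"ImageId": "ami-0123456789abcdef0", "State": "available"},
}

print(matches(PATTERN, deregistered))  # True
print(matches(PATTERN, available))     # False
```

Only the first event would reach the Lambda function; other AMI state changes, such as an image becoming available, are filtered out by the rule.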

Step 5: Python Boto3 Code for Snapshot Deletion

Let’s dive into the Python code that performs the snapshot deletion. Our Lambda function uses Boto3 to interact with AWS and performs the following tasks:

List all snapshots in the account.

Identify snapshots associated with the deregistered AMI.

Delete each matched snapshot.

import json
import logging

import boto3

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def lambda_handler(event, context):
    ec2_cli = boto3.client("ec2")
    logger.info("Event Details: %s", json.dumps(event, indent=2))
    ami_id = event["detail"]["ImageId"]

    # Paginate so that accounts with many snapshots are covered in full.
    paginator = ec2_cli.get_paginator("describe_snapshots")
    for page in paginator.paginate(OwnerIds=["self"]):
        for snapshot in page["Snapshots"]:
            # Match on the last word of the description, which is expected to
            # be the AMI ID. Empty or missing descriptions are skipped instead
            # of raising an error.
            words = (snapshot.get("Description") or "").split()
            if not words or words[-1] != ami_id:
                continue

            snapshot_id = snapshot["SnapshotId"]
            logger.info("Snapshot to be deleted: %s", snapshot_id)
            try:
                ec2_cli.delete_snapshot(SnapshotId=snapshot_id)
                logger.info("Snapshot %s deleted successfully", snapshot_id)
            except Exception as e:
                logger.error("Error deleting snapshot %s: %s", snapshot_id, e)
                return {
                    "statusCode": 500,
                    "body": f"Error deleting snapshot {snapshot_id}: {e}",
                }

    return {"statusCode": 200}
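The matching step hinges on one convention: a snapshot is treated as belonging to the AMI when the last word of its description is the AMI ID. A small sketch of that check as a standalone function, which also tolerates empty or missing descriptions, might look like this. The function name and the sample descriptions are illustrative assumptions, not output captured from AWS.

```python
from typing import Optional


def belongs_to_ami(description: Optional[str], ami_id: str) -> bool:
    """Return True when the last whitespace-separated word is the AMI ID."""
    words = (description or "").split()
    return bool(words) and words[-1] == ami_id


# Made-up descriptions covering the cases the handler can encounter.
samples = [
    "Created by CreateImage(i-0aaa) for ami-0123",  # ends with the AMI ID
    "Created by CreateImage(i-0bbb) for ami-0999",  # belongs to another AMI
    "nightly-backup",                               # single word
    "",                                             # empty description
    None,                                           # no description at all
]
for text in samples:
    print(repr(text), belongs_to_ami(text, "ami-0123"))
```

Only the first sample matches. Snapshots whose description mentions the AMI ID somewhere other than the final word are left alone by this check.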

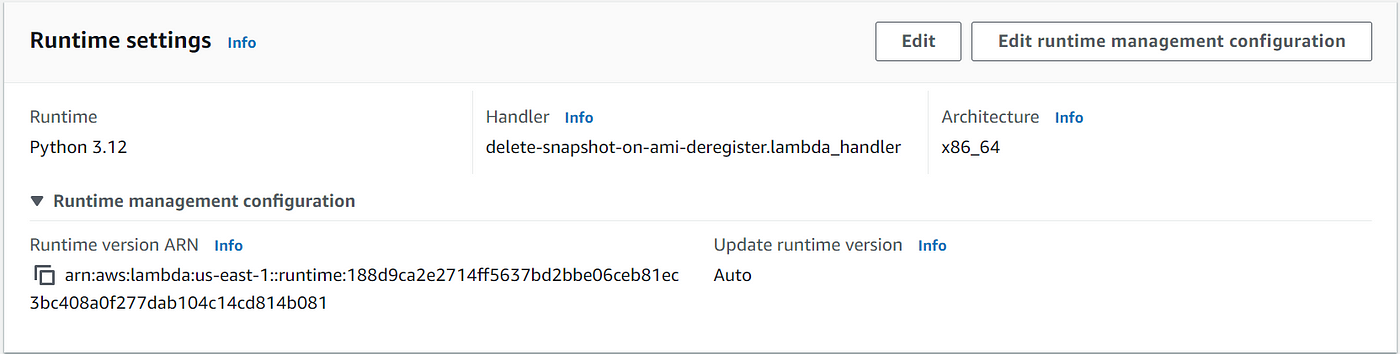

The handler value set in Step 3 already points Lambda at the Python file's name ('delete-snapshot-on-ami-deregister') followed by the function name ('lambda_handler'). After running Terraform, confirm that the "Handler" value in the function's Runtime settings matches, and update it if you rename the file or the function.

Step 6: Running and Testing the Setup

After applying your Terraform configuration and deploying the Lambda function, you can test the setup:

Deregister an AMI in the AWS console or via CLI.

Check CloudWatch Logs for logs generated by the Lambda function, confirming that it received the event and successfully deleted the associated snapshot.
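Before deregistering a real AMI, the cleanup logic can also be exercised locally. The sketch below is one possible approach: it assumes the matching and deletion logic is pulled into a function that takes the EC2 client as an argument, so a stub can stand in for Boto3. FakeEC2, cleanup_snapshots, and all IDs are hypothetical names introduced for this example.

```python
class FakeEC2:
    """Stub exposing only the two client methods the cleanup logic calls."""

    def __init__(self, snapshots):
        self.snapshots = snapshots
        self.deleted = []

    def describe_snapshots(self, OwnerIds):
        return {"Snapshots": self.snapshots}

    def delete_snapshot(self, SnapshotId):
        self.deleted.append(SnapshotId)


def cleanup_snapshots(ec2_client, ami_id):
    """Delete snapshots whose description ends with ami_id; return their IDs."""
    removed = []
    response = ec2_client.describe_snapshots(OwnerIds=["self"])
    for snapshot in response["Snapshots"]:
        words = (snapshot.get("Description") or "").split()
        if words and words[-1] == ami_id:
            ec2_client.delete_snapshot(SnapshotId=snapshot["SnapshotId"])
            removed.append(snapshot["SnapshotId"])
    return removed


# Placeholder snapshots and a placeholder event shaped like the one the handler reads.
fake = FakeEC2([
    {"SnapshotId": "snap-1", "Description": "Created by CreateImage(i-0aaa) for ami-0123"},
    {"SnapshotId": "snap-2", "Description": "Created by CreateImage(i-0bbb) for ami-0999"},
    {"SnapshotId": "snap-3", "Description": ""},
])
event = {"detail": {"ImageId": "ami-0123", "State": "deregistered"}}

print(cleanup_snapshots(fake, event["detail"]["ImageId"]))  # ['snap-1']
```

In the deployed function, the same helper would receive boto3.client("ec2") instead of the stub.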

Enhancements and Best Practices

Permissions Best Practices: The Lambda’s IAM policy allows for broad permissions (e.g., "Resource": "*"). In a production environment, consider scoping resources more narrowly, such as specific snapshot ARNs, to enhance security.

Monitoring and Alerts: Integrate CloudWatch Alarms to monitor the Lambda function’s health, ensuring timely alerts if failures occur.

Output Logging: Use structured logging in Lambda to facilitate easier analysis and debugging of logs.

Cost Management: Automated snapshot cleanup not only saves storage costs but also keeps your AWS environment clutter-free, which is beneficial for both cost and security.
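As a sketch of the structured logging suggestion above, the snippet below emits each log record as one JSON object using only the standard library. The formatter class, the logger name, and the field names (level, message, snapshot_id) are assumptions chosen for illustration.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as a single-line JSON object."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Fields passed through `extra=` become attributes on the record.
        snapshot_id = getattr(record, "snapshot_id", None)
        if snapshot_id is not None:
            payload["snapshot_id"] = snapshot_id
        return json.dumps(payload)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("snapshot_cleanup")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # avoid duplicate lines from the root logger

logger.info("Snapshot deleted", extra={"snapshot_id": "snap-0123"})
```

Inside Lambda you would likely attach the formatter to the handler the runtime already installs on the root logger rather than adding a second one. With one JSON object per line, CloudWatch Logs queries can filter on fields such as snapshot_id.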

Conclusion

This setup combines Terraform, Python and EventBridge to deliver a scalable, efficient, and automated solution to AWS snapshot cleanup. By handling the lifecycle of AMIs and their snapshots, we’ve ensured a streamlined and cost-effective AWS environment that reduces the chances of orphaned snapshots taking up space.

