Netflix's Big Bang Load Spike: How to Tackle Sudden Traffic Spikes in the Cloud

Sathya


As previously discussed, Netflix uses an Active-Active architecture, and we also mentioned a technology called Kubernetes.

Kubernetes is one solution to the issue, but it is not enough on its own to fully tackle this challenge. In the next blog, we'll dive into Kubernetes in detail. For now, let's focus on how Netflix solved the load spike problem, with a deep dive into the solutions they implemented. Earlier, we discussed the buffer, which plays a key role in their solution space. However, one hero is not enough: load spikes need to be handled by many heroes, including ML engineers, DevOps, network engineers, SREs, and many other teams. Kubernetes, for instance, helps scale infrastructure based on CPU, RAM, and user demand. But scaling up 1,000 instances at a time is not always feasible, as there are limits to how many can be launched simultaneously. Let's get started!

It was a record-breaking night for Netflix.

The Jake Paul vs. Mike Tyson fight became the most-streamed sporting event in history, peaking at a remarkable 65 million concurrent streams, with 38 million in the US alone. At its peak, more than half of all TV viewing in the US was tuned into Netflix. Meanwhile, the Katie Taylor vs. Amanda Serrano fight became the most-watched professional women's sports event in US history, with 47 million average minute viewers in the US.

This massive global success wasn't just about the events themselves; it also highlighted Netflix's ability to handle unprecedented traffic surges, thanks to its evolved infrastructure. From moving away from bare-metal servers to adopting virtual machines and ultimately embracing containers and cloud-native technologies, Netflix scaled its systems to meet the growing demand seamlessly.

Key to this transformation were Site Reliability Engineers (SREs) and DevOps practices that ensured service reliability and smooth deployments. Container orchestration with Kubernetes played a crucial role in enabling Netflix to scale on demand, while load balancing optimization through Istio and Envoy ensured traffic was efficiently managed. Additionally, advanced data structures and algorithms optimized resource allocation, ensuring high-quality streaming worldwide.

Diving into the strategies used by Netflix to address the problem statement

Consider this example: if you already know a load spike is coming, you need to prepare ahead of time to handle it effectively.

A real-life analogy is the festival season, such as Makar Sankranti, Shivaratri, and Ugadi, when the demand for products like flowers and fruits increases significantly. For example, sugarcane is in high demand during Sankranti, and flowers are needed for both Sankranti and Shivaratri. These are observable trends in the market where supply must meet increased demand.

Similarly, in Netflix’s case, the demand spike is driven by the surge in customers, and it is crucial for Netflix to ensure that the app remains accessible at all times, even when there is high traffic. The key is anticipating demand ahead of time and preparing the infrastructure to handle it smoothly, just as vendors prepare for festival seasons to meet market demands.

When you have an idea of what that load spike will look like, what do you do?

An example of this can be seen during the launch of titles like Stranger Things, Squid Game Season 2, and One Piece Season 2. If the launch is scheduled for 7 PM, the development team must anticipate the expected load starting one hour earlier, at 6 PM. This expected load spike must be accounted for in advance so that the Netflix team is prepared to handle the surge in users.

This can be achieved by increasing the success buffer so that the system remains stable and responsive during high-traffic periods. The auto-scaling concept from Kubernetes is core to this success but, as mentioned, auto-scaling is not designed to bring up thousands of servers at once, and it is not feasible to preemptively scale up thousands of servers en masse ahead of time. Instead, scaling up the entire fleet is more of a reactive measure, handled by failover tooling when necessary.
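To make the buffer idea concrete, here is a minimal sketch of how a team might size a service for an expected peak plus headroom. The function name, the per-instance capacity, and the 30% buffer are hypothetical values picked for illustration; they are not Netflix's real figures.

```python
import math

def instances_needed(expected_rps: int, rps_per_instance: int, buffer_percent: int) -> int:
    """Instances required to serve expected_rps with extra headroom.

    buffer_percent is the spare capacity kept on top of the expected
    load (30 means 30% headroom). All inputs are illustrative assumptions.
    """
    if rps_per_instance <= 0:
        raise ValueError("rps_per_instance must be positive")
    # Integer arithmetic avoids floating-point rounding surprises.
    return math.ceil(expected_rps * (100 + buffer_percent) / (100 * rps_per_instance))

# Hypothetical numbers: 60,000 RPS expected, 500 RPS per instance, 30% buffer.
print(instances_needed(60_000, 500, 30))
```

The bigger the buffer, the more idle capacity is paid for before the launch, which is why the size of the buffer is a trade-off rather than a fixed rule.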

Yes, you heard right! Netflix has its own tooling for this kind of resilience work, including Chaos Monkey, which deliberately terminates instances to verify that services survive failures. It is part of a larger suite of tools known as the Simian Army.

How does this work in practice?

Earlier, we discussed the starts-per-second graph and how it works. This graph is essential because it maps starts per second to RPS (Requests Per Second) for each of Netflix's internal services. One start per second might result in 4 RPS to one service, 2 RPS to another, 20 RPS to a third, and so on.

If the engineers know how much the starts per second will increase, they can scale up all their services accordingly. This allows Kubernetes' auto-scaling to scale one cluster by 2x in response to the increased RPS, scale another cluster by a different percentage, and be ready to handle the spike.
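The mapping from starts per second to per-service RPS can be sketched as a simple lookup. The ratios 4, 2, and 20 come from the example above; the service names are invented for illustration and do not refer to real Netflix services.

```python
# Hypothetical fan-out ratios: requests per second generated at each
# internal service by one stream start per second. Service names are invented.
RPS_PER_START = {
    "playback-api": 4,
    "license-service": 2,
    "metadata-service": 20,
}

def expected_rps(starts_per_second: int) -> dict[str, int]:
    """Translate a starts-per-second forecast into per-service RPS."""
    return {svc: ratio * starts_per_second for svc, ratio in RPS_PER_START.items()}

forecast = expected_rps(1_000)
for service, rps in forecast.items():
    print(f"{service}: {rps} RPS")
```

With a table like this, one forecast number (starts per second) is enough to derive a separate scaling target for every service.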

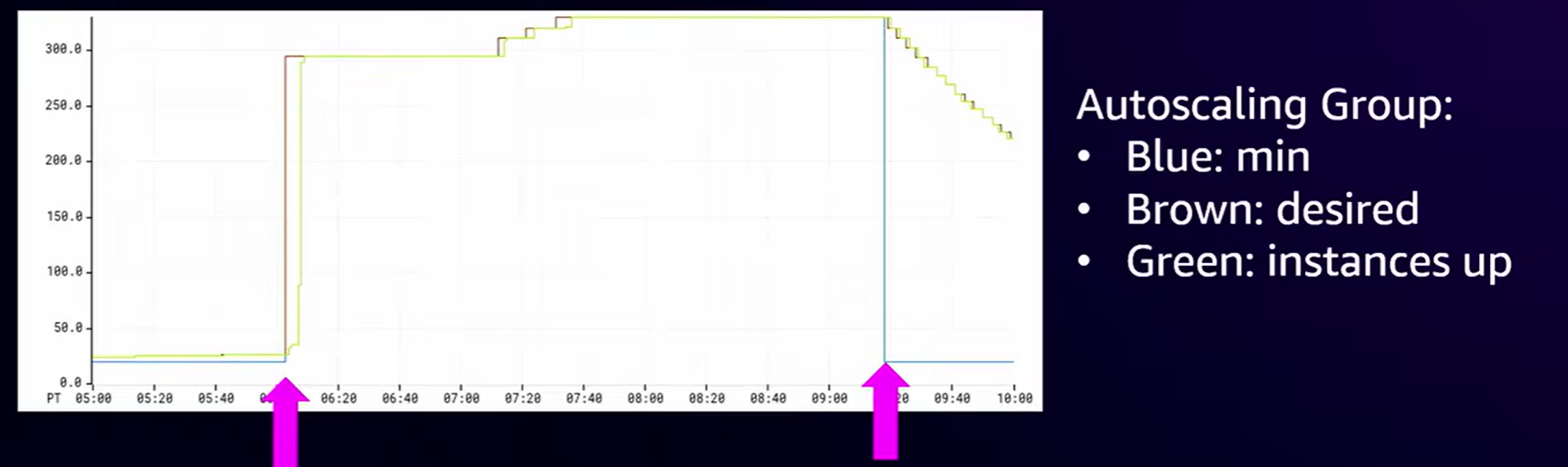

In the above graph from the AWS re:Invent talk, we can see the minimum (shown in blue) of the auto-scaling groups being adjusted to match the expected load. Netflix then saw the load spike arrive as predicted. After some time, the monitoring tool showed the load gradually going down, and auto-scaling scaled the pods down accordingly.
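Raising the minimum ahead of a known launch can be sketched as a small schedule function. This is only an illustration: the function name, the one-hour lead, the two-hour hold, and the instance counts are assumptions, not values from the talk.

```python
from datetime import datetime, timedelta

def scheduled_minimum(now, launch, baseline_min, event_min,
                      lead=timedelta(hours=1), hold=timedelta(hours=2)):
    """Return the group's minimum size at time `now`.

    The minimum is raised to event_min from `lead` before the launch
    until `hold` after it, and stays at baseline_min otherwise.
    """
    if launch - lead <= now <= launch + hold:
        return event_min
    return baseline_min

launch = datetime(2025, 1, 1, 19, 0)  # hypothetical 7 PM launch
for hour in (17, 18, 19, 22):
    t = launch.replace(hour=hour)
    print(t.strftime("%H:%M"), scheduled_minimum(t, launch, 100, 200))
```

Because only the minimum is raised, normal auto-scaling can still add capacity above it if the spike turns out larger than predicted.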

Finally, the Netflix team has this proactive toolkit!

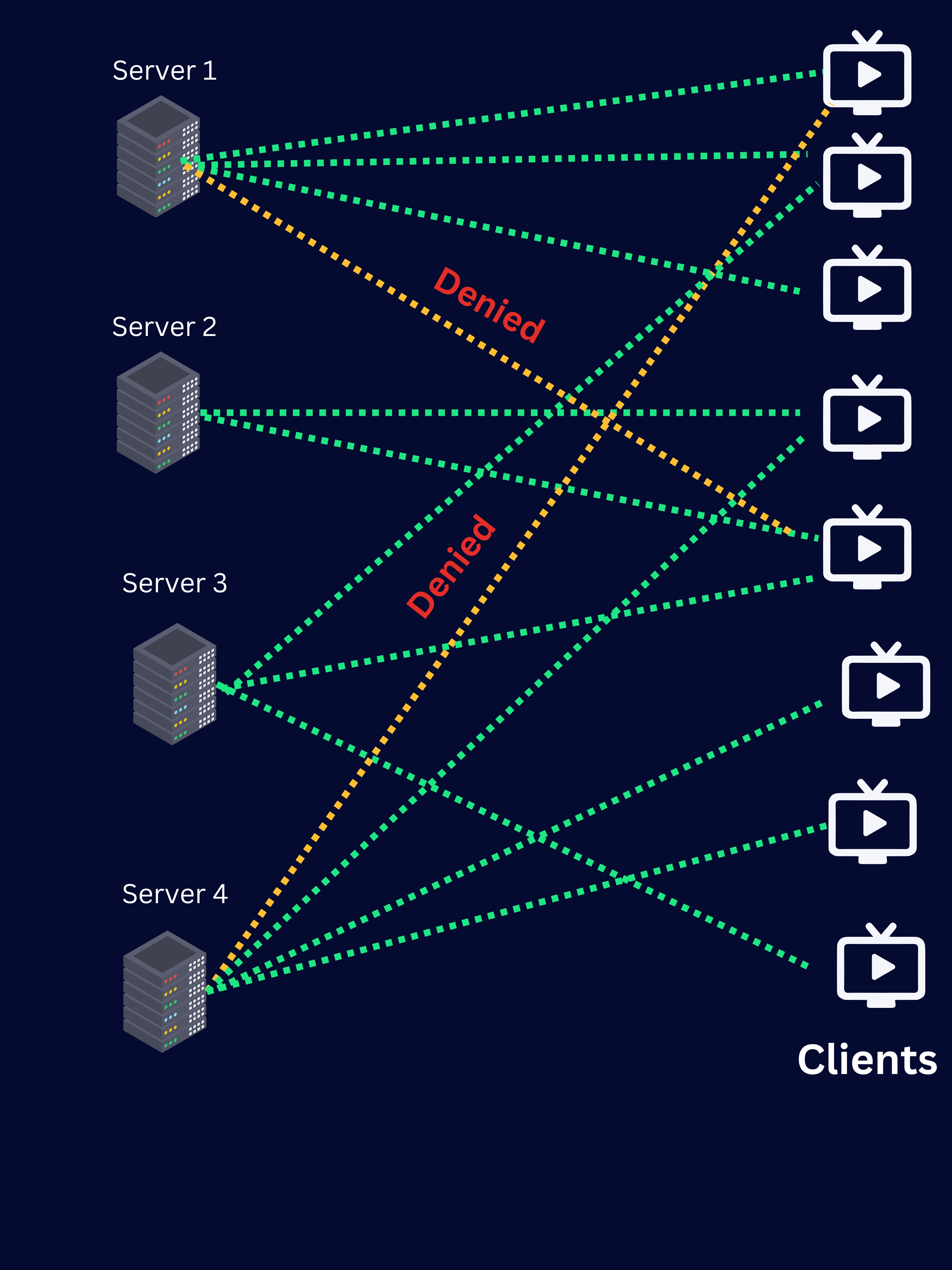

Shaping traffic is needed because of regional policy restrictions.

The reason is that, even though they have big global title launches, there are scenarios where some titles are restricted to specific regions. For example, some titles may be popular in India, North America, or South Korea. Developers need to balance traffic uniformly across all four regions. If users simply went to the closest region, the developers would have to scale up one or two regions significantly. By shaping traffic instead, they planned to scale up each region little by little based on demand, reducing the risk of losing subscribers globally.

Once this is achieved, auto-scaling will work as intended across all four different regions. Data and services for users are distributed among different locations with isolated environments inside pods.
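A rough sketch of what uniform traffic shaping buys: the peak load any single region has to absorb drops sharply. The region names and demand numbers below are invented, and real traffic steering would also weigh latency and capacity, which this sketch ignores.

```python
def uniform_split(demand_by_region: dict[str, int]) -> dict[str, int]:
    """Spread total demand evenly over all regions (remainder goes to the first ones)."""
    regions = list(demand_by_region)
    total = sum(demand_by_region.values())
    share, remainder = divmod(total, len(regions))
    return {r: share + (1 if i < remainder else 0) for i, r in enumerate(regions)}

# Hypothetical demand if every user went to the closest region.
nearest = {"region-a": 70_000, "region-b": 10_000, "region-c": 15_000, "region-d": 5_000}
shaped = uniform_split(nearest)
print("peak without shaping:", max(nearest.values()))
print("peak with shaping:   ", max(shaped.values()))
```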

Scaling out of trouble requires predicting issues and taking proactive measures!

They came up with a plan and decided that the best way to protect against load spikes is through two methods:

Prediction and Precaution

Scaling up clusters before it happens

Sometimes, it is challenging for them, even though they have this idea!

How do they even adjust their scaling policy to react to sudden load increases, like during live events?

Here is an image of a graph as an example

As shown in the graph, traffic increases gradually and smoothly over time. As the demand reaches a certain level, the system's capacity scales up accordingly. Once the demand drops, the system adjusts the capacity back down. This cycle has been consistently observed year after year through historical data. By analyzing this data, Netflix has been able to develop highly tuned auto-scaling policies. These policies are now so finely calibrated that they align with Netflix's load surge patterns, leveraging statistical techniques such as correlation coefficients to predict and respond to fluctuations in demand. This data-driven approach helps keep the system efficient and responsive as demand changes.
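As one possible reading of the correlation idea, the sketch below computes a Pearson correlation coefficient between historical traffic and the number of instances in service. The data points are invented, and this is a generic statistic, not a description of Netflix's actual models.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Invented hourly samples: requests per second and instances in service.
rps = [10_000, 14_000, 21_000, 30_000, 24_000, 15_000]
instances = [20, 28, 41, 60, 49, 30]
print(round(pearson(rps, instances), 3))
```

A coefficient close to 1 would suggest that capacity has tracked demand closely in the past, which is the kind of pattern a tuned policy relies on.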

Let's discuss how that pattern holds up against a sudden load spike!

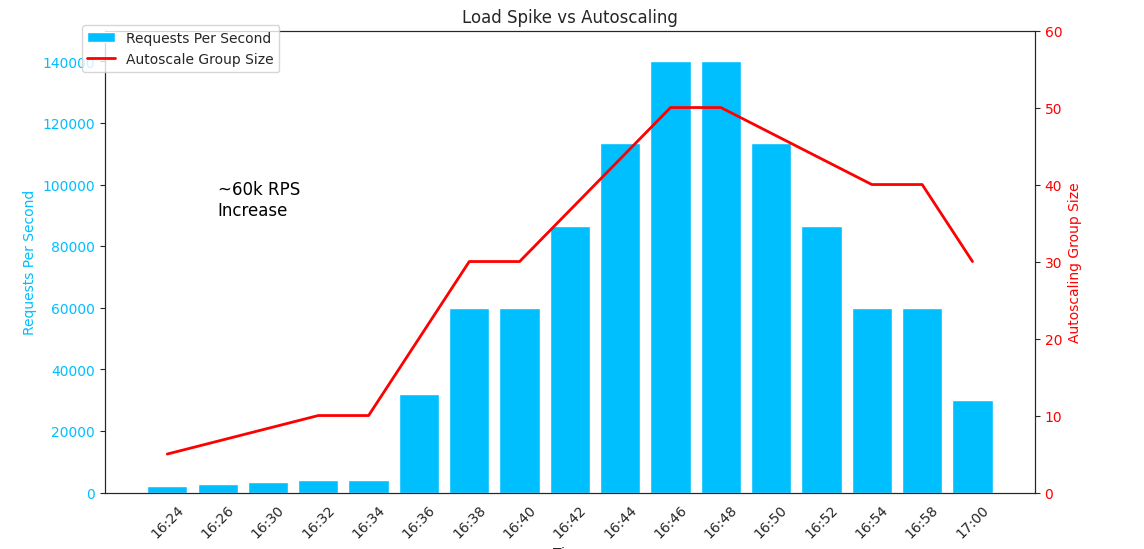

This is a similar example of how one of their microservices handles traffic, requests per second, and the number of desired instances.

At the onset of a load increase, the request rate surged from 35,000 to 60,000 requests per second. However, Netflix's auto-scaling was relatively slow, as its detection time was gradual.

As a result, the Netflix team faced a response-time issue since, in this agile world, every second matters. End to end, auto-scaling was taking around 10–20 minutes to respond.

How did they solve this detection time issue? What was causing the problem?

To address this issue, Netflix's ML team analyzed log data and uncovered valuable insights.

The time to detect the load increase was almost 4 minutes.

After detection, once the instances were provisioned, the boot-up time took around 6 minutes.

Because the system did not detect the surge early, it couldn't scale up fast enough, leading to an additional 2-minute delay. This forced them to restart the entire process from the baseline.

In total, it took around 20 minutes for the system to detect, scale, and fully recover (TTR – Time to Recovery).

They went deep into the issue and found that there was not just one or two dominating causes; there were plenty of reasons for the slow detection time.

So, what solution did the Netflix engineering team come up with?

To solve this issue, the Netflix team came up with the idea of breaking components down to reduce recovery time. This is the same concept we will be discussing in the Docker optimization section of a future blog.

Step 1: Detection: "Identifying the Issue"

Questions:

- "When does the load arrive?"
- "When do they want to start scaling?"

From these questions, their solution idea took shape. Here is a Python sketch of the same.

```python
def idea():
    question = "Load Happens"
    solution = [
        "Provision of Control Plane from AWS",
        "System Startup",
        "Application Startup",
        "Traffic Startup"
    ]
    output = "Only traffic is served to those instances, allowing them to achieve recovery."

    print("Question:", question)
    print("Solution:")
    for i, step in enumerate(solution, start=1):
        print(f"{i}. {step}")
    print("Output:", output)

idea()
```

Netflix came up with an experimental plan to test and ensure resilience in case of real-time load surges. In this test, the development team synthetically generated load as a simulation. They then compared the baseline performance with the experimental load to analyze how the old policy behaved versus the new policy. Since multiple configuration policies were tested simultaneously, the team could determine which one was the most optimal to work with.
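An experiment of this kind can be sketched as replaying the same synthetic load trace against different policies and counting how long each one leaves the service short of capacity. Everything here is a toy model: the two policies, the trace, the 3-minute boot delay, and the 500 RPS per instance are assumptions made for illustration.

```python
import math

def run_policy(policy, trace, rps_per_instance, start_instances, boot_delay):
    """Replay a synthetic load trace and count minutes spent under capacity."""
    in_service = start_instances
    pending = []  # (ready_minute, instance_count) for instances still booting
    short_minutes = 0
    for minute, rps in enumerate(trace):
        # Instances whose boot time has elapsed start serving traffic.
        in_service += sum(n for ready, n in pending if ready <= minute)
        pending = [(ready, n) for ready, n in pending if ready > minute]
        if in_service * rps_per_instance < rps:
            short_minutes += 1
        booting = sum(n for _, n in pending)
        desired = policy(in_service + booting, rps, rps_per_instance)
        if desired > in_service + booting:
            pending.append((minute + boot_delay, desired - in_service - booting))
    return short_minutes

def double_when_short(current, rps, rps_per_instance):
    """Baseline stand-in: at most double capacity per evaluation."""
    return current * 2 if current * rps_per_instance < rps else current

def jump_to_demand(current, rps, rps_per_instance):
    """Experimental stand-in: request everything the observed RPS needs."""
    return max(current, math.ceil(rps / rps_per_instance))

# Synthetic trace: 5 quiet minutes, then a sustained 5x spike.
trace = [10_000] * 5 + [50_000] * 25
for policy in (double_when_short, jump_to_demand):
    print(policy.__name__, run_policy(policy, trace, 500, 20, boot_delay=3))
```

Running several candidate policies over one trace makes the comparison fair, since each policy faces exactly the same load.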

They found that how a load surge is handled depends on the workflow a service goes through when it starts, such as:

Load Detection → App Startup → System Startup → Hardware Startup → Provisioning

Even though provisioning happens quickly, the detection phase was affected by various factors that impacted its functionality. The Dev team found that it took 4 minutes for the load spike to be identified by autoscaling during the Detection Phase.

Now, another question arises: How did they tackle this?

Load Detection

To address this issue, the Dev team started by increasing their RPS. They also introduced a new policy called the RPS Hammer Policy.

Previously, they were using a CPU targeting policy, which worked well with minor modifications each time. However, it wasn’t enough to provide more detailed information, such as detecting 10x or 30x load spikes. The internal detection algorithm was not functioning optimally. The system was designed to detect typical CPU utilization around 50% as part of the CPU target tracking policy.

This led to a challenge: If a 2x increase in traffic caused the CPU to reach 100%, similarly, a 4x or 10x traffic spike would also drive the CPU to 100%. As a result, the autoscaling group lost the ability to differentiate between a 2x, 10x, or even 100x load spike.
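The saturation problem can be shown with a few lines of arithmetic. The proportional rule below is a simplification, similar in spirit to the formula documented for the Kubernetes Horizontal Pod Autoscaler; it is not the exact algorithm AWS uses. The 50% baseline and the 100-instance fleet are assumptions.

```python
import math

def observed_cpu(load_multiplier: float, baseline_cpu: float = 50.0) -> float:
    """CPU utilisation reported under load; it can never exceed 100%."""
    return min(100.0, baseline_cpu * load_multiplier)

def target_tracking_desired(current: int, metric: float, target: float) -> int:
    """Simplified proportional rule: scale capacity by metric / target."""
    return math.ceil(current * metric / target)

for multiplier in (2, 10, 100):
    cpu = observed_cpu(multiplier)
    desired = target_tracking_desired(100, cpu, 50.0)
    print(f"{multiplier}x load -> CPU {cpu:.0f}% -> desired {desired} instances")
```

Since the CPU reading is capped at 100%, every spike of 2x or more produces the same desired capacity in this model, which is exactly the loss of information described above.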

This was much more challenging for the Dev team, as manually shedding load led to a wastage of resources, especially if they didn’t know how much load could occur.

So, the team came up with an idea: instead of doing it manually, why not do it in one go and get it right? They realized they just needed to implement a target tracking policy.

Idea: The idea was to implement a system that detects an increase in RPS (Requests Per Second) beyond what they had expected. Once detected, the system would automatically allocate the right amount of CPU and RAM to meet client demands.
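A minimal sketch of this RPS-based idea follows. Unlike CPU, RPS is not capped, so the size of the spike stays visible. The function name, the 1.5x trigger ratio, and the capacity numbers are hypothetical, and the sketch sizes the fleet by instance count rather than by CPU and RAM directly.

```python
import math

def rps_scale_out(current_instances: int, observed_rps: float,
                  expected_rps: float, rps_per_instance: float,
                  trigger_ratio: float = 1.5) -> int:
    """Return the desired instance count after checking RPS against the forecast.

    If observed RPS reaches trigger_ratio times the expected RPS, size the
    fleet directly from the observed RPS; otherwise leave it unchanged.
    """
    if observed_rps < expected_rps * trigger_ratio:
        return current_instances
    return max(current_instances, math.ceil(observed_rps / rps_per_instance))

print(rps_scale_out(70, 40_000, 35_000, 500))   # below the trigger: unchanged
print(rps_scale_out(70, 350_000, 35_000, 500))  # 10x spike: sized from RPS
```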

However, this wasn’t good enough, as when a 2x load spike occurred, the load couldn’t be targeted by the target tracking policy. Manually managing this was impossible, especially for live events like the World Cup. So, they needed to figure out what to do next. The systems needed to be able to balance the load and scale the resources by the right percentage. To achieve this, they needed a highly optimized algorithm, which is where DSA (Data Structures and Algorithms) played a vital role.

At Netflix's scale, even a tiny improvement (say 0.001%) from better-optimized algorithms can add up to a meaningful gain for the company.

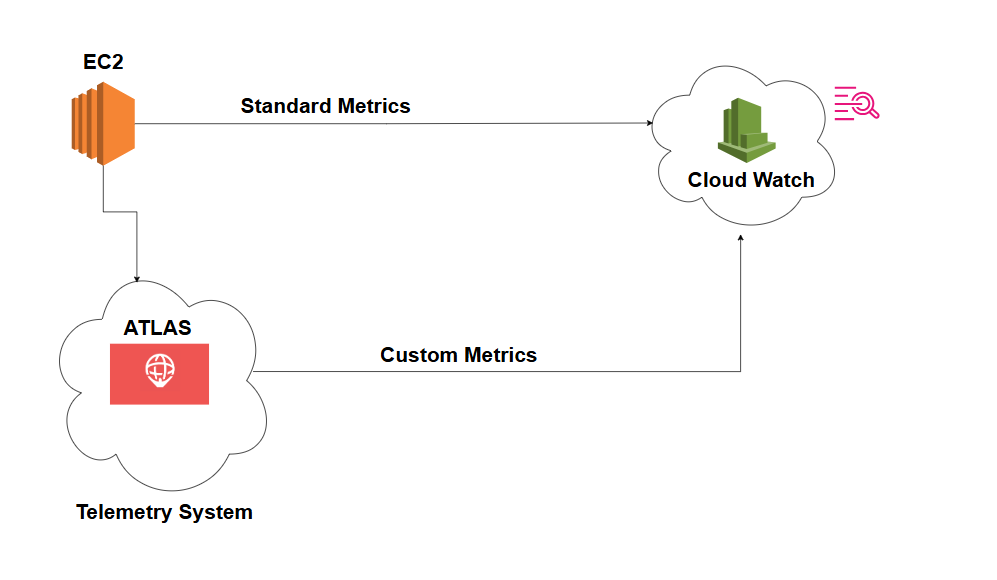

The next thing the team did was incorporate a system with higher resolution cloud metrics, and thanks to AWS CloudWatch, they were able to achieve this.

The basic monitoring had a 5-minute resolution, so they decided to improve it. Instead of waiting 5 minutes, they reduced the monitoring interval to 1 minute. This was the first idea that came to mind, so they dug deeper into the logs and enabled detailed monitoring at 1-minute intervals. Then they focused on improving the resolution of these metrics, which also helped improve their autoscaling policy. However, they were also running custom metrics for RPS (Requests Per Second), and these had to be routed through their internal telemetry system (Atlas) before reaching CloudWatch. Unfortunately, this was still not good enough, as it also had a 1-minute resolution. They identified this issue by analyzing log data and reviewing past events.

The best part was that AWS CloudWatch supports high-resolution metric monitoring, which presented a great opportunity for Netflix to enhance their architecture. With higher-resolution monitoring in place, metrics were published to CloudWatch every 5 seconds. This provided additional data points, allowing the system to detect much sooner that traffic was increasing. With this modification to their scaling policy, Netflix solved one of its issues with load spike detection and saw a 3x improvement in load spike detection.
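To see why resolution matters, consider how much time it takes just to collect the data points an alarm needs. The sketch below assumes an alarm that fires after three consecutive breaching data points; that number is an assumption for illustration, and publishing and evaluation delays are ignored.

```python
def detection_window_seconds(period_seconds: int, datapoints_to_alarm: int) -> int:
    """Time spanned by the data points an alarm must see before it can fire.

    Assumes the alarm needs datapoints_to_alarm consecutive breaching
    data points, each covering period_seconds of traffic.
    """
    return period_seconds * datapoints_to_alarm

resolutions = (("basic, 5 min", 300), ("detailed, 1 min", 60), ("high resolution, 5 s", 5))
for label, period in resolutions:
    print(f"{label}: {detection_window_seconds(period, 3)} s of data needed")
```

The same alarm rule needs minutes of data at coarse resolution but only seconds at fine resolution, which is where the faster detection comes from.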

App Startup

It's a whole new story from here on. The SRE team dug deep into historical data from past events, such as big title launches like Squid Game Season 2. For data scientists, finding insights in data is essential, because those insights are what they use to predict what will happen next. Programming languages like Python, along with libraries like Matplotlib or other visualization tools, help to analyze that data quickly.

Here, the Netflix team predicted and scaled up instances when load occurred.

However, there was an issue during app startup, as it was taking more time than expected.

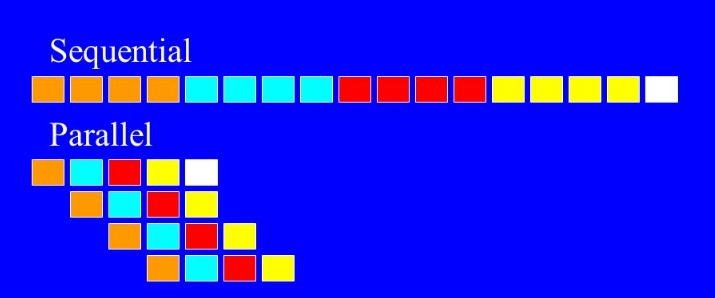

When they looked into the data, they found that the system processes were able to start in under a minute. However, there were some exceptions where a few processes were taking longer, causing others to wait for a long period, much like a queue data structure.

They found out that it was an optimization problem, where processes had to wait for the previous process to finish before they could start. Even if the 2nd or 3rd process only took 1 or 2 seconds to finish, they still had to wait. To solve this, the Netflix engineering team decided to eliminate long-running processes that were not necessary, allowing them to focus only on essential tasks. By doing this, they were able to make their system load faster and perform better.
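The queue effect is easy to reproduce with a few invented startup tasks. The task names and durations below are made up; the point is only that one long, non-essential task delays everything behind it when tasks run strictly in order.

```python
def start_times(tasks):
    """Start time of each task when tasks run strictly one after another."""
    elapsed, result = 0, {}
    for name, duration in tasks:
        result[name] = elapsed
        elapsed += duration
    return result, elapsed

# Invented startup tasks as (name, seconds, essential?).
tasks = [
    ("load-config", 2, True),
    ("warm-optional-cache", 90, False),  # long and not required to serve traffic
    ("open-connections", 1, True),
    ("register-with-discovery", 2, True),
]

_, total_before = start_times([(n, d) for n, d, _ in tasks])
_, total_after = start_times([(n, d) for n, d, essential in tasks if essential])
print("startup with all tasks:", total_before, "s")
print("startup with essential tasks only:", total_after, "s")
```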

System Startup

Above is just an example of what the startup process looks like. The Netflix team leveraged a base OS, possibly a Linux distro, on which all the Netflix services run. At this scale, even a very small improvement, the kind measured in fractions of a percent, can add up to significant gains.

After analyzing a year of data logs, they discovered some insights. They found that they were performing sequential startups. Upon analyzing the sequential startups, they identified the issue and decided to change the process to parallel paths. This allowed them to start multiple processes in parallel and then join them, ultimately achieving a faster startup.
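The move from sequential to parallel startup can be sketched with Python's `concurrent.futures`. The step names and durations are invented and scaled down to fractions of a second, and the steps are assumed to be independent of each other, which is what makes running them in parallel safe.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def run_step(name: str, seconds: float) -> str:
    """Stand-in for a startup step; sleeping simulates the work."""
    time.sleep(seconds)
    return name

# Invented, independent startup steps with scaled-down durations.
steps = [("mount-volumes", 0.2), ("start-agents", 0.3), ("fetch-secrets", 0.1)]

begin = time.perf_counter()
sequential = [run_step(name, secs) for name, secs in steps]
sequential_time = time.perf_counter() - begin

begin = time.perf_counter()
with ThreadPoolExecutor(max_workers=len(steps)) as pool:
    futures = [pool.submit(run_step, name, secs) for name, secs in steps]
    parallel = [f.result() for f in futures]  # join: wait for every step
parallel_time = time.perf_counter() - begin

print(f"sequential: {sequential_time:.2f}s, parallel: {parallel_time:.2f}s")
```

Sequential time is roughly the sum of the step durations, while parallel time is roughly the duration of the slowest step, so the gain depends on how evenly the work is spread.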

After the modifications, they saw a 2x performance boost. A follow-up test run showed an even larger improvement, more than 2x, in load handling. Combined with auto-scaling, this gave them a rapid, single-step startup boost!

Now, the detection time was only 1 minute, and the load balancer could react to load spikes much quicker!

The boot time was reduced to 2 minutes as they optimized their techniques.

Since they identified the spike during startup, they no longer needed to do anything manually; everything would now be handled by auto-scaling.

This led to an overall recovery time reduction to just 3 minutes.
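Putting the quoted figures side by side gives a feel for the overall gain. The numbers below are the ones stated in this article; nothing else is assumed.

```python
# Figures quoted in this article, in minutes.
before_total = 20      # detect, scale and recover before the changes
after_detection = 1    # detection time after the changes
after_boot = 2         # boot time after the changes

after_total = after_detection + after_boot
print("recovery time after tuning:", after_total, "minutes")
print(f"overall speed-up: {before_total / after_total:.1f}x")
```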

How did the SRE team ensure high availability and a good user experience during critical load spikes, especially within the crucial 3-minute window, while balancing request handling without overloading the CPU?

CONCLUSION

Finally, Netflix's approach is a combination of prediction, precaution, and adaptation to handle any load spike, ensuring that users can enjoy uninterrupted streaming. The multidisciplinary collaboration between SREs, ML engineers, DevOps, and network teams has been pivotal to creating this resilient infrastructure.

In the next part of this series, we will dive deeper into the technologies that power this seamless scaling, from Docker and Kubernetes to tools like Ansible, and discuss how these innovations continue to shape Netflix's infrastructure to handle future challenges. Additionally, we will explore how Netflix solved the critical 3-minute recovery time problem and the detailed solutions behind this achievement. Stay tuned for more in-depth insights on how these technologies work together behind the scenes to ensure smooth operations.

Thank you for reading!

References:

1. CNBC: https://x.com/FuelVC/status/1876282100317577652?mx=2

2. Auto Scaling Graph: AWS re:Invent 2024

3. Pictures created using Canvas, gathered from Internet sources.

Link to Part 1 Blog : Managing Sudden Cloud Traffic Surge
