Understanding AWS Lambda Concurrency

Harshwardhan Choudhary

In the world of serverless computing, AWS Lambda stands out as a powerful solution that lets developers focus on code without worrying about server management. However, to effectively use Lambda in production, understanding its concurrency model is crucial. Let's dive deep into how Lambda handles multiple simultaneous executions and what it means for your applications.

The Basics: What is Lambda Concurrency?

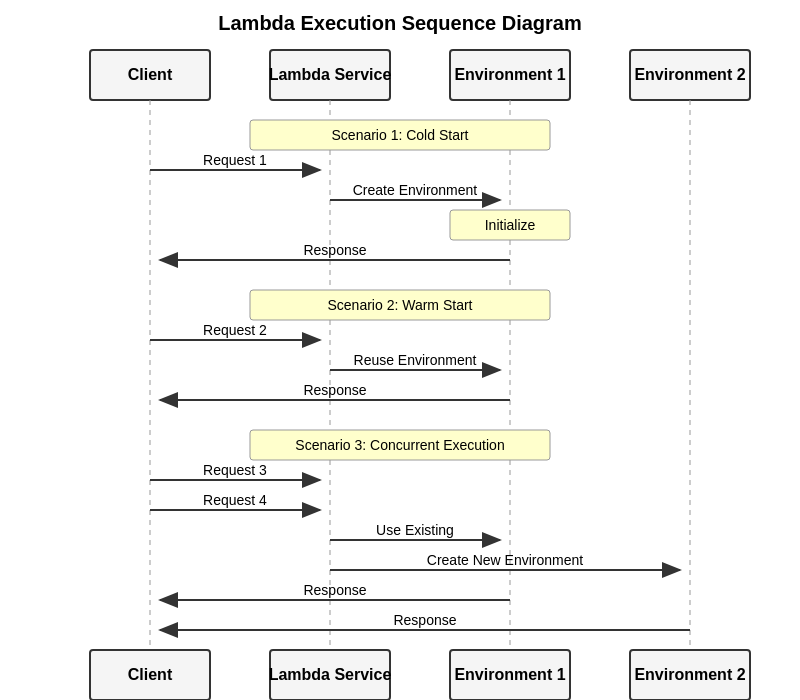

Think of Lambda concurrency as your function's ability to handle multiple requests simultaneously. Each time your Lambda function receives a request, it needs an execution environment - essentially, a secure, isolated space where your code runs. Each environment processes one request at a time, so your function's concurrency at any moment is the number of environments busy handling requests. An environment can be either freshly created (a "cold start") or reused from a previous execution (a "warm start").
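To get a feel for the numbers, a common rule of thumb is that concurrency is roughly the request rate multiplied by the average request duration. Here's a minimal sketch of that arithmetic. The function name `estimate_concurrency` and the sample traffic figures are made up for illustration.

```python
import math


def estimate_concurrency(requests_per_second, avg_duration_ms):
    """Estimate how many execution environments are busy at the same time."""
    if requests_per_second < 0 or avg_duration_ms < 0:
        raise ValueError("inputs must be non-negative")
    # concurrency ~= request rate (per second) * average duration (in seconds)
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


# Hypothetical workload: 100 requests per second, 200 ms each
print(estimate_concurrency(100, 200))  # 20
```

This is only an average. Bursty traffic can push the real peak well above the estimate, so treat the result as a starting point.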

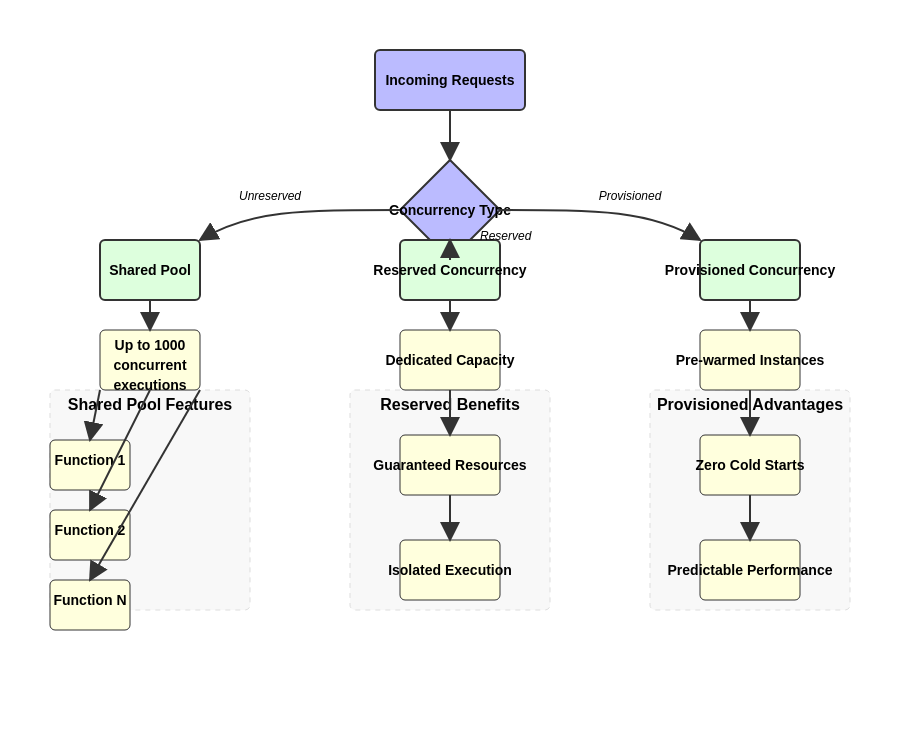

Visual Overview of Lambda Concurrency

Let's visualize how different types of concurrency work in AWS Lambda:

The Three Flavors of Concurrency

1. Unreserved Concurrency: The Default Pool

By default, each AWS account gets a quota of 1,000 concurrent executions per region, shared across all functions in that region (newer accounts may start with a lower quota, and you can request an increase). Think of this as a shared resource pool that all your Lambda functions can dip into when they need to handle requests. It's like having 1,000 workers ready to tackle incoming tasks, but they're not assigned to any specific function until needed.

However, there's a catch: if one function gets really busy, it could potentially use up most of these workers, leaving other functions waiting in line. This is where more sophisticated concurrency controls come into play.
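If you want to check how big your shared pool actually is, the Lambda API exposes it through `get_account_settings`. The sketch below assumes boto3 is installed and credentials are configured. The helper name `get_pool_status` and the shape of its return value are my own choices, not anything AWS prescribes.

```python
def get_pool_status(lambda_client=None):
    """Return the account limit and unreserved pool for the client's region."""
    if lambda_client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        lambda_client = boto3.client("lambda")

    limits = lambda_client.get_account_settings()["AccountLimit"]
    account_limit = limits["ConcurrentExecutions"]
    unreserved = limits["UnreservedConcurrentExecutions"]
    return {
        "account_limit": account_limit,
        "unreserved": unreserved,
        # Whatever is not unreserved has been set aside for specific functions
        "reserved_total": account_limit - unreserved,
    }
```

Calling `get_pool_status()` with no arguments uses your default region and credentials.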

2. Reserved Concurrency: Your Private Pool

Reserved concurrency is like having bouncers at a club who ensure VIP tables are always available for specific guests. When you reserve concurrency for a function, you're essentially saying, "Always keep this many execution slots available for this specific function."

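For example, if you reserve 400 concurrent executions for your payment processing function, you guarantee it will always have the capacity to handle up to 400 simultaneous requests, regardless of what other functions are doing. The trade-off is twofold. First, these reserved slots are subtracted from your total pool of 1,000, leaving 600 for other functions. Second, the reservation is also a ceiling: the function can never scale beyond 400, and requests above that are throttled. Lambda also requires at least 100 executions to stay unreserved, so with a 1,000 limit you can reserve at most 900 in total.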
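The arithmetic of the payment example can be sketched in a few lines, together with the API call that sets a reservation. `put_function_concurrency` is the real boto3 call, while the helper names and the `payment-processor` function name are hypothetical. The check assumes Lambda's rule that at least 100 executions must stay unreserved.

```python
MIN_UNRESERVED = 100  # Lambda keeps at least this much of the pool unreserved


def remaining_unreserved(account_limit, reservations):
    """Return the shared pool left after subtracting per-function reservations."""
    remaining = account_limit - sum(reservations.values())
    if remaining < MIN_UNRESERVED:
        raise ValueError(
            f"only {remaining} would stay unreserved; minimum is {MIN_UNRESERVED}"
        )
    return remaining


def reserve_concurrency(function_name, amount, lambda_client=None):
    """Reserve (and cap) concurrency for one function."""
    if lambda_client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        lambda_client = boto3.client("lambda")
    return lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=amount,
    )


# Hypothetical function name, numbers from the example above
print(remaining_unreserved(1000, {"payment-processor": 400}))  # 600
```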

3. Provisioned Concurrency: The Premium Experience

If cold starts are giving you headaches, provisioned concurrency is your aspirin. Instead of waiting for execution environments to be created on demand, provisioned concurrency keeps a specified number of environments warm and ready to go.

It's like having cars pre-warmed and running in winter - they're ready to drive immediately, no waiting required. This comes at an additional cost, but for latency-sensitive applications, the improved performance can be worth it.
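To see the difference in your own function, you can lean on the fact that module-level code runs once per execution environment, while the handler runs once per request. The sketch below is one way to observe that. The names `_INIT_TIME` and `_first_invocation` are illustrative, not part of any Lambda API.

```python
import time

# Module-level code runs once, when the execution environment is initialized.
_INIT_TIME = time.time()
_first_invocation = True


def lambda_handler(event, context):
    global _first_invocation
    first = _first_invocation
    _first_invocation = False  # later requests in this environment are warm

    return {
        "firstInvocation": first,
        # How long ago this environment was initialized
        "environmentAgeSeconds": round(time.time() - _INIT_TIME, 3),
    }
```

With on-demand environments, a first invocation usually reports an age close to zero, because initialization happened as part of that request. With provisioned concurrency, I'd expect the first invocation to report a much larger age, since the environment was initialized ahead of time.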

Making the Right Choice for Your Application

The key to effective Lambda concurrency management lies in understanding your application's needs:

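If you have a critical function that must always be responsive, reserved concurrency is your friend. It ensures your function always has capacity up to the reserved amount, even when other functions are at peak load. Just remember that the same number is also the function's upper limit.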

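For applications where consistent, low-latency performance is crucial, provisioned concurrency removes cold start delays for requests served by the pre-initialized environments, providing a more consistent user experience. Traffic beyond the provisioned amount falls back to on-demand environments and can still hit cold starts.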

For everything else, the default unreserved concurrency pool often works just fine, especially when your functions have moderate, predictable load patterns.
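The guidance above can be written down as a tiny decision helper. This is just the article's rule of thumb expressed in code, with hypothetical names. It is not an official AWS recommendation engine.

```python
from typing import List


def suggest_concurrency_controls(critical: bool, latency_sensitive: bool) -> List[str]:
    """Map two yes/no questions about a function to the controls discussed above."""
    controls = []
    if critical:
        controls.append("reserved")      # guaranteed capacity, also a cap
    if latency_sensitive:
        controls.append("provisioned")   # pre-initialized environments
    # Nothing special needed: the shared default pool is enough
    return controls or ["unreserved"]


print(suggest_concurrency_controls(critical=True, latency_sensitive=False))
# ['reserved']
```

Note that the two controls are not mutually exclusive: a function can have both a reservation and provisioned environments.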

Code Examples

Let's look at some practical Python examples of working with Lambda functions and managing concurrency.

1. Basic Lambda Function with Concurrency Logging

    import json
    import time
    from datetime import datetime

    import boto3

    # Create the client once per execution environment so warm invocations reuse it
    cloudwatch = boto3.client('cloudwatch')


    def lambda_handler(event, context):
        # Record the start time and request ID for this invocation
        start_time = datetime.now()
        request_id = context.aws_request_id

        # Simulate some work
        time.sleep(0.2)  # 200ms of processing

        # Publish a custom metric that counts this execution
        cloudwatch.put_metric_data(
            Namespace='LambdaConcurrency',
            MetricData=[{
                'MetricName': 'FunctionExecution',
                'Value': 1,
                'Unit': 'Count',
                'Dimensions': [{
                    'Name': 'FunctionName',
                    'Value': context.function_name
                }]
            }]
        )

        return {
            'statusCode': 200,
            'body': json.dumps({
                'message': 'Execution completed',
                'requestId': request_id,
                'executionTime': str(datetime.now() - start_time)
            })
        }

2. Function to Monitor Concurrency Limits

    from datetime import datetime, timedelta, timezone

    import boto3


    def monitor_concurrency(function_name='YourFunctionName'):
        cloudwatch = boto3.client('cloudwatch')
        lambda_client = boto3.client('lambda')

        # Reserved concurrency comes from get_function_concurrency, not from
        # get_function_configuration; the key is absent when nothing is reserved
        concurrency_config = lambda_client.get_function_concurrency(
            FunctionName=function_name
        )
        reserved_concurrency = concurrency_config.get('ReservedConcurrentExecutions', 0)

        # Get the per-minute peak of concurrent executions for the last hour
        end_time = datetime.now(timezone.utc)
        metrics = cloudwatch.get_metric_statistics(
            Namespace='AWS/Lambda',
            MetricName='ConcurrentExecutions',
            Dimensions=[
                {'Name': 'FunctionName', 'Value': function_name}
            ],
            StartTime=end_time - timedelta(hours=1),
            EndTime=end_time,
            Period=60,
            Statistics=['Maximum']
        )

        # Calculate peak usage as a percentage of the reserved concurrency
        max_concurrency = max(
            (datapoint['Maximum'] for datapoint in metrics['Datapoints']),
            default=0
        )
        usage_percentage = (
            max_concurrency / reserved_concurrency * 100
        ) if reserved_concurrency else 0

        return {
            'function_name': function_name,
            'max_concurrent_executions': max_concurrency,
            'reserved_concurrency': reserved_concurrency,
            'usage_percentage': usage_percentage
        }

3. Setting Up Provisioned Concurrency

    import time

    import boto3


    def configure_provisioned_concurrency():
        lambda_client = boto3.client('lambda')
        function_name = 'YourFunctionName'
        # Must be a published version number or an alias; $LATEST is not supported
        qualifier = 'YourAliasOrVersion'

        try:
            # Put provisioned concurrency config
            response = lambda_client.put_provisioned_concurrency_config(
                FunctionName=function_name,
                Qualifier=qualifier,
                ProvisionedConcurrentExecutions=10  # Number of pre-warmed instances
            )

            # Poll until allocation finishes. The 'function_active' waiter only
            # checks the function's state, not provisioned concurrency.
            while response['Status'] == 'IN_PROGRESS':
                time.sleep(5)
                response = lambda_client.get_provisioned_concurrency_config(
                    FunctionName=function_name,
                    Qualifier=qualifier
                )

            if response['Status'] != 'READY':
                return {
                    'status': 'error',
                    'message': response.get('StatusReason', 'Allocation failed')
                }

            return {
                'status': 'success',
                'message': 'Provisioned concurrency configured successfully',
                'config': response
            }
        except lambda_client.exceptions.ResourceNotFoundException:
            return {
                'status': 'error',
                'message': 'Function or version/alias not found'
            }
        except Exception as e:
            return {
                'status': 'error',
                'message': str(e)
            }

Concurrency Patterns Visualization

Here's how different concurrency patterns affect function execution:
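A toy model can make these patterns concrete. The sketch below is a deliberately simplified simulation, not how Lambda schedules work internally: it assumes functions are admitted in dictionary order, that a function with a reservation is capped at that reservation, and that everything else shares what remains of a 1,000-slot pool. The function names and demand figures are invented.

```python
def simulate(demand, reserved=None, account_limit=1000):
    """Split each function's concurrent demand into running and throttled."""
    reserved = reserved or {}
    shared_pool = account_limit - sum(reserved.values())
    result = {}
    for name, wanted in demand.items():
        if name in reserved:
            # Reserved functions get their own slots, but never more than that
            running = min(wanted, reserved[name])
        else:
            # Everyone else competes for the shared pool, first come first served
            running = min(wanted, shared_pool)
            shared_pool -= running
        result[name] = {"running": running, "throttled": wanted - running}
    return result


demand = {"report-generator": 900, "payment-processor": 300}

# No reservations: the busy report job starves the payment function
print(simulate(demand))
# {'report-generator': {'running': 900, 'throttled': 0}, 'payment-processor': {'running': 100, 'throttled': 200}}

# Reserving 400 for payments protects it and limits the report job to 600
print(simulate(demand, reserved={"payment-processor": 400}))
# {'report-generator': {'running': 600, 'throttled': 300}, 'payment-processor': {'running': 300, 'throttled': 0}}
```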

Best Practices and Considerations

When implementing concurrency controls, keep these points in mind:

Monitor Your Usage: Keep an eye on your function's concurrent execution metrics. This helps you identify potential bottlenecks and optimize your concurrency settings.

Cost vs. Performance: While provisioned concurrency can improve performance, it comes with additional costs. Make sure the benefits justify the expense for your use case.

Function Criticality: Reserve concurrency for your most critical functions first. These are typically customer-facing operations where performance and reliability are paramount.
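For the monitoring point above, one option is a CloudWatch alarm on the `Throttles` metric that Lambda publishes in the `AWS/Lambda` namespace. The sketch below builds the parameters for `put_metric_alarm`. The alarm name pattern, threshold, and helper names are assumptions for illustration, so adjust them to your own conventions.

```python
def throttle_alarm_params(function_name, threshold=1, period_seconds=60):
    """Build put_metric_alarm arguments that fire when a function is throttled."""
    return {
        "AlarmName": f"{function_name}-throttles",  # hypothetical naming scheme
        "Namespace": "AWS/Lambda",
        "MetricName": "Throttles",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No data means no throttling happened, so don't alarm on gaps
        "TreatMissingData": "notBreaching",
    }


def create_throttle_alarm(function_name, cloudwatch_client=None):
    """Create or update the alarm in CloudWatch."""
    if cloudwatch_client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        cloudwatch_client = boto3.client("cloudwatch")
    cloudwatch_client.put_metric_alarm(**throttle_alarm_params(function_name))
```

A throttle alarm on a function with reserved concurrency is a useful signal that the reservation is too small for the traffic it receives.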

Conclusion

AWS Lambda's concurrency model offers powerful tools for managing how your serverless applications handle multiple simultaneous requests. By understanding and properly implementing these concurrency controls, you can build more reliable, performant, and cost-effective serverless applications.

Whether you stick with the default unreserved pool, set aside capacity with reserved concurrency, or opt for the premium experience of provisioned concurrency, the key is matching your concurrency strategy to your application's specific needs and requirements.

Remember, there's no one-size-fits-all solution. The best approach often involves a mix of different concurrency types across your Lambda functions, aligned with each function's role and requirements in your broader application architecture.

Written by

Harshwardhan Choudhary

Passionate cloud architect specializing in AWS serverless architectures and infrastructure as code. I help organizations build and scale their cloud infrastructure using modern DevOps practices. With expertise in AWS Lambda, Terraform, and data engineering, I focus on creating efficient, cost-effective solutions. Currently based in the Netherlands, working on projects that push the boundaries of cloud computing and automation.