Discover the Technology Behind Your Smartphone: AI/ML

Sulabh Sharma

Machine learning (ML) is a branch of artificial intelligence (AI) in which systems learn to perform data analysis tasks from data rather than by following explicit instructions. ML models can process large quantities of historical data, identify patterns, and make predictions about new, previously unseen data. Running ML models directly on mobile devices has become increasingly feasible, enabling applications to work offline, reduce latency, and improve user privacy. Frameworks like TensorFlow Lite, advances in Large Language Models (LLMs), and tools from OpenAI have been pivotal in this evolution. "Running ML on Mobile Phones: TensorFlow/LLM/Gemini/OpenAI" refers to applying and integrating ML technologies directly on mobile devices using these tools and frameworks.

Why Bring AI/ML to Mobile Phones?

The integration of Artificial Intelligence (AI) and Machine Learning (ML) into mobile phones is revolutionizing the way we interact with technology. Here’s why bringing AI/ML to mobile devices is essential:

1. Enhanced User Experience

AI/ML powers smarter apps, such as voice assistants, predictive text and camera enhancements like object recognition and scene optimization, offering personalized and intuitive experiences.

2. Offline Functionality

On-device AI ensures access to features like translations and voice commands without internet connectivity. This is particularly valuable for remote areas or privacy-focused applications like health tracking.

3. Reduced Latency

With computations performed on the device, response times are faster, making applications like real-time gaming and navigation seamless and efficient.

4. Improved Privacy

Processing data locally reduces the need to send it to cloud servers, which helps keep sensitive information secure. For example, AI-powered facial recognition systems can run entirely on the phone.

5. Cost and Energy Efficiency

Mobile AI reduces reliance on cloud computing, which can lower data transfer costs and overall energy usage. Frameworks like TensorFlow Lite optimize these computations for low-power devices.

6. Democratization of Technology

AI/ML on phones makes advanced tools, such as learning apps and translation, accessible to a wider audience, empowering users across different regions and demographics.

Technologies Powering Mobile AI/ML

1. TensorFlow Lite

TensorFlow Lite (TF Lite) is an open-source, cross-platform deep learning framework from Google for on-device inference. It supports multiple platforms, including Android and iOS devices, embedded Linux, and microcontrollers. It converts pre-trained TensorFlow models into a special format that can be optimized for speed or storage, which lets developers run TensorFlow models on mobile, embedded, and IoT devices.

How does TensorFlow Lite work?

1. Select and train a model

Suppose you want to perform an image classification task. The first step is to choose a model for the task. You can:

–Use pre-trained models like Inception v3, MobileNet, etc.

Check out the TensorFlow Lite examples (https://www.tensorflow.org/lite/examples) to explore pre-trained TensorFlow Lite models and see how sample applications use them for various ML tasks.

–Create your custom models

Create models from your own datasets using TensorFlow Lite Model Maker (https://www.tensorflow.org/lite/guide/model_maker).

–Apply transfer learning to pre-trained models

2. Convert the model using the Converter

After training is complete, use the TensorFlow Lite Converter to convert the model to a TensorFlow Lite model. The result is a lightweight version that typically keeps accuracy close to the original while having a smaller footprint and faster inference. These properties make TF Lite models well suited to mobile and embedded devices.

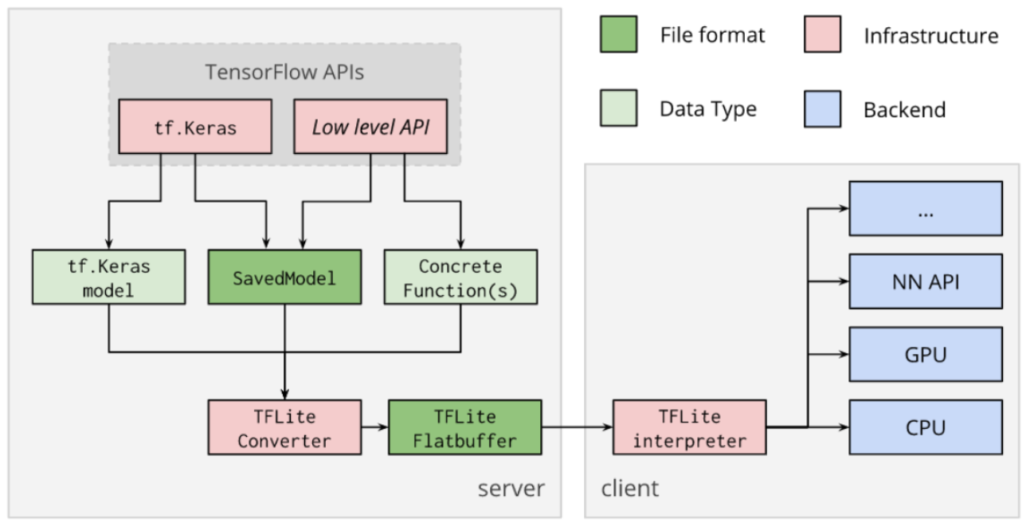

Schematic diagram of TensorFlow Lite conversion process

Source: https://www.tensorflow.org/lite/convert/index
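The converter can shrink a model further with post-training quantization (in the Python API this is enabled with `converter.optimizations = [tf.lite.Optimize.DEFAULT]`). The dependency-free sketch below is not the converter itself. It only illustrates the int8 arithmetic behind that size reduction, under simplifying assumptions: one scale and zero point for the whole tensor, and a hypothetical list of weights.

```python
# Toy post-training int8 quantization (per-tensor, asymmetric).
# NOT the TensorFlow Lite converter: just the arithmetic idea behind it.


def quantize_int8(values):
    """Map floats to int8 codes using one scale and zero point for the whole list."""
    lo = min(min(values), 0.0)  # include 0.0 so the zero point stays in int8 range
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # guard against an all-zero tensor
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_int8(codes, scale, zero_point):
    """Recover approximate float values from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


# Hypothetical float32 weights from one layer
weights = [-1.0, -0.5, 0.0, 0.25, 0.5, 1.0]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, round(max_error, 4))
```

Storing each weight in 1 byte instead of the 4 bytes of a float32 is where the roughly 4x size reduction of int8 quantization comes from; the small round-trip error is the accuracy trade-off.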

If you want more information on choosing a pre-trained model, note that TensorFlow Lite already supports many trained and optimized models:

MobileNet: A class of vision models capable of recognizing 1000 different object classes, specially designed for efficient execution on mobile and embedded devices.

Inception v3: An image recognition model, similar in functionality to MobileNet, that offers higher accuracy at the cost of a larger model.

Smart Reply: An on-device conversational model that enables one-click replies to incoming conversational chat messages. First- and third-party messaging apps use this feature on Android Wear.

Inception v3 and MobileNet have been trained on the ImageNet dataset. You can retrain on your own image dataset through transfer learning.

For more on Smart Reply, see http://ai.googleblog.com/2017/11/on-device-conversational-modeling-with.html

Get started with LiteRT

LiteRT (short for Lite Runtime) is the name Google gave TensorFlow Lite in 2024. Running a LiteRT model on-device to make predictions based on input data is handled by the LiteRT interpreter, which uses a static graph ordering and a custom (less-dynamic) memory allocator to ensure minimal load, initialization, and execution latency.

LiteRT inference typically involves the following steps:

Loading a model: Load the .tflite model into memory. The file contains the model's execution graph.

Transforming data: Transform input data into the expected format and dimensions. Raw input data for the model generally does not match the input data format expected by the model. For example, you might need to resize an image or change the image format to be compatible with the model.

Running inference: Execute the LiteRT model through the LiteRT API to make predictions. This involves a few steps, such as building the interpreter and allocating tensors.

Interpreting output: Interpret the output tensors in a meaningful way that's useful in your application. For example, a model might return only a list of probabilities. It's up to you to map the probabilities to relevant categories and format the output.
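The "transforming data" and "interpreting output" steps are plain data handling around the interpreter call. The sketch below shows both in dependency-free Python. It assumes a grayscale image stored as a 2-D list, a model that expects inputs scaled to [-1, 1] (common for float image models, but check your own model's requirements), and hypothetical labels and probabilities; the interpreter call itself is left out.

```python
# Pre- and post-processing around an interpreter call (the call itself is omitted).
# Image, expected value range, labels, and probabilities are hypothetical.


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2-D list of grayscale pixel values."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def normalize(image, mean=127.5, std=127.5):
    """Scale 0-255 pixels to [-1, 1]; use whatever range your model actually expects."""
    return [[(p - mean) / std for p in row] for row in image]


def top_k(probabilities, labels, k=3):
    """Pair each output probability with its label and keep the k most likely."""
    ranked = sorted(zip(labels, probabilities), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Transforming data: shrink a 4x4 image to the 2x2 input a hypothetical model expects
image = [[16 * (4 * r + c) for c in range(4)] for r in range(4)]
model_input = normalize(resize_nearest(image, 2, 2))

# ... interpreter.set_tensor(...) and interpreter.invoke() would run here ...

# Interpreting output: map a made-up probability vector to labels
probabilities = [0.05, 0.70, 0.25]
labels = ["cat", "dog", "bird"]
print(top_k(probabilities, labels, k=2))
```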

The LiteRT interpreter can be accessed, and inference performed, from C++, Java, and Python.

2. Large Language Models (LLMs)

Large Language Models (LLMs) are changing smartphone technology, reshaping everything from the device’s core architecture to the way users interact with it. As generative AI integrates more deeply into mobile devices, we’re seeing significant changes across many aspects of them.

How do large language models work?

Machine learning and deep learning

At a basic level, LLMs are built on machine learning. Machine learning is a subset of AI, and it refers to the practice of feeding a program large amounts of data in order to train it to identify features of that data without human intervention.

LLMs use a type of machine learning called deep learning. Deep learning models can essentially train themselves to recognize distinctions without human intervention, although some human fine-tuning is typically necessary.

Deep learning uses probability in order to "learn." For instance, in the sentence "The quick brown fox jumped over the lazy dog," the letters "e" and "o" are the most common, appearing four times each. From this, a deep learning model could conclude (correctly) that these characters are among the most likely to appear in English-language text.
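The letter-counting claim can be checked directly. This short sketch counts the letters in the example sentence and turns the counts into relative frequencies, the simplest form of the probability estimates described above (real language models work on tokens, not single letters).

```python
from collections import Counter

sentence = "The quick brown fox jumped over the lazy dog"

# Count letters only, ignoring case and spaces
counts = Counter(ch for ch in sentence.lower() if ch.isalpha())
total = sum(counts.values())

# Relative frequency: a crude estimate of how likely each letter is
frequencies = {ch: n / total for ch, n in counts.items()}

print(counts.most_common(2))  # [('e', 4), ('o', 4)]
print(round(frequencies["e"], 3))  # 0.111
```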

Realistically, a deep learning model cannot actually conclude anything from a single sentence. But after analyzing trillions of sentences, it could learn enough to predict how to logically finish an incomplete sentence, or even generate its own sentences.
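As a toy illustration of predicting how to finish a sentence, the sketch below counts which word follows which (a bigram model) and predicts the most frequent follower. This is a deliberately simplified stand-in: LLMs learn such statistics with neural networks trained on enormous amounts of text rather than with a lookup table, and the three-sentence corpus here is hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """For each word, count which words follow it in the training sentences."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


corpus = [
    "the quick brown fox jumped over the lazy dog",
    "the lazy dog slept in the sun",
    "the lazy cat slept all day",
]
model = train_bigrams(corpus)
print(predict_next(model, "lazy"))  # dog
```

Here "dog" wins because it follows "lazy" twice while "cat" follows it only once; more data simply gives the counts (or, in an LLM, the learned weights) more to work with.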

LLM neural networks

In order to enable this type of deep learning, LLMs are built on neural networks. Just as the human brain is made up of neurons that connect and send signals to each other, an artificial neural network (typically shortened to "neural network") is made up of network nodes that connect with each other. These networks are composed of several "layers": an input layer, an output layer, and one or more layers in between. In a simplified view, a node only passes information on to the next layer if its output crosses a certain threshold.
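In practice, this threshold behaviour comes from an activation function. The minimal sketch below assumes the common ReLU activation (output is zero unless the weighted sum is above 0) and made-up weights. It passes a 3-value input through a 2-node hidden layer and a 1-node output layer; the second hidden node's weighted sum falls below zero, so it passes nothing on.

```python
def relu(x):
    """Pass the value on only if it is above the threshold 0; otherwise output 0."""
    return max(0.0, x)


def dense_layer(inputs, weights, biases):
    """One fully connected layer: weighted sum of inputs plus bias, then ReLU."""
    return [
        relu(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical weights for a 3-input -> 2-hidden -> 1-output network
hidden_w = [[0.5, -0.2, 0.1], [-0.3, 0.8, -0.5]]
hidden_b = [0.0, 0.1]
output_w = [[1.0, -1.0]]
output_b = [0.2]

x = [1.0, 2.0, 3.0]
hidden = dense_layer(x, hidden_w, hidden_b)
output = dense_layer(hidden, output_w, output_b)
print([round(h, 3) for h in hidden], [round(o, 3) for o in output])
```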

LLM transformer models

The specific kind of neural networks used for LLMs are called transformer models. Transformer models are able to learn context — especially important for human language, which is highly context-dependent. Transformer models use a mathematical technique called self-attention to detect subtle ways that elements in a sequence relate to each other. This makes them better at understanding context than other types of machine learning. It enables them to understand, for instance, how the end of a sentence connects to the beginning, and how the sentences in a paragraph relate to each other.

This enables LLMs to interpret human language, even when that language is vague or poorly defined, arranged in combinations they have not encountered before, or contextualized in new ways. On some level they "understand" semantics in that they can associate words and concepts by their meaning, having seen them grouped together in that way millions or billions of times.
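The self-attention step described above is commonly written as softmax(Q K^T / sqrt(d)) V: each token scores every token in the sequence, the scores become weights that sum to 1, and the output for each token is a weighted mix of the value vectors. The sketch below implements that formula in plain Python for three hypothetical 2-dimensional token vectors. For simplicity it uses the vectors directly as queries, keys, and values, whereas real transformers learn separate projection matrices and use many attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one output row per token."""
    d = len(keys[0])
    outputs, attention = [], []
    for q in queries:
        # How strongly this token relates to every token in the sequence
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        attention.append(weights)
        # The output is a weighted mix of all value vectors
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs, attention


# Three hypothetical 2-D token vectors, used directly as Q, K, and V for simplicity
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attention = self_attention(tokens, tokens, tokens)
for row in attention:
    print([round(w, 3) for w in row])
```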

3. Gemini

Gemini, formerly known as Bard, is a generative artificial intelligence chatbot developed by Google. Now based on the large language model (LLM) of the same name, it was launched in 2023, having been developed as a direct response to the rise of OpenAI's ChatGPT. It was previously based on PaLM and, initially, on the LaMDA family of large language models.

How do you integrate Gemini into a mobile app?

1. Set Objectives

The process of integrating Google Gemini Pro begins by understanding your business needs and setting clear goals. Whether you aim to improve user engagement, streamline workflows, automate tasks, or strengthen security, having a solid grasp of your objectives is essential for a successful integration.

2. Utilize Gemini Pro APIs and SDKs

To integrate Gemini AI, use its APIs and SDKs. This means incorporating the code and interfaces the mobile app needs to communicate with Gemini Pro’s AI features. Developers can also use tools like Google AI Studio and Vertex AI on Google Cloud to connect Gemini models to their applications.
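As a rough sketch of the API route, the snippet below builds (but does not send) a request to the Gemini API's REST `generateContent` endpoint and reads the reply text out of a parsed response. The endpoint version, the `gemini-1.5-flash` model name, and the helper names are assumptions for illustration; a production Android or iOS app would more likely use Google's official SDKs, so check the current Gemini API documentation before relying on any of these details.

```python
import json
import urllib.request

# Assumed REST endpoint and model name; verify against the current Gemini API docs.
GEMINI_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_gemini_request(prompt, api_key, model="gemini-1.5-flash"):
    """Build (but do not send) a POST request carrying one text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        GEMINI_URL.format(model=model),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response):
    """Read the first candidate's text from a parsed generateContent response."""
    return response["candidates"][0]["content"]["parts"][0]["text"]


request = build_gemini_request("Suggest a title for my photo album", "YOUR_API_KEY")
print(request.full_url)

# Sending it needs network access and a real key:
# with urllib.request.urlopen(request) as resp:
#     print(extract_text(json.load(resp)))
```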

3. Mapping Data and Configuration

Next, you need to map how data moves and set up Gemini Pro to handle and understand the relevant data sources. This is essential for the AI model to generate useful insights and provide smart responses tailored to the mobile app’s specific context.

4. Testing and Quality Assurance

Thoroughly test the integrated solution to pinpoint and address any possible issues, errors, or bugs. This stage includes testing for technical issues, app performance, security measures, and user experience, to ensure that Gemini Pro operates smoothly within the mobile app.

5. Deployment and Monitoring

Once testing is complete, deploy the integrated mobile app. After deployment, closely monitor its performance in real-world situations and use analytics to understand user interactions. This helps in the continuous improvement and optimization of the app.

6. Continuous Improvement and Updates

The final step is to establish a process for ongoing improvement by keeping up to date with Google Gemini Pro updates. Businesses should regularly review user feedback and industry trends and make incremental updates to keep the integrated solution innovative and effective.

4. OpenAI

OpenAI is a research company that specializes in artificial intelligence (AI) and machine learning (ML) technologies. Its goal is to develop safe AI systems that can benefit humanity as a whole. OpenAI offers a range of AI and ML tools that can be integrated into mobile app development, making it easier for developers to create intelligent and responsive apps.

How does OpenAI work?

OpenAI provides developers with a range of tools and APIs that can be used to incorporate AI and ML into their mobile apps. These tools include natural language processing (NLP), image recognition, predictive analytics and more.

OpenAI’s NLP tools can help improve the user experience by providing personalized recommendations, chatbot functionality, and natural language search capabilities. Image recognition tools can be used to identify objects, people, and places within images, enabling developers to create apps that can recognize and respond to visual cues.
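For chatbot-style features, a minimal sketch of calling OpenAI's Chat Completions endpoint is shown below. It only builds the HTTP request and parses a response dictionary; the model name, system prompt, and helper names are illustrative assumptions, so check OpenAI's API reference for current models and fields.

```python
import json
import urllib.request

# Assumed endpoint and model name; check OpenAI's API reference for current values.
OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(user_message, api_key, model="gpt-4o-mini"):
    """Build (but do not send) a Chat Completions request for an in-app assistant."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant inside a mobile app."},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def extract_reply(response):
    """Read the assistant's message from a parsed Chat Completions response."""
    return response["choices"][0]["message"]["content"]


request = build_chat_request("Draft a short reply to: Are we still meeting at 6?", "YOUR_API_KEY")
print(json.loads(request.data)["model"])

# Sending it needs network access and a real key:
# with urllib.request.urlopen(request) as resp:
#     print(extract_reply(json.load(resp)))
```

In a shipping mobile app, requests like this are usually sent from your own backend so the API key is never bundled inside the app.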

OpenAI’s predictive analytics tools can analyze data to provide insights that can be used to enhance user engagement. For example, predictive analytics can be used to identify which users are most likely to churn and to provide targeted offers or promotions to those users.

OpenAI’s machine learning algorithms can also automate certain tasks, such as image or voice recognition, allowing developers to focus on other aspects of the app.

Future of Artificial Intelligence in Mobile Phones

Artificial Intelligence (AI) is poised to revolutionize the mobile phone experience in the coming years. As AI continues to evolve, it will make your smartphone smarter, more intuitive, and increasingly personalized. Here are the key changes you can expect from AI in mobile phone technology:

1. Advancements in AI Chipsets

One of the key drivers of the future of artificial intelligence in mobile phones is the rapid advancements in AI-focused chipsets. Major chip manufacturers like Qualcomm, MediaTek, and Samsung are developing dedicated AI processors that will power the next generation of AI-capable smartphones. These chipsets will enable more AI processing to be done directly on the device, leading to faster response times and enhanced privacy.

2. Generative AI and Multimodal Capabilities

Generative AI, which can create new content like images, text, and audio, is expected to have a significant impact on mobile phones. According to Counterpoint Research (https://www.counterpointresearch.com/insights/genai-capable-smartphone-shipments-to-grow-over-4x-by-2027/), over 1 billion GenAI-capable smartphones will be shipped by 2027, enabling features like AI-powered photo editing, virtual assistants with more natural conversations, and enhanced accessibility for users with disabilities.

3. Personalization and Predictive Features

The future of artificial intelligence (AI) will continue to enhance personalization in mobile phones, with algorithms that learn user preferences and adapt the device's functionality accordingly. This will include features like predictive text, smart content curation, and intelligent task automation. As AI becomes more advanced, it will be able to anticipate user needs and proactively offer suggestions and assistance, making the mobile experience more seamless and efficient.

Conclusion

The integration of AI and ML into mobile devices is revolutionizing technology, enhancing user experiences, reducing latency, and improving privacy. Frameworks like TensorFlow Lite, LLMs, Google’s Gemini and OpenAI APIs empower mobile apps with smarter features, real-time processing, and personalized interactions. These advancements make AI more accessible and drive innovation across industries. As AI evolves, mobile development will continue to deliver intelligent, responsive, and transformative solutions for the digital age.

References

https://beonperf.ch/fr-CH/blog-de-beonperf/large-language-model-llm

https://glance.com/us/articles/future-of-artificial-intelligence

https://datasciencedojo.com/blog/openai-and-mobile-app-development/
