Deep Dive into Boosting Code Performance with Bitwise Optimization

Debajyati Dey

Debajyati Dey

Bit-level programming techniques can often replace more complex data structures like maps or sets, resulting in significant improvements in both speed and memory usage. We will examine the fundamental concepts, discussing how compilers transform arithmetic operations into efficient bit-level instructions, and provide practical examples in both C/C++ and Python.

TLDR Prior to Reading the Article

This article explores bit-level programming techniques, examining how bitmasks, bitwise indexing, and precomputed Hamming weights can optimize code by improving speed and memory efficiency. Through practical examples in C/C++ and Python, we demonstrate how these techniques can substitute complex data structures such as maps and sets. Furthermore, we discuss compiler optimizations that convert arithmetic operations into efficient bitwise instructions, making them suitable for performance-critical applications.

Synopsis

I initially tackled the "Bit Array" problem (Hard) on HackerRank with a conventional approach, relying on standard data structures - sets and arrays. However, despite multiple optimizations, 8 test cases failed due to memory constraints and inefficiencies. Frustrated, I reluctantly unlocked the Editorial solution, expecting a straightforward fix—only to be completely baffled by the crazy bitwise operations.

For two days, I struggled to understand the concepts of bit manipulation and bitwise indexing. However, once I explored bitmasks, precomputed Hamming weights, and bitmaps, it all clicked. Now I want all these crazy techniques to make sense to you too. So, let’s begin!

Bitmasks and Bitwise Indexing

A bitmask is a numeric value where each bit represents a flag or a Boolean state. By using bit-level operations, multiple flags can be stored and manipulated within a single integer variable. Bitwise indexing leverages these operations to quickly access or modify individual bits.

Why use Bitmasks?

Memory Efficiency: A single integer (typically 32 or 64 bits) can store many Boolean flags, reducing the memory overhead compared to using arrays or sets.

Speed: Bitwise operations are directly mapped to single CPU instructions, making them extremely fast.

Simplification: Many critical problems involve subsets or flags where bitmasks provide a concise and elegant solution.

Let’s have a look on some common operations.

Common Operations

Setting a Bit:

To mark an element as present, use the following operation:

bitmask |= (1 << x);

Example in C++:

#include <iostream>

using namespace std;

int main() {

unsigned int bitmask = 0;

int x = 3;

bitmask |= (1 << x); // Sets the 3rd bit

cout << "Bitmask after setting bit " << x << ": " << bitmask << endl;

return 0;

}

Example in python:

bitmask = 0

x = 3

bitmask |= (1 << x) # Sets the 3rd bit

print("Bitmask after setting bit", x, ":", bitmask)

Checking if a Bit is Set:

To verify if a particular bit is set:

if (bitmask & (1 << x)) {

// Bit is set

}

Example in C++:

if (bitmask & (1 << x)) {

cout << "Bit " << x << " is set." << endl;

}

Example in python:

if bitmask & (1 << x):

print("Bit", x, "is set.")

Clearing a Bit:

To remove or clear a bit:

bitmask &= ~(1 << x)

Example in C++:

bitmask &= ~(1 << x); // Clears the 3rd bit

cout << "Bitmask after clearing bit " << x << ": " << bitmask << endl;

Example in python:

bitmask &= ~(1 << x) # Clears the 3rd bit

print("Bitmask after clearing bit", x, ":", bitmask)

Toggle a Bit (Flip 1 to 0, 0 to 1)

bitmask ^= (1 << x);

Example: -

5 ^ (1 << 2) = 1 → 0101 ^ 0100 = 0001

Bitwise Indexing in Data Structures

A common use case in problem solving is to store a large number of Boolean flags in a compact way.

For example, consider an array f of unsigned integers used to represent a bitmap covering a range of values. Each integer can store 32 flags (or 64 on 64-bit machines).

In C++:

const int MAX = 1 << 26; // Number of 32-bit blocks to cover 2^31 bits

unsigned f[MAX] = {0};

unsigned s = 45;

// Setting the bit for s:

f[s >> 5] |= 1u << (s & 31);

Here, s >> 5 determines the index in the array (because 5 bits are needed to represent 32 values), and s & 31 (which is equivalent to s % 32) identifies the specific bit within that integer.

Python Example: Python does not have fixed-size integers by default, but we’ve modules like bitarray to apply similar logic -

from bitarray import bitarray

# Initialize a bit array with 2^31 bits set to 0.

n_bits = 2**31

f = bitarray(n_bits)

f.setall(0)

s = 45

f[s] = 1 # Directly set bit for s in bitarray

Precomputed Hamming Weight

The Hamming weight of a number is the number of bits set to 1. In many algorithms, it is necessary to count the number of set bits quickly. A direct bit-by-bit iteration has a linear cost in the number of bits. Precomputation using a lookup table reduces this to constant time per query.

Let’s go through and examine two popular methods:

Brian Kernighan’s Algorithm (O(1) Time Complexity)

int count_set_bits(int n){

int count = 0; // count accumulates the total bits set

while(n != 0){

n &= (n-1); // clear the least significant(lowest) bit set

count++;

}

return count;

}

Precomputed Lookup Table (Even Faster and Super-Fast!)

In a typical implementation, a lookup table is created for all possible 16-bit integers (0 to 65,535), allowing you to count the bits in a 32-bit number by splitting it into two 16-bit halves.

Example in C++:

const int K = 16;

const int SIZE = 1 << K;

int kb[SIZE];

// Precompute Hamming weight for every 16-bit number

for (int i = 0; i < SIZE; i++) {

kb[i] = (i & 1) + kb[i >> 1];

}

// Usage: Count bits in a 32-bit integer

unsigned int value = 0b10110000101000011010001000110000;

int count = kb[value & 0xFFFF] + kb[value >> 16]; // See, no loops needed!

Python Example: For Python 3.10 and later, the built-in bit_count() method can be used. For earlier versions, you can build a lookup table similarly:

K = 16

SIZE = 1 << K

kb = [0] * SIZE

for i in range(SIZE):

kb[i] = (i & 1) + kb[i >> 1]

def popcount_32(value):

lower = value & 0xFFFF

upper = value >> 16

return kb[lower] + kb[upper]

value = 0b10110000101000011010001000110000

print("Hamming weight:", popcount_32(value))

Why Build lookup tables?

Speed: Once precomputed, the lookup is an O(1) operation.

Efficiency: It avoids iterating through each bit, which is beneficial when processing a large number of values.

Bitmaps for Data Storage

A bitmap (or bit array) is a compact data structure used to represent a collection of Boolean values. It is often used to indicate the presence or absence of elements within a fixed range.

Applications

Memory-Efficient Sets: Bitmaps can represent large sets where each bit corresponds to an element.

Flags and Indicators: Useful in algorithms that require marking states or conditions.

Replacing Complex Data Structures: In many cases, using a bitmap can eliminate the need for hash maps or other complex data structures that have additional overhead.

Consider a scenario where we need to record the appearance of integers in the range [0, 2³¹-1]. Using a bitmap:

In C:

#include <stdio.h>

const unsigned M = (1UL << 31) - 1;

const int MAX = 1 << 26; // 2^26 integers, each storing 32 bits -> covers 2^31 bits

unsigned f[MAX] = {0};

void markNumber(unsigned s) {

f[s >> 5] |= 1u << (s & 31);

}

int main() {

// Mark a sequence of numbers

unsigned int s = 100;

markNumber(s);

s = (s * 658061970u + 695098531u) & M;

markNumber(s);

// Continue processing...

return 0;

}

In python:

An efficient approach is to use the bitarray module: -

from bitarray import bitarray

# Create a bitmap to hold 2^31 bits

n_bits = 1 << 31 # same as 2**31

bitmap = bitarray(n_bits)

bitmap.setall(0)

def mark_number(s):

bitmap[s] = 1

# Example: Mark numbers

s = 100

mark_number(s)

# Use modulo arithmetic similar to C++ if needed.

Alternatively, a Python list of integers (acting as bit blocks) can be used, mimicking the C/C++ approach:

MAX = 1 << 26 # Number of 32-bit blocks

f = [0] * MAX

def mark_number(s):

block = s >> 5

pos = s & 31

f[block] |= 1 << pos

s = 100

mark_number(s)

Finding the Position of a Set Bit

The next examples are only for C and C++ because I don’t know similar built-in functions are available in python.

Find Lowest Set Bit:

int lowestBit(int x) {

return x & (-x);

}

Example: 101100 → 000100

Find Position of First Set Bit

int firstSetBitPos(int x) {

return __builtin_ctz(x); // Counts trailing 0s

}

Example: 8 (1000) → __builtin_ctz(8) = 3

Find Position of Highest Set Bit

int highestSetBitPos(int x) {

return 31 - __builtin_clz(x); // Counts leading 0s

}

Example: 8 (1000) → __builtin_clz(8) = 28, so position = 31 - 28 = 3

Some Bitmasking Tricks

Check if a Number is a Power of 2:

bool isPowerOfTwo(int x) {

return x && !(x & (x - 1));

}

Modulus Alternative

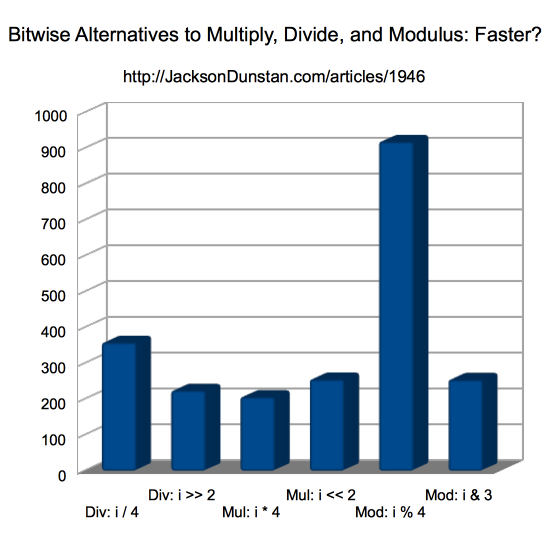

The Modulus operator is extremely slower than its bitwise equivalent. For evidence look at these well tested and approved records -

So, now you clearly see that if you're got a lot of divides or mods in your performance-critical code, better swap them over to the bitwise versions!

Let’s see how we do this -

if i and n are unsigned integers, i & (n-1) is equivalent of i % n.

Example: -

unsigned int modulus(unsigned int i, unsigned int n) {

return n & (i-1); // equivalent to n % i

}

Generate All Subsets of a Set

for (int mask = 0; mask < (1 << n); mask++) {

for (int i = 0; i < n; i++) {

if (mask & (1 << i)) {

cout << "Include element " << i << "\n";

}

}

}

The loop Iterates over 2^n subsets of an n-element set!

⚡Crazy Fast Techniques

Bitmap Storage (Save Space & Time)

Use this technique below when there is extreme memory constraint or hashing is too slow.

unsigned f[1 << 26]; // Store 2³² bits in an array

f[s >> 5] |= (1u << (s & 31)); // Set bit

if ( f[s >> 5] & (1u << (s & 31)) ) { /* Check bit */ }

XOR to Swap Two Integer Variables Without Temp

No extra memory needed.

#include <stdio.h>

int main() {

printf("Before swapping, a=%d and b = %d",a,b);

a ^= b;

b ^= a;

a ^= b;

printf("After swapping, a=%d and b = %d",a,b);

}

How Modern Compilers Transform Arithmetic to Bitwise Operations

Many modern compilers optimize arithmetic operations when the operands are constants or when certain patterns are detected, so that you don’t have to write these bitwise equivalents on your own most of the time (when situation is NOT critical).

Let’s see how that thing works: -

Multiplication and Division by Powers of Two:

Multiplication by2^nis converted into a left shift (<< n), and division by2^nis converted into a right shift (>> n).int x = 5; int result = x * 8; // Optimized to: x << 3 by compiler /* Similarly, - */ int another = x * 15; // Optimised to: (x << 4) - x by compilerBitwise AND, OR, XOR:

These operations are directly mapped to single machine instructions, offering the lowest possible latency.

Practical Implications

Speed: Bitwise operations are among the fastest operations available, often executed in a single CPU cycle.

Determinism: The transformations performed by the compiler are well-defined and consistent across platforms that follow the same architecture.

Memory Optimization: Using bit-level storage (bitmaps) minimizes memory usage, a critical factor in performance-sensitive applications.

Important Facts to Remember

Understanding low-level representation of data helps in writing code that is both faster and more memory efficient.

Compiler Intrinsics: Some compilers provide built-in functions (like

__builtin_popcountin GCC) that are highly optimized for counting bits.Language Differences: While C/C++ provides direct control over memory and low-level operations, languages like Python abstract these details. Even though python’s interpreter is inherently slower (because of the global interpreter lock) than C/C++ compiled binaries in critical loops, Python’s bitwise operations are implemented in C and are efficient for more elegant solutions.

Concluding

Bit-level programming techniques such as bitmasks, bitwise indexing, precomputed Hamming weight, and bitmaps offer powerful tools for writing optimized, performance-critical code. They help eliminate the need for more complex data structures like maps and sets by providing memory-efficient and fast operations.

My Final Takeaways:

Bitmasks and Bitwise Indexing: Enable efficient storage and retrieval of Boolean flags within a single integer.

Precomputed Hamming Weight: Reduces the time complexity of counting bits from linear to constant time.

Bitmaps: Provide a compact representation for large sets of Boolean values.

Compiler Optimizations: Modern compilers transform certain arithmetic operations into bitwise instructions for speed and efficiency.

Even with inherent performance limitations, Python also benefits from them through optimized C-level implementations.

If you found this POST helpful, if this blog added some value to your time and energy, please show some love by giving the article some likes and share it on LinkedIn, twitter.

Feel free to connect with me at - Twitter, LinkedIn or GitHub :)

Happy Coding 🧑🏽💻👩🏽💻! Have a nice day ahead! 🚀

Subscribe to my newsletter

Read articles from Debajyati Dey directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Debajyati Dey

Debajyati Dey

Linux Enthusiast, Terminal Nerd, interested in open source, web dev, React Native, Neovim BTW